- 1Department of Psychology, Center for Studies and Research in Cognitive Neuroscience, University of Bologna, Bologna, Italy

- 2Department of General Psychology, University of Padua, Padua, Italy

Individuals with high levels of alexithymia, a personality trait marked by difficulties in identifying and describing feelings and an externally oriented style of thinking, appear to require more time to accurately recognize intense emotional facial expressions (EFEs). However, in everyday life, EFEs are displayed at different levels of intensity and individuals with high alexithymia may also need more emotional intensity to identify EFEs. Nevertheless, the impact of alexithymia on the identification of EFEs, which vary in emotional intensity, has largely been neglected. To address this, two experiments were conducted in which participants with low (LA) and high (HA) levels of alexithymia were assessed in their ability to identify static (Experiment 1) and dynamic (Experiment 2) morphed faces ranging from neutral to intense EFEs. Results showed that HA needed more emotional intensity than LA to identify static fearful – but not happy or disgusted – faces. On the contrary, no evidence was found that alexithymia affected the identification of dynamic EFEs. These results extend current literature suggesting that alexithymia is related to the need for more perceptual information to identify static fearful EFEs.

Introduction

The identification of emotional facial expressions (EFEs) is fundamental for social interaction and survival of the individual (Adolphs, 2002). For example, being able to correctly recognize a fearful or a happy facial expression is a crucial adaptive mechanism to infer others’ intentions and anticipate their behavior. Research has shown that this ability is affected not only by clinical conditions such as depression and anxiety (Demenescu et al., 2010) or schizophrenia (Kohler et al., 2009) but also by subclinical differences in the ability to process emotional stimuli, such as alexithymia (Grynberg et al., 2012).

Alexithymia is a personality trait characterized by difficulties in identifying and describing feelings and discriminating between feelings and bodily sensations of emotional arousal, which accompany them (Sifneos, 1973; Taylor et al., 1991). Previous research found alexithymia to be related to worse performance in EFE recognition (Lane et al., 1996; Jessimer and Markham, 1997). Specifically, previous literature mainly manipulated stimulus presentation time, showing that the difficulty in EFE identification was evident when stimuli were presented under temporal constraints but not when stimulus exposure time was extended (for a review see Grynberg et al., 2012). For example, when EFEs were presented for 66 or 100 ms, level of alexithymia was negatively correlated with labeling sensitivity of angry EFEs and marginally negatively correlated with labeling sensitivity of fearful and happy EFEs (Ihme et al., 2014a). On the contrary, no such correlations were found when the same EFEs were presented for 1 or 3 s (Pandey and Mandal, 1997; Ihme et al., 2014b). The implications of these results appear twofold. Firstly, alexithymia may be associated with the need for more time to accurately recognize EFEs. Secondly, the difficulties of alexithymic individuals in EFE identification appear evident only under certain experimental conditions.

Despite growing evidence on the impact of alexithymia in the identification of EFEs, previous research has focused on the response to intense static EFEs. Nevertheless, these are rarely encountered in everyday life and individuals are faced with the challenge of identifying dynamic changes in emotional expression often displayed at varying degrees of intensity (Sarkheil et al., 2013). In fact, alexithymia may be hypothesized to be related not only to the need for more time but also for more perceptual information to identify EFEs, as previously hypothesized in Grynberg et al. (2012). Therefore, manipulating the intensity of EFEs using both static and dynamic stimuli would enable the extension of current literature on the impact of alexithymia on EFE identification by testing whether or not individuals with alexithymia need more emotional intensity to identify EFEs. Indeed, in the broader literature of emotion processing, the manipulation of emotional intensity can be crucial to uncover impairments in EFE recognition, which are not evident when using intense EFEs (e.g., Willis et al., 2014), making emotion recognition tasks more sensitive to subtle differences in identification (Calder et al., 1996; Wells et al., 2016).

Regarding the issue of intensity in static EFEs, two studies exist that used morphed faces to understand the impact of alexithymia in the identification of static EFEs varying in emotional intensity. Nevertheless, they have the limitations of focusing mainly on alexithymia within the autistic population, reporting contrasting results. Specifically, the first study found alexithymia to be related to less precision, expressed as higher attribution threshold, in the identification of EFEs both in the autistic and control group (Cook et al., 2013). On the contrary, the second study found high levels of alexithymia to be related to reduced accuracy in identifying EFEs at low emotional intensity in the autistic but not in the control group (Ketelaars et al., 2016) raising the possibility that autism per se may represent a confounding factor contributing to the results. Given the inconsistency of results, it appears that further research is needed in order to understand the role of emotional intensity in the relationship between alexithymia and EFE identification. In addition, no study has investigated the impact of alexithymia in the identification of dynamic EFEs varying in emotional intensity. Nevertheless, static and dynamic faces appear to convey partially different types of information. Besides being more ecologically valid, dynamic stimuli convey additional temporal information regarding the change of emotional intensity over time (Kamachi et al., 2001), which is not available in static stimuli. This seems to contribute to enhanced perceived intensity of dynamic EFEs (Yoshikawa and Sato, 2008) and has been suggested to facilitate their identification (Sarkheil et al., 2013). 
In fact, neuroimaging studies have shown that recognizing dynamic as opposed to static morphed EFEs appears not only to enhance the activation of areas involved in affective processing, including the amygdala and fusiform gyrus (LaBar et al., 2003; Trautmann et al., 2009), but also to activate additional brain areas involved in motion processing, including pre- and post-central gyrus, known for sensory-motor integration of motion-related information (Sarkheil et al., 2013).

Given the current literature, the aim of the present study was to investigate the impact of emotional intensity in the relationship between alexithymia and EFE identification when presenting both static and dynamic EFEs. To this end, two experiments were conducted in which participants with low (LA) and high (HA) levels of alexithymia were tested in their ability to identify static (Experiment 1) or dynamic (Experiment 2) morphed EFEs, which ranged from neutral to intense emotional expression.

In both experiments, happy, fearful and disgusted EFEs were presented for several theoretical reasons. Firstly, both positively and negatively valenced emotions were included to understand if the effect of alexithymia may be valence or emotion related. Secondly, with regard to fear and disgust, these were included because neuroimaging and lesion studies indicate that identification of fearful and disgusted EFEs is related to functional and structural integrity of a circumscribed set of brain areas (Adolphs, 2002). Specifically, the amygdala appears to be a crucial structure in the recognition of fearful EFEs (Adolphs, 2002; Fusar-Poli et al., 2009) and lesion of this structure impairs their recognition (Adolphs et al., 1994, 1999), while the insula appears crucially involved in the recognition of disgusted EFEs (Adolphs, 2002; Fusar-Poli et al., 2009). Regarding alexithymia, aberrant activation of the amygdala (e.g., Kugel et al., 2008; Pouga et al., 2010; Wingbermühle et al., 2012; Moriguchi and Komaki, 2013; Jongen et al., 2014) and insula (e.g., Karlsson et al., 2008; Heinzel et al., 2010; Reker et al., 2010) has been found among the neural correlates underlying this condition (for a meta-analysis see van der Velde et al., 2013).

In Experiment 1, compared to LA, HA were hypothesized to need more emotional intensity to identify the emotion expressed by EFEs. On the contrary, in Experiment 2, the additional information inherent to dynamic – as opposed to static – EFEs might facilitate the task, enabling HA to overcome their difficulties. Therefore, in Experiment 2, differences in the emotional intensity needed by HA and LA to identify EFEs may or may not be evident.

Experiment 1

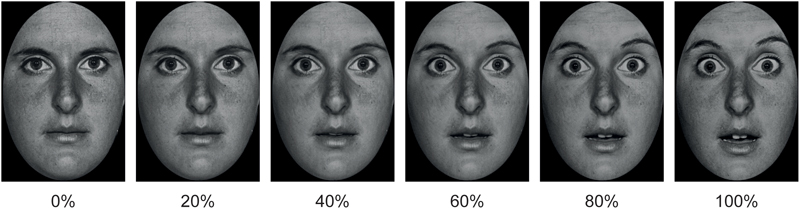

Participants were presented with pictures of static happy, disgusted and fearful EFEs. The emotion in each EFE could be expressed at 6 levels of emotional intensity: 0, 20, 40, 60, 80, and 100% (Figure 1). Participants were required to identify the emotion expressed by the EFE, by making a forced choice button press. In order to test differences between LA and HA, for each participant, expression identification rate for each EFE was calculated at each intensity level. Then, expression identification rates were fit to a psychometric function to calculate the percentage of emotional intensity at which participants had equal probability to identify the facial expression as neutral or emotional, i.e., point of subjective equality (PSE). Compared to LA, HA were hypothesized to need more emotional intensity to identify the presence of the emotional expression in the face, hence showing higher PSE.

FIGURE 1. Example of morphed pictures of fearful facial expressions used as EFEs ranging from 0 to 100% emotional intensity.

Methods

Participants

The study was designed and conducted in accordance with the ethical principles of the World Medical Association Declaration of Helsinki and the institutional guidelines of the University of Bologna and was approved by the Ethics Committee of the Department of Psychology. All participants gave informed written consent to participation after being informed about the procedure of the study.

Three-hundred university students completed the 20-item Toronto Alexithymia Scale (TAS-20; Taylor et al., 2003). Depending on the score, students were classified as LA (TAS-20 ≤ 36) or HA (TAS-20 ≥ 61) (Franz et al., 2004) and were then randomly contacted to participate in the study. Once in the laboratory, the alexithymia module of the structured interview for the Diagnostic Criteria for Psychosomatic Research (DCPR; Mangelli et al., 2006) was administered to increase reliability of screening and confirm TAS-20 classification. Participants with discordant classification on the two measures did not complete the task (n = 1). Due to the high co-occurrence of alexithymia and depression (Li et al., 2015), participants completed the Beck Depression Inventory (Beck et al., 1961) and did not complete the experimental task if their score was higher than the cut-off for severe depression (i.e., 28, n = 1). All participants had equivalent educational backgrounds and were students at the University of Bologna.
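The screening rule described above can be sketched in a few lines. This is only an illustration of the stated cut-offs (TAS-20 ≤ 36 for LA, ≥ 61 for HA) and exclusion criteria; the function names, the boolean encoding of the DCPR outcome, and the reading of "higher than the cut-off" as a strict inequality are assumptions made for the example, not the authors' procedure.

```python
from typing import Optional

LA_MAX = 36        # TAS-20 <= 36 -> low alexithymia (cut-off stated in the text)
HA_MIN = 61        # TAS-20 >= 61 -> high alexithymia (cut-off stated in the text)
BDI_SEVERE = 28    # BDI cut-off for severe depression stated in the text


def classify_tas20(score: int) -> Optional[str]:
    """Return 'LA', 'HA', or None for intermediate scores.

    The TAS-20 has 20 items scored 1-5, so valid totals lie in 20..100.
    """
    if not 20 <= score <= 100:
        raise ValueError("TAS-20 total must be between 20 and 100")
    if score <= LA_MAX:
        return "LA"
    if score >= HA_MIN:
        return "HA"
    return None


def is_eligible(tas_score: int, dcpr_alexithymic: bool, bdi_score: int) -> bool:
    """Hypothetical eligibility check combining the three screening steps.

    Assumes the DCPR interview outcome is coded as a boolean and that
    'higher than the cut-off' means bdi_score > 28.
    """
    group = classify_tas20(tas_score)
    if group is None:
        return False                      # intermediate scorers were not recruited
    if (group == "HA") != dcpr_alexithymic:
        return False                      # discordant TAS-20 / DCPR classification
    return bdi_score <= BDI_SEVERE
```

For instance, `is_eligible(63, True, 12)` describes a concordant HA participant without severe depression, whereas `is_eligible(63, False, 12)` describes a discordant classification.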

Forty volunteers with no history of major medical, neurological or psychiatric disorders completed the study: 20 LA (6 males; TAS-20 M = 30.25, SD = 4.12; age M = 24.55, SD = 2.98 years); 20 HA (6 males; TAS-20 M = 63.37, SD = 2.25; age M = 23.03, SD = 2.32 years). A priori targets for sample size and data collection stopping rule were based on sample and effect sizes reported in the literature on alexithymia and EFE identification (sample size of an average of 38 participants in total as indicated in a recent review (Grynberg et al., 2012)).

Independent Measure

Stimuli consisted of black and white photographs of 20 actors (10 males) with each actor depicting 3 EFEs, respectively of happiness, disgust and fear. Half of the pictures were taken from the Karolinska Directed Emotional Faces database (Lundqvist et al., 1998) and half from the Pictures of Facial Affect database (Ekman and Friesen, 1976). Pictures were trimmed to fit an ellipse in order to make them uniform and remove distracting features from the face, such as hair or ears and non-facial contours. Each emotional facial expression was then morphed with the neutral facial expression of the corresponding identity using Abrosoft FantaMorph (Abrosoft FantaMorph, 2009) in order to create stimuli of 20% increments of emotional intensity ranging from 0 to 100% emotional intensity. This resulted in a total of 360 stimuli (20 cm × 13 cm size), i.e., 20 actors expressing 3 emotions with 6 degrees of intensity (0, 20, 40, 60, 80, and 100%; Figure 1).

Procedure

The experiment took place in a sound attenuated room with dimmed light. Participants sat in a relaxed position on a comfortable chair in front of a computer monitor (17″, 60 Hz refresh rate) used for stimuli presentation at 57 cm distance. Each trial started with the presentation of a fixation cross (500 ms) in the center of the screen followed by the stimulus (100 ms) and subsequently a black screen (3000 ms) during which participants could provide the answer by pressing a key. The experiment consisted of 360 randomized trials divided into two blocks of 180 trials so that participants could rest if desired. Stimulus presentation time was chosen based on previous literature on EFE recognition, indicating 100 ms as a sufficiently long presentation time to identify EFEs reliably above chance level and without incurring ceiling effects (Calvo and Lundqvist, 2008; Calvo and Marrero, 2009).
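As an illustration of the trial structure, the sketch below enumerates the 20 actors × 3 emotions × 6 intensity levels, shuffles the resulting 360 trials and splits them into two blocks of 180. The actor identifiers, the `build_blocks` name and the use of a seeded shuffle are assumptions for the example; the presentation software actually used is not specified in the text.

```python
import itertools
import random

ACTORS = range(1, 21)                            # 20 actors (hypothetical IDs 1..20)
EMOTIONS = ("happiness", "disgust", "fear")      # 3 emotional expressions
INTENSITIES = (0, 20, 40, 60, 80, 100)           # 6 morph levels, in percent

# Trial timing reported in the Procedure, in milliseconds
FIXATION_MS, STIMULUS_MS, RESPONSE_WINDOW_MS = 500, 100, 3000


def build_blocks(seed=None, n_blocks=2):
    """Return the full randomized trial list split into equally sized blocks."""
    trials = list(itertools.product(ACTORS, EMOTIONS, INTENSITIES))
    rng = random.Random(seed)                    # seeded only for reproducibility
    rng.shuffle(trials)
    size = len(trials) // n_blocks
    return [trials[i * size:(i + 1) * size] for i in range(n_blocks)]


blocks = build_blocks(seed=1)
```

Each element of a block is an `(actor, emotion, intensity)` tuple, so `len(blocks[0])` and `len(blocks[1])` both equal 180.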

Participants were instructed that at each trial a face would briefly appear on the screen and their task would be to identify the emotion expressed by the face by pressing one of four keys with their index and middle finger of either hand. These were labeled “N” for neutral (i.e., Italian = “neutro”), “F” for happiness (i.e., Italian = “felicità”), “P” for fear (i.e., Italian = “paura”) and “D” for disgust (i.e., Italian = “disgusto”). Before beginning the task, participants familiarized themselves with the position of the keys: the experimenter called out the keys in random order and participants pressed them until they felt confident they could press them correctly while fixating the screen. The order of keys was counterbalanced among participants.

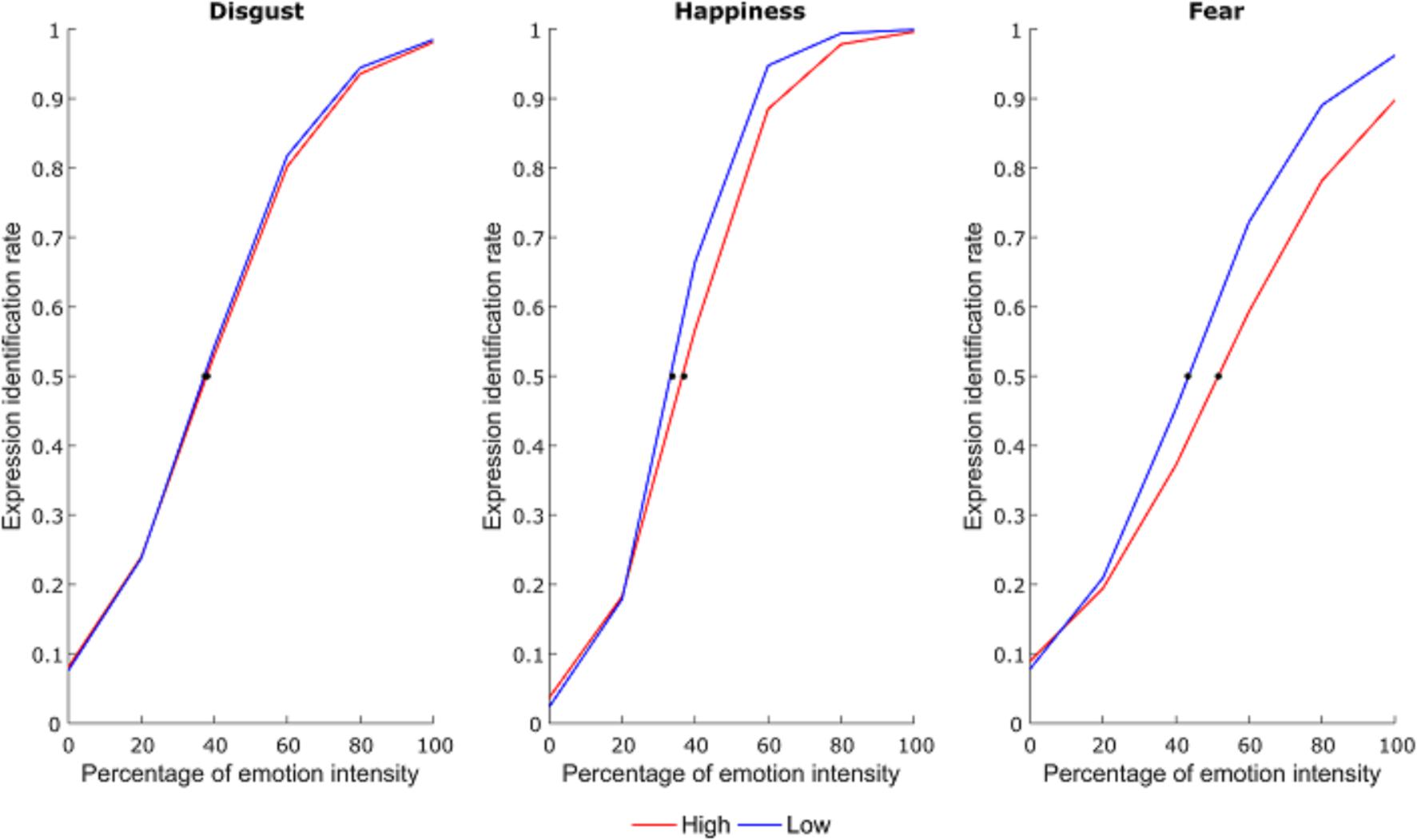

Dependent Measure

Correct responses for each emotional facial expression were used to calculate the mean expression identification rate at each intensity level. Then, for each subject, expression identification rates for each emotional facial expression were fit to a psychometric function using a generalized linear model with a binomial distribution in MATLAB software (MathWorks, Natick, MA, United States) (Nakajima et al., 2017). The point of subjective equality (PSE) was then calculated and used for statistical analysis. This represented the percentage of emotional intensity at which subjects had equal probability to identify the facial expression as neutral or emotional (Figure 2).
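To make the PSE computation concrete, the following is a minimal sketch of fitting a two-parameter logistic psychometric function by maximum likelihood (a binomial GLM with a logit link) and reading off the intensity at which the fitted probability equals 0.5. The authors fitted their model in MATLAB; the logit link, the pure-Python Newton-Raphson fit, the function names and the response counts below are all assumptions made for illustration.

```python
import math


def fit_logistic(x, k, n, max_iter=100, tol=1e-10):
    """Fit p(x) = 1 / (1 + exp(-(b0 + b1 * x))) to binomial counts.

    x: intensity levels; k: emotional responses per level; n: trials per level.
    Uses Newton-Raphson on the binomial log-likelihood.
    """
    b0 = b1 = 0.0
    for _ in range(max_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, ki, ni in zip(x, k, n):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = ni * p * (1.0 - p)        # binomial variance weight
            r = ki - ni * p               # observed minus expected count
            g0 += r
            g1 += r * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if det <= 0.0:
            raise ValueError("degenerate design or perfectly separated data")
        d0 = (h11 * g0 - h01 * g1) / det  # Newton step for the intercept
        d1 = (h00 * g1 - h01 * g0) / det  # Newton step for the slope
        b0 += d0
        b1 += d1
        if abs(d0) + abs(d1) < tol:
            break
    return b0, b1


def pse(b0, b1):
    """Intensity at which the fitted probability is 0.5, i.e. b0 + b1 * x = 0."""
    return -b0 / b1


# Hypothetical counts for one participant and one emotion (20 trials per level)
levels = [0, 20, 40, 60, 80, 100]
emotional_responses = [1, 3, 9, 16, 19, 20]
b0, b1 = fit_logistic(levels, emotional_responses, [20] * 6)
participant_pse = pse(b0, b1)
```

A higher PSE means that the curve is shifted to the right, that is, more emotional intensity is needed before the face is labeled as emotional rather than neutral.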

FIGURE 2. Average psychometric function for each emotional expression as a function of group. The dots represent the point of subjective equality (PSE).

Results and Discussion

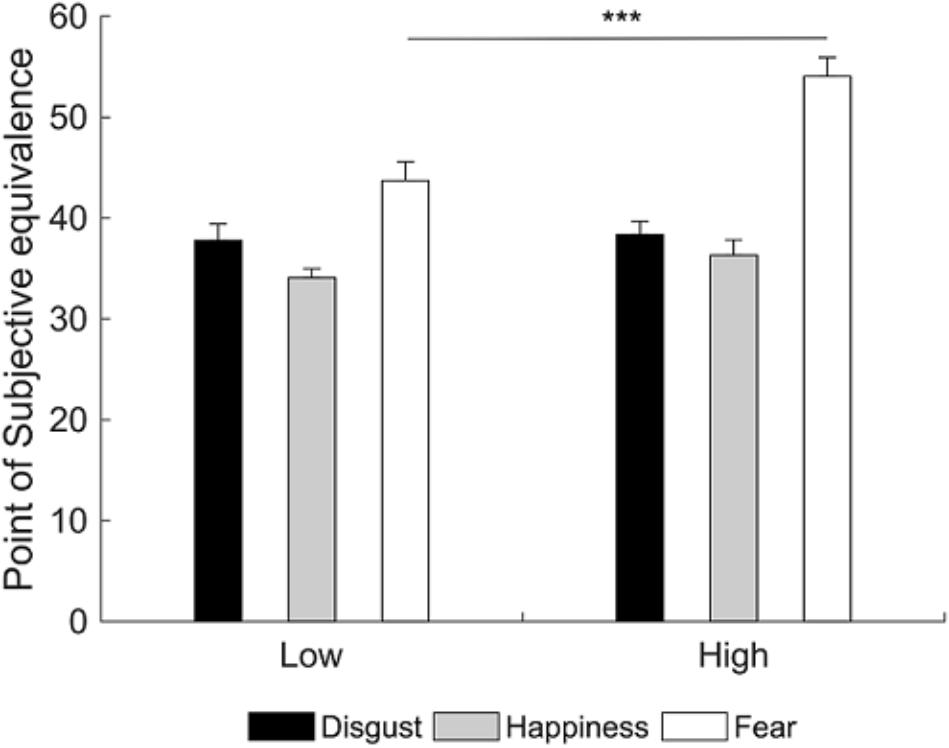

A 3×2 repeated measures analysis of variance (RM ANOVA; emotion: happiness, disgust, fear; group: LA, HA) on PSE scores showed a significant main effect of group [F(1,38) = 5.38, p = 0.026, ηp² = 0.12] and emotion [F(2,76) = 35.75, p < 0.001, ηp² = 0.48]. More importantly, there was a group by emotion interaction [F(2,76) = 4.69, p = 0.012, ηp² = 0.11]. Newman-Keuls post hoc test showed that HA had higher PSE compared to LA only for the fearful emotional facial expression (fear: p < 0.001, MHA = 54.05, MLA = 43.71; disgust: p = 0.832, MHA = 38.36, MLA = 37.78; happiness: p = 0.415, MHA = 36.32, MLA = 34.08). Therefore, HA needed more emotional intensity to identify fearful facial expressions compared to LA (Figure 3).
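The effect sizes reported next to each F statistic are consistent with partial eta squared, which can be recovered from F and its degrees of freedom as F·df1 / (F·df1 + df2). The short check below applies this standard identity to the three reported tests; the function name is chosen for the example only.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error) as reported for Experiment 1
reported = {
    "group": (5.38, 1, 38),
    "emotion": (35.75, 2, 76),
    "group x emotion": (4.69, 2, 76),
}

effect_sizes = {name: round(partial_eta_squared(*stats), 2)
                for name, stats in reported.items()}
```

The rounded values in `effect_sizes` can be compared directly with the effect sizes given in the text.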

FIGURE 3. Mean point of subjective equality (PSE) for each emotional facial expression as a function of group. Participants with high alexithymia have higher PSE than those with low alexithymia for the fearful facial expression, indicating they need more emotional intensity to identify fearful facial expressions. Error bars represent standard errors. Significant differences are indicated as follows: ∗∗∗p < 0.001.

In summary, results showed that HA required more emotional intensity to identify the presence of fear expression in the face compared to LA. Crucially, while previous studies showed that HA need more time to identify EFEs as efficiently as LA (Grynberg et al., 2012), the present study extends the current literature suggesting that HA also need more perceptual information, specifically to identify fearful EFEs.

Experiment 2

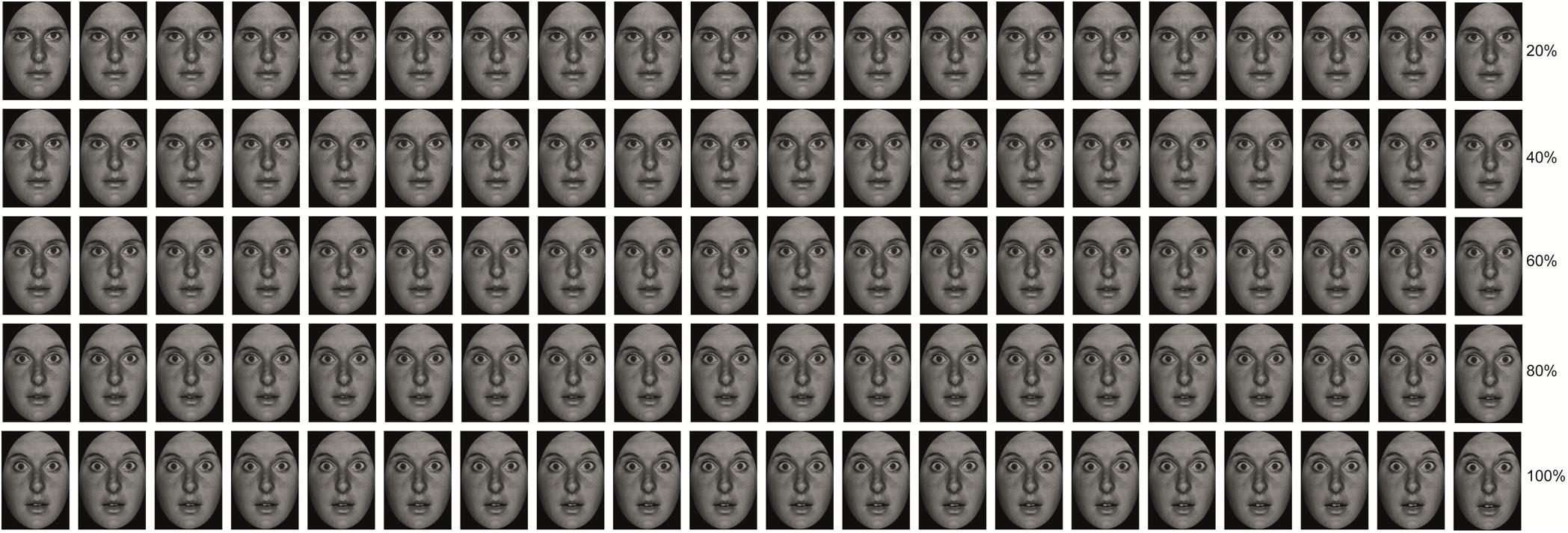

Participants were presented with videos of dynamic happy, disgusted and fearful EFEs, which started at 0% emotional intensity and terminated at 100% emotional intensity (Figure 4). Participants were required to identify the emotion expressed by the EFE, by making a forced choice button press, which would also terminate video presentation. Participants responded as soon as they recognized the emotion, without necessarily waiting for termination of the video. In order to test differences between LA and HA, accuracy and reaction times (RTs) for accurate responses were calculated. Here, RTs also represented the percentage of emotional intensity at which participants identified the emotion displayed by the face. Therefore, differences in RTs indicated differences in the percentage of emotional intensity needed to identify the emotion expressed by the EFE. Contrary to Experiment 1, here, differences in the emotional intensity needed by HA and LA to identify EFEs may or may not be evident, given that dynamic EFEs may be easier to identify than static ones.

FIGURE 4. Example of morphed pictures of fearful facial expressions used to create the dynamic EFEs ranging from 0 to 100% emotional intensity.

Methods

Participants

Recruitment of participants followed the same procedure as Experiment 1. Two participants did not take part in the experimental task because their TAS-20 classification was not confirmed by their DCPR score. No participant reported a severe level of depression on the BDI.

Forty volunteers with no history of major medical, neurological or psychiatric disorders completed the study: 20 LA participants (8 males; TAS-20 M = 31.29, SD = 3.23; age M = 22.89 years, SD = 2.00 years) and 20 HA participants (8 males; TAS-20 M = 64.84, SD = 4.14; age M = 22.84 years, SD = 1.93 years).

Independent Measure

Stimuli consisted of black and white photographs of 10 actors (5 men) with each actor depicting 3 EFEs, respectively of happiness, disgust and fear. Pictures were chosen from the Pictures of Facial Affect database (Ekman and Friesen, 1976) and trimmed to fit an ellipse in order to make the stimuli uniform and remove distracting features from the face such as hair or ears and non-facial contours. Each emotional facial expression was then morphed with the neutral facial expression of the corresponding identity using Abrosoft FantaMorph (Abrosoft FantaMorph, 2009) in order to create videos of 1% increments of emotional intensity ranging from 0 to 100% of emotional intensity. Each increment lasted 1 s, resulting in a video with a total duration of 100 s (Figure 4). This resulted in a total of 30 stimuli (20 cm × 13 cm size), i.e., 10 actors expressing 3 emotions.

Procedure

The experiment took place in a sound attenuated room with dimmed light. Participants sat in a relaxed position on a comfortable chair in front of a computer monitor (17″, 60 Hz refresh rate) used for stimuli presentation at a distance of 57 cm. The experiment consisted of 30 randomized trials, each showing a dynamic facial expression changing from neutral to fear, happiness or disgust. Each trial started with the presentation of a fixation cross (3000 ms) in the center of the screen followed by the presentation of the dynamic stimulus with the duration of 100 s.

Participants were instructed that at each trial a video of a face ranging from neutral to emotional would appear on the screen and their task would be to press one of three keys (D, J, or K) as soon as they recognized the emotion expressed by the face, without having to wait for termination of the video. The keys were labeled “F” for happiness (i.e., Italian = “felicità”), “D” for disgust (i.e., Italian = “disgusto”) and “P” for fear (i.e., Italian = “paura”). Participants used the index and middle fingers of the right hand and the index finger of the left hand to press the keys. The order of keys was counterbalanced between participants. Key press terminated video presentation allowing the task to proceed to the next trial.

Dependent Measure

Accuracy (i.e., percentage of correct responses) and RTs for accurate responses were calculated. It should be noted that RTs also represented the percentage of emotional intensity at which participants identified the emotion displayed by the face. Because each 1% increment of emotional intensity lasted 1 s, an average RT of 30000 ms, for example, indicated that, on average, a participant correctly identified the emotion displayed by the face when this was expressed at 30% emotional intensity. Therefore, differences in RTs indicated differences in the percentage of emotional intensity needed to identify the emotion expressed by the EFE.
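The mapping from RT to emotional intensity follows directly from the video construction described in the Independent Measure section (1% of intensity per second, 100 s in total). A minimal sketch of that conversion is given below; the function name, the clamping at 100% and the example RT are assumptions for illustration.

```python
def rt_to_intensity(rt_ms, ms_per_percent=1000.0, max_intensity=100.0):
    """Convert a reaction time (ms from video onset) to percent emotional intensity.

    Assumes intensity rises linearly by 1% every `ms_per_percent` milliseconds
    and stays at `max_intensity` once the video has reached its final frame.
    """
    if rt_ms < 0:
        raise ValueError("reaction time cannot be negative")
    return min(rt_ms / ms_per_percent, max_intensity)


# Hypothetical response given 25 s after video onset
intensity_at_response = rt_to_intensity(25000)
```

Under these assumptions, a difference of 1000 ms between two mean RTs corresponds to a difference of one percentage point of emotional intensity.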

Results and Discussion

The 3×2 RM ANOVA (emotion: happiness, disgust, fear; group: LA, HA) on accuracy revealed a significant main effect of emotion [F(2,76) = 13.83; p < 0.001; ηp² = 0.27]. Newman-Keuls post hoc test showed that participants were more accurate in identifying happiness (M = 96.05%) than fear (p = 0.003; M = 90.75%) and disgust (p < 0.001; M = 86.84%) and were more accurate in identifying fear than disgust (p = 0.029). Results showed no significant main effect or interaction with the factor group (all p-values ≥ 0.669) indicating that the two groups exhibited comparable accuracy in identifying the emotion expressed by dynamic faces.

Similarly, the 3×2 RM ANOVA (emotion: happiness, disgust, fear; group: LA, HA) on RTs revealed a significant main effect of emotion [F(2,76) = 78.22; p < 0.001; ηp² = 0.67]. Newman-Keuls post hoc test showed that participants were faster in identifying happiness (M = 24770 ms) than fear (p < 0.001; M = 32623 ms) and disgust (p < 0.001; M = 36549 ms) and were faster in identifying fear than disgust (p < 0.001). Results showed no significant main effect or interaction with the factor group (all p-values ≥ 0.142) indicating that the two groups required comparable time to identify the emotion expressed by dynamic faces. Because RTs also represent the percentage of emotional intensity at which participants recognized the emotion, these results also show that the groups required a comparable amount of emotional intensity to identify the emotion expressed by the face.

Contrary to Experiment 1, results of Experiment 2 show no significant difference between LA and HA in accuracy and RTs when identifying the emotion expressed by dynamic morphed faces.

General Discussion

The aim of the present study was to investigate the role of alexithymia in identifying the emotional expression of static and dynamic EFEs ranging from neutral to intense emotional expression, in order to test whether or not HA need more emotional intensity to identify EFEs. In fact, previous studies have focused on manipulating presentation time of intense static EFEs, revealing that HA need more time to identify EFEs, compared to LA (Grynberg et al., 2012). Here, instead, we manipulated emotional intensity of static and dynamic EFEs. Under these conditions we showed that HA need more emotional intensity to identify static fearful EFEs, compared to LA. Nevertheless, when the groups were faced with dynamic EFEs, no significant difference was found in performance, with groups requiring a comparable amount of emotional intensity to identify the EFEs.

In Experiment 1, the difficulty in processing fearful EFEs is in line with previous literature, which found that the difficulty of alexithymic individuals in fear processing is not limited to EFE labeling (Jessimer and Markham, 1997; Lane et al., 2000; Montebarocci et al., 2010) but extends across a broad range of stimuli, tasks and dependent measures. For example, compared to LA, HA rate the expression of fearful but not other EFEs as less intense (Prkachin et al., 2009). In addition, HA show impairment in embodied aspects of fearful stimuli processing. This is evidenced by reduced rapid facial mimicry in response to static fearful faces (Sonnby-Borgstrom, 2009; Scarpazza et al., 2018), failure to show enhanced perception of tactile stimuli delivered to their face while observing a fearful – as opposed to happy or neutral – face being simultaneously touched (Scarpazza et al., 2014, 2015) and reduced skin conductance response when viewing a conditioned stimulus predictive of a shock during classical fear conditioning (Starita et al., 2016). Finally, HA show impairments in processing fearful stimuli also when examining their electrophysiological responses. Compared to LA, HA fail to show enhanced amplitude of the N190 event related potential, during visual encoding of fearful – as opposed to happy or neutral – body postures (Borhani et al., 2016). This general difficulty in fear processing has been interpreted in light of the decreased activation of the amygdala observed in alexithymia in response to the presentation of EFEs (Kugel et al., 2008; Jongen et al., 2014), in particular fearful ones (Pouga et al., 2010), and negative emotional stimuli (Wingbermühle et al., 2012; van der Velde et al., 2013), such as observing a painful stimulation being delivered to someone’s hand (Moriguchi and Komaki, 2013). Although involved in processing EFEs in general (Fusar-Poli et al., 2009), the amygdala appears to be a crucial structure in processing fearful EFEs (Adolphs et al., 1994, 1999).
Therefore, it is possible that a reduced response in the amygdala in HA may underlie the present results, though future studies using neuroimaging techniques should be conducted to test this hypothesis.

In contrast to the difference found in response to fearful EFEs, no difference between the groups was found when identifying happy or disgusted facial expressions. In this regard, previous behavioral studies on EFE processing have reported mixed results. For example, in Prkachin et al. (2009), though HA showed reduced sensitivity for matching sad, angry and fearful faces to the corresponding target EFE, they showed no significant difference from LA when matching happy, disgusted or surprised EFEs; in addition, they were able to recognize all EFEs during a non-speeded task and rated the intensity of happy and disgusted EFEs similarly to LA. On the contrary, other labeling studies found that alexithymia was related to a global deficit in recognizing EFEs, including happiness and disgust (Jessimer and Markham, 1997; Lane et al., 2000; Montebarocci et al., 2010). Given the contrasting results, alexithymia may affect processing of happy and disgusted EFEs depending on the experimental conditions. Specifically, the present results seem to suggest that while HA require more emotional intensity to identify static fearful EFEs, they may not have such a need in the identification of happy and disgusted EFEs.

Contrary to Experiment 1, when dynamic morphed faces were presented in Experiment 2, no difference was found between the two groups in EFE recognition. This result may be related to the type of information conveyed by dynamic as opposed to static stimuli. Indeed, the intensification of emotional expression over time provides additional structural and configurational information, which is not available in static stimuli (Kamachi et al., 2001) and which seems to contribute to differential processing of the two types of stimuli. For example, dynamic EFEs are perceived as more intense than static ones even when the stimulus emotional intensity is the same (Yoshikawa and Sato, 2008; Rymarczyk et al., 2011; Rymarczyk et al., 2016a,b). In addition, dynamic EFEs trigger stronger facial mimicry compared to static faces (Sato et al., 2008; Rymarczyk et al., 2011, 2016a,b). Finally, recognizing dynamic as opposed to static morphed EFEs activates an extended neural network comprising not only areas involved in affective processing (LaBar et al., 2003; Sato et al., 2004), but also motion processing (Sarkheil et al., 2013). It is possible that the involvement of such additional mechanisms during the identification of dynamic EFEs might have facilitated the task and led to the absence of significant differences in performance between HA and LA. Future studies should investigate this hypothesis and in particular test whether reduced facial mimicry found in HA in response to static fearful EFEs (Sonnby-Borgstrom, 2009; Scarpazza et al., 2018) may be restored by the presentation of dynamic EFEs and be related to improvement in dynamic EFE identification. 
Additionally, the comparable performance of HA and LA in dynamic EFE identification highlights the subclinical nature of alexithymia, further supporting the notion that the difficulties of HA in EFE identification become evident only under specific task conditions (Grynberg et al., 2012) and may not necessarily be evident in their everyday life.

To conclude, the present study shows that high – as opposed to low – levels of alexithymia are related to the need for more emotional intensity to perceive fear in static EFEs. On the contrary, no significant difference in performance was found when individuals with high and low levels of alexithymia were faced with dynamic EFEs, possibly due to the additional structural and configurational information regarding the change of emotional intensity over time (Kamachi et al., 2001), which may have facilitated emotion identification. Given that partially different brain networks are involved in processing the two types of stimuli, future studies should use neuroimaging techniques to elucidate the neural mechanisms underlying the current behavioral results.

Availability of Data

The raw data supporting the conclusions of this manuscript will be made available by the authors, without undue reservation, to any qualified researcher.

Author Contributions

FS, KB, CB, and CS conceived and designed the study and critically revised the manuscript for important intellectual content. FS, KB, and CB acquired and analyzed the data and drafted the manuscript.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: Preparation of this manuscript was supported by Grant PRIN (Protocol: PRIN2015 NA455) from Ministero dell’Istruzione, dell’Università e della Ricerca awarded to Elisabetta Làdavas.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Francesca Salucci, Giada Sberlati, Anna Carlotta Grassi and Nicoletta Russo for their assistance with data collection and Marta de Haro for her help with data processing. The authors would also like to thank Prof. Elisabetta Làdavas for her comments during manuscript preparation.

References

Abrosoft FantaMorph (2009). Abrosoft (Version 4.1). Available at: www.fantamorph.com

Adolphs, R. (2002). Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav. Cogn. Neurosci. Rev. 1, 21–62. doi: 10.1177/1534582302001001003

Adolphs, R., Tranel, D., Damasio, H., and Damasio, A. (1994). Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372, 669–672. doi: 10.1038/372669a0

Adolphs, R., Tranel, D., Hamann, S., Young, A. W., Calder, A. J., Phelps, E. A., et al. (1999). Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia 37, 1111–1117. doi: 10.1016/S0028-3932(99)00039-1

Beck, A. T., Ward, C. H., Mendelson, M., Mock, J., and Erbaugh, J. (1961). An inventory for measuring depression. Arch. Gen. Psychiatry 4, 561–571.

Borhani, K., Borgomaneri, S., Làdavas, E., and Bertini, C. (2016). The effect of alexithymia on early visual processing of emotional body postures. Biol. Psychol. 115, 1–8. doi: 10.1016/j.biopsycho.2015.12.010

Calder, A. J., Young, A. W., Perrett, D. I., Etcoff, N. L., and Rowland, D. (1996). Categorical perception of morphed facial expressions. Vis. Cogn. 3, 81–118. doi: 10.1080/713756735

Calvo, M. G., and Lundqvist, D. (2008). Facial expressions of emotion (KDEF): identification under different display-duration conditions. Behav. Res. Methods 40, 109–115. doi: 10.3758/BRM.40.1.109

Calvo, M. G., and Marrero, H. (2009). Visual search of emotional faces: the role of affective content and featural distinctiveness. Cogn. Emot. 23, 782–806. doi: 10.1080/02699930802151654

Cook, R., Brewer, R., Shah, P., and Bird, G. (2013). Alexithymia, not autism, predicts poor recognition of emotional facial expressions. Psychol. Sci. 24, 723–732. doi: 10.1177/0956797612463582

Demenescu, L. R., Kortekaas, R., den Boer, J. A., and Aleman, A. (2010). Impaired attribution of emotion to facial expressions in anxiety and major depression. PLoS One 5:e15058. doi: 10.1371/journal.pone.0015058

Ekman, P., and Friesen, W. (1976). Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press.

Franz, M., Schaefer, R., Schneider, C., Sitte, W., and Bachor, J. (2004). Visual event-related potentials in subjects with alexithymia: Modified processing of emotional aversive information? Am. J. Psychiatry 161, 728–735. doi: 10.1176/appi.ajp.161.4.728

Fusar-Poli, P., Placentino, A., Carletti, F., Landi, P., Allen, P., Surguladze, S., et al. (2009). Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci. 34, 418–432.

Grynberg, D., Chang, B., Corneille, O., Maurage, P., Vermeulen, N., Berthoz, S., et al. (2012). Alexithymia and the processing of emotional facial expressions (EFEs): systematic review, unanswered questions and further perspectives. PLoS One 7:e42429. doi: 10.1371/journal.pone.0042429

Heinzel, A., Schäfer, R., Müller, H., Schieffer, A., Ingenhag, A., Northoff, G., et al. (2010). Differential modulation of valence and arousal in high-alexithymic and low-alexithymic individuals. Neuroreport 21, 998–1002. doi: 10.1097/WNR.0b013e32833f38e0

Ihme, K., Sacher, J., Lichev, V., Rosenberg, N., Kugel, H., Rufer, M., et al. (2014a). Alexithymia and the labeling of facial emotions: response slowing and increased motor and somatosensory processing. BMC Neurosci. 15:40. doi: 10.1186/1471-2202-15-40

Ihme, K., Sacher, J., Lichev, V., Rosenberg, N., Kugel, H., Rufer, M., et al. (2014b). Alexithymic features and the labeling of brief emotional facial expressions – An fMRI study. Neuropsychologia 64, 289–299. doi: 10.1016/j.neuropsychologia.2014.09.044

Jessimer, M., and Markham, R. (1997). Alexithymia: a right hemisphere dysfunction specific to recognition of certain facial expressions? Brain Cogn. 34, 246–258. doi: 10.1006/brcg.1997.0900

Jongen, S., Axmacher, N., Kremers, N. A. W., Hoffmann, H., Limbrecht-Ecklundt, K., Traue, H. C., et al. (2014). An investigation of facial emotion recognition impairments in alexithymia and its neural correlates. Behav. Brain Res. 271, 129–139. doi: 10.1016/j.bbr.2014.05.069

Kamachi, M., Bruce, V., Mukaida, S., Gyoba, J., Yoshikawa, S., and Akamatsu, S. (2001). Dynamic properties influence the perception of facial expressions. Perception 30, 875–887.

Karlsson, H., Näätänen, P., and Stenman, H. (2008). Cortical activation in alexithymia as a response to emotional stimuli. Br. J. Psychiatry 192, 32–38. doi: 10.1192/bjp.bp.106.034728

Ketelaars, M. P., In’t Velt, A., Mol, A., Swaab, H., and van Rijn, S. (2016). Emotion recognition and alexithymia in high functioning females with autism spectrum disorder. Res. Autism Spectr. Disord. 21, 51–60. doi: 10.1016/j.rasd.2015.09.006

Kohler, C. G., Walker, J. B., Martin, E. A., Healey, K. M., and Moberg, P. J. (2009). Facial emotion perception in schizophrenia: a meta-analytic review. Schizophr. Bull. 36, 1009–1019. doi: 10.1093/schbul/sbn192

Kugel, H., Eichmann, M., Dannlowski, U., Ohrmann, P., Bauer, J., Arolt, V., et al. (2008). Alexithymic features and automatic amygdala reactivity to facial emotion. Neurosci. Lett. 435, 40–44. doi: 10.1016/j.neulet.2008.02.005

LaBar, K. S., Crupain, M. J., Voyvodic, J. T., and McCarthy, G. (2003). Dynamic perception of facial affect and identity in the human brain. Cereb. Cortex 13, 1023–1033. doi: 10.1093/cercor/13.10.1023

Lane, R. D., Lee, S., Reidel, R., Weldon, V. B., Kaszniak, A., and Schwartz, G. E. (1996). Impaired verbal and nonverbal emotion recognition in alexithymia. Psychosom. Med. 58, 203–210.

Lane, R. D., Sechrest, L., Riedel, R., Shapiro, D. E., and Kaszniak, A. W. (2000). Pervasive emotion recognition deficit common to alexithymia and the repressive coping style. Psychosom. Med. 62, 492–501.

Li, S., Zhang, B., Guo, Y., and Zhang, J. (2015). The association between alexithymia as assessed by the 20-item Toronto alexithymia scale and depression: a meta-analysis. Psychiatry Res. 227, 1–9. doi: 10.1016/j.psychres.2015.02.006

Lundqvist, D., Flykt, A., and Öhman, A. (1998). The Karolinska directed emotional faces (KDEF): CD ROM from Department of Clinical Neuroscience. Solna: Karolinska Institutet.

Mangelli, L., Semprini, F., Sirri, L., Fava, G. A., and Sonino, N. (2006). Use of the diagnostic criteria for psychosomatic research (DCPR) in a community sample. Psychosomatics 47, 143–146. doi: 10.1176/appi.psy.47.2.143

Montebarocci, O., Surcinelli, P., Rossi, N., and Baldaro, B. (2010). Alexithymia, verbal ability and emotion recognition. Psychiatr. Q. 82, 245–252. doi: 10.1007/s11126-010-9166-7

Moriguchi, Y., and Komaki, G. (2013). Neuroimaging studies of alexithymia: physical, affective, and social perspectives. Biopsychosoc. Med. 7:8. doi: 10.1186/1751-0759-7-8

Nakajima, K., Minami, T., and Nakauchi, S. (2017). Interaction between facial expression and color. Sci. Rep. 7:41019. doi: 10.1038/srep41019

Pandey, R., and Mandal, M. K. (1997). Processing of facial expressions of emotion and alexithymia. Br. J. Clin. Psychol. 36, 631–633. doi: 10.1111/j.2044-8260.1997.tb01269.x

Pouga, L., Berthoz, S., de Gelder, B., and Grèzes, J. (2010). Individual differences in socioaffective skills influence the neural bases of fear processing: the case of alexithymia. Hum. Brain Mapp. 31, 1469–1481. doi: 10.1002/hbm.20953

Prkachin, G. C., Casey, C., and Prkachin, K. M. (2009). Alexithymia and perception of facial expressions of emotion. Pers. Individ. Dif. 46, 412–417. doi: 10.1016/j.paid.2008.11.010

Reker, M., Ohrmann, P., Rauch, A. V., Kugel, H., Bauer, J., Dannlowski, U., et al. (2010). Individual differences in alexithymia and brain response to masked emotion faces. Cortex 46, 658–667. doi: 10.1016/j.cortex.2009.05.008

Rymarczyk, K., Biele, C., Grabowska, A., and Majczynski, H. (2011). EMG activity in response to static and dynamic facial expressions. Int. J. Psychophysiol. 79, 330–333. doi: 10.1016/j.ijpsycho.2010.11.001

Rymarczyk, K., Żurawski, Ł., Jankowiak-Siuda, K., and Szatkowska, I. (2016a). Do dynamic compared to static facial expressions of happiness and anger reveal enhanced facial mimicry? PLoS One 11:e0158534. doi: 10.1371/journal.pone.0158534

Rymarczyk, K., Żurawski, Ł., Jankowiak-Siuda, K., and Szatkowska, I. (2016b). Emotional empathy and facial mimicry for static and dynamic facial expressions of fear and disgust. Front. Psychol. 7:1853. doi: 10.3389/fpsyg.2016.01853

Sarkheil, P., Goebel, R., Schneider, F., and Mathiak, K. (2013). Emotion unfolded by motion: a role for parietal lobe in decoding dynamic facial expressions. Soc. Cogn. Affect. Neurosci. 8, 950–957. doi: 10.1093/scan/nss092

Sato, W., Fujimura, T., and Suzuki, N. (2008). Enhanced facial EMG activity in response to dynamic facial expressions. Int. J. Psychophysiol. 70, 70–74. doi: 10.1016/j.ijpsycho.2008.06.001

Sato, W., Kochiyama, T., Yoshikawa, S., Naito, E., and Matsumura, M. (2004). Enhanced neural activity in response to dynamic facial expressions of emotion: an fMRI study. Cogn. Brain Res. 20, 81–91. doi: 10.1016/j.cogbrainres.2004.01.008

Scarpazza, C., di Pellegrino, G., and Làdavas, E. (2014). Emotional modulation of touch in alexithymia. Emotion 14, 602–610. doi: 10.1037/a0035888

Scarpazza, C., Làdavas, E., and Cattaneo, L. (2018). Invisible side of emotions: somato-motor responses to affective facial displays in alexithymia. Exp. Brain Res. 236, 195–206. doi: 10.1007/s00221-017-5118-x

Scarpazza, C., Làdavas, E., and di Pellegrino, G. (2015). Dissociation between emotional remapping of fear and disgust in alexithymia. PLoS One 10:e0140229. doi: 10.1371/journal.pone.0140229

Sifneos, P. E. (1973). The prevalence of “alexithymic” characteristics in psychosomatic patients. Psychother. Psychosom. 22, 255–262.

Sonnby-Borgstrom, M. (2009). Alexithymia as related to facial imitation, mentalization, empathy and internal working models-of-self and others. Neuropsychoanalysis 11, 111–128. doi: 10.1080/15294145.2009.10773602

Starita, F., Làdavas, E., and di Pellegrino, G. (2016). Reduced anticipation of negative emotional events in alexithymia. Sci. Rep. 6:27664. doi: 10.1038/srep27664

Taylor, G. J., Bagby, R. M., and Parker, J. D. A. (1991). The alexithymia construct: a potential paradigm for psychosomatic medicine. Psychosomatics 32, 153–164. doi: 10.1016/S0033-3182(91)72086-0

Taylor, G. J., Bagby, R. M., and Parker, J. D. A. (2003). The 20-item Toronto alexithymia scale: IV. Reliability and factorial validity in different languages and cultures. J. Psychosom. Res. 55, 277–283. doi: 10.1016/S0022-3999(02)00601-3

Trautmann, S. A., Fehr, T., and Herrmann, M. (2009). Emotion in motion: dynamic compared to static facial expressions of disgust and happiness revealed more widespread emotion-specific activations. Brain Res. 1184, 100–115. doi: 10.1016/j.brainres.2009.05.075

van der Velde, J., Servaas, M. N., Goerlich, K. S., Bruggeman, R., Horton, P., Costafreda, S. G., et al. (2013). Neural correlates of alexithymia: a meta-analysis of emotion processing studies. Neurosci. Biobehav. Rev. 37, 1774–1785. doi: 10.1016/j.neubiorev.2013.07.008

Wells, L. J., Gillespie, S. M., and Rotshtein, P. (2016). Identification of emotional facial expressions: effects of expression, intensity, and sex on eye gaze. PLoS One 11:e0168307. doi: 10.1371/journal.pone.0168307

Willis, M. L., Palermo, R., McGrillen, K., and Miller, L. (2014). The nature of facial expression recognition deficits following orbitofrontal cortex damage. Neuropsychology 28, 613–623. doi: 10.1037/neu0000059

Wingbermühle, E., Theunissen, H., Verhoeven, W. M., Kessels, R. P., and Egger, J. I. (2012). The neurocognition of alexithymia: evidence from neuropsychological and neuroimaging studies. Acta Neuropsychiatr. 24, 67–80. doi: 10.1111/j.1601-5215.2011.00613.x

Keywords: alexithymia, emotional facial expressions, morphing, dynamic facial expressions, fear

Citation: Starita F, Borhani K, Bertini C and Scarpazza C (2018) Alexithymia Is Related to the Need for More Emotional Intensity to Identify Static Fearful Facial Expressions. Front. Psychol. 9:929. doi: 10.3389/fpsyg.2018.00929

Received: 06 February 2018; Accepted: 22 May 2018; Published: 11 June 2018.

Edited by:

Lorys Castelli, Università degli Studi di Torino, Italy

Reviewed by:

Maria Stella Epifanio, Università degli Studi di Palermo, Italy

Megan L. Willis, Australian Catholic University, Australia

Copyright © 2018 Starita, Borhani, Bertini and Scarpazza. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Cristina Scarpazza, cristina.scarpazza@gmail.com; cristina.scarpazza@unipd.it

†Present address: Khatereh Borhani, Institute for Cognitive and Brain Sciences, Shahid Beheshti University, Tehran, Iran
