- ¹Tuscany Rehabilitation Clinic, Montevarchi, Italy

- ²Adult Mental Health Service, NHS-USL Tuscany South-Est, Grosseto, Italy

- ³Global Brain Health Institute, Trinity College Institute of Neuroscience, Trinity College Dublin, The University of Dublin, Dublin, Ireland

- ⁴Physical and Rehabilitative Medicine Unit, NHS-USL Tuscany South-Est, Grosseto, Italy

Background: Existing literature suggests that age affects recognition of affective facial expressions. Eye-tracking studies highlighted that age-related differences in recognition of emotions could be explained by different face exploration patterns due to attentional impairment. Gender also seems to play a role in recognition of emotions. Unfortunately, little is known about the differences in emotion perception abilities across lifespans for men and women, even if females show more ability from infancy.

Objective: The present study aimed to examine the role of age and gender on facial emotion recognition in relation to neuropsychological functions and face exploration strategies. We also aimed to explore the associations between emotion recognition and quality of life.

Methods: Sixty healthy people were consecutively enrolled in the study and divided into two groups: Younger Adults and Older Adults. Participants were assessed for: emotion recognition, attention abilities, frontal functioning, memory functioning and quality of life satisfaction. During the execution of the emotion recognition test using the Pictures of Facial Affects (PoFA) and a modified version of PoFA (M-PoFA), participants’ eye movements were recorded with an Eye Tracker.

Results: Significant differences between younger and older adults were detected for fear recognition when adjusted for cognitive functioning and eye-gaze fixations characteristics. Adjusted means of fear recognition were significantly higher in the younger group than in the older group. With regard to gender’s effects, old females recognized identical pairs of emotions better than old males. Considering the Satisfaction Profile (SAT-P) we detected negative correlations between some dimensions (Physical functioning, Sleep/feeding/free time) and emotion recognition (i.e., sadness, and disgust).

Conclusion: The current study provided novel insights into the specific mechanisms that may explain differences in emotion recognition, examining how age and gender differences can be outlined by cognitive functioning and face exploration strategies.

Introduction

Social perception can be described as the ability to understand and react appropriately to the social signals sent out by other people vocally, facially, or through body posture (Mayer et al., 2016). The value of emotional information can exert significant effects on human performance as it drives behaviors in approaching or avoiding social interactions. As facial expressions convey a wealth of social information, their recognition interacts with various cognitive processes such as attention and memory. Specific emotional information (happy and sad faces) is associated with focused or distributed attention respectively (Srinivasan and Gupta, 2010). Global processing seems to facilitate recognition of happy faces while local processing seems to facilitate recognition of sad faces (Srinivasan and Gupta, 2011). Differences in emotion-attention interaction lead to differential effects on conscious perception of irrelevant emotional stimuli. A better identification of happy faces compared to sad faces and an inhibition of unattended sad distractors faces is detected in the condition of high processing demands placed on the perceptual system. This indicates an influence of inhibitory processes during difficult attentional tasks on perception of some distractor stimuli (Gupta and Srinivasan, 2014). Social affect cues like irrelevant distractor faces with happy or sad expression can influence cognitive control as in the case of error processing. A slowness in response immediately after an error only occurs when distractors are constituted by a happy face, suggesting the need for additional processing when positive performance feedback occurs after an error (Gupta and Deák, 2015).

Emotions interact with memory improving encoding or retrieval of emotional information (Christianson, 1992; Buchanan and Adolphs, 2003). Holistic processing plays a crucial role in face identification and in long-term memory, as it determines better long-term memory for whole faces that contain emotional information (Gupta and Srinivasan, 2009).

In recent years, researchers identified a relatively universal set of emotion expressions summarized in six main categories (happiness, sadness, fear, anger, surprise, and disgust) (Ekman, 2003).

Recognition of facial expressions depends both on accurate visuo-perceptual processes and on the correct categorization of the emotions. The right hemisphere plays a central role in processing emotional facial expressions (Gur et al., 2002). Emotional stimuli specifically activate a dominant right-lateralized process while automatic processing of emotional stimuli captures right hemispheric processing resources, transiently interfering with other right hemispheric functions (Hartikainen et al., 2000). Emotional distractors also produce transient interferences on the speed of visual search tasks in which stimuli are presented in the left visual hemifield (thus processed by the right hemisphere) (Gupta and Raymond, 2012). Furthermore, a significant slowing of letter targets presented in the left visual hemifield is detected when irrelevant distractors have high motivational salience regardless of their valence, an effect not found when targets appear in the right visual hemifield (Gupta et al., 2018). This indicates that the valence of irrelevant emotional distractors and the level of perceptual load in the task play an important role in the attentional capturing of distractors (Gupta et al., 2016).

Even if there is a functional overlap among the brain areas involved in recognition of different facial aspects (Calder and Young, 2005), neurophysiological data highlighted the central role of the amygdala and orbitofrontal circuits (Adolphs, 2002), the insula and striatum (Haxby et al., 2011) and the ventromedial prefrontal cortex (Mather, 2016) in the recognition of emotions.

Functional neurophysiological models suggest different structures involved in face identification and recognition of emotional expressions (Haxby et al., 2000; O’Toole et al., 2002). The fusiform face area (FFA) in the ventral temporal lobes is implicated in the perception of static facial features (Gobbini and Haxby, 2007; Vuilleumier and Pourtois, 2007), whereas the inferior occipital gyrus and the superior temporal sulcus (STS) are involved in processing the dynamic facial cues (Haxby et al., 2000; Adolphs, 2002; Ishai, 2008). The amygdala seems to be related especially to fear recognition. Fearful faces used as irrelevant stimuli can elicit an amygdala response only in the low perceptual load condition, suggesting that emotional faces capture attention despite their irrelevance in the task (Pessoa et al., 2005). The amygdala also shows sensitivity to the intensity of positive visual stimuli, as it is more activated in response to stimuli with higher intensity (Bonnet et al., 2015). The insula is related to disgust recognition, although intracranial electroencephalography (iEEG) highlights that amygdala and insula are more related to emotional relevance or biological importance of facial expressions than to specific emotions (Tippett et al., 2018).

Finally, many studies highlighted that basal ganglia and insula seem to be specifically involved in decoding facial expressions of disgust (Calder et al., 2001; Woolley et al., 2015). Insular activation is selectively reported to be active during processing of angry and, even more, of disgusted faces (Fusar-Poli et al., 2009). Amygdala is especially implicated in decoding facial expressions of fear (Fusar-Poli et al., 2009). A recent study (McFadyen et al., 2019) describes an afferent white matter pathway from the pulvinar to the amygdala that facilitates fear recognition. In this context, and in relation to old age, some authors (Ruffman et al., 2008) relate the relative sparing of some structures into the basal ganglia with increasing age to the lack of impairment of disgust recognition. Instead, the amygdala (related to fear recognition) could have a linear reduction in volume with age, although this is not as rapid as that occurring in some other brain areas such as the frontal lobes.

Brain lesions can affect the abilities in emotion recognition, inducing behavioral impairment (Hogeveen et al., 2016; Yassin et al., 2017), but also aging seems to play a considerable role in affecting recognition of facial expressions and consequently of emotions (Ruffman et al., 2008). Aging-related changes in emotional processing were found in the intra-network functional connectivity of the visual and sensorimotor networks implicated in internally directed cognitive activities (e.g., the Default Mode Network; DMN) and of the ECN (executive control network) involved in externally directed higher-order cognitive functions (Bressler and Menon, 2010; Uddin et al., 2010). A potential compensative role for aging-related decline in brain perceptual performance, as demonstrated by the progressive decline in visual and sensory domains with chronological aging was found for higher cognitive functions represented by ECN (Goh, 2011; Lyoo and Yoon, 2017).

Recent data (Olderbak et al., 2018) highlighted a peak of performance in emotion recognition in young people aged 15 to 30, with progressive decline after the age of 30. When compared to younger adults (18–30 years), older people (over 65 years) have more difficulties in correctly decoding negative emotions such as anger, sadness, and fear (Ruffman et al., 2008; Grainger et al., 2015), while recognition of positive emotions such as happiness is preserved (Isaacowitz et al., 2007; Ruffman et al., 2008; Horning et al., 2012; West et al., 2012; Kessels et al., 2014). Furthermore, older people showed increased motivation to engage in prosocial behaviors in the context of relevant socio-emotional cues (Sze et al., 2012; Beadle et al., 2013).

There is also evidence that older people have difficulties in identifying emotions from dynamic bodily expressions (Ruffman et al., 2009), highlighting that dynamic cues may not be particularly beneficial in late adulthood (Grainger et al., 2015). Moreover, they are unable to detect when one emotion switches to another (Sullivan and Ruffman, 2004).

Eye-tracking studies highlighted that age-related differences in recognition of emotions could be explained by different face exploration patterns due to attentional impairment. Indeed, some studies observed that older people tend to fixate on the mouth region, whereas young people direct attention to the eye areas (Wong et al., 2005; Sullivan et al., 2007; Murphy and Isaacowitz, 2010), which play a central role in decoding emotions such as anger, sadness and fear. Moreover, young people gaze more at the eye regions when stimuli are presented in a dynamic rather than a static way. By contrast, older people do not show any difference between dynamic and static modalities (Grainger et al., 2015).

Gender also seems to play a role in emotional regulation and in recognition of emotions, but empirical studies of gender differences in emotion have produced far less consistent results. Neuroimaging data report gender differences in the laterality of amygdala response, as it relates to subsequent memory (Cahill et al., 2001, 2004) and greater amygdala activity in men compared to women (Hamann et al., 2004; Schienle et al., 2005). As regards emotional regulation, men and women show comparable amygdala response to negative images, but men display a greater decrease in amygdala activity during the use of a cognitive strategy for emotion regulation (reappraisal) and less activity in pre-frontal regions. In addition, women show greater ventral striatal activity during the down-regulation of negative emotion compared to men (McRae et al., 2008). Gender differences have also been investigated to understand the neural basis of complex social emotions. In this vein, distinct brain activations in response to sexual and emotional infidelity are detected in men and women. The former show greater activation in the amygdala and hypothalamus whereas the latter demonstrate greater activation in the posterior STS (Takahashi et al., 2006). Females seem to be more susceptible to attention and evaluative bias when processing negative emotional information (Gupta, 2012). Females also show enhanced activity during the processing of negative information in event-related potential components (N2 and P3b) (Li et al., 2008).

Unfortunately, little is known about differences in emotion perception abilities across lifespan separately for men and women, even if females show more ability from infancy (McClure, 2000). Recent research (Olderbak et al., 2018) conducted on a large community sample of persons ranging from younger than 15 to older than 60 years of age, highlighted less ability in males at all ages to read emotional faces. However, the gender differences in emotion recognition seem to decrease in magnitude with increasing age.

The aim of this study was to examine the role of age and gender effects on facial emotion recognition in relation to neuropsychological functions and face exploration strategies in a sample of young and old healthy people. We also aimed to explore the associations between emotion recognition and quality of life.

We expected emotion recognition ability to decrease with increasing age, and we expected differences between males and females. We also expected a negative correlation between cognitive functioning and face exploration patterns. Moreover, we anticipated that subjective satisfaction of life might interfere with emotion recognition.

Materials and Methods

Participants

We enrolled a sample of 60 healthy people. They were healthy relatives of patients admitted to the neurological rehabilitation ward (where the study was conducted), and healthy controls from another sample (see Mancuso et al., 2015). Participants were divided into two groups: Younger Adults Group (YAG) aged from 28 to 54 and Older Adults Group (OAG) aged from 66 to 77.

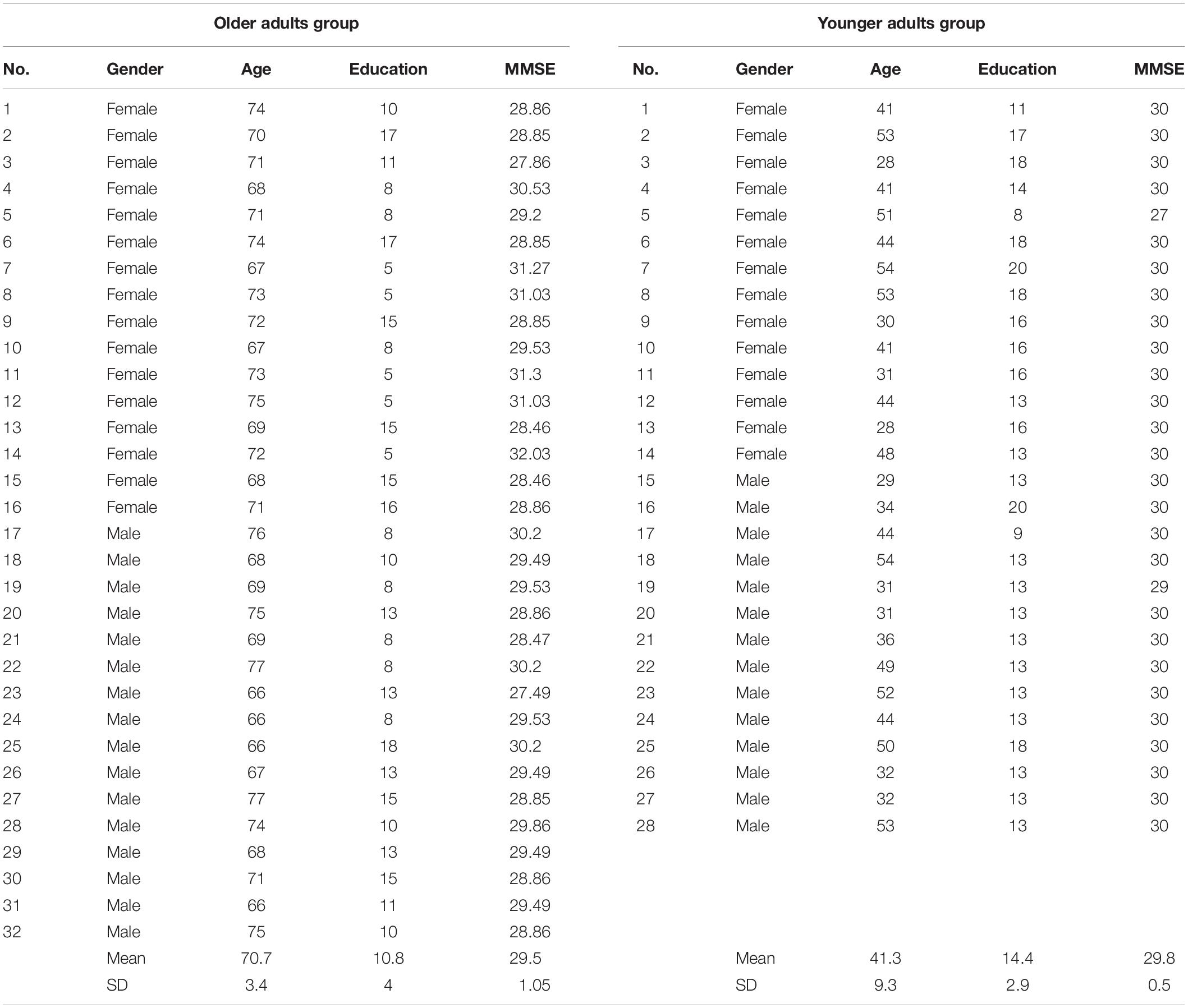

The YAG was composed of 14 males, 14 females (Mean ± SD age 41.3 ± 9.34; range = 28–54). The OAG was composed of 16 males and 16 females (Mean ± SD age 70.7 ± 3.4; range = 66–77) (Table 1). There were no statistically significant differences between groups in education.

All participants were screened for cognitive decline using the Mini-Mental State Examination (MMSE) (cut-off score of 24). Visual acuity was assessed functionally by asking participants to read a newspaper correctly. The screen was approximately 35 cm from the eyes. The use of glasses or contact lenses was allowed. Exclusion criteria were an MMSE score lower than 24 (Folstein et al., 1975) and the presence of a visual impairment not corrected by glasses or contact lenses. All people enrolled in the study spoke Italian as their first language and none had a history of neurological or psychiatric illness. Participants did not receive any remuneration for their participation. All subjects gave informed consent to the experimental procedure. The protocol was previously approved by the local Ethical Committee.

Procedure

In order to detect differences in emotion recognition abilities, all participants underwent one session of about 2 h during which they were assessed for: emotion recognition, attention abilities, frontal functioning, memory functioning, and quality of life satisfaction.

During the execution of the emotion recognition test using the Pictures of Facial Affects (PoFA, Ekman and Friesen, 1976) and a modified version of PoFA (M-PoFA), all the eye movements of the subjects were recorded with the Tobii Eye Tracker in order to detect the exploration modality adopted by each participant.

Test for Emotion Recognition

Pictures of Facial Affects (Ekman and Friesen, 1976) consists of 110 photographs of human faces representing the six basic emotions (happiness, sadness, fear, anger, surprise, and disgust), plus one neutral expression.

We tested the recognition abilities in two different ways. In the first (Single PoFA Recognition) we displayed the 110 single photos for 5 s each, in a random sequence, on the screen connected to an eye tracker in order to record all the eye movements made by subjects during image exploration. After each photo a list with the names of the seven emotions (the six from Ekman and the neutral one) appeared on the screen. The participants were asked to match the just-observed emotion with one of the seven choices: happiness, sadness, fear, anger, surprise, disgust and neutral expression. The aim of this test was to evaluate the ability of people to correctly recognize the emotions. The number of correct matches between the emotion shown in the photo and the name of the emotion was recorded, yielding seven scores (one for each emotion) and a total score (number of emotions correctly identified).
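The scoring rule described above can be illustrated with a minimal Python sketch. The function name `score_pofa`, the label strings and the trial format (a pair of shown emotion and chosen label) are assumptions made for illustration; they are not taken from the study software, and the example responses are not study data.

```python
from collections import Counter

# The seven response categories named in the text.
EMOTIONS = ("happiness", "sadness", "fear", "anger", "surprise", "disgust", "neutral")


def score_pofa(trials):
    """Return per-emotion correct counts plus a total.

    `trials` is an iterable of (shown_emotion, chosen_label) pairs
    (a hypothetical format chosen for this sketch).
    """
    correct = Counter({emotion: 0 for emotion in EMOTIONS})
    for shown, chosen in trials:
        if shown not in EMOTIONS or chosen not in EMOTIONS:
            raise ValueError(f"unknown label: {shown!r} / {chosen!r}")
        if shown == chosen:
            correct[shown] += 1
    scores = dict(correct)
    scores["total"] = sum(correct.values())
    return scores


# Hypothetical responses for four trials (not study data).
example_trials = [
    ("fear", "fear"),
    ("fear", "surprise"),
    ("happiness", "happiness"),
    ("neutral", "sadness"),
]
example_scores = score_pofa(example_trials)
```

A response counts toward the emotion that was shown, so a fear face labelled as surprise lowers the fear score rather than affecting the surprise score.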

In the second (Matching of Pictures of Facial Affects: M-PoFA), we displayed the same PoFA photographs on the screen in pairs for 5 s, in a randomized order. The subjects had to decide whether the emotions represented in the two faces were the same or different. The aim of this test was to evaluate the possible loss of the pattern which mediates the recognition of emotions, as opposed to the ability to correctly recognize and name the emotion. Three scores were obtained: M-PoFA total correct score (number of pairs correctly judged as same or different), M-PoFA total score for identical emotion pairs (number of identical emotion pairs correctly identified), and M-PoFA total score for not identical emotion pairs (number of not identical emotion pairs correctly identified).
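As a sketch of how the three M-PoFA scores relate to one another, the following Python function counts correct same/different judgments. The trial format (two emotion labels and a boolean response) and the dictionary keys are hypothetical, and the example responses are not study data.

```python
def score_m_pofa(trials):
    """Score same/different judgments on pairs of faces.

    `trials` is an iterable of (emotion_a, emotion_b, answered_same),
    where `answered_same` is True if the participant judged the two
    emotions to be the same (hypothetical format for this sketch).
    """
    identical_correct = 0
    non_identical_correct = 0
    for emotion_a, emotion_b, answered_same in trials:
        truly_same = emotion_a == emotion_b
        if answered_same == truly_same:
            if truly_same:
                identical_correct += 1
            else:
                non_identical_correct += 1
    return {
        "identical_pairs_correct": identical_correct,
        "non_identical_pairs_correct": non_identical_correct,
        "total_correct": identical_correct + non_identical_correct,
    }


# Hypothetical responses for four pairs (not study data).
example_pairs = [
    ("fear", "fear", True),            # identical pair, correct
    ("fear", "anger", True),           # different pair, wrong
    ("sadness", "disgust", False),     # different pair, correct
    ("happiness", "happiness", False), # identical pair, wrong
]
example_m_scores = score_m_pofa(example_pairs)
```

By construction the total correct score is the sum of the two pair-type scores, so a group difference in the total can be driven by either component.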

Eye movements recording: Eye movements were recorded with the Tobii Eye Tracker (My Tobii 1750; Technology AB, Danderyd, Sweden) using infrared diodes to generate reflection patterns on the corneas of the user’s eyes, collected by image sensors. The eyes and the corneal reflection patterns were processed by image algorithms to calculate the three-dimensional position of eyeball and gaze point of viewing on the screen. Before each task, a calibration procedure was carried out.

Eye movements were recorded for the whole time participants spent executing the PoFA test in both modalities. Recording was carried out only during photo presentation in order to detect eye movements during scanning of the face stimuli. Each picture displayed was divided into different areas of interest (AOI), created by means of an AOI tool that allows an AOI to be defined on a static image by choosing a desired shape. The AOIs created on each picture were: right eye AOI (od-AOI), left eye AOI (os-AOI), frontal AOI, mouth AOI, distractor-fixation AOI (the distractor was an irrelevant small number in the upper left of each photo), global AOI (the whole photo area) and area of no interest (Not on AOI - the area of the computer screen outside the photo) with a clear net.

For each of these AOI, we measured the following parameters: time to first fixation (the time from the start of the stimulus display until the subject fixates an AOI for the first time, measured in seconds); fixation length (duration of each individual fixation within an AOI, in seconds); first fixation duration (duration of the first fixation on an AOI, in seconds); fixation count (number of times the participant fixates on an AOI); observation length (duration of all visits within each AOI, in seconds); observation count (number of visits within each AOI); fixation before (number of times the participant fixates on the stimulus before fixating on an AOI for the first time) and participants% (percentage of recordings in which participants fixated at least once within an AOI).
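To make these definitions concrete, the sketch below derives several of the parameters from an ordered list of fixations for one stimulus. It is an illustration only: the input format, the AOI labels and the choice to measure a visit from the onset of its first fixation to the end of its last fixation are assumptions, not the eye tracker software's documented behaviour.

```python
def aoi_metrics(fixations, aoi):
    """Compute AOI parameters for one stimulus presentation.

    `fixations` is a list of (onset_s, duration_s, aoi_label) tuples,
    sorted by onset, with onsets measured from stimulus onset
    (hypothetical format for this sketch).
    """
    inside = [(onset, dur) for onset, dur, label in fixations if label == aoi]
    if not inside:
        return None  # the AOI was never fixated in this recording

    # Index of the first fixation on the AOI = number of fixations before it.
    first_index = next(i for i, fix in enumerate(fixations) if fix[2] == aoi)

    # Group consecutive fixations on the AOI into visits.
    visits = []
    current = None
    for onset, dur, label in fixations:
        if label == aoi:
            if current is None:
                current = [onset, onset + dur]
            else:
                current[1] = onset + dur
        elif current is not None:
            visits.append(current)
            current = None
    if current is not None:
        visits.append(current)

    return {
        "time_to_first_fixation": inside[0][0],
        "first_fixation_duration": inside[0][1],
        "fixation_count": len(inside),
        "mean_fixation_length": sum(dur for _, dur in inside) / len(inside),
        "observation_count": len(visits),
        "observation_length": sum(end - start for start, end in visits),
        "fixations_before": first_index,
    }


# Hypothetical fixation sequence (not study data).
example_fixations = [
    (0.00, 0.20, "mouth"),
    (0.25, 0.30, "left_eye"),
    (0.60, 0.25, "left_eye"),
    (0.90, 0.20, "mouth"),
    (1.15, 0.35, "left_eye"),
]
left_eye = aoi_metrics(example_fixations, "left_eye")
```

In this example the left eye receives three fixations grouped into two visits, which shows why fixation count and observation count can differ for the same AOI.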

Test for Attentional Abilities

Sustained Attention to Response Task (SART, Robertson et al., 1997): this test was devised to evaluate sustained attention and comprises the numbers 1–9 appearing 225 times in random order and in different sizes in a white font on a black computer screen. Subjects had to respond to the appearance of each number by pressing a button, except when the number was a 3, which occurred 25 times in total. The following measures of response accuracy were assessed: mean reaction time in milliseconds, calculated over correct response trials, number of commission errors (key presses when no key should have been pressed) and number of omission errors (absent presses when a key should have been pressed).
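The three SART measures can be sketched as follows. The trial format (digit, whether a key was pressed, reaction time in milliseconds) and the function name are hypothetical, and the example trials are not study data.

```python
def score_sart(trials, no_go_digit=3):
    """Score a SART run.

    `trials` is an iterable of (digit, pressed, reaction_time_ms);
    `reaction_time_ms` is None when no key was pressed
    (hypothetical format for this sketch).
    """
    correct_rts = []
    commission_errors = 0  # key pressed on the no-go digit
    omission_errors = 0    # no key pressed on a go digit
    for digit, pressed, reaction_time_ms in trials:
        if digit == no_go_digit:
            if pressed:
                commission_errors += 1
        elif pressed:
            correct_rts.append(reaction_time_ms)
        else:
            omission_errors += 1
    mean_rt = sum(correct_rts) / len(correct_rts) if correct_rts else None
    return {
        "mean_rt_ms": mean_rt,
        "commission_errors": commission_errors,
        "omission_errors": omission_errors,
    }


# Hypothetical trials (not study data).
example_sart = score_sart([
    (5, True, 350.0),   # correct go response
    (3, True, 280.0),   # commission error
    (7, False, None),   # omission error
    (3, False, None),   # correct withhold
    (1, True, 410.0),   # correct go response
])
```

Reaction times from commission errors are excluded from the mean, consistent with the mean being calculated over correct response trials.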

Trail Making Test (TMT, Giovagnoli et al., 1996): composed of two subtests: part A measures the speed of visual search and psychomotor ability by connecting numbers from 1 to 25 printed on a sheet; part B measures the ability to alternate between two sequences of numbers and letters. Each subtest is preceded by a practice run, whose correct execution is the condition for proceeding with the administration of the test. Scores are given according to the time needed to complete subtests A and B. Subtracting the part A time from the part B time (B-A) gives an estimate of attentional switching capacity.

Test for Frontal Functioning

Frontal Assessment Battery (FAB, Apollonio et al., 2005): a battery for general screening of executive functions divided into six subtests. Each of them assesses an aspect of executive functions: conceptualization of similarities, lexical fluency, motor programming, response to conflicting instructions, go-no go task, prehension behavior. The score for each subtest ranges from 0 to 3. The maximum score is 18, which is corrected for age and schooling according to standardization norms.

Test for Memory

Corsi Supra-Span Learning (CSSL, Capitani et al., 1991): a supra-span sequence of 8 blocks is shown to the participant, who is asked to reproduce it immediately after its presentation. The examiner presents the same sequence until the participant reproduces it correctly three times consecutively, up to a maximum of 18 trials. If the participant does not succeed within 18 trials, the task is interrupted. Each sequence given by the participant is scored on the basis of the probability of giving the correct response (or fragments of it) by chance. Participants who reached the criterion before the 18th trial received a score corresponding to the correct performance for the remaining trials up to the 18th. The maximum score of the CSSL is 29.16, which is corrected for age and schooling according to standardization norms.

Test Assessing Satisfaction of Life

Satisfaction Profile (SAT-P, Majani and Callegari, 1998): a self-administered questionnaire of 32 items assessing areas that reflect subjective satisfaction of life: sleep, eating, physical activity, sexual life, emotional status, self-efficacy, cognitive functioning, work, leisure, social and family relationships, and financial situation. Participants were requested to evaluate their personal satisfaction in the last month for each of the 32 items on a 10 cm horizontal scale ranging from “extremely dissatisfied” to “extremely satisfied.” It provides two kinds of scores: the analytic scoring, which consists of 32 scores (one for each item), and the factorial scoring, which consists of 5 scores, one for each of the factors extracted by factor analysis: psychological functioning, physical functioning, work, sleep/eating/leisure, and social functioning. In SAT-P higher scores correspond to better satisfaction of life (range 0–100 for both analytic and factorial scoring).

Statistical Analysis

In order to test the influence of age and gender on PoFA and M-PoFA scores, we used Multivariate Analysis of Variance (MANOVA) where assumptions for collinearity were met; otherwise univariate tests were performed. Post hoc comparisons were carried out by means of the Bonferroni test (p < 0.05).

Analysis of covariance (ANCOVA) was used to compare groups on the emotion recognition measures while controlling for possible confounding variables. Variables that emerged as significant in the MANOVAs, and that could therefore confound emotion recognition, were entered into the analysis as covariates.
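The idea of a covariate-adjusted group mean can be shown with a simplified single-covariate sketch in Python. The study entered several covariates and used SPSS; the code below is only an illustration of the classical adjustment, in which each group mean is shifted along the pooled within-group regression slope to the grand mean of the covariate. All names and data are hypothetical.

```python
def adjusted_means(groups):
    """Covariate-adjusted means for a one-covariate ANCOVA.

    `groups` maps a group name to a list of (covariate, outcome) pairs.
    adjusted_mean_j = mean_y_j - b_w * (mean_x_j - grand_mean_x),
    where b_w is the pooled within-group slope of outcome on covariate.
    """
    all_x = [x for pairs in groups.values() for x, _ in pairs]
    grand_mean_x = sum(all_x) / len(all_x)

    sxy_within = 0.0
    sxx_within = 0.0
    group_means = {}
    for name, pairs in groups.items():
        mean_x = sum(x for x, _ in pairs) / len(pairs)
        mean_y = sum(y for _, y in pairs) / len(pairs)
        group_means[name] = (mean_x, mean_y)
        sxy_within += sum((x - mean_x) * (y - mean_y) for x, y in pairs)
        sxx_within += sum((x - mean_x) ** 2 for x, _ in pairs)

    slope = sxy_within / sxx_within
    return {
        name: mean_y - slope * (mean_x - grand_mean_x)
        for name, (mean_x, mean_y) in group_means.items()
    }


# Toy data (not study data): group B has higher raw outcome scores,
# but also higher covariate values.
toy = {
    "A": [(1, 2), (2, 4), (3, 6)],
    "B": [(3, 5), (4, 7), (5, 9)],
}
toy_adjusted = adjusted_means(toy)
```

In the toy data the raw outcome means are 4 for group A and 7 for group B, while the adjusted means are 6 and 5, which illustrates how a group difference can shrink, vanish or reverse once a covariate is taken into account.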

Age effects on SAT-P were detected comparing younger and older adults by means of non-parametric Mann-Whitney test for independent samples. Spearman ρ correlation coefficient for categorical variables was used to correlate satisfaction of life with emotion recognition.
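Both rank-based statistics named above can be computed with a few lines of standard-library Python. This sketch returns only the test statistics (the Mann-Whitney U values and Spearman's ρ), not p-values, and it is not the SPSS procedure used in the study; function names and data are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1  # positions i..j share one 1-based rank
        for k in range(i, j + 1):
            ranks[order[k]] = average
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sxx = sum((a - mean_x) ** 2 for a in rx)
    syy = sum((b - mean_y) ** 2 for b in ry)
    return sxy / math.sqrt(sxx * syy)


def mann_whitney_u(sample_1, sample_2):
    """Return (U1, U2) computed from the rank sum of the first sample."""
    n1, n2 = len(sample_1), len(sample_2)
    ranks = average_ranks(list(sample_1) + list(sample_2))
    rank_sum_1 = sum(ranks[:n1])
    u1 = rank_sum_1 - n1 * (n1 + 1) / 2
    return u1, n1 * n2 - u1


# Hypothetical values (not study data).
rho = spearman_rho([80, 65, 70, 40, 55], [10, 12, 11, 14, 15])
u_younger, u_older = mann_whitney_u([70, 80, 90], [60, 65, 85])
```

A negative ρ, as in this toy example, means that higher values on one measure tend to go with lower values on the other.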

All tests were two tailed and considered significant if p < 0.05.

The Statistical Package for Social Science, version 20.0 (SPSS Inc., Chicago, IL, United States), was used for statistical analyses.

Results

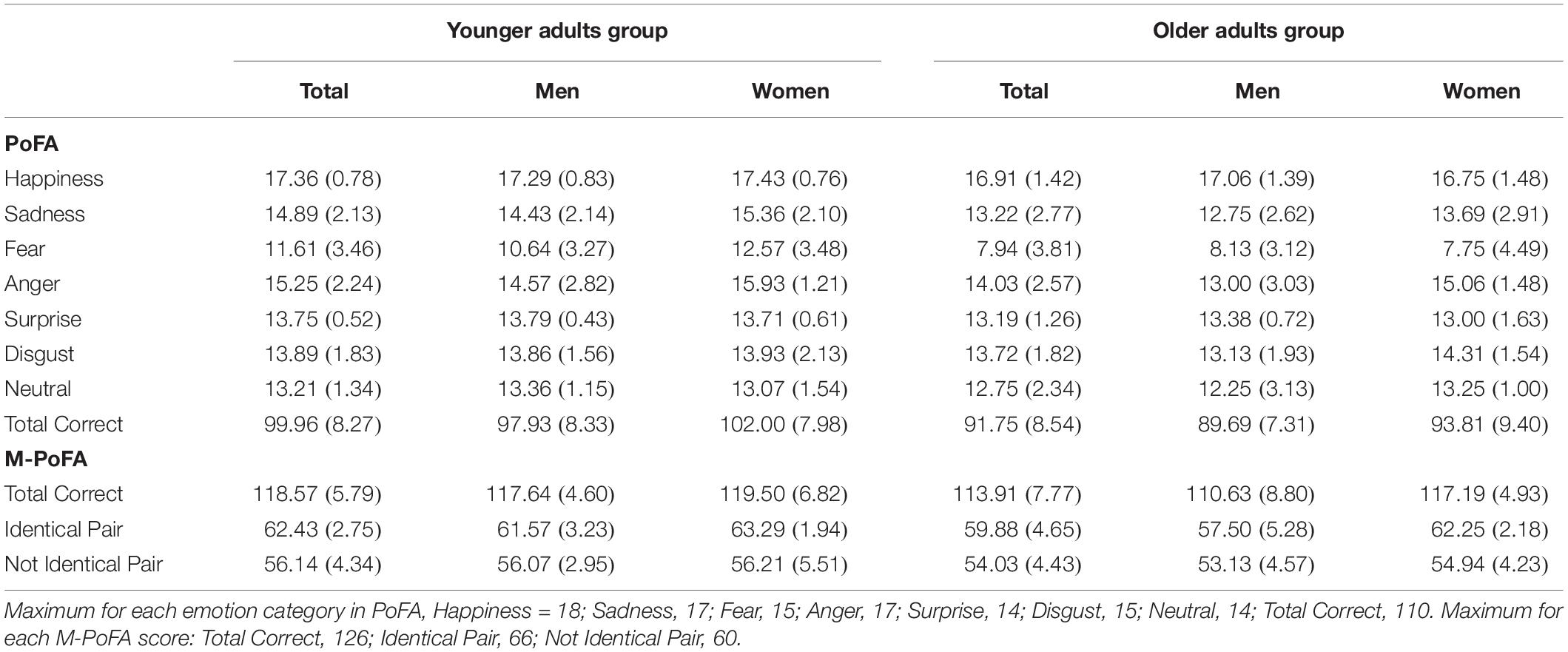

Table 2 shows Mean and Standard Deviation (SD) for PoFA single emotion recognition scores, PoFA total correct emotion recognition scores, summed over the seven emotions (total = 110) and for M-PoFA total correct score, M-PoFA total score for identical emotion pair, M-PoFA total score for not identical emotion pair.

Effects of Age on Emotion Recognition in the Sample Divided in Younger and Older Adults Group

Comparisons between YAG and OAG on PoFA and on M-PoFA were carried out by means of a multivariate ANOVA. We used the “group” variable as the between-group factor and PoFA scores (happiness, sadness, fear, anger, surprise, disgust, neutral) and M-PoFA scores (total score, total score for identical emotion pair, total score for not identical emotion pairs) as the dependent variables. There was a significant effect of the group factor on emotion recognition variables (Wilks’ Lambda = 0.65, F[9,50] = 2.98; p = 0.006; η2 = 0.35). Compared to the OAG, the YAG had significantly higher scores in sadness (F[1,58] = 6.7; p = 0.012; η2 = 0.10), fear (F[1,58] = 15.09; p < 0.001; η2 = 0.20) and surprise recognition (F[1,58] = 4.88; p = 0.031; η2 = 0.07), as well as in PoFA total score (F[1,58] = 14.2; p < 0.001; η2 = 0.19), M-PoFA total correct score (F[1,58] = 14.2; p < 0.001; η2 = 0.19) and M-PoFA total score for identical emotion pair (F[1,58] = 6.45; p = 0.014; η2 = 0.10).
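For readers who wish to relate the reported F values and effect sizes, the following sketch uses two standard identities: partial η2 = (F × df_effect) / (F × df_effect + df_error), and, for a two-group comparison, the exact conversion of Wilks' Lambda to F. The function names are hypothetical, and the code is a consistency check rather than a reproduction of the SPSS output.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its df."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def wilks_to_f(wilks_lambda, df_hypothesis, df_error):
    """Exact F for Wilks' Lambda when two groups are compared."""
    return ((1 - wilks_lambda) / wilks_lambda) * (df_error / df_hypothesis)


# Sadness recognition: F[1,58] = 6.7
eta_sadness = partial_eta_squared(6.7, 1, 58)

# Multivariate group effect: Wilks' Lambda = 0.65 with F[9,50]
f_multivariate = wilks_to_f(0.65, 9, 50)
eta_multivariate = 1 - 0.65  # for two groups, multivariate eta squared = 1 - Lambda
```

With these inputs the sketch gives approximately 0.10 for sadness and approximately 2.99 for the multivariate F, in line with the reported values once rounding of the published Lambda is taken into account.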

Supplementary Table S1 shows the comparison between younger and older adults on neuropsychological variables (SART, TMT, FAB, CSSL) and on eye movement variables. A MANOVA was conducted using the between-group factor “group” as the independent variable and neuropsychological variables as dependent measures (SART, TMT, FAB, CSSL). A significant effect of the group factor on cognitive functioning variables was detected (Wilks’ Lambda = 0.58, F[7,52] = 5.3; p < 0.001; η2 = 0.41). Compared to the OAG, the younger adults showed significantly faster reaction times (F[1,58] = 24.1; p < 0.001; η2 = 0.29), fewer omission errors (F[1,58] = 5.78; p = 0.019; η2 = 0.09) and commission errors (F[1,58] = 7.92; p = 0.007; η2 = 0.12) in the SART test, better attentional shifting abilities in TMT B-A (F[1,58] = 11.4; p = 0.001; η2 = 0.16) and higher long-term visuospatial memory scores on CSSL (F[1,58] = 13.01; p = 0.001; η2 = 0.18).

As regards comparisons between YAG and OAG on eye movement patterns, univariate ANOVAs showed that, compared to the younger adults, older adults exhibited a shorter duration of each individual fixation not on AOI (Not on AOI Fixation Length; F[1,58] = 11.3; p = 0.001; η2 = 0.16) and a shorter duration of the first fixation not on an AOI (Not on AOI first fixation duration; F[1,58] = 3.99; p = 0.05; η2 = 0.06). Compared to the older adults, the younger adults fixated the distractor fewer times (AOI distractor Fixation Count; F[1,58] = 4.24; p = 0.044; η2 = 0.07) and showed a shorter duration of all visits within the frontal AOI (AOI frontal Observation Length; F[1,58] = 3.9; p = 0.05; η2 = 0.06) and within the distractor AOI (AOI distractor Observation Length; F[1,58] = 6.14; p = 0.016; η2 = 0.09).

Regarding single emotions domains, F did not reach significance after including SART, TMT B-A, CSSL, and eye-gaze fixations characteristics (Not on AOI Fixation Length, Not on AOI first fixation duration, AOI distractor Fixation Count, AOI frontal Observation Length and AOI distractor Observation Length) as covariates in happiness, sadness, anger, surprise, disgust, neutral emotion recognition and PoFA total score. Also for M-PoFA, F did not reach significance after inclusion of covariates SART, TMT B-A, CSSL, and eye-gaze fixations characteristics (Not on AOI Fixation Length, Not on AOI first fixation duration, AOI distractor Fixation Count, AOI frontal Observation Length and AOI distractor Observation Length).

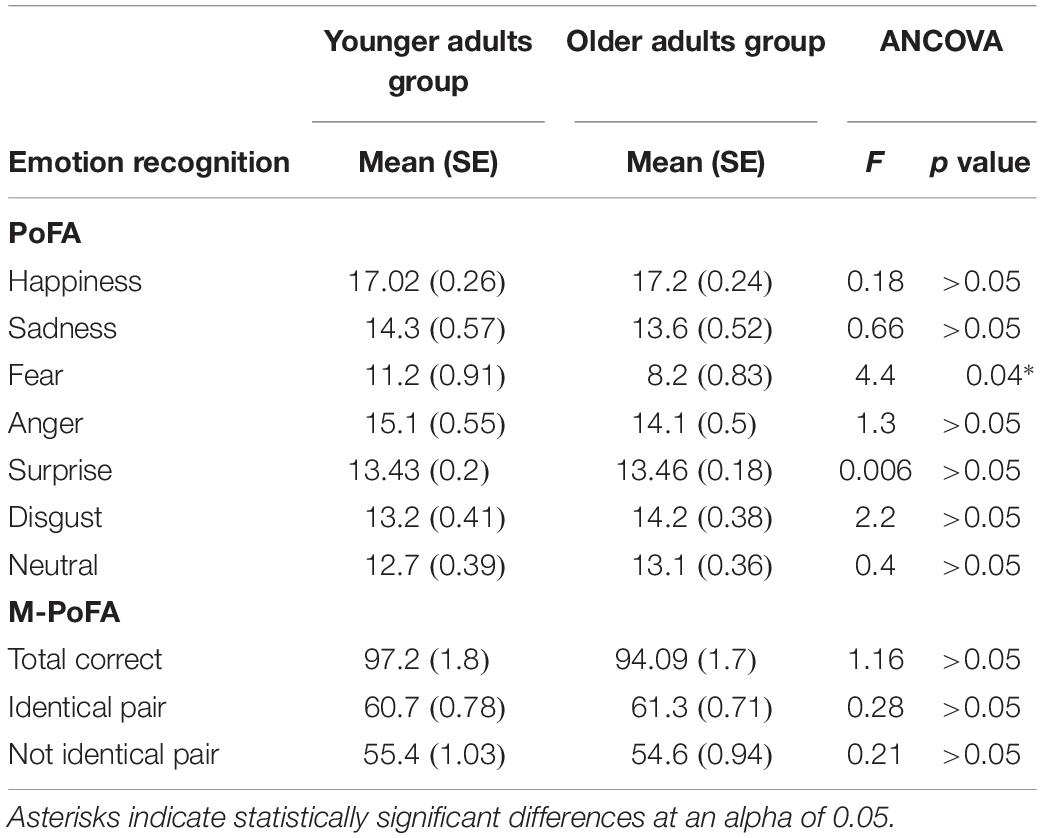

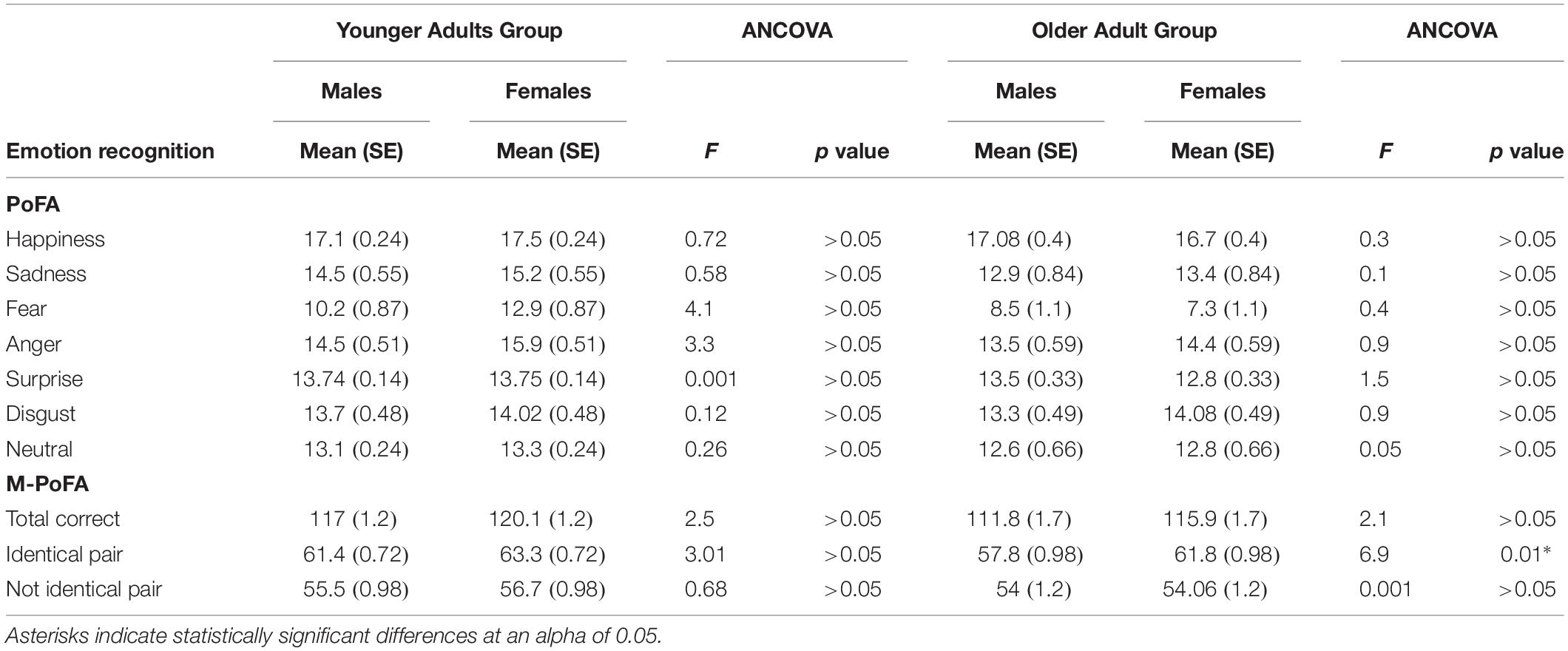

When SART, TMT B-A, CSSL, and eye-gaze fixations characteristics (Not on AOI Fixation Length, Not on AOI first fixation duration, AOI distractor Fixation Count, AOI frontal Observation Length and AOI distractor Observation Length) were included as covariates, the only significant difference between the younger and the older adults was found in fear recognition (F[1,49] = 4.37; p = 0.042; η2 = 0.08). ANCOVA analysis revealed statistically significant differences in fear recognition between young and old adults when adjusted for cognitive functioning and eye-gaze fixations characteristics: adjusted means of fear recognition were significantly higher in the younger group than in the older group (corrected) (p = 0.04) (Table 3).

Table 3. Comparison between Younger and Older Adult Groups in emotion recognition variables using neuropsychological variables and eye movement variables as confounding covariates.

Effects of Gender on Emotion Recognition in Younger and Older Adults

Multivariate ANOVAs were conducted in the older and, separately, in the younger adults. In each group we conducted two separate MANOVAs in order to compare males and females on emotion recognition and cognitive functioning. To test the influence of gender on cognitive functioning we used gender as independent variable and neuropsychological variables (SART, TMT, FAB, CSSL) as dependent measures. To test exploration strategies, males and females were compared by means of univariate ANOVAs entering as dependent variable each Eye tracker parameter. As regards emotion recognition, in the MANOVA we used gender as the independent variable and PoFA scores (happiness, sadness, fear, anger, surprise, disgust, neutral) and M-PoFA scores (total score, total score for identical emotion pair, total score for not identical emotion pairs) as dependent variables.

In the YAG, no significant differences between males and females were detected in emotion recognition (Wilks’ Lambda = 0.7, F[9,18] = 0.83; p = 0.59; η2 = 0.29), in cognitive functioning (Wilks’ Lambda = 0.78, F[6,21] = 0.9; p = 0.46; η2 = 0.21) and in eye-gaze fixations characteristics (all p > 0.05).

In the OAG, although the MANOVA did not show a significant effect of gender on emotion recognition variables (Wilks’ Lambda = 0.65, F[9,22] = 1.3; p = 0.28; η2 = 0.34), between-subjects effects showed a significant effect of gender on anger recognition (F[1,30] = 5.9; p = 0.02; η2 = 0.16), on M-PoFA total score for identical emotion pair (F[1,30] = 11.07; p = 0.002; η2 = 0.27) and on M-PoFA total correct score (F[1,30] = 6.7; p = 0.014; η2 = 0.18). Old males showed significantly lower scores than old females in anger recognition (Mean = 13; SE = 0.59 vs. Mean = 15.06; SE = 0.59), in M-PoFA total correct score (Mean = 110.6; SE = 1.7 vs. Mean = 117.1; SE = 1.7) and in M-PoFA total score for identical emotion pair (Mean = 57.5; SE = 1 vs. Mean = 62.2; SE = 1).

No significant effect of gender was found on cognitive functioning in the older adults (Wilks’ Lambda = 0.64, F[9,22] = 1.3; p = 0.27; η2 = 0.35).

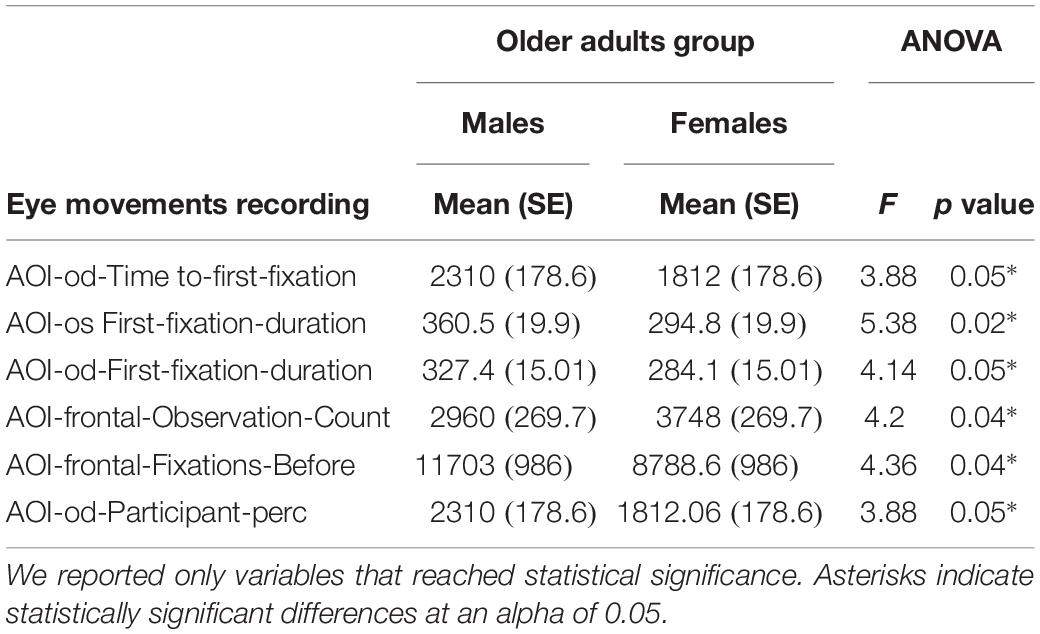

In the older adults, eye-gaze fixations characteristics on which the males and females differed were: AOI-od-Time-to-first-fixation (F[1,30] = 3.88; p = 0.058; η2 = 0.11) (Mean females = 1812; SE = 178.6 vs. Mean males = 2310; SE = 178.6), AOI-os-First-fixation-duration (F[1,30] = 5.38; p = 0.02; η2 = 0.15) (Mean females = 294.8; SE = 19.9 vs. Mean males = 360.5; SE = 19.9), AOI-od-First-fixation-duration (F[1,30] = 4.14; p = 0.05; η2 = 0.12) (Mean females = 284.1; SE = 15.01 vs. Mean males = 327.4; SE = 15.01), AOI-frontal-Observation-Count (F[1,30] = 4.2; p = 0.04; η2 = 0.12) (Mean females = 3748; SE = 269.7 vs. Mean males = 2960; SE = 269.7), AOI-frontal-Fixations-Before (F[1,30] = 4.36; p = 0.04; η2 = 0.12) (Mean females = 8788.6; SE = 986 vs. Mean males = 11703; SE = 986) and AOI-od-Participant-perc (F[1,30] = 3.88; p = 0.05; η2 = 0.11) (Mean females = 1812.06; SE = 178.6 vs. Mean males = 2310; SE = 178.6).

Compared with old females, old males took longer to reach their first fixation on the right eye AOI (AOI-od-Time-to-first-fixation), had longer first fixations on both the left eye and the right eye AOIs (AOI-os-First-fixation-duration and AOI-od-First-fixation-duration), made more fixations on the stimulus before fixating on the frontal AOI for the first time (AOI-frontal-Fixations-Before), and had more recordings in which they fixated at least once within the right eye AOI, expressed as a fraction of the total number of recordings (AOI-od-Participant-perc).

Old females made a significantly higher number of visits (i.e., the interval of time between the first fixation on the AOI and the next fixation outside the AOI) into frontal AOI (AOI-frontal-Observation-Count) with respect to males (Table 4).

Table 4. Comparison between males and females in the Older Adults Group on neuropsychological variables and on eye movement variables.

With regard to emotion recognition, in the older adults the effect of gender (F) did not reach significance for happiness, sadness, anger, surprise, disgust, neutral expression recognition, and PoFA total score after including eye-gaze fixation characteristics (AOI-od-Time-to-first-fixation, AOI-os-First-fixation-duration, AOI-od-First-fixation-duration, AOI-frontal-Observation-Count, AOI-frontal-Fixations-Before, AOI-od-Participant-perc) as covariates.

Differences between males and females in the older adults in anger recognition can be attributed to face exploration modality: AOI-od-First-fixation-duration exerts a significant effect on anger recognition (F[1,25] = 9.17; p = 0.006; η2 = 0.26).

After inclusion of covariates there was a significant difference in the older adults between males and females only in M-PoFA total score for identical emotion pair (F[1,25] = 6.9; p = 0.014; η2 = 0.21). ANCOVA analysis revealed that recognition of identical pairs was significantly higher in females than in males (adjusted Mean = 61.8; SE = 0.98 vs. adjusted Mean = 57.8; SE = 0.98) (Table 5).
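To illustrate what an adjusted mean is, the sketch below computes covariate-adjusted group means for a one-way ANCOVA with a single covariate. It is a simplified illustration under stated assumptions: the study entered several covariates at once, whereas this example uses only one, and all numbers in `example` are invented for demonstration and are not study data. The function name `adjusted_means` is hypothetical.

```python
from statistics import mean


def adjusted_means(groups):
    """Covariate-adjusted group means for a single-covariate ANCOVA.

    groups maps a group name to a list of (covariate, outcome) pairs.
    Returns (adjusted means per group, pooled within-group slope).
    """
    grand_x = mean(x for pairs in groups.values() for x, _ in pairs)
    sxy = 0.0
    sxx = 0.0
    raw = {}
    for name, pairs in groups.items():
        mx = mean(x for x, _ in pairs)
        my = mean(y for _, y in pairs)
        raw[name] = (mx, my)
        # Accumulate within-group cross-products and sums of squares.
        sxy += sum((x - mx) * (y - my) for x, y in pairs)
        sxx += sum((x - mx) ** 2 for x, _ in pairs)
    slope = sxy / sxx
    # Shift each group mean to the value expected at the grand covariate mean.
    adjusted = {name: my - slope * (mx - grand_x) for name, (mx, my) in raw.items()}
    return adjusted, slope


# Invented data: (first-fixation duration in ms, matching score).
example = {
    "females": [(250, 62.5), (270, 61.9), (290, 61.3), (310, 60.7)],
    "males": [(300, 59.0), (320, 58.4), (340, 57.8), (360, 57.2)],
}
adjusted, slope = adjusted_means(example)
print({name: round(value, 2) for name, value in adjusted.items()}, round(slope, 3))
```

In this invented example the raw group difference (61.6 vs. 58.1) shrinks once both groups are evaluated at the same covariate value, which is the sense in which a group difference can persist, shrink or disappear after covariates are included.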

Table 5. Comparison between males and females in the Younger and the Older Adult Groups in emotion recognition variables using neuropsychological variables and eye movements variables as confounding covariates.

Relations Between Satisfaction Profile and Emotion Recognition

Factor 3 (Work) (Z = −2.5, p = 0.02) and factor 4 (Sleep/feeding/free time) (Z = −2.5, p = 0.01) scores of SAT-P were significantly higher in the older adults than in the younger adults.

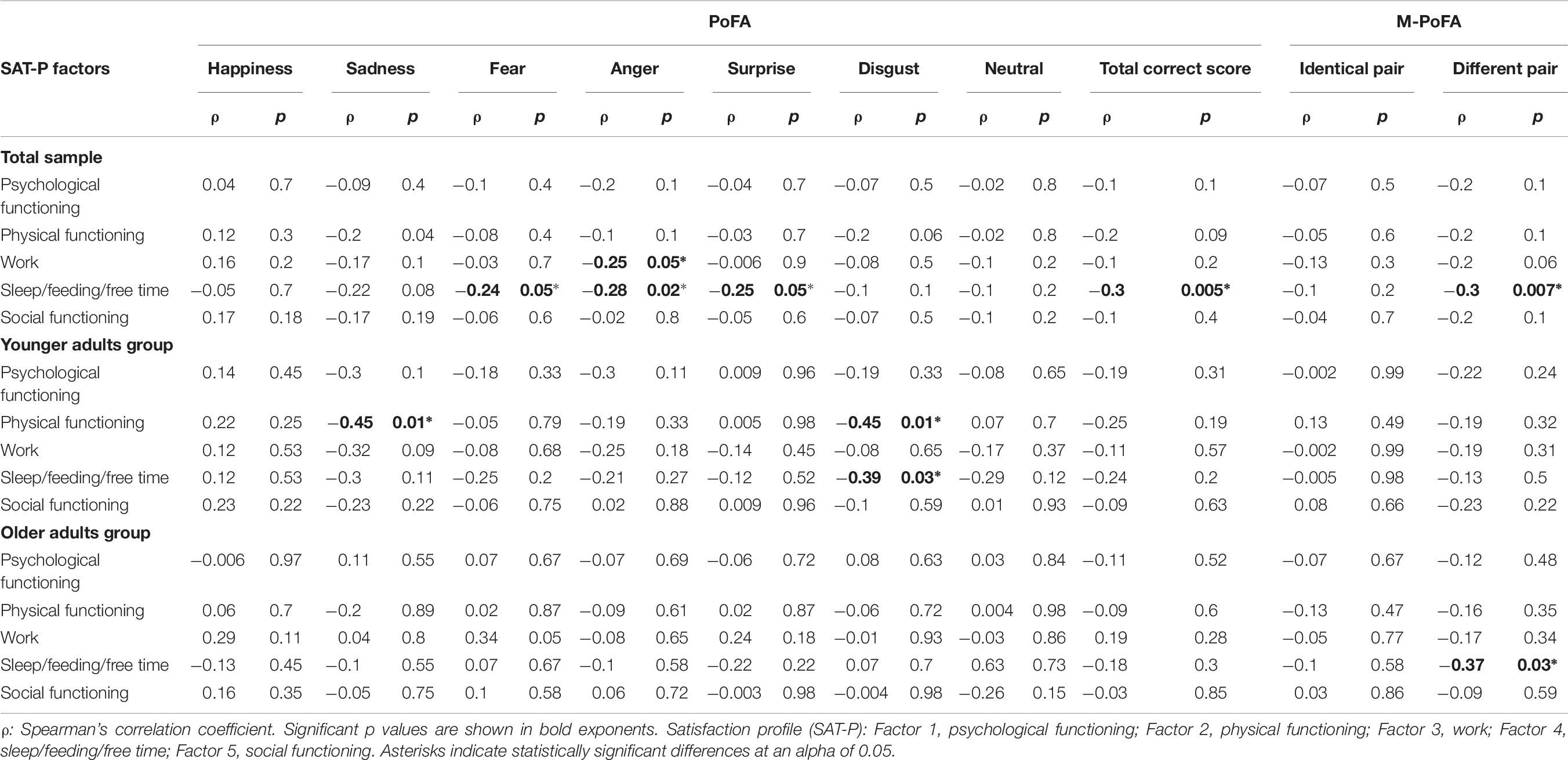

Bivariate correlations between SAT-P and emotion recognition in the total sample, in the YAG and in the OAG are documented in Table 6. A negative correlation emerged between factor 3 (Work) and anger recognition (ρ = −0.25, p = 0.05). Factor 4 of SAT-P (Sleep/feeding/free time) was negatively correlated with fear (ρ = −0.24, p = 0.05), anger (ρ = −0.28, p = 0.02), surprise recognition (ρ = −0.25, p = 0.05), PoFA total score (ρ = −0.3, p = 0.005) and M-PoFA total score for not identical emotion pair (ρ = −0.3, p = 0.007).

Table 6. Correlations between SAT-P and emotion recognition in the total sample, in the YAG and in the OAG.

As shown in Table 6, in the YAG the factor 2 (Physical functioning) was significantly negatively correlated with sadness (ρ = −0.45, p = 0.01) and disgust (ρ = −0.45, p = 0.01) recognition; factor 4 (Sleep/feeding/free time) was significantly negatively correlated with disgust recognition (ρ = −0.39, p = 0.03).

For the older adults, bivariate correlations showed a significant negative correlation between factor 4 (Sleep/feeding/free time) and recognition of not identical emotion pairs (ρ = −0.37, p = 0.03).

Discussion

The emotion recognition of a sample (n = 60) of individuals (30 males, 30 females) of different ages was examined. The aim of the study was to assess the role of age and gender in facial emotion recognition in relation to neuropsychological functions and face exploration strategies in a sample of young and old healthy people.

Differences in Emotion Recognition: The Influence of Aging

Many previous studies highlighted that older adults perform worse than younger adults in perceiving emotions, particularly when recognizing anger, sadness, and fear (Isaacowitz and Stanley, 2011; Sullivan et al., 2017), whereas a recent study underlined that age differences in emotion perception were limited to very low intensity expressions (Mienaltowski et al., 2019).

In our study, the YAG demonstrated significantly better scores on sadness, fear, surprise recognition and on PoFA total score compared to the OAG. In addition, the older adults got lower scores on matching identical Pictures of Facial Affects in M-PoFA, presenting more difficulties than younger adults in matching identical pairs of emotions, suggesting that they lost the ability to recognize the similarities or the differences between two specific emotional patterns, regardless of their meaning.

Our data are in contrast with previous works that reported age differences in two-choice matching tasks, in which emotion recognition simply requires perceiving similarities in stimulus features, only when stimuli display less intense emotions (Orgeta, 2010; Mienaltowski et al., 2013). Our paradigm required a perceptual judgment in the M-PoFA test, thus eliminating cognitive factors associated with choosing among multiple verbal labels of emotion, but the facial expressions that we used in M-PoFA, as derived from the PoFA test, represent highly intense emotional expressions (Carroll and Russell, 1997).

Furthermore, according to our data, age seems to negatively affect emotion recognition, but there are no differences in anger (in contrast with Sullivan et al., 2017), happiness, and disgust recognition. The absence of age differences in anger and happiness recognition in our sample is in accordance with the results from Mienaltowski et al. (2019) and it might be correlated to the high intensity of emotions expressed in the stimuli. The absence of differences reported in disgust may also be correlated to a more “visceral” emotion that involves social cognitive mechanisms to regulate or anticipate and avoid exposure to pathogens and toxins (Kavaliers et al., 2018), necessary to preserve physical integrity. Disgust is also an emotion linking inner somatic visceral perceptions to external stimuli. Perhaps, we could explain the preservation of disgust recognition in the older adults as a special type of attention given to the body, both because it is frailer and because in older age psychological distress is also expressed through body and somatic symptoms.

In addition, a meta-analytic review (Ruffman et al., 2008) observed a decline in older adults of emotion recognition with the exception of disgust (as in our study), with a non-significant trend for older adults to be better than younger adults in disgust recognition. Moreover, some authors (Ebner et al., 2011) observed an age-group related difference in misattribution of expressions, in which younger adults were more likely to label disgusted faces as angry, whereas older adults were more likely to label angry faces as disgusted.

Our SAT-P results, although they should be interpreted with caution, showed a tendency toward more satisfaction with some life dimensions in older adults than in younger subjects, especially with regard to sleep/feeding/free time, activities and economic factors. Moreover, in our sample, both in the older adults and in the younger subjects, satisfaction with life is negatively correlated with recognition of essentially negative emotions, such as sadness and disgust in the YAG, with anger, fear and surprise seen across the total sample. Previous studies have underlined a mood-congruent effect in processing face emotions. Voelkle et al. (2014) observed that older adults were more likely to perceive happiness in faces when in a positive mood and less likely to do so when in a negative mood, while a recent study (Lawrie et al., 2018) demonstrated how emotion perception can change when a controlled mood induction procedure is applied to alter mood in young and older subjects. Moreover, some authors (Ruffman et al., 2008) also observed that older adults present a positive bias in attention and memory for positive emotional information compared to negative information, as an adaptive strategy to maintain emotion regulation and avoid social conflict. In this sense, an eye-tracking study (Wirth et al., 2017) observed that older adults, when compared to younger adults, showed fixation patterns away from negative image content, while they reacted with greater negative emotions.

However, of the six basic emotions, only happiness is unambiguously a positive emotion. Indeed, in our sample, older adult subjects did not have difficulties in happiness recognition when compared to YAG.

Moreover, in our sample, the difficulty in recognizing patterns of emotions that are useful in discriminating different pairs of emotions also seems to be related to life satisfaction. Indeed, both in the general sample and particularly in the older adults, we find an inverse correlation between accurate comparisons of different pairs of emotions on the one hand, and some aspects of life satisfaction on the other (sleep/feeding/free time).

Considering cognitive functions, as expected, the YAG was significantly faster and more accurate in sustained attention, showing better attentional shifting abilities in TMT B-A and higher long-term visuospatial memory scores on CSSL compared to the older adults.

In our study, the older adults explored the tops of faces more than younger adults. However, a previous study (Sullivan et al., 2017) underlines that time spent by older men looking at mouths, alongside time spent by women looking at the eyes, correlated with the emotion recognition of both genders respectively. As a result, looking more at the forehead is probably a disadvantage for older males.

After covarying (ANCOVA) for cognitive function (sustained attention test, attentional shifting abilities, long term visuospatial memory abilities) and exploration strategies, the older adults showed significantly impaired abilities only with fear recognition. The older adults showed similar abilities with happiness, sadness, anger, surprise, disgust, neutral expression recognition and PoFA total score when compared to the younger adults. In accordance with a recent study (Gonçalves et al., 2018), although older adults exhibited a worse performance in neuropsychological tests with respect to the younger group, this disadvantage seemed not to be reflected in emotion identification, thus suggesting that in our study neuropsychological functioning did not influence the emotion identification ability.

Also, other studies pointed out that in aging there are both attentional differences and perceptual deficits contributing to less accurate emotion recognition (Birmingham et al., 2018) and that facial emotion perception is related to the ability of perceiving faces (face perception), remembering faces (face memory), remembering faces with emotional expressions (emotion memory), and general mental ability (Hildebrandt et al., 2015).

From our data, the impairment of fear recognition seems to be unrelated both to cognitive functions and to exploration strategies in older adults. Therefore, in our sample, the difference in emotions processing between younger and older adults seems to be related to cognitive functions and strategy of face exploration for all emotions, except for fear recognition. Fear seems to be unrelated to these components, as it is a “phylogenetically ancient” emotion, closely related to the survival of the species. Indeed, fear emotion can be processed quickly and automatically by deep brain structures (amygdala), without involving the cortical areas. In this context, some authors (Zsoldos et al., 2016) suggest that automatic processing of emotion may be preserved during aging, whereas deliberate processing (as PoFA performance in our study) is impaired.

Differences in Emotion Recognition: The Influence of Gender

Many studies underline the advantage of women in decoding emotions (Thompson and Voyer, 2014; Sullivan et al., 2017; Olderbak et al., 2018). Females show an advantage over males, even from infancy. However, the studies do not always confirm that gender differences are maintained across the age span (Olderbak et al., 2018); for instance, a meta-analysis (Thompson and Voyer, 2014) showed that the largest sex differences were for teenagers and young adults, while other studies underlined that females performed better than males in recognizing emotions in middle and older age (Demenescu et al., 2014).

In our older adult sample, females seem to show significantly higher scores in anger recognition on PoFA compared to males. On total M-PoFA, females also seem to show more ability than males to recognize paired emotions. Therefore, females showed a pattern of emotion recognition that might suggest a tendency toward more “susceptibility” to anger and better discrimination of emotions than males when faced with identical paired emotions in M-PoFA.

Some authors observed that identification of anger, sadness, and fear is easier when subjects explore the top half of the face, whereas disgust and happiness are better recognized when the bottom half of the face is explored. Identification of surprise is related both to the top and bottom half of faces (Calder et al., 2000; Sullivan et al., 2017; Birmingham et al., 2018). In our sample, females seem to explore the top half of the face earlier than males and identify anger better than males. That is, in exploring the face to decode emotions, males fixated on the forehead later than females.

Differences in Emotion Recognition: The Influence of Aging and Gender

Considering the effect of gender on emotion recognition separately within the YAG and the OAG, we observed that:

(a) In the YAG, there were no differences between males and females in emotion recognition, exploration strategies or cognitive functions. If confirmed by a larger sample, this result might be in contrast with studies finding that young women’s emotion recognition exceeds that of young men (Sullivan et al., 2017) and with the meta-analysis of Thompson and Voyer (2014) showing that the largest sex differences were for teenagers and young adults. It might also be in contrast with previous eye-tracking studies finding that young females spend more time looking at the eyes than at the mouth relative to young males (Hall et al., 2010). On the other hand, our data might be in accordance with Sullivan et al. (2017) who, in considering only eyes and mouth areas, observed that young men and young women were similar in exploration strategies since they spent the same time looking at the eyes area.

(b) In the older adults, males seem to show significantly lower scores compared to females in anger recognition, in M-PoFA total score for identical emotion pairs and in M-PoFA total correct score. Therefore, older adult males showed a tendency toward worse anger recognition. It is broadly known in the literature that females are more accurate in labeling negative emotions and negative facial affects (e.g., anger, disgust) compared to males (Montagne et al., 2005; Scholten et al., 2005). Furthermore, our results are similar to many studies observing that older men have worse emotion recognition compared to older women (Ruffman et al., 2010; Campbell et al., 2014; Demenescu et al., 2014). Better anger recognition in females is reported in many studies that demonstrated the link between attention and emotion (Wrase et al., 2003; Hofer et al., 2006; Li et al., 2008; Gupta, 2012). Emotional and attentional processing involves activation of similar brain regions such as fronto-parietal regions, anterior cingulate cortex, inferior frontal gyrus, and anterior insula; brain areas that are also recruited in the processing of emotional information. In this context, the amygdala responds differentially to faces with emotional content only when sufficient attentional resources are available to process those faces (Pessoa et al., 2002).

Old males and old females differed on many exploration strategy variables, and in particular the latter seem to look more at the forehead than the former. In contrast to the results from Sullivan et al. (2017), in our sample old males do not seem to look more at the mouth than old females, but seem to spend more time fixating on the right eye and left eye, while older adult females explore the frontal area more.

Including as covariates the eye-gaze fixations characteristics in which old males and old females differed, the difference in anger recognition between these two groups seems to disappear whereas the difference in recognition of identical pairs of emotions (M-PoFA) was maintained.

In other terms, between old males and old females, differences in anger recognition seem to be related to differences in exploration strategies. In addition to this, our study observed that differences in discrimination of same and different pairs of emotions seem to be unrelated to differences in exploration strategies. This result could allow us to hypothesize that older adult males may present a specific impairment of the individual emotion recognition pattern.

Nevertheless, our study presents some limitations. The small sample sizes did not allow a robust comparison between males and females. The high intensity of emotions represented in the PoFA and M-PoFA tasks may induce a ceiling effect in emotion recognition and may be unsuitable for sensitive and specific detection of differences in emotion recognition for healthy people. Perhaps an examination of the role of age and gender on facial emotion recognition in relation to neuropsychological functions and face exploration strategies would be advisable using dynamic stimuli and/or stimuli that depict emotions at different intensities. Many studies have reported that more ecologically valid stimuli (i.e., dynamic) can improve emotion perception in younger adults (Ambadar et al., 2005), while older and middle-aged adults benefited from dynamic stimuli, but only when the emotional displays were subtle (Grainger et al., 2015). Thus, static photographs of intense expressions as in the PoFA test seem not to offer an appropriate instrument to detect older adult abilities in interpreting real life situations. Furthermore, correlation analyses between emotion recognition and quality of life need to be corrected for multiple testing in order to obtain more robust results. Further investigations should also include neuroimaging data that allow clarification for specific involvement of brain areas and a measure of mood that can offer a better explanation for the data of perceived quality of life.
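One common way to apply a multiple-testing correction is the Holm step-down procedure. The sketch below is illustrative only: the choice of Holm is an assumption (no specific method is named in the text), and the family of tests is taken, for simplicity, to be the six total-sample p-values reported for the SAT-P correlations, whereas the full family in Table 6 is larger. The labels in `reported` are shorthand introduced here.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    # Indices of the p-values from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value is multiplied by (m - k + 1), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Total-sample correlations with SAT-P factors, p-values as printed in the text.
reported = {
    "Work vs. anger": 0.05,
    "Sleep/feeding/free time vs. fear": 0.05,
    "Sleep/feeding/free time vs. anger": 0.02,
    "Sleep/feeding/free time vs. surprise": 0.05,
    "Sleep/feeding/free time vs. PoFA total": 0.005,
    "Sleep/feeding/free time vs. not identical pairs": 0.007,
}
adjusted = holm_adjust(list(reported.values()))
print([round(p, 3) for p in adjusted])  # [0.15, 0.15, 0.08, 0.15, 0.03, 0.035]
```

Under these assumptions, only the correlations with PoFA total score and with the score for not identical pairs would stay below 0.05 after adjustment. A correction over the complete set of tests in Table 6 would be stricter.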

Conclusion

Our data confirm previous observations underlining that older adults are poorer in emotion recognition compared to younger adults, but there are no specific differences in anger, happiness, and disgust recognition. Moreover, in our study older subjects seem to show a diminished ability to recognize the similarity or the difference between two specific emotional patterns, regardless of their meaning.

As regards subjective quality of life, a better perception of some dimensions corresponds to worse recognition of emotions with negative valence. Furthermore, in the whole sample and particularly in older adults, satisfaction with life is also negatively related to the ability to recognize the emotion pattern useful in discriminating different pairs of emotions, regardless of the meaning. Also in this task, we can underline the importance of psychological aspects such as well-being and motivation.

When analyses controlling for cognitive function and exploration strategy measures were conducted, only the impairment of fear recognition in older people was unrelated to cognitive functions and to exploration strategies. Finally, considering the differences between males and females, we preliminarily observed (in the general sample) that females seem to recognize anger and “discriminate” emotions better than males. After the separation of the sample into young males and young females, old males and old females, old males showed a pattern of anger recognition that might be lower with respect to old females. Moreover, old males and old females differed in exploration strategies that appeared to determine the difference in anger recognition but not the difference in discrimination of same and different pairs of emotions.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by Local Ethics Committee USL Tuscany South-Est, Grosseto, Italy. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

IR, MM, and NM designed the experiment. NM and MM collected the data. LA analyzed the data. LA, NM, and MM wrote the manuscript. All authors provided critical feedback and gave final approval of the version to be published.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Rossi Giulia, Cantagallo Anna, and Galletti Vania for their help given in collecting the data.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2019.02371/full#supplementary-material

References

Adolphs, R. (2002). Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav. Cogn. Neurosci. Rev. 1, 21–62. doi: 10.1177/1534582302001001003

Ambadar, Z., Schooler, J. W., and Cohn, J. F. (2005). Deciphering the enigmatic face: the importance of facial dynamics in interpreting subtle facial expressions. Psychol. Sci. 16, 403–410. doi: 10.1111/j.0956-7976.2005.01548.x

Apollonio, I., Leone, M., Isella, V., Piamarta, F., Consoli, T., Villa, M. L., et al. (2005). The frontal assessment battery (FAB): normative values in an Italian population sample. Neurol. Sci. 26, 108–116. doi: 10.1007/s10072-005-0443-4

Beadle, J. N., Sheehan, A. H., Dahlben, B., and Gutchess, A. H. (2013). Aging, empathy and prosociality. J. Gerontol. B. Psychol. Sci. Soc. Sci. 70, 213–222. doi: 10.1093/geronb/gbt091

Birmingham, E., Svärd, J., Kanan, C., and Fischer, H. (2018). Exploring emotional expression recognition in aging adults using the moving window technique. PLoS One 3:10. doi: 10.1371/journal.pone.0205341

Bonnet, L., Comte, A., Tatu, L., Millot, J. L., Moulin, T., and Medeiros de Bustos, E. (2015). The role of the amygdala in the perception of positive emotions: an “intensity detector”. Front. Behav. Neurosci. 9:178. doi: 10.3389/fnbeh.2015.00178

Bressler, S. L., and Menon, V. (2010). Large-scale brain networks in cognition: emerging methods and principles. Trends Cogn Sci. 14, 277–290. doi: 10.1016/j.tics.2010.04.004

Buchanan, T., and Adolphs, R. (2003). “The role of the human amygdala in emotional modulation of long-term declarative memory,” in Emotional Cognition: From Brain to Behavior, eds S. Moore, and M. Oaksford (London: John Benjamins), 9–34. doi: 10.1075/aicr.44.02buc

Cahill, L., Haier, R. J., White, N. S., Fallon, J., Kilpatrick, L., Lawrence, C., et al. (2001). Sex-related difference in amygdala activity during emotionally influenced memory storage. Neurobiol. Learn. Mem. 75, 1–9. doi: 10.1006/nlme.2000.3999

Cahill, L., Uncapher, M., Kilpatrick, L., Alkire, M. T., and Turner, J. (2004). Sex-related hemispheric lateralization of amygdala function in emotionally influenced memory: an fMRI investigation. Learn. Mem. 11, 261–266. doi: 10.1101/lm.70504

Calder, A. J., Lawrence, A. D., and Young, A. W. (2001). Neuropsychology of fear and loathing. Nat. Rev. Neurosci. 2, 352–363. doi: 10.1038/35072584

Calder, A. J., and Young, A. W. (2005). Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 6, 641–651. doi: 10.1038/nrn1724

Calder, A. J., Young, A. W., Keane, J., and Dean, M. (2000). Configural information in facial expression perception. J. Exp. Psychol. Hum. Percept. Perform. 26, 527–551. doi: 10.1037//0096-1523.26.2.527

Campbell, A., Ruffman, T., Murray, J. E., and Glue, P. (2014). Oxytocin improves emotion recognition for older males. Neurobiol. Aging 35, 2246–2248. doi: 10.1016/j.neurobiolaging.2014.04.021

Capitani, E., Laiacona, M., and Ciceri, E. (1991). Sex differences in spatial memory: a reanalysis of block tapping long-term memory according to the short-term memory level. Ital. J. Neurol. Sci. 12, 461–466. doi: 10.1007/BF02335507

Carroll, J. M., and Russell, J. A. (1997). Facial expressions in Hollywood’s portrayal of emotion. J. Pers. Soc. Psychol. 72, 164–176. doi: 10.1037/0022-3514.72.1.164

Christianson, S. A. (1992). Handbook of Emotion and Memory: Current Research and Theory. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.

Demenescu, L. R., Mathiak, K. A., and Mathiak, K. (2014). Age- and gender-related variations of emotion recognition in pseudo words and faces. Exp. Aging Res. 40, 187–207. doi: 10.1080/0361073X.2014.882210

Ebner, N. C., He, Y., and Johnson, M. K. (2011). Age and emotion affect how we look at a face: visual scan patterns differ for own-age versus other-age emotional faces. Cogn. Emot. 25, 983–997. doi: 10.1080/02699931.2010.540817

Ekman, P. (2003). Emotions Inside Out: 130 Years After Darwin’s The Expression of the Emotions in Man and Animals. New York, NY: New York Academy of Sciences.

Ekman, P., and Friesen, W. V. (1976). Pictures of Facial Affect. Palo Alto: Consulting Psychologists Press.

Folstein, M. F., Folstein, S. E., and McHugh, P. R. (1975). “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198. doi: 10.1016/0022-3956(75)90026-6

Fusar-Poli, P., Placentino, A., Carletti, F., Landi, P., Allen, P., Surguladze, S., et al. (2009). Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci. 34, 418–432.

Giovagnoli, A. R., Del Pesce, M., Masheroni, S., Simoncelli, M., Laiacona, M., and Capitani, E. (1996). Trail making test: normative values from 287 normal adult controls. Ital. J. Neurol. Sci. 17, 305–309. doi: 10.1007/BF01997792

Gobbini, M. I., and Haxby, J. V. (2007). Neural systems for recognition of familiar faces. Neuropsychologia 45, 32–41. doi: 10.1016/j.neuropsychologia.2006.04.015

Goh, J. O. S. (2011). Functional dedifferentiation and altered connectivity in older adults: neural accounts of cognitive aging. Aging Dis. 2, 30–48.

Gonçalves, A. R., Fernandes, C., Pasion, R., Ferreira-Santos, F., Barbosa, F., and Marques-Teixeira, J. (2018). Emotion identification and aging: behavioral and neural age-related changes. Clin. Neurophysiol. 129, 1020–1029. doi: 10.1016/j.clinph.2018.02.128

Grainger, S. A., Henry, J. D., Phillips, L. H., Vanman, E. J., and Allen, R. (2015). Age deficits in facial affect recognition: the influence of dynamic cues. J. Gerontol. B. Psychol. Sci. Soc. Sci. 72, 622–632. doi: 10.1093/geronb/gbv100

Gupta, R. (2012). Distinct neural systems for men and women during emotional processing: a possible role of attention and evaluation. Front. Behav. Neurosci. 6:86. doi: 10.3389/fnbeh.2012.00086

Gupta, R., and Deák, G. O. (2015). Disarming smiles: irrelevant happy faces slow post-error responses. Cogn Process. 16, 427–434. doi: 10.1007/s10339-015-0664-2

Gupta, R., Hur, Y. J., and Lavie, N. (2016). Distracted by pleasure: effects of positive versus negative valence on emotional capture under load. Emotion 16, 328–337. doi: 10.1037/emo0000112

Gupta, R., and Raymond, J. E. (2012). Emotional distraction unbalances visual processing. Psychon. Bull. Rev. 19, 184–189. doi: 10.3758/s13423-011-0210-x

Gupta, R., Raymond, J. E., and Vuilleumier, P. (2018). Priming by motivationally salient distractors produces hemispheric asymmetries in visual processing. Psychol. Res. doi: 10.1007/s00426-018-1028-1 [Epub ahead of print].

Gupta, R., and Srinivasan, N. (2009). Emotions help memory for faces: role of whole and parts. Cogn. Emot. 23, 807–816. doi: 10.1080/02699930802193425

Gupta, R., and Srinivasan, N. (2014). Only irrelevant sad but not happy faces are inhibited under high perceptual load. Cogn. Emot. 29, 747–754. doi: 10.1080/02699931.2014.933735

Gur, R. C., Schroeder, L., Turner, T., McGrath, C., Chan, R. M., Turetsky, B. I., et al. (2002). Brain activation during facial emotion processing. Neuroimage 16(Pt1), 651–662. doi: 10.1006/nimg.2002.1097

Hall, J. K., Hutton, S. B., and Morgan, M. J. (2010). Sex differences in scanning faces: does attention to the eyes explain female superiority in facial expression recognition? Cogn. Emot. 24, 629–637. doi: 10.1080/02699930902906882

Hamann, S., Herman, R. A., Nolan, C. L., and Wallen, K. (2004). Men and women differ in amygdala response to visual sexual stimuli. Nat. Neurosci. 7, 411–416. doi: 10.1038/nn1208

Hartikainen, K. M., Ogawa, K. H., and Knight, R. T. (2000). Transient interference of right hemispheric function due to automatic emotional processing. Neuropsychologia 38, 1576–1580. doi: 10.1016/S0028-3932(00)00072-5

Haxby, J. V., Gobbini, M. I., and James, V. (2011). “Distributed neural systems for face perception,” in Oxford Handbook of Face Perception, eds G. Rhodes, A. Calder, M. Johnson, and V. Haxby (Oxford: OUP Oxford), 93–110.

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Hildebrandt, A., Sommer, W., Schacht, A., and Wilhelm, O. (2015). Perceiving and remembering emotional facial expressions - a basic facet of emotional intelligence. Intelligence 50, 52–67. doi: 10.1016/j.intell.2015.02.003

Hofer, A., Siedentopf, C. M., Ischebeck, A., Rettenbacher, M. A., Verius, M., Felber, S., et al. (2006). Gender differences in regional cerebral activity during the perception of emotion: a functional MRI study. Neuroimage 32, 854–862. doi: 10.1016/j.neuroimage.2006.03.053

Hogeveen, J., Salvi, C., and Grafman, J. (2016). ‘Emotional Intelligence’: lessons from lesions. Trends Neurosci. 39, 694–705. doi: 10.1016/j.tins.2016.08.007

Horning, S. M., Cornwell, R. E., and Davis, H. P. (2012). The recognition of facial expressions: an investigation of the influence of age and cognition. Neuropsychol. Dev. Cogn. B Aging Neuropsychol. Cogn. 19, 657–676. doi: 10.1080/13825585.2011.645011

Isaacowitz, D. M., Lockenhoff, C. E., Lane, R. D., Wright, R., Sechrest, L., Riedel, R., et al. (2007). Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychol. Aging 22, 147–159. doi: 10.1037/0882-7974.22.1.147

Isaacowitz, D. M., and Stanley, J. T. (2011). Bringing an ecological perspective to the study of aging and recognition of emotional facial expressions: past, current, and future methods. J. Nonverbal Behav. 35, 261–278. doi: 10.1007/s10919-011-0113-6

Ishai, A. (2008). Let’s face it: it’s a cortical network. Neuroimage 40, 415–419. doi: 10.1016/j.neuroimage.2007.10.040

Kavaliers, M., Ossenkopp, K. P., and Choleris, E. (2018). Social neuroscience of disgust. Genes Brain Behav. 18:e12508. doi: 10.1111/gbb.12508

Kessels, R. P., Montagne, B., Hendriks, A. W., Perrett, D. I., and de Haan, E. H. (2014). Assessment of perception of morphed facial expressions using the emotion recognition task: normative data from healthy participants aged 8-75. J. Neuropsychol. 8, 75–93. doi: 10.1111/jnp.12009

Lawrie, L., Jackson, M. C., and Phillips, L. H. (2018). Effects of induced sad mood on facial emotion perception in young and older adults. Neuropsychol. Dev. Cogn. B Aging Neuropsychol. Cogn. 26, 319–335. doi: 10.1080/13825585.2018.1438584

Li, H., Yuan, J., and Lin, C. (2008). The neural mechanism underlying the female advantage in identifying negative emotions: an event-related potential study. Neuroimage 40, 1921–1929. doi: 10.1016/j.neuroimage.2008.01.033

Lyoo, Y., and Yoon, S. (2017). Brain network correlates of emotional aging. Sci. Rep. 7:15576. doi: 10.1038/s41598-017-15572-6

Majani, G., and Callegari, S. (1998). Sat-p/Satisfaction Profile. Soddisfazione Soggettiva e Qualità Della Vita. Trento: Centro Studi Erickson.

Mancuso, M., Magnani, N., Cantagallo, A., Rossi, G., Capitani, D., Galletti, V., et al. (2015). Emotion recognition impairment in traumatic brain injury compared with schizophrenia spectrum: similar deficits with different origins. J. Nerv. Ment. Dis. 203, 87–95. doi: 10.1097/NMD.0000000000000245

Mather, M. (2016). The affective neuroscience of aging. Annu. Rev. Psychol. 67, 213–238. doi: 10.1146/annurev-psych-122414-033540

Mayer, J. D., Caruso, D. R., and Salovey, P. (2016). The ability model of emotional intelligence: principles and updates. Emot. Rev. 8, 290–300. doi: 10.1177/1754073916639667

McClure, E. B. (2000). A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychol. Bull. 126, 424–453. doi: 10.1037/0033-2909.126.3.424

McFadyen, J., Mattingley, J. B., and Garrido, M. I. (2019). An afferent white matter pathway from the pulvinar to the amygdala facilitates fear recognition. eLife 8:e40766. doi: 10.7554/eLife.40766

McRae, K., Ochsner, K. N., Mauss, I. B., Gabrieli, J. J. D., and Gross, J. J. (2008). Gender differences in emotion regulation: an fMRI study of cognitive reappraisal. Group Process. Intergroup Relat. 11, 143–162. doi: 10.1177/1368430207088035

Mienaltowski, A., Johnson, E. R., Wittman, R., Wilson, A., Sturycz, C., and Norman, J. F. (2013). The visual discrimination of negative facial expressions by younger and older adults. Vision Res. 81, 12–17. doi: 10.1016/j.visres.2013.01.006

Mienaltowski, A., Lemerise, E. A., Greer, K., and Burke, L. (2019). Age-related differences in emotion matching are limited to low intensity expressions. Neuropsychol. Dev. Cogn. B Aging Neuropsychol. Cogn. 26, 348–366. doi: 10.1080/13825585.2018.1441363

Montagne, B., Kessels, R. P. C., Frigerio, E., de Haan, E. H. F., and Perrett, D. I. (2005). Sex differences in the perception of affective facial expressions: do men really lack emotional sensitivity? Cogn. Process. 6, 136–141. doi: 10.1007/s10339-005-0050-6

Murphy, N. A., and Isaacowitz, D. M. (2010). Age effects and gaze patterns in recognizing emotional expressions: an in-depth look at gaze measures and covariates. Cogn. Emot. 24, 436–452. doi: 10.1080/02699930802664623

Olderbak, S., Wilhelm, O., Hildebrandt, A., and Quoidbach, J. (2018). Sex differences in facial emotion perception ability across the lifespan. Cogn. Emot. 22, 1–10. doi: 10.1080/02699931.2018.1454403

Orgeta, V. (2010). Effects of age and task difficulty on recognition of facial affect. J. Gerontol. 65B, 323–327. doi: 10.1093/geronb/gbq007

O’Toole, A. J., Roark, D. A., and Abdi, H. (2002). Recognizing moving faces: a psychological and neural synthesis. Trends Cogn. Sci. 6, 261–266. doi: 10.1016/S1364-6613(02)01908-3

Pessoa, L., McKenna, M., Gutierrez, E., and Ungerleider, L. G. (2002). Neural processing of emotional faces requires attention. Proc. Natl. Acad. Sci. U.S.A. 99, 11458–11463. doi: 10.1073/pnas.172403899

Pessoa, L., Padmala, S., and Morland, T. (2005). Fate of the unattended fearful faces in the amygdala is determined by both attentional resources and cognitive modulation. Neuroimage 28, 249–255. doi: 10.1016/j.neuroimage.2005.05.048

Robertson, I. H., Manly, T., Andrade, J., Baddeley, B. T., and Yiend, J. (1997). ‘Oops!’: performance correlates of everyday attentional failures in traumatic brain injured and normal subjects. Neuropsychologia 35, 747–758. doi: 10.1016/S0028-3932(97)00015-8

Ruffman, T., Halberstadt, J., and Murray, J. (2009). Recognition of facial, auditory, and bodily emotions in older adults. J. Gerontol. B Psychol. Sci. Soc. Sci. 64, 696–703. doi: 10.1093/geronb/gbp072

Ruffman, T., Henry, J. D., Livingstone, V., and Phillips, L. H. (2008). A meta-analytic review of emotion recognition and aging: implications for neuropsychological models of aging. Neurosci. Biobehav. Rev. 32, 863–881. doi: 10.1016/j.neubiorev.2008.01.001

Ruffman, T., Murray, J., Halberstadt, J., and Taumoepeau, M. (2010). Verbosity and emotion recognition in older adults. Psychol. Aging 25, 492–497. doi: 10.1037/a0018247

Schienle, A., Schafer, A., Stark, R., Walter, B., and Vaitl, D. (2005). Gender differences in the processing of disgust- and fear-inducing pictures: an fMRI study. Neuroreport 16, 277–280. doi: 10.1097/00001756-200502280-00015

Scholten, M. R. M., Aleman, A., Montagne, B., and Kahn, R. S. (2005). Schizophrenia and processing of facial emotions: sex matters. Schizophr. Res. 78, 61–67. doi: 10.1016/j.schres.2005.06.019

Srinivasan, N., and Gupta, R. (2010). Emotion-attention interactions in recognition memory for distractor faces. Emotion 10, 207–215. doi: 10.1037/a0018487

Srinivasan, N., and Gupta, R. (2011). Rapid communication: global–local processing affects recognition of distractor emotional faces. Q. J. Exp. Psychol. 64, 425–433. doi: 10.1080/17470218.2011.552981

Sullivan, S., Campbell, A., Hutton, S. B., and Ruffman, T. (2017). What’s good for the goose is not good for the gander: age and gender differences in scanning emotion faces. J. Gerontol. B Psychol. Sci. Soc. Sci. 72, 441–447. doi: 10.1093/geronb/gbv033

Sullivan, S., and Ruffman, T. (2004). Emotion recognition deficits in the elderly. Int. J. Neurosci. 114, 403–432. doi: 10.1080/00207450490270901

Sullivan, S., Ruffman, T., and Hutton, S. B. (2007). Age differences in emotion recognition skills and the visual scanning of emotion faces. J. Gerontol. B Psychol. Sci. Soc. Sci. 62, 53–60. doi: 10.1093/geronb/62.1.P53

Sze, J. A., Goodkind, M. S., Gyurak, A., and Levenson, R. W. (2012). Aging and emotion recognition: not just a losing matter. Psychol. Aging 27, 940–950. doi: 10.1037/a0029367

Takahashi, H., Matsuura, M., Yahata, N., Koeda, M., Suhara, T., and Okubo, Y. (2006). Men and women show distinct brain activations during imagery of sexual and emotional infidelity. Neuroimage 32, 1299–1307. doi: 10.1016/j.neuroimage.2006.05.049

Thompson, A. E., and Voyer, D. (2014). Sex differences in the ability to recognise non-verbal displays of emotion: a meta-analysis. Cogn. Emot. 28, 1164–1195. doi: 10.1080/02699931.2013.875889

Tippett, D. C., Godin, B. R., Oishi, K., Oishi, K., Davis, C., Gomez, Y., et al. (2018). Impaired recognition of emotional faces after stroke involving right amygdala or insula. Semin. Speech Lang. 39, 87–100. doi: 10.1055/s-0037-1608859

Uddin, L. Q., Supekar, K., Amin, H., Rykhlevskaia, E., Nguyen, D. A., Greicius, M. D., et al. (2010). Dissociable connectivity within human angular gyrus and intraparietal sulcus: evidence from functional and structural connectivity. Cereb. Cortex 20, 2636–2646. doi: 10.1093/cercor/bhq011

Voelkle, M. C., Ebner, N. C., Lindenberger, U., and Riediger, M. (2014). A note on age differences in mood-congruent vs. mood-incongruent emotion processing in faces. Front. Psychol. 5:635. doi: 10.3389/fpsyg.2014.00635

Vuilleumier, P., and Pourtois, G. (2007). Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia 45, 174–194. doi: 10.1016/j.neuropsychologia.2006.06.003

West, J. T., Horning, S. M., Klebe, K. J., Foster, S. M., Cornwell, R. E., Perrett, D., et al. (2012). Age effects on emotion recognition in facial displays: from 20 to 89 years of age. Exp. Aging Res. 38, 146–168. doi: 10.1080/0361073X.2012.659997

Wirth, M., Isaacowitz, D. M., and Kunzmann, U. (2017). Visual attention and emotional reactions to negative stimuli: the role of age and cognitive reappraisal. Psychol. Aging 32, 543–556. doi: 10.1037/pag0000188

Wong, B., Cronin-Golomb, A., and Neargarder, S. (2005). Patterns of visual scanning as predictors of emotion identification in normal aging. Neuropsychology 19, 739–749. doi: 10.1037/0894-4105.19.6.739

Woolley, J. D., Strobl, E. V., Sturm, V. E., Shany-Ur, T., Poorzand, P., Grossman, S., et al. (2015). Impaired recognition and regulation of disgust is associated with distinct but partially overlapping patterns of decreased gray matter volume in the ventroanterior insula. Biol. Psychiatry 78, 505–514. doi: 10.1016/j.biopsych.2014.12.031

Wrase, J., Klein, S., Gruesser, S. M., Hermann, D., Flor, H., Mann, K., et al. (2003). Gender differences in the processing of standardized emotional visual stimuli in humans: a functional magnetic resonance imaging study. Neurosci. Lett. 348, 41–45. doi: 10.1016/s0304-3940(03)00565-2

Yassin, W., Callahan, B. L., Ubukata, S., Sugihara, G., Murai, T., and Ueda, K. (2017). Facial emotion recognition in patients with focal and diffuse axonal injury. Brain Inj. 31, 624–630. doi: 10.1080/02699052.2017.1285052

Keywords: emotion recognition, gender differences, age differences, eye movements, cognitive functioning, satisfaction of life

Citation: Abbruzzese L, Magnani N, Robertson IH and Mancuso M (2019) Age and Gender Differences in Emotion Recognition. Front. Psychol. 10:2371. doi: 10.3389/fpsyg.2019.02371

Received: 19 June 2019; Accepted: 04 October 2019; Published: 23 October 2019.

Edited by:

Marie-Helene Grosbras, Aix-Marseille Université, France

Reviewed by:

Cali Bartholomeusz, University of Melbourne, Australia

Rashmi Gupta, Indian Institute of Technology Bombay, India

Copyright © 2019 Abbruzzese, Magnani, Robertson and Mancuso. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Laura Abbruzzese, laura.abbruzzese@libero.it
