- ¹Center for Healthcare Organization and Implementation Research, VA Boston Healthcare System, Boston, MA, United States

- ²Harvard Medical School, Boston, MA, United States

- ³Center of Innovation for Accelerating Discovery and Practice Transformation, Durham VA Health Care System, Durham, NC, United States

- ⁴Duke University School of Medicine, Durham, NC, United States

- ⁵Center for Innovation to Implementation, VA Palo Alto Health Care System, Menlo Park, CA, United States

Technology can improve implementation strategies' efficiency, simplifying progress tracking and removing distance-related barriers. However, incorporating technology is meaningful only if the resulting strategy is usable and useful. Hence, we must systematically assess technological strategies' usability and usefulness before employing them. Our objective was therefore to adapt the effort-vs-impact assessment (commonly used in systems science and operations planning) to decision-making for technological implementation strategies. The approach includes three components: assessing the effort needed to make a technological implementation strategy usable, assessing its impact (i.e., usefulness regarding performance/efficiency/quality), and deciding whether/how to use it. The approach generates a two-by-two effort-vs-impact chart that categorizes the strategy by effort (little/much) and impact (small/large), which serves as a guide for deciding whether/how to use the strategy. We provide a case study of applying this approach to design a package of technological strategies for implementing a 5 A's tobacco cessation intervention at a Federally Qualified Health Center. The effort-vs-impact chart guides stakeholder-involved decision-making around considered technologies. Specification of less technological alternatives helps tailor each technological strategy within the package (minimizing the effort needed to make the strategy usable while maximizing its usefulness), aligning it to organizational priorities and clinical tasks. Our three-component approach enables methodical and documentable assessments of whether/how to use a technological implementation strategy, building on stakeholder-involved perceptions of its usability and usefulness. As technology advances, results of effort-vs-impact assessments will likely also change. Thus, even for a single technological implementation strategy, the three-component approach can be repeatedly applied to guide implementation in dynamic contexts.

Introduction

Technology shapes both interventions and strategies for implementing interventions. Technological interventions are receiving increased attention and undergoing enhanced specification [e.g., (1)]. For mental healthcare, behavioral intervention technologies are being actively specified and evaluated for their potential to both broaden the interventions' reach and deliver the interventions through previously unexplored modalities (2, 3). However, such specification is not yet available for technological implementation strategies, including when and how to use them.

We define technological implementation strategies as “methods or techniques that use information and communications technology to enhance the adoption, implementation, and sustainability of a clinical program or practice.” This definition builds on the World Health Organization's (WHO's) definition of eHealth (“the use of information and communications technology in support of health and health-related fields”) (4) and Proctor and colleagues' definition of implementation strategies (“methods or techniques used to enhance the adoption, implementation, and sustainability of a clinical program or practice”) (5). WHO's definition concerns technology as it is used in health and health-related fields, without mention of implementation strategies, whereas Proctor and colleagues' definition concerns implementation strategies, without mention of technology. Because the technological implementation strategies discussed here lie at the intersection of these two concepts, our definition combines the two pre-existing definitions.

Technology can improve implementation strategies' efficiency, simplifying progress tracking and removing distance-related barriers. For example, virtual implementation facilitation that uses telecommunication to implement measurement-based mental health care in the primary care setting (6) minimizes the need for implementation experts to travel to the setting in person. Electronic audit-and-feedback, as opposed to feedback delivered verbally or on paper, can speed the implementation of treatment guidelines for concurrent substance use and mental disorders (7).

As technology advances, and as the implementation science field seeks innovative implementation strategies, there are increasing opportunities for new technological implementation strategies. But incorporating technology is meaningful only if the resulting strategy is both usable (i.e., easy to use) and useful (i.e., helps improve performance/efficiency/quality, e.g., by supporting equitable access to healthcare services for vulnerable populations). Hence, we must systematically assess technological strategies' usability and usefulness before employing them. This assessment is especially needed for implementing evidence-based mental healthcare interventions, given that technological strategies such as virtual implementation facilitation and electronic audit-and-feedback are playing an increasing role in implementation. The growing number of options for technological implementation strategies calls for an approach (such as the one introduced in this article) that implementation efforts can use to decide methodically whether and how to use the strategies.

In this article, we (i) outline an adaptation of the effort-vs-impact assessment method from systems science / operations planning (8) as a three-component approach to this decision-making and (ii) illustrate the approach with a case study: the development of a package of implementation strategies for increasing evidence-based tobacco cessation at a Federally Qualified Health Center (FQHC). Specifically, section The Three-Component Approach to Decide Whether/How to Use a Technological Implementation Strategy describes the general three-component approach, section Case Study: Developing a Package of Technological Implementation Strategies to Promote Evidence-Based Tobacco Cessation at an FQHC describes a specific application of the approach, and section Discussion summarizes the approach and discusses its implications.

The Three-Component Approach to Decide Whether/How to Use a Technological Implementation Strategy

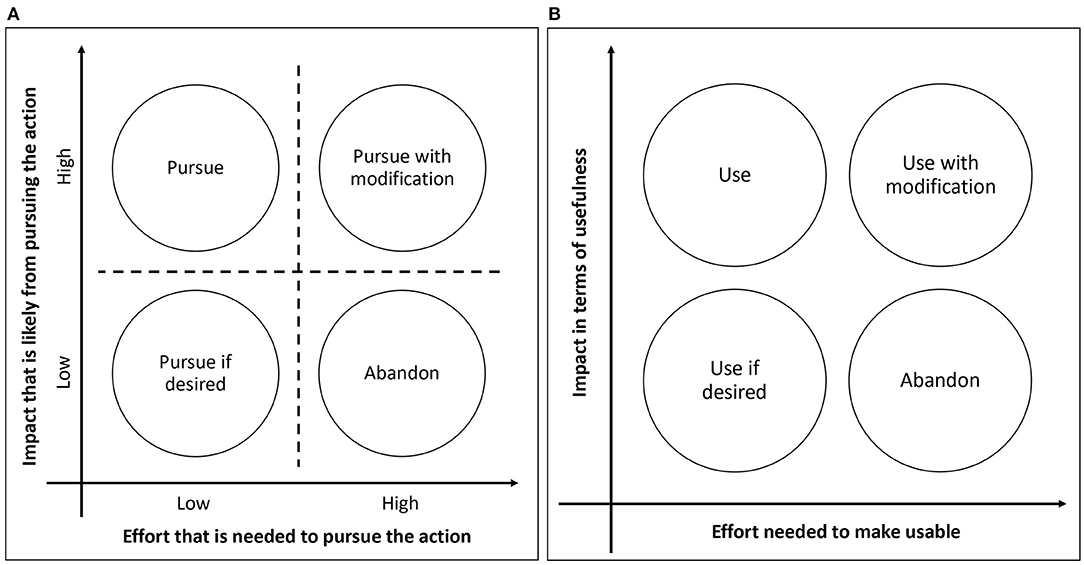

The three components are an assessment of the effort required to use the strategy, an assessment of the strategy's potential impact, and a decision whether/how to use the strategy. The approach uses a chart-based visualization (Figure 1A) to categorize a potential action as (i) requiring little effort to make a big impact, (ii) requiring little effort to make a small impact, (iii) requiring a lot of effort to make a big impact, or (iv) requiring a lot of effort to make a small impact. The combined consideration of the effort (low/high) and the impact (low/high) serves as a rubric for deciding whether/how to pursue the action [in our case, “action” refers to the use of a technological implementation strategy, e.g., using electronic audit-and-feedback to implement treatment guidelines for concurrent substance use and mental disorders (7)]. For the electronic audit-and-feedback example, effort considerations could involve the time and resources potentially needed to install the electronic audit-and-feedback software and train mental healthcare staff in its use, and impact considerations could involve the potential increase in mental healthcare staff's awareness of implementation progress, in turn encouraging higher use of the evidence-based intervention.

Figure 1. (A) An effort-vs-impact chart. (B) An adapted effort-vs-impact chart for assessing whether/how to use a technological implementation strategy.

The method is widely applied in operations planning (9, 10) and increasingly used in healthcare operations and quality improvement [e.g., the Agency for Healthcare Research and Quality (AHRQ)'s guide to implementing a pressure injury prevention program (11) recommends the method for prioritizing among interventions]. For example, Kashani and colleagues used the effort-vs-impact assessment as part of implementing quality improvement initiatives that involved critical care fellows (12), and Fieldston and colleagues used the assessment as a key tool for rapid-cycle improvements implemented at a large independent hospital (13). These are just a few examples; the effort-vs-impact assessment has been used by numerous initiatives, from individual clinics to health care systems, to prioritize and select among potential interventions to implement change in health care delivery (14–16). For each intervention, the effort needed for implementation (e.g., whether needed resources are already available) and the likely impact of implementation (especially compared to other options) are considered. The utility of the approach is underpinned by strategic change management concepts (17). Below, we describe how we adapted the method for decision-making around technological implementation strategies.

Before Initiating the Three-Component Approach

As for any implementation strategy, the technological implementation strategy of interest must be named, defined, and specified (5) prior to initiating the three-component approach, to ensure that all stakeholders have an explicit, shared understanding of the strategy. For instance, a description of a strategy that adds clinical reminders [e.g., on compliance with a mental health clinical practice guideline (18)] should specify (i) what triggers the reminder, (ii) how often, (iii) for whom, and (iv) which actions qualify as attending to the reminder. Aligning to an established framework in describing the strategy [e.g., (5)] is recommended, as this facilitates subsequent comparisons to other strategies (both technological and non-technological).
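One way to make the four specification items above explicit and checkable before the assessment begins is to record them in a small structured form. The sketch below is hypothetical: the class name, field names, and example values are illustrative assumptions that simply mirror items (i) to (iv), not a prescribed format from any framework.

```python
from dataclasses import dataclass


@dataclass
class ReminderSpecification:
    """Hypothetical record mirroring items (i)-(iv) for a clinical-reminder strategy."""
    name: str
    trigger: str                   # (i) what triggers the reminder
    frequency: str                 # (ii) how often it appears
    target_users: list[str]        # (iii) for whom it appears
    qualifying_actions: list[str]  # (iv) which actions count as attending to it

    def missing_items(self) -> list[str]:
        """Return the names of specification items that were left empty."""
        items = ("trigger", "frequency", "target_users", "qualifying_actions")
        return [item for item in items if not getattr(self, item)]


# Illustrative values only; a real specification would come from stakeholders.
reminder = ReminderSpecification(
    name="Guideline-compliance reminder",
    trigger="Encounter opened for a patient due for a guideline-recommended assessment",
    frequency="Once per encounter until addressed",
    target_users=["primary care provider"],
    qualifying_actions=["assessment documented", "reason for deferral documented"],
)
print(reminder.missing_items())
```

An empty list indicates that every item has been filled in; a non-empty list names the items stakeholders still need to agree on before Components 1 to 3 proceed.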

Throughout the Approach

Comprehensive stakeholder involvement and consideration of alternatives are key. Each component should directly involve potential users of the technological implementation strategy, for example to gauge their familiarity with the technology. Also important is continuous input from the health system's technology infrastructure leads and decision makers (e.g., the information technology department), to gauge the availability of resources for any infrastructure changes and trainings the technology would require. For example, trainings for using virtual communication platforms are a key aspect of implementing the delivery of evidence-based telemental healthcare, which has become particularly prevalent since the COVID-19 pandemic (19). Explicit articulation of potential alternative implementation strategies is recommended, so that the expected effort and impact of the strategy under consideration can be compared with those of the alternatives.

Component 1: Assess the Potential Effort Needed to Make the Technological Implementation Strategy Usable

This first component aims to answer:

• How available is the technological infrastructure (e.g., equipment, information system) to potential users (i.e., individuals or teams responsible for conducting the implementation)?

• How familiar is the technology to potential users – e.g., how much training is needed?

A variety of methods can be used to gather and analyze stakeholder input on these questions. Choice of methods should be based on feasibility and can include questionnaires, facilitated group discussion, or both. The resulting assessed potential effort (e.g., expected effort to use a virtual communication platform for implementing evidence-based telemental healthcare) could thus be expressed quantitatively [e.g., if the questionnaire asked for responses on a Likert scale (20, 21)] or qualitatively [e.g., regarding consensus reached during the discussion (22, 23)]. Regardless of the method of assessing effort, defining “more or less effort compared to what” is necessary to ensure that stakeholders provide constructive feedback. Hence, as mentioned above, it is important to explicitly articulate and share available alternative implementation strategies.
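When effort is gathered quantitatively, a per-option summary can make the “compared to what” explicit. The sketch below assumes a hypothetical 1-to-5 Likert item (1 = very little effort, 5 = very much effort) and invented responses; the option names and numbers are illustrative, not study data. It reports the median, a common summary for ordinal Likert responses.

```python
from statistics import median

# Hypothetical 1-5 Likert responses (1 = very little effort, 5 = very much effort),
# one list per option. Names and numbers are illustrative assumptions.
effort_ratings = {
    "Virtual communication platform": [2, 3, 2, 4, 3],
    "In-person sessions (alternative)": [4, 4, 5, 3, 4],
    "Telephone-only sessions (alternative)": [2, 2, 3, 2, 1],
}


def summarize_effort(ratings: dict[str, list[int]]) -> list[tuple[str, float]]:
    """Return (option, median rating) pairs ordered from least to most effort."""
    summary = [(option, median(values)) for option, values in ratings.items()]
    return sorted(summary, key=lambda pair: pair[1])


for option, med in summarize_effort(effort_ratings):
    print(f"{option}: median effort {med}")
```

The ordering, rather than the absolute numbers, is what feeds the later comparison on the effort-vs-impact chart; a team using facilitated discussion instead could record a consensus ranking in the same shape.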

Component 2: Assess the Potential Impact (i.e., Usefulness) of Using the Technological Implementation Strategy

This second component aligns with Shaw and colleagues' three eHealth domains (24) and aims to answer: To what extent would the technological strategy enable

• better monitoring, tracking, and informing of implementation progress?

• better communication among implementation stakeholders?

• better collection, management, and use of implementation data?

To ensure that innovative technologies do not inadvertently increase health disparities, this component also assesses to what extent the technological strategy might increase, maintain, or reduce disparities.
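If impact is rated quantitatively, the three domains and the disparities question can be captured together per respondent. The sketch below is a hypothetical illustration: the 0-to-10 domain ratings, the unweighted mean used as a single domain score, and the rule that flags any expected increase in disparities for discussion are all assumptions, not part of the approach as published.

```python
from dataclasses import dataclass
from enum import Enum
from statistics import mean


class DisparityEffect(Enum):
    INCREASE = "increase"
    MAINTAIN = "maintain"
    REDUCE = "reduce"


@dataclass
class ImpactAssessment:
    """Hypothetical per-respondent impact ratings (0-10) on the three eHealth domains."""
    monitoring: int     # monitoring, tracking, and informing of implementation progress
    communication: int  # communication among implementation stakeholders
    data_use: int       # collection, management, and use of implementation data
    disparities: DisparityEffect

    def domain_score(self) -> float:
        """Unweighted mean of the three domain ratings (an illustrative choice)."""
        return mean([self.monitoring, self.communication, self.data_use])

    def needs_equity_review(self) -> bool:
        """Flag the strategy for discussion if it is expected to increase disparities."""
        return self.disparities is DisparityEffect.INCREASE


rating = ImpactAssessment(8, 6, 7, DisparityEffect.MAINTAIN)
print(rating.domain_score(), rating.needs_equity_review())
```

Keeping the disparities judgment separate from the domain score means a strategy with high rated usefulness is still surfaced for discussion if stakeholders expect it to widen disparities.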

Notably, this component need not be conducted separately from Component 1 – e.g., the same questionnaire and/or group discussion can be used to gather stakeholder perspectives, or Component 2 can come before Component 1. Similar to the assessed effort under Component 1, the resulting assessed potential impact can be expressed quantitatively or qualitatively, and articulation of alternative strategies for comparison is highly recommended. Importantly, conducting these components does not preclude implementation teams from using a separate conceptual model or framework to guide their planning and/or evaluation (25). Rather, such a model or framework can provide a systematic structure through which to assess potential effort and impact as outlined under Components 1 and 2. For example, mental health-related implementation efforts being guided by the Integrated Promoting Action on Research Implementation in Health Services model (26) – e.g., (27) – can specifically assess whether the technological strategy would enable better communication between the facilitators and the recipients of the implementation, as defined by the model's “Facilitation” and “Recipients” constructs, respectively.

Component 3: Decide Whether to Use or Abandon the Technological Implementation Strategy

This component, based on the potential effort (i.e., usability) and potential impact (i.e., usefulness) assessed through Components 1 and 2 above, places the technological implementation strategy being considered onto the effort-vs-impact chart, now adapted to the context of deciding whether to use the strategy for implementation (Figure 1B). For instance, when considering the use of electronic audit-and-feedback to implement mental health treatment guidelines (7), potential effort might include the trainings needed to learn the electronic audit-and-feedback system, while potential impact might include increased staff awareness of implementation progress (and consequently increased motivation to work on implementation). Especially when it is unclear which quadrant the strategy belongs to, it can help to place the previously articulated alternative strategies onto the same chart for comparison. As for Components 1 and 2, it is recommended that this component closely involve stakeholders (28), who can clarify their previously expressed perspectives, when needed, to collaboratively reach a decision with the implementation team.
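Placing a strategy and its alternatives on one chart can be sketched as a grouping step followed by a plain-text rendering. This assumes effort and impact have already been summarized on a common 0-to-10 scale and uses a midpoint of 5 as the cut point; the option names, positions, and midpoint are hypothetical. When assessments are purely qualitative, stakeholders would instead place options by consensus.

```python
# Hypothetical (effort, impact) positions on 0-10 axes; names and numbers are illustrative.
options = {
    "Electronic audit-and-feedback": (6.0, 8.0),
    "Paper-based audit-and-feedback (alternative)": (3.0, 5.5),
    "Verbal feedback at staff meetings (alternative)": (2.0, 3.0),
}


def place_on_chart(
    options: dict[str, tuple[float, float]], midpoint: float = 5.0
) -> dict[tuple[str, str], list[str]]:
    """Group options into the four quadrants keyed by (effort level, impact level)."""
    quadrants: dict[tuple[str, str], list[str]] = {
        ("little", "large"): [], ("much", "large"): [],
        ("little", "small"): [], ("much", "small"): [],
    }
    for name, (effort, impact) in options.items():
        effort_level = "much" if effort >= midpoint else "little"
        impact_level = "large" if impact >= midpoint else "small"
        quadrants[(effort_level, impact_level)].append(name)
    return quadrants


def render(quadrants: dict[tuple[str, str], list[str]]) -> None:
    """Print the chart row by row: large-impact quadrants first, then small-impact ones."""
    for impact_level in ("large", "small"):
        for effort_level in ("little", "much"):
            names = ", ".join(quadrants[(effort_level, impact_level)]) or "(none)"
            print(f"[{effort_level} effort / {impact_level} impact] {names}")


render(place_on_chart(options))
```

Here the paper-based alternative lands in a different quadrant from the electronic strategy, which is the kind of contrast that can prompt stakeholders to discuss whether the extra effort of the technological version is justified.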

Case Study: Developing a Package of Technological Implementation Strategies to Promote Evidence-Based Tobacco Cessation at an FQHC

We present an ongoing implementation pilot study that illustrates our approach for decision-making around technological implementation strategies. This study, entitled Stakeholder-Engaged Implementation of Smoking Cessation Health Services (PI: SW), is developing a tailored package of technological implementation strategies to facilitate adoption and sustainment of an evidence-based tobacco cessation intervention [the 5 A's (29)] at an FQHC. Potential barriers to integration of technological tools for tobacco cessation include disruption of clinic workflow, belief that technology is burdensome, and perceived lack of usefulness of technology (30, 31). Since technology use can be affected by multiple contextual factors (32), it is essential for implementation to account for these factors. Therefore, the study combined implementation mapping methodology (33) with our three-component approach to choose implementation strategies.

Implementation Setting and Population

The implementation site is an FQHC where 75% of patients are at or below 200% of the federal poverty level (34). Intervening on patient tobacco use was deemed crucial by the organization because tobacco use remains a leading cause of death in their region (35) and is highest among those at or below 200% of the federal poverty level (36). The FQHC serves over 30,000 patients per year, 92% of whom identify as a racial and/or ethnic minority (49% Latinx and 73% Black/African American) and 55% of whom are uninsured. Patients have a variety of healthcare needs including tobacco/vaping cessation, substance abuse treatment, preventative care, urgent care, acute illness, and management of chronic illnesses. Clinic staff at the FQHC have diverse educational backgrounds, clinical roles, and specializations (adult vs. pediatric). Staff include physicians, physician assistants, nurse practitioners, nursing staff, medical assistants, and behavioral health specialists. At the FQHC, previous efforts to implement an evidence-based specialty smoking cessation clinic were not sustained due to staff turnover. The interdisciplinary research team, led by SW, developed an innovative implementation plan to increase provision of evidence-based tobacco cessation at the FQHC. Research staff entered into a formal collaborative arrangement with FQHC clinical and administrative leadership to share decision-making for the research project.

Target Intervention

The purpose of this project was to improve adherence to the target intervention, the “5 A's.” The 5 A's intervention is part of the AHRQ clinical guidelines for tobacco cessation (29). It consists of five sequential steps to tobacco cessation: Ask, Advise, Assess, Assist, and Arrange. Despite evidence supporting its effectiveness, medical provider adherence rates to the 5 A's are low (37). Moreover, there are documented healthcare inequities nationally in the provision of tobacco cessation treatment (38). Specific to the Assist and Arrange steps at this FQHC, previous efforts to implement an evidence-based specialty tobacco cessation clinic encountered barriers to adoption, and ultimately the tobacco cessation clinic was not sustained due to staff turnover. Additionally, for pediatric healthcare providers, there are key barriers to completing the Ask step regarding electronic nicotine delivery systems (ENDS; e.g., e-cigarettes or vape pens), including inadequate screening tools and providers lacking information on slang language (39).

Overall Study Design and Participants: Applying the Three-Component Approach

All study procedures were approved by the Duke University Health System Institutional Review Board. The study used implementation mapping methodology (33) combined with the three-component approach outlined above. First, the study team created a logic model of the problem (i.e., an outline of barriers to 5 A's completion). A sample of N = 12 healthcare staff members was recruited to complete telephone interviews. Quota sampling was used to ensure representation of different staff types and clinic types, so that potential clinic-specific barriers to 5 A's completion could be adequately assessed. Staff participants included two medical assistants, one patient educator, one nurse, two behavioral health specialists, two advanced practice providers, and four physicians. Participants worked in varying contexts, including Pediatrics, Family Medicine, and Internal Medicine. Interviews were audio recorded and transcribed, and the transcripts were analyzed using the RADaR technique (40) to detect salient themes in the data. Methodology and findings specific to each component are described below.

Following the staff interviews, we developed a logic model of change through a guided survey with FQHC administrators and clinic leads (N = 7). Leaders (e.g., medical chiefs, supervisors, and administrators) who oversaw varying types of clinics and staff, including Behavioral Health, Pediatrics, Family Medicine, and Internal Medicine, were recruited. The survey combined closed-ended numeric questions and open-ended text questions. Quantitative responses were analyzed using exploratory descriptive statistics, and open-ended responses were analyzed using the RADaR technique (40).

Full results of the study are reported elsewhere (41). For the purpose of this methodology paper, we will focus on one specific potential implementation strategy. In creating the Logic Model of the Problem, one theme of staff interviews was an emphasis on numerous priorities and tasks during clinical encounters with patients. This long task list for clinical encounters was contrasted with often having insufficient time. Completing the 5 A's was viewed as important but difficult because it can be seen as “one more thing to do” and may be de-prioritized over other seemingly more urgent clinical matters.

In creating the Logic Model of Change (i.e., the next step toward creating an implementation package), we ascertained that integrating each step of the 5 A's into the electronic health record (EHR) could help overcome time and effort barriers to 5 A's adherence, since it would both remind staff to complete tobacco screenings and clearly communicate that assessing and treating tobacco use is a high programmatic priority. Below we detail how the three-component approach was used to assess the potential effort and impact of integrating the 5 A's into the EHR (Note: “Effort” and “impact” mentioned in subsections Component 1: Assess the Potential Effort Needed to Make the “Integrating the 5 A's Into the EHR” Strategy Usable through Component 3: Assess the Potential Effort-vs-Impact of the “Integrating the 5 A's Into the EHR” Strategy to Decide Whether to Use, Use-if-Desired, Use-With-Modification, or Abandon It refer to “expected effort” and “expected impact.”).

Component 1: Assess the Potential Effort Needed to Make the “Integrating the 5 A's Into the EHR” Strategy Usable

Procedure

Within interviews with staff participants, study staff asked the following questions related to the potential effort of integrating the 5 A's into the EHR: What are your thoughts about our embedding the 5 A's into [EHR Name]? What might be specific barriers? What are possible issues or complications that may arise in using a computerized 5 A's tool in [EHR Name] in your clinic? To what extent might the implementation of a computerized 5 A's tool in [EHR Name] compete with other workload demands and patient care priorities in your clinic?

Building on the staff interview findings, the guided survey for FQHC administrators and clinic leaders asked, for each step of the 5 A's, “How much logistical/organizational effort will it take to integrate the [Ask/Advise/Assess/Assist/Arrange Step] into [EHR Name]?” Leaders were asked to consider how compatible the tool would be with current clinic flow as well as how much effort it would take to train staff to use the new tool. Responses were entered on a 0 to 10 point scale, where 0 indicated “no effort at all” and 10 indicated “maximum effort.” Questions were also asked regarding how to ensure that each 5 A's step is consistently completed, and what relevant performance objectives need to be established.

Results

Data from the staff interviews underwent the structured data charting and data reduction steps of the RADaR technique (40), yielding the following themes related to the potential effort of integrating the 5 A's into the EHR. (i) The most salient effort-related theme (voiced by n = 7 participants) was concern that adding items to the EHR, which already contains many features and forms, could be burdensome to staff (Wilson et al., (41) in preparation). (ii) However, other staff members (n = 4) downplayed the effort of integrating the 5 A's, with one participant noting that integration is less burdensome “if done the right way.”

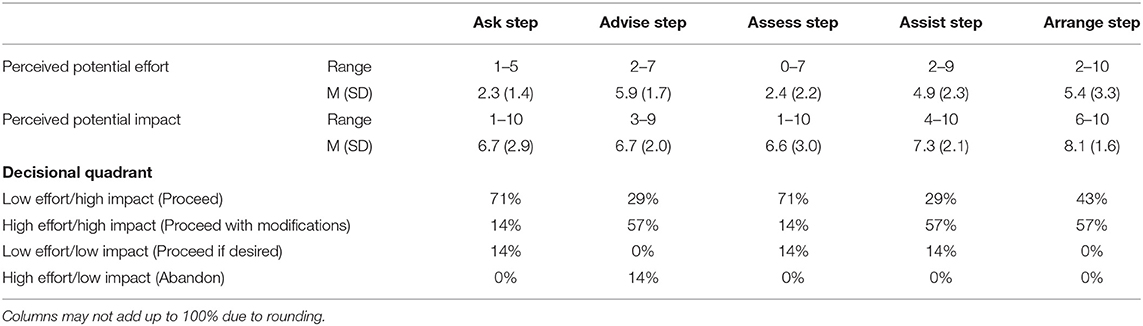

See Table 1 for a summary of findings from the FQHC leaders' survey. There was variability across steps of the 5 A's with regard to how much effort would be involved in integrating that step into the EHR. Applying the RADaR technique (40) to the open-ended survey data gave rise to the following themes related to considerations for effort. (i) Some steps of the 5 A's (most notably the first “Ask” step) were already being captured in the EHR. (ii) Also, ancillary tasks and features would require additional effort (e.g., setting up trainings, peer reviews of documentation, and tracking dashboards).

Component 2: Assess the Potential Impact (i.e., Usefulness) of Using the “Integrating the 5 A's Into the EHR” Strategy

Procedure

Clinical staff participants were asked several questions related to the potential impact of integrating the 5 A's into the EHR: What are your thoughts about our embedding the 5 A's into [EHR Name]? What would be helpful about doing this? Will it replace or enrich current stop-smoking practices? Why/how?

Interviews, focus group discussions, and structured questionnaires are assessing the impact of using the “Integrating the 5 A's into the EHR” strategy. The research team is seeking additional participant perspectives regarding the benefits of integrating the 5 A's for tobacco cessation into the EHR, including how it may enrich current tobacco cessation practices. Then, building on the interviews, the guided survey for clinic leaders asks, for each step of the 5 A's, how the tool will help enhance the quality, tracking/monitoring, and communication around the clinic's tobacco cessation care.

Results

Data from the staff interviews were methodically charted and structurally reduced using the RADaR technique (40), which led to the following themes related to the potential organizational and clinical impact of integrating the 5 A's into the EHR. (i) A majority of staff participants (n = 7) noted the helpfulness of integrating the 5 A's more closely into the EHR. (ii) One participant did not see the 5 A's as helpful to their patient population, noting that they did not view the prevalence of tobacco use in their pediatric population as an issue [Regarding this perspective, it is important to note that – contrary to this stated staff opinion – pediatric clinical guidelines recommend using the 5 A's for tobacco/vaping use for every adolescent patient (42)]. (iii) Regarding clinical impact, staff participants also noted several logistical barriers to 5 A's fidelity that would not be overcome merely by integrating the 5 A's into the EHR, such as billing not covering follow-up calls, overbooking in clinics, and staff burnout.

Regarding organizational leadership perspectives on the impact of integrating the 5 A's into the EHR, assessments of impact varied (Table 1). In general, perceived potential impact was rated highly. Open-ended responses were analyzed using the RADaR technique (40), which indicated the following themes. (i) Leaders had varying perspectives on how much impact would be made by integrating the 5 A's into the EHR because some elements of the 5 A's were already captured within the EHR. (ii) Additionally, there were several ideas from leadership regarding ways to maximize impact (e.g., supervisor dashboards, peer review of charted notes, requiring “hard stops” that would not allow a provider to complete an encounter until tobacco use is assessed).

Component 3: Assess the Potential Effort-vs-Impact of the “Integrating the 5 A's Into the EHR” Strategy to Decide Whether to Use, Use-if-Desired, Use-With-Modification, or Abandon It

Procedure

In the leader survey, branching logic routed leader participants to different questions based on their effort and impact ratings. Each 0-to-10 rating was categorized as “low” (less than 5) or “high” (5 or greater). Based on these categorizations for each 5 A's step, responses were assigned to one of four quadrants: (1) high impact/high effort – use with modifications, (2) high impact/low effort – use, (3) low impact/high effort – abandon, or (4) low impact/low effort – use if desired. Leaders were then presented with an image and text feedback tailored to their responses. This tailored feedback stated which effort-vs-impact decisional quadrant they fell into and what the typical way forward for that quadrant would be (e.g., use with modifications). Each leader was then asked open-ended questions about whether they agreed and what next steps would be involved.
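The categorization rule above can be sketched directly: each 0-to-10 rating is split at 5, and the resulting (impact, effort) pair selects one of the four decisional quadrants. The function names and the example ratings below are hypothetical; only the cut point and the quadrant-to-decision mapping come from the procedure described here.

```python
def categorize(rating: float) -> str:
    """Map a 0-10 rating to 'low' (< 5) or 'high' (>= 5)."""
    if not 0 <= rating <= 10:
        raise ValueError("ratings must lie on the 0-10 scale")
    return "high" if rating >= 5 else "low"


# (impact category, effort category) -> typical way forward for that quadrant
DECISIONS = {
    ("high", "high"): "use with modifications",
    ("high", "low"): "use",
    ("low", "high"): "abandon",
    ("low", "low"): "use if desired",
}


def route_leader(ratings: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Return the suggested way forward for each 5 A's step.

    `ratings` maps a step name to that leader's (effort, impact) ratings.
    """
    return {
        step: DECISIONS[(categorize(impact), categorize(effort))]
        for step, (effort, impact) in ratings.items()
    }


# Hypothetical ratings from one leader (effort, impact); not study data.
leader_ratings = {
    "Ask": (2, 4),
    "Advise": (4, 7),
    "Assess": (5, 8),
    "Assist": (7, 9),
    "Arrange": (8, 3),
}
for step, decision in route_leader(leader_ratings).items():
    print(f"{step}: {decision}")
```

Note that a rating of exactly 5 counts as “high,” so a step rated 5 on both axes is routed to “use with modifications”; the open-ended follow-up questions give leaders a chance to dispute such boundary cases.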

Results

From the leader survey, few responses fell into the low effort/low impact or high effort/low impact quadrants. For those who gave these responses, concerns mainly centered on the fact that some aspects of the 5 A's were already captured in the EHR. However, the staff interviews made clear that beyond the Ask and Assess steps, there were no streamlined processes in the EHR for guiding and documenting the other steps of the 5 A's intervention.

Summary of the Case Study

This case study demonstrates how our three-component approach is being used to methodically incorporate key stakeholder perspectives on specific contextual factors and potential barriers surrounding the technological implementation strategy of integrating the 5 A's into the EHR, to shape whether/how to use the strategy. The approach is particularly valuable to the research team and the stakeholders at the FQHC for aligning the implementation strategy with the FQHC's priorities and workflow. Use of the three-component approach facilitated concrete discussions with stakeholders regarding the practicalities of using digital implementation strategies. It also helped situate this particular implementation strategy within the organizational priorities of the health center. The method also prompted stakeholders to identify additional implementation strategies, both digital (e.g., a supervisor dashboard) and non-digital (e.g., a tailored clinic workflow plan, training integrated into the clinic workflow).

Discussion

We provide a three-component approach to assessing whether/how to use a technological strategy for implementing an intervention. To present the approach in a broad-to-specific way, we first describe the general approach (section The Three-Component Approach to Decide Whether/How to Use a Technological Implementation Strategy). Then, we illustrate the approach (section Case Study: Developing a Package of Technological Implementation Strategies to Promote Evidence-Based Tobacco Cessation at an FQHC) using a case study of decision-making around technological implementation strategies to promote evidence-based tobacco cessation at an FQHC, specifically describing how the strategy of “Integrating the 5 A's into the EHR” was considered for implementing the cessation intervention for a largely low-income, uninsured, and racial/ethnic minority population. The approach directly contributed to the case study implementation's objective of closely engaging multiple stakeholders in devising a contextually appropriate package of technological implementation strategies.

The approach is an adaptation of the effort-vs-impact assessment method, which is widely used in healthcare quality improvement. The approach includes stakeholders throughout and identifies alternative (both technological and non-technological) strategies so that stakeholders can compare options, ultimately selecting the strategy with the most favorable balance of effort and impact (i.e., the lowest effort-to-impact ratio). This approach systematically assesses technological implementation strategies, going beyond frameworks that merely note the domains (e.g., usability, usefulness) by which technological strategies can be characterized.

Because our focus is on providing a “how-to” approach (i.e., an approach for assessing whether/how to use a technological implementation strategy) rather than a framework, implementation projects that already have a guiding framework can still benefit from using our approach. For instance, the case study described above is guided by the Consolidated Framework for Implementation Research (43); it can thus draw on the framework's “Available Resources” construct when assessing whether the necessary technology infrastructure and/or capacity for technology training are available (i.e., Component 1 of our three-component approach).

As noted above, whether a strategy's potential effort and impact (i.e., Components 1 and 2) are assessed quantitatively or qualitatively depends on what is feasible for the implementation team (e.g., administering a questionnaire, holding a group discussion, or both). Even if the axes of the effort-vs-impact chart have no quantitative units, the chart can serve as a helpful conversation tool for the implementation team and its stakeholders when discussing the relative effort and impact of the strategy and its alternatives. The case study above does exactly this: each leader's perceived effort and impact of the “Integrating the 5 A's into the EHR” strategy is plotted on the chart, which helps both to confirm the leader's perceptions of the strategy and to brainstorm next steps for feasibly incorporating it into the implementation effort.
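When ratings are collected quantitatively (e.g., through a questionnaire), placing each leader's ratings on the two-by-two chart amounts to a simple threshold rule. The Python sketch below assumes a hypothetical 1 to 5 scale with a midpoint of 3 separating little/much effort and small/large impact; the leader labels and scores are invented for illustration. It assigns each rating pair to a quadrant and tallies how many raters fall in each.

```python
from collections import Counter


def quadrant(effort: float, impact: float, midpoint: float = 3.0) -> str:
    """Place one (effort, impact) rating pair in a cell of the two-by-two chart.

    Assumes a hypothetical 1-5 rating scale; ratings at or above `midpoint`
    count as "much" effort or "large" impact.
    """
    effort_label = "much effort" if effort >= midpoint else "little effort"
    impact_label = "large impact" if impact >= midpoint else "small impact"
    return f"{effort_label} / {impact_label}"


def tally_quadrants(ratings: dict[str, tuple[float, float]],
                    midpoint: float = 3.0) -> Counter:
    """Count how many raters place the strategy in each quadrant."""
    return Counter(quadrant(effort, impact, midpoint)
                   for effort, impact in ratings.values())


if __name__ == "__main__":
    # Invented ratings of one strategy by three hypothetical leaders.
    leader_ratings = {
        "Leader A": (4.0, 4.5),
        "Leader B": (2.5, 4.0),
        "Leader C": (4.5, 2.0),
    }
    for leader, (effort, impact) in leader_ratings.items():
        print(f"{leader}: {quadrant(effort, impact)}")
    print(tally_quadrants(leader_ratings))
```

The midpoint is a design choice rather than part of the method; a team relying on group discussion instead of scores would place each point on the chart by consensus, and disagreements between raters (e.g., leaders landing in different quadrants) become prompts for the conversation described above.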

Technological strategies vary widely, from those that support a mainly human-operationalized strategy to those that enable a fully technology-operationalized one. For example, participatory system dynamics uses human-informed, computationally generated implementation simulations, which in turn inform human-driven implementation (44). Types of technological strategies will also certainly change over time, potentially toward increased automation [e.g., avatars for technical assistance (45)]. In the case study above, for instance, further automation of tobacco cessation care tracking/monitoring may change how much effort stakeholders consider the “Integrating the 5 A's into the EHR” strategy to require.

As technology advances, the results of effort-vs-impact assessments of strategies will therefore likely change. For example, natural language processing is currently effortful and computationally expensive, but may not be in the future. Evolving views on privacy will also affect whether and how health systems can use passively captured data for health purposes. Thus, even for a single technological implementation strategy, it is worthwhile to repeat our approach whenever the strategy is considered in a different location or at a different time. For instance, before the current COVID-19 pandemic, using phone/video to implement evidence-based treatments may have been considered higher effort and potentially lower impact than in-person alternatives. However, with social distancing requirements (46) and relaxed enforcement of relevant HIPAA rules around telehealth (47), using phone/video may now be considered lower effort than in-person strategies, which carry the newly heightened effort of ensuring safety from viral transmission.

This work has limitations. We present a case study of the three-component approach's application; the approach has yet to be applied and evaluated across multiple behavioral health interventions, and it has not been used for implementing interventions across multiple target populations. Partly offsetting these limitations, the components of the approach depend on neither the population nor the content specifics of our case study, which should allow the approach to be applied to other behavioral health interventions. Another strength is that the work is grounded in the well-established effort-vs-impact assessment method, which has been used successfully both within and beyond health care.

Further work is needed to examine the approach's applicability to implementation for different vulnerable populations and beyond behavioral health. Technology is both a potential countermeasure to health disparities (e.g., by better enabling access to healthcare) and a potential threat that further excludes vulnerable populations (e.g., those without equitable access to innovative technologies) (48). Therefore, especially as health disparities are increasingly viewed as an important component of implementation research and practice (49, 50), an approach such as ours that systematically assesses the effort-vs-impact balance of technological implementation strategies is essential to help ensure that technology is used in ways suited to the context into which evidence-based interventions are implemented. Particularly given the variation in population-based needs and availability of resources for global mental health, our three-component approach can help stakeholders methodically decide whether a given technological implementation strategy is appropriate for a given mental healthcare setting.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by Duke University Health System Institutional Review Board. The ethics committee waived the requirement of written informed consent for participation.

Author Contributions

BK, SW, and JB were key conceivers of the ideas presented in this Perspective. BK led the writing of the manuscript. SW, TM, and JB provided critical revisions to the manuscript's intellectual content. All authors reviewed the final version of the manuscript.

Funding

This work was supported in part by the Implementation Research Institute (IRI) at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (5R25MH08091607), the National Institute on Drug Abuse, and the VA Health Services Research & Development (HSR&D) Quality Enhancement Research Initiative. This work was also supported by resources provided by the National Center for Advancing Translational Sciences (UL1TR002553). SW is supported by VA HSR&D Career Development Award IK2HX002398. JB is supported by VA HSR&D Career Development Award CDA 15–257 at the VA Palo Alto Health Care System.

Disclaimer

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the United States government.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank the IRI faculty and fellows for their guidance in conceptualizing this work.

References

1. Hermes ED, Lyon AR, Schueller SM, Glass JE. Measuring the implementation of behavioral intervention technologies: recharacterization of established outcomes. J Med Internet Res. (2019) 21:e11752. doi: 10.2196/11752

2. Mohr DC, Burns MN, Schueller SM, Clarke G, Klinkman M. Behavioral intervention technologies: evidence review and recommendations for future research in mental health. Gen Hosp Psychiatry. (2013) 35:332–8. doi: 10.1016/j.genhosppsych.2013.03.008

3. Kazdin AE. Technology-based interventions and reducing the burdens of mental illness: perspectives and comments on the special series. Cogn Behav Pract. (2015) 22:359–66. doi: 10.1016/j.cbpra.2015.04.004

4. World Health Organization. eHealth. (2020). Available online at: https://www.who.int/ehealth/about/en/ (accessed December 5, 2020).

5. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. (2013) 8:139. doi: 10.1186/1748-5908-8-139

6. Wray LO, Ritchie MJ, Oslin DW, Beehler GP. Enhancing implementation of measurement-based mental health care in primary care: a mixed-methods randomized effectiveness evaluation of implementation facilitation. BMC Health Serv Res. (2018) 18:753. doi: 10.1186/s12913-018-3493-z

7. Pedersen MS, Landheim A, Møller M, Lien L. First-line managers' experience of the use of audit and feedback cycle in specialist mental health care: a qualitative case study. Arch Psychiatr Nurs. (2019) 33:103–9. doi: 10.1016/j.apnu.2019.10.009

8. American Society for Quality. Impact Effort Matrix. (2020). Available online at: https://asq.org/quality-resources/impact-effort-matrix (accessed December 5, 2020).

9. Chappell A, Peck H. Risk management in military supply chains: is there a role for six sigma? Int J Logistics Res Appl. (2006) 9:253–67. doi: 10.1080/13675560600859276

10. Bunce MM, Wang L, Bidanda B. Leveraging six sigma with industrial engineering tools in crateless retort production. Int J Prod Res. (2008) 46:6701–19. doi: 10.1080/00207540802230520

11. Agency for Healthcare Research and Quality. Pressure Injury Prevention Program Implementation Guide. (2020). Available online at: https://www.ahrq.gov/patient-safety/settings/hospital/resource/pressureinjury/guide/apb.html (accessed December 5, 2020).

12. Kashani KB, Ramar K, Farmer JC, Lim KG, Moreno-Franco P, Morgenthaler TI, et al. Quality improvement education incorporated as an integral part of critical care fellows training at the Mayo Clinic. Acad Med. (2014) 89:1362–5. doi: 10.1097/ACM.0000000000000398

13. Fieldston ES, Jonas JA, Lederman VA, Zahm AJ, Xiao R, DiMichele CM, et al. Developing the capacity for rapid-cycle improvement at a large freestanding children's hospital. Hosp Pediatr. (2016) 6:441–8. doi: 10.1542/hpeds.2015-0239

14. Ursu A, Greenberg G, McKee M. Continuous quality improvement methodology: a case study on multidisciplinary collaboration to improve chlamydia screening. Fam Med Community Health. (2019) 7:e000085. doi: 10.1136/fmch-2018-000085

15. Goldmann D. Priority Matrix: An Overlooked Gardening Tool. (2021). Available online at: http://www.ihi.org/education/IHIOpenSchool/resources/Pages/AudioandVideo/Priority-Matrix-An-Overlooked-Gardening-Tool.aspx (accessed March 17, 2021).

16. Andersen B, Fagerhaug T, Beltz M. Root Cause Analysis and Improvement in the Healthcare Sector: A Step-by-Step Guide. Milwaukee, WI: ASQ Quality Press (2010).

17. Keenan P, Mingardon S, Sirkin H, Tankersley J. A way to assess and prioritize your change efforts. Harvard Bus Rev. (2015). Available online at: https://hbr.org/2015/07/a-way-to-assess-and-prioritize-your-change-efforts (accessed March 17, 2021).

18. Cannon DS, Allen SN. A comparison of the effects of computer and manual reminders on compliance with a mental health clinical practice guideline. J Am Med Inform Assoc. (2000) 7:196–203. doi: 10.1136/jamia.2000.0070196

19. Rosen CS, Morland LA, Glassman LH, Marx BP, Weaver K, Smith CA, et al. Virtual mental health care in the veterans health administration's immediate response to coronavirus disease-19. Am Psychol. (2021) 76:26–38. doi: 10.1037/amp0000751

20. Barwick MA, Boydell KM, Stasiulis E, Ferguson HB, Blase K, Fixsen D. Research utilization among children's mental health providers. Implement Sci. (2008) 3:19. doi: 10.1186/1748-5908-3-19

21. Kowalsky RJ, Hergenroeder AL, Barone Gibbs B. Acceptability and impact of office-based resistance exercise breaks. Workplace Health Saf. (2021) 29:2165079920983820. doi: 10.1177/2165079920983820

22. Gavrilets S, Auerbach J, van Vugt M. Convergence to consensus in heterogeneous groups and the emergence of informal leadership. Sci Rep. (2016) 6:29704. doi: 10.1038/srep29704

24. Shaw T, McGregor D, Brunner M, Keep M, Janssen A, Barnet S. What is eHealth (6)? Development of a conceptual model for eHealth: qualitative study with key informants. J Med Internet Res. (2017) 19:e324. doi: 10.2196/jmir.8106

25. Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. (2012) 43:337–50. doi: 10.1016/j.amepre.2012.05.024

26. Harvey G, Kitson A. PARIHS revisited: from heuristic to integrated framework for the successful implementation of knowledge into practice. Implement Sci. (2016) 11:33. doi: 10.1186/s13012-016-0398-2

27. Hunter SC, Kim B, Mudge A, Hall L, Young A, McRae P, et al. Experiences of using the i-PARIHS framework: a co-designed case study of four multi-site implementation projects. BMC Health Serv Res. (2020) 20:573. doi: 10.1186/s12913-020-05354-8

28. Loeb DF, Kline DM, Kroenke K, Boyd C, Bayliss EA, Ludman E, et al. Designing the relational team development intervention to improve management of mental health in primary care using iterative stakeholder engagement. BMC Fam Pract. (2019) 20:124. doi: 10.1186/s12875-019-1010-z

29. Fiore MC, Jaén CR, Baker TB, et al. Treating Tobacco Use and Dependence: 2008 Update. Clinical Practice Guideline. Rockville, MD: U.S. Department of Health and Human Services. Public Health Service (2008).

30. Nápoles AM, Appelle N, Kalkhoran S, Vijayaraghavan M, Alvarado N, Satterfield J. Perceptions of clinicians and staff about the use of digital technology in primary care: qualitative interviews prior to implementation of a computer-facilitated 5As intervention. BMC Med Inform Decis Mak. (2016) 16:44. doi: 10.1186/s12911-016-0284-5

31. Yarbrough AK, Smith TB. Technology acceptance among physicians: a new take on TAM. Med Care Res Rev. (2007) 64:650–72. doi: 10.1177/1077558707305942

32. Holden RJ, Karsh BT. The technology acceptance model: its past and its future in health care. J Biomed Inform. (2010) 43:159–72. doi: 10.1016/j.jbi.2009.07.002

33. Fernandez ME, Ten Hoor GA, van Lieshout S, Rodriguez SA, Beidas RS, Parcel G, et al. Implementation mapping: using intervention mapping to develop implementation strategies. Front Public Health. (2019) 7:158. doi: 10.3389/fpubh.2019.00158

34. Health Resources and Services Administration. 2017 Lincoln Community Health Center, Inc. Health Center Profile (2018). Available online at: https://bphc.hrsa.gov/uds/datacenter.aspx?q=d&bid=040910&state=NC&year=2017 (accessed March 7, 2019).

35. Durham County Department of Public Health & Partnership for a Healthy Durham. 2017 Durham County Community Health Assessment. (2018). Available online at: http://healthydurham.org/cms/wp-content/uploads/2018/03/2017-CHA-FINAL-DRAFT.pdf (accessed December 6, 2020).

36. Drope J, Liber AC, Cahn Z, Stoklosa M, Kennedy R, Douglas CE, et al. Who's still smoking? Disparities in adult cigarette smoking prevalence in the United States. CA Cancer J Clin. (2018) 68:106–15. doi: 10.3322/caac.21444

37. Hazlehurst B, Sittig DF, Stevens VJ, Smith KS, Hollis JF, Vogt TM, et al. Natural language processing in the electronic medical record: assessing clinician adherence to tobacco treatment guidelines. Am J Prev Med. (2005) 29:434–9. doi: 10.1016/j.amepre.2005.08.007

38. Bailey SR, Heintzman J, Jacob RL, Puro J, Marino M. Disparities in smoking cessation assistance in US primary care clinics. Am J Public Health. (2018) 108:1082–90. doi: 10.2105/AJPH.2018.304492

39. Peterson EB, Fisher CL, Zhao X. Pediatric primary healthcare providers' preferences, experiences and perceived barriers to discussing electronic cigarettes with adolescent patients. J Commun Healthcare. (2018) 11:245–51. doi: 10.1080/17538068.2018.1460960

40. Watkins DC. Rapid and rigorous qualitative data analysis: the “RADaR” technique for applied research. Int J Qual Methods. (2017) 16:1–9. doi: 10.1177/1609406917712131

41. Wilson SM, Mosher TM, Crowder JC, Eisenson H, Beckham JC, Kim B, et al. Stakeholder-engaged implementation mapping to increase fidelity to best-practice tobacco cessation (Manuscript in preparation).

42. Farber HJ, Walley SC, Groner JA, Nelson KE; Section on Tobacco Control. Clinical practice policy to protect children from tobacco, nicotine, and tobacco smoke. Pediatrics. (2015) 136:1008–17. doi: 10.1542/peds.2015-3108

43. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. (2009) 4:50. doi: 10.1186/1748-5908-4-50

44. Zimmerman L, Lounsbury DW, Rosen CS, Kimerling R, Trafton JA, Lindley SE. Participatory system dynamics modeling: increasing stakeholder engagement and precision to improve implementation planning in systems. Adm Policy Ment Health. (2016) 43:834–49. doi: 10.1007/s10488-016-0754-1

45. LeRouge C, Dickhut K, Lisetti C, Sangameswaran S, Malasanos T. Engaging adolescents in a computer-based weight management program: avatars and virtual coaches could help. J Am Med Inform Assoc. (2016) 23:19–28. doi: 10.1093/jamia/ocv078

46. Centers for Disease Control and Prevention. Coronavirus Disease 2019 (COVID-19). (2020). Available online at: https://www.cdc.gov/coronavirus/2019-ncov/prevent-getting-sick/social-distancing.html (accessed December 6, 2020).

47. U.S. Department of Health & Human Services. HIPAA and COVID-19. (2020). Available online at: https://www.hhs.gov/hipaa/for-professionals/special-topics/hipaa-covid19/index.html (accessed December 6, 2020).

48. Fong H, Harris E. Technology, innovation and health equity. Bull World Health Organ. (2015) 93:438–438A. doi: 10.2471/BLT.15.155952

49. Woodward EN, Matthieu MM, Uchendu US, Rogal S, Kirchner JE. The health equity implementation framework: proposal and preliminary study of hepatitis C virus treatment. Implement Sci. (2019) 14:26. doi: 10.1186/s13012-019-0861-y

Keywords: implementation strategies, technology, effort vs. impact, behavioral health, evidence-based interventions

Citation: Kim B, Wilson SM, Mosher TM and Breland JY (2021) Systematic Decision-Making for Using Technological Strategies to Implement Evidence-Based Interventions: An Illustrated Case Study. Front. Psychiatry 12:640240. doi: 10.3389/fpsyt.2021.640240

Received: 10 December 2020; Accepted: 14 April 2021;

Published: 14 May 2021.

Edited by:

Hitesh Khurana, Pandit Bhagwat Dayal Sharma University of Health Sciences, India

Reviewed by:

Darpan Kaur, Mahatma Gandhi Missions Medical College and Hospital, India

Alicia Salamanca-Sanabria, ETH Zürich, Singapore

Vishal Dhiman, All India Institute of Medical Sciences, Rishikesh, India

Copyright © 2021 Kim, Wilson, Mosher and Breland. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bo Kim, bo.kim@va.gov
