Active Fire Detection Using a Novel Convolutional Neural Network Based on Himawari-8 Satellite Images

- 1College of Information Technology, Shanghai Ocean University, Shanghai, China

- 2Meteorological Satellite Ground Station, Guangzhou, China

Fire is an important ecosystem process and has played a complex role in terrestrial ecosystems and the atmospheric environment. Wildfires can also be highly destructive natural disasters. To reduce their destructive impact, wildfires must be detected as early as possible. However, accurate and timely monitoring of wildfires is challenging because traditional threshold methods are prone to false alarms caused by small forest clearings and to omission errors for large fires obscured by thick smoke. Deep learning offers strong learning ability, strong adaptability, and good portability. At present, few studies have addressed wildfire detection in remote sensing images using deep learning in near real time. Therefore, in this research we propose an active fire detection system using a novel convolutional neural network (FireCNN). FireCNN uses multi-scale convolution and a residual acceptance design, which can effectively extract accurate characteristics of fire spots. The proposed method was tested on a dataset containing 1,823 fire spots and 3,646 non-fire spots. The experimental results demonstrate that FireCNN is fully capable of wildfire detection, with an accuracy 35.2% higher than that of the traditional threshold method. We also examined the influence of different structural designs on the performance of neural network models. The comparison results indicate that the proposed method produced the best results.

Introduction

Fire is an important ecosystem process and has played a complex role in shaping landscapes, biodiversity, terrestrial ecosystems, and the atmospheric environment (Bixby et al., 2015; Ryu et al., 2018; McWethy et al., 2019; Tymstra et al., 2020). It provides nutrients and habitat for vegetation and animals, and plays multiple important roles in maintaining healthy ecosystems (Ryan et al., 2013; Brown et al., 2015; Harper et al., 2017). However, wildfires are also destructive forces: they cause great loss of human life, damage to property, atmospheric pollution, soil damage, and so on. Existing studies estimate a global annual burned area of approximately 420 million hectares (Giglio et al., 2018). Therefore, to reduce the negative impact of fire, real-time detection of active fires should be carried out, which can provide timely and valuable information for fire management departments.

With the continuous development of satellite remote sensing technology, an increasing number of researchers have chosen to use satellite multispectral images to detect forest wildfires (Allison et al., 2016; Kaku, 2019; Barmpoutis et al., 2020). The common features of fires are bright flames and smoke produced during combustion, as well as high temperatures on fire surfaces that differ from the surrounding environment. Smoke and flames produced during combustion can be detected in the visible light bands of remote sensing images, and high temperatures on the surface of fires are easily detected in the mid-infrared, shortwave infrared, and thermal infrared bands (Leblon et al., 2012). In moderate or low spatial resolution images, a fire is represented as a fire spot with extremely high temperature, which is also called a thermal anomaly on a per-pixel basis (Xie et al., 2016). For instance, MOD14 actively monitors fire at a 1 km spatial resolution. Satellite remote sensing has the advantages of strong timeliness, wide observation range, and low cost, which provides great convenience for fire detection (Coen and Schroeder, 2013; Xie et al., 2018).

Active fire detection methods can be divided into two types: those that are based on a manual design algorithm, primarily the threshold method, and the alternative approach, based on deep learning, including shallow neural networks and image-level deep networks.

The threshold-based method sets one or more thresholds for a specific imager channel, or for a combination of different spectral channels, checks each pixel one by one, and classifies the pixels that meet the threshold as fire spots; otherwise, they are classified as non-fire spots. Spectral, spatial, or contextual information is usually involved. The major satellite sensors used for active fire detection are: the Moderate Resolution Imaging Spectroradiometer (MODIS) on board the NASA Terra and Aqua satellites, with a spatial resolution of 250 m to 1 km (Justice et al., 2002; Morisette et al., 2005; Giglio et al., 2008; Maier et al., 2013; Xie et al., 2016; Giglio et al., 2016; Earl and Simmonds, 2018); the AVHRR sensor on board the NOAA satellites, with a spatial resolution of 1 km (Baum and Trepte, 1999; Boles and Verbyla, 2000); and the Visible Infrared Imaging Radiometer Suite (VIIRS) on board the joint NASA/NOAA Suomi National Polar-orbiting Partnership (Suomi NPP) and NOAA-20 satellites (Schroeder et al., 2014; Li et al., 2018). In addition, Landsat series and Sentinel-2 remote sensing images have also been used for research in this field owing to their relatively high spatial and temporal resolution (Schroeder et al., 2008; Murphy et al., 2016; Schroeder et al., 2016; Malambo and Heatwole, 2020; Hu et al., 2021; van Dijk et al., 2021). The major improvements to these methods concentrate on integrating contextual or temporal information (Schroeder et al., 2008; Murphy et al., 2016; Lin et al., 2018; Kumar and Roy, 2018), setting more accurate thresholds (Baum and Trepte, 1999), and improving the handling of disturbance factors such as cloud, smoke, and snow (Giglio et al., 2016).

In October 2014, a new geostationary meteorological satellite Himawari-8 was launched by the Japan Meteorological Agency (JMA). The satellite is equipped with an Advanced Himawari Imager (AHI) 16-channel multispectral sensor with a spatial resolution of 2 km (Xu et al., 2017). AHI can collect a full-disk of data every 10 min, covering East Asia (Da, 2015). The high temporal resolution of the Himawari-8 satellite makes it more suitable for time-sensitive tasks, e.g., fire monitoring. Wickramasinghe et al. (2016) proposed a new AHI-FSA algorithm that uses the Advanced Himawari Imager (AHI) data to detect burning and unburned vegetation, and the edge between smoke-covered and non-smoke-covered areas, respectively. Xie et al. (2018) proposed a spatial and temporal context model to detect fires based on the high temporal resolution of the Himawari-8 satellite images and applied it to real fire scenarios. Na et al. (2018) used the 7, 4, and 3 bands of Himawari-8 data to monitor grassland fires in the border areas between China and Mongolia. The results show that the detected fires are highly consistent with the actual situation on the ground. More studies of fire detection algorithms can be found in Cocke et al. (2005), French et al. (2008), Boschetti et al. (2015).

As can be seen from the above studies, traditional threshold methods have been widely used in active fire detection tasks. However, because the spectral band design and central wavelengths differ between sensors, a threshold method is usually applicable only to a specific satellite, which makes it difficult to apply to multiple satellites. Moreover, the threshold is determined according to the statistical data of the surrounding areas, where fires under different landforms, climates, and seasons have diverse characteristics. This implies that the threshold changes dynamically according to the study area and the data. Furthermore, threshold methods are easily affected by cloud and thick smoke and, when used to assess large areas, are prone to false positives and omissions.

Deep learning techniques have achieved excellent results in the field of machine vision (LeCun et al., 2015). Deep learning has the characteristics of strong learning ability, strong adaptability and good portability. It can discover the intricate patterns in massive data by using a series of processing layers. Therefore, an increasing number of researchers have tried to use deep learning technology in the field of fire or smoke detection and have developed and designed many algorithms. These algorithms can be divided into neural networks at the image level and pixel level.

In the field of fire detection at the image level, semantic segmentation models are generally involved. Langford et al. (2018) applied deep neural networks (DNN) to detect wildfires. To solve the problem of imbalanced training samples, a weight-selection strategy was adopted during the DNN training process. The results showed that the weight-selection strategy was able to map wildfires more accurately than the normal DNN. Ba et al. (2019) designed a new convolutional neural network (CNN) model, SmokeNet, which integrates spatial and channel-wise attention into the CNN to enhance the feature representation for scene classification. The model was tested using MODIS data. The experimental results indicate high consistency between model predictions and actual classification results. Vani et al. (2019) designed a transfer-learning method based on the Inception-v3 convolutional neural network to classify fire and non-fire. Gargiulo et al. (2019) suggested a CNN-based super-resolution technique for active fire detection using Sentinel-2 images. Pinto et al. (2020) used convolutional neural networks and Long Short-Term Memory (LSTM) networks with an architecture based on U-Net. The red, near-infrared, and middle-infrared (MIR) bands from the VIIRS sensor, combined with the VIIRS 375 m active fire product, were used as inputs to train the model. de Almeida Pereira et al. (2021) created training and testing images and labels using high-resolution images collected by Landsat-8 to train improved U-Net networks. Unlike most existing studies, which use optical images, Ban et al. (2020) used a CNN to detect burnt areas from Sentinel-1 SAR time series images. By analysing the temporal backscatter variations, the CNN-based deep learning method can distinguish burnt areas with higher accuracy than the traditional method. Larsen et al. (2021) and Guede-Fernández et al. (2021) adopted deep learning methods to identify fire smoke. However, the location of the fire cannot be directly determined from smoke. In addition, researchers are also using deep learning methods to detect fire in unmanned aerial vehicle (UAV) images or videos (Yuan et al., 2017; Jiao et al., 2019; Kinaneva et al., 2019; Bushnaq et al., 2021; Guede-Fernández et al., 2021). For instance, Muhammad et al. (2018) proposed a convolutional neural network for surveillance videos. UAVs can provide timely images of a fire. However, they may not be suitable for large-area forest fire detection.

In terms of fire detection at the pixel level, to the best of the authors' knowledge, there are only two related studies. First, Zhanqing et al. (2001) integrated a back-propagation neural network (BPNN) and threshold methods to extract smoke from AVHRR imagery. The BPNN can discover and learn complex linear and nonlinear relationships among smoke, cloud, and land from radiation measurements, and it can identify the potential area covered by smoke. To remove misclassified pixels and improve precision, multi-threshold testing was also incorporated. In 2015, Li et al. proposed an improved algorithm based on their earlier model (Zhanqing et al., 2001). In the improved algorithm, all bands were regarded as the input vectors of the BPNN, and the training dataset was established using the multi-threshold method to train the BPNN to identify smoke.

According to the above-mentioned studies, some open problems still exist. First, traditional threshold methods are easily affected by cloud and thick smoke, which leads to false positives and omission errors. Second, most existing deep learning methods use images from polar-orbiting satellites, which provide fine spatial resolution but relatively low temporal resolution. High temporal resolution is critical for fire monitoring. The Himawari-8 satellite, which has a temporal resolution of 10 min, can monitor fires continuously and is thus conducive to early fire detection and to the adoption of aggressive measures. However, few deep learning methods use Himawari-8 data to detect fires. Third, most existing deep learning methods operate at the image level. Over the past decades, deep learning methods have promoted major advances in artificial intelligence, and a variety of new models have been proposed, such as generative adversarial networks (GAN), deep convolutional neural networks, recurrent neural networks, and long short-term memory networks. However, it is difficult for them to detect fires at the pixel level, owing to the low spatial resolution of Himawari-8 images and the subtle fire targets. The existing models need to be improved.

Therefore, the objective of this study is to propose an active fire detection system using a novel convolutional neural network (FireCNN) based on Himawari-8 satellite imagery, to fill the research gap in this area. FireCNN uses multi-scale convolution and a residual acceptance design, which can effectively extract accurate characteristics of fire spots and thereby improve fire detection accuracy. The main contributions of our study are as follows. 1) We developed a novel active fire detection convolutional neural network (FireCNN) based on Himawari-8 satellite images. The new method utilizes multi-scale convolution to comprehensively assess the characteristics of fire spots and uses residual structures to retain the original characteristics, which enables it to extract the key features of the fire spots. 2) A new Himawari-8 active fire detection dataset was created, which includes a training set and a test set. The training set includes 654 fire spots and 1,308 non-fire spots, and the test set includes 1,169 fire spots and 2,338 non-fire spots.

The remainder of the article is organised as follows. In the Data section, we explain the source and composition of the data and pre-processing steps and provide basic information regarding the study area as well as a detailed description of the database established in this study. In the Methodology section, the proposed algorithm is described in detail, and both the traditional threshold method and deep learning method used in the experiment are introduced. In the Experiment section, the relevant settings of the experiment, the parameters used for evaluation, and the analysis of the results are described. Finally, the key findings of the study are summarized, and possible future research is briefly discussed.

Data

Data and Pre-Processing

The fire location data (labels) and multispectral image data used in this study were obtained from the Meteorological Satellite Ground Station, Guangzhou, Guangdong, China, and from Himawari-8, respectively. Specifically, the Himawari-8 product used in this article is the full-disk Available Himawari L1 Data (Himawari L1 Gridded data); detailed information is available at https://www.eorc.jaxa.jp/ptree/userguide.html. The fire location data include the longitude, latitude, and time of the fire. The data are presented in the form of a mask, with which the fire and non-fire points can be extracted from the multispectral image. The data have high reliability, having undergone manual identification and algorithm inversion.

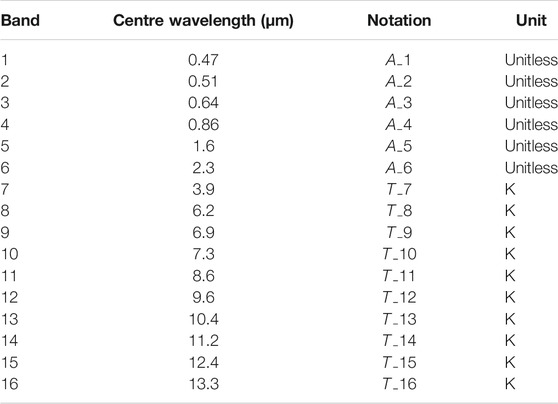

The multispectral image data were obtained from the Himawari-8 satellite. Himawari-8 data comprise 16 bands; information on each band is provided in Table 1. The spatial resolution of the visible light bands is 0.5–1 km and that of the near-infrared and infrared bands is 1–2 km. The temporal resolution is 10 min. The full observation range extends from 60° N to 60° S and from 80° E to 160° W. Although the spatial resolution of the geostationary satellite is coarser than that of a polar-orbiting satellite, the geostationary satellite has the characteristics of wide coverage, time synchronisation of data acquisition, fixed observation position, and high temporal resolution, all of which make it well suited for real-time monitoring of wildfires. Moreover, the revisit once every 10 min can alleviate the problem of the blank monitoring period caused by the low temporal resolution of a polar-orbiting satellite. Among the Himawari-8 satellite multispectral images, bands 1-6 are albedo data,

Study Area

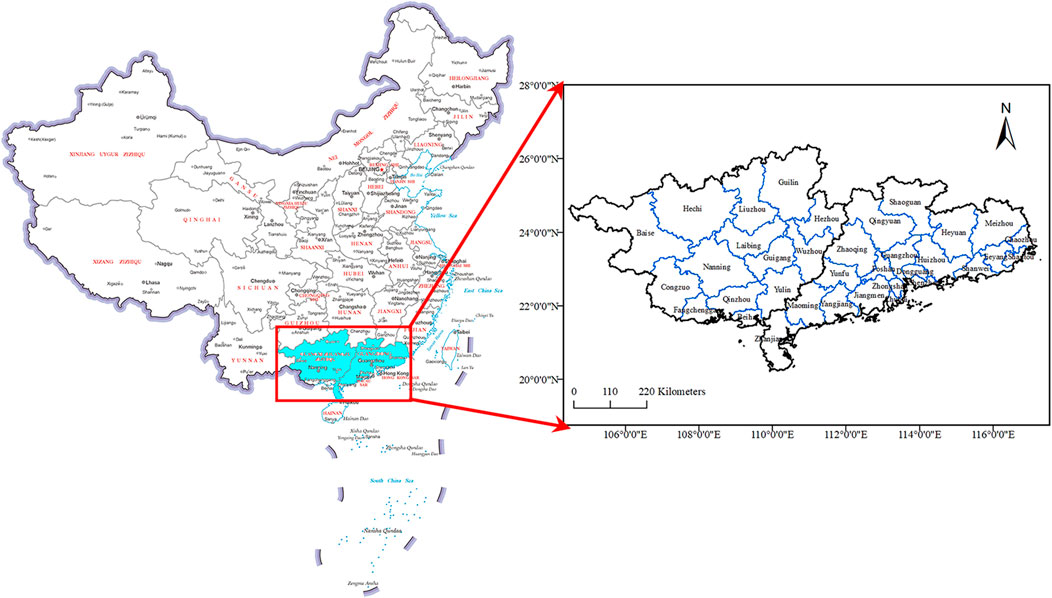

A map of Guangdong Province, in southern China, is shown in Figure 1. The whole region is located between 20° 13′ and 25° 31′ N and between 109° 39′ and 117° 19′ E. The terrain is hillier in the south than in the north. The study area is located in the East Asian monsoon region, primarily in a subtropical monsoon climate. Guangxi is adjacent to Guangdong Province, as shown in Figure 1, and is located between 104° 28′ and 112° 04′ E and between 20° 54′ and 26° 24′ N. In Guangxi, the terrain tends to be hilly in the northwest and less so in the southeast. The climate is mainly subtropical monsoon and tropical monsoon. The two provinces have high forest coverage rates, and both lie close to the equator, making these areas prone to forest fires during dry periods. For these reasons, they were selected as the study area.

Establishing the Database

In assembling the data, the first consideration is that the fire location data should correspond to the multispectral image data in terms of position and time. The part of the image covering the study area was cut out from the multispectral image data, and a window of M × M pixels was centred on each pixel. The mean and standard deviation of each band within the window were calculated as the surrounding environment information of the pixel. To ensure that pixels at the edge of the image also have a full window, the image was mirror-padded with a sufficient width before processing. The training data were provided by the Meteorological Satellite Ground Station, Guangzhou, Guangdong, China, which uses a combination of a traditional algorithm and field surveys.
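The neighbourhood statistics described above can be sketched as follows. This is a minimal illustration for a single band, not the authors' implementation: the function names are hypothetical, the mirror padding is assumed to reflect about the edge pixel without repeating it, the padding width is assumed to be smaller than the image, and the standard deviation is assumed to be the population form.

```python
import math


def reflect_pad(image, pad):
    """Mirror-pad a 2D list by `pad` pixels per side (requires pad < image size)."""
    rows, cols = len(image), len(image[0])

    def mirror(i, n):
        # Reflect an out-of-range index back into [0, n) about the edge pixel.
        if i < 0:
            return -i
        if i >= n:
            return 2 * (n - 1) - i
        return i

    return [
        [image[mirror(r, rows)][mirror(c, cols)] for c in range(-pad, cols + pad)]
        for r in range(-pad, rows + pad)
    ]


def window_stats(image, m):
    """Return (mean, std) maps for an m x m window centred on each pixel (m odd)."""
    pad = m // 2
    padded = reflect_pad(image, pad)
    rows, cols = len(image), len(image[0])
    means = [[0.0] * cols for _ in range(rows)]
    stds = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Pixel (r, c) sits at (r + pad, c + pad) in the padded image.
            values = [padded[r + dr][c + dc] for dr in range(m) for dc in range(m)]
            mean = sum(values) / len(values)
            var = sum((v - mean) ** 2 for v in values) / len(values)
            means[r][c] = mean
            stds[r][c] = math.sqrt(var)
    return means, stds


band = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
means, stds = window_stats(band, 3)
```

Applied to every band, the two maps give each pixel a mean and a standard deviation per band in addition to its own band values.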

According to the time and location (longitude and latitude) of each fire spot, the information of each band and the surrounding environment information of the fire spot were taken from the corresponding Himawari-8 image as the original features of the fire spot. At the same time, the original features of non-fire spots were extracted randomly, in a fixed proportion, from the same scene image. Fire spots were labelled 1 and non-fire spots were labelled 0.

The training data set included the data of Guangdong and Guangxi provinces from January to December 2020, with the data collected at 3:00 a.m. and 7:00 p.m. (UTC) every day. Because the numbers of fire and non-fire points are unbalanced, the proportion of fire to non-fire training points was set by a comparison experiment; the results indicate that with a proportion of 1:2 the network can fully learn the characteristics of fires and correctly distinguish between fires and non-fires. A total of 654 fire spots and 1,308 non-fire spots were included in the training set, and 40% of the training set was randomly selected as the validation set, which was not involved in training and was used only to adjust the hyper-parameters of the model and to preliminarily evaluate the model in order to decide whether training could be stopped.
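The sampling and splitting procedure described above can be sketched as follows. The function name, the fixed random seed, and the rounding of the validation size are assumptions made for illustration; only the 1:2 ratio and the 40% validation fraction come from the text.

```python
import random


def build_training_split(fire, non_fire, ratio=2, val_fraction=0.4, seed=0):
    """Sample non-fire points at fire:non-fire = 1:ratio, then hold out a validation set."""
    rng = random.Random(seed)
    n_non_fire = min(len(non_fire), ratio * len(fire))
    sampled = rng.sample(non_fire, n_non_fire)
    # Label fire spots 1 and non-fire spots 0, then shuffle before splitting.
    samples = [(x, 1) for x in fire] + [(x, 0) for x in sampled]
    rng.shuffle(samples)
    n_val = round(val_fraction * len(samples))
    validation, training = samples[:n_val], samples[n_val:]
    return training, validation


# Placeholder feature identifiers standing in for real feature vectors.
fire_points = list(range(654))
candidate_non_fire = list(range(1000, 6000))
training, validation = build_training_split(fire_points, candidate_non_fire)
```

With 654 fire spots this yields 1,308 non-fire spots and 1,962 labelled samples in total, of which the validation part is never used for weight updates.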

Methodology

Active Fire Detection With Traditional Threshold Method

In this section, we mainly introduce the fire detection algorithm proposed by Xu et al. (2017). The algorithm first uses the 3.9- and 11.2-μm bands of Himawari-8 to identify potential fire spots. The 2.3-μm band is then used to identify water, and the 0.64-, 0.86-, and 12.4-μm bands are used to identify clouds. Water pixels and cloud pixels are removed from the potential fire spots to reduce false alarms, and the remaining pixels are the final fire detection results. The experimental results show that the fire detection method is robust in situations of smoke and thin clouds and is very sensitive to small fires. It can provide valuable real-time fire information for wildfire management. The conditions for the algorithm to identify potential fires during the day are as follows.

where

The conditions for non-water pixels are:

where,

The conditions for non-cloud pixels are:
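The overall flow of this threshold method (candidate detection followed by water and cloud masking) can be sketched as follows. The candidate test shown here is a generic mid-infrared/thermal-infrared comparison with hypothetical threshold parameters `bt_min` and `diff_min`; it is not the set of conditions used by Xu et al. (2017), and the water and cloud masks are simply passed in as precomputed boolean grids.

```python
def potential_fire_mask(bt_39, bt_112, bt_min, diff_min):
    """Flag pixels whose 3.9-um brightness temperature is high in absolute terms
    and relative to the 11.2-um band. bt_min and diff_min are placeholders."""
    return [
        [(t39 > bt_min) and (t39 - t112 > diff_min) for t39, t112 in zip(r39, r112)]
        for r39, r112 in zip(bt_39, bt_112)
    ]


def detect_fires(potential_fire, is_water, is_cloud):
    """Keep candidate pixels that are neither water nor cloud."""
    return [
        [p and not w and not c for p, w, c in zip(prow, wrow, crow)]
        for prow, wrow, crow in zip(potential_fire, is_water, is_cloud)
    ]


# Made-up brightness temperatures (K) and thresholds, for illustration only.
bt_39 = [[330.0, 300.0], [335.0, 340.0]]
bt_112 = [[290.0, 295.0], [330.0, 295.0]]
water = [[False, False], [False, True]]
cloud = [[False, False], [False, False]]

candidates = potential_fire_mask(bt_39, bt_112, bt_min=320.0, diff_min=20.0)
fires = detect_fires(candidates, water, cloud)
```

In this toy grid two pixels pass the candidate test, and one of them is then discarded because it is flagged as water.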

Active Fire Detection Based on Convolutional Neural Network

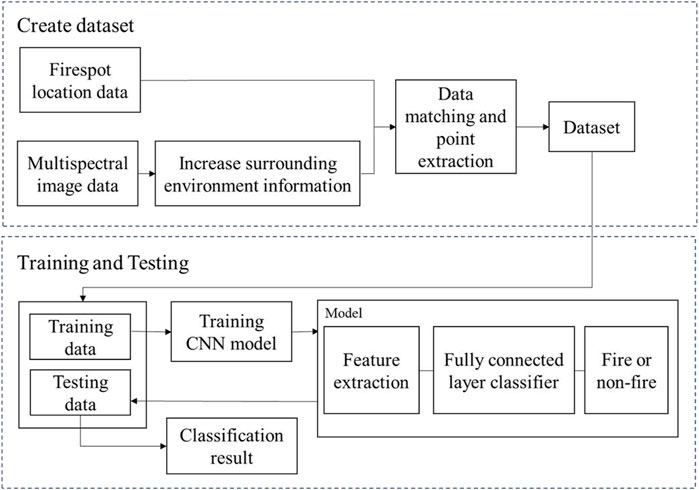

In Figure 2 we present a flow chart of the fire detection model used in this study. First, we create the training and testing data sets; the specific steps are described in the Data section. The training set is then used to train the model. Finally, the trained model is tested and the classification results are generated.

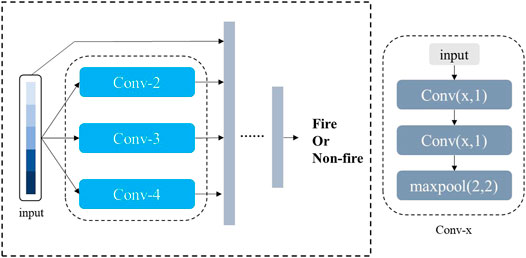

The active fire detection problem can be transformed into a binary classification problem; that is, the pixels on the satellite image are classified as fire or non-fire. The active fire detection framework based on the CNN proposed in this study (Figure 3) is composed primarily of a feature extraction component and a fully connected layer classification component. The feature extraction component performs feature extraction and feature fusion on the input samples, and the extracted features are then fed into the fully connected layer component, which finally outputs the probability that the point is a fire/non-fire spot.

Feature Extraction

The feature extraction component includes three convolution modules of different scales and residual edges. The convolution modules are Conv-2, Conv-3, and Conv-4; that is, the sizes of the convolution kernels are 2, 3, and 4, respectively. Each convolution module includes two convolutional layers and a maximum pooling layer, and each convolutional layer is followed by a rectified linear unit (ReLU) activation function. In this study, the convolutional layers in each module were used to select features. Through convolutional layers of different scales, feature selection and extraction can be performed over different ranges, which not only helps to reduce the weight of original features that correlate poorly with wildfire, but also allows a more comprehensive analysis of the relationships between different numbers of features and the extraction of the key features. In the pooling layer, we chose maximum pooling to retain the key features to the greatest extent while reducing the dimension of the features to facilitate subsequent calculations. The residual edge in the convolution module prevents the loss of original features and effectively mitigates the problem of neural network degradation. The feature extraction component fuses the features extracted by the three convolution modules of different scales with the original features as the output.
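The data flow of the feature extraction component can be illustrated with a small standard-library sketch. It is a simplification under stated assumptions, not the FireCNN implementation: each layer has a single channel with fixed averaging weights instead of learned multi-channel kernels, the input is treated as a one-dimensional feature vector, pooling uses a window of 2, and the residual edge is modelled as concatenation of the original features with the module outputs.

```python
def conv1d(x, kernel, bias=0.0):
    """Valid 1D cross-correlation of a feature vector with one kernel."""
    k = len(kernel)
    return [
        sum(x[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(x) - k + 1)
    ]


def relu(x):
    return [max(0.0, v) for v in x]


def max_pool(x, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


def conv_module(x, kernel_a, kernel_b):
    """Two conv + ReLU layers followed by max pooling (one Conv-k module)."""
    h = relu(conv1d(x, kernel_a))
    h = relu(conv1d(h, kernel_b))
    return max_pool(h)


def extract_features(x, kernels_by_scale):
    """Fuse the outputs of every scale with the original features."""
    fused = []
    for kernel_a, kernel_b in kernels_by_scale:
        fused.extend(conv_module(x, kernel_a, kernel_b))
    fused.extend(x)  # residual edge: the raw input is carried through unchanged
    return fused


# Illustrative input and fixed averaging kernels of sizes 2, 3, and 4.
x = [0.2, 0.9, 0.4, 0.7, 0.1, 0.8, 0.3, 0.6]
kernels = [
    ([0.5, 0.5], [0.5, 0.5]),
    ([1 / 3] * 3, [1 / 3] * 3),
    ([0.25] * 4, [0.25] * 4),
]
fused = extract_features(x, kernels)
```

For this 8-element input the three modules contribute 3, 2, and 1 pooled values, so the fused vector has 14 elements, the last 8 of which are the untouched original features.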

Fully Connected Layer Classifier

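The fully connected layer takes the fused features as input, taking into account all the features, and finally outputs the probability that the sample point is a fire/non-fire spot through the Softmax function. Because the problem is finally transformed into a binary classification problem, we chose the binary cross-entropy function as the loss function. The binary cross-entropy function is defined as follows:

$$L = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right]$$

where $N$ is the number of samples, $y_i$ is the label of sample $i$ (1 for fire, 0 for non-fire), and $p_i$ is the predicted probability that sample $i$ is a fire spot.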
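As a numerical illustration of the classifier output and the loss, the following sketch implements a two-class softmax and the standard mean binary cross-entropy. The function names and the clipping constant `eps` are assumptions introduced here for numerical safety; they are not taken from the original implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def binary_cross_entropy(labels, fire_probs, eps=1e-12):
    """Mean binary cross-entropy; labels are 1 (fire) or 0 (non-fire)."""
    total = 0.0
    for y, p in zip(labels, fire_probs):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)


# Two-class scores ordered as [non-fire, fire]; equal scores give probability 0.5.
probs = softmax([0.0, 0.0])
uninformed_loss = binary_cross_entropy([1, 0], [0.5, 0.5])
confident_loss = binary_cross_entropy([1, 0], [0.99, 0.01])
```

An uninformed prediction of 0.5 for every sample gives a loss of ln 2, and the loss falls towards zero as the predicted probabilities approach the labels.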

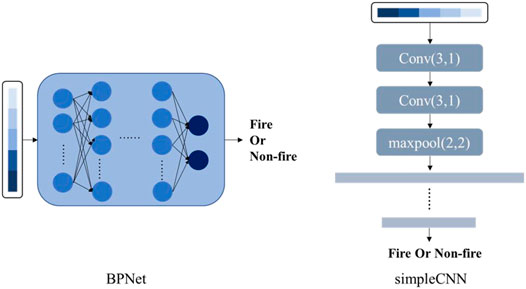

To verify that a CNN is suitable for the fire spot detection task, we compared our model with the threshold-based algorithm proposed by Xu et al. (2017). We also compared FireCNN with a BPNN (BPNet) and with a CNN (simpleCNN) obtained by removing the multi-scale convolution and residual edges from FireCNN. The BP neural network includes five hidden layers with 44, 22, 11, 6, and 2 neurons, respectively. Except for the last layer, each hidden layer uses the ReLU activation function, and the last layer uses the Softmax function. SimpleCNN includes two convolutional layers with a kernel size of 3, a maximum pooling layer, and a fully connected layer. The network structures of BPNet and simpleCNN are shown in Figure 4.

Experiment

Experimental Setup

In this study, there was no overlap between the training set (used to train the CNN model) and the test set (used to test the performance of the CNN model). The code used in this study was written in Python 3.6, and the deep learning framework was PyTorch 1.2. In terms of hardware, experiments were conducted on a machine with an Intel Core i5-8300H CPU at 2.30 GHz, 8 GB of RAM, and an NVIDIA GeForce GTX 1060, running Windows. In the CNN model, the Adam optimizer was selected as the parameter optimizer, the number of epochs was 500, the batch size was 100, and the learning rate was 10⁻⁶.

Evaluation of Indicators

Precision rate, commission error (CE), recall rate, omission error (OE), accuracy rate, and F-measure were used to evaluate the performance of the model. TP denotes true positives (pixels correctly classified as fire points), TN denotes true negatives (pixels correctly classified as non-fire points), FP denotes false positives (pixels misclassified as fire points), and FN denotes false negatives (fire pixels incorrectly classified as non-fire points).

The precision rate (P) refers to the proportion of fire spots predicted by the model that are actually fire spots. The higher the value, the higher the reliability of the fire spots predicted by the model. The formula is as follows:

$$P = \frac{TP}{TP + FP}$$

The commission error (CE) refers to the proportion of predicted fire spots that are erroneous; the higher the value, the more unreliable the fire spots predicted by the model. The formula is as follows:

$$CE = \frac{FP}{TP + FP}$$

The recall rate (R) refers to the proportion of fire spots in the original data that are correctly predicted by the model; the higher the value, the fewer the fire spots missed by the model. The recall rate is calculated as follows:

$$R = \frac{TP}{TP + FN}$$

The omission error (OE) refers to the proportion of fire spots in the original data that are omitted by the model. The higher the value, the lower the comprehensiveness of the model. The formula is as follows:

$$OE = \frac{FN}{TP + FN}$$

Accuracy is the ratio of the number of correct predictions in all categories to the total number of predictions. The formula is as follows:

$$Accuracy = \frac{TP + TN}{TP + TN + FP + FN}$$

The F-measure, the harmonic mean of precision and recall, was used to comprehensively evaluate the performance of the model. The formula is as follows:

$$F = \frac{2 \times P \times R}{P + R}$$
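The six indicators can be computed from the confusion counts as in the sketch below. The function name is hypothetical, the F-measure is assumed to be the balanced F1 score (harmonic mean of precision and recall), and ratios with an empty denominator are returned as 0.0 by convention.

```python
def fire_metrics(tp, tn, fp, fn):
    """Compute precision, CE, recall, OE, accuracy, and F-measure from counts."""

    def ratio(num, den):
        # Convention assumed here: an undefined ratio is reported as 0.0.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "precision": precision,
        "commission_error": ratio(fp, tp + fp),
        "recall": recall,
        "omission_error": ratio(fn, tp + fn),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "f_measure": ratio(2 * precision * recall, precision + recall),
    }


# Made-up counts for illustration: 100 fire points and 190 non-fire points.
metrics = fire_metrics(tp=90, tn=180, fp=10, fn=10)
```

Whenever the denominators are non-zero, the commission error equals one minus the precision and the omission error equals one minus the recall, so each pair carries the same information viewed from opposite directions.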

Analysis of Results

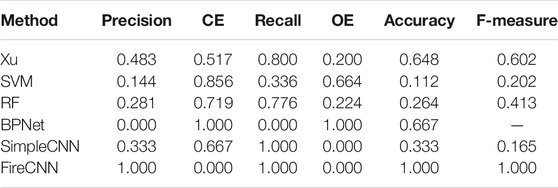

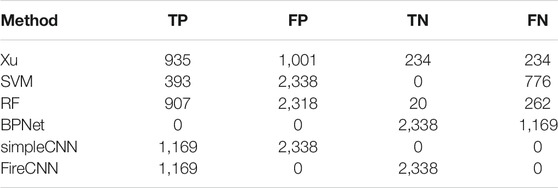

To test the effectiveness of the proposed FireCNN model, five related methods were selected for comparison. They can be divided into three types: the traditional threshold method (Xu and Zhong, 2017), machine learning methods (Support Vector Machine and Random Forest), and deep learning methods (the BP neural network and simpleCNN) (Zhanqing et al., 2001; Li et al., 2015). Precision, CE, recall, OE, accuracy, and F-measure were selected as the indicators of model performance. The results are presented in Table 2. For each deep learning network, training uses the Adam optimizer, the number of epochs is 500, the batch size is 200, and the learning rate is 10⁻⁶. More specific data are presented in Table 3. The SVM and RF algorithms used in this study are implemented with the scikit-learn (sklearn) library; the SVM kernel function is set to the Gaussian kernel, and the RF has 100 trees with a maximum depth of 5.

It can be observed from Table 2 that the best performance indicators are obtained with FireCNN. The algorithm proposed by Xu does not perform as well as FireCNN on any indicator, indicating the superiority of FireCNN over the traditional threshold method. Among the machine learning methods, the overall performance of RF is better than that of SVM. SVM identifies most of the points as fire points, resulting in low accuracy. At the same time, it failed to find most of the fire points; therefore, a low recall rate is obtained. Although RF performs better than SVM in recall rate, it still has the problems of low accuracy, low precision, and more false alarms. The main reason is that, although Himawari-8 images provide abundant spectral information, spatial contextual information is difficult to integrate into machine learning methods owing to the low spatial resolution of the images. An important characteristic of a fire point is that its temperature is higher than that of its surroundings. Without the surrounding environment information, the machine learning methods cannot completely learn the features of the fire point. In contrast, the multi-scale convolution in FireCNN can consider and analyse the hidden relationships between various features at different scales, and the residual structure prevents the loss of original features, so it has better learning ability.

Among the deep learning methods, with the same number of training epochs, BPNet identifies all points as non-fire points and simpleCNN identifies all points as fire points. This indicates that these two networks have not yet learned the complete fire/non-fire characteristics. The main structure of BPNet is a multilayer perceptron (MLP). The learning efficiency of this simple network structure is relatively low, and as the network becomes deeper, it is prone to vanishing gradients, which leaves the network unable to learn further. SimpleCNN uses only a plain convolution structure, without multi-scale convolution. When convolution is carried out on the original features at a single scale, the learning efficiency is low. In addition, simpleCNN does not use a residual structure, so the original features are easily lost during learning. In contrast, FireCNN was able to distinguish fire from non-fire spots. In general, FireCNN performs best on all indicators, indicating that FireCNN, which uses multi-scale convolution and a residual edge structure, can reliably and comprehensively identify fire spots in this dataset, with few false positives and few omitted fire spots. This also shows that a CNN with a reasonable structure can fully adapt to fire detection tasks.

To further explore the differences between FireCNN, BPNet, and simpleCNN, we devised an additional set of experiments. Using the same training set and test set as in the above experiments, the number of training epochs for BPNet and simpleCNN was increased, and the number of epochs required for BPNet and simpleCNN to reach an accuracy of 1.000 on the training set was recorded. The more epochs required, the slower the convergence of the algorithm. Because the initial parameters of a deep learning network are random, to reduce the impact of randomness, we take the sum of ten effective experiments as the final result. The models trained in these ten experiments were also tested on the test set; the precision, recall, and accuracy were recorded, and the average over the ten effective experiments was taken. To show the training speed of each network more intuitively, we recorded the total time (unit: s) spent on the ten training runs. The results are presented in Table 4. We also tested our model against data from Guangdong and Guangxi and obtained an accuracy of 0.999 and a recall rate of 0.999. The data used for this test included all pixels in the study area, and all pixels were classified without artificially setting the ratio of fire to non-fire. On average, FireCNN spends only 4.784 s for each prediction on the data of Guangdong and 3.659 s for Guangxi, which is far less than the 10-min temporal resolution of the Himawari-8 satellite. Using FireCNN, managers can realise real-time monitoring of fire in Guangdong and Guangxi.

It can be seen from Table 4 that BPNet requires the most epochs, followed by simpleCNN, and FireCNN requires the fewest. SimpleCNN and FireCNN require fewer epochs than BPNet, indicating that under the same settings, a convolutional network can extract the characteristics of fire/non-fire spots more efficiently. The number of epochs required by FireCNN is only 49.9% of that required by simpleCNN, indicating that multi-scale convolution is more effective than single-scale convolution. In the design of the network, we set multi-scale convolution for FireCNN to ensure that the network can integrate different numbers of initial features for consideration. There may also be connections between different initial features; convolution at different scales enables the network to consider the relationships between different numbers of features and ultimately extract the more essential features of fire/non-fire. At the same time, the residual edges prevent the network from losing its original features. In terms of accuracy and recall rate, the gap between the three networks was not more than 0.001, indicating that all three could be applied to the fire detection task. In terms of time, FireCNN spends the least time in training, BPNet takes second place, and simpleCNN spends the most time. The BPNet network is the simplest, and the time for one epoch is very short; however, because the network is so simple, more epochs are needed to train it. SimpleCNN spends the most time because its network structure is more complex than that of BPNet, so it takes longer to train one epoch, and because it uses only plain convolution to extract features, which is not efficient. The results show that the network needs to be complex enough to extract features efficiently, and that a reasonable network design makes training more effective.

We also recorded the test time: the average prediction time per point did not exceed 0.00003 s, and predicting all data points in either Guangdong or Guangxi took no more than 5 s. Compared with the 10-min temporal resolution of Himawari-8, FireCNN is fully capable of real-time monitoring.
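The timing argument can be checked with simple arithmetic. The sketch below uses only figures reported in the text (the 10-min interval, the average prediction times of 4.784 s and 3.659 s, and the per-point bound of 0.00003 s); the function names are hypothetical, and data download and preprocessing time are not included because the text does not report them.

```python
CADENCE_SECONDS = 10 * 60  # 10-min Himawari-8 temporal resolution, as stated in the text


def cadence_fraction(prediction_seconds, cadence_seconds=CADENCE_SECONDS):
    """Fraction of one observation interval consumed by a single prediction pass."""
    return prediction_seconds / cadence_seconds


def pixel_capacity(seconds_per_pixel, cadence_seconds=CADENCE_SECONDS):
    """Upper bound on points classifiable within one interval at a fixed per-point cost."""
    return cadence_seconds / seconds_per_pixel


guangdong = cadence_fraction(4.784)  # average time reported for Guangdong
guangxi = cadence_fraction(3.659)    # average time reported for Guangxi
capacity = pixel_capacity(0.00003)   # per-point upper bound reported in the text
```

Under these figures each prediction pass uses less than 1% of the observation interval, and the per-point bound corresponds to roughly 20 million points per interval.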

To place these results in context, we have provided simple statistics of the fires in Guangdong and Guangxi provinces from January 2021 to June 2021 (Table 5).

As shown in Table 5, the number of fires in Guangdong and Guangxi provinces decreased gradually from January and then dropped sharply in June, when it fell to a single digit. A preliminary analysis of the climate and geography of the two provinces suggests that rainfall is low and the wind is dry in early spring, autumn, and winter, which leads to frequent forest fires, as evidenced by the higher number of fires in January and February. In late spring and summer, although the temperature gradually rises, the region is affected by the monsoon: rainfall is abundant and air humidity is high, and the number of fires decreases sharply accordingly.

Conclusion

To reduce the destructive impact of wildfires, it is crucial to detect active fires accurately and quickly at an early stage. However, the most widely used threshold methods suffer from relatively large commission and omission errors, and their thresholds vary with the study area. Relatively few studies have focused on monitoring active fires with deep learning in near real time. In this article, we presented an active fire detection system based on a novel convolutional neural network, FireCNN. FireCNN uses a multi-scale convolution structure that considers the relationships between features at different scales, so that the network can efficiently extract the features of fire and non-fire points, while the residual structure prevents the loss of original features. These structures improve the learning ability and learning speed of the network. To evaluate the effectiveness of the proposed algorithm, we tested it on Himawari-8 satellite images and compared it with the threshold method and state-of-the-art deep learning models. Finally, we explored the influence of different structural designs on the deep neural network. The main conclusions are as follows:

1) FireCNN is fully capable of wildfire detection, with an accuracy 35.2% higher than that of the traditional threshold method.

2) By combining FireCNN with Himawari-8 satellite images, active fires can be detected accurately in near real time, which is critically important for reducing their destructive impact.

3) Reasonable network design can make the algorithm converge faster and shorten the training time.

However, a limitation of the proposed method is that the training and test sets are relatively small. Its effectiveness on large data sets remains to be studied. In addition, environmental information is manually added to the original data to strengthen the feature representation. In future research, we will try to develop a more effective and robust method for large data sets.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding authors.

Author Contributions

Conceptualisation and design of study: ZH, ZT, HP, and YWZ; data collection: YWZ and ZT; data analysis and interpretation: ZH, ZT, HP, YWZ, ZZ, RZ, ZM, YZ, YH, JW, and SY; writing and preparation of original draft: ZH, ZT, HP, and YWZ; funding acquisition: ZH. All authors contributed to the article revision and read and approved the submitted version.

Funding

This work was supported by the National Natural Science Foundation of China under Grant 41871325.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

Many thanks to the reviewers for their valuable comments.

References

Allison, R., Johnston, J., Craig, G., and Jennings, S. (2016). Airborne Optical and Thermal Remote Sensing for Wildfire Detection and Monitoring. Sensors. 16 (8), 1310. doi:10.3390/s16081310

Ba, R., Chen, C., Yuan, J., Song, W., and Lo, S. (2019). Smokenet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-wise Attention. Remote Sensing. 11 (14), 1702. doi:10.3390/rs11141702

Ban, Y., Zhang, P., Nascetti, A., Bevington, A. R., and Wulder, M. A. (2020). Near Real-Time Wildfire Progression Monitoring with Sentinel-1 SAR Time Series and Deep Learning. Sci. Rep. 10, 1322. doi:10.1038/s41598-019-56967-x

Barmpoutis, P., Papaioannou, P., Dimitropoulos, K., and Grammalidis, N. (2020). A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors. 20 (22), 6442. doi:10.3390/s20226442

Baum, B. A., and Trepte, Q. (1999). A Grouped Threshold Approach for Scene Identification in AVHRR Imagery. J. Atmos. Oceanic Technol. 16 (6), 793–800. doi:10.1175/1520-0426(1999)016<0793:agtafs>2.0.co;2

Bixby, R. J., Cooper, S. D., Gresswell, R. E., Brown, L. E., Dahm, C. N., and Dwire, K. A. (2015). Fire Effects on Aquatic Ecosystems: an Assessment of the Current State of the Science. Freshw. Sci. 34 (4), 1340–1350. doi:10.1086/684073

Boles, S. H., and Verbyla, D. L. (2000). Comparison of Three AVHRR-Based Fire Detection Algorithms for Interior Alaska. Remote Sensing Environ. 72 (1), 1–16. doi:10.1016/s0034-4257(99)00079-6

Boschetti, L., Roy, D. P., Justice, C. O., and Humber, M. L. (2015). MODIS-Landsat Fusion for Large Area 30 m Burned Area Mapping. Remote Sensing Environ. 161, 27–42. doi:10.1016/j.rse.2015.01.022

Brown, L. E., Holden, J., Palmer, S. M., Johnston, K., Ramchunder, S. J., and Grayson, R. (2015). Effects of Fire on the Hydrology, Biogeochemistry, and Ecology of Peatland River Systems. Freshw. Sci. 34 (4), 1406–1425. doi:10.1086/683426

Bushnaq, O. M., Chaaban, A., and Al-Naffouri, T. Y. (2021). The Role of UAV-IoT Networks in Future Wildfire Detection. IEEE Internet Things J. 8 (23), 16984–16999. doi:10.1109/jiot.2021.3077593

Cocke, A. E., Fulé, P. Z., and Crouse, J. E. (2005). Comparison of Burn Severity Assessments Using Differenced Normalized Burn Ratio and Ground Data. Int. J. Wildland Fire. 14 (2), 189–198. doi:10.1071/wf04010

Coen, J. L., and Schroeder, W. (2013). Use of Spatially Refined Satellite Remote Sensing Fire Detection Data to Initialize and Evaluate Coupled Weather‐wildfire Growth Model Simulations. Geophys. Res. Lett. 40 (20), 5536–5541. doi:10.1002/2013gl057868

Da, C. (2015). Preliminary Assessment of the Advanced Himawari Imager (AHI) Measurement Onboard Himawari-8 Geostationary Satellite. Remote Sensing Lett. 6 (8), 637–646. doi:10.1080/2150704x.2015.1066522

de Almeida Pereira, G. H., Fusioka, A. M., Nassu, B. T., and Minetto, R. (2021). Active Fire Detection in Landsat-8 Imagery: A Large-Scale Dataset and a Deep-Learning Study. ISPRS J. Photogrammetry Remote Sensing. 178, 171–186. doi:10.1016/j.isprsjprs.2021.06.002

Earl, N., and Simmonds, I. (2018). Spatial and Temporal Variability and Trends in 2001-2016 Global Fire Activity. J. Geophys. Res. Atmospheres., 2524–2536. doi:10.1002/2017jd027749

French, N. H. F., Kasischke, E. S., Hall, R. J., Murphy, K. A., Verbyla, D. L., Hoy, E. E., et al. (2008). Using Landsat Data to Assess Fire and Burn Severity in the North American Boreal Forest Region: an Overview and Summary of Results. Int. J. Wildland Fire. 17 (4), 443–462. doi:10.1071/wf08007

Gargiulo, M., Dell’Aglio, D. A. G., Iodice, A., Riccio, D., and Ruello, G. (2019). “A CNN-Based Super-resolution Technique for Active Fire Detection on Sentinel-2 Data,” in PhotonIcs Electromagnetics Research Symposium (Spring), 418–426. doi:10.1109/piers-spring46901.2019.9017857

Giglio, L., Boschetti, L., Roy, D. P., Humber, M. L., and Justice, C. O. (2018). The Collection 6 MODIS Burned Area Mapping Algorithm and Product. Remote Sensing Environ. 217, 72–85. doi:10.1016/j.rse.2018.08.005

Giglio, L., Csiszar, I., Restás, Á., Morisette, J. T., Schroeder, W., Morton, D., et al. (2008). Active Fire Detection and Characterization with the Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER). Remote Sensing Environ. 112, 3055–3063. doi:10.1016/j.rse.2008.03.003

Giglio, L., Schroeder, W., and Justice, C. O. (2016). The Collection 6 MODIS Active Fire Detection Algorithm and Fire Products. Remote Sensing Environ. 178, 31–41. doi:10.1016/j.rse.2016.02.054

Guede-Fernández, F., Martins, L., Almeida, R. V. D., Gamboa, H., and Vieira, P. (2021). A Deep Learning Based Object Identification System for Forest Fire Detection. Fire. 4 (4), 75. doi:10.3390/fire4040075

Harper, A. R., Doerr, S. H., Santin, C., Froyd, C. A., and Sinnadurai, P. (2017). Prescribed Fire and its Impacts on Ecosystem Services in the UK. Sci. Total Environ. 624, 691–703. doi:10.1016/j.scitotenv.2017.12.161

Hu, X., Ban, Y., and Nascetti, A. (2021). Sentinel-2 MSI Data for Active Fire Detection in Major Fire-Prone Biomes: a Multi-Criteria Approach. Int. J. Appl. Earth Observation Geoinformation. 101, 102347. doi:10.1016/j.jag.2021.102347

Jiao, Z., Zhang, Y., Xin, J., Mu, L., Yi, Y., Liu, H., et al. (2019). “A Deep Learning Based Forest Fire Detection Approach Using UAV and YOLOv3,” in 2019 1st International Conference on Industrial Artificial Intelligence (IAI) (IEEE), 1–5. doi:10.1109/iciai.2019.8850815

Justice, C., Giglio, L., Korontzi, S., Owens, J., Morisette, J., Roy, D., et al. (2002). The MODIS Fire Products. Remote Sensing Environ. 83 (1-2), 244–262. doi:10.1016/s0034-4257(02)00076-7

Kaku, K. (2019). Satellite Remote Sensing for Disaster Management Support: A Holistic and Staged Approach Based on Case Studies in Sentinel Asia. Int. J. Disaster Risk Reduction. 33, 417–432. doi:10.1016/j.ijdrr.2018.09.015

Kinaneva, D., Hristov, G., Raychev, J., and Zahariev, P. (2019). “Early Forest Fire Detection Using Drones and Artificial Intelligence,” in 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO) (IEEE), 1060–1065. doi:10.23919/mipro.2019.8756696

Kumar, S. S., and Roy, D. P. (2018). Global Operational Land Imager Landsat-8 Reflectance-Based Active Fire Detection Algorithm. Int. J. Digital Earth. 11 (2), 154–178. doi:10.1080/17538947.2017.1391341

Langford, Z., Kumar, J., and Hoffman, F. (2018). “Wildfire Mapping in Interior Alaska Using Deep Neural Networks on Imbalanced Datasets,” in IEEE International Conference on Data Mining Workshops, 770–778. doi:10.1109/icdmw.2018.00116

Larsen, A., Hanigan, I., Reich, B. J., Qin, Y., Cope, M., Morgan, G., et al. (2021). A Deep Learning Approach to Identify Smoke Plumes in Satellite Imagery in Near-Real Time for Health Risk Communication. J. Exposure Sci. Environ. Epidem. 31, 170–176.

Leblon, B., Bourgeau-Chavez, L., and San-Miguel-Ayanz, J. (2012). Use of Remote Sensing in Wildfire Management. London: Sustainable development-authoritative and leading edge content for environmental management, 55–82.

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep Learning. Nature. 521 (7553), 436–444. doi:10.1038/nature14539

Li, F. J., Zhang, X. Y., Kondragunta, S., and Csiszar, I. (2018). Comparison of Fire Radiative Power Estimates from VIIRS and MODIS Observations. J. Geophys. Res. Atmospheres. 123 (9), 4545. doi:10.1029/2017jd027823

Li, X., Song, W., Lian, L., and Wei, X. (2015). Forest Fire Smoke Detection Using Back-Propagation Neural Network Based on MODIS Data. Remote Sensing. 7 (4), 4473–4498. doi:10.3390/rs70404473

Lin, Z., Chen, F., Niu, Z., Li, B., Yu, B., Jia, H., et al. (2018). An Active Fire Detection Algorithm Based on Multi-Temporal Fengyun-3c VIRR Data. Remote Sensing Environ. 211, 376–387. doi:10.1016/j.rse.2018.04.027

Maier, S. W., Russell-Smith, J., Edwards, A. C., and Yates, C. (2013). Sensitivity of the MODIS Fire Detection Algorithm (MOD14) in the Savanna Region of the Northern Territory, Australia. ISPRS J. Photogrammetry Remote Sensing. 76, 11–16. doi:10.1016/j.isprsjprs.2012.11.005

Malambo, L., and Heatwole, C. D. (2020). Automated Training Sample Definition for Seasonal Burned Area Mapping. ISPRS J. Photogrammetry Remote Sensing. 160, 107–123. doi:10.1016/j.isprsjprs.2019.11.026

McWethy, D. B., Schoennagel, T., Higuera, P. E., Krawchuk, M., Harvey, B. J., Metcalf, E. C., et al. (2019). Rethinking Resilience to Wildfire. Nat. Sustain. 2 (9), 797–804. doi:10.1038/s41893-019-0353-8

Morisette, J. T., Giglio, L., Csiszar, I., and Justice, C. O. (2005). Validation of the MODIS Active Fire Product over Southern Africa with ASTER Data. Int. J. Remote Sensing. 26 (19), 4239–4264. doi:10.1080/01431160500113526

Muhammad, K., Ahmad, J., Mehmood, I., Rho, S., Baik, S. W., Na, L., et al. (2018). Convolutional Neural Networks Based Fire Detection in Surveillance Videos. IEEE Access 6, 18174–18183. doi:10.1109/access.2018.2812835

Murphy, S. W., de Souza Filho, C. R., Wright, R., Sabatino, G., and Correa Pabon, R. (2016). HOTMAP: Global Hot Target Detection at Moderate Spatial Resolution. Remote Sensing Environ. 177, 78–88. doi:10.1016/j.rse.2016.02.027

Pinto, M. M., Libonati, R., Trigo, R. M., Trigo, I. F., and DaCamara, C. C. (2020). A Deep Learning Approach for Mapping and Dating Burned Areas Using Temporal Sequences of Satellite Images. ISPRS J. Photogrammetry Remote Sensing. 160, 260–274. doi:10.1016/j.isprsjprs.2019.12.014

Ryan, K. C., Knapp, E. E., and Varner, J. M. (2013). Prescribed Fire in North American Forests and Woodlands: History, Current Practice, and Challenges. Front. Ecol. Environ. 11, e15–e24. doi:10.1890/120329

Ryu, J.-H., Han, K.-S., Hong, S., Park, N.-W., Lee, Y.-W., and Cho, J. (2018). Satellite-based Evaluation of the Post-Fire Recovery Process from the Worst Forest Fire Case in South Korea. Remote Sensing. 10 (6), 918. doi:10.3390/rs10060918

Schroeder, W., Oliva, P., Giglio, L., Quayle, B., Lorenz, E., and Morelli, F. (2016). Active Fire Detection Using Landsat-8/OLI Data. Remote Sensing Environ. 185, 210–220. doi:10.1016/j.rse.2015.08.032

Schroeder, W., Oliva, P., Giglio, L., and Csiszar, I. A. (2014). The New VIIRS 375 m Active Fire Detection Data Product: Algorithm Description and Initial Assessment. Remote Sensing Environ. 143, 85–96.

Schroeder, W., Prins, E., Giglio, L., Csiszar, I., Schmidt, C., Morisette, J., et al. (2008). Validation of GOES and MODIS Active Fire Detection Products Using ASTER and ETM+ Data. Remote Sensing Environ. 112, 2711–2726. doi:10.1016/j.rse.2008.01.005

Tymstra, C., Stocks, B. J., Cai, X., and Flannigan, M. D. (2020). Wildfire Management in Canada: Review, Challenges and Opportunities. Prog. Disaster Sci. 5, 100045. doi:10.1016/j.pdisas.2019.100045

van Dijk, D., Shoaie, S., van Leeuwen, T., and Veraverbeke, S. (2021). Spectral Signature Analysis of False Positive Burned Area Detection from Agricultural Harvests Using Sentinel-2 Data. Int. J. Appl. Earth Observation Geoinformation. 97 (80), 102296. doi:10.1016/j.jag.2021.102296

Vani, K., Wickramasinghe, C. H., Jones, S., Reinke, K., and Wallace, L. (2019). Deep Learning Based forest Fire Classification and Detection in Satellite Images. 11th International Conference on Advanced Computing (ICoAC). Remote Sensing. 8 (11), 932. doi:10.1109/ICoAC48765.2019.246817

Xie, H., Du, L., Liu, S. C., Chen, L., Gao, S., Liu, S., et al. (2016). Dynamic Monitoring of Agricultural Fires in China from 2010 to 2014 Using MODIS and Globeland30 Data. Int. J. Geo-Information. 5 (10), 172. doi:10.3390/ijgi5100172

Xie, Z., Song, W., Ba, R., Li, X., and Xia, L. (2018). A Spatiotemporal Contextual Model for Forest Fire Detection Using Himawari-8 Satellite Data. Remote Sensing. 10 (12), 1992. doi:10.3390/rs10121992

Xu, G., and Zhong, X. (2017). Real-Time Wildfire Detection and Tracking in Australia Using Geostationary Satellite: Himawari-8. Remote Sensing Lett. 8 (11), 1052–1061. doi:10.1080/2150704x.2017.1350303

Xu, W., Wooster, M. J., Kaneko, T., He, J., Zhang, T., and Fisher, D. (2017). Major Advances in Geostationary Fire Radiative Power (FRP) Retrieval over Asia and Australia Stemming from Use of Himawari-8 AHI. Remote Sensing Environ. 193, 138–149. doi:10.1016/j.rse.2017.02.024

Yuan, C., Liu, Z., and Zhang, Y. (2017). Fire Detection Using Infrared Images for UAV-Based Forest Fire Surveillance. Proc. Int. Conf. Unmanned Aircr. Syst. (ICUAS), 567–572. doi:10.1109/icuas.2017.7991306

Keywords: active fire detection, deep learning, active fire dataset, wildfire, Himawari-8 imagery, FireCNN

Citation: Hong Z, Tang Z, Pan H, Zhang Y, Zheng Z, Zhou R, Ma Z, Zhang Y, Han Y, Wang J and Yang S (2022) Active Fire Detection Using a Novel Convolutional Neural Network Based on Himawari-8 Satellite Images. Front. Environ. Sci. 10:794028. doi: 10.3389/fenvs.2022.794028

Received: 13 October 2021; Accepted: 31 January 2022;

Published: 04 March 2022.

Edited by:

Peng Liu, Institute of Remote Sensing and Digital Earth (CAS), China

Reviewed by:

Yiyun Chen, Wuhan University, China

Bijoy Vengasseril Thampi, Science Systems and Applications, Inc., United States

Osama M. Bushnaq, King Abdullah University of Science and Technology, Saudi Arabia

Copyright © 2022 Hong, Tang, Pan, Zhang, Zheng, Zhou, Ma, Zhang, Han, Wang and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Haiyan Pan, hy-pan@shou.edu.cn; Yuewei Zhang, weixing1132@126.com
