- ¹Affective Neuroscience and Psychophysiology Laboratory, University of Göttingen, Göttingen, Germany

- ²Leibniz ScienceCampus Primate Cognition, Göttingen, Germany

Human faces express emotions, informing others about their affective states. To measure expressions of emotion, facial electromyography (EMG) has been widely used, which requires electrodes and technical equipment. More recently, emotion recognition software has been developed that detects emotions from video recordings of human faces. However, its validity and its comparability to EMG measures are unclear. The aim of the current study was to compare the Affectiva Affdex emotion recognition software by iMotions with EMG measurements of the zygomaticus major and corrugator supercilii muscles with respect to its ability to identify happy, angry and neutral faces. Twenty participants imitated these facial expressions while videos and EMG were recorded. Happy and angry expressions were detected above chance by both the software and EMG, while neutral expressions were more often falsely identified as negative by EMG than by the software. Overall, EMG and software values were highly correlated. In conclusion, the Affectiva Affdex software can identify facial expressions, and its results are comparable to EMG findings.

Introduction

Identifying which emotion somebody is expressing is a crucial skill that facilitates social interactions. The human ability to express emotions through the face was studied as early as Darwin (1872), and since then, ample research has focused on interpreting emotional expressions. Different theories have been proposed, ranging from the view that many different emotional expressions are possible (e.g. Scherer, 1999; Scherer et al., 2001; Ellsworth and Scherer, 2003) to the view that a limited number of distinct, basic categories of facial emotion expressions can be distinguished (Ekman, 1992, 1999). In a laboratory context, facial expressions of emotion can be measured using electromyography (EMG; Fridlund and Cacioppo, 1986; Van Boxtel, 2010). EMG records muscle activity using electrodes placed on the skin surface. Importantly, distinct muscle activity is observable in response to different emotions. Specifically, the zygomaticus major muscle reliably becomes active during expressions of happiness or, more generally, joy (smiles). The corrugator supercilii muscle, which draws the eyebrow downward and medially and thus produces frowning, is related particularly to angry facial expressions (Dimberg, 1982; Tan et al., 2012), to negative non-facial stimuli (e.g. Bayer et al., 2010; Künecke et al., 2015), and to increased cognitive load (Schacht et al., 2009; Schacht et al., 2010). Activity of the corrugator and zygomaticus muscles can be used to distinguish between positive and negative emotions, with corrugator activity in particular being clearly related to emotions of negative valence (Larsen et al., 2003; Tan et al., 2012). Therefore, many EMG studies have focused on measuring the activity of these two muscles in response to pictures of happy and angry faces (Dimberg and Lundquist, 1990; Dimberg and Thunberg, 1998; Dimberg et al., 2000; Dimberg and Söderkvist, 2011; Otte et al., 2011; Hofman et al., 2012).
For example, this technique has been used to study voluntarily controlled versus automatic facial reactions to emotional stimuli (Dimberg et al., 2002), responses to unconsciously perceived emotional faces (Dimberg et al., 2000), effects of perceived fairness on mimicry (Hofman et al., 2012), and neural mechanisms involved in facial muscle reactions (Achaibou et al., 2008). Research has also investigated individual differences in muscle responses, for example due to gender (Dimberg and Lundquist, 1990), empathy (Dimberg et al., 2011; Dimberg and Thunberg, 2012) and anxiety (Kret et al., 2013). In summary, EMG is an established method that is widely used to study facial expressions of emotion.

An alternative, well-established method for assessing facial expressions of emotion is the Facial Action Coding System (FACS), developed by Ekman and Friesen (1976). This anatomy-based system allows human coders to systematically analyze facial expressions for their emotional information based on the movements of 46 observable action units (Ekman and Friesen, 1976). Although the system has been widely used over the last three decades, its applicability has been limited by the need for certified coders and by the highly time-consuming coding process. More recently, however, automatic software solutions have been developed, which are promising tools for overcoming the limitations of human FACS coding because they are easier to apply and process recorded expressions faster. These automated approaches were found to be as reliable as human coding for emotion detection (Terzis et al., 2010; Taggart et al., 2016; Stöckli et al., 2018), while saving time (e.g. Bartlett et al., 2008).

Available software includes, for example, EmoVu (Eyevis, 2013), FaceReader (N. I. Technology, 2007), FACET (iMotions, 2013), and Affectiva Affdex (iMotions, 2015). To establish whether such software is suitable for research, it needs to be verified whether it (i) reliably detects emotions and (ii) is comparable to established methods like EMG. Investigating the performance of this new technology might benefit future research by establishing attractive alternative methods for the automatic detection of facially expressed emotion. For example, while EMG is widely used because of the benefits mentioned above, it is restricted to laboratory settings, and applying electrodes to a participant’s face may not be desirable in all kinds of experiments. Automatic software may help to overcome these limitations, as only a camera for video recording is needed, which makes it easier for experimenters to investigate facial expressions under more natural conditions. The present study focuses on the Affectiva software, which classifies images and videos of facial expressions for the displayed emotions based on a frame-by-frame analysis. The reliability of the Affectiva software has already been confirmed in validation studies on static images (Stöckli et al., 2018) and videos (Taggart et al., 2016), which demonstrated reliable emotion recognition by the software. Its comparability with EMG, however, remains unclear. Other facial emotion recognition software [FaceReader (D’Arcey, 2013) and FACET (Beringer et al., 2019)] has been validated by comparing the software output for happy and angry expressions with EMG results. D’Arcey (2013) correlated FaceReader scores with EMG measures of the zygomaticus major and corrugator supercilii muscles to investigate comparability.
Based on this approach, the aim of the current study was to compare the capability of the Affectiva software to appropriately classify emotions with EMG measurements of the zygomaticus major and corrugator supercilii muscles. Note that Beringer et al. (2019) only measured expressions of people who were trained in expressing happy and angry emotions. In contrast, the current study used a large sample of untrained participants to ensure that more natural expressions were measured.

Participants are faster at producing facial expressions of emotion and show stronger activation of the responsible muscle groups if they view a face expressing the same emotion (Korb et al., 2010; Otte et al., 2011). Therefore, in the current study, participants were instructed to imitate a facial expression (happy, angry, neutral) while equivalent face stimuli were presented to them on a computer screen. These three expressions were chosen because they can be reliably measured with EMG (Dimberg and Lundquist, 1990; Dimberg and Thunberg, 1998; Dimberg et al., 2000; Dimberg and Söderkvist, 2011; Otte et al., 2011; Hofman et al., 2012). Muscle responses to emotional faces can arise as early as 300–400 ms after exposure (Dimberg and Thunberg, 1998); responses were therefore measured for a 10-s period starting at the onset of the face presentation. As muscle responses have been shown to be stronger on the left side of the face (Dimberg and Petterson, 2000), we recorded EMG from this side. Participants were asked to imitate facial expressions based on images from a validated face database in order to obtain highly controlled facial expressions linked to a face database commonly used in research. However, it should be noted that this task neither required participants to feel the emotion nor necessarily triggered it; rather, it prioritized controlled expressions over natural and spontaneous emotional processes.

The current study investigated whether the Affectiva software can identify different facial expressions (smile, brow furrow) and the emotions related to them (termed “joy”¹ and “anger” in the software, vs. neutral) as efficiently as EMG. Participants were tested once with EMG and once with a video recording in separate sessions, and the videos were analyzed off-line with the Affectiva software.

We expected Affectiva to report a higher probability for a happy expression compared to a neutral or angry expression in the happy condition and a higher probability for an angry expression compared to a neutral or happy expression in the angry condition.

We further expected significant correlations between EMG and Affectiva measures. The study was preregistered with the Open Science Framework (osf.io/75j9z), and all methods and analyses were conducted in line with the preregistration unless noted otherwise. In addition to these planned analyses, we conducted an exploratory test of whether EMG and the Affectiva software can be used simultaneously. For this purpose, video recordings of the EMG condition were analyzed with the Affectiva software to investigate whether the software can reliably identify facial emotions when faces are partially covered by electrodes.

Materials and Methods

Participants

Twenty students aged 18 to 29 years (mean age = 21 years, SD = 2.6, 17 female) from the University of Göttingen and the HAWK Göttingen participated in return for course credit. The sample size was based on previous research (Dimberg, 1982; Otte et al., 2011). All participants were right-handed, had normal or corrected-to-normal vision (corrected by contact lenses only, no glasses), and reported no neurological or psychiatric disorders. Five additional participants were tested but excluded because they did not fulfill the original inclusion criteria (2), because of technical failure (2), or because they did not complete the study (1).

Task Design and Stimuli

Three types of facial expressions (happy, angry and neutral) were recorded from the participants using a video camera and EMG electrodes. The task was implemented in PsychoPy (Peirce et al., 2019) and consisted of two blocks, the order of which was counterbalanced. Facial expressions of participants were recorded with a C922 Pro Stream Webcam (Logitech, resolution: 1080 × 720 px, 30 frames per second) during both blocks. In addition, one of the blocks included a facial electromyogram (EMG) recording. Lighting was kept constant during recordings with one direct-current light source illuminating the room from above. Sufficient video quality for the Affectiva analysis was ensured by averaging the Affectiva AFFDEX quality score for every participant and excluding participants with a mean score below 75%. Participants were asked to position their face between the two bars of an adjustable headrest to ensure a central position of the face during recordings; however, the headrest did not constrain their heads but served only as an orientation for positioning. The participants’ task was to imitate the emotion expressed in each of 60 frontal portrait pictures (20 per emotion category) selected from the Karolinska Directed Emotional Faces (KDEF) database (Lundqvist et al., 1998), with 10 female and 10 male faces in each condition (happy, angry, and neutral). Face pictures were presented in randomized order. Images were edited to the same format with Adobe Photoshop CS6 by matching luminance across images and applying a gray mask that left only the facial area visible. The task started with a short written introduction, after which participants could proceed by pressing the space bar. In each trial, a fixation cross was presented for 5 s, followed by the stimulus, which was presented for 10 s at the center of the screen. Participants could take a short break after every 20 stimuli.
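The trial structure described above can be sketched in plain Python. This is a hypothetical illustration, not the authors’ PsychoPy script: the `Trial` record, the `face_id` index and the function names are assumptions, and stimulus display is omitted. It only encodes the counts and timings stated in the text (60 faces, 10 female and 10 male per emotion, 5-s fixation, 10-s stimulus, a self-paced break after every 20 trials).

```python
import random
from dataclasses import dataclass

FIXATION_S = 5.0   # fixation cross duration (from the text)
STIMULUS_S = 10.0  # face presentation duration (from the text)
BREAK_EVERY = 20   # self-paced break after every 20 stimuli (from the text)


@dataclass(frozen=True)
class Trial:
    emotion: str   # "happy", "angry" or "neutral"
    sex: str       # sex of the KDEF poser, "female" or "male"
    face_id: int   # hypothetical index of the image within its cell (0-9)


def build_trials(seed=None):
    """Return the 60 trials (10 female + 10 male faces per emotion), shuffled."""
    trials = [
        Trial(emotion, sex, face_id)
        for emotion in ("happy", "angry", "neutral")
        for sex in ("female", "male")
        for face_id in range(10)
    ]
    random.Random(seed).shuffle(trials)
    return trials


def schedule(trials):
    """Yield (trial number, trial, fixation onset s, stimulus onset s, break after?).

    Onsets are cumulative presentation times; self-paced breaks add no time here.
    """
    clock = 0.0
    for number, trial in enumerate(trials, start=1):
        fixation_onset = clock
        stimulus_onset = clock + FIXATION_S
        clock = stimulus_onset + STIMULUS_S
        take_break = number % BREAK_EVERY == 0 and number < len(trials)
        yield number, trial, fixation_onset, stimulus_onset, take_break
```

With these timings, the 60 trials amount to 15 min of pure presentation time, excluding the self-paced breaks and the written introduction.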

iMotions Affectiva

Videos were recorded for the interval from stimulus onset to stimulus offset (10 s). Videos were imported into the iMotions Biometric Research Platform 6.2 software (iMotions, 2015) and analyzed using the Affectiva facial expression recognition engine. Note that, due to a software update, this is a more recent version than the one originally preregistered. Emotion probabilities were exported for all 60 stimuli per subject.
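The text does not state how frame-wise Affectiva output was reduced to one value per stimulus. A minimal sketch, assuming the export contains one row per video frame with a trial identifier and one column per channel, would average the frames within each trial and apply the 75% quality criterion described under Task Design and Stimuli. The dictionary keys (`trial`, `joy`, `anger`, `smile`, `browFurrow`) and the function names are hypothetical placeholders rather than iMotions column names, and frames without a detected face are assumed to have been dropped beforehand.

```python
from statistics import mean

QUALITY_THRESHOLD = 75.0  # exclusion criterion from the text (mean quality < 75%)
CHANNELS = ("joy", "anger", "smile", "browFurrow")  # hypothetical key names


def trial_means(frames, channels=CHANNELS):
    """Average frame-wise values within each trial.

    frames: iterable of dicts, each with a "trial" key and one key per channel.
    Returns {trial: {channel: mean value}}.
    """
    by_trial = {}
    for frame in frames:
        by_trial.setdefault(frame["trial"], []).append(frame)
    return {
        trial: {ch: mean(f[ch] for f in rows) for ch in channels}
        for trial, rows in by_trial.items()
    }


def passes_quality(quality_scores, threshold=QUALITY_THRESHOLD):
    """True if a participant's mean quality score (in %) reaches the threshold."""
    return mean(quality_scores) >= threshold
```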

EMG Recording and Pre-processing

EMG was recorded from 10 electrodes during one of the two blocks in order to measure zygomaticus major and corrugator supercilii activity [two electrodes per muscle, based on Fridlund and Cacioppo (1986), and additional electrodes, as described below]. All data were recorded with a Biosemi ActiveTwo AD-box at a sampling rate of 2048 Hz. The skin at the electrode sites was cleaned with a soft peeling and an ethanol solution to enhance electrical contact between skin and electrode. Electrodes were prepared with electrode gel (Signagel) before the participant arrived. Electrodes were attached to the left side of the face using bipolar placement as suggested by Fridlund and Cacioppo (1986). To measure zygomaticus major activity, a line joining the cheilion and the preauricular depression was drawn with an eye pencil. The first electrode was placed midway along this line, and the reference electrode was placed 1 cm inferior and medial to the first. To measure corrugator supercilii activity, an electrode was placed directly above the brow, and the reference was affixed 1 cm lateral to and slightly above it. As a precaution, alternative reference electrodes were attached behind both ears above the mastoids but were not considered for further analysis. The two ground electrodes (required for the online display of channels) were placed 0.5 cm left and right of the midline directly below the hairline. Two electrodes were attached over the orbicularis oculi (1 cm below the right border of the eye and 0.5 cm inferior and lateral to the first) to measure eye-blink artifacts.

Data were processed with Brain Vision Analyzer 2.1 (Brain Products GmbH, Munich, Germany). The following steps were conducted for each subject separately. A high-pass filter at 20 Hz, a low-pass filter at 400 Hz and a notch filter at 50 Hz were applied. The zygomaticus major and corrugator supercilii channels were re-referenced to their respective reference electrodes to remove noise common to both electrodes of each bipolar pair. The resulting data were rectified and segmented into three emotion-specific segments (happy, angry, and neutral), each consisting of 20 epochs of 10,200 ms starting 200 ms before stimulus onset. The mean of the baseline period (from 200 ms before until stimulus onset) was subtracted from each data point. Segments were averaged per subject, and the mean amplitude between 0 and 10 s after stimulus onset was exported.
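The preprocessing above was run in Brain Vision Analyzer; the sketch below reproduces the same sequence of steps with NumPy and SciPy to make the logic explicit. Filter types and orders are not reported, so the fourth-order zero-phase Butterworth filters and the notch quality factor (Q = 30) are assumptions, as are the function names and the representation of stimulus onsets as sample indices.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch

FS = 2048  # sampling rate in Hz (from the text)


def preprocess_bipolar(active, reference, fs=FS):
    """Filter both electrodes, re-reference (active - reference), rectify."""
    # Assumed filter design: 4th-order Butterworth, applied forward-backward.
    b_hp, a_hp = butter(4, 20, btype="highpass", fs=fs)
    b_lp, a_lp = butter(4, 400, btype="lowpass", fs=fs)
    b_notch, a_notch = iirnotch(50, 30, fs=fs)  # 50 Hz line noise, Q = 30

    def apply_filters(x):
        x = filtfilt(b_hp, a_hp, np.asarray(x, dtype=float))
        x = filtfilt(b_lp, a_lp, x)
        return filtfilt(b_notch, a_notch, x)

    # Bipolar derivation removes noise common to both electrodes.
    bipolar = apply_filters(active) - apply_filters(reference)
    return np.abs(bipolar)  # full-wave rectification


def mean_amplitude(rectified, onsets, fs=FS, pre_ms=200, post_ms=10000):
    """Epoch -200..10000 ms around each onset (sample index), subtract the
    pre-stimulus baseline mean, average epochs, return the 0-10 s mean."""
    pre = int(round(pre_ms * fs / 1000))
    post = int(round(post_ms * fs / 1000))
    epochs = np.stack([rectified[o - pre:o + post] for o in onsets])
    baseline = epochs[:, :pre].mean(axis=1, keepdims=True)
    average = (epochs - baseline).mean(axis=0)
    return float(average[pre:].mean())
```

Because filtering is linear, filtering the active and reference channels separately and then subtracting them gives the same result as filtering their difference; the order shown simply follows the text.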

Procedure

This study was approved by the Ethics Committee of the Institute of Psychology at the University of Göttingen and conducted in accordance with the World Medical Association’s Declaration of Helsinki. After arrival, participants were seated in an electromagnetically shielded chamber and provided written informed consent as well as relevant personal information. Participants were briefly informed about the process of electrode attachment and then prepared for EMG recording directly before the EMG block. After the EMG setup was complete, the electrode offset was checked and adjusted to below 30 mV. Participants were instructed to imitate the facial expressions they viewed. Each block lasted approximately 20 min.

Results

Confirmatory Analyses

Datasets are available in Supplementary Table S1. A difference value (DV) was calculated separately for happy, angry and neutral trials. It was computed from the results of the Affectiva analysis and the EMG results using the following equations: (a) , (b) and (c) . Difference scores were used because the EMG and Affectiva measures were obtained in different units; the scores transformed all measures onto a common scale from −1 to 1, making EMG and Affectiva measures comparable. This computation resulted in one value for the EMG measure, one value for the Affectiva measure of emotion and one value for the Affectiva measure of expression. As preregistered, one-tailed tests were used for all analyses because the hypotheses were directional; note, however, that this did not affect the significance of any result in the current study.
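The text describes the properties of the difference scores (unit-free, bounded between −1 and 1, positive for happy and negative for angry expressions) rather than spelling out the formula. One form with these properties is the normalized difference (A − B)/(A + B), where A is the happy-related value (joy, smile or zygomaticus major amplitude) and B the angry-related value (anger, brow furrow or corrugator supercilii amplitude). The sketch below assumes this form; it is an illustration, not a confirmed reconstruction of the authors’ equations, and the bounds only hold for non-negative inputs.

```python
def difference_score(positive, negative):
    """Normalized difference (positive - negative) / (positive + negative).

    Assumed form, not taken from the article. For non-negative inputs with a
    positive sum the result lies in [-1, 1]: +1 means only the happy-related
    value (joy, smile, zygomaticus) is present, -1 means only the angry-related
    value (anger, brow furrow, corrugator) is present.
    """
    if positive < 0 or negative < 0:
        raise ValueError("inputs must be non-negative")
    total = positive + negative
    if total == 0:
        raise ValueError("score is undefined when both inputs are zero")
    return (positive - negative) / total
```

Because multiplying both inputs by the same factor leaves the score unchanged, the measurement unit cancels, which is what allows EMG amplitudes and Affectiva probabilities to be placed on one scale.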

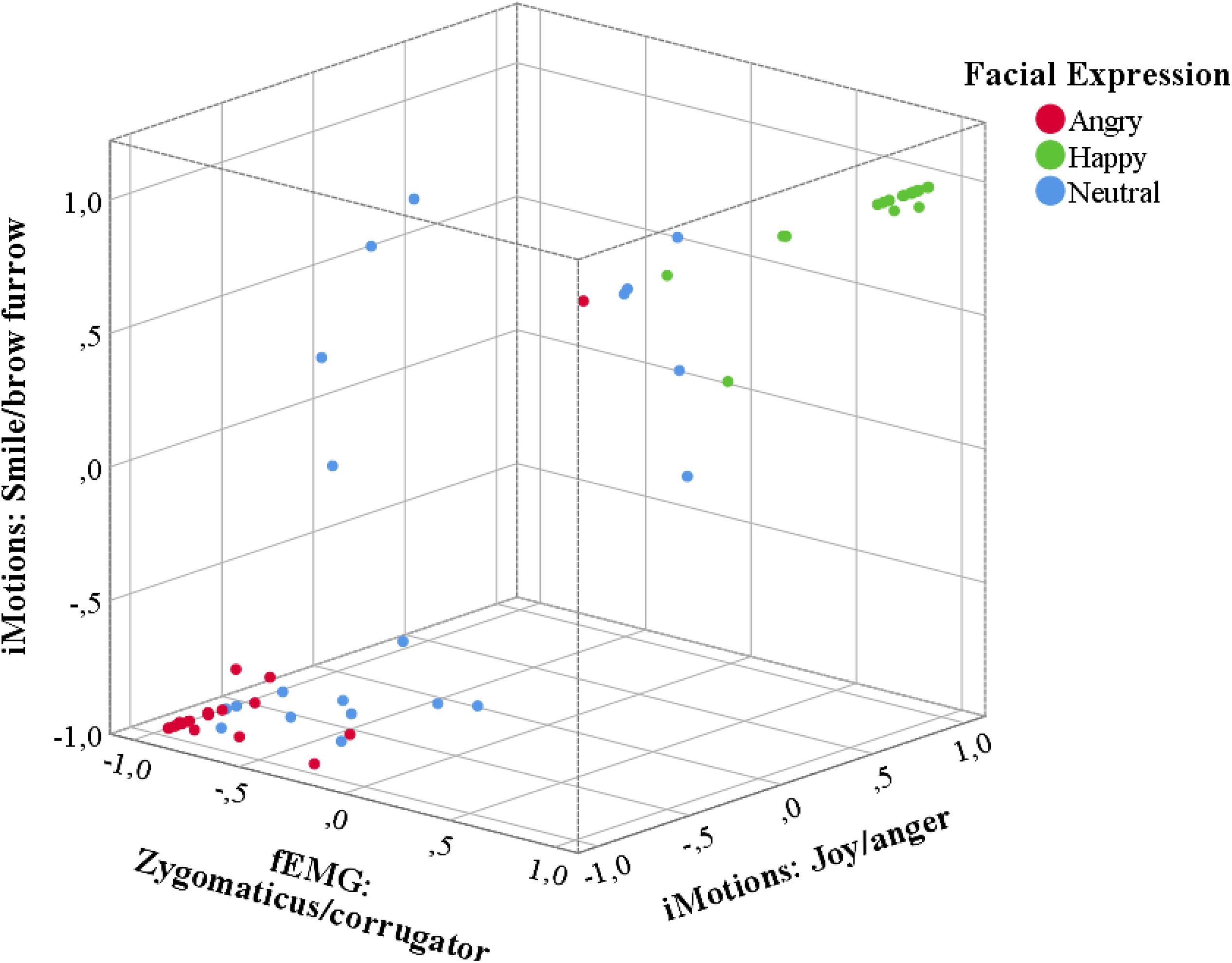

Correlations between the Affectiva difference scores and the EMG difference score were computed. Both values range from −1, indicating an angry expression, to +1, indicating a happy expression. The EMG difference score correlated significantly with the difference score for Affectiva smile and brow furrow values, r(60) = 0.761, p < 0.001, and with the difference score for Affectiva “joy” and “anger” values, r(60) = 0.789, p < 0.001 (Figure 1).
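These correlations pool one difference score per participant and condition (20 participants × 3 conditions = 60 values per measure). A minimal sketch with `scipy.stats.pearsonr`, assuming the scores are arranged as participant × condition arrays; `alternative="greater"` gives the one-tailed test for a positive correlation and requires SciPy 1.9 or later. The function name is hypothetical.

```python
import numpy as np
from scipy.stats import pearsonr


def pooled_correlation(emg_scores, affectiva_scores):
    """Pearson r across all participant x condition cells.

    Both inputs: arrays of shape (n_participants, n_conditions), e.g. (20, 3),
    with rows and columns in the same order. Returns (r, one-tailed p).
    """
    x = np.asarray(emg_scores, dtype=float)
    y = np.asarray(affectiva_scores, dtype=float)
    if x.shape != y.shape:
        raise ValueError("score arrays must have the same shape")
    r, p = pearsonr(x.ravel(), y.ravel(), alternative="greater")  # SciPy >= 1.9
    return float(r), float(p)
```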

Figure 1. Three-dimensional scatter plot of mean difference scores between the Affectiva scores for smile and brow furrow, between the Affectiva scores for “joy” and “anger,” and between the EMG amplitudes for zygomaticus major and corrugator supercilii activity. Note that the difference score is more negative (closer to −1) if the respective measure indicates a more negative (i.e. angry) expression and more positive (closer to 1) if the measure indicates a more positive (i.e. happy) expression. Red dots indicate difference scores in the angry condition, green dots in the happy condition and blue dots in the neutral condition. All three types of difference scores were significantly positive (close to 1) in the happy condition and significantly negative (close to −1) in the angry condition.

Furthermore, for each expression condition (happy, angry, neutral), separate one-sample t-tests were computed to investigate whether each of the difference scores (joy/anger, smile/brow furrow, zygomaticus major/corrugator supercilii) differed from zero. For the Affectiva software, difference scores were significantly positive in the happy condition for both the smile/brow furrow score, M = 0.97, CI = [0.92, 1.02], t(19) = 40.81, p < 0.001, and the joy/anger score, M = 1.00, CI = [0.9996, 1.00], t(19) = 8837.84, p < 0.001. They were significantly negative in the angry condition for both the smile/brow furrow score, M = −0.88, CI = [−1.08, −0.68], t(19) = −9.13, p < 0.001, and the joy/anger score, M = −0.80, CI = [−1.00, −0.59], t(19) = −8.25, p < 0.001. They did not differ from zero in the neutral condition for either the smile/brow furrow score, M = −0.24, CI = [−0.64, 0.16], t(19) = −1.24, p = 0.116, or the joy/anger score, M = −0.24, CI = [−0.57, 0.08], t(19) = −1.55, p = 0.069. For EMG, scores were also significantly positive in the happy condition, M = 0.63, CI = [0.44, 0.81], t(19) = 7.08, p < 0.001, and significantly negative in the angry condition, M = −0.79, CI = [−0.83, −0.74], t(19) = −34.41, p < 0.001, but they were also significantly negative in the neutral condition, M = −0.43, CI = [−0.55, −0.32], t(19) = −7.71, p < 0.001.
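Each of these tests compares the 20 participants’ difference scores in one condition against zero. A sketch with `scipy.stats.ttest_1samp`, assuming a two-sided 95% t-based confidence interval around the mean (the type of interval is not stated in the text); `alternative="greater"` or `"less"` yields the one-tailed p-values used for the directional hypotheses. The function name and return layout are hypothetical.

```python
import numpy as np
from scipy import stats


def one_sample_summary(scores, alternative="two-sided", confidence=0.95):
    """Mean, t-based CI, t statistic, df and p for H0: population mean = 0."""
    x = np.asarray(scores, dtype=float)
    n = x.size
    m = x.mean()
    se = x.std(ddof=1) / np.sqrt(n)
    # Two-sided confidence interval, independent of the test's alternative.
    half_width = stats.t.ppf(0.5 + confidence / 2, df=n - 1) * se
    result = stats.ttest_1samp(x, 0.0, alternative=alternative)
    return {"M": float(m), "CI": (float(m - half_width), float(m + half_width)),
            "t": float(result.statistic), "df": n - 1, "p": float(result.pvalue)}
```

For example, happy-condition scores would be tested with `alternative="greater"` and angry-condition scores with `alternative="less"`.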

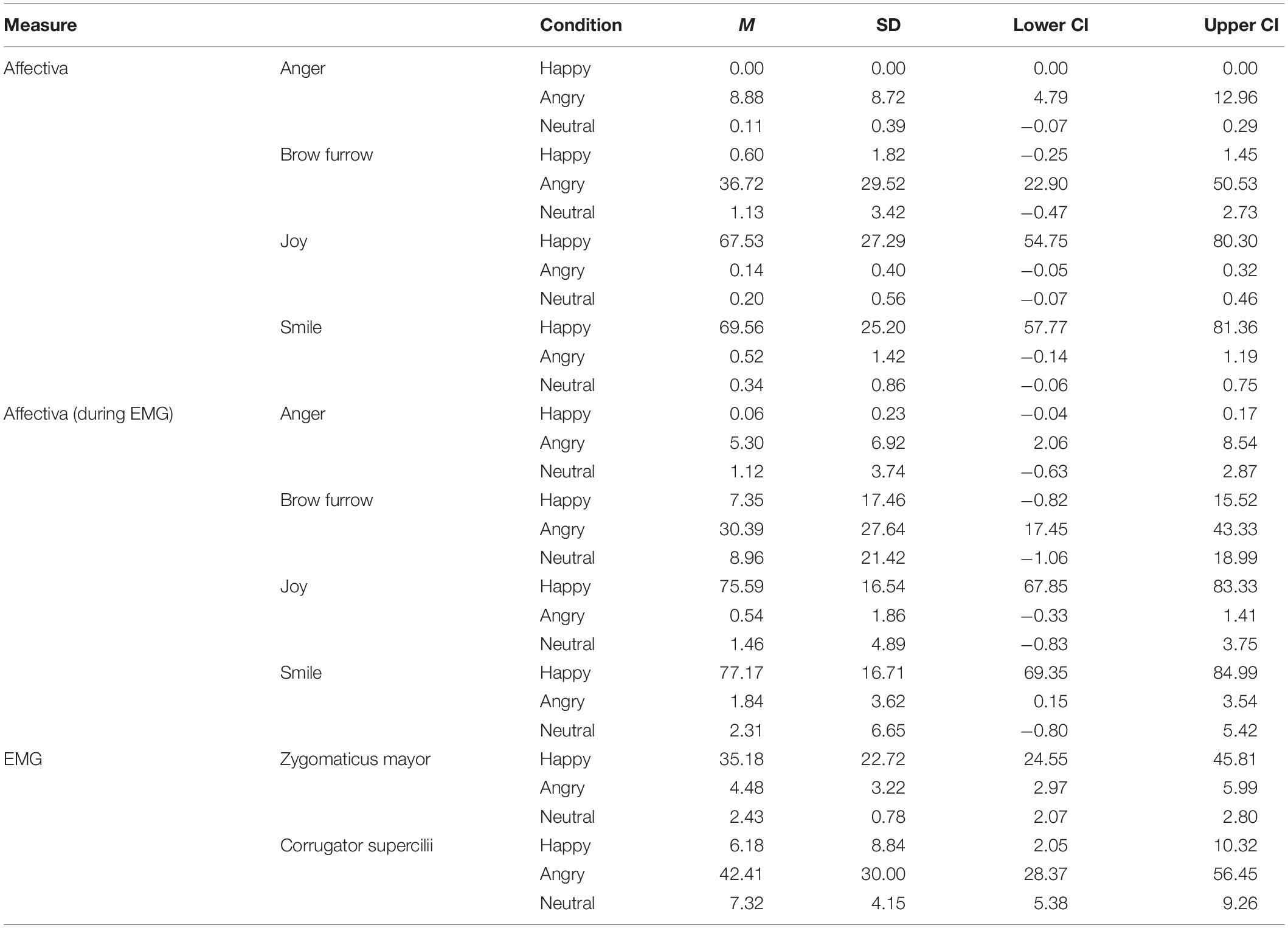

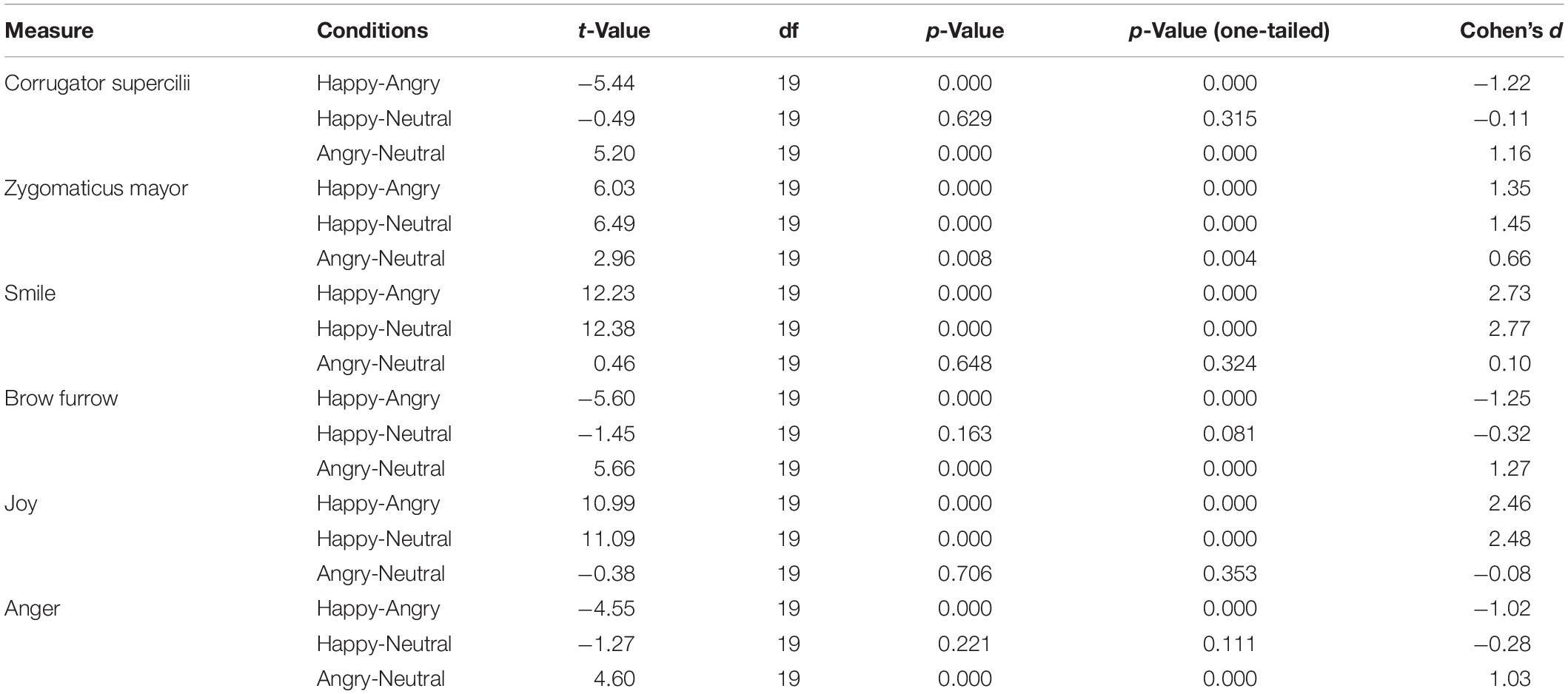

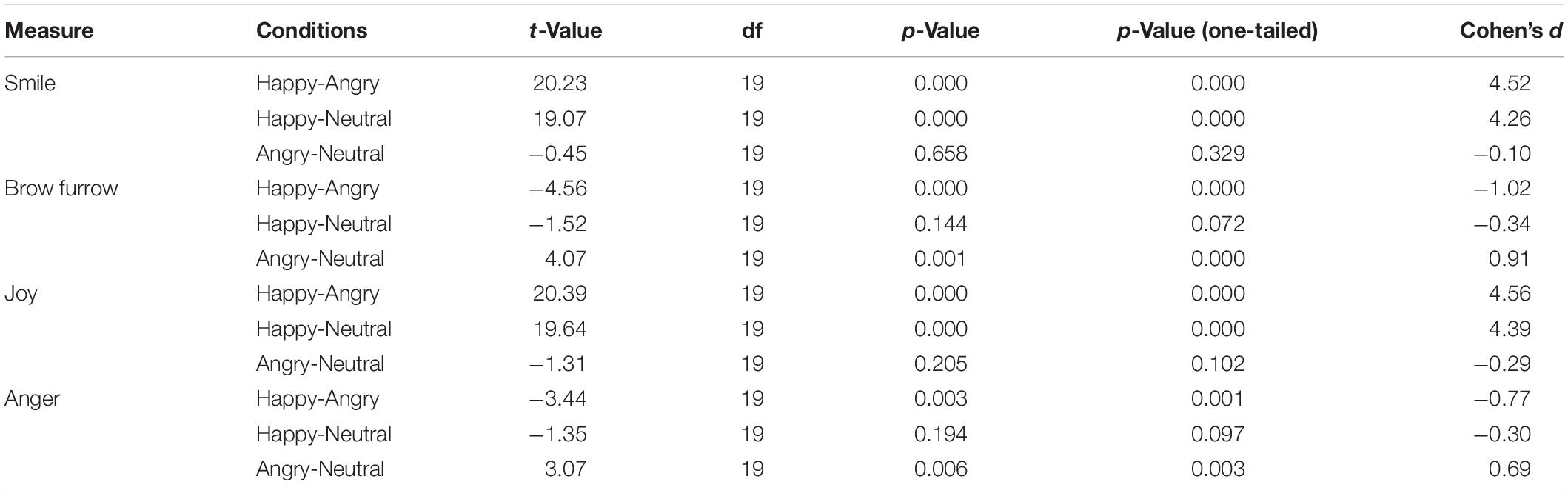

Following a reviewer’s suggestion, non-preregistered repeated-measures ANOVAs were conducted, showing that mean Affectiva scores and mean EMG amplitudes differed significantly between the three conditions (all Greenhouse-Geisser corrected): Affectiva score “smile”: F(2, 38) = 151.07, p < 0.001, ηp² = 0.89; Affectiva score “brow furrow”: F(2, 38) = 31.65, p < 0.001, ηp² = 0.63; Affectiva score “joy”: F(2, 38) = 121.73, p < 0.001, ηp² = 0.87; Affectiva score “anger”: F(2, 38) = 20.9, p < 0.001, ηp² = 0.52; EMG zygomaticus major amplitude: F(2, 38) = 38.93, p < 0.001, ηp² = 0.67; EMG corrugator supercilii amplitude: F(2, 38) = 26.75, p < 0.001, ηp² = 0.59. Descriptive statistics are displayed in Table 1, showing that the Affectiva scores for joy and smile, as well as the EMG amplitude of the zygomaticus major, were highest in the happy condition, while the Affectiva scores for anger and brow furrow and the corrugator supercilii amplitude were highest in the angry condition. The preregistered dependent-samples t-tests (specifically addressing the hypotheses) confirmed these observations (Table 2). The only exception to this pattern was the zygomaticus major amplitude, which was also significantly higher in the angry than in the neutral condition.
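A one-way repeated-measures ANOVA with a Greenhouse-Geisser correction can be written directly in NumPy. In this sketch (function name hypothetical, not the software used by the authors) the correction factor ε is estimated from the double-centered covariance matrix of the conditions and applied to both degrees of freedom when computing p; F and partial eta squared are unaffected by the correction. The identity ηp² = F·df₁/(F·df₁ + df₂) also allows a quick check of reported values: for F(2, 38) = 151.07 it gives 302.14/340.14 ≈ 0.89.

```python
import numpy as np
from scipy.stats import f as f_dist


def rm_anova_gg(data):
    """One-way repeated-measures ANOVA with Greenhouse-Geisser correction.

    data: array of shape (n_subjects, k_conditions), one mean value per cell.
    Returns F, uncorrected dfs, GG epsilon, GG-corrected p, partial eta squared.
    """
    y = np.asarray(data, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * np.sum((y.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((y.mean(axis=1) - grand) ** 2)
    ss_error = np.sum((y - grand) ** 2) - ss_cond - ss_subj
    df1, df2 = k - 1, (n - 1) * (k - 1)
    F = (ss_cond / df1) / (ss_error / df2)
    # Greenhouse-Geisser epsilon from the double-centered covariance matrix.
    centre = np.eye(k) - np.ones((k, k)) / k
    s = centre @ np.cov(y, rowvar=False) @ centre
    eps = np.trace(s) ** 2 / (df1 * np.sum(s ** 2))
    p = f_dist.sf(F, df1 * eps, df2 * eps)
    eta_p2 = ss_cond / (ss_cond + ss_error)
    return {"F": float(F), "df": (df1, df2), "epsilon": float(eps),
            "p": float(p), "eta_p2": float(eta_p2)}
```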

Exploratory Analyses

To explore the reliability of the Affectiva software in trials in which participants wore electrodes, identical analyses were conducted with the Affectiva difference scores computed during the EMG session. This exploratory analysis was conducted, firstly, to investigate whether both measures can be collected simultaneously, in case researchers want to combine the high sensitivity of EMG with the wide variety of emotions recognized by Affectiva. Secondly, it allowed a direct within-trial comparison of both measures, using identical trials and thereby ruling out random variations in emotional expressions between trials.

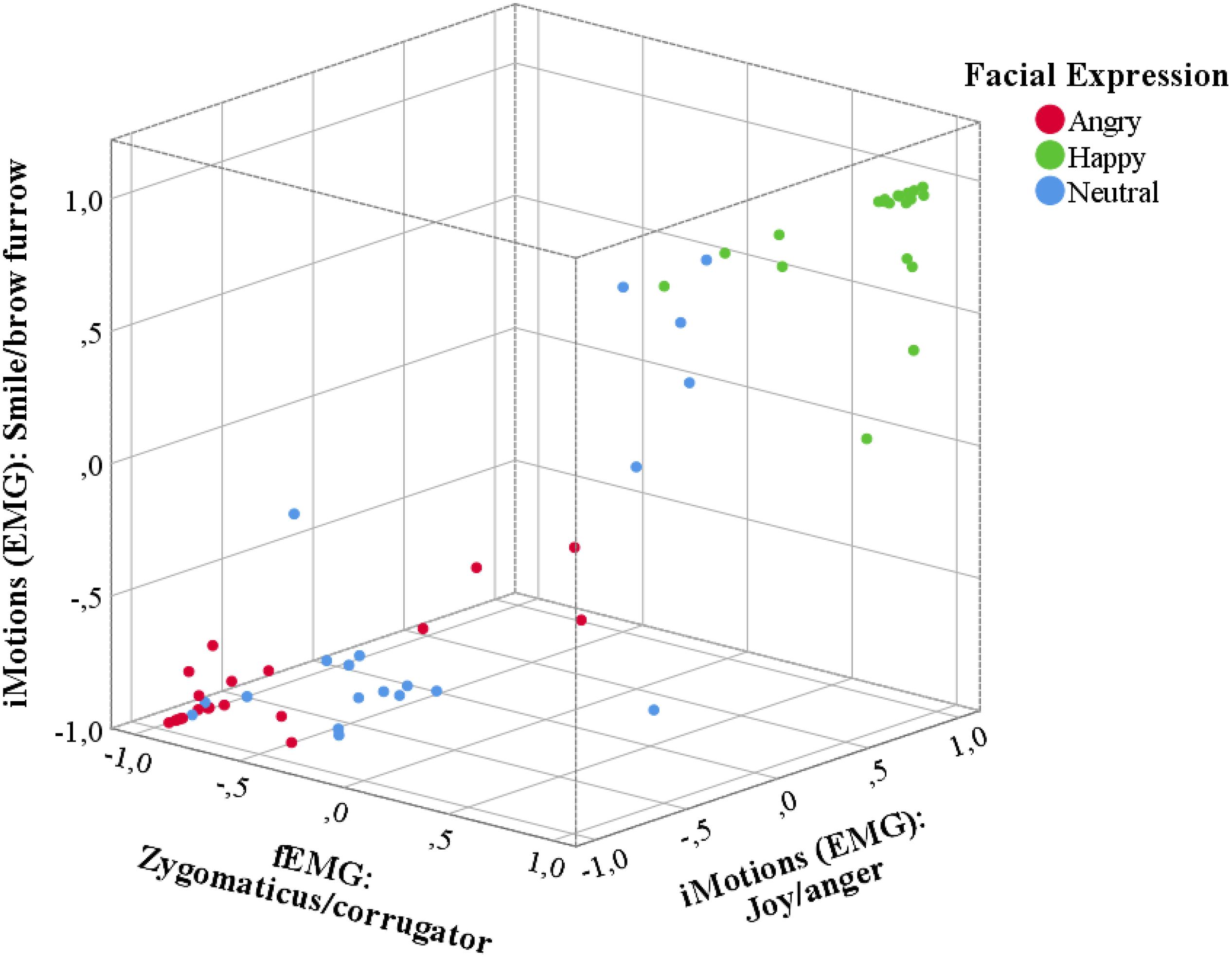

Correlations between the Affectiva values and the EMG difference score were computed, showing a significant correlation of the EMG measure with the difference score for Affectiva smile and brow furrow values, r(60) = 0.754, p < 0.001, and with the difference score for Affectiva joy and anger values, r(60) = 0.686, p < 0.001 (Figure 2).

Figure 2. Three-dimensional scatter plot of mean difference scores between the Affectiva scores for smile and brow furrow and between the scores for “joy” and “anger” during the EMG condition, as well as between the EMG amplitudes for zygomaticus major and corrugator supercilii activity. Note that the difference score is more negative (closer to −1) if the respective measure indicates a more negative (i.e., angry) expression and more positive (closer to 1) if the measure indicates a more positive (i.e., happy) expression. Red dots indicate difference scores in the angry condition, green dots in the happy condition and blue dots in the neutral condition.

One-sample t-tests showed that difference scores were still significantly positive in the happy condition for both the smile/brow furrow score, M = 0.88, CI = [0.77, 0.99], t(19) = 16.69, p < 0.001, and the joy/anger score, M = 1.00, CI = [1.00, 1.00], t(19) = 667.11, p < 0.001. They were significantly negative in the angry condition for both the smile/brow furrow score, M = −0.80, CI = [−0.94, −0.65], t(19) = −11.43, p < 0.001, and the joy/anger score, M = −0.62, CI = [−0.92, −0.32], t(19) = −4.36, p < 0.001. However, in the neutral condition the smile/brow furrow score now differed significantly from zero, M = −0.48, CI = [−0.82, −0.13], t(19) = −2.88, p = 0.005, whereas the joy/anger score did not, M = −0.17, CI = [−0.54, 0.20], t(19) = −0.96, p = 0.176. Differences in raw (absolute) values between conditions were investigated using dependent-samples t-tests, revealing the same pattern as for the Affectiva scores without simultaneous EMG recording (Table 3).

Table 3. Dependent-samples t-tests comparing the Affectiva scores during EMG testing between conditions.

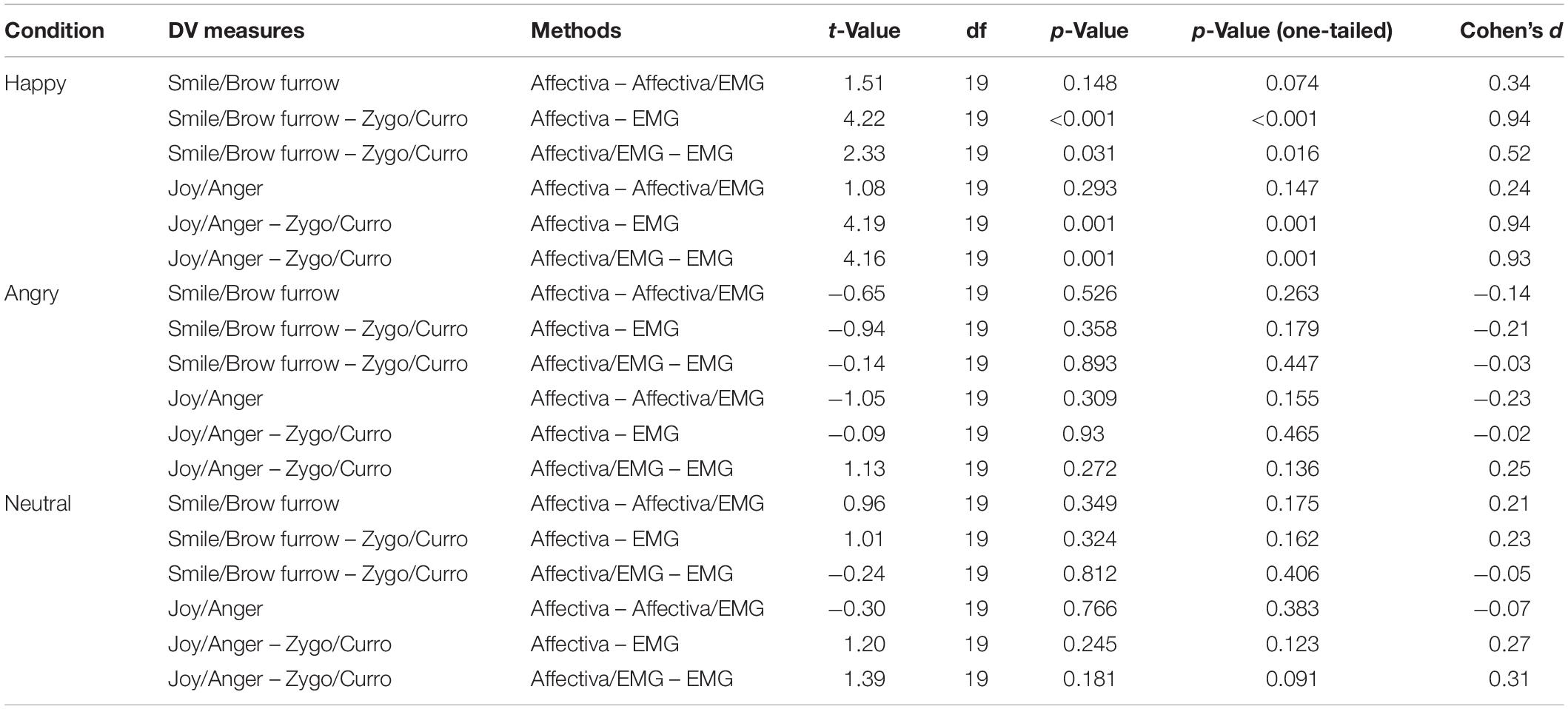

Finally, paired t-tests were computed to explore differences in mean difference scores between the three methods (Affectiva, Affectiva with attached EMG electrodes, and EMG amplitudes) within each of the three emotion conditions (happy, angry, neutral). These analyses revealed no significant differences between the DV scores of the two Affectiva methods (videos with and without attached EMG electrodes) in any of the three conditions. Affectiva DV scores (both for the measures “Smile” and “Brow furrow” and for the measures “Joy” and “Anger”) were significantly higher than the EMG DV scores in the happy condition, suggesting that Affectiva indicates a stronger positive expression than EMG. No differences between the measures were significant in the angry or neutral condition (Table 4).
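The method comparison amounts to paired t-tests between every pair of methods within one condition. A minimal sketch using `scipy.stats.ttest_rel`, assuming per-participant difference scores stored in the same participant order for each method; the dictionary layout, method labels and function name are hypothetical, and the tests are two-sided by default.

```python
import numpy as np
from scipy.stats import ttest_rel


def compare_methods(scores_by_method, condition):
    """Paired t-tests between every pair of methods within one condition.

    scores_by_method: {method: {condition: per-participant scores}}, with
    participants in the same order for every method.
    Returns {(method_a, method_b): (t, two-sided p)}.
    """
    names = list(scores_by_method)
    results = {}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            res = ttest_rel(np.asarray(scores_by_method[a][condition], dtype=float),
                            np.asarray(scores_by_method[b][condition], dtype=float))
            results[(a, b)] = (float(res.statistic), float(res.pvalue))
    return results
```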

Table 4. Comparison of mean difference scores between the three different methods (Affectiva, Affectiva with attached EMG electrodes and EMG amplitudes) within each of the three emotion conditions (happy, angry, neutral).

Discussion

The current study aimed to compare the Affectiva software with EMG with respect to identifying the imitated emotions happiness and anger compared to neutral expressions. We expected Affectiva scores to be comparable with EMG measures. In line with our hypotheses, there was a significant correlation between EMG and Affectiva measures. Difference scores were significantly positive in the happy condition for all outcome measures (Affectiva joy/anger and smile/brow furrow scores and EMG zygomaticus major/corrugator supercilii scores) and negative in the angry condition. Only in the neutral condition were EMG scores significantly negative, indicating more corrugator supercilii than zygomaticus major activity. Contrasts between conditions (happy, angry, neutral) in the raw scores confirmed the expected findings: for each measure, the emotion it targets scored significantly higher than both other emotions, which in turn did not differ significantly from one another, as both scored generally low. The only exception was that the zygomaticus major amplitude was also higher in the angry than in the neutral condition.

In addition, we explored whether Affectiva is still applicable when participants are wearing electrodes. Correlations with EMG scores were again very high. Happy expressions received significantly positive scores and angry expressions negative scores. However, as for the EMG measures, neutral expressions now received negative scores, in this case on the smile/brow furrow measure.

In summary, both EMG and the Affectiva software correctly identified happy and angry emotions imitated by participants and differentiated them from neutral faces. High correlations show that both methods are generally comparable. Furthermore, our exploratory analysis demonstrated that the Affectiva software can still be used on videos of participants wearing electrodes for facial EMG recording. However, compared to videos in which participants were not wearing electrodes, the software was now less accurate, classifying neutral facial expressions as negative. A previous study correlating FaceReader scores with facial EMG (fEMG) also suggested a tendency of the software to recognize neutral faces as negative, in this case as “sad” (Suhr, 2017). Note, however, that in the current study both EMG and the Affectiva software classified the neutral expression as negative during the EMG session. Therefore, an alternative explanation is that participants may have displayed more negative facial expressions in the EMG condition, leading to negative scores in the neutral condition for both EMG and Affectiva. Because the same participants completed all conditions, inherent features of their faces cannot explain this effect. Furthermore, the order of blocks with and without EMG was counterbalanced, ruling out an order effect. Possibly, the electrodes, although applied according to standard procedures, affect facial expressions, leading to the observed differences. For example, electrodes placed on the cheeks might cause additional tension in the face. In this case, the Affectiva software applied to videos without electrodes would provide more reliable values than EMG.

The current study focused on the most commonly researched emotions, happiness and anger, by investigating zygomaticus major and corrugator supercilii responses. Future research could explore other emotions. Furthermore, the current study explicitly instructed participants to imitate emotional expressions. Therefore, the displayed emotions may have been particularly intense (highest intensity level on the Facial Action Coding System). However, Stöckli et al. (2018) found the Affectiva software to be less precise in identifying emotional expressions produced in natural contexts, which were more subtle, than expressions produced when participants were explicitly asked to display emotions. In contrast, EMG is highly sensitive and can therefore also detect very subtle or implicit displays of emotion. Additional research could explore implicit expressions of emotion in response to stimuli to investigate how well Affectiva recognizes more subtle, realistic emotional expressions compared to EMG. It should also be noted that, because participants were asked to imitate emotional expressions, they may not have experienced these emotions but merely acted them out. This method was chosen because previous research suggests that participants find it easier to display emotional expressions when they see them (Korb et al., 2010; Otte et al., 2011). In addition, the use of KDEF stimuli ensured that the expressions in the current study are related to a stimulus set that is often used in research. However, posed facial expressions may differ from spontaneously and naturally elicited expressions of emotion and may even be independent of the emotions that a person actually feels (Fridlund, 2014). The posed expressions from the validated KDEF database may furthermore differ from the expressions that each individual participant would display when expressing a specific emotion.
Therefore, more natural displays of emotion should be used in future investigations. One option is to ask participants to display a specific emotion on their own, although such self-generated expressions may be less pronounced than expressions produced while viewing the corresponding emotional face (Korb et al., 2010; Otte et al., 2011). Alternatively, emotions could be elicited naturally, for example by presenting emotion-inducing videos. This manipulation may lead to more natural, though less controlled, facial displays of emotion.

Participants in the current study were asked to keep their head in a specific location during the recording. As people tend to keep a constant distance from computer screens, this behavior is fairly natural in the lab. However, if more realistic scenarios are studied in the real world, head position may play a role. The temporal resolution of EMG can be very high, whereas that of video-based software is limited by the frame rate of the camera used. In the current study, a sampling rate of 60 Hz was sufficient to measure emotional displays.

In conclusion, the current study showed that the Affectiva software can detect the emotions “happy” and “angry” from faces and distinguish them from neutral expressions. Its values also correlate significantly with EMG measures, suggesting that both methods are comparable.

Data Availability Statement

All datasets generated for this study are included in the article/Supplementary Material.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethics Committee of the Institute of Psychology at the University of Göttingen. The participants provided their written informed consent to participate in this study.

Author Contributions

LK was involved in the conception and design of the work, supervising the acquisition and data analysis, interpreting the data, drafting the manuscript, and revising it critically for important intellectual content. DF was involved in the design of the work, the acquisition, analysis and interpretation of the data, and revising the manuscript. AS was involved in the conception and design of the work, supervising the data analysis, interpreting the data, and revising the manuscript critically for important intellectual content.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Hannah Gessler for her help in preparing the stimuli and setup, and Fabian Bockhop for assistance during the data collection.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2020.00329/full#supplementary-material

Footnotes

- Note that the term “joy” is used to describe happy facial expressions in the Affectiva software. However, in definitions used for research purposes, joy may describe a more general feeling, while the term “happy” is more often used to describe facial expressions related to basic emotions.

References

Achaibou, A., Pourtois, G., Schwartz, S., and Vuilleumier, P. (2008). Simultaneous recording of EEG and facial muscle reactions during spontaneous emotional mimicry. Neuropsychologia 46, 1104–1113. doi: 10.1016/j.neuropsychologia.2007.10.019

Bartlett, M., Littlewort, G., Wu, T., and Movellan, J. (2008). “Computer expression recognition toolbox,” in Proceedings of the 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition, Amsterdam.

Bayer, M., Sommer, W., and Schacht, A. (2010). Reading emotional words within sentences: the impact of arousal and valence on event-related potentials. Int. J. Psychophysiol. 78, 299–307. doi: 10.1016/j.ijpsycho.2010.09.004

Beringer, M., Spohn, F., Hildebrandt, A., Wacker, J., and Recio, G. (2019). Reliability and validity of machine vision for the assessment of facial expressions. Cogn. Syst. Res. 56, 119–132. doi: 10.1016/j.cogsys.2019.03.009

D’Arcey, J. T. (2013). Assessing the Validity of FaceReader using Facial EMG. Chico: California State University.

Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology 19, 643–647. doi: 10.1111/j.1469-8986.1982.tb02516.x

Dimberg, U., Andréasson, P., and Thunberg, M. (2011). Emotional empathy and facial reactions to facial expressions. J. Psychophysiol. 25, 26–31. doi: 10.1027/0269-8803/a000029

Dimberg, U., and Lundquist, L.-O. (1990). Gender differences in facial reactions to facial expressions. Biol. Psychol. 30, 151–159. doi: 10.1016/0301-0511(90)90024-q

Dimberg, U., and Petterson, M. (2000). Facial reactions to happy and angry facial expressions: evidence for right hemisphere dominance. Psychophysiology 37, 693–696. doi: 10.1111/1469-8986.3750693

Dimberg, U., and Söderkvist, S. (2011). The voluntary facial action technique: a method to test the facial feedback hypothesis. J. Nonverbal Behav. 35, 17–33. doi: 10.1007/s10919-010-0098-6

Dimberg, U., and Thunberg, M. (1998). Rapid facial reactions to emotional facial expressions. Scand. J. Psychol. 39, 39–45. doi: 10.1111/1467-9450.00054

Dimberg, U., and Thunberg, M. (2012). Empathy, emotional contagion, and rapid facial reactions to angry and happy facial expressions. Psych J. 1, 118–127. doi: 10.1002/pchj.4

Dimberg, U., Thunberg, M., and Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89. doi: 10.1111/1467-9280.00221

Dimberg, U., Thunberg, M., and Grunedal, S. (2002). Facial reactions to emotional stimuli: automatically controlled emotional responses. Cogn. Emot. 16, 449–471. doi: 10.1080/02699930143000356

Ekman, P. (1992). An argument for basic emotions. Cogn. Emot. 6, 169–200. doi: 10.1080/02699939208411068

Ekman, P. (1999). “Basic emotions,” in Handbook of Cognition and Emotion, eds T. Dalgleish and M. J. Power (Hoboken, NJ: John Wiley & Sons Ltd), 45–60.

Ekman, P., and Friesen, W. V. (1976). Measuring facial movement. Environ. Psychol. Nonverbal Behav. 1, 56–75. doi: 10.1007/bf01115465

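Ellsworth, P. C., and Scherer, K. R. (2003). “Appraisal processes in emotion,” in Handbook of Affective Sciences, eds R. J. Davidson, K. R. Scherer, and H. H. Goldsmith (Oxford: Oxford University Press), 572–595.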

Eyevis (2013). EmoVu Emotion Recognition Software. Available at: http://www.discoversdk.com/products/eyeris-emovu-sdk (accessed February 17, 2020).

Fridlund, A. J. (2014). Human Facial Expression: An Evolutionary View. Cambridge, MA: Academic Press.

Fridlund, A. J., and Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology 23, 567–589. doi: 10.1111/j.1469-8986.1986.tb00676.x

Hofman, D., Bos, P. A., Schutter, D. J., and van Honk, J. (2012). Fairness modulates non-conscious facial mimicry in women. Proc. R. Soc. Lond. B Biol. Sci. 279, 3535–3539. doi: 10.1098/rspb.2012.0694

iMotions (2013). Facet iMotions Biometric Research Platform. Available at: https://imotions.com/emotient/ (accessed August 21, 2019).

iMotions (2015). Affectiva iMotions Biometric Research Platform. Available at: https://imotions.com/affectiva/ (accessed August 21, 2019).

Korb, S., Grandjean, D., and Scherer, K. R. (2010). Timing and voluntary suppression of facial mimicry to smiling faces in a Go/NoGo task—An EMG study. Biol. Psychol. 85, 347–349. doi: 10.1016/j.biopsycho.2010.07.012

Kret, M. E., Stekelenburg, J. J., Roelofs, K., and De Gelder, B. (2013). Perception of face and body expressions using electromyography, pupillometry and gaze measures. Front. Psychol. 4:28. doi: 10.3389/fpsyg.2013.00028

Künecke, J., Sommer, W., Schacht, A., and Palazova, M. (2015). Embodied simulation of emotional valence: facial muscle responses to abstract and concrete words. Psychophysiology 52, 1590–1598. doi: 10.1111/psyp.12555

Larsen, J. T., Norris, C. J., and Cacioppo, J. T. (2003). Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology 40, 776–785. doi: 10.1111/1469-8986.00078

Lundqvist, D., Flykt, A., and Öhman, A. (1998). The Karolinska directed emotional faces (KDEF). Stockholm: Karolinska Institutet.

Otte, E., Habel, U., Schulte-Rüther, M., Konrad, K., and Koch, I. (2011). Interference in simultaneously perceiving and producing facial expressions—Evidence from electromyography. Neuropsychologia 49, 124–130. doi: 10.1016/j.neuropsychologia.2010.11.005

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., et al. (2019). PsychoPy2: experiments in behavior made easy. Behav. Res. Methods 51, 195–203. doi: 10.3758/s13428-018-01193-y

Schacht, A., Dimigen, O., and Sommer, W. (2010). Emotions in cognitive conflicts are not aversive but are task specific. Cogn. Affect. Behav. Neurosci. 10, 349–356. doi: 10.3758/cabn.10.3.349

Schacht, A., Nigbur, R., and Sommer, W. (2009). Emotions in go/nogo conflicts. Psychol. Res. PRPF 73, 843–856. doi: 10.1007/s00426-008-0192-0

Scherer, K. R. (1999). “Appraisal theory,” in Handbook of Cognition and Emotion, eds T. Dalgleish and M. J. Power (Hoboken, NJ: John Wiley & Sons Ltd), 637–663.

Scherer, K. R., Schorr, A., and Johnstone, T. (2001). Appraisal Processes in Emotion: Theory, Methods, Research. Oxford: Oxford University Press.

Stöckli, S., Schulte-Mecklenbeck, M., Borer, S., and Samson, A. C. (2018). Facial expression analysis with AFFDEX and FACET: a validation study. Behav. Res. Methods 50, 1446–1460. doi: 10.3758/s13428-017-0996-1

Suhr, Y. T. (2017). FaceReader, a Promising Instrument for Measuring Facial Emotion Expression? A Comparison to Facial Electromyography and Self-Reports. Ph.D. thesis, Utrecht University, Utrecht.

Taggart, R. W., Dressler, M., Kumar, P., Khan, S., and Coppola, J. F. (2016). “Determining emotions via facial expression analysis software,” in Proceedings of Student-Faculty Research Day, CSIS (New York, NY: Pace University).

Tan, J.-W., Walter, S., Scheck, A., Hrabal, D., Hoffmann, H., Kessler, H., et al. (2012). Repeatability of facial electromyography (EMG) activity over corrugator supercilii and zygomaticus major on differentiating various emotions. J. Ambient Intell. Humaniz. Comput. 3, 3–10. doi: 10.1007/s12652-011-0084-9

Noldus Information Technology (2007). FaceReader. Available at: http://noldus.com/human-behavior-research/products/facereader (accessed August 21, 2019).

Terzis, V., Moridis, C. N., and Economides, A. A. (2010). “Measuring instant emotions during a self-assessment test: the use of FaceReader,” in Proceedings of the 7th International Conference on Methods and Techniques in Behavioral Research, Eindhoven.

Keywords: facial expressions of emotion, automatic recognition, EMG, emotion recognition software, affectiva

Citation: Kulke L, Feyerabend D and Schacht A (2020) A Comparison of the Affectiva iMotions Facial Expression Analysis Software With EMG for Identifying Facial Expressions of Emotion. Front. Psychol. 11:329. doi: 10.3389/fpsyg.2020.00329

Received: 21 August 2019; Accepted: 11 February 2020;

Published: 28 February 2020.

Edited by:

Wenfeng Chen, Renmin University of China, China

Reviewed by:

Antje B. M. Gerdes, Universität Mannheim, Germany

Jacqueline Ann Rushby, University of New South Wales, Australia

Copyright © 2020 Kulke, Feyerabend and Schacht. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Louisa Kulke, lkulke@uni-goettingen.de
