1. Department of Psychiatry, University of Pennsylvania, Philadelphia, PA, United States

2. State of Hawaii Child and Adolescent Mental Health Division, Honolulu, HI, United States

3. City of Philadelphia Department of Behavioral Health and Intellectual disAbility Services, Philadelphia, PA, United States

4. American Psychological Association, Washington, DC, United States

Objective: Public-sector behavioral health systems seeking to implement evidence-based treatments (EBTs) may face challenges selecting EBTs given their limited resources. This study describes and illustrates one method to calculate cost related to training and consultation to assist system-level decisions about which EBTs to select.

Methods: Training, consultation, and indirect labor costs were calculated for seven commonly implemented EBTs. Using extant literature, we then estimated the diagnoses and populations for which each EBT was indicated. Diagnostic and demographic information from Medicaid claims data were obtained from a large behavioral health payer organization and used to estimate the number of covered people with whom the EBT could be used and to calculate implementation-associated costs per consumer.

Results: Findings suggest substantial cost to therapists and service systems related to EBT training and consultation. Training and consultation costs varied by EBT, from Dialectical Behavior Therapy at $238.07 to Cognitive Behavioral Therapy at $0.18 per potential consumer served. Total cost did not correspond with the number of prospective consumers served by an EBT.

Conclusion: A cost metric that accounts for the prospective recipients of a given EBT within a given population may provide insight into how systems should prioritize training efforts. Future policy should consider the financial burden of EBT implementation in relation to the population being served and begin a dialogue about creating incentives for EBT use.

Introduction

In recent years, many efforts to improve mental health have focused on increasing the use of evidence-based treatments (EBTs) within public-sector service systems. Therapist training is a necessary, but not sufficient, implementation strategy to increase EBT use (1). For public-sector service systems, large-scale training of therapists is often the first or only EBT implementation strategy. A combination of experiential and active learning (e.g., didactic and case consultation) tends to produce the most favorable therapist behavior change over time (2, 3). As a result, many EBT developers and certifying organizations now require that therapists receive both didactic foundational training and ongoing case consultation to be “certified” in an EBT (e.g., PCIT International; see footnote 1). Training and consultation require an investment on the part of therapists, their agencies, and, especially in publicly funded systems, the city or state agency that oversees payment for care. For example, therapists and organizations may incur initial direct costs when therapists attend week-long trainings to first learn the EBT and then participate in weekly consultation calls for 6–12 months to ensure treatment fidelity. The therapist time required to participate often results in substantial cost to the agencies for which they work (4–8). For example, Lang and Connell (6) estimated that an agency participating in a Trauma Focused-Cognitive Behavioral Therapy learning collaborative, which included agency-wide training and ongoing consultation, spent $89,575 in direct (e.g., training) and indirect (e.g., preparation hours) costs.

While public-sector service systems have typically used other strategies to select EBTs, such as stakeholder feedback in combination with federal and/or state policy (9, 10), the breadth of the population served and the associated costs should also be important drivers of choice. Utilizing existing service system data is important for strategic decision-making and implementation tailored to the population (11). Systems need information about the population served to decide where to invest their limited resources; in particular, they need to understand the extent to which an EBT provides diagnostic and demographic “coverage” within a service system (9). Costs associated with EBTs are often noted as significant barriers to implementation (12–14), yet the cost-analysis metrics used to study implementation thus far have not considered population coverage relative to implementation cost (4–8). A metric that considers the number of potential consumers served allows for population-based and data-informed decisions when selecting an EBT. This metric can also inform cost-evaluative decisions about how applicable an EBT will be to the consumers within the service system.

In this study, we introduce a strategy for calculating a cost per prospective consumer metric to determine the extent to which an EBT covers a given service system. Generating this metric requires population data derived from the existing service system; within behavioral health, insurance claims (15) or practice-monitoring data tied to billing (9, 16) have predominantly been used. These large person-period datasets typically contain information regarding consumer age, gender, diagnoses, service utilization, and medication prescribed. This study was conducted to demonstrate the impact of therapist training and consultation costs in a large public behavioral health system and to describe a complementary metric for system decision-making when selecting EBTs for the population served. First, training and consultation requirements for certification among seven EBTs were documented. Next, training, consultation, and indirect labor costs for each EBT were calculated. Finally, the total cost of training, consultation, and indirect labor for each EBT was divided across the number of potential consumers based on diagnostic and demographic information.

Materials and Methods

Evidence-Based Treatments

Identification of EBT

We identified EBTs for this study using registries created by the American Psychological Association (17), which rely on Chambless and Hollon (18) definitions of EBT. The APA’s Division 12 (Society for Clinical Psychology; see footnote 2) and Division 53 (Society for Child and Adolescent Clinical Psychology; see footnote 3) websites were consulted to determine EBTs that fit the criteria of having (a) an in-person training, (b) an ongoing consultation period, and (c) a certifying body through which therapists can become “certified” in the particular EBT. Seven EBTs were identified through these websites: (a) Cognitive Behavioral Therapy/Cognitive Therapy (19), (b) Cognitive Processing Therapy (20), (c) Dialectical Behavior Therapy (21), (d) Parent–Child Interaction Therapy (22, 23), (e) Prolonged Exposure (24, 25), (f) Modular Approach to Therapy for Children with Anxiety, Depression, Trauma, and Conduct Problems (26), and (g) Trauma Focused-Cognitive Behavioral Therapy (27).

Training and Consultation Cost

The cost of training and consultation was determined using information from the certifying body for each EBT (see Table 1 for certifying bodies for each EBT). First, the certifying body’s website was referenced for certification requirements, upcoming trainings, and costs associated with training, consultation, and certification. When prices were not listed, we contacted the certifying body to solicit current prices and requirements for training and consultation to obtain certification. Revenue loss was defined as the total number of therapist hours spent in training and consultation rather than providing therapy (i.e., billable hours), valued at the therapist hourly wage. The hourly wage for therapists, as determined by the US Bureau of Labor Statistics for Philadelphia (see footnote 4), was set at $38.37 per hour.
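As a minimal sketch of the indirect-cost step, revenue loss can be computed as the hours spent away from billable work multiplied by the reported hourly wage of $38.37. The function name and the example hours are illustrative assumptions, not values taken from Table 2.

```python
from decimal import Decimal

# Hourly therapist wage reported in the text (US Bureau of Labor Statistics, Philadelphia).
HOURLY_WAGE = Decimal("38.37")


def revenue_loss(training_hours, consultation_hours, wage=HOURLY_WAGE):
    """Return the wage value of hours spent in training and consultation.

    Hypothetical helper: hours are summed and multiplied by the hourly wage,
    then rounded to cents.
    """
    hours = Decimal(str(training_hours)) + Decimal(str(consultation_hours))
    return (hours * wage).quantize(Decimal("0.01"))


# Illustrative input only: a 40 h training plus 10 h of consultation.
example_loss = revenue_loss(40, 10)
print(example_loss)
```

Decimal arithmetic is used here so that dollar amounts are not affected by binary floating-point rounding.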

Diagnostic and Age Applicability

To determine the population to which an EBT was applicable, diagnostic and age profiles were created for each EBT. We referenced APA’s Divisions 12 and 53 websites, the credentialing body’s website, and PracticeWise Evidence-based Services Database (28) to identify the studies used to establish each EBT’s efficacy. For example, Division 12’s website lists DBT as having Strong Research Support for Borderline Personality Disorder (see footnote 5), with six efficacy trials used to determine that status. The Division 12 website also lists Strong Research Support for CBT/CT for Attention-Deficit/Hyperactivity Disorder, Insomnia, Binge Eating Disorder, Bipolar Disorders, Bulimia Nervosa, Depressive Disorders, Generalized Anxiety Disorder, Obsessive Compulsive Disorder, Social Phobia, Panic Disorder, and Schizophrenia; CPT for Posttraumatic Stress Disorder; and PE for Posttraumatic Stress Disorder. Efficacy trials for PCIT, MATCH, and TF-CBT were identified through comprehensive literature reviews cited by Division 53 (29–31) and the credentialing body’s website (PCIT International, PracticeWise, and TF-CBT National Therapist Certification Program, respectively).

Efficacy trials were coded by two independent raters (Kelsie H. Okamura and Courtney L. Benjamin Wolk) for the diagnosis and age range used within each trial. Coders met regularly to resolve discrepancies, using clinical judgment and the conservative criterion of including only diagnoses that the EBT was intended to treat. Specifically for youth CBT, the PracticeWise Evidence-based Services Database (32, 33), a searchable database synthesizing more than 800 treatment studies for youth with psychiatric disorders, was referenced to determine a CBT youth diagnostic and age profile. The database was searched for CBT trials to identify diagnoses and age ranges that met well-established criteria proposed by Chambless and Hollon (18).

Population-Based Data Source and Study Sample

Philadelphia County behavioral health Medicaid claims (N = 903,980) were used to identify a subset of consumers (N = 60,391) who received outpatient behavioral health services during November 2015 through October 2016. This 1-year time period was chosen because of the shift from ICD-9 and DSM-IV-TR diagnoses to ICD-10 and DSM-5 diagnoses. De-identified claims included age at the first claim, sex, race, psychiatric diagnosis, and behavioral health service use. Behavioral health services were categorized based on level of care codes and only claims reflective of outpatient therapy services were retained (i.e., assessment and medication management codes were excluded). The final sample included the consumers with two or more outpatient claims aggregated by ICD-10 diagnosis. Consumers may have been counted more than once across but not within ICD-10 diagnoses. This allowed for more consumer coverage and the ability to account for multiple psychiatric diagnoses. The University of Pennsylvania and the City of Philadelphia Department of Public Health Institutional Review Boards determined that this study was exempt from review due to the masking of identifiable information.

The final study sample included 897,064 claims representing 53,475 unique consumers. There were 6,916 duplicate consumers removed from analyses due to multiple claims being submitted for the same consumer for more than one diagnosis. In instances of multiple claims, the first claim per consumer was retained. Consumers were 53.4% female (n = 34,507) and averaged 29.91 (SD = 17.99) years of age. Race included African-American (42.7%, n = 27,573), Hispanic (37.8%, n = 24,339), White (15.6%, n = 10,061), and Other (3.9%, n = 2,531).
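The sample selection and profile matching described above could be sketched roughly as follows. This is only an illustration under stated assumptions: the `Claim` and `Profile` field names, the `"outpatient_therapy"` service label, the toy records, and the example age range are all hypothetical and do not reflect the actual claims schema or the profiles in Table 3. The sketch also stores age on every claim, whereas the study used age at the first claim.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    consumer_id: str  # de-identified consumer key (hypothetical field)
    age: int          # consumer age (simplification of age at first claim)
    icd10: str        # ICD-10 diagnosis code on the claim
    service: str      # level-of-care category (hypothetical labels)


@dataclass(frozen=True)
class Profile:
    min_age: int
    max_age: int
    icd10_prefixes: tuple  # diagnosis code prefixes the EBT is indicated for


def count_prospective_consumers(claims, profile, min_claims=2):
    """Count unique consumers who match an EBT's diagnostic and age profile."""
    # Keep only outpatient therapy claims (assessment, medication management excluded).
    therapy = [c for c in claims if c.service == "outpatient_therapy"]
    # Count claims per consumer within each diagnosis.
    pair_counts = Counter((c.consumer_id, c.icd10) for c in therapy)
    matched = set()
    for c in therapy:
        if pair_counts[(c.consumer_id, c.icd10)] < min_claims:
            continue  # require two or more claims for this diagnosis
        if not (profile.min_age <= c.age <= profile.max_age):
            continue
        if c.icd10.startswith(profile.icd10_prefixes):
            matched.add(c.consumer_id)  # a set counts each consumer once
    return len(matched)


# Toy data, invented for illustration only.
toy_claims = [
    Claim("A", 30, "F60.3", "outpatient_therapy"),
    Claim("A", 30, "F60.3", "outpatient_therapy"),
    Claim("A", 30, "F43.10", "outpatient_therapy"),
    Claim("A", 30, "F43.10", "outpatient_therapy"),
    Claim("B", 25, "F60.3", "outpatient_therapy"),     # only one claim
    Claim("C", 15, "F60.3", "outpatient_therapy"),
    Claim("C", 15, "F60.3", "outpatient_therapy"),     # outside example age range
    Claim("D", 40, "F60.3", "medication_management"),
    Claim("D", 40, "F60.3", "medication_management"),  # excluded service type
]
example_profile = Profile(min_age=18, max_age=120, icd10_prefixes=("F60.3",))
print(count_prospective_consumers(toy_claims, example_profile))
```

In this sketch a consumer can contribute to more than one diagnosis, but is counted only once within a given EBT profile.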

Cost-Analysis Metric

The costs of therapist training, consultation, certification, and revenue loss were summed to calculate a total training and consultation cost for each EBT. This total was then divided by the number of consumers within Philadelphia County Medicaid claims who matched the EBT diagnostic and age profile. This formula resulted in an EBT training and consultation cost per potential consumer:

$$\text{cost per potential consumer} = \frac{\text{training} + \text{consultation} + \text{certification} + \text{revenue loss}}{\text{number of consumers matching the EBT diagnostic and age profile}}$$

Results

Training and Consultation Requirements

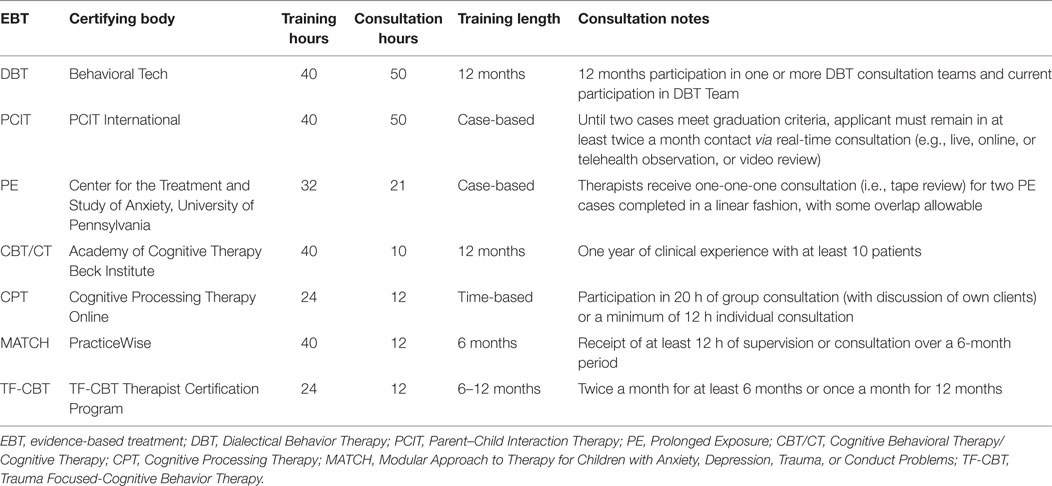

Certifying bodies, training hours, consultation hours, training length, and specific criteria related to consultation are detailed in Table 1. Across EBTs, 2–5 days of in-person training were required for certification. TF-CBT and CPT both required online training in addition to the in-person training. Trainings were provided by certified trainers in each respective EBT, identified by the certifying body. Regarding consultation, DBT and PCIT required the most ongoing consultation (i.e., bimonthly contact for approximately a year), whereas CBT/CT required fewer hours (i.e., 1 year of clinical experience with 10 h of consultation). Live feedback in the form of tape review or telehealth observation was included in the consultation descriptions for PCIT and PE. Consultation hours typically spanned 6–12 months. MATCH and TF-CBT gave the option of meeting twice per month for 6 months or once per month for 12 months. PCIT and PE consultation were based on completion of two cases rather than a set time frame. CPT was similar in that it required 20 h of group or 12 h of individual consultation. Consultation was provided by a certified supervisor identified by the certifying body.

Training and Consultation Cost

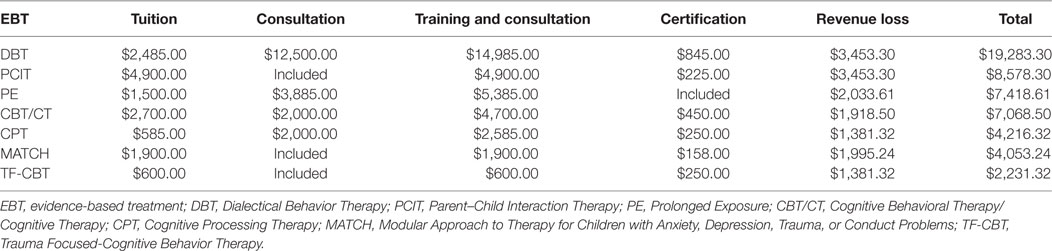

Training, consultation, certification, and revenue loss costs were summed to form the total cost shown in Table 2. EBTs are rank-ordered by total cost, from DBT (most expensive) to TF-CBT (least expensive). Training costs ranged from $585 for CPT to $4,900 for PCIT per therapist; however, the PCIT training cost includes consultation. MATCH and TF-CBT likewise included the cost of consultation in their training cost. Stand-alone consultation prices ranged from $2,000 to $12,500 and were charged as a set rate (i.e., $2,000 for CBT/CT consultation), a per-session rate (i.e., $185 for PE), or an hourly rate (i.e., $250 per hour for DBT, $200 per hour for CPT).

Cost per Prospective Consumer

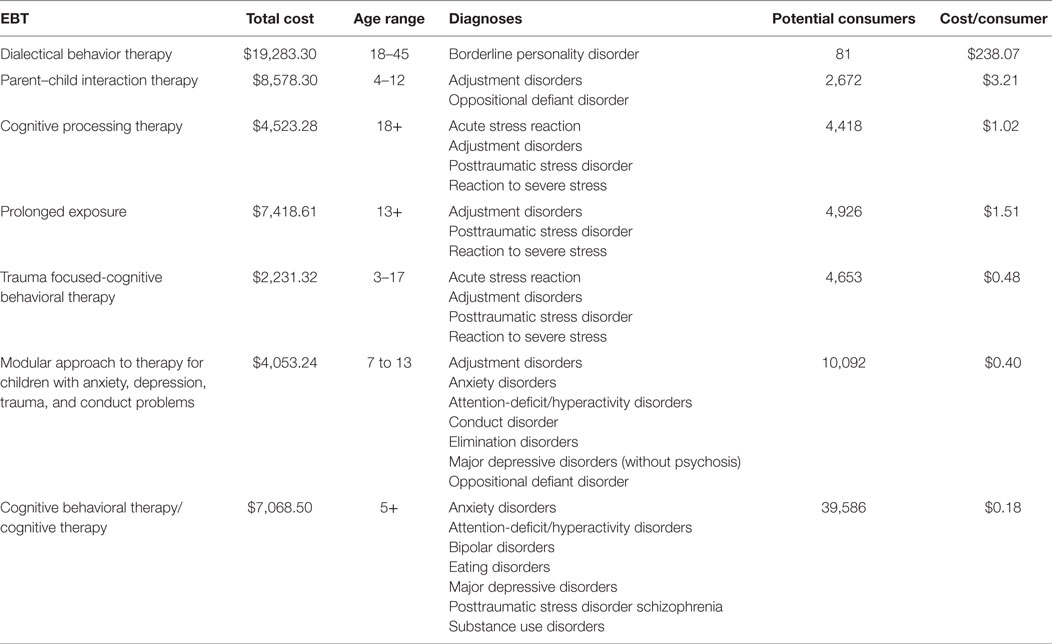

Prospective consumer costs were calculated by summing the costs of training, consultation, certification, and revenue loss, and dividing that total by the number of unique consumers fitting each EBT diagnostic and age profile. Table 3 details the total cost, age range in years, diagnoses, number of unique consumers fitting the diagnostic and age profile, and cost per consumer (total cost/consumers); it is ordered by cost per prospective consumer (most to least expensive). Cognitive Behavioral Therapy/Cognitive Therapy was the least expensive per consumer ($0.18) and covered the most prospective consumers (n = 39,586). In contrast, DBT was the most expensive per consumer ($238.07) and covered the fewest prospective consumers (n = 81).
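The per-consumer calculation can be illustrated with a short sketch. It uses the DBT figures reported in the text: the highest total cost of $19,283.30 (DBT being the most expensive EBT) and 81 prospective consumers. The function name is a hypothetical choice, and rounding half up to cents is an assumption about how the published values were rounded.

```python
from decimal import Decimal, ROUND_HALF_UP


def cost_per_prospective_consumer(total_cost, n_consumers):
    """Divide an EBT's total training and consultation cost by the number
    of consumers who fit its diagnostic and age profile."""
    if n_consumers <= 0:
        raise ValueError("n_consumers must be positive")
    per_consumer = Decimal(str(total_cost)) / Decimal(n_consumers)
    return per_consumer.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# DBT figures as reported in the text.
dbt_cost = cost_per_prospective_consumer("19283.30", 81)
print(dbt_cost)  # 238.07
```

Because the denominator comes from the system's own claims, the same total cost yields a different per-consumer figure in a system with a different diagnostic mix.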

Discussion

The goal of this study was to develop a cost-analysis metric around the specific implementation strategy of EBT training and consultation while considering the population being served. This is particularly important given the financial pressures that large behavioral health service systems face to effectively implement EBTs and manage taxpayer dollars and costs to the system, agencies, therapists, and consumers. Our study examined seven common EBTs, compared their training and consultation hours and prices, and calculated per prospective consumer costs in a large behavioral health system. Training and consultation requirements and costs varied widely across EBTs. Training and consultation costs ranged from $600 to $14,985 per therapist, and when considering certification fees and revenue loss from time spent in training rather than serving consumers, total costs ranged from $2,231.32 to $19,283.30. This represents a substantial investment for therapists, organizations, and systems. For some EBTs, consultation, which is often emphasized as an important implementation strategy (2), emerged as the most time-consuming and costly aspect. Total cost did not correspond with the number of prospective consumers served by an EBT in our current behavioral health system sample. That is, the most expensive EBTs were not those from which the most prospective consumers would benefit. This cost-analysis metric, which draws on behavioral health outpatient claims to count prospective consumers, appears to be a useful tool for large systems choosing among EBTs.

The costliest EBT to train (i.e., DBT) covered the fewest consumers in the system, likely because few consumers had a borderline personality disorder diagnosis. It is important to reiterate here that we used conservative diagnostic criteria for classifying which disorders a treatment was evidence-based for, and as such, may have excluded groups of consumers that may benefit from DBT (e.g., youth with suicidal ideation). We discuss this more in our limitations section as well as the cost-savings of having such a specialized EBT within a behavioral health system. Some of the less expensive EBTs provided greater consumer coverage. Systems considering which EBTs to invest in may wish to consider a tiered approach. That is, begin with (a) a generalist EBT (i.e., CBT/CT and MATCH) and then consider adding on (b) trauma focused (i.e., TF-CBT, PE, or CPT), and (c) other specialty EBT (i.e., PCIT and DBT) depending on the prospective consumers served. The proposed cost-analysis metric may be particularly useful for systems seeking to understand the financial impact of specialty EBT (34). While most costly in our study, if a specialty EBT like DBT aligns well with system priorities, such as reducing inpatient hospitalization rates, residential treatment utilization, or other out of home placement, it may make the additional investment worthwhile. Furthermore, it may be beneficial for systems to create a ratio of therapists trained to prospective consumers served to inform future training efforts. This tiered approach also has implications for research which is beginning to suggest that attitudes (35) and knowledge (36) vary by practices and EBT, suggesting that our field’s conceptualization of EBT as all-encompassing may be misguided. Moreover, treatment developers may wish to consider building modularity and tiered decision-making into interventions to increase applicability to a broader range of consumers. 
A tiered approach to choosing and conceptualizing EBT may facilitate decisions about which EBTs to compare and study within effectiveness and implementation studies (e.g., comparing two generalist type EBTs rather than a specialty EBT and generalist EBT).

In-person didactic training and ongoing consultation were required across all seven EBTs for certification. The typical time period for in-person training was 1 week (40 h); however, CPT and TF-CBT required only 2 days (24 h) of in-person training with completion of an additional online course as a prerequisite for certification. Reviews of empirical studies on training have concluded that didactic training alone does not produce change in therapist behavior and should be combined with ongoing feedback and consultation (2, 3). However, the literature does not make clear the extent to which didactic trainings need to be delivered in person or the number of training hours required to attain competency. Our findings suggest an emerging standard of 40 h for didactic training. From a system perspective, taking cohorts of service-delivering therapists offline for a week may be perceived as both costly and detrimental to consumers receiving services. However, if multiple systems begin to adopt this convention of training and consultation as requirements for employment and credentialing, and enhance outpatient rates to absorb some of those costs, these requirements may become more acceptable and feasible to provider agencies.

Ongoing consultation requirements also place considerable demand on the therapist and system. In this study, consultation requirements were observed to vary even more than didactic training requirements. For example, CPT, MATCH, and TF-CBT required 12 h of supervision across varying time frames (e.g., 6–12 months, see Table 1), whereas DBT and PCIT required a year of ongoing consultation with bimonthly attendance. Research has suggested that the purpose of ongoing consultation is to give the trainee the opportunity to apply the skills learned in didactics with sufficient supervision and support (37, 38). Typically, consultation entails ongoing case review, which may or may not take the form of reviewing session recordings or live feedback. Indeed, only PCIT and PE included live or taped feedback as a part of their consultation model. As with didactic training, the frequency and depth of consultation needed to fully achieve competency have not been established, and this may impact cost. For example, consultation with review of session recordings is more time-consuming than case-based discussion. Furthermore, research on training and sustainability has noted that even when therapists are comprehensively trained and supervised in an EBT, they do not use it frequently in their practice (2). Determining the optimal duration and format for didactic training and consultation should be an implementation science priority. For public-sector service systems, there are likely many considerations when deciding which EBT(s) to invest in, including time, cost, policy, and population-based characteristics. For example, should a service system first choose an EBT that requires less training and consultation (e.g., MATCH) over one that requires a longer training and consultation time frame (e.g., DBT) to increase EBT capacity quickly? The answer to this question is beyond the scope of this study. However, initial findings suggest that the variation between EBTs is substantial enough to warrant further attention.

The results of this study should be considered within the context of several limitations. First, our study used administrative Medicaid claims data, which may not be reflective of the entire service-seeking population (e.g., consumers covered by private insurance) or of population prevalence within the community. Furthermore, several studies have suggested that Medicaid claims data may not be diagnostically accurate (39–41). However, studies have demonstrated that the agreement of Medicaid claims diagnoses with clinical data is around 85% (39, 40), suggesting that the inaccuracy of claims may be related to under-identification of disorders rather than inaccuracy of diagnosis. Also related to diagnosis, some of the efficacy trials that we coded to create age and diagnostic profiles included multiple psychiatric diagnoses, which may suggest that the corresponding EBT would be appropriate for both the intended and comorbid conditions. In these instances, we took a conservative approach and considered only the diagnoses that an EBT primarily targeted. For example, a trial of DBT for individuals with borderline personality disorder and co-occurring substance use was coded as effective for adults with a diagnosis of borderline personality disorder but not for individuals with a primary substance use disorder diagnosis. Future studies may wish to examine broader diagnostic categories (e.g., depressive disorders versus major depressive disorder) or behavioral codes (e.g., suicidality), which may represent a more inclusive approach. In addition, replication using national epidemiological data with standardized diagnostic assessments (e.g., 42, 43) would circumvent concerns about diagnostic accuracy and provide additional insight into the proportion of people nationally who might benefit from specific EBTs. It is also important to note that this cost-analysis metric, while not statistically or methodologically difficult to apply, does require some expertise in using claims data. Therefore, public-sector service systems will need administrators, analysts, or external research/evaluation partners to apply the cost-analysis metric to claims datasets.

We examined the costs associated with specific implementation strategies (i.e., training and consultation) without considering the effectiveness of the intervention itself (i.e., cost-effectiveness analysis, especially in the case of DBT). Raghavan (44) has noted that estimating implementation costs is different from cost-effectiveness as it is influenced by the entity (e.g., system, agency, and therapist) to which the cost is associated as well as the strategy, EBT, and setting (45). Our goal was to understand the direct and indirect costs at the population level that may be associated with the implementation strategy of EBT training and consultation in a large public behavioral health system. One important caveat was that training and consultation costs were calculated at the individual therapist level, which may not parallel costs for system-wide trainings in the community (46). Often, partnerships and contracts are executed to train and provide consultation for large cohorts of therapists within the system versus using a cost per therapist model (47). In addition, indirect costs were calculated based on therapist wage loss during training and consultation (and not revenue loss to the provider agency), without accounting for other contributing activities to sustaining the EBT including supervision, non-billable preparation hours, and travel time. Again, our focus was on the implementation strategy of training and consultation and is consistent with other studies that have evaluated a discrete amount of time as a part of the indirect implementation cost (44). Furthermore, Beidas et al. (12) have demonstrated that high turnover often affects the fiscal landscape of EBP implementation and our study did not account for loss on investment or the extent to which a therapist needed to stay within the system for a good return on investment. 
System policy makers, administrators, and researchers will need to collaboratively set standards for training requirements and cost and conduct cost-effectiveness studies that are linked to consumer outcomes.

Despite these limitations, this study proposes a methodology for considering which EBT to choose within a large behavioral health system. We propose a tiered approach to selecting EBTs, allowing our cost-analysis metric, stakeholder feedback, and system priorities to influence the selection. Our cost calculations may also serve as a basis for policy around incentivizing the use of EBTs (1), especially in the early stages of implementation, when the system and agency can expect a loss in revenue due to reduced therapist productivity. For example, Timmer and Urquiza (48) described a demonstration project in the Los Angeles County Department of Mental Health that reimbursed agencies for lost productivity hours during an initial training initiative. While some systems have mandated the use of EBTs (49, 50), few systems have begun to incentivize their use (e.g., Chester County, PA, USA; City of Philadelphia Department of Behavioral Health and Intellectual disAbility Services). Understanding the effectiveness of mandates and incentives in increasing therapist utilization and consumer receipt of EBTs, as well as in improving clinical outcomes, will be the next era of implementation research, and developing pragmatic cost-analysis metrics will enable large systems to make decisions about which EBT to adopt for whom. Moreover, developing methods and testing them within and across large systems of care will enhance implementation science and the generalizability of findings in health services research.

Author Contributions

KO was responsible for all aspects of this manuscript, from conceptualization to writing. CW and DM provided consultation in conceptualization and editing. CK-Y performed data analysis of Medicaid claims data. ZC provided consultation in health economics. RS, RB, RR, SW, and AE provided additional feedback and editing of the manuscript.

Conflict of Interest Statement

The authors declare no personal, professional, or financial relationships that could potentially be construed as a conflict of interest.

The reviewer AB and handling Editor declared their shared affiliation.

Funding

KO is a 2017 recipient of the Child Intervention, Prevention, and Services (CHIPS) Fellowship, funded through an award from the National Institute of Mental Health (5R25MH06836713) and a Robert Wood Johnson Foundation New Connections Scholar. CW is an investigator with the Implementation Research Institute (IRI), at the George Warren Brown School of Social Work, Washington University in St. Louis; funded through an award from the National Institute of Mental Health (5R25MH08091607) and the Department of Veterans Affairs, Health Services Research & Development Service, Quality Enhancement Research Initiative (QUERI). RS (F32MH103960) and RB (K23MH099179) receive research support through the National Institute of Mental Health. RB, ZC, DM, KO, and CW are fellows of the Leonard Davis Institute for Health Economics, University of Pennsylvania.

Footnotes

1. http://www.pcit.org/.

2. http://www.div12.org/psychological-treatments/.

3. http://effectivechildtherapy.org/.

4. https://www.bls.gov/bls/blswage.htm.

5. http://www.div12.org/psychological-treatments/disorders/borderline-personality-disorder/dialectical-behavior-therapy-for-borderline-personality-disorder/.

References

1. Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement Sci (2015) 10(1):21. doi:10.1186/s13012-015-0209-1

2. Beidas RS, Kendall PC. Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clin Psychol Sci Pract (2010) 17:1–30. doi:10.1111/j.1468-2850.2009.01187.x

3. Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: a review and critique with recommendations. Clin Psychol Rev (2010) 30(4):448–66. doi:10.1016/j.cpr.2010.02.005

4. Crome E, Shaw J, Baillie A. Costs and returns on training investment for empirically supported psychological interventions. Aust Health Rev (2017) 41(1):82–8. doi:10.1071/AH15129

5. Dopp AR, Hanson RF, Saunders BE, Dismuke CE, Moreland AD. Community-based implementation of trauma-focused interventions for youth: economic impact of the learning collaborative model. Psychol Serv (2017) 14(1):57–65. doi:10.1037/ser0000131

6. Lang JM, Connell CM. Measuring costs to community-based agencies for implementation of an evidence-based practice. J Behav Health Serv Res (2017) 44(1):122–34. doi:10.1007/s11414-016-9541-8

7. Roundfield KD, Lang JM. Costs to community mental health agencies to sustain an evidence-based practice. Psychiatr Serv (2017) 68:876–82. doi:10.1176/appi.ps.201600193

8. Saldana L, Chamberlain P, Bradford WD, Campbell M, Landsverk J. The cost of implementing new strategies (COINS): a method for mapping implementation resources using the stages of implementation completion. Child Youth Serv Rev (2014) 39:177–82. doi:10.1016/j.childyouth.2013.10.006

9. Chorpita BF, Bernstein A, Daleiden EL. Empirically guided coordination of multiple evidence-based treatments: an illustration of relevance mapping in children’s mental health services. J Consult Clin Psychol (2011) 79(4):470–80. doi:10.1037/a0023982

10. McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: a review of current efforts. Am Psychol (2010) 65(2):73–84. doi:10.1037/a0018121

11. Daleiden EL, Chorpita BF. From data to wisdom: quality improvement strategies supporting large-scale implementation of evidence-based services. Child Adolesc Psychiatr Clin N Am (2005) 14(2):329–49. doi:10.1016/j.chc.2004.11.002

12. Beidas RS, Stewart RE, Adams DR, Fernandez T, Lustbader S, Powell BJ, et al. A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Adm Policy Ment Health (2016) 43(6):893–908. doi:10.1007/s10488-015-0705-2

13. Honberg R, Kimball A, Diehl S, Usher L, Fitzpatrick M. State Mental Health Cuts: The Continuing Crisis. Arlington, VA: National Alliance on Mental Illness (2011).

14. Stewart RE, Adams DR, Mandell DS, Hadley TR, Evans AC, Rubin R, et al. The perfect storm: collision of the business of mental health and the implementation of evidence-based practices. Psychiatr Serv (2016) 67(2):159–61. doi:10.1176/appi.ps.201500392

15. Mandell DS, Listerud J, Levy SE, Pinto-Martin JA. Race differences in the age at diagnosis among Medicaid-eligible children with autism. J Am Acad Child Adolesc Psychiatry (2002) 41(12):1447–53. doi:10.1097/00004583-200212000-00016

16. Child and Adolescent Mental Health Division. Instructions and Codebook for Provider Monthly Summaries. Honolulu, HI: State of Hawaii Department of Health (2005).

17. American Psychological Association. Policy Statement on Evidence-Based Practice in Psychology. Washington, DC: American Psychological Association (2005).

18. Chambless DL, Hollon SD. Defining empirically supported therapies. J Consult Clin Psychol (1998) 66(1):7–18. doi:10.1037/0022-006X.66.1.7

20. Resick PA, Schnicke MK. Cognitive processing therapy for sexual assault victims. J Consult Clin Psychol (1992) 60(5):748–56. doi:10.1037/0022-006X.60.5.748

21. Linehan MM, Armstrong HE, Suarez A, Allmon D, Heard HL. Cognitive-behavioral treatment of chronically parasuicidal borderline patients. Arch Gen Psychiatry (1991) 48:1060–4. doi:10.1001/archpsyc.1991.01810360024003

22. Bagner DM, Eyberg SM. Parent-child interaction therapy for disruptive behavior in children with mental retardation: a randomized controlled trial. J Clin Child Adolesc Psychol (2007) 36(3):418–29. doi:10.1080/15374410701448448

23. Chaffin M, Silovsky JF, Funderburk B, Valle LA, Brestan EV, Balachova T, et al. Parent-child interaction therapy with physically abusive parents: efficacy for reducing future abuse reports. J Consult Clin Psychol (2004) 72(3):500–10. doi:10.1037/0022-006X.72.3.500

24. Foa EB, Dancu CV, Hembree EA, Jaycox LH, Meadows EA, Street GP. A comparison of exposure therapy, stress inoculation training, and their combination for reducing posttraumatic stress disorder in female assault victims. J Consult Clin Psychol (1999) 67(2):194–200. doi:10.1037/0022-006X.67.2.194

25. Foa EB, Hembree EA, Cahill SP, Rauch SA, Riggs DS, Feeny NC, et al. Randomized trial of prolonged exposure for posttraumatic stress disorder with and without cognitive restructuring: outcome at academic and community clinics. J Consult Clin Psychol (2005) 73(5):953–64. doi:10.1037/0022-006X.73.5.953

26. Chorpita BF, Weisz JR. MATCH-ADTC: Modular Approach to Therapy for Children with Anxiety, Depression, Trauma, or Conduct Problems. Satellite Beach, FL: PracticeWise (2009).

27. Cohen JA, Mannarino AP, Iyengar S. Community treatment of posttraumatic stress disorder for children exposed to intimate partner violence. Arch Pediatr Adolesc Med (2011) 165(1):16–21. doi:10.1001/archpediatrics.2010.247

28. PracticeWise LLC. PracticeWise Evidence-Based Services Database. (2017). Available from: http://www.practicewise.com

29. Eyberg SM, Nelson MM, Boggs SR. Evidence-based psychosocial treatments for children and adolescents with disruptive behavior. J Clin Child Adolesc Psychol (2008) 37(1):215–37. doi:10.1080/15374410701820117

30. Higa-McMillan CK, Francis SE, Rith-Najarian L, Chorpita BF. Evidence base update: 50 years of research on treatment for child and adolescent anxiety. J Clin Child Adolesc Psychol (2016) 45(2):91–113. doi:10.1080/15374416.2015.1046177

31. Silverman WK, Ortiz CD, Viswesvaran C, Burns BJ, Kolko DJ, Putnam FW, et al. Evidence-based psychosocial treatments for children and adolescents exposed to traumatic events: a review and meta-analysis. J Clin Child Adolesc Psychol (2008) 37:156–83. doi:10.1080/15374410701818293

32. Lyon AR, Borntrager C, Nakamura B, Higa-McMillan C. From distal to proximal: routine educational data monitoring in school-based mental health. Adv Sch Ment Health Promot (2013) 6(4):263–79. doi:10.1080/1754730X.2013.832008

33. Okamura KH, Hee PJ, Jackson D, Nakamura BJ. Furthering our understanding of therapist knowledge and attitudinal measurement in youth community settings. Adm Policy Ment Health (Forthcoming).

34. Rubin RM, Hurford MO, Hadley T, Matlin S, Weaver S, Evans AC. Synchronizing watches: the challenge of aligning implementation science and public systems. Adm Policy Ment Health (2016) 43(6):1023–8. doi:10.1007/s10488-016-0759-9

35. Reding ME, Chorpita BF, Lau AS, Innes-Gomberg D. Providers’ attitudes toward evidence-based practices: is it just about providers, or do practices matter, too? Adm Policy Ment Health (2014) 41(6):767–76. doi:10.1007/s10488-013-0525-1

36. Okamura KH, Nakamura BJ, Mueller C, Hayashi K, McMillan CKH. An exploratory factor analysis of the knowledge of evidence-based services questionnaire. J Behav Health Serv Res (2016) 43(2):214–32. doi:10.1007/s11414-013-9384-5

37. Beidas RS, Cross W, Dorsey S. Show me, don’t tell me: behavioral rehearsal as a training and analogue fidelity tool. Cogn Behav Pract (2014) 21(1):1–11. doi:10.1016/j.cbpra.2013.04.002

38. Nakamura BJ, Selbo-Bruns A, Okamura K, Chang J, Slavin L, Shimabukuro S. Developing a systematic evaluation approach for training programs within a train-the-trainer model for youth cognitive behavior therapy. Behav Res Ther (2014) 53:10–9. doi:10.1016/j.brat.2013.12.001

39. Lurie N, Popkin M, Dysken M, Moscovice I, Finch M. Accuracy of diagnoses of schizophrenia in Medicaid claims. Psychiatr Serv (1992) 43(1):69–71. doi:10.1176/ps.43.1.69

40. Steele LS, Glazier RH, Lin E, Evans M. Using administrative data to measure ambulatory mental health service provision in primary care. Med Care (2004) 42(10):960–5. doi:10.1097/00005650-200410000-00004

41. Walkup JT, Boyer CA, Kellermann SL. Reliability of Medicaid claims files for use in psychiatric diagnoses and service delivery. Adm Policy Ment Health (2000) 27(3):129–39. doi:10.1023/A:1021308007343

42. Kessler RC, McGonagle KA, Zhao S, Nelson CB, Hughes M, Eshleman S, et al. Lifetime and 12-month prevalence of DSM-III-R psychiatric disorders in the United States: results from the National Comorbidity Survey. Arch Gen Psychiatry (1994) 51(1):8–19. doi:10.1001/archpsyc.1994.03950010008002

43. Kessler RC, Berglund P, Demler O, Jin R, Merikangas KR, Walters EE. Lifetime prevalence and age-of-onset distributions of DSM-IV disorders in the National Comorbidity Survey Replication. Arch Gen Psychiatry (2005) 62(6):593–602. doi:10.1001/archpsyc.62.6.593

44. Raghavan R. The role of economic evaluation in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press (2012). p. 94–113.

45. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health (2011) 38(2):65–76. doi:10.1007/s10488-010-0319-7

46. Creed TA, Stirman SW, Evans AC. A model for implementation of cognitive therapy in community mental health: the Beck Initiative. Behav Ther (2014) 37(3):56–64.

47. Stirman SW, Buchhofer R, McLaulin JB, Evans AC, Beck AT. Public-academic partnerships: the Beck initiative: a partnership to implement cognitive therapy in a community behavioral health system. Psychiatr Serv (2009) 60(10):1302–4. doi:10.1176/ps.2009.60.10.1302

48. Timmer SG, Urquiza AJ. Empirically based treatments for maltreated children: a developmental perspective. In: Timmer SG, Urquiza AJ, editors. Evidence-Based Approaches for the Treatment of Maltreated Children. Netherlands: Springer (2014). p. 351–76.

49. Jensen-Doss A, Hawley KM, Lopez M, Osterberg LD. Using evidence-based treatments: the experiences of youth providers working under a mandate. Prof Psychol Res Pract (2009) 40(4):417. doi:10.1037/a0014690

Keywords: evidence-based treatment, therapist, training and consultation, cost-analysis, population health

Citation: Okamura KH, Benjamin Wolk CL, Kang-Yi CD, Stewart R, Rubin RM, Weaver S, Evans AC, Cidav Z, Beidas RS and Mandell DS (2018) The Price per Prospective Consumer of Providing Therapist Training and Consultation in Seven Evidence-Based Treatments within a Large Public Behavioral Health System: An Example Cost-Analysis Metric. Front. Public Health 5:356. doi: 10.3389/fpubh.2017.00356

Received: 17 October 2017; Accepted: 15 December 2017; Published: 08 January 2018

Edited by:

Ross Brownson, Washington University in St. Louis, United States

Reviewed by:

Ana Baumann, Washington University in St. Louis, United States

Beth Prusaczyk, Vanderbilt University Medical Center, United States

Copyright: © 2018 Okamura, Benjamin Wolk, Kang-Yi, Stewart, Rubin, Weaver, Evans, Cidav, Beidas and Mandell. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kelsie H. Okamura, kelsie.h.okamura@gmail.com
