Connecting the science and practice of implementation – applying the lens of context to inform study design in implementation research

- 1 Caring Futures Institute, Flinders University, Adelaide, SA, Australia

- 2 Faculty of Health and Medicine, Lancaster University, Lancaster, United Kingdom

- 3 Warwick Medical School, Faculty of Science, University of Warwick, Coventry, United Kingdom

- 4 Centre for Primary Care and Health Services Research, University of Manchester, Manchester, United Kingdom

- 5 School of Nursing, Faculty of Health, Dalhousie University, Halifax, NS, Canada

- 6 Faculty of Health, Dalhousie University, Halifax, NS, Canada

- 7 College of Health Professions, Virginia Commonwealth University, Richmond, VA, United States

- 8 Wolfson Palliative Care Research Centre, Hull York Medical School, Hull, United Kingdom

- 9 Ingram School of Nursing, Faculty of Medicine and Health Sciences, McGill University, Montreal, QC, Canada

- 10 Centre for Research on Health and Nursing, Faculty of Health Sciences, University of Ottawa, Ottawa, ON, Canada

- 11 School of Epidemiology and Public Health, Faculty of Medicine, University of Ottawa, Ottawa, ON, Canada

The saying “horses for courses” refers to the idea that different people and things possess different skills or qualities that are appropriate in different situations. In this paper, we apply the analogy of “horses for courses” to stimulate a debate about how and why we need to get better at selecting appropriate implementation research methods that take account of the context in which implementation occurs. To ensure that implementation research achieves its intended purpose of enhancing the uptake of research-informed evidence in policy and practice, we start from a position that implementation research should be explicitly connected to implementation practice. Building on our collective experience as implementation researchers, implementation practitioners (users of implementation research), implementation facilitators and implementation educators and subsequent deliberations with an international, inter-disciplinary group involved in practising and studying implementation, we present a discussion paper with practical suggestions that aim to inform more practice-relevant implementation research.

Introduction

Implementation science has advanced significantly in the last two decades. When the journal Implementation Science launched in 2006, it defined implementation research as “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services” (1, p.1), and subsequent work has advanced theoretical and empirical development in the field. Yet questions remain as to whether implementation science is achieving impact at the level of health systems and population health (2) and whether it is in danger of re-creating the type of evidence-practice gap it was intended to address (2–5). In relation to this latter point – the apparent disconnect between implementation science and implementation practice – critics have challenged the dominant paradigm of implementation research as it is currently conducted, notably a reliance on methodologies that emphasize experimental control and adherence to clearly specified protocols (3, 6). Why is this problematic and what should we be doing to address it? These are questions that we set out to explore with inter-disciplinary colleagues working in the field of implementation research and practice. In exploring these issues, we recognize that views will differ according to the ontological and epistemological positioning of the individuals and teams undertaking implementation research, as this will guide the question/s they are seeking to address, and how. Our starting point is essentially a pragmatic one; we believe that implementation science should be useful to and used in practice. Indeed, some authors conceptualize implementation science more broadly than the study of implementation methods, positioning it as a “connection between two equally important components, implementation research and implementation practice” (7, p.2). As such, “implementation research seeks to understand and evaluate approaches used to translate evidence to the real world. Implementation practice seeks to apply and adapt these approaches in different contexts and settings to achieve positive outcomes” (8, p.238).

This inter-connectedness between implementation research and implementation practice reflects our starting position and a belief that implementation research should generate transferable and applicable knowledge for implementation practice. In turn, this requires responsiveness to, and changes in, modifiable contextual factors that influence implementation. For example, studies of the effectiveness of facilitation as an implementation strategy have shown mixed results (9, 10) and demonstrated that an important contextual factor is the level of support from clinical leaders in the implementation setting. Whilst this can be factored into the design of future research, leaders may change during the conduct of the study, potentially reducing the level of support for the facilitation intervention. This is a modifiable factor, which can either simply be reported on or, alternatively, acted upon, for example, by an additional strategy to engage the new leader and secure greater support. It is this type of more responsive approach to implementation research that this paper advocates.

Context and the complexity of implementation

Although implementation was initially conceptualized as a rational, linear process underpinned by traditional biomedical approaches to research translation (11), its complex, iterative and context-dependent nature is now well recognized (12, 13). This is apparent in the growing interest in applying complexity theory and complex adaptive systems thinking to implementation and implementation science, including attempts to combine different research paradigms to address the complex reality of health systems (13–15). Central to an understanding of complexity is the mediating role of context in presenting barriers and/or enablers of implementation (16–18). Many definitions of context exist in the literature. In this paper we adopt a broad interpretation of context as “any feature of the circumstances in which an intervention is implemented that may interact with the intervention to produce variation in outcomes” (19, p.24). As such, contextual factors exist at multiple levels of implementation from individuals and teams, through to organizations and health systems (17, 20). They do not work in isolation but interact in complex ways to impact implementation success. Contextual factors are represented to varying degrees in an array of implementation theories, frameworks, and models (21, 22), which can help to design theory-informed implementation interventions and predict and explain implementation processes and outcomes (23).

Advances in implementation science

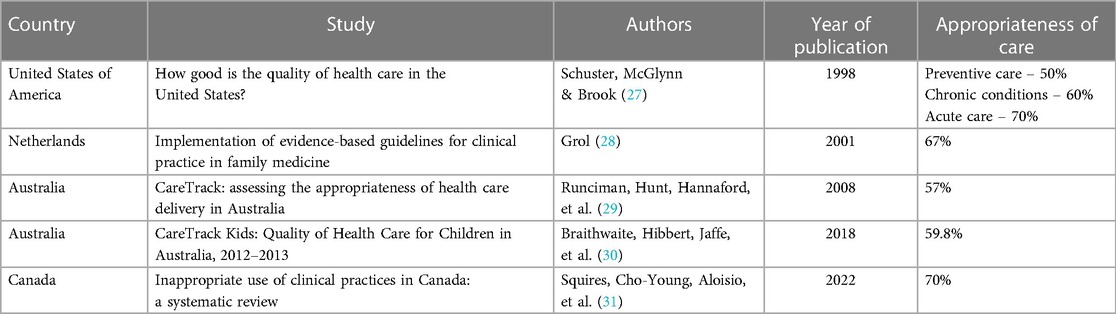

Alongside the growth of implementation theories and frameworks, empirical studies have helped to establish an evidence base on the relative effectiveness of different implementation strategies, including, for example, audit and feedback, education and training, local opinion leaders and computerized reminders (24). Methodological developments are also apparent, particularly the introduction of hybrid trial designs that aim to simultaneously evaluate intervention and implementation effectiveness (25), increased use of pragmatic trial designs, and published guidance on improving the quality of randomized implementation trials (26). However, against this background of the developing science, the evidence-practice gap has remained largely static over the last 20 years. A key study in the US in 1998 indicated that 30%–40% of health care delivery was not in line with best available evidence (27); subsequent studies, published, for example, in Europe (2001), Australia (2012 and 2018) and most recently in Canada (2022), reached similar conclusions (28–31) (Table 1). This suggests that a 30%–40% gap between the best available evidence and clinical practice persists, despite the investment that has gone into building the science of implementation. In turn, this could indicate that we are not putting into practice what we know from empirical and theoretical evidence on implementation and that the promise of implementation science is not being realized in terms of delivering its putative benefits for health systems and health outcomes. That is, we need to put more focus on the implementation of implementation science.

Approaches to studying implementation

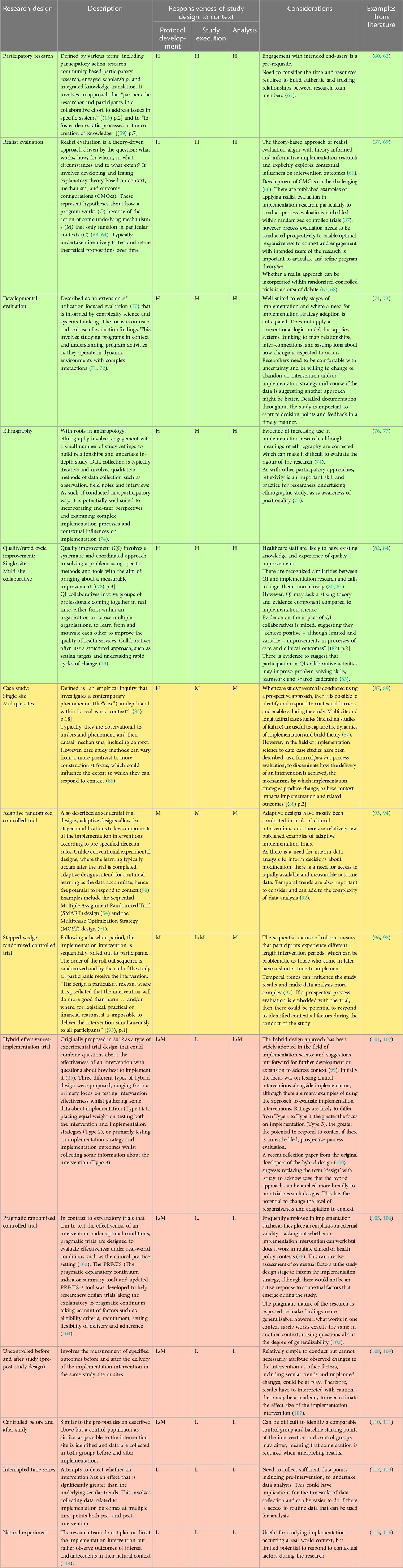

Research to derive the evidence base for different implementation strategies has tended to emphasize questions of effectiveness, with a corresponding focus on experimental study designs that seek to control for, rather than respond to, contextual variation. This runs counter to the recognition that implementation is complex, non-linear, and heavily context-dependent, a fact borne out by large robust implementation trials that report null outcomes and demonstrate through embedded process evaluations the contextual variables that contributed to this result (Table 2). Typically, process evaluations are conducted and reported retrospectively to provide an explanatory account of the trial outcomes – describing rather than responding and adapting to contextual factors that influence the trajectory of implementation during the study. Furthermore, when considering implementation studies, there are likely to be broader questions of interest than simply the effectiveness of an implementation intervention, including recognized implementation outcomes such as acceptability, appropriateness, affordability, practicability, unintended consequences, equity and feasibility (40, 41). In this paper, we make the case for re-thinking the relationship between implementation research and implementation practice, highlighting the need to become better at working with context throughout the entire research process, from planning to conduct, analysis, interpretation, and dissemination of results, whilst maintaining relevance and rigour at all stages.

We engaged in a series of activities to explore these issues further and contribute to the debate on connecting the science and practice of implementation. Our intent is not to promote one research study design over another, but to stimulate debate about the range of research approaches needed to align the science and practice of implementation.

Connecting the science and practice of implementation: issues, challenges and opportunities

Our central aim was to produce a discussion paper and practical guidance to enable implementation teams to make better decisions about what study designs to apply and when. This started with a roundtable workshop and meetings amongst a small group of the authors (JRM, KS, PW, GH, IG), followed by wider engagement and consultation with an international group of implementation researchers and practitioners.

Our initial activity started with a two-day face-to-face meeting and subsequent virtual meetings to explore the relationship between context and implementation research methods, particularly how implementation research studies could be designed and conducted in a way that was more responsive to context in real-time. From our own experiences of conducting large implementation trials where contextual factors were highly influential (9, 10, 37, 42, 43), we wanted to explore how we could conduct robust research where context was more than a backdrop to the study. Our intent was to examine whether and how context could be addressed in a formative and flexible way throughout an implementation study, rather than the more typical way of considering it at the beginning (e.g., by assessing for likely contextual barriers and enablers) and/or at the end of the research (e.g., analyzing and reflecting on how well the implementation process went). In these initial deliberations, we considered several different issues including strengths and weaknesses of different research designs in terms of attending to and responding to context; the role of theory in connecting implementation science and practice; the role of process/implementation evaluation; and interpretations of fidelity in implementation research.

The output of these initial discussions was used to develop content for an interactive workshop at the 2019 meeting of the international Knowledge Utilization (KU) Colloquium (KU19). Prior to the COVID-19 pandemic this meeting had been held annually since its establishment in 2001 with participants representing implementation researchers, practitioners, and PhD students. Evaluation and research methods in implementation had been a discussion theme at a number of previous meetings of the colloquium. At the 2019 meeting in Montebello, Quebec, Canada, two of the authors (GH and JRM) ran a workshop session for approximately 80 colloquium participants, titled “Refreshing and advancing approaches to evaluation in implementation research”. The objectives of the workshop were presented as an opportunity:

i. For participants to share their experiences of undertaking implementation research and the related challenges and successes.

ii. To engage the community in a discussion about whether and how to refresh our thinking and approaches to evaluation.

iii. To share and discuss ideas about factors that might be usefully considered in the evaluation of implementation interventions.

A short introduction outlined some of the issues for consideration and discussion in relation to taking account of context, adaptation of implementation strategies, summative vs. formative process evaluation and issues of fidelity. Participants were then split into smaller roundtable groups to discuss the following question:

How could we design more impactful implementation intervention evaluation studies? Consider:

- the whole research cycle from planning and design to implementation and evaluation

- designs and methods that enable attention to context, adaptability, engagement, and connecting implementation research and practice.

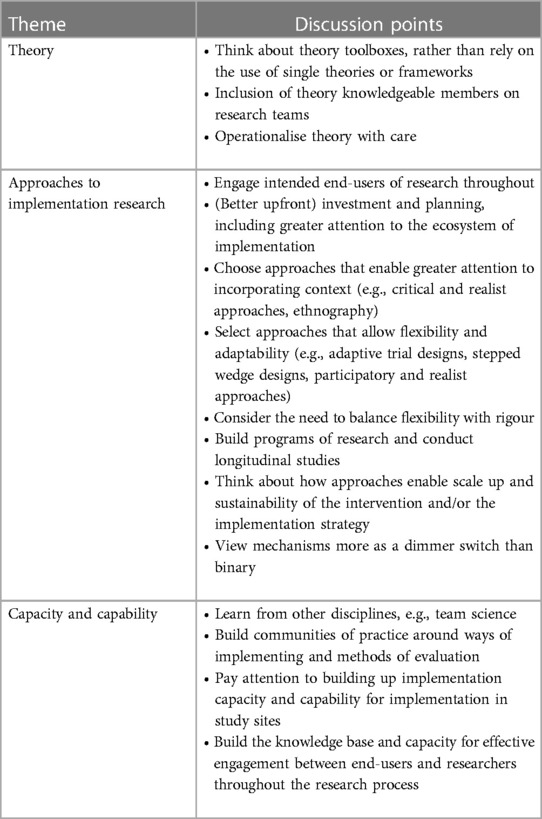

After a period of discussion, each table nominated a spokesperson to take part in a facilitated feedback discussion, using a “goldfish bowl” approach. JRM and GH facilitated the feedback process, with the other workshop participants observing the “goldfish bowl”. Discussion centred on three main themes: the appropriate use and operationalization of theory in implementation research; consideration of a broader range of study designs in implementation research; and building capacity and capability to undertake impactful implementation research. Notes of the discussion were captured and collated into an overall summary (Table 3). At the end of the session, participants were asked to self-nominate if they were interested in forming a working group to further develop the ideas put forward. Twenty-four participants responded in the affirmative to this invitation.

Following the KU19 event, the participants who had expressed an interest in continued involvement were emailed a short template to complete. The template asked them to list up to five key issues they thought should be considered in relation to implementation research that was attentive to context and enabled adaptability, noting why each issue was important and when in the research cycle it was relevant to consider. This feedback was synthesized and fed back in a second round of consultation, giving participants the opportunity to add any further commentary or reflections and asking them to suggest exemplar study designs that could address the issues identified, along with any benefits and drawbacks of each approach.

Connecting the science and practice of implementation – a way forward?

Ten participants responded to the first round of consultation (September 2019) and nine to the second round (February 2020). A diversity of views was expressed in the feedback; however, there was clear support for working with more engaged, flexible, and context-responsive approaches that could bring implementation practice and research closer together. Suggestions of appropriate research designs were put forward, including theoretical and practical issues to be considered. Feedback was analyzed inductively and the findings were synthesized by the initial core group of authors (JRM, KS, PW, GH, IG) to identify key themes, presented below.

Engagement with intended users of implementation research

In line with approaches such as co-design, co-production and integrated knowledge translation (44, 45), participants highlighted the importance of engaging with intended users of implementation research, from community members and patients to clinicians, managers, and policy makers. Different groups can play different roles at different times in relation to implementation research. For example, patients, community members, clinicians and decision-makers can generate questions to be addressed by implementation research; clinicians (working with patients and the public) could be expected to apply the research findings in practice; and managers, educators, and policy makers could have a role in enabling, guiding, and supporting implementation. As such, involvement of intended users of the implementation research should be considered throughout the process of research, from identifying the significant priorities for implementation research to ensuring that implementation strategies are relevant and that findings are appropriately disseminated and actioned, thus increasing the likelihood of success and sustainment. The level of involvement can vary along a spectrum, ranging from passive information giving and consultation through to more active involvement and collaboration, with a corresponding shift in power-sharing amongst those involved (46). For the purposes of consistency throughout the paper, we use the term engagement to refer to the more active level of involvement, namely an equal partnership with intended users of implementation research, hereafter referred to as implementation practitioners. We recognize that some roles such as clinical academics and embedded researchers may merge the implementation researcher and implementation practitioner roles (47).

Context responsiveness and flexibility

The need to embrace a wider range of methods to achieve greater engagement, flexibility and context responsiveness was emphasized, recognizing that different approaches have their own strengths and weaknesses in terms of supporting adaptation to context. Several important challenges were highlighted in relation to adopting more flexible methods, such as understanding the complexity of balancing the requirements of fidelity with adaptation of implementation interventions, and the practicalities of operationalizing concepts in complexity theory, particularly when applying it prospectively. Issues of equity, diversity and inclusion were also viewed as important to consider when thinking about all types of implementation research methods and designs, for example, in terms of representative membership of the research team and the potential influence of contextual factors on accessibility and inclusiveness of the implementation strategy.

Alternative research approaches

Suggestions of alternative methodologies that could enable greater alignment with and consideration of context included participatory research, case study designs, realist evaluation, mixed methods approaches and trial designs such as stepped wedge and adaptive trials. A key point was raised related to the underlying ontological and epistemological position of implementation researchers. Adopting more context-responsive and adaptive approaches to implementation research was seen to align more closely with a constructivist or realist ontology, with related implications for interpretations of scientific rigour, fidelity, and the role and influence of the researcher. For example, views on whether and how tailoring and adapting interventions to context presents a threat to the rigour of a study vary according to the underlying philosophy adopted by the research team and the choice of research design. The feedback highlighted a need for this positioning to be considered more clearly and described explicitly by implementation researchers.

Theoretical and practical considerations

The importance of program theory was highlighted, particularly in relation to theorizing the intended change prior to the start of a research study and focusing on theoretical rather than programmatic fidelity of implementation research (48, 49). Alongside methodological and theoretical positioning, a number of more practical considerations were raised, including clarity about thresholds for intervening to adapt the study design and/or implementation strategy and whether and how adaptation should be actively pursued to maintain equity, diversity and inclusion. Other practical issues identified related to how best to define and capture adaptations over time, how to resource detailed, prospective process evaluations that could fully inform and observe adaptations, and the timeframe for evaluation, which was often seen to be insufficient.

The synthesis of feedback from the consultation process informs the subsequent discussion and suggestions for moving the agenda forward.

Discussion

Much has been learned from studying and applying implementation methods over the last two decades. However, the persistent gap between research evidence and practice indicates a need to get better at connecting implementation research and implementation practice. From an implementation research perspective, this involves thinking differently about what methods are appropriate to use and when. Whilst perceptions of the implementation process have shifted from a rational-linear view to something that is multi-faceted and emergent, it could be argued that some implementation research has become stagnant and ignores or over-simplifies how context influences real-world implementation rather than working flexibly with the inherent complexity of implementation contexts. From our collective deliberation, we propose that implementation research needs to align more closely with the reality of implementation practice, so that it achieves the ultimate aim of improving the delivery of evidence-informed health care and accelerating the resulting impact on health, provider and health system outcomes.

Achieving this alignment requires several actions that embrace engagement between implementation researchers and implementation practitioners (4, 50). These actions also require an appreciation and acceptance of study designs that enable a higher degree of adaptability and responsiveness to context.

Engagement with intended users of implementation research

Engagement with implementation practitioners should underpin the research process, as exemplified by approaches such as co-design, co-production, and integrated knowledge translation (51, 52). This helps to ensure that the necessary relationships are in place to clearly understand the implementation problems to be addressed, the goals to be achieved, resource and support requirements, and what research methods and adaptations will be required to achieve identified goals. This includes clarity around the implementation outcomes of interest, for example, effectiveness of the implementation strategy; acceptability to key user groups, including patients, consumers and staff; feasibility; and costs of implementation. These are all factors that should be taken into account when selecting an appropriate evaluation study design. It is important to highlight that this approach to engagement is not simply a feature of research approaches such as participatory research but should be a principle underpinning all implementation research studies that aim to improve the uptake of research evidence in practice and policy. It requires particular attention to the relational aspects of implementation, such as fostering local ownership of the problem to be addressed and building capability and capacity amongst both researchers and end-users of research to engage in effective collaboration. Clinical academics and embedded researchers offer one way of bridging the implementation research-practice boundary, including insights into specific contextual factors that could affect implementation processes and outcomes (53).

Appreciation and acceptance of study designs to enable responsiveness to context

Contextual influences are important at the planning (protocol development), execution and/or analysis phases of an implementation project. Some research designs lend themselves better to engaged approaches with intended end-users of the research to identify, manage and interpret contextual factors. Other than natural experiments, most designs have the potential to consider contextual factors at the protocol development phase, for example by assessing for potential barriers and enablers posed by contextual factors. However, not all study designs present an opportunity to act upon and modify the identified contextual factors in a responsive way. This is particularly the case for experimental studies that are purposefully designed to neutralise context throughout the research process, although more recent developments such as the adaptive trial design offer greater flexibility to account for contextual factors (54). Similarly, all designs present an opportunity to reflect upon contextual influences that affected the outcomes of implementation, particularly if there is a concurrent process evaluation of what is happening during implementation. However, whether this analysis is undertaken prospectively or retrospectively will determine the extent to which the data can inform real-time responsiveness to contextual factors. There is also variability during the execution of the study, as some designs are more amenable to adaptation of the implementation strategy, in response to (often unanticipated) contextual barriers and enablers. Typically, the more responsive or flexible approaches, such as participatory research and quality improvement, have inbuilt feedback loops which allow real-time monitoring, evaluation, and adaptation.

It is interesting to reflect on the effects that the COVID-19 pandemic had in terms of catalysing rapid change in a health system that is known to be slow to transform (55). Flexible approaches to implementation that were responsive to health system needs were critical for enabling rapid change (56). The pandemic response has highlighted the potential for adaptation to context in real-time and contributed to calls for rapid implementation approaches (57). However, rapid approaches to implementation must be considered alongside intentional engagement of end-users. Recent research, which aligns with our anecdotal experience, has shown a decrease in engagement among patients, the health system, and researchers during pandemic planning and response (58); some argue that there is no time to work in true partnership so researchers are falling back into more traditional directive modes of working. In part, this reflects the expectations of research commissioners and policy makers who, drawing on the COVID-19 experience, have a general expectation of more rapid approaches to translation and implementation. While we argue for research designs with higher degrees of adaptability and responsiveness to context, we caution those responsible for conducting and commissioning implementation research not to prioritize speed at the expense of effective collaboration.

Applying a lens of context to select appropriate research study designs

Building upon the feedback from our iterative discussions and consultation with implementation researchers and practitioners, we have developed a “horses for courses” table of study designs in terms of their potential to respond and adapt to contextual factors at different stages of the research process (Table 4). For each study design, we provide a brief description before indicating when and to what degree it can respond to contextual factors at the protocol development, study execution and/or analysis phase. For each of the three phases, we indicate the potential (high, medium or low) to respond to contextual factors, resulting in an overall high, medium, or low rating (colour coded accordingly in the table). This does not necessarily mean that some approaches are “better” than others, as each needs to be considered in terms of its strengths and weaknesses and the potential trade-offs when selecting one design or another. These considerations are addressed in the final column of the table.

Table 4. Summary of selective study designs with potential to respond to context during research phases of protocol development, execution of the study and analysis of findings.

Informed by our deliberative discussions, there are several pre-conditions to the study designs described in the table that help to optimise the impact of implementation research. These include a starting position that context is an important consideration in implementation research; the relationship between researchers and end-users of research; the need for process evaluation; and the role and contribution of theory.

As noted, we start from an assumed position that context mediates the effects of implementation and, as such, is something that we should work with, rather than seek to control, in implementation research. The ratings assigned to study designs in Table 4 are made through a lens that “context matters”. If this is a view shared by the implementation research team, then it is important to select a study design that will enable responsiveness and adaptation to context. We recognize that questions of fidelity arise when adapting implementation interventions to context. One way to address this is by specifying the core and adaptable components of the intervention to inform decisions about when tailoring to context is appropriate (117). Additionally, and as noted in the consultation feedback, it is important to consider fidelity alongside the program theory underpinning an implementation strategy. Theoretical fidelity is concerned with achieving the intended mechanisms of action of an intervention, as opposed to fidelity to component parts of the intervention (48, 49). A second condition relates to active engagement between the researcher/s and the intended users of the implementation research. This has important implications for the researchers' role as they can only optimise adaptation to context if they are working in an engaged way to monitor and respond to context in real-time. Thirdly, we highlight the importance of process evaluation in implementation research, in particular process evaluation that is embedded and prospective to capture changes in context that could have implications for implementation success and to inform timely adaptations to the implementation strategy, as well as potential effects the implementation intervention may have on context over time. A final condition relates to the central role of theory and theorising in study design. In line with established guidance on the development and evaluation of complex interventions (23), our starting position is that implementation studies should be informed by theories that are relevant to implementation. Alongside applying theory to guide study design and evaluation, opportunities to move from theory-informed to theory-informative implementation research should be considered, for example, by theorising the dynamic relationships between implementation strategies, implementers and context during data analysis and interpretation (118). Careful documentation within process evaluations of what adaptations occurred, when, how and why can make important contributions to such theorising. The extent to which these conditions are met will influence the level of adaptability and responsiveness to context. All the study designs listed in the table have the potential to be responsive to context, or to become more responsive, depending on how the study and data analysis are planned and conducted. So, for example, studies using designs rated lower in the table could enhance their responsiveness to context by increasing engagement with intended end-users of the research and/or embedding a prospective process evaluation with iterative data analysis.

How to use the table

As noted, Table 4 is intended to be used when context is seen as an important consideration in implementation research. It is not intended to be prescriptive or a “rule-book” for study design selection, as there is no definitive answer to the question “what is the right implementation research design?” Rather, it aims to help implementation research teams (including implementation practitioners partnering with researchers) who believe context is important to implementation success to select study designs that will best enable them to identify and then respond to contextual factors during the development, conduct and analysis phases of research. Exactly which study design is appropriate will depend upon several factors, including the stage and scale of the research and what trade-offs are acceptable to the research team in terms of the strengths and weaknesses of different study designs. For example, if the study is concerned with early-stage development and field testing of an implementation strategy, questions of interest are likely to focus on feasibility, practicability, appropriateness and fit. Here, approaches classified as highly responsive are particularly beneficial for testing and refining the implementation strategies in real time and developing an in-depth understanding of the mechanisms of action and the relationships between mechanisms, context, and outcomes. At a later stage, questions of effectiveness and cost-effectiveness may become more important, in which case an adaptive trial design (coded as medium level) would be relevant as it can enable a continuing (although more limited) responsiveness to contextual factors.

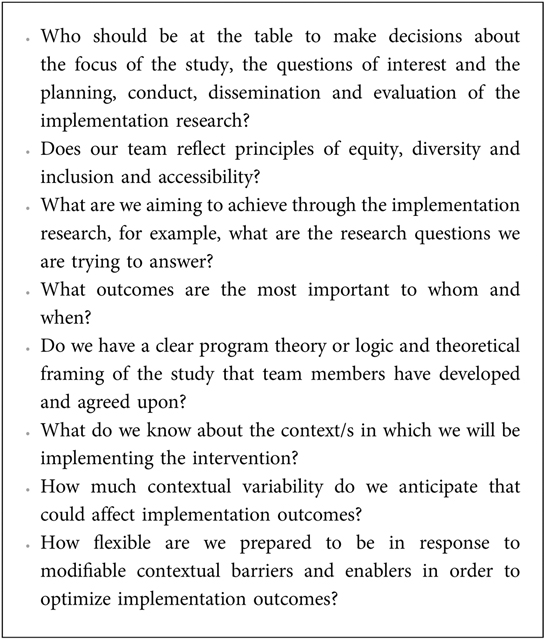

The important point is that research teams should reflect more critically on who they involve as part of their research team and on their choice of research design, according to the questions they are attempting to answer and the outcomes they are seeking to achieve (see Box 1). It is also important to note that the designs presented in Table 4 are neither exhaustive nor mutually exclusive. Indeed, there are many examples in the literature where different study designs are combined to bring together their relative strengths (15, 67), although this can raise questions about epistemological fit (68). Similarly, there are variations within some of the study designs listed, such as case studies (86) and hybrid studies (100), reflecting different worldviews and approaches within an overarching study design type.

Box 1. Reflective questions to guide the selection of context-responsive study design in implementation research

Conclusions

To optimise the potential for implementation research to contribute to improving health and health system outcomes, this paper outlines a paradigm shift in how we conceptualise the relationship between implementation research and implementation practice. We argue that implementation research requires the use of study designs with higher degrees of adaptability and responsiveness to context to align more closely with the reality of implementation practice. Such approaches are critical to improve the delivery of evidence-informed health care and positively impact on patient experience, population health, provider experience, and health system outcomes, contributing to health equity and social justice (119). We recognise that the paper raises questions that require ongoing discussion and exploration, such as how best to balance rigour, fidelity and adaptation to context and how to truly address issues of equity, diversity, accessibility and inclusion. Important debates and developments are already underway in these areas [for example, (120–123)] as are ongoing methodological developments in study design that can help to inform future application and refinement of the ideas proposed in this paper.

Data availability statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for this study in accordance with the local legislation and institutional requirements.

Author contributions

GH, JR-M, KS, PW and IDG: conceived the original ideas for the paper. GH and JR-M: facilitated a workshop at the Knowledge Utilisation 2019 meeting to explore the ideas further and establish a working group to write the paper. All authors contributed to the article and approved the submitted version.

Acknowledgments

The authors would like to thank colleagues from the KU19 meeting for their contributions and participation in the workshop reported in the paper. IDG is a recipient of a CIHR Foundation Grant (FDN# 143237).

Conflict of interest

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author JH declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. (2006) 1(1):1. doi: 10.1186/1748-5908-1-1

2. Beidas RS, Dorsey S, Lewis CC, Lyon AR, Powell BJ, Purtle J, et al. Promises and pitfalls in implementation science from the perspective of US-based researchers: learning from a pre-mortem. Implement Sci. (2022) 17(1):55. doi: 10.1186/s13012-022-01226-3

3. Lyon AR, Comtois KA, Kerns SEU, Landes SJ, Lewis CC. Closing the science–practice gap in implementation before it widens. In: Albers B, Shlonsky A, Mildon R, editors. Implementation science 3.0. Cham: Springer International Publishing (2020). p. 295–313.

4. Westerlund A, Nilsen P, Sundberg L. Implementation of implementation science knowledge: the research-practice gap paradox. Worldviews Evid Based Nurs. (2019) 16(5):332–4. doi: 10.1111/wvn.12403

5. Rapport F, Clay-Williams R, Churruca K, Shih P, Hogden A, Braithwaite J. The struggle of translating science into action: foundational concepts of implementation science. J Eval Clin Pract. (2018) 24(1):117–26. doi: 10.1111/jep.12741

6. Glasgow RE, Chambers D. Developing robust, sustainable, implementation systems using rigorous, rapid and relevant science. Clin Trans Sci. (2012) 5(1):48–55. doi: 10.1111/j.1752-8062.2011.00383.x

7. Ramaswamy R, Mosnier J, Reed K, Powell BJ, Schenck AP. Building capacity for public health 3.0: introducing implementation science into an MPH curriculum. Implement Sci. (2019) 14(1):18. doi: 10.1186/s13012-019-0866-6

8. Metz A, Albers B, Burke K, Bartley L, Louison L, Ward C, et al. Implementation practice in human service systems: understanding the principles and competencies of professionals who support implementation. Hum Serv Organ Manag Leadersh Gov. (2021) 45(3):238–59. doi: 10.1080/23303131.2021.189540

9. Seers K, Rycroft-Malone J, Cox K, Crichton N, Edwards RT, Eldh AC, et al. Facilitating implementation of research evidence (FIRE): an international cluster randomised controlled trial to evaluate two models of facilitation informed by the promoting action on research implementation in health services (PARIHS) framework. Implement Sci. (2018) 13(1):137. doi: 10.1186/s13012-018-0831-9

10. Bucknall TK, Considine J, Harvey G, Graham ID, Rycroft-Malone J, Mitchell I, et al. Prioritising responses of nurses to deteriorating patient observations (PRONTO): a pragmatic cluster randomised controlled trial evaluating the effectiveness of a facilitation intervention on recognition and response to clinical deterioration. BMJ Qual Saf. (2022) 31:818–30. doi: 10.1136/bmjqs-2021-013785

11. Sung NS, Crowley WF Jr, Genel M, Salber P, Sandy L, Sherwood LM, et al. Central challenges facing the national clinical research enterprise. JAMA. (2003) 289(10):1278–87. doi: 10.1001/jama.289.10.1278

12. Kitson A, Rycroft-Malone J, Harvey G, McCormack B, Seers K, Titchen A. Evaluating the successful implementation of evidence into practice using the PARIHS framework: theoretical and practical challenges. Implement Sci. (2008) 3:1. doi: 10.1186/1748-5908-3-1

13. Braithwaite J, Churruca K, Ellis LA, Long J, Clay-Williams R, Damen N, et al. Complexity science in healthcare: Aspirations, approaches, applications and accomplishments. A White Paper. Sydney: Macquarie University, Australia: Australian Institute of Health Innovation (2017).

14. Kitson A, Brook A, Harvey G, Jordan Z, Marshall R, O’Shea R, et al. Using complexity and network concepts to inform healthcare knowledge translation. Int J Health Policy Manag. (2018) 7(3):231–43. doi: 10.15171/ijhpm.2017.79

15. Leykum LK, Pugh JA, Lanham HJ, Harmon J, McDaniel RR. Implementation research design: integrating participatory action research into randomized controlled trials. Implement Sci. (2009) 4(1):69. doi: 10.1186/1748-5908-4-69

16. Daivadanam M, Ingram M, Sidney Annerstedt K, Parker G, Bobrow K, Dolovich L, et al. The role of context in implementation research for non-communicable diseases: answering the “how-to” dilemma. PLoS One. (2019) 14(4):e0214454. doi: 10.1371/journal.pone.0214454

17. Squires JE, Hutchinson AM, Coughlin M, Bashir K, Curran J, Grimshaw JM, et al. Stakeholder perspectives of attributes and features of context relevant to knowledge translation in health settings: a multi-country analysis. Int J Health Policy Manag. (2022) 11(8):1373–90. doi: 10.34172/IJHPM.2021.32

18. May CR, Johnson M, Finch T. Implementation, context and complexity. Implement Sci. (2016) 11(1):141. doi: 10.1186/s13012-016-0506-3

19. Craig P, Di Ruggiero E, Frohlich KL, Mykhalovskiy E, White M, Campbell R, et al. Taking account of context in population health intervention research: guidance for producers, users and funders of research. Southampton: NIHR Journals Library (2018).

20. Squires JE, Graham I, Bashir K, Nadalin-Penno L, Lavis J, Francis J, et al. Understanding context: a concept analysis. J Adv Nurs. (2019) 75(12):3448–70. doi: 10.1111/jan.14165

21. Nilsen P, Bernhardsson S. Context matters in implementation science: a scoping review of determinant frameworks that describe contextual determinants for implementation outcomes. BMC Health Serv Res. (2019) 19(1):189. doi: 10.1186/s12913-019-4015-3

22. Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice. Am J Prev Med. (2012) 43(3):337–50. doi: 10.1016/j.amepre.2012.05.024

23. Skivington K, Matthews L, Simpson SA, Craig P, Baird J, Blazeby JM, et al. A new framework for developing and evaluating complex interventions: update of medical research council guidance. Br Med J. (2021) 374:n2061. doi: 10.1136/bmj.n2061

24. Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implement Sci. (2012) 7(1):50. doi: 10.1186/1748-5908-7-50

25. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. (2012) 50(3):217–26. doi: 10.1097/MLR.0b013e3182408812

26. Wolfenden L, Foy R, Presseau J, Grimshaw JM, Ivers NM, Powell BJ, et al. Designing and undertaking randomised implementation trials: guide for researchers. Br Med J. (2021) 372:m3721. doi: 10.1136/bmj.m3721

27. Schuster M, McGlynn E, Brook R. How good is the quality of health care in the United States? Milbank Q. (1998) 76:517–63. doi: 10.1111/1468-0009.00105

28. Grol R. Successes and failures in the implementation of evidence-based guidelines for clinical practice. Med Care. (2001) 39:1146–54. doi: 10.1097/00005650-200108002-00003

29. Runciman WB, Coiera EW, Day RO, Hannaford NA, Hibbert PD, Hunt TD, et al. Towards the delivery of appropriate health care in Australia. Med J Aust. (2012) 197(2):78–81. doi: 10.5694/mja12.10799

30. Braithwaite J, Hibbert PD, Jaffe A, White L, Cowell CT, Harris MF, et al. Quality of health care for children in Australia, 2012–2013. JAMA. (2018) 319(11):1113–24. doi: 10.1001/jama.2018.0162

31. Squires JE, Cho-Young D, Aloisio LD, Bell R, Bornstein S, Brien SE, et al. Inappropriate use of clinical practices in Canada: a systematic review. CMAJ. (2022) 194(8):E279–96. doi: 10.1503/cmaj.211416

32. Clarke DJ, Hawkins R, Sadler E, Harding G, McKevitt C, Godfrey M, et al. Introducing structured caregiver training in stroke care: findings from the TRACS process evaluation study. BMJ Open. (2014) 4(4):e004473. doi: 10.1136/bmjopen-2013-004473

33. Kennedy A, Rogers A, Chew-Graham C, Blakeman T, Bowen R, Gardner C, et al. Implementation of a self-management support approach (WISE) across a health system: a process evaluation explaining what did and did not work for organisations, clinicians and patients. Implement Sci. (2014) 9:129. doi: 10.1186/s13012-014-0129-5

34. Ellard DR, Thorogood M, Underwood M, Seale C, Taylor SJC. Whole home exercise intervention for depression in older care home residents (the OPERA study): a process evaluation. BMC Med. (2014) 12(1):1. doi: 10.1186/1741-7015-12-1

35. Jäger C, Steinhäuser J, Freund T, Baker R, Agarwal S, Godycki-Cwirko M, et al. Process evaluation of five tailored programs to improve the implementation of evidence-based recommendations for chronic conditions in primary care. Implement Sci. (2016) 11(1):123. doi: 10.1186/s13012-016-0473-8

36. Wensing M. The tailored implementation in chronic diseases (TICD) project: introduction and main findings. Implement Sci. (2017) 12(1):5. doi: 10.1186/s13012-016-0536-x

37. Rycroft-Malone J, Seers K, Eldh AC, Cox K, Crichton N, Harvey G, et al. A realist process evaluation within the facilitating implementation of research evidence (FIRE) cluster randomised controlled international trial: an exemplar. Implement Sci. (2018) 13(1):138. doi: 10.1186/s13012-018-0811-0

38. Stephens TJ, Peden CJ, Pearse RM, Shaw SE, Abbott TEF, Jones EL, et al. Improving care at scale: process evaluation of a multi-component quality improvement intervention to reduce mortality after emergency abdominal surgery (EPOCH trial). Implement Sci. (2018) 13(1):142. doi: 10.1186/s13012-018-0823-9

39. McInnes E, Dale S, Craig L, Phillips R, Fasugba O, Schadewaldt V, et al. Process evaluation of an implementation trial to improve the triage, treatment and transfer of stroke patients in emergency departments (T3 trial): a qualitative study. Implement Sci. (2020) 15(1):99. doi: 10.1186/s13012-020-01057-0

40. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. (2011) 38(2):65–76. doi: 10.1007/s10488-010-0319-7

41. Michie S, Atkins L, West R. The behaviour change wheel: A guide to designing interventions. London: Silverback Publishing (2014).

42. Graham ID, Harrison MB, Cerniuk B, Bauer S. A community-researcher alliance to improve chronic wound care. Healthc Policy. (2007) 2(4):72–8. doi: 10.12927/hcpol.2007.18876

43. Munce SEP, Graham ID, Salbach NM, Jaglal SB, Richards CL, Eng JJ, et al. Perspectives of health care professionals on the facilitators and barriers to the implementation of a stroke rehabilitation guidelines cluster randomized controlled trial. BMC Health Serv Res. (2017) 17(1):440. doi: 10.1186/s12913-017-2389-7

44. Pérez Jolles M, Willging CE, Stadnick NA, Crable EL, Lengnick-Hall R, Hawkins J, et al. Understanding implementation research collaborations from a co-creation lens: recommendations for a path forward. Front Health Serv. (2022) 2:942658. doi: 10.3389/frhs.2022.942658

45. Graham I, Rycroft-Malone J, Kothari A, McCutcheon C. Research coproduction in healthcare. Oxford: Wiley-Blackwell (2022).

46. International Association for Public Participation. IAP2 spectrum of public participation (2018). Available at: https://www.iap2.org/page/pillars (Accessed February 3, 2023).

47. Damschroder LJ, Knighton AJ, Griese E, Greene SM, Lozano P, Kilbourne AM, et al. Recommendations for strengthening the role of embedded researchers to accelerate implementation in health systems: findings from a state-of-the-art (SOTA) conference workgroup. Healthc. (2021) 8:100455. doi: 10.1016/j.hjdsi.2020.100455

48. Hawe P, Shiell A, Riley T. Complex interventions: how “out of control” can a randomised controlled trial be? Br Med J. (2004) 328(7455):1561–3. doi: 10.1136/bmj.328.7455.1561

49. Chen H-T, Rossi PH. Evaluating with sense: the theory-driven approach. Eval Rev. (1983) 7(3):283–302. doi: 10.1177/0193841X8300700301

50. Juckett LA, Bunger AC, McNett MM, Robinson ML, Tucker SJ. Leveraging academic initiatives to advance implementation practice: a scoping review of capacity building interventions. Implement Sci. (2022) 17(1):49. doi: 10.1186/s13012-022-01216-5

51. Langley G, Nolan K, Nolan T, Norman C, Provost L. The improvement guide. A practical approach to enhancing organizational performance. San Francisco, California: Jossey-Bass (1996).

52. Nguyen T, Graham ID, Mrklas KJ, Bowen S, Cargo M, Estabrooks CA, et al. How does integrated knowledge translation (IKT) compare to other collaborative research approaches to generating and translating knowledge? Learning from experts in the field. Health Res Policy Syst. (2020) 18(1):35. doi: 10.1186/s12961-020-0539-6

53. Varallyay NI, Bennett SC, Kennedy C, Ghaffar A, Peters DH. How does embedded implementation research work? Examining core features through qualitative case studies in Latin America and the Caribbean. Health Policy Plan. (2020) 35(Supplement_2):ii98–111. doi: 10.1093/heapol/czaa126

54. Almirall D, Nahum-Shani I, Sherwood NE, Murphy SA. Introduction to SMART designs for the development of adaptive interventions: with application to weight loss research. Transl Behav Med. (2014) 4(3):260–74. doi: 10.1007/s13142-014-0265-0

55. Salvador-Carulla L, Rosenberg S, Mendoza J, Tabatabaei-Jafari H. Rapid response to crisis: health system lessons from the active period of COVID-19. Health Policy Technol. (2020) 9(4):578–86. doi: 10.1016/j.hlpt.2020.08.011

56. Romanelli RJ, Azar KMJ, Sudat S, Hung D, Frosch DL, Pressman AR. Learning health system in crisis: lessons from the COVID-19 pandemic. Mayo Clin Proc Innov Qual Outcomes. (2021) 5(1):171–6. doi: 10.1016/j.mayocpiqo.2020.10.004

57. Smith J, Rapport F, O’Brien TA, Smith S, Tyrrell VJ, Mould EVA, et al. The rise of rapid implementation: a worked example of solving an existing problem with a new method by combining concept analysis with a systematic integrative review. BMC Health Serv Res. (2020) 20(1):449. doi: 10.1186/s12913-020-05289-0

58. Cassidy C, Sim M, Somerville M, Crowther D, Sinclair D, Elliott Rose A, et al. Using a learning health system framework to examine COVID-19 pandemic planning and response at a Canadian health centre. PLoS One. (2022) 17(9):e0273149. doi: 10.1371/journal.pone.0273149

59. Jull J, Giles A, Graham ID. Community-based participatory research and integrated knowledge translation: advancing the co-creation of knowledge. Implement Sci. (2017) 12(1):150. doi: 10.1186/s13012-017-0696-3

60. MacFarlane A, O'Reilly-de Brún M, de Brún T, Dowrick C, O'Donnell C, Mair F, et al. Healthcare for migrants, participatory health research and implementation science–better health policy and practice through inclusion. The RESTORE project. Eur J Gen Pract. (2014) 20(2):148–52. doi: 10.3109/13814788.2013.868432

61. Cargo M, Mercer SL. The value and challenges of participatory research: strengthening its practice. Annu Rev Public Health. (2008) 29:325–50. doi: 10.1146/annurev.publhealth.29.091307.083824

62. Ramanadhan S, Davis MM, Armstrong R, Baquero B, Ko LK, Leng JC, et al. Participatory implementation science to increase the impact of evidence-based cancer prevention and control. Cancer Causes Control. (2018) 29(3):363–9. doi: 10.1007/s10552-018-1008-1

64. Flynn R, Rotter T, Hartfield D, Newton AS, Scott SD. A realist evaluation to identify contexts and mechanisms that enabled and hindered implementation and had an effect on sustainability of a lean intervention in pediatric healthcare. BMC Health Serv Res. (2019) 19(1):912. doi: 10.1186/s12913-019-4744-3

65. Sarkies MN, Francis-Auton E, Long JC, Pomare C, Hardwick R, Braithwaite J. Making implementation science more real. BMC Med Res Methodol. (2022) 22(1):178. doi: 10.1186/s12874-022-01661-2

66. Salter KL, Kothari A. Using realist evaluation to open the black box of knowledge translation: a state-of-the-art review. Implement Sci. (2014) 9(1):115. doi: 10.1186/s13012-014-0115-y

67. Bonell C, Fletcher A, Morton M, Lorenc T, Moore L. Realist randomised controlled trials: a new approach to evaluating complex public health interventions. Soc Sci Med. (2012) 75(12):2299–306. doi: 10.1016/j.socscimed.2012.08.032

68. Marchal B, Westhorp G, Wong G, Van Belle S, Greenhalgh T, Kegels G, et al. Realist RCTs of complex interventions – an oxymoron. Soc Sci Med. (2013) 94:124–8. doi: 10.1016/j.socscimed.2013.06.025

69. Dossou J-P, Van Belle S, Marchal B. Applying the realist evaluation approach to the complex process of policy implementation—the case of the user fee exemption policy for cesarean section in Benin. Front Public Health. (2021) 9:553980. doi: 10.3389/fpubh.2021.553980

70. Patton MQ, Campbell-Patton CE. Utilization-focused evaluation. 5th edn. Thousand Oaks, CA: Sage Publications (2021).

71. Laycock A, Bailie J, Matthews V, Bailie R. Using developmental evaluation to support knowledge translation: reflections from a large-scale quality improvement project in indigenous primary healthcare. Health Res Policy Syst. (2019) 17(1):70. doi: 10.1186/s12961-019-0474-6

72. Patton MQ. Developmental evaluation: Applying complexity concepts to enhance innovation and use. New York: The Guilford Press (2011).

73. Conklin J, Farrell B, Ward N, McCarthy L, Irving H, Raman-Wilms L. Developmental evaluation as a strategy to enhance the uptake and use of deprescribing guidelines: protocol for a multiple case study. Implement Sci. (2015) 10(1):91. doi: 10.1186/s13012-015-0279-0

74. Gertner AK, Franklin J, Roth I, Cruden GH, Haley AD, Finley EP, et al. A scoping review of the use of ethnographic approaches in implementation research and recommendations for reporting. Implement Res Pract. (2021) 2:2633489521992743. doi: 10.1177/2633489521992743

75. Steketee AM, Archibald TG, Harden SM. Adjust your own oxygen mask before helping those around you: an autoethnography of participatory research. Implement Sci. (2020) 15(1):70. doi: 10.1186/s13012-020-01002-1

76. Grant S, Checkland K, Bowie P, Guthrie B. The role of informal dimensions of safety in high-volume organisational routines: an ethnographic study of test results handling in UK general practice. Implement Sci. (2017) 12(1):56. doi: 10.1186/s13012-017-0586-8

77. Conte KP, Shahid A, Grøn S, Loblay V, Green A, Innes-Hughes C, et al. Capturing implementation knowledge: applying focused ethnography to study how implementers generate and manage knowledge in the scale-up of obesity prevention programs. Implement Sci. (2019) 14(1):91. doi: 10.1186/s13012-019-0938-7

78. The Health Foundation. Quality improvement made simple: What everyone should know about quality improvement. London: The Health Foundation (2021).

79. The Health Foundation. Improvement collaboratives in health care: Evidence scan. London: The Health Foundation (2014).

80. Nilsen P, Thor J, Bender M, Leeman J, Andersson-Gäre B, Sevdalis N. Bridging the silos: a comparative analysis of implementation science and improvement science. Front Health Serv. (2022) 1:817750. doi: 10.3389/frhs.2021.817750

81. Leeman J, Rohweder C, Lee M, Brenner A, Dwyer A, Ko LK, et al. Aligning implementation science with improvement practice: a call to action. Implement Sci Commun. (2021) 2(1):99. doi: 10.1186/s43058-021-00201-1

82. Rohweder C, Wangen M, Black M, Dolinger H, Wolf M, O'Reilly C, et al. Understanding quality improvement collaboratives through an implementation science lens. Prev Med. (2019) 129:105859. doi: 10.1016/j.ypmed.2019.105859

83. Zamboni K, Baker U, Tyagi M, Schellenberg J, Hill Z, Hanson C. How and under what circumstances do quality improvement collaboratives lead to better outcomes? A systematic review. Implement Sci. (2020) 15(1):27. doi: 10.1186/s13012-020-0978-z

84. Tyler A, Glasgow RE. Implementing improvements: opportunities to integrate quality improvement and implementation science. Hosp Pediatr. (2021) 11(5):536–45. doi: 10.1542/hpeds.2020-002246

86. Yazan B. Three approaches to case study methods in education: Yin, Merriam and Stake. Qual Rep. (2015) 20(2):134–52. doi: 10.46743/2160-3715/2015.2102

87. Billings J, de Bruin SR, Baan C, Nijpels G. Advancing integrated care evaluation in shifting contexts: blending implementation research with case study design in project SUSTAIN. BMC Health Serv Res. (2020) 20(1):971. doi: 10.1186/s12913-020-05775-5

88. Beecroft B, Sturke R, Neta G, Ramaswamy R. The “case” for case studies: why we need high-quality examples of global implementation research. Implement Sci Commun. (2022) 3(1):15. doi: 10.1186/s43058-021-00227-5

89. Stover AM, Haverman L, van Oers HA, Greenhalgh J, Potter CM. Using an implementation science approach to implement and evaluate patient-reported outcome measures (PROM) initiatives in routine care settings. Qual Life Res. (2021) 30(11):3015–33. doi: 10.1007/s11136-020-02564-9

90. Thorlund K, Haggstrom J, Park JJ, Mills EJ. Key design considerations for adaptive clinical trials: a primer for clinicians. Br Med J. (2018) 360:k698. doi: 10.1136/bmj.k698

91. Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): new methods for more potent eHealth interventions. Am J Prev Med. (2007) 32(5 Suppl):S112–8. doi: 10.1016/j.amepre.2007.01.022

92. Lauffenburger JC, Choudhry NK, Russo M, Glynn RJ, Ventz S, Trippa L. Designing and conducting adaptive trials to evaluate interventions in health services and implementation research: practical considerations. BMJ Med. (2022) 1(1):e000158. doi: 10.1136/bmjmed-2022-000158

93. Kilbourne AM, Almirall D, Goodrich DE, Lai Z, Abraham KM, Nord KM, et al. Enhancing outreach for persons with serious mental illness: 12-month results from a cluster randomized trial of an adaptive implementation strategy. Implement Sci. (2014) 9(1):163. doi: 10.1186/s13012-014-0163-3

94. Lauffenburger JC, Isaac T, Trippa L, Keller P, Robertson T, Glynn RJ, et al. Rationale and design of the novel uses of adaptive designs to guide provider engagement in electronic health records (NUDGE-EHR) pragmatic adaptive randomized trial: a trial protocol. Implement Sci. (2021) 16(1):9. doi: 10.1186/s13012-020-01078-9

95. Brown CA, Lilford RJ. The stepped wedge trial design: a systematic review. BMC Med Res Methodol. (2006) 6(1):54. doi: 10.1186/1471-2288-6-54

96. Liddy C, Hogg W, Singh J, Taljaard M, Russell G, Deri Armstrong C, et al. A real-world stepped wedge cluster randomized trial of practice facilitation to improve cardiovascular care. Implement Sci. (2015) 10(1):150. doi: 10.1186/s13012-015-0341-y

97. Hemming K, Haines TP, Chilton PJ, Girling AJ, Lilford RJ. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. Br Med J. (2015) 350:h391. doi: 10.1136/bmj.h391

98. Russell GM, Long K, Lewis V, Enticott JC, Gunatillaka N, Cheng I-H, et al. OPTIMISE: a pragmatic stepped wedge cluster randomised trial of an intervention to improve primary care for refugees in Australia. Med J Aust. (2021) 215(9):420–6. doi: 10.5694/mja2.51278

99. Kemp CG, Wagenaar BH, Haroz EE. Expanding hybrid studies for implementation research: intervention, implementation strategy, and context. Front Public Health. (2019) 7:00325. doi: 10.3389/fpubh.2019.00325

100. Curran GM, Landes SJ, McBain SA, Pyne JM, Smith JD, Fernandez ME, et al. Reflections on 10 years of effectiveness-implementation hybrid studies. Front Health Serv. (2022) 2:1053496. doi: 10.3389/frhs.2022.1053496

101. Jurczuk M, Bidwell P, Martinez D, Silverton L, Van der Meulen J, Wolstenholme D, et al. OASI2: a cluster randomised hybrid evaluation of strategies for sustainable implementation of the obstetric anal sphincter injury care bundle in maternity units in Great Britain. Implement Sci. (2021) 16(1):55. doi: 10.1186/s13012-021-01125-z

102. Spoelstra SL, Schueller M, Basso V, Sikorskii A. Results of a multi-site pragmatic hybrid type 3 cluster randomized trial comparing level of facilitation while implementing an intervention in community-dwelling disabled and older adults in a medicaid waiver. Implement Sci. (2022) 17(1):57. doi: 10.1186/s13012-022-01232-5

103. Patsopoulos NA. A pragmatic view on pragmatic trials. Dialogues Clin Neurosci. (2011) 13(2):217–24. doi: 10.31887/DCNS.2011.13.2/npatsopoulos

104. Loudon K, Treweek S, Sullivan F, Donnan P, Thorpe KE, Zwarenstein M. The PRECIS-2 tool: designing trials that are fit for purpose. Br Med J. (2015) 350:h2147. doi: 10.1136/bmj.h2147

105. Rycroft-Malone J, Seers K, Crichton N, Chandler J, Hawkes C, Allen C, et al. A pragmatic cluster randomised trial evaluating three implementation interventions. Implement Sci. (2012) 7(1):80. doi: 10.1186/1748-5908-7-80

106. Eccles MP, Steen IN, Whitty PM, Hall L. Is untargeted educational outreach visiting delivered by pharmaceutical advisers effective in primary care? A pragmatic randomized controlled trial. Implement Sci. (2007) 2(1):23. doi: 10.1186/1748-5908-2-23

107. Eccles M, Grimshaw J, Campbell M, Ramsay C. Research designs for studies evaluating the effectiveness of change and improvement strategies. Qual Saf Health Care. (2003) 12(1):47–52. doi: 10.1136/qhc.12.1.47

108. Titler MG, Conlon P, Reynolds MA, Ripley R, Tsodikov A, Wilson DS, et al. The effect of a translating research into practice intervention to promote use of evidence-based fall prevention interventions in hospitalized adults: a prospective pre-post implementation study in the U.S. Appl Nurs Res. (2016) 31:52–9. doi: 10.1016/j.apnr.2015.12.004

109. Russell DJ, Rivard LM, Walter SD, Rosenbaum PL, Roxborough L, Cameron D, et al. Using knowledge brokers to facilitate the uptake of pediatric measurement tools into clinical practice: a before-after intervention study. Implement Sci. (2010) 5(1):92. doi: 10.1186/1748-5908-5-92

110. Ray-Coquard I, Philip T, de Laroche G, Froger X, Suchaud JP, Voloch A, et al. A controlled “before-after” study: impact of a clinical guidelines programme and regional cancer network organization on medical practice. Br J Cancer. (2002) 86(3):313–21. doi: 10.1038/sj.bjc.6600057

111. Chen HY, Harris IA, Sutherland K, Levesque JF. A controlled before-after study to evaluate the effect of a clinician led policy to reduce knee arthroscopy in NSW. BMC Musculoskelet Disord. (2018) 19(1):148. doi: 10.1186/s12891-018-2043-5

112. Siriwardena AN, Shaw D, Essam N, Togher FJ, Davy Z, Spaight A, et al. The effect of a national quality improvement collaborative on prehospital care for acute myocardial infarction and stroke in England. Implement Sci. (2014) 9(1):17. doi: 10.1186/1748-5908-9-17

113. Hébert HL, Morales DR, Torrance N, Smith BH, Colvin LA. Assessing the impact of a national clinical guideline for the management of chronic pain on opioid prescribing rates: a controlled interrupted time series analysis. Implement Sci. (2022) 17(1):77. doi: 10.1186/s13012-022-01251-2

114. Hwang S, Birken SA, Melvin CL, Rohweder CL, Smith JD. Designs and methods for implementation research: advancing the mission of the CTSA program. J Clin Transl Sci. (2020) 4(3):159–67. doi: 10.1017/cts.2020.16

115. Dogherty EJ, Harrison MB, Baker C, Graham ID. Following a natural experiment of guideline adaptation and early implementation: a mixed methods study of facilitation. Implement Sci. (2012) 7:9. doi: 10.1186/1748-5908-7-9

116. Gnich W, Sherriff A, Bonetti D, Conway DI, Macpherson LMD. The effect of introducing a financial incentive to promote application of fluoride varnish in dental practice in Scotland: a natural experiment. Implement Sci. (2018) 13(1):95. doi: 10.1186/s13012-018-0775-0

117. Denis JL, Hébert Y, Langley A, Lozeau D, Trottier LH. Explaining diffusion patterns for complex health care innovations. Health Care Manage Rev. (2002) 27(3):60–73. doi: 10.1097/00004010-200207000-00007

118. Kislov R, Pope C, Martin GP, Wilson PM. Harnessing the power of theorising in implementation science. Implement Sci. (2019) 14(1):103. doi: 10.1186/s13012-019-0957-4

119. Nundy S, Cooper LA, Mate KS. The quintuple aim for health care improvement: a new imperative to advance health equity. JAMA. (2022) 327(6):521–2. doi: 10.1001/jama.2021.25181

120. Pérez D, Van der Stuyft P, Zabala M, Castro M, Lefèvre P. A modified theoretical framework to assess implementation fidelity of adaptive public health interventions. Implement Sci. (2016) 11(1):91. doi: 10.1186/s13012-016-0457-8

121. Haley AD, Powell BJ, Walsh-Bailey C, Krancari M, Gruß I, Shea CM, et al. Strengthening methods for tracking adaptations and modifications to implementation strategies. BMC Med Res Methodol. (2021) 21(1):133. doi: 10.1186/s12874-021-01326-6

122. Brownson RC, Kumanyika SK, Kreuter MW, Haire-Joshu D. Implementation science should give higher priority to health equity. Implement Sci. (2021) 16(1):28. doi: 10.1186/s13012-021-01097-0

Keywords: implementation research, implementation practice, context, adaptation, study design

Citation: Harvey G, Rycroft-Malone J, Seers K, Wilson P, Cassidy C, Embrett M, Hu J, Pearson M, Semenic S, Zhao J and Graham ID (2023) Connecting the science and practice of implementation – applying the lens of context to inform study design in implementation research. Front. Health Serv. 3:1162762. doi: 10.3389/frhs.2023.1162762

Received: 10 February 2023; Accepted: 21 June 2023;

Published: 7 July 2023.

Edited by:

Jeanette Kirk, Hvidovre Hospital, Denmark

Reviewed by:

Anna Bergström, Uppsala University, Sweden

Meagen Rosenthal, University of Mississippi, United States

© 2023 Harvey, Rycroft-Malone, Seers, Wilson, Cassidy, Embrett, Hu, Pearson, Semenic, Zhao and Graham. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gillian Harvey, gillian.harvey@flinders.edu.au

†Present address: Junqiang Zhao, Waypoint Research Institute, Waypoint Centre for Mental Health Care, Penetanguishene, ON, Canada
