Semantic Geometric Modelling of Unstructured Indoor Point Cloud

Abstract

1. Introduction

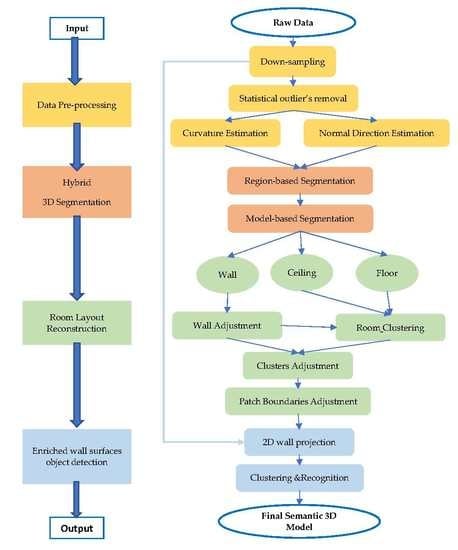

- A novel framework for the automatic reconstruction of indoor building models from BLS data, which produces a realistic model with semantic information; only the 3D point cloud is required as input;

- A new hybrid segmentation approach that improves the segmentation of the point cloud;

- An adjustment of patch boundaries using the wall segments, which improves the geometry of the structural elements;

- An enriched wall-surface object detection that detects not only open objects but closed objects as well.

2. Related Work

2.1. Manhattan World Assumption

2.2. Scanner Prior Knowledge

3. Methodology

3.1. Overview

3.2. Preprocessing

3.2.1. Down-Sampling

3.2.2. Statistical Outlier Removal

3.2.3. Local Surface Properties

3.3. Hybrid 3D Point Segmentation

3.4. Room Layout Reconstruction

3.4.1. Re-Orientation and Ceiling Detection

3.4.2. Wall Refinement

3.4.3. Room Clustering

3.4.4. Boundaries Reconstruction

3.4.5. In-Hole Reconstruction

3.4.6. Boundary Line Segments Refinement

3.5. Enriched Wall-Surface Objects Detection

- Area term: This term penalizes a node according to its total area.

- Floor-ceiling term: This term penalizes nodes whose centroid is near the ceiling or the floor.

- Linearity term: This term penalizes nodes whose length-to-width ratio is larger than a threshold.

The criteria for these terms are expressed in terms of the mean, the standard deviation, the thresholds, and the upper, lower, ceiling, and floor heights.
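As a rough illustration of how the three terms could be evaluated for one node, the following Python sketch uses simple binary penalties. The `Node` fields, the function names, the threshold arguments, and the direction of the area test (penalizing small areas) are all assumptions made for illustration; they are not the criteria of the method itself.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical candidate region on a wall surface."""
    area: float        # square meters
    centroid_z: float  # height of the centroid, meters
    length: float      # meters
    width: float       # meters


def area_penalty(node: Node, min_area: float) -> float:
    # Assumption: nodes smaller than min_area are penalized.
    return 1.0 if node.area < min_area else 0.0


def floor_ceiling_penalty(node: Node, floor_z: float, ceiling_z: float,
                          margin: float) -> float:
    # Penalize nodes whose centroid lies within `margin` of floor or ceiling.
    near_floor = (node.centroid_z - floor_z) < margin
    near_ceiling = (ceiling_z - node.centroid_z) < margin
    return 1.0 if (near_floor or near_ceiling) else 0.0


def linearity_penalty(node: Node, max_ratio: float) -> float:
    # Penalize elongated nodes: long side / short side above max_ratio.
    short_side = min(node.length, node.width)
    long_side = max(node.length, node.width)
    if short_side <= 0.0:
        return 1.0  # degenerate node, treated as a line
    return 1.0 if (long_side / short_side) > max_ratio else 0.0


def total_penalty(node: Node, floor_z: float, ceiling_z: float,
                  min_area: float, margin: float, max_ratio: float) -> float:
    # Assumption: the three terms are summed with equal weight.
    return (area_penalty(node, min_area)
            + floor_ceiling_penalty(node, floor_z, ceiling_z, margin)
            + linearity_penalty(node, max_ratio))
```

With these hypothetical settings, a door-sized node in the middle of a wall receives no penalty, while a thin strip just below the ceiling is penalized by all three terms.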

4. Experiments and Discussion

4.1. Datasets Description

4.2. Parameter Settings

4.3. Evaluation

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Xiao, J.; Furukawa, Y. Reconstructing the World’s Museums. Int. J. Comput. Vis. 2014, 110, 243–258.

2. Cabral, R.; Furukawa, Y. Piecewise planar and compact floorplan reconstruction from images. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 628–635.

3. Dai, Y.; Gong, J.; Li, Y.; Feng, Q. Building segmentation and outline extraction from UAV image-derived point clouds by a line growing algorithm. Int. J. Digit. Earth 2017, 8947, 1–21.

4. Sanchez, V.; Zakhor, A. Planar 3D modeling of building interiors from point cloud data. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 1777–1780.

5. Zhang, G.; Vela, P.A.; Brilakis, I. Detecting, Fitting, and Classifying Surface Primitives for Infrastructure Point Cloud Data. In Proceedings of the 2013 ASCE International Workshop on Computing in Civil Engineering, Los Angeles, CA, USA, 23–25 June 2013; pp. 6–9.

6. Pang, Y.; Zhang, C.; Zhou, L.; Lin, B.; Lv, G. Extracting Indoor Space Information in Complex Building Environments. ISPRS Int. J. Geo-Inf. 2018, 7, 321.

7. Shaffer, E.; Garland, M. Efficient adaptive simplification of massive meshes. In Proceedings of the Conference on Visualization ’01, San Diego, CA, USA, 21–26 October 2001; pp. 127–551.

8. Mura, C.; Mattausch, O.; Villanueva, A.J.; Gobbetti, E.; Pajarola, R. Automatic room detection and reconstruction in cluttered indoor environments with complex room layouts. Comput. Graph. 2014, 44, 20–32.

9. Wang, R.; Xie, L.; Chen, D. Modeling Indoor Spaces Using Decomposition and Reconstruction of Structural Elements. Photogramm. Eng. Remote Sens. 2017, 83, 827–841.

10. Michailidis, G.T.; Pajarola, R. Bayesian graph-cut optimization for wall surfaces reconstruction in indoor environments. Vis. Comput. 2017, 33, 1347–1355.

11. Lehtola, V.; Kaartinen, H.; Nüchter, A.; Kaijaluoto, R.; Kukko, A.; Litkey, P.; Honkavaara, E.; Rosnell, T.; Vaaja, M.T.; Virtanen, J.P.; et al. Comparison of the Selected State-Of-The-Art 3D Indoor Scanning and Point Cloud Generation Methods. Remote Sens. 2017, 9, 796.

12. Nguatem, W.; Drauschke, M.; Meyer, H. Finding Cuboid-Based Building Models in Point Clouds. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B3, 149–154.

13. Lin, Z.; Xu, Z.; Hu, D.; Hu, Q.; Li, W. Hybrid Spatial Data Model for Indoor Space: Combined Topology and Grid. ISPRS Int. J. Geo-Inf. 2017, 6, 343.

14. Ochmann, S.; Vock, R.; Wessel, R.; Klein, R. Automatic reconstruction of parametric building models from indoor point clouds. Comput. Graph. 2016, 54, 94–103.

15. Murali, S.; Speciale, P.; Oswald, M.R.; Pollefeys, M. Indoor Scan2BIM: Building Information Models of House Interiors. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017.

16. Ikehata, S.; Yan, H.; Furukawa, Y. Structured Indoor Modeling. In Proceedings of the ICCV, Las Condes, Chile, 11–18 December 2015.

17. Coughlan, J.M.; Yuille, A.L. The Manhattan World Assumption: Regularities in Scene Statistics Which Enable Bayesian Inference. In Proceedings of the NIPS, Denver, CO, USA, 27 November–2 December 2000; pp. 845–851.

18. Previtali, M.; Barazzetti, L.; Brumana, R.; Scaioni, M. Towards automatic indoor reconstruction of cluttered building rooms from point clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, II-5, 281–288.

19. Oesau, S.; Lafarge, F.; Alliez, P. Indoor scene reconstruction using feature sensitive primitive extraction and graph-cut. ISPRS J. Photogramm. Remote Sens. 2014, 90, 68–82.

20. Leys, C.; Ley, C.; Klein, O.; Bernard, P.; Licata, L. Detecting outliers: Do not use standard deviation around the mean, use absolute deviation around the median. J. Exp. Soc. Psychol. 2013, 49, 764–766.

21. Sampath, A.; Shan, J. Building boundary tracing and regularization from airborne lidar point clouds. Photogramm. Eng. Remote Sens. 2007, 73, 805–812.

22. Knorr, E.M.; Ng, R.T. A Unified Notion of Outliers: Properties and Computation. In Proceedings of the International Conference on Knowledge Discovery and Data Mining, Newport Beach, CA, USA, 14–17 August 1997; pp. 219–222.

23. Pauly, M.; Gross, M.; Kobbelt, L.P. Efficient simplification of point-sampled surfaces. In Proceedings of the 13th IEEE Visualization Conference, Boston, MA, USA, 27 October–1 November 2002; pp. 163–170.

24. Tovari, D.; Pfeifer, N. Segmentation based robust interpolation—A new approach to laser data filtering. Laserscanning 2005, 6.

25. Schnabel, R.; Wahl, R.; Klein, R. Efficient RANSAC for Point-Cloud Shape Detection. Comput. Graph. Forum 2007, 26, 214–226.

26. Păun, C.D.; Oniga, V.E.; Dragomir, P.I. Three-Dimensional Transformation of Coordinate Systems using Nonlinear Analysis—Procrustes Algorithm. Int. J. Eng. Sci. Res. Technol. 2017, 6, 355–363.

27. Maltezos, E.; Ioannidis, C. Automatic Extraction of Building Roof Planes from Airborne Lidar Data Applying an Extended 3d Randomized Hough Transform. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, III-3, 209–216.

28. Sampath, A.; Shan, J. Segmentation and reconstruction of polyhedral building roofs from aerial lidar point clouds. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1554–1567.

29. Boykov, Y.; Veksler, O.; Zabih, R. Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1222–1239.

30. Chen, S.; Li, M.; Ren, K.; Qiao, C. Crowd Map: Accurate Reconstruction of Indoor Floor Plans from Crowdsourced Sensor-Rich Videos. In Proceedings of the International Conference on Distributed Computing Systems, Columbus, OH, USA, 29 June–2 July 2015; pp. 1–10.

| Approach | Data Type | Main Concept | Final Model: SE | Final Model: Do | Final Model: Wi | Final Model: VO | Final Model: Texture |
|---|---|---|---|---|---|---|---|
| [1] | Images; laser scanner | Manhattan world assumption; scanner position | ✓ | ✕ | ✕ | ✕ | ✓ |
| [2] | Panoramic images | Manhattan world assumption | ✓ | ✕ | ✕ | ✕ | ✓ |
| [16] | Panoramic RGBD images | Manhattan world assumption | ✓ | ✕ | ✕ | ✕ | ✓ |
| [15] | RGBD | Manhattan world assumption | ✓ | ✓ | ✕ | ✕ | ✕ |
| [14] | Terrestrial laser scanner | Scanner position | ✓ | ✓ | ✓ | ✓ | ✕ |
| [9] | Mobile laser scanner | Laser scanner trajectory | ✓ | ✓ | ✕ | ✕ | ✕ |
| [10] | Terrestrial laser scanner | Scanner position | ✕ | ✓ | ✓ | ✕ | ✕ |

| Parameter | Value | Units | Parameter | Value | Units |
|---|---|---|---|---|---|
| Preprocessing | | | | | |
| Voxel size | 0.05 | meter | # of point neighbors | 16 | - |
| Outlier distance threshold | 1.0 | meter | | | |
| Hybrid 3D Point Segmentation | | | Wall-Surface Reconstruction | | |
| ppd | 0.1 | meter | Minimum width | 0.25 | meter |
| Area | 0.5 | square meter | Distance to wall | 0.5 | meter |
| Difference angle | 15 | degree | Door height | 1.75 | meter |
| # of points per plane | 500 | - | Door width | 1.7 | meter |
| Room Layout Construction | | | Rasterization space | 0.1 | meter |
| # of points per region | 100 | - | Region distance | 0.2 | meter |
| R, region distance | 0.25 | meter | | 0.5 | - |
| ppd | 0.025 | meter | | 0.5 | - |
| # of points per line | 5 | meter | | 0.6 | - |
| Difference angle | 5 | degree | | 3 | - |
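To illustrate the down-sampling step with the voxel size listed above (0.05 m), here is a minimal voxel-grid sketch in plain Python. Replacing each occupied voxel by the centroid of its points is an assumption, since the text does not state which representative point is kept, and `voxel_downsample` is a hypothetical helper name.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size=0.05):
    """Return one centroid per occupied voxel.

    points: iterable of (x, y, z) tuples in meters.
    voxel_size: edge length of the cubic voxels in meters.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Group points by the integer index of the voxel they fall into.
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)

    # Replace each group by its centroid (assumed representative point).
    return [
        tuple(sum(coords) / len(group) for coords in zip(*group))
        for group in buckets.values()
    ]


if __name__ == "__main__":
    cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (0.20, 0.20, 0.20)]
    print(voxel_downsample(cloud))  # two points remain: one per occupied voxel
```

A dictionary keyed by voxel index keeps the sketch dependency-free; for real scans an array-based or library implementation would be considerably faster.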

| Dataset | Precision (P) % | Recall (R) % | Harmonic Factor (F) % |
|---|---|---|---|
| Syn. 1 | 99.8 | 99.8 | 99.8 |
| Syn. 2 | 99.7 | 99.2 | 99.5 |
| Syn. 3 | 99.7 | 99.5 | 99.6 |
| BLS 1 | 97.4 | 99.1 | 98.2 |
| BLS 2 | 99.5 | 99.3 | 99.4 |
| BLS 3 | 99.3 | 99 | 99.2 |
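As a quick check on how the three columns relate, the sketch below assumes that the harmonic factor is the usual harmonic mean of precision and recall, F = 2PR / (P + R). The function name `f_measure` is hypothetical, and the reported values may have been averaged differently (for example per element), so small deviations from this formula are possible.

```python
def f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in percent)."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both scores are zero
    return 2.0 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # BLS 1 row: P = 97.4 %, R = 99.1 %
    print(round(f_measure(97.4, 99.1), 1))  # 98.2
```

For the BLS 1 row this reproduces the tabulated 98.2 %.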

| Dataset | Down-Sampled % | Do (Opened) | Do (Closed) | Wi | VO |
|---|---|---|---|---|---|
| Syn. 1 | 1 | 13/13 | - | 16/16 | - |
| Syn. 2 | 2 | 3/3 | - | 3/3 | - |
| Syn. 3 | 1 | 4/4 | - | 3/3 | - |
| BLS 1 | 1.5 | 5/5 | 23/20 | 100 | 2/3 |
| BLS 2 | 0.1 | 1/1 | 1/1 | 4/1 | - |
| BLS 3 | 0.6 | 1/1 | - | 3/3 | - |

| Dataset | Precision (P) % | Recall (R) % | Harmonic Factor (F) % |
|---|---|---|---|
| Syn. 1 | 84.7 | 90.1 | 86.1 |
| Syn. 2 | 91.7 | 98.7 | 95.0 |
| Syn. 3 | 91.7 | 95.4 | 93.5 |
| BLS 1 | 76.0 | 87.6 | 79.7 |
| BLS 2 | 97.6 | 81.4 | 88.50 |
| BLS 3 | 99.1 | 65.9 | 78.7 |

| Dataset | Preprocessing | Hybrid Segmentation | Room Layout Reconstruction | Enriched Wall-Surface Object Detection |
|---|---|---|---|---|
| Syn. 1 | 95.91 | 49.78 | 468.14 | 69.30 |
| Syn. 2 | 33.38 | 23.20 | 281.29 | 39.68 |
| Syn. 3 | 37.16 | 6.90 | 278.32 | 37.313 |
| BLS 1 | 161.53 | 219.16 | 164.18 | 39.91 |
| BLS 2 | 31.25 | 48.03 | 105.24 | 28.35 |
| BLS 3 | 60.72 | 24.71 | 112.45 | 29.45 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shi, W.; Ahmed, W.; Li, N.; Fan, W.; Xiang, H.; Wang, M. Semantic Geometric Modelling of Unstructured Indoor Point Cloud. ISPRS Int. J. Geo-Inf. 2019, 8, 9. https://0-doi-org.brum.beds.ac.uk/10.3390/ijgi8010009
