FeelMusic: Enriching Our Emotive Experience of Music through Audio-Tactile Mappings

Abstract

1. Introduction

2. Materials and Methods

2.1. Experiment 1

2.1.1. Experiment Setup

2.1.2. Participants

2.1.3. Experiment Stimuli

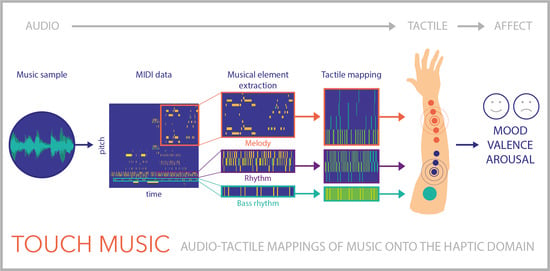

2.2. FeelMusic Interface Development

2.2.1. Pump-and-Vibe Design and Hardware

2.2.2. Pump-and-Vibe as a FeelMusic Interface

2.3. Mapping Music onto Pump-and-Vibe

Composing Novel Tactile Pieces for Pump-and-Vibe

2.4. Experiment 2

2.4.1. Experiment Stimuli

2.4.2. Experimental Setup

2.4.3. Participants

3. Results

3.1. Experiment 1 Results

3.2. Experiment 2 Results

3.2.1. Pleasantness Responses to Pump-and-Vibe

3.2.2. Adjective Responses to Pump-and-Vibe

4. Discussion

4.1. Participant Comments and Experiment Limitations

4.2. Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| MDPI | Multidisciplinary Digital Publishing Institute |
| DOAJ | Directory of open access journals |

Appendix A

| Modality | Stimuli |
|---|---|
| Auditory | Short music samples |
| | M1: Major chord progression * |
| | M2: Minor chord progression * |
| | M3: Ashes to Ashes, David Bowie * |
| | M4: Soul Bossa Nova, Quincy Jones * |
| | M5: Main title (Jaws theme), John Williams * |
| | M6: Is this Love, Bob Marley * |
| | M7: Sweet sixteen, BB King * |
| Tactile | Mappings of music onto the Pump-and-Vibe (see Figure 5B) |
| | T1: Tactile mapping of M1 |
| | T2: Tactile mapping of M2 |
| | T3: Tactile mapping of M3 |
| | T4: Tactile mapping of M4 |
| | T5: Tactile mapping of M5 |
| | T6: Tactile mapping of M6 |
| | T7: Random tactile sensations (see S5) |
| Tactile | Compositions for the Pump-and-Vibe (see Figure 5A) |
| | S1: Fast sawtooth stroking effect |
| | S2: Slower stroking effect |
| | S3: Increasing speed stroking |
| | S4: Slow progression, increasing intensity |
| | S5: Random pulses |
| | S6: Pinching effect, increasing intensity |
| | S7: Fast sawtooth with pulsing |
| | S8: All motors pulsing simultaneously |
| Combined | Combination of music and tactile mappings |
| | C1: M1 played with T1 |
| | C2: M2 played with T2 |
| | C3: M3 played with T3 |
| | C4: M4 played with T4 |
| | C5: M5 played with T5 |
| | C6: M6 played with T6 |
| | C7: M7 played with T7 (not matching) |

| 1 | 2 | 3 | 4 |
|---|---|---|---|
| Serious | Sad | Sentimental | Tranquil |
| Dignified | Melancholy | Tender | Leisurely |
| Lofty | Depressing | Dreamy | Satisfying |
| Sober | Heavy | Longing | Lyrical |
| Solemn | Tragic | Yearning | Quiet |
| Spiritual | Dark | Pleading | Soothing |

| 5 | 6 | 7 | 8 |
|---|---|---|---|
| Playful | Happy | Exciting | Vigorous |
| Humorous | Joyous | Dramatic | Robust |
| Sprightly | Gay | Passionate | Emphatic |
| Light | Merry | Exhilarated | Ponderous |
| Delicate | Cheerful | Restless | Majestic |
| Whimsical | Bright | Triumphant | Exalting |

| Song | p-Value for Hypothesis: | | |
|---|---|---|---|
| A: Major chords | 0.000 | 0.001 | 0.001 |
| B: Minor chords | 0.281 | 0.585 | 0.719 |
| C: Ashes to Ashes | 0.001 | 0.683 | 0.001 |
| D: Soul Bossa Nova | 0.002 | 0.210 | 0.018 |
| E: Jaws theme | 0.001 | 0.437 | 0.007 |
| F: Is this love | 0.381 | 0.203 | 0.969 |
| G: Sweet sixteen | 0.000 | 0.209 | 0.000 |

| Stimulus | Modal Adjectives |
|---|---|
| S1 | Exciting, Restless, Vigorous |
| S2 | Restless, Dramatic, Playful, Cheerful, Bright |
| S3 | Restless, Dramatic, Exciting, Vigorous, Playful, Exhilarating |
| S4 | Yearning, Sad, Leisurely, Sober, Light |
| S5 | Restless, Vigorous, Playful, Exciting, Heavy |
| S6 | Sad, Tragic, Solemn |
| S7 | Vigorous, Restless, Dramatic, Emphatic, Bright, Exhilarating |
| S8 | Vigorous, Dramatic, Robust, Serious, Heavy |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Haynes, A.; Lawry, J.; Kent, C.; Rossiter, J. FeelMusic: Enriching Our Emotive Experience of Music through Audio-Tactile Mappings. Multimodal Technol. Interact. 2021, 5, 29. https://0-doi-org.brum.beds.ac.uk/10.3390/mti5060029
