1. Introduction

With the development of remote sensing technology, remote sensing images are widely used in land cover monitoring, environmental monitoring, geographic mapping, target recognition and other fields [1,2,3,4,5]. The Global Energy and Water Cycle Experiment (GEWEX) Cloud Assessment database estimates that the global annual average cloud cover is approximately 68% [6]. Clouds therefore frequently obstruct target detection, object recognition and other tasks, and can ultimately lead to incorrect analysis results [7,8]. Consequently, cloud detection is an important preprocessing step in various remote sensing applications.

Over the years, a number of cloud detection methods have been proposed for optical remote sensing images. In general, these methods can be classified into two categories [9]: single-image-based methods and multiple-images-based methods. The multiple-images-based methods [10,11,12] require a set of images of the same area acquired at different times. Hagolle et al. [13] developed a Multi-Temporal Cloud Detection (MTCD) method using time series of Formosat-2 and Landsat images, and the results indicate that MTCD is more accurate than the Automatic Cloud Cover Assessment method. Chen et al. [14] proposed an iterative haze optimized transformation for automatic cloud/haze detection in Landsat images with the help of a corresponding clear image. Qian et al. [15] designed an automated cloud detection algorithm named Mean Shift Cloud Detection (MSCD) using multi-temporal Landsat 8 Operational Land Imager (OLI) data without any reference images. However, these methods require multiple images acquired within a short time period so that the underlying ground surface does not change much. In addition, a clear reference image is often difficult to acquire.

Compared with multiple-images-based methods, cloud detection methods based on a single scene are more common and popular because they place fewer requirements on the input data. Early single-image-based methods are mainly threshold-based [16,17,18]: they extract various spectral features for each pixel and then use several thresholds to determine cloud pixels [19]. Cihlar and Howarth [20] used the Normalized Difference Vegetation Index (NDVI) to detect cloud pixels in AVHRR images. Irish [21] applied the Automated Cloud Cover Assessment (ACCA) system to screen clouds in Landsat data using a number of spectral filters, relying heavily on the thermal infrared band. Zhu et al. [22,23] proposed a cloud detection method for Landsat images that detects potential cloud pixels and combines them with a cloud probability mask. Yang et al. [24] developed a progressive optimal scheme for detecting clouds in Landsat 8 images, based on statistical and spectral properties derived from a large number of cloudy images. Threshold-based cloud detection methods usually utilize only low-level spectral information and neglect spatial information; they do not always work well for cloudy images acquired under different imaging conditions because they are sensitive to the underlying surface and to the range of cloud cover [25,26].

Subsequently, more sophisticated methods have been developed [27,28,29]. Danda et al. [30] presented a morphology-based approach to coarsely detect clouds in remote sensing images using a single band. Since clouds and cloud shadows always occur in pairs, the relationship between them, together with the sun and satellite angles, has been used to improve the detection accuracy of both clouds and cloud shadows [31]. Li et al. [32] proposed an automatic multi-feature combined method for cloud and cloud shadow detection in GaoFen-1 wide field of view images, which achieved relatively satisfactory performance. These methods use not only simple spectral information but also other manually selected image information for cloud detection, such as texture, structure and geometric information.

With the development of machine learning technology, machine-learning-based cloud detection methods [33,34], which can extract more robust and higher-level information from images, have attracted increasing attention. Hu et al. [35] adopted a computer vision visual attention technique based on random forest to automatically identify images with significant cloud cover. Li et al. [36] exploited brightness and texture features to detect clouds with an SVM. An and Shi [37] proposed an automatic supervised cloud detection approach for remote sensing images based on a scene-learning scheme with two modules: feature data simulation, and cloud detector learning and application. In these machine-learning-based methods, a large number of features are manually designed and extracted as the input of the classifier. Such hand-crafted features depend on prior knowledge and on the sensor, and they can hardly represent the characteristics of clouds accurately over complex underlying surfaces.

Recently, deep learning methods have made significant progress in many computer vision tasks [38,39,40,41], and cloud detection methods based on deep learning have attracted attention [42,43]. Shi et al. [44] and Goff et al. [45] used superpixel segmentation and deep Convolutional Neural Networks (CNNs) to detect clouds in Quickbird and Google Earth images and in SPOT 6 images, respectively. Ozkan et al. [46] adapted a deep pyramid network to the cloud detection task, obtaining accurate pixel-level segmentation and classification results from a set of noisily labeled RGB remote sensing images. These methods achieved higher cloud detection accuracy than traditional machine learning methods.

In this paper, a novel cloud detection method is proposed for 9-band multispectral remote sensing images from Landsat 8 OLI. The proposed method first obtains a coarse result with a spectral threshold function, and then obtains an accurate cloud detection result with a double-branch PCANet combined with an SVM. The remainder of this paper is organized as follows. Section 2 presents the details of the proposed cloud detection method. Experimental results are described and discussed in Section 3. Finally, conclusions are drawn in Section 4.

2. Methodology

Cloud is an aerosol comprising a visible mass of condensed droplets or frozen crystals suspended in the atmosphere. In the visible bands, the optical properties of water droplets and frozen crystals not only make clouds reflective but also increase light absorption, thus reducing the transmittance of radiation from the underlying surface [47]. Intuitively, clouds seem easily separable from ground objects, as they are generally whiter and brighter than the underlying surface. Nevertheless, some clouds are neither white nor bright (e.g., semitransparent thin clouds), and some ground objects are bright (e.g., bright buildings and snow); these are difficult to distinguish by spectral characteristics alone. Therefore, for high-accuracy cloud detection, both the spectral and the spatial information of remote sensing images should be adequately considered.

In this paper, a novel cloud detection method that considers both spectral and spatial information is proposed for multispectral remote sensing images; it combines a threshold-based method with a machine-learning-based method.

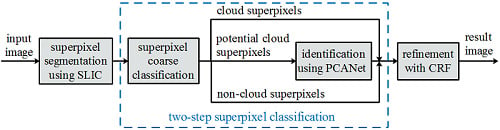

Figure 1 shows the flow chart of the proposed method. First, the image is divided into spectrally homogeneous segments, namely superpixels, by the SLIC [48] method. Then, a two-step classification strategy is used to divide these superpixels into cloud and non-cloud. The first step coarsely divides the superpixels into cloud, non-cloud and potential cloud by a spectral threshold function. The second step further classifies the potential cloud superpixels as cloud or non-cloud using the PCANet combined with an SVM. Finally, a fully connected CRF [49] model is employed to refine the cloud detection result and obtain accurate cloud borders.

Cloud detection methods based on sub-regions can utilize the local spatial information of the image to avoid isolated noise points. The two deep-learning-based methods in [44,45] extracted features and predicted the class of each sub-region to detect clouds. Predicting the class of every sub-region with a supervised learning method is time-consuming. In addition, these methods learn features from all sub-regions, including both easily distinguished and hard-to-distinguish samples, which increases the learning difficulty and reduces the generalization ability.

In our framework, we pay more attention to hard samples through a two-step superpixel classification strategy. These hard samples usually correspond to bright ground objects and thin semitransparent clouds, which cannot be identified directly from spectral features. We use a threshold function to separate the obvious cloud and non-cloud superpixels from the ambiguous superpixels, thus improving cloud detection speed. Then, a double-branch PCANet combined with an SVM is designed to further classify the ambiguous superpixels as cloud or non-cloud. The inputs of the two branches of the designed PCANet are 9-dimensional Top of Atmosphere (TOA) reflectance data and 5-dimensional spectral feature data, respectively. The double-branch PCANet takes both spectral and spatial information into account. In addition, the classifier only needs to learn the features of the ambiguous superpixels, without interference from the easily distinguished superpixels, which makes the classification of the ambiguous superpixels easier. Therefore, combining the two steps yields a more accurate cloud detection result.

2.1. Data and Preprocessing

In this paper, Landsat 8 OLI data are used to evaluate our method. The OLI data consist of 9 spectral bands, with a spatial resolution of 30 m for Bands 1 to 7 and 9 and 15 m for Band 8. Band 1 is specifically designed for water resources and coastal zone investigation, and Band 9 is useful for cirrus cloud detection.

Table 1 shows the details of the 9 bands.

In the proposed method, the input data are the TOA reflectances of the Landsat 8 OLI bands. Standard Landsat 8 OLI data products consist of quantized and calibrated scaled Digital Numbers (DN) representing multispectral image data. To work with Landsat 8 OLI data, the DN must first be converted to TOA reflectance using the equations described in [50]:

$$\rho_\lambda' = M_\rho Q_{cal} + A_\rho,$$

where $\rho_\lambda'$ is the TOA reflectance without correction for solar angle, $M_\rho$ is the band-specific multiplicative rescaling factor, $Q_{cal}$ is the quantized and calibrated standard product pixel value (DN), and $A_\rho$ is the band-specific additive rescaling factor. The TOA reflectance with a correction for the sun angle is then:

$$\rho_\lambda = \frac{\rho_\lambda'}{\cos(\theta_{SZ})} = \frac{\rho_\lambda'}{\sin(\theta_{SE})},$$

where $\rho_\lambda$ is the TOA reflectance with correction for solar angle, $\rho_\lambda'$ is the TOA reflectance without correction for solar angle, $\theta_{SZ}$ is the local solar zenith angle and $\theta_{SE}$ is the local sun elevation angle.

In addition, as the spatial resolution of Band 8 is 15 m, which is higher than other bands, we first resample it to 30 m spatial resolution for a uniform size.
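The DN-to-TOA conversion and the Band 8 resampling can be sketched in NumPy as follows. This is a minimal sketch, assuming the rescaling factors and a single scene-center sun elevation are read from the scene's MTL metadata (keys such as `REFLECTANCE_MULT_BAND_x`, `REFLECTANCE_ADD_BAND_x` and `SUN_ELEVATION`), which simplifies the per-pixel local angle. The 2 × 2 block averaging used for Band 8 is also an assumption, since the text does not state the resampling method. Both function names are hypothetical.

```python
import numpy as np


def dn_to_toa(dn, mult, add, sun_elevation_deg):
    """Convert Landsat 8 OLI DN to sun-angle-corrected TOA reflectance.

    mult, add: band-specific reflectance rescaling factors (assumed from MTL).
    sun_elevation_deg: scene-center sun elevation in degrees (assumption).
    """
    rho_prime = mult * dn.astype(np.float64) + add            # no solar-angle correction
    return rho_prime / np.sin(np.deg2rad(sun_elevation_deg))  # divide by sin(theta_SE)


def downsample_2x_mean(band):
    """Bring a 15 m band onto the 30 m grid by averaging 2x2 blocks (assumed method)."""
    h, w = band.shape
    h2, w2 = h // 2, w // 2
    b = band[:2 * h2, :2 * w2].astype(np.float64)             # drop an odd trailing row/column
    return b.reshape(h2, 2, w2, 2).mean(axis=(1, 3))
```

For example, with a DN of 10000, a multiplicative factor of 2.0e-5, an additive factor of -0.1 and a sun elevation of 30°, the sketch gives (0.2 - 0.1)/0.5 = 0.2.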

2.2. Superpixel Segmentation

In [36], the cloudy image was divided into sub-blocks of the same size according to spatial position, and the brightness and texture features of each sub-block were then calculated to predict its class. However, such a rigid division cannot adapt to the varied shapes and sizes of clouds. In this paper, SLIC [48] is used to divide the remote sensing image into superpixels, which are taken as the basic units in the subsequent procedures.

SLIC performs a local clustering of pixels and can efficiently generate compact and regular superpixels that adhere well to object boundaries. According to [48], the clustering is applied in the labxy color-image plane space defined by the pixel's CIELAB color vector $[l, a, b]^T$ and the pixel's position $[x, y]^T$. The distance measure between pixel i and pixel j in the labxy space is defined as:

$$D = \sqrt{(l_j - l_i)^2 + (a_j - a_i)^2 + (b_j - b_i)^2 + \left(\frac{m}{S}\right)^2 \left[(x_j - x_i)^2 + (y_j - y_i)^2\right]},$$

where S is the square root of the required number of pixels per superpixel, and m is the weight controlling the relative importance of color similarity and spatial proximity. In this paper, m and S are set to 20 and 50, respectively.
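To make the roles of m and S concrete, the following sketch evaluates the SLIC distance between two pixels given as [l, a, b, x, y] vectors. The helper `slic_distance` is hypothetical and not part of any SLIC library. With m = 20 and S = 50, a spatial offset of one grid interval S contributes as much to D as a CIELAB color difference of 20.

```python
import numpy as np


def slic_distance(p_i, p_j, m=20.0, S=50.0):
    """SLIC distance between two pixels given as [l, a, b, x, y] vectors."""
    p_i = np.asarray(p_i, dtype=float)
    p_j = np.asarray(p_j, dtype=float)
    d_c = np.linalg.norm(p_j[:3] - p_i[:3])   # CIELAB color distance
    d_s = np.linalg.norm(p_j[3:] - p_i[3:])   # spatial distance in pixels
    return float(np.sqrt(d_c**2 + (d_s / S) ** 2 * m**2))


# Same color, 50 pixels apart -> 20; same position, L* differs by 20 -> 20.
print(slic_distance([50, 0, 0, 0, 0], [50, 0, 0, 50, 0]))
print(slic_distance([50, 0, 0, 10, 10], [70, 0, 0, 10, 10]))
```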

For Landsat 8 images, Bands 6, 3 and 2 correspond to the short-wave infrared, green and blue bands, respectively. In their color composite image, clouds are more obvious and easily distinguishable from snow/ice [51]. In this paper, the SLIC method is executed on the color composite image of Bands 6, 3 and 2.

Figure 2 is an example of superpixel segmentation, where (a) is the color composite image of Bands 6, 3 and 2, (b) is the superpixel segmentation result of (a). It can be seen that the cloud superpixels adhere well to cloud boundaries. In this paper, we adopt a two-step classification strategy to recognize each superpixel as cloud or non-cloud.

2.3. Superpixel Coarse Classification

It can be seen from Figure 2b that there are three kinds of superpixels in the image: (1) bright and thick cloud superpixels, (2) obvious non-cloud superpixels, and (3) potential cloud superpixels, including dark clouds, semi-transparent thin clouds and bright non-cloud objects. The first two kinds are easily recognized, so we use a brightness feature combined with a threshold method to separate them from the potential cloud superpixels, which simplifies the calculation and improves detection speed.

Clouds in remote sensing images are mainly uniform and flat, and a large proportion of them are brighter than most of the Earth's surface [9,36]. We define a brightness feature b to enhance the difference between cloud pixels and non-cloud pixels:

where $\mathrm{Band}_i$ is the TOA reflectance of the i-th band, which is normalized to [0, 1].

For a cloud pixel, the TOA reflectance values in Bands 6, 3 and 2 are large and close to each other, and therefore the value of b is large. In contrast, for a non-cloud pixel, the TOA reflectance values vary across the three bands and their average is small, and therefore the value of b is small.

We then calculate the brightness feature for each pixel. We selected 24 images from the L8 CCA dataset [51] (the Landsat IDs and cloud cover of the 24 images are given in Table A1) and calculated the cumulative probability distributions of b for cloud pixels and non-cloud pixels, respectively, as shown in Figure 3. The red line is the cumulative probability curve of non-cloud pixels, and the blue line is that of cloud pixels. It can be observed that about 98% of the non-cloud pixels have a b value less than 0.176, and about 98% of the cloud pixels have a b value greater than 0.073.
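The two thresholds read off Figure 3 are percentiles of the empirical distributions of b. The sketch below assumes that arrays of b values for labeled cloud and non-cloud training pixels are already available; the function name is hypothetical. The lower threshold is the 2nd percentile of the cloud values (so 98% of cloud pixels lie above it), and the upper threshold is the 98th percentile of the non-cloud values.

```python
import numpy as np


def coarse_thresholds(b_cloud, b_noncloud, coverage=0.98):
    """Derive the two brightness thresholds from labeled training pixels.

    t_low:  value exceeded by `coverage` of the cloud pixels.
    t_high: value not exceeded by `coverage` of the non-cloud pixels.
    """
    t_low = np.percentile(b_cloud, 100 * (1 - coverage))
    t_high = np.percentile(b_noncloud, 100 * coverage)
    return float(t_low), float(t_high)
```

Applied to the paper's 24 training images, this procedure corresponds to the reported values of about 0.073 and 0.176; the sketch itself has only been checked on synthetic data.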

Therefore, for a superpixel $S_k$, we define a threshold function as follows:

$$f(S_k) = \frac{1}{N_k} \sum_{z \in S_k} b_z,$$

where $N_k$ is the number of pixels in the superpixel $S_k$, and $b_z$ is the brightness feature b of pixel z. If $f(S_k) < 0.073$, the superpixel $S_k$ is predicted as non-cloud; if $f(S_k) > 0.176$, the superpixel $S_k$ is predicted as cloud; otherwise, it is a potential cloud superpixel.
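The superpixel coarse classification can be sketched as follows, assuming a per-pixel brightness map `b` and an integer superpixel label map of the kind SLIC produces. The class codes and function name are hypothetical. The defaults 0.073 and 0.176 are the values reported above, applied so that superpixels whose mean b is below 0.073 are non-cloud and those above 0.176 are cloud.

```python
import numpy as np

NON_CLOUD, CLOUD, POTENTIAL = 0, 1, 2   # hypothetical class codes


def coarse_classify(b, labels, t_low=0.073, t_high=0.176):
    """Return one class code per superpixel id from a pixel brightness map b."""
    ids = labels.ravel()
    n_sp = ids.max() + 1
    sums = np.bincount(ids, weights=b.ravel(), minlength=n_sp)
    counts = np.bincount(ids, minlength=n_sp)
    f = sums / np.maximum(counts, 1)        # mean brightness of each superpixel
    cls = np.full(n_sp, POTENTIAL)
    cls[f < t_low] = NON_CLOUD
    cls[f > t_high] = CLOUD
    return cls
```

Using `np.bincount` computes all superpixel means in one pass instead of looping over superpixels.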

Figure 2c shows the superpixel coarse classification result of Figure 2b, where white represents the cloud superpixels, black represents the non-cloud superpixels and gray represents the potential cloud superpixels.

2.4. Identification of the Potential Cloud Superpixels

The potential cloud superpixels generally contain high-brightness ground objects and thin semitransparent clouds, which are difficult to identify using low-level spectral information. We use PCANet to extract high-level features of clouds, which are fed to a linear SVM classifier to obtain a better classification result.

2.4.1. A Brief Introduction to PCANet

PCANet is a simple deep learning network for image classification [52,53]. Compared with a CNN, the most important change in PCANet is that the data-adapting convolution filter bank in each stage is replaced by basic PCA filters, the nonlinear layer adopts the simplest binary quantization (hashing), and the feature pooling layer uses block-wise histograms of the binary codes, which are the final features extracted by the network. PCANet is easy to train and to adapt to different data and tasks, and it avoids the parameter training of traditional CNNs, which consumes much time and requires various ad hoc tricks [54,55].

PCANet is a cascaded network built on three basic data processing components: cascaded principal component analysis (PCA), binary hashing, and block-wise histograms. Suppose that there are N training images $\{I_i\}_{i=1}^{N}$ of size $h \times w$, and that the filter size is $k_1 \times k_2$ at all stages. For the i-th training image $I_i$, PCANet takes a $k_1 \times k_2$ patch around each pixel and collects all the vectorized mean-removed patches to form a matrix $\bar{X}_i = [\bar{x}_{i,1}, \bar{x}_{i,2}, \ldots, \bar{x}_{i,n}] \in \mathbb{R}^{k_1 k_2 \times n}$, where n is the number of patches extracted from $I_i$. By constructing the same matrix for all training images and combining them, we obtain

$$X = [\bar{X}_1, \bar{X}_2, \ldots, \bar{X}_N] \in \mathbb{R}^{k_1 k_2 \times Nn}.$$

Assume that the number of filters in the first layer is $L_1$. PCA minimizes the reconstruction error within a family of orthonormal filters, i.e.,

$$\min_{V \in \mathbb{R}^{k_1 k_2 \times L_1}} \left\| X - V V^T X \right\|_F^2, \quad \text{s.t. } V^T V = I_{L_1},$$

where $I_{L_1}$ is an identity matrix of size $L_1 \times L_1$. The solution is given by the $L_1$ principal eigenvectors of $X X^T$. Therefore, the PCA filters can be expressed as

$$W_l^1 = \mathrm{mat}_{k_1,k_2}\left(q_l(X X^T)\right) \in \mathbb{R}^{k_1 \times k_2}, \quad l = 1, 2, \ldots, L_1,$$

where $\mathrm{mat}_{k_1,k_2}(v)$ is a function that reshapes a vector $v \in \mathbb{R}^{k_1 k_2}$ to a matrix $W \in \mathbb{R}^{k_1 \times k_2}$, and $q_l(X X^T)$ denotes the l-th principal eigenvector of $X X^T$. Then, the l-th filter output of the first layer can be obtained by

$$I_i^l = I_i * W_l^1, \quad i = 1, 2, \ldots, N,$$

where * denotes 2D convolution. For each input image $I_i$, the first layer outputs $L_1$ images. Executing the same process as in the first layer on all the obtained $I_i^l$ yields the output of the second layer. Assuming that the number of filters in the second layer is $L_2$, PCANet outputs $L_1 L_2$ images for each input image. A deeper PCANet architecture can be built by repeating the above process to extract higher-level features; according to [52], two layers are sufficient for most tasks.
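One PCANet stage, PCA filter learning followed by the 2D convolution that produces the filter outputs, can be sketched in NumPy as below. This is a simplified sketch, not the authors' implementation. It handles single-band images only (a multi-band branch such as the 9-band input would vectorize k1 × k2 × C patches instead), collects only the patches that fit entirely inside the image, and uses zero padding so that each output keeps the input size. The function names are hypothetical, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def learn_pca_filters(images, k1, k2, n_filters):
    """Learn one stage of PCA filters from a list of 2-D images."""
    cols = []
    for img in images:
        patches = sliding_window_view(img, (k1, k2)).reshape(-1, k1 * k2)  # vectorized patches
        patches = patches - patches.mean(axis=1, keepdims=True)           # remove each patch mean
        cols.append(patches.T)                                            # X_i: (k1*k2, n)
    X = np.concatenate(cols, axis=1)                                      # X: (k1*k2, N*n)
    _, eigvec = np.linalg.eigh(X @ X.T)                                   # eigenvalues ascending
    leading = eigvec[:, ::-1][:, :n_filters]                              # L principal eigenvectors
    return leading.T.reshape(n_filters, k1, k2)                           # reshape each to k1 x k2


def conv2d_same(img, kernel):
    """2-D convolution with zero padding; the output has the same size as img."""
    k1, k2 = kernel.shape
    padded = np.pad(img, ((k1 // 2, (k1 - 1) // 2), (k2 // 2, (k2 - 1) // 2)))
    windows = sliding_window_view(padded, (k1, k2))
    return np.einsum("ijkl,kl->ij", windows, kernel[::-1, ::-1])           # flipped kernel = convolution
```

A second stage is obtained by calling `learn_pca_filters` again on all first-stage outputs `conv2d_same(I_i, W_l)`. Because `eigh` returns orthonormal eigenvectors, the learned filters satisfy the orthonormality constraint of the PCA objective.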

After the PCA filtering, a binary hashing step follows. This step binarizes the filter outputs and converts each group of $L_2$ binary outputs into a single integer-valued “image”. Subsequently, each of these images is divided into local blocks, the histogram of each block (with $2^{L_2}$ bins) is computed, and all the histograms are concatenated into one vector, which is the feature representation of the input image $I_i$. Finally, the classification result is determined by a linear SVM classifier.
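The binary hashing and block-wise histogram step can be sketched as follows for one first-layer output. The sketch follows the usual PCANet formulation, in which each of the L2 second-stage responses is binarized with a Heaviside step (1 for positive values) and weighted by a power of two, giving integers in [0, 2^L2 - 1]. Non-overlapping blocks are used, as in the settings reported in Section 3.1; dropping leftover border rows and columns (for example, 55 is not a multiple of 7) is an assumption of this sketch, and the function name is hypothetical.

```python
import numpy as np


def hash_and_histogram(stage2_outputs, block=7):
    """Binary hashing + block-wise histograms for one first-layer output.

    stage2_outputs: array (L2, H, W) with the L2 second-stage filter responses.
    Returns the concatenated histograms of non-overlapping block x block tiles.
    """
    L2, H, W = stage2_outputs.shape
    weights = 2 ** np.arange(L2).reshape(L2, 1, 1)           # 2^(l-1) for l = 1..L2
    T = (weights * (stage2_outputs > 0)).sum(axis=0)         # integer image in [0, 2^L2 - 1]
    feats = []
    for r in range(0, H - block + 1, block):                 # non-overlapping tiles
        for c in range(0, W - block + 1, block):
            tile = T[r:r + block, c:c + block]
            feats.append(np.bincount(tile.ravel(), minlength=2 ** L2))
    return np.concatenate(feats)
```

The full feature vector of an input image is the concatenation of these vectors over all L1 first-layer outputs.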

2.4.2. Our Designed Double-Branch PCANet

In this paper, a double-branch PCANet is designed, as shown in Figure 4. The input of the first branch is the 9-dimensional TOA reflectance data (Band 1–Band 9), which fuses the spectral information of the nine bands. The input of the second branch is five spectral feature maps, namely the Normalized Difference Snow Index (NDSI), NDVI, B5/B6, “Whiteness”, and the Haze Optimized Transformation (HOT), which are defined as:

In [21], Irish used the NDSI, NDVI and B5/B6 indexes to separate clouds from snow, vegetation and bright rocks, respectively. The “Whiteness” index was employed by Gomez-Chova et al. [56] to exclude pixels that are not white enough to be clouds. The HOT index was first proposed by Zhang et al. [57] and has been widely used for haze reduction and cloud detection. Zhu et al. [22] identified potential cloud pixels by combining five spectral feature maps. In this paper, we use the five spectral feature maps to describe the features of the potential cloud pixels. The two branches of the designed PCANet retrieve high-level features from the 9-dimensional TOA reflectance data and the 5-dimensional spectral feature data, respectively. Finally, these high-level features are fused and fed to a linear SVM classifier to obtain the final classification result.
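Building the 5-channel input of the second branch can be sketched as follows. The text names the five indices; the formulas used here are assumptions, namely the common Fmask-style definitions adapted to Landsat 8 band numbering: NDSI = (B3 - B6)/(B3 + B6), NDVI = (B5 - B4)/(B5 + B4), the ratio B5/B6, Whiteness as the summed relative deviation of Bands 2 to 4 from their mean, and HOT = B2 - 0.5·B4 - 0.08. They may differ from the authors' exact equations, and the function name is hypothetical.

```python
import numpy as np


def spectral_features(toa, eps=1e-6):
    """Five spectral feature maps from a (9, H, W) TOA stack; toa[0] is Band 1.

    Formulas are assumed Fmask-style Landsat 8 definitions, not the authors' own.
    """
    B2, B3, B4, B5, B6 = (toa[i - 1] for i in (2, 3, 4, 5, 6))
    ndsi = (B3 - B6) / (B3 + B6 + eps)                 # green vs. SWIR-1: snow
    ndvi = (B5 - B4) / (B5 + B4 + eps)                 # NIR vs. red: vegetation
    ratio56 = B5 / (B6 + eps)                          # NIR / SWIR-1: bright rocks
    mean_vis = (B2 + B3 + B4) / 3.0
    whiteness = sum(np.abs((B - mean_vis) / (mean_vis + eps)) for B in (B2, B3, B4))
    hot = B2 - 0.5 * B4 - 0.08                         # Haze Optimized Transformation
    return np.stack([ndsi, ndvi, ratio56, whiteness, hot])   # (5, H, W)
```

The small `eps` guards against division by zero over dark pixels and changes the index values only negligibly.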

Figure 2d shows the two-step superpixel classification result of Figure 2b.

2.5. Refinement with Fully Connected CRFs

After the steps in Section 2.3 and Section 2.4, each superpixel is classified as cloud or non-cloud, so the cloud detection result is superpixel-based. However, the regions around cloud boundaries are usually thin semitransparent clouds, and the superpixels there sometimes contain non-cloud pixels, which leads to misclassification. In [24,32,58], a guided filter was used to refine the detected cloud boundaries. However, the guided filter only utilizes local spatial information and the binary cloud detection result, ignoring global spatial information and the cloud probability of each pixel. In this paper, a global optimization strategy based on the fully connected CRF model [49] is applied to capture the thin clouds around cloud boundaries, yielding a more accurate refined cloud detection result.

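The model employs the energy function [59]:

$$E(\mathbf{x}) = \sum_i \theta_i(x_i) + \sum_{i<j} \theta_{ij}(x_i, x_j), \tag{18}$$

where $\mathbf{x}$ is the label assignment of the pixels, and $x_i$ and $x_j$ denote the labels of pixels i and j, respectively.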

The first term in Equation (18) is a unary potential, $\theta_i(x_i) = -\log P(x_i)$, where $P(x_i)$ is the probability of label $x_i$ at pixel i, derived from the cloud probability of pixel i. In Section 2.3, the superpixels are divided into three classes: cloud, non-cloud and potential cloud. The cloud probabilities of the pixels in cloud superpixels are set to 1, and those of the pixels in non-cloud superpixels are set to 0. For the pixels in potential cloud superpixels, the cloud probabilities are obtained from the output of the SVM in Section 2.4, normalized to [0, 1].

The second term in Equation (18) is a pairwise potential, which combines two Gaussian kernels defined on the colors and positions of pixels i and j:

$$\theta_{ij}(x_i, x_j) = \mu(x_i, x_j)\left[\omega_1 \exp\left(-\frac{\|p_i - p_j\|^2}{2\sigma_\alpha^2} - \frac{\|I_i - I_j\|^2}{2\sigma_\beta^2}\right) + \omega_2 \exp\left(-\frac{\|p_i - p_j\|^2}{2\sigma_\gamma^2}\right)\right],$$

where $\mu(x_i, x_j)$ is a binary function that equals 0 when pixel i has the same label as pixel j, and 1 otherwise. $p_i$ and $I_i$ are the position and color vector (Bands 6, 3 and 2 in this paper) of pixel i. The first, bilateral kernel depends on both pixel position and color, which forces nearby pixels with similar colors to have the same label; the second kernel depends only on pixel position and considers only spatial proximity when enforcing smoothness. The parameters $\omega_1$ and $\omega_2$ are the linear combination weights of the two Gaussian kernels, and $\sigma_\alpha$, $\sigma_\beta$ and $\sigma_\gamma$ control the scales of the Gaussian kernels.

Minimizing the CRF energy function $E(\mathbf{x})$ in Equation (18) yields the most probable label assignment $\mathbf{x}$ for the given image. Since exact minimization is intractable, a mean field approximation $Q(\mathbf{x})$ to the CRF distribution $P(\mathbf{x})$ is used for approximate maximum posterior marginal inference.
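The mean field inference described above can be illustrated with a deliberately naive sketch. It assumes a binary Potts compatibility (a penalty of 1 when two pixels take different labels and 0 otherwise, as in the pairwise potential described above), builds the full N × N kernel matrix, and is therefore only suitable for tiny arrays; the efficient inference of Krähenbühl and Koltun relies on high-dimensional (permutohedral lattice) filtering instead. The function and parameter names (`w1`, `s_alpha`, `s_beta`, `w2`, `s_gamma`) are hypothetical, and the values in the usage check are illustrative rather than the paper's tuned parameters.

```python
import numpy as np


def dense_crf_naive(prob, color, w1, s_alpha, s_beta, w2, s_gamma, n_iter=20, eps=1e-6):
    """Naive mean field inference for a binary fully connected CRF.

    prob:  (H, W) cloud probability per pixel.
    color: (H, W, C) color features used by the bilateral kernel.
    Returns the approximate marginal Q(cloud) and the label map (1 = cloud).
    """
    h, w = prob.shape
    p = np.clip(prob.reshape(-1), eps, 1 - eps)               # avoid log(0)
    unary = -np.log(np.stack([1 - p, p], axis=1))             # (N, 2): [non-cloud, cloud]

    ys, xs = np.mgrid[0:h, 0:w]
    pos = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)
    col = color.reshape(h * w, -1).astype(float)
    d_pos = ((pos[:, None, :] - pos[None, :, :]) ** 2).sum(-1)    # squared position distances
    d_col = ((col[:, None, :] - col[None, :, :]) ** 2).sum(-1)    # squared color distances
    k = (w1 * np.exp(-d_pos / (2 * s_alpha**2) - d_col / (2 * s_beta**2))  # bilateral kernel
         + w2 * np.exp(-d_pos / (2 * s_gamma**2)))                         # smoothness kernel
    np.fill_diagonal(k, 0.0)                                   # no self-interaction

    q = np.exp(-unary)
    q /= q.sum(axis=1, keepdims=True)                          # initialize Q from the unary term
    for _ in range(n_iter):
        msg = k @ q                                            # msg[i, l] = sum_j k_ij * Q_j(l)
        pairwise = msg[:, ::-1]                                # Potts: cost of neighbors with the other label
        logits = -unary - pairwise
        logits -= logits.max(axis=1, keepdims=True)            # numerical stability
        q = np.exp(logits)
        q /= q.sum(axis=1, keepdims=True)
    return q[:, 1].reshape(h, w), q.argmax(axis=1).reshape(h, w)
```

On an 8 × 8 example whose left half has cloud probability 0.8 and right half 0.2, with one left-half pixel at 0.4 and colors that differ between the halves, this sketch relabels the 0.4 pixel as cloud because its similarly colored neighbors dominate the pairwise term.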

Figure 2e shows the fully connected CRF refinement result of Figure 2d.

3. Results and Discussion

In this paper, a cloud detection framework is proposed for multispectral remote sensing images. It is implemented in MATLAB R2016a on a computer with an Intel Core i7-6700K CPU at 4.00 GHz and 32 GB of RAM.

The experimental images are from the L8 CCA dataset [51], which contains 96 images with ground truth; the effective image size is approximately 6400 × 6400 pixels. These images cover eight biomes (Barren, Forest, Grass/Cropland, Shrubland, Snow/Ice, Urban, Water, and Wetlands), with 12 scenes per biome. The images are divided into two parts: 24 images (three per biome) for training and the remaining 72 for testing. The 24 training images are also used to determine the thresholds in Section 2.3 for the coarse classification of superpixels.

We used four metrics to quantitatively evaluate the performance of the methods: the Right Rate (RR), Error Rate (ER), False Alarm Rate (FAR), and the ratio of RR to ER (RER). They are defined as:

$$\mathrm{RR} = \frac{CC}{TC}, \qquad \mathrm{ER} = \frac{CN + NC}{TP}, \qquad \mathrm{FAR} = \frac{NC}{TP}, \qquad \mathrm{RER} = \frac{\mathrm{RR}}{\mathrm{ER}},$$

where CC denotes the number of cloud pixels detected as cloud pixels, TC denotes the total number of cloud pixels in the ground truth, CN denotes the number of cloud pixels detected as non-cloud pixels, NC denotes the number of non-cloud pixels detected as cloud pixels, and TP denotes the total number of pixels in the input image.

According to the definition, it is obvious that RR represents the information of correctly detected clouds, ER provides us the information about the errors, and FAR indicates the information of incorrectly detected clouds. Using only one of them to evaluate algorithms is insufficient, as some methods may obtain high RR but also high ER. On the contrary, some methods may obtain low FAR but also low RR. Therefore, RER is defined to obtain an integrated result as it considers the RR and ER. A higher value of RER denotes better cloud detection result.
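The four metrics can be computed from a predicted and a ground-truth cloud mask as below, assuming the definitions RR = CC/TC, ER = (CN + NC)/TP, FAR = NC/TP and RER = RR/ER. The function name is hypothetical. For a cloud-free scene such as a snow-only image, TC = 0 and RR is undefined; NumPy then returns nan or inf with a warning rather than raising an error.

```python
import numpy as np


def cloud_metrics(pred, gt):
    """RR, ER, FAR and RER from boolean cloud masks (True = cloud)."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    CC = np.sum(pred & gt)        # cloud pixels detected as cloud
    CN = np.sum(~pred & gt)       # cloud pixels detected as non-cloud
    NC = np.sum(pred & ~gt)       # non-cloud pixels detected as cloud
    TC = np.sum(gt)               # cloud pixels in the ground truth
    TP = gt.size                  # all pixels in the image
    RR = CC / TC                  # undefined (nan) when the scene has no cloud
    ER = (CN + NC) / TP
    FAR = NC / TP
    RER = RR / ER                 # inf when ER is 0
    return RR, ER, FAR, RER


# 10 pixels, 4 cloudy: one cloud pixel missed, one false alarm.
print(cloud_metrics([1, 1, 1, 0, 1, 0, 0, 0, 0, 0], [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]))
```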

3.1. Training and Detection

For the proposed cloud detection framework, as shown in Figure 1, we need to train the PCANet combined with a linear SVM classifier and determine the optimal parameters of the fully connected CRF model.

In the training stage, superpixels are extracted from the 24 training images using the SLIC method. Superpixel coarse classification yields 140,000 potential cloud superpixels. From these 140,000 superpixels, whose ground truth is known, we randomly extract 35,000 cloud patches as positive samples and 35,000 non-cloud patches as negative samples, with a patch size of 55 × 55. All 70,000 samples are used to train the designed double-branch PCANet, with the following parameter settings: two layers, a 7 × 7 filter patch size, eight filters in each layer, and 7 × 7 non-overlapping blocks for the block-wise histograms.

In the refinement post-processing stage, we use the default values of $\omega_2$ and $\sigma_\gamma$ and search for the best values of $\omega_1$, $\sigma_\alpha$ and $\sigma_\beta$ by cross-validation on the training set; the final values of $\omega_1$, $\sigma_\alpha$ and $\sigma_\beta$ are 10, 300 and 3, respectively. We employ 20 mean field iterations in this paper.

In the detection stage, superpixels are first obtained from the test image using the SLIC method. Then, we calculate the brightness feature of the superpixels and divide them into cloud, non-cloud and potential cloud with the spectral threshold function. For each potential cloud superpixel, an image patch (55 × 55) centered at its geometric center pixel is extracted and fed into the trained PCANet model to predict the class of the superpixel. Merging the classification results of all superpixels in the test image gives the superpixel-based cloud detection result. Finally, a fully connected CRF model is employed to refine the cloud detection result and obtain accurate cloud borders.
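Extracting the 55 × 55 input patch for a potential cloud superpixel can be sketched as follows. The assumptions of this sketch, not stated in the text, are that the geometric center is the rounded centroid of the superpixel's pixel coordinates and that patches near the image border are completed with zero padding. For strongly non-convex superpixels the centroid may fall outside the superpixel itself. The function name is hypothetical.

```python
import numpy as np


def center_patch(image, labels, sp_id, size=55):
    """Extract a size x size patch centered on a superpixel's geometric center.

    image: (H, W, C) array; labels: (H, W) superpixel ids.
    Border pixels are handled by zero padding (an assumption of this sketch).
    """
    rows, cols = np.nonzero(labels == sp_id)
    r0 = int(round(rows.mean()))            # centroid row
    c0 = int(round(cols.mean()))            # centroid column
    half = size // 2
    padded = np.pad(image, ((half, half), (half, half), (0, 0)))
    # In padded coordinates the center sits at (r0 + half, c0 + half).
    return padded[r0:r0 + size, c0:c0 + size]
```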

3.2. Effectiveness of Designed PCANet Architecture

Compared with the traditional CNNs, PCANet is simpler and faster. In this paper, a double-branch PCANet architecture is designed to extract the features of the potential clouds, and using these features, a linear SVM classifier is trained to classify the potential clouds.

In order to verify the effectiveness of the designed double-branch PCANet architecture, we compare it with three CNN architectures, namely AlexNet [38], VGG-16 [39] and ResNet-20 [40], and with two single-branch PCANets (the two branches in Figure 4). The inputs of the three compared CNNs are the 9-dimensional TOA reflectance data. Since this experiment verifies the performance of different network architectures, it does not include the superpixel coarse classification or the CRF refinement process. We extract 80,000 cloud patches and 320,000 non-cloud patches of size 55 × 55 from the 24 training images as the training set for AlexNet, VGG-16 and ResNet-20. Since PCANet does not need a large training set, we extract 35,000 cloud patches and 35,000 non-cloud patches to train it.

Table 2 shows the statistical results of the different network architectures on the test set. Compared with the three CNNs, the 9-dimensional single-branch PCANet has the best ER, FAR and RER values, and its RR, equal to that of ResNet-20, is also the best. Therefore, the 9-dimensional single-branch PCANet outperforms the three compared CNNs. Of the two single-branch PCANets, the 5-dimensional one performs worse than the 9-dimensional one because its spectral input is incomplete. Combining the two single-branch PCANets gives our final double-branch PCANet, which obtains better cloud detection results than either single-branch PCANet. Overall, with the highest RR and RER and the lowest ER, our double-branch PCANet outperforms the five compared network architectures.

3.3. Effectiveness of the Proposed Cloud Detection Framework

A cloud detection framework is proposed in this paper. First, we separate the obvious cloud and non-cloud superpixels from the potential cloud superpixels by superpixel coarse classification. Then, the designed double-branch PCANet is used to identify the potential cloud superpixels. Finally, the fully connected CRF is used to refine the cloud detection result, giving the final, finer result. To evaluate the effectiveness of the proposed framework, we compare it with three other frameworks: the simple double-branch PCANet (without superpixel coarse classification or the refinement process), the two-step superpixel classification strategy (superpixel coarse classification combined with the double-branch PCANet, without the refinement process), and the two-step superpixel classification strategy combined with a guided-filter-based refinement process. Here, the simple double-branch PCANet is exactly the double-branch PCANet used in Section 3.2, and the other two compared frameworks share the training stage of our final cloud detection framework.

Figure 5 shows two cloud detection instances, where (a1,a2) are the cloudy images composited from Bands 6, 3 and 2, (b1,b2) are the ground truth with cloud regions shown in white, and (c1,c2) to (f1,f2) are the results of the three compared methods and our proposed final framework. The cloud in (a1) of Figure 5 is thick and clumpy, and the cloud boundaries detected by the first two compared methods are not smooth and show blocking artifacts, because these two methods operate on superpixel sub-regions. In contrast, the last two methods (the two-step strategy combined with the guided filter, and our method) use the guided filter and the CRF, respectively, to correct misclassified pixels at the pixel level, so their results have more accurate cloud boundaries. The cloud in (a2) of Figure 5 is fractional. When a superpixel contains much more non-cloud region than thin, small cloud region, it will probably be classified as non-cloud, and the cloud pixels in it are missed. Conversely, when a superpixel contains much more cloud region than non-cloud region, it will probably be classified as cloud, and the non-cloud pixels in it are falsely detected. Therefore, the first two compared superpixel-based methods cause missed and false detections, as seen in (c2,d2) of Figure 5. When the two-step strategy is combined with the guided filter, the missed and falsely detected pixels around the boundaries of the cloud regions are corrected to some extent. However, because the guided filter considers only local information, misclassified pixels are still obvious. Our method uses the globally optimized CRF model to refine the cloud detection result, and a more accurate result is obtained.

Table 3 shows the statistical results of the three compared cloud detection frameworks and the proposed framework on the test set; its last column gives the average detection time per image. In the two-step superpixel classification, the first-step threshold method separates the easily distinguished superpixels from the potential cloud superpixels, and the second-step PCANet focuses on the potential cloud superpixels. The two-step superpixel classification achieves a better cloud detection result than the simple double-branch PCANet, with only 52.3% of its time cost. The guided filter slightly improves the detection accuracy of the two-step superpixel classification, but greatly increases its time cost. In contrast, our proposed framework uses CRF to correct the misclassified pixels, which clearly improves the accuracy. In addition, our proposed framework takes only 26.7% more time than the two-step superpixel classification and still saves 33.7% of the time compared with the simple double-branch PCANet. Since it also yields the best detection result, our final framework outperforms the other three methods.
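The relative timings quoted above are mutually consistent. Writing $t_s$, $t_2$ and $t_o$ for the average per-image times of the simple double-branch PCANet, the two-step superpixel classification and the proposed framework (symbols introduced here only for this check):

$$\frac{t_2}{t_s} = 0.523, \qquad \frac{t_o}{t_2} = 1.267 \quad\Rightarrow\quad \frac{t_o}{t_s} = 0.523 \times 1.267 \approx 0.663,$$

so the proposed framework needs about 66.3% of the time of the simple double-branch PCANet, a saving of about 33.7%.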

3.4. Comparison with Other Cloud Detection Methods

We compare our method with five other cloud detection methods: Zhu’s method [23], Yang’s method [24], Li’s method [32], Shi’s method [44] and Goff’s method [45]. The first three methods are unsupervised, and the last two are based on deep learning. For Zhu’s method, we used the Fmask 3.2 implementation (downloaded from http://ftp-earth.bu.edu/public/zhuzhe/Fmask_Windows_3.2v/). For Shi’s method, we used the implementation released by Shi et al. (downloaded from http://xfy.buaa.edu.cn/). We reproduced Yang’s method, Li’s method and Goff’s method using their default parameter values.

Figure 6 shows several cloud detection examples. In the figure, (a1–a3) are the original color composite images, (b1–b3) are the ground truth with cloud shown in white, and (c1–c3)–(h1–h3) are the results of the five compared methods and our method. The second image (a2) contains both cloud and snow, which are easily confused with each other, and the last image (a3) contains only snow, without cloud. Among the three unsupervised methods, Li’s method fails completely on the second and third images, where it misidentifies the snow pixels as cloud. Zhu’s method misses many cloud pixels in the second image and falsely detects the snow pixels as cloud in the last image. Yang’s result is better than Li’s and Zhu’s, but its false detection is obvious in the last image. Of the two deep-learning-based methods, Shi’s method produces obvious false detections in the first image. Goff’s method gives a result similar to ours, but it causes slight false detection in the last image. Overall, our cloud detection result is the best among the six methods.

Table 4 lists the statistical results of the different methods on the test set. Li’s method has the best RR, but its ER and FAR are rather high, which means that many pixels in its results are misidentified as cloud. Our RR is higher than those of the other four compared methods. In addition, our method achieves the best values of ER, FAR and RER, and our RER in particular is much higher than those of the other five cloud detection methods. Therefore, our method clearly outperforms the compared methods overall.
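The toy sketch below shows how a method can have the best RR and still rank poorly overall. It assumes the commonly used definitions RR = CC/GC, ER = (CN + NC)/TP, FAR = NC/DC and RER = RR/ER. Here CN is the number of cloud pixels missed and NC is the number of non-cloud pixels detected as cloud. These definitions are an assumption and should be checked against the metric definitions given earlier in the paper. The masks are hypothetical and are not taken from the test set.

```python
import numpy as np

def cloud_metrics(pred, truth):
    """RR, ER, FAR and RER for binary masks (1 = cloud, 0 = non-cloud).

    ASSUMED definitions, to be checked against the paper's metric section:
      RR  = CC / GC          cloud pixels correctly detected / ground-truth cloud pixels
      ER  = (CN + NC) / TP   (missed cloud + false cloud pixels) / total pixels
      FAR = NC / DC          false cloud pixels / pixels detected as cloud
      RER = RR / ER
    """
    cc = np.sum((pred == 1) & (truth == 1))
    cn = np.sum((pred == 0) & (truth == 1))   # cloud pixels missed
    nc = np.sum((pred == 1) & (truth == 0))   # non-cloud pixels detected as cloud
    rr = cc / np.sum(truth == 1)
    er = (cn + nc) / truth.size
    far = nc / np.sum(pred == 1)
    return rr, er, far, rr / er

# Hypothetical 1-D masks: 4 cloud pixels followed by 6 non-cloud pixels.
truth = np.array([1, 1, 1, 1, 0, 0, 0, 0, 0, 0])
over = np.array([1, 1, 1, 1, 1, 1, 1, 0, 0, 0])      # catches all cloud, plus 3 false alarms
balanced = np.array([1, 1, 1, 0, 0, 0, 0, 0, 0, 0])  # misses 1 cloud pixel, no false alarms

rr_o, er_o, far_o, rer_o = cloud_metrics(over, truth)      # RR = 1.0,  ER = 0.3, FAR = 3/7, RER = 10/3
rr_b, er_b, far_b, rer_b = cloud_metrics(balanced, truth)  # RR = 0.75, ER = 0.1, FAR = 0.0, RER = 7.5
```

The over-detecting mask wins on RR alone, but its false alarms raise ER and FAR, and this lowers its RER.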

3.5. Accuracy Assessment of Cloud and Cloud Shadow Detection

Cloud shadows always occur in pairs with clouds, and they are also important for remote sensing applications. Our method can detect clouds and cloud shadows simultaneously when the designed double-branch PCANet is trained with cloud, cloud shadow and non-cloud samples. However, the brightness feature b of cloud shadow pixels has a range similar to that of non-cloud pixels, so the brightness feature cannot separate cloud shadows from non-cloud pixels. Therefore, the superpixel coarse classification step is removed from the detection framework here.

Some images in the L8 CCA dataset have both clouds and cloud shadows manually labeled. We selected eight of these images for the experiments, five for training and three for testing (the Landsat IDs of the eight images are given in Table A2). We randomly extracted 10,000 cloud samples, 5000 cloud shadow samples and 15,000 non-cloud samples from the five training images, and all 30,000 samples were used to train the designed double-branch PCANet.
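The class-wise random sampling described above can be sketched as follows. This is an illustration under several assumptions, not the authors' code:

- The label encoding (0 non-cloud, 1 cloud shadow, 2 cloud) is hypothetical.
- Samples are drawn without replacement from the pixels of all training images pooled together.
- Only pixel positions are returned. Extracting the input patches that the double-branch PCANet uses around each position is not shown.

```python
import numpy as np

# Hypothetical label encoding for the manually labeled training images.
NON_CLOUD, SHADOW, CLOUD = 0, 1, 2
# Per-class sample counts used in this section (30,000 in total).
COUNTS = {CLOUD: 10_000, SHADOW: 5_000, NON_CLOUD: 15_000}

def sample_pixels(label_maps, counts, seed=0):
    """Draw counts[label] distinct pixels of each class, pooled over all images.

    label_maps : list of 2-D integer label arrays, one per training image
    Returns an (N, 4) integer array with rows (image_index, row, col, label).
    """
    rng = np.random.default_rng(seed)
    blocks = []
    for label, n in counts.items():
        # All (image, row, col) positions of this class across the training images.
        pos = np.concatenate([
            np.column_stack((np.full(np.count_nonzero(lm == label), i),
                             *np.nonzero(lm == label)))
            for i, lm in enumerate(label_maps)
        ])
        # Sample without replacement; raises ValueError if a class has too few pixels.
        picks = rng.choice(len(pos), size=n, replace=False)
        blocks.append(np.column_stack((pos[picks], np.full(n, label))))
    return np.vstack(blocks)

# Toy example with two tiny label maps (real inputs would be full Landsat 8 scenes).
maps = [np.array([[0, 0, 2], [1, 2, 0]]), np.array([[2, 0, 1], [0, 1, 2]])]
samples = sample_pixels(maps, {CLOUD: 3, SHADOW: 2, NON_CLOUD: 4})
print(samples.shape)  # (9, 4)
```

For the real experiment, the call would be `sample_pixels(training_label_maps, COUNTS)`, where `training_label_maps` is a hypothetical list holding the five labeled training images.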

In this section, five metrics are used to assess the accuracy of the cloud and cloud shadow detection results: Overall Accuracy (OA), Precision of cloud (Precision_c), Recall of cloud (Recall_c), Precision of cloud shadow (Precision_s) and Recall of cloud shadow (Recall_s). OA assesses the overall accuracy over all three classes (cloud, cloud shadow and non-cloud). Precision_c and Recall_c assess the cloud accuracy, and Precision_s and Recall_s assess the cloud shadow accuracy. They are defined as follows:

$$\mathrm{OA} = \frac{CC + SS + NN}{TP}$$

$$\mathrm{Precision}_{c} = \frac{CC}{DC}, \qquad \mathrm{Recall}_{c} = \frac{CC}{GC}$$

$$\mathrm{Precision}_{s} = \frac{SS}{DS}, \qquad \mathrm{Recall}_{s} = \frac{SS}{GS}$$

where CC, SS and NN denote the numbers of pixels correctly detected as cloud, cloud shadow and non-cloud, respectively; TP denotes the total number of pixels in the image; DC and DS denote the numbers of pixels detected as cloud and cloud shadow, respectively; and GC and GS denote the numbers of cloud pixels and cloud shadow pixels in the ground truth, respectively.
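The five metrics can be computed directly from a predicted label map and its ground truth. In the minimal NumPy sketch below, the label encoding is a hypothetical assumption. `TP` keeps the paper's meaning of the total number of pixels, not true positives.

```python
import numpy as np

NON_CLOUD, SHADOW, CLOUD = 0, 1, 2  # hypothetical label encoding

def cloud_shadow_metrics(pred, truth):
    """OA, Precision_c, Recall_c, Precision_s and Recall_s from two label maps.

    NumPy returns nan or inf (with a RuntimeWarning) if a denominator is zero,
    e.g. when no pixel is predicted as cloud shadow.
    """
    cc = np.sum((pred == CLOUD) & (truth == CLOUD))           # CC
    ss = np.sum((pred == SHADOW) & (truth == SHADOW))         # SS
    nn = np.sum((pred == NON_CLOUD) & (truth == NON_CLOUD))   # NN
    return {
        "OA": (cc + ss + nn) / truth.size,           # TP = total number of pixels
        "Precision_c": cc / np.sum(pred == CLOUD),   # DC
        "Recall_c": cc / np.sum(truth == CLOUD),     # GC
        "Precision_s": ss / np.sum(pred == SHADOW),  # DS
        "Recall_s": ss / np.sum(truth == SHADOW),    # GS
    }

# Hypothetical 8-pixel example.
truth = np.array([2, 2, 2, 1, 1, 0, 0, 0])
pred = np.array([2, 2, 1, 1, 0, 0, 0, 2])
m = cloud_shadow_metrics(pred, truth)
# OA = 5/8, Precision_c = Recall_c = 2/3, Precision_s = Recall_s = 1/2
```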

We compare our method with two other cloud and cloud shadow detection methods: Zhu’s method [23] and Li’s method [32].

Figure 7 shows several cloud and cloud shadow detection examples. In the figure, (a1–a3) are the original images, (b1–b3) are the ground truth, and (c1–c3)–(e1–e3) are the results of the two compared methods and our method. In the ground truth, white represents cloud, gray represents cloud shadow and black represents non-cloud. All three methods effectively detect the clouds and their shadows, and for all of them, cloud detection is more accurate than cloud shadow detection. The statistical results in Table 5 confirm these observations. Our method obtains the highest OA, Precision_c, Precision_s and Recall_s, which indicates that the proposed method is effective for cloud and cloud shadow detection.

4. Conclusions

In this paper, a novel cloud detection method is proposed for multispectral remote sensing images from Landsat 8. In the color composite image of Bands 6, 3 and 2, clouds are conspicuous and easily distinguished from snow and ice, so the SLIC method is first used to cluster this composite image into superpixel sub-regions. Among these superpixels, bright and thick cloud superpixels, as well as obvious non-cloud superpixels, are easily identified. Therefore, we use a threshold function in the brightness feature space to separate the obvious cloud and non-cloud superpixels from the potential cloud superpixels, which speeds up the detection. Potential cloud superpixels usually include dark clouds, semi-transparent thin clouds and bright non-cloud objects, which are easily misclassified. For these superpixels, a double-branch PCANet is designed to extract high-level cloud features. A linear SVM classifier then uses these features to predict their classes. Through this two-step superpixel classification strategy, every superpixel in the image is labeled as cloud or non-cloud. Because a sub-region-based method inevitably leaves some misclassified pixels, a fully connected CRF model is employed as a post-processing step to refine the final classification result. Because the CRF operates at the pixel level and its optimization is global, the final extracted cloud borders are more accurate. Quantitative and qualitative experiments are performed on the L8 CCA dataset. The results demonstrate that, compared with other state-of-the-art methods, the proposed method can correctly detect clouds in different scenes. In addition, it can effectively distinguish clouds from snow and ice. In the future, we will take more information into consideration to further improve the accuracy of our cloud detection method. For example, we will give further consideration to cloud shadows, as they always occur in pairs with clouds. We will also adapt our work to detect thin cloud, thick cloud, cloud shadow, ice/snow and water simultaneously.
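The two-step superpixel classification summarized above can be sketched as follows. The sketch shows the control flow only and rests on several assumptions:

- The paper's threshold function in the brightness feature space is replaced by two hypothetical thresholds, `t_low` and `t_high`, on the mean superpixel brightness.
- The PCANet + linear SVM stage is abstracted as a caller-supplied function, `classify_potential`.
- The CRF refinement is not shown.

The superpixel map could come from any SLIC implementation, for example `skimage.segmentation.slic`.

```python
import numpy as np

def two_step_classify(segments, brightness, classify_potential, t_low, t_high):
    """Simplified two-step superpixel classification (hypothetical thresholds).

    segments           : 2-D array of superpixel ids (e.g. from SLIC)
    brightness         : 2-D array, per-pixel brightness feature
    classify_potential : callable(array_of_ids) -> array of 0/1 labels;
                         stands in for the PCANet + linear SVM stage
    Returns a pixel-level 0/1 cloud mask, before any CRF refinement.
    """
    ids = np.unique(segments)  # sorted superpixel ids
    mean_b = np.array([brightness[segments == i].mean() for i in ids])
    # Step 1: bright superpixels are obvious cloud, dark ones obvious non-cloud.
    labels = np.where(mean_b >= t_high, 1, 0)
    potential = (mean_b > t_low) & (mean_b < t_high)
    # Step 2: only the potential cloud superpixels go to the costlier classifier.
    if potential.any():
        labels[potential] = classify_potential(ids[potential])
    # Map superpixel labels back to pixels; ids are sorted, so searchsorted gives the index.
    return labels[np.searchsorted(ids, segments)]

# Toy example: 4 superpixels and a stand-in classifier that marks superpixel 3 as cloud.
segments = np.array([[0, 0, 1, 1], [2, 2, 3, 3]])
brightness = np.array([[0.9, 0.8, 0.5, 0.5], [0.1, 0.2, 0.4, 0.6]])
mask = two_step_classify(segments, brightness,
                         lambda ids: (ids == 3).astype(int), t_low=0.3, t_high=0.7)
print(mask)  # [[1 1 0 0]
             #  [0 0 1 1]]
```

The cheap threshold step resolves superpixels 0 and 2. Only the ambiguous superpixels 1 and 3 reach the classifier, and this is where the time savings reported for the two-step strategy come from.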