1. Introduction

Small commercial UAVs–often referred to as drones–are conspicuous newcomers providing easy cost-efficient measurements for use in remote sensing science. Presently, most small commercial UAVs on the market have the ability to fly autonomously and to survey crops from overhead for detailed observation. Particularly for unobstructed areas free of interference, such as in sparsely populated agricultural and forestry land, UAVs on autopilot can fly much more widely and safely for acquiring significant sensing imagery. When using automatic flight, it is possible for a UAV to resume flight from a break waypoint for battery replacement, allowing the photogrammetry area to be dozens of hectares or more. The acquired high-resolution orthomosaic imagery gives researchers and growers enormous advantages for accurate observation and measurement in agricultural and environmental applications. In a few recent studies, UAV-based high-resolution imagery was proposed for estimating the plant density of wheat (

Triticum aestivum L.) [

1], ascertaining the canopy height and aboveground biomass of maize (

Zea mays L.) [

2] or grassland [

3], predicting the yield of rice (

Oryza sativa L.) [

4,

5] or wheat [

6], quality assessment of digital surface models (DSMs) [

7], and land cover classification [

8]. Many more applications of UAVs for agroforestry and environmental monitoring can be found in recent review papers [

9,

10,

11].

The observation and assessment of the overall plant growth in a local area requires a fusion of technologies with regard to UAVs, image processing, and crop growth diagnosis [

9,

10]. Technological advancements such as automatic flight and user-friendly controls for small commercial UAVs have enabled convenient one-man operation of UAVs flying over fields on-demand. As proposed in [

12], the high-resolution images provide substantially more significant signals for perceiving or predicting the physiological variations during plant vegetation processes. Flying small UAVs at low altitude, the on-board camera or multispectral sensor can easily capture very high-resolution images with spatial resolution better than 10 cm ground sampling distance (GSD).

The attached camera or sensor type is determined by the objectives of the monitoring and assessment of plant growth. Except certain active sensors such as LiDAR for detecting canopy height [

13], most UAV sensors are referred to as RGB, near-infrared (NIR), multispectral, hyperspectral, or thermal cameras. The RGB cameras are typically applied for acquiring visual imagery of plant growth; assessing plant density, canopy height, and amount of biomass; mapping plant area [

14]; and approximately observing crop nitrogen status [

15]. Nevertheless, images captured by RGB cameras are not effective for assessing vegetation characteristics, owing to the absence of information on the invisible light spectrum. A lightweight multispectral camera is generally designed for capturing the distinctive reflectance signal of plants in several narrow bands of the visible, red edge, or NIR regions. It exhibits the advantages of lower cost and more convenient operation than a hyperspectral camera, which captures accurate and particular signals for diversified applications [

16,

17]. Furthermore, the narrow band sensors of a multispectral camera are more sensitive to subtle variation in crop health than that of a modified NIR camera [

18]. At present, similar to RGB cameras, multispectral cameras offer sufficient capability for monitoring and assessing plant growth [

10].

Because the reflectance values of plants vary in response to environmental changes in sunshine irradiance, solar angle [

19], soil background, and water glint [

20,

21], reducing sampling noise due to these environmental changes for UAV-based measurement is crucial for accurate and stable data acquisition. This is particularly so for long-term measurement during an entire crop-growing season. Several multispectral cameras such as compact Parrot Sequoia, MicaSense RedEdge, and RedEdge-M are equipped with a light sensor that can record both reflectance and incident solar radiation. The reflectance deviation related to changes in sunshine or cloud influence is calibrated in the subsequent image processing; thus, the reflectance values become more reliable. Researchers have recently observed the advantages of these compact multispectral cameras and contributed technical experience [

22] (in preprint) and applications for hierarchical land cover and vegetation classification [

23], monitoring post-fire vegetation regeneration in large and heterogeneous burned areas of approximately 3000 ha [

24], and observing wheat phenotypes based on vegetation indices (VIs) [

6,

25].

With spectral images captured by a multispectral camera, it is convenient to create an index map of spectral VIs such as NDVI and normalized difference red edge index (NDRE) [

21,

26]. The high spatial resolution VIs are subsequently analyzed and segmented in order to detect growth diversity [

27] or predict yield and optimal harvesting time [

6]. They are particularly significant for monitoring relatively small crop plants such as rice, wheat, and soybean (

Glycine max. (L.)

Merr.), which are widely cultivated in flat lowland areas. Referring to the spectral VIs as well as the RGB images, growers or agronomists are capable of observing the overall growth status in the field and rapidly identifying problem areas of stress, disease occurrence, or nutrient deficiency.

Because crop growth status always varies with environmental changes over time, analyzing the time-series variation in crop growth requires long-term measurement of the crop plants during an entire crop-growing season. An ideal solution is use of long-term time-series of satellite imagery [

28,

29,

30] or aerial photographs from manned aircraft [

16,

27]. The use of the satellite imagery could be applicable to very large-scale farming, but it does not seem realistic for smallholder farming due to lack of flexibility for on-demand application. The smallholder farming accounts for the greatest proportion in our study area in the southwestern Japan. The average cultivated land size for single farm entity in this area is 1.8 ha [

31], which is ordinarily comprised of several small and dispersed fields. For the smallholder farming, even using the satellite imagery with high resolution of 1 m GSD such as WorldView series multispectral imagery [

32,

33], the spatial resolution is not sufficient to differentiate the growth status between these small fields. An easy and on-demand UAV-based system offering very high-spatial resolution imagery is adequate to observe and assess the crop growth for the smallholder farming.

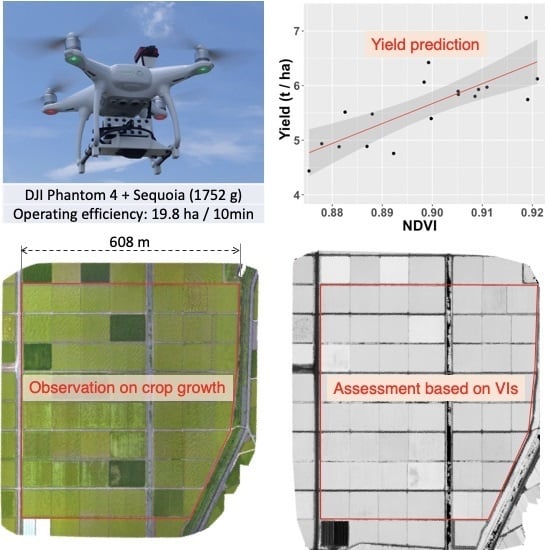

In this study, consequently, we put emphasis on (1) constructing the small UAV-based multispectral sensing system and assessing its operating efficiency at different above-ground levels (AGLs); (2) developing a batch image alignment (BIA) algorithm for aligning and quantifying the NDVI values from a batch of index maps generated with or without use of ground control points (GCPs); (3) evaluating UAV-derived high resolution NDVI for predicting yield, and (4) estimating the effects of nitrogen fertilizer treatment on NDVI values. The main targets in the study were two lowland crops, rice and wheat, used in a double cropping system within the study area.

2. Materials and Methods

2.1. High-Resolution Aerial Imagery Acquisition

The high-resolution aerial images were captured by an on-board RGB camera and multispectral camera attached on each of the following two types of UAVs: DJI Phantom 4 and DJI Phantom 4 Pro (DJI, Hong Kong, China) (

Table 1). The DJI Phantom 4 Pro has weight approximately equal to that of the DJI Phantom 4, whereas the on-board camera sensor differs in CMOS size, lens, focal length, and image resolution. The multispectral sensor of the Parrot Sequoia was mounted on the DJI Phantom 4 or the DJI Phantom 4 Pro by a customized mount (Zcopters, Cataluna, Spain) that is capable of incorporating an additional operating battery for the sensor. Attaching the battery and the sensor reduces the maximum flight time of the UAV from the original 30 min to approximately 16 min. The Parrot Sequoia sensor is composed of an RGB camera and four spectral cameras for the spectral bandwidths (full width half maximum bandwidth) of green 530–570 nm, red 640–680 nm, red-edge 730–740 nm, and NIR 770–810 nm. The sensor can simultaneously capture and store an RGB image of 4608 × 3456 pixels and four monochrome images of 1280 × 960 pixels. The images contain illumination data for calibrating the irradiance on all sunny or cloudy days, and Global Positioning System (GPS) data for constructing a geographical reflectance map.

Acquiring high-resolution aerial imagery requires autonomous flight on a pre-defined flight route, particularly when flying over wide fields. The forward and side overlap for the autonomous flight are highly critical for successfully constructing RGB orthomosaic image or reflectance map. For an autonomous flight plan constructed by certain automatic flight control applications such as DJI GS Pro (DJI, Hong Kong, China) or Pix4D Capture (Pix4D S.A., Lausanne, Switzerland), the overlap should be calculated by the specification parameters of the multispectral camera. The shutter interval for the on-board RGB camera and the multispectral camera should also be determined in advance.

The operating efficiency depends on the forward and side overlap, time interval for capture, and flight altitude. We measured the average operating efficiency from 223 automatic flights at 30, 50, and 100 m AGLs. The multispectral sensing was conducted from August 2017 to May 2018, within a 5 h time window around solar noon, with wind speed below 5 m/s and air temperature higher than 5 C. The times when the sunlight was partly obscured in the sensing area were avoided to guarantee relatively consistent light conditions during the flight. For flooded rice fields, a 3 h window around the solar noon on sunny days was removed from the photogrammetry because of a high water glint. Before UAV takeoff, three sets of calibration panel images of the reflectance panel (MicaSense, Seattle, WA, USA) were captured to calibrate the reflectance values in reflectance maps. For each flight, we manually triggered the capture of data at 1.5 s intervals by the Parrot Sequoia sensor before takeoff, and after landing, stopped it in the same way.

2.2. Image Processing

Using interval capturing, one autonomous flight could produce six image sets: four multispectral image sets of the green, red, red-edge, and NIR bands; and two RGB image sets of the UAV on-board camera and the Parrot Sequoia. The four multispectral image sets and the two RGB image sets were used to construct multispectral reflectance maps of the four bands and RGB orthomosaic image, respectively. We prefer to use the RGB image set of the UAV on-board camera owing to its high resolution. The images sets were processed by Pix4D Mapper Pro (Pix4D S.A., Lausanne, Switzerland). The PC used to run the software included a Core i7-7700K CPU (4.2 GHz, 4 cores), 32 GB of memory, and a graphics accelerator with 2560 GPU cores (NVIDIA GeForce GTX 1080 8 GB).

The process of generating orthomosaic RGB images was performed after marking five or more pre-defined GCPs on the raw RGB images, for which the GPS coordinates were derived from Google satellite map. The GCPs referred to certain conspicuous ground objects that were not moved both in the study area and on the Google satellite map during the entire photogrammetry period. The orthomosaic RGB images were dispensable if only concerned with VI index maps. Before the process of generating the reflectance maps, we performed radiometric calibration of multispectral images using “Camera and Sun Irradiance” in the processing options of the Pix4D Ag Multispectral project. Because the output reflectance maps and NDVI index maps had sufficient alignment accuracy, most of the projects used to generate the reflectance maps were processed without using GCPs. For those output index maps with noticeable distortion or alignment accuracy that was too poor, we defined five or more GCPs and regenerated the maps.

Owing to GPS errors in the captured raw images, the output reflectance or index maps generally contained different boundaries of areas that covered the experimental plots or fields. Hence, we developed the BIA algorithm that was responsible for an affine transform from the original reflectance or index map to a normalized one in which the experimental plots or fields all have identical size and position. Unlike image masking for satellite imagery [

34], we defined a masking region as only the area of the experimental plot or field is included. The affine transformation was applied to each normalized destination image based on a matrix

v, which was calculated from three pairs of affine transform points (ATPs) in the source reflectance or index map and a basis image. The algorithm rapidly read a batch of reflectance or index maps and automatically quantified the average NDVI values in the specified plots. The BIA algorithm was programmed in Python language, and its pseudo-code is briefly described as follows:

| 00: | begin |

| 01: | initialize the three ATPs in the basis image ; |

| 02: | initialize a set of source reflectance or index maps with the size z; |

| 03: | initialize the regions of the experimental plots or fields with the size n; |

| 04: | for to |

| 05: | calculate using three pairs of ATPs in and ; |

| 06: | apply the affine transformation to by ; |

| 07: | for to |

| 08: | create a mask for ; |

| 09: | cut the masked image and obtain the statistical indices in ; |

| 10: | end for |

| 11: | save the statistical indices of each P to a summary file; |

| 12: | end for |

| 13: | save the total summary indices for all source reflectance or index maps; |

| 14: | end |

where

consisted of data including field name, image date, camera type, flight altitude, and the position coordinates of the three ATPs and the target plots or fields. Referring to the method proposed in [

35], we assessed the accuracy for index map alignment using pixel coordinates of of 7–18 check points.

Using the reflectance maps from the green, red, red-edge, and NIR bands, we obtained the NDVI index map by inputting the formula (Equation (

1), [

36,

37]) into the index calculator of Pix4D Mapper Pro.

2.3. Study Sites for Field Experiment Design and Measurements

Because the warm temperate climate and abundant water resources in our study area enable double cropping, our study crops include rice as well as wheat.

Table 2 lists the geographical condition, field area, and cultivated crop types of the 11 sites comprising 120 fields. Highly-mechanized farms in fields with an average size of less than 1.0 ha represents the typical farming style for this region. The map of the study sites is shown in

Figure 1.

The eleven study sites were segmented into two groups according to research purpose. The first group was composed of – and was designed to assess the relations between the VIs and yield. The UAV-based multispectral sensing was periodically conducted at approximately 7–10-day intervals, whereas the fields in the first group were measured on the same day (or 1–2 days before or after). The second group including – was designed for evaluating the remote sensing efficiency and for a trial of discrimination of normally grown rice crop from weeds and lodged rice crops by NDVI. The details of the growing condition for the former group are described in the following sub-sections.

2.3.1. Rice Cultivation Methods by Direct Seeding

Although transplanting accounts for an overwhelming proportion of rice production, growing rice by direct seeding is gradually on the increase in our study area. The study site

was designed to assess the relationships between NDVI and growth parameters for the dry direct seeded rice in 2017 (Okami et al., in preparation). The experiments covered 18 plots subjected to two seeding-methods—lane direct seeding and ridge-making direct seeding [

38]—and three levels of slow-releasing basal fertilizer treatments—88.5, 61.5, and 35.5 kg N ha

(20% N, 10% P

O

, and 12% K

O, respectively) with three replications. The UAV photogrammetry was carried out at 30 m AGL, such that the GSD of the RGB orthomosaic image and the NDVI index map reached 1.3 cm and 3.2 cm, respectively. By using the proposed algorithm, the NDVI value of each plot was calculated as the average value of a 1.6 × 1.6-m

area in the NDVI index map. Because the UAV multispectral sensing was conducted starting from the early reproductive stage of rice, the NDVI values from 91 days after seeding (DAS) only were available. The final yield was sampled at an identical unit acreage 2.7 m

of each plot and measured from the weight of brown rice of thickness 1.8 mm or above and 15% water content.

2.3.2. Wheat Grown under Different Conditions

The experiments on wheat were conducted during the 2017–2018 crop season at the study sites

–

(cultivar: “Shiroganekomugi”; sowing day: 20 November).

Table 3 lists the growing conditions: amount of basal fertilizer and top-dressing fertilizer and their application date, sowing density, and seedlings establishment rate. The fields were categorized into three types based on the application of topdressing fertilizer: applied zero, one, or two times. Because the seedling establishment was highly effective in the field F6 at site

, we applied a high amount of fertilizer by two times of top-dressing in order to improve the growth. The final yield based on unit acreage sampling was measured from the weight of the whole and plump grains with 12% water content.

4. Discussion

In this study, we examined the feasibility of using small UAVs for remote sensing in order to support the rice and wheat growers in the double cropping region. The one-man operable UAV-based multispectral sensing exhibited high operating efficiency (1.4, 3.1, and 13.4 ha per 10 min at 30, 50, and 100 m AGL, respectively;

Table 1), which was higher than that represented in [

6] (1 ha per 10 min at 30–50 m AGL) and in [

39] (approximately 7.1 ha per 10 min at 240-m AGL). The total takeoff weight was approximately 1.7 kg, which was lighter and more compact than those for the other UAVs used for multispectral sensing for monitoring crop growth [

1,

6,

14,

40,

41,

42]. These advantages make it feasible for the common farmers to use the UAV-based remote sensing system for their daily or long-term observation of crop growth.

The initial cost to construct the system was around USD $13,500, including UAV, multispectral sensor, PC and image-processing software, and the related accessories. To perform UAV photogrammetry at 100 m AGL for an area with 100 ha, we needed to secure two operators who both work for 1.5 h, which corresponded to labor cost of approximately USD $64. This was higher than the cost of USD $32 for satellite image acquisition for the same area. In addition to these costs, the required time for data initialization and image processing needed about 2 h and 7.5 h, respectively. However, the minimum order area for the satellite imagery is 25 km, which is excessive and the data is coarse for small farmlands less than 1 ha in farm size. The high-resolution imagery based on on-demand UAV measurement is distinctly more appropriate for the smallholder farming in our study area.

To obtain reliable reflectance index maps, we conducted many experiments assessing data noise of VIs due to photogrammetry conditions such as different AGLs, time spans for photogrammetry in a day, and so on. We observed that the reflectance NDVI values at different AGLs did not exhibit statistically significant correlation with the yield. Although the 65% forward overlap of the multispectral camera was sufficient to succeed in generating reflectance or index maps, 76% or more overlap was much safer and more reliable if taking incidental malfunction of the multispectral sensor into consideration. Due to the limited scope of this paper, we omitted the detailed experimental design and results and only described the necessary key points for photogrammetry conditions in

Section 2.1. In addition to those photogrammetry conditions, the essentials for success in long-term measurement also involved (1) flying the UAV at the same AGL during the entire crop-growing season, (2) performing radiometric calibration to create reflectance maps by using the calibration images of the reflectance panel, and (3) guaranteeing sufficient accuracy of index maps alignment if quantizing VI values at the same experimental plots or fields in a series of index maps.

Our proposed BIA algorithm aided alignment of the series of reflectance or index maps that were generated with or without the use of GCPs. The RGB orthomosaic images generated without the use GCPs often had noticeable distortion, and they had to be reconstructed for alignment by marking GCPs on the raw images. In contrast, most of the reflectance or index maps generated without the use of GCPs had slight distortion and were well aligned within our expected accuracy. This reveals that we could avoid costly measurement of GCPs and extra operations by marking GCPs on the raw image, and directly using the reflectance or index maps generated without the use of GCPs. The BIA algorithm was adequate for our study purpose at the field level. Nevertheless, for a study requiring much more alignment accuracy (such as breeding surveys of small plots), we still recommend generating and aligning reflectance or index maps using GCPs.

The high-resolution RGB orthomosaic image and NDVI index map (

Figure 2) contained numerous implicit signals for estimating the weed and lodging injury areas, identifying the different growth stages between fields, and forecasting the optimum harvesting time. Data conjugation between the UAV-based multispectral sensing and aboveground measurement would aid effective decision-making for seasonable field management of the consolidated farms. The NDVI values of both the rice and the wheat obtained using the high-resolution multispectral images appear to reflect highly responsive plant reactions to levels of fertilizer application (

Figure 3c and

Figure 5). This indicates that our system could enable us detect nitrogen deficiency by referring to criteria based on NDVI values at different nitrogen fertilizer levels. In order to exactly estimate the applied nitrogen fertilizer levels by NDVI, performing the UAV-based multispectral sensing at a similar growth stage for a similar cultivar is strongly recommended.

Based on the statistical analysis for the direct seeded rice in a single growth season, the high correlation coefficients between NDVI and yield would enable us to predict the yield based on NDVI by using our system at the early reproductive stage or the late ripening stage (

Figure 3). The value of

between yield and NDVI (

Figure 3) was lower than that measured at heading for the transplanted rice in [

4] (

) and [

5] (

). Because the direct seeded rice in our study produced non-uniform plant density, it was challenging to compare the prediction model with those for the transplanted rice. With regard to the decline of

in the early and middle ripening stages, we consider that rice panicles in this period would be the source of noise in NDVI measurement, though further experiments are required to verify it.

For the wheat, we obtained a lower correlation between yield and NDVI (

) at the flowering time (

Figure 6) in comparison with that in [

6] (

). Nevertheless, a similar

(=0.809) was obtained at 162 DAS, which was in the early ripening stage. This indicates the feasibility of predicting yield using our system; furthermore, there would exist an appropriate time window, not only limiting at a specified DAS.

In the paper, we report on the feasibility of NDVI remote sensing by using small UAV in rice and wheat fields. However, other effective indices such as red edge vegetation indices [

42] and the canopy chlorophyll content index (CCCI) [

43] have been reported. Furthermore, soybean and barley are also grown in the double cropping system, so that studies on such cases are currently underway in conjunction with the evaluation of the red edge vegetation indices and the canopy chlorophyll content index.