Toward Content-Based Hyperspectral Remote Sensing Image Retrieval (CB-HRSIR): A Preliminary Study Based on Spectral Sensitivity Functions

Abstract

1. Introduction

2. Related Work

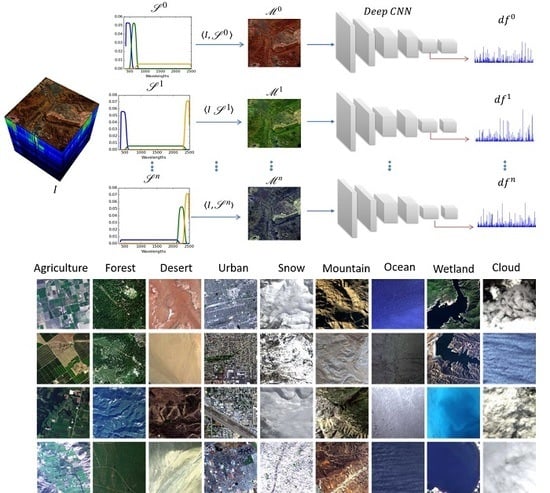

3. Proposed Framework

3.1. Spectral Sensitivity Functions-Based HSI Content Representation

3.2. The ICONES Hyperspectral Satellite Imaging Dataset (ICONES-HSI)

4. Experiments and Results

4.1. SSFs Analysis for HSI Content Discrimination: A Multi-Level Study

4.1.1. Multi-Level SSFs Construction

4.1.2. Results and Discussion

4.2. Trichromatic Image Content Description for HSI Retrieval

4.2.1. Rules of Spectral Sensitivity Function Generation

- Whole spectral range: We treat the visible and IR ranges together as a single range.

- Partial spectral range: We restrict the sampling process to a selected spectral range and study the visible range and the IR range separately.
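The two sampling rules above can be illustrated with a minimal sketch. It rests on several assumptions that the text does not state: the sensitivity functions are taken to be Gaussian curves with evenly spaced centres, the band grid (224 bands from 400 to 2500 nm) and the visible/IR boundary at 700 nm are hypothetical, and the names `gaussian_ssfs` and `project` are illustrative only.

```python
import numpy as np

def gaussian_ssfs(wavelengths, lo, hi, n_functions=3, width_scale=0.5):
    """Build n Gaussian sensitivity curves restricted to the range [lo, hi].

    Assumption: centres sit at the midpoints of n equal sub-intervals and
    sigma is a fixed fraction of the sub-interval width.
    """
    step = (hi - lo) / n_functions
    centers = lo + step * (np.arange(n_functions) + 0.5)
    sigma = width_scale * step
    ssfs = np.exp(-0.5 * ((wavelengths[None, :] - centers[:, None]) / sigma) ** 2)
    # Bands outside the selected range do not contribute at all.
    ssfs[:, (wavelengths < lo) | (wavelengths > hi)] = 0.0
    # Normalise each curve so its weights sum to one.
    return ssfs / ssfs.sum(axis=1, keepdims=True)

def project(cube, ssfs):
    """Weight a (H, W, B) hyperspectral cube by (n, B) SSFs to get (H, W, n)."""
    return cube @ ssfs.T

# Hypothetical band grid, in nanometres.
wl = np.linspace(400.0, 2500.0, 224)

whole = gaussian_ssfs(wl, 400.0, 2500.0)    # whole spectral range
visible = gaussian_ssfs(wl, 400.0, 700.0)   # partial range: visible only
infrared = gaussian_ssfs(wl, 700.0, 2500.0) # partial range: IR only

cube = np.random.default_rng(0).random((8, 8, 224))
trichromatic = project(cube, visible)       # shape (8, 8, 3)
```

With three functions per range, each projection yields a three-channel image, which is what allows the result to be handled like an ordinary trichromatic image downstream.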

4.2.2. Trichromatic Image Content Extraction

4.2.3. Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class (%) | Level 1 P@20 | Level 1 MAP | Level 2 P@20 | Level 2 MAP | Level 3 P@20 | Level 3 MAP | Level 4 P@20 | Level 4 MAP | Level 5 P@20 | Level 5 MAP |
|---|---|---|---|---|---|---|---|---|---|---|
| Agriculture | 44.3 | 31.83 | 47.7 | 33.93 | 50.4 | 37.05 | 51.3 | 39.07 | 49.6 | 39.0 |
| Cloud | 83.97 | 80.37 | 80.86 | 77.77 | 85.86 | 82.53 | 86.03 | 82.06 | 87.76 | 82.98 |
| Desert | 46.67 | 31.1 | 48.61 | 31.86 | 48.24 | 33.47 | 48.52 | 34.23 | 50.65 | 34.87 |
| Dense Urban | 84.38 | 70.98 | 86.37 | 73.35 | 86.71 | 73.71 | 87.6 | 74.85 | 90 | 75.41 |
| Forest | 57.61 | 37.61 | 54.28 | 37.71 | 60.58 | 40.96 | 61.09 | 41.63 | 61.16 | 42.95 |
| Mountain | 65 | 51.1 | 66.42 | 51.66 | 86.21 | 53 | 67.45 | 52.46 | 67.36 | 52.64 |
| Ocean | 75.22 | 62.72 | 75.96 | 61.89 | 78.16 | 63.53 | 78.75 | 66.29 | 77.06 | 67.59 |
| Snow | 52.27 | 35.48 | 53.18 | 35.86 | 51.36 | 39.77 | 54.64 | 41.68 | 56.45 | 44.17 |
| Wetland | 49.17 | 39.54 | 46.67 | 38.33 | 45.83 | 39.7 | 48.19 | 42.72 | 48.75 | 43.38 |
| Whole dataset | 59.61 | 46.4 | 60.38 | 46.82 | 60.81 | 47.2 | 62.06 | 48.01 | 62.5 | 48.76 |
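The P@20 and MAP columns follow the usual retrieval definitions. As a minimal sketch of how such scores can be computed from a ranked result list, the functions below take a binary relevance list (1 if the retrieved image belongs to the query's class). The function names are illustrative, and the average precision here is normalised by the number of relevant items found in the list, which assumes the list ranks the whole database; the exact protocol behind the reported figures is not restated in this section.

```python
def precision_at_k(relevance, k):
    """Fraction of the top-k retrieved items that are relevant."""
    return sum(relevance[:k]) / k

def average_precision(relevance):
    """Mean of the precision values taken at each relevant rank."""
    hits = 0
    total = 0.0
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0

def mean_average_precision(rankings):
    """Average of the per-query average precisions."""
    return sum(average_precision(r) for r in rankings) / len(rankings)

# Toy example: two queries, each with a ranked list of four results.
query_a = [1, 0, 1, 0]
query_b = [0, 1, 0, 0]

p_at_2 = precision_at_k(query_a, 2)
ap_a = average_precision(query_a)
map_score = mean_average_precision([query_a, query_b])
```

P@k rewards relevant images near the top of the list only, while MAP also accounts for where every relevant image falls in the ranking, which is why the two columns can move in different directions across levels.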

| Class (%) | RGB P@20 | RGB MAP | Level 5 Best SSFs P@20 | Level 5 Best SSFs MAP |
|---|---|---|---|---|
| Agriculture | 71.8 | 55.39 | 49.6 | 39.0 |
| Cloud | 68.1 | 64.04 | 87.76 | 82.98 |
| Desert | 66.2 | 46.55 | 50.65 | 34.87 |
| Dense Urban | 94.93 | 83.63 | 90 | 75.41 |
| Forest | 77.03 | 53.26 | 61.16 | 42.95 |
| Mountain | 68.49 | 57.24 | 67.36 | 52.64 |
| Ocean | 91.4 | 79.54 | 77.06 | 67.59 |
| Snow | 72.55 | 51.27 | 56.45 | 44.17 |
| Wetland | 24.43 | 19.83 | 48.75 | 43.38 |
| Whole dataset | 74.08 | 59.33 | 62.5 | 48.76 |

| Class (%) | RGB (Baseline) P@10 | RGB (Baseline) P@20 | RGB (Baseline) MAP | Visible P@10 | Visible P@20 | Visible MAP | InfraRed P@10 | InfraRed P@20 | InfraRed MAP | Whole P@10 | Whole P@20 | Whole MAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Agriculture | 82.6 | 71.8 | 55.4 | 88.4 | 81 | 68.3 | 74.2 | 60 | 42.6 | 78 | 65.2 | 48.9 |
| Cloud | 80.3 | 68.1 | 64 | 87.6 | 78.8 | 69.1 | 83.8 | 73.6 | 65.7 | 93.8 | 78.6 | 66.5 |
| Desert | 75.2 | 66.2 | 46.5 | 80 | 67 | 49.3 | 80.7 | 68.7 | 48.8 | 80.9 | 70.2 | 50.7 |
| Dense Urban | 96.3 | 94.9 | 83.6 | 97.8 | 96.8 | 87.8 | 89.5 | 96.7 | 84.2 | 100 | 97.5 | 86.5 |
| Forest | 84.8 | 77 | 53.3 | 79.2 | 69 | 48.4 | 83.9 | 87.9 | 80.7 | 57.9 | | |
| Mountain | 77.9 | 68.5 | 57.2 | 72.1 | 61.9 | 56.5 | 76.5 | 80.9 | 73.1 | 64.5 | | |
| Ocean | 92.3 | 91.4 | 79.5 | 86.2 | 80.4 | 68.1 | 84.3 | 78.4 | 64.3 | 89.7 | 89.9 | 83.1 |
| Snow | 86 | 72.5 | 51.3 | 88 | 73.4 | 49.2 | 78.2 | 69.6 | 88 | 79.3 | 57.8 | |
| Wetland | 33.7 | 24.4 | 19.8 | 50.5 | 36.5 | 27.7 | 39.2 | 27.5 | 22.2 | 41.9 | 29.7 | 23.9 |
| Average | 81.5 | 74.1 | 59.3 | 82.4 | 73.3 | 59 | 80.9 | 71.7 | 55.2 | 83.31 | 74.18 | 59.07 |

| Category | Whole P@5 (%) | Whole MAP (%) | RGB P@5 (%) | RGB MAP (%) |
|---|---|---|---|---|
| Rural Area | 73.7 | 57.31 | 57.14 | 41.85 |
| Urban Area | 72.71 | 57.34 | 80.1 | 56.88 |
| Cultivated Land | 86.56 | 73.13 | 83.3 | 70.3 |
| Forest | 73.7 | 57.31 | 78.0 | 58.81 |
| Average | 81.03 | 66.54 | 77.3 | 61.8 |

| Method | Average MAP | Signature Generation Time |
|---|---|---|
| PCA-based HSI content description | 51.32% | 7.2 s/image |
| Proposed SSFs-based method | 59.07% | 1.2 s/image |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ben-Ahmed, O.; Urruty, T.; Richard, N.; Fernandez-Maloigne, C. Toward Content-Based Hyperspectral Remote Sensing Image Retrieval (CB-HRSIR): A Preliminary Study Based on Spectral Sensitivity Functions. Remote Sens. 2019, 11, 600. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11050600