Radiometric and Atmospheric Corrections of Multispectral μMCA Camera for UAV Spectroscopy

Abstract

1. Introduction

2. Materials and Methods

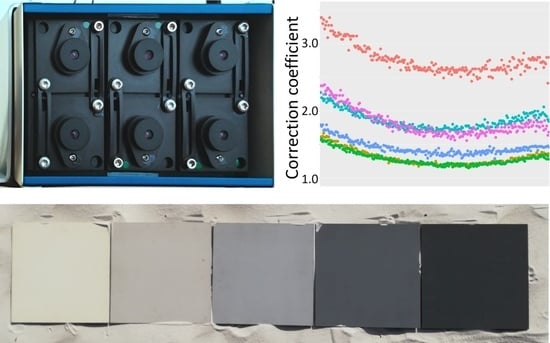

2.1. Multispectral Camera

2.2. Proposed Workflow

2.3. Data Collection

2.3.1. Laboratory Experiment

2.3.2. Field Experiment

2.4. Applied Correction Methods

2.4.1. Sensor Linearity

2.4.2. Noise Reduction

2.4.3. Nonuniform Quantum Efficiency and Vignetting Reduction

2.4.4. Atmospheric Correction

2.5. Data Processing

3. Results

3.1. Sensor Linearity

3.2. Noise Reduction

3.3. Nonuniform Quantum Efficiency of CMOS Array and Vignetting Reduction

3.4. Results of Field Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Specification | Description/Value |
|---|---|
| Type | µMCA 6 Snap, 6 identical global shutter sensors, changeable bandpass filters |
| Sensor | 1.3 megapixel CMOS sensor (1280 × 1024 pixels) |
| Sensitivity | ~450 nm to ~1000 nm |
| Pixel Size | 4.8 microns |
| Focal length | 9.6 mm fixed lens |
| Aperture | f/3.2 |
| Horizontal Angle of View | 38.26° |
| Vertical Angle of View | 30.97° |
| Default Depth of Field | ~2 m to infinity |
| Bands | B1: 550 nm (FWHM 20 nm) |
| | B2: 650 nm (FWHM 20 nm) |
| | B3: 700 nm (FWHM 20 nm) |
| | B4: 800 nm (FWHM 20 nm) |
| | B5: 900 nm (FWHM 20 nm) |
| | B6: 950 nm (FWHM 20 nm) |

| Exposure Time (µs) | Light Intensity (%) |
|---|---|
| 50 | 10, 20, 30, 40, 50, 60, 70, 80, 90, 96 |
| 250 | 8, 16, 24, 32, 40, 48, 56, 64, 72, 80 |
| 500 | 6, 12, 18, 24, 30, 36, 42, 48, 54, 60 |
| 1000 | 16, 20, 24, 28, 32, 36, 40 |

| Temperature (°C) | Exposure Time (µs) |
|---|---|
| 8 | 50 |
| | 250 |
| | 500 |
| | 1000 |
| 19 | 50 |
| | 250 |
| | 500 |
| | 1000 |
| 27 | 50 |
| | 250 |
| | 500 |
| | 1000 |

| Band | Expression | Statistics | F-Value | p-Value |
|---|---|---|---|---|
| B1 | temp | F(1.35, 1348.41) | 57.57 | <0.001 |
| | exptime | F(2.69, 2682.73) | 106.70 | <0.001 |
| | temp:exptime | F(5.50, 5808.43) | 21.97 | <0.001 |
| B2 | temp | F(1.66, 1658.68) | 327.60 | <0.001 |
| | exptime | F(2.93, 2922.19) | 41.31 | <0.001 |
| | temp:exptime | F(5.91, 5906.79) | 8.54 | <0.001 |
| B3 | temp | F(1.34, 1697.68) | 163.14 | <0.001 |
| | exptime | F(2.74, 2741.60) | 43.91 | <0.001 |
| | temp:exptime | F(5.87, 5868.6) | 38.29 | <0.001 |
| B4 | temp | F(2, 1998) | 44.46 | <0.001 |
| | exptime | F(3, 2997) | 150.28 | <0.001 |
| | temp:exptime | F(6, 5994) | 60.26 | <0.001 |
| B5 | temp | F(1.67, 1676.18) | 53.26 | <0.001 |
| | exptime | F(2.89, 2888.67) | 80.76 | <0.001 |
| | temp:exptime | F(5.85, 5842.95) | 10.28 | <0.001 |
| B6 | temp | F(1.47, 1472.17) | 559.63 | <0.001 |
| | exptime | F(1.95, 1949.77) | 29.87 | <0.001 |
| | temp:exptime | F(3.91, 3905.11) | 37.38 | <0.001 |
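The fractional degrees of freedom in several rows are consistent with a sphericity correction applied to a repeated-measures ANOVA, in which both degrees of freedom are scaled by a factor ε ≤ 1 (for example, the Greenhouse-Geisser estimate). The Python sketch below shows that arithmetic under stated assumptions: three temperature levels and four exposure times, as in the temperature and exposure time table, and 1000 repeated units per band, a count inferred here from the uncorrected B4 row rather than stated in the text. The function names are illustrative only.

```python
def main_effect_df(levels, units, epsilon=1.0):
    """Degrees of freedom (effect, error) for a within-subject main effect.

    levels  -- number of factor levels (e.g. 3 temperatures)
    units   -- number of repeated units (assumed 1000, inferred from B4)
    epsilon -- sphericity correction factor, 1.0 means no correction
    """
    df_effect = epsilon * (levels - 1)
    df_error = epsilon * (levels - 1) * (units - 1)
    return df_effect, df_error


def interaction_df(levels_a, levels_b, units, epsilon=1.0):
    """Degrees of freedom (effect, error) for a two-factor interaction."""
    base = (levels_a - 1) * (levels_b - 1)
    return epsilon * base, epsilon * base * (units - 1)


def implied_epsilon(df_effect, levels):
    """Correction factor implied by a reported effect df for a main effect."""
    return df_effect / (levels - 1)


if __name__ == "__main__":
    N_TEMP, N_EXP, N_UNITS = 3, 4, 1000  # N_UNITS is an assumption
    print(main_effect_df(N_TEMP, N_UNITS))         # temp, uncorrected
    print(main_effect_df(N_EXP, N_UNITS))          # exptime, uncorrected
    print(interaction_df(N_TEMP, N_EXP, N_UNITS))  # temp:exptime, uncorrected
    print(implied_epsilon(1.66, N_TEMP))           # from the B2 temp row
```

With ε = 1 this reproduces the B4 row (2 and 1998 for temp, 3 and 2997 for exptime, 6 and 5994 for the interaction). Values of ε below 1 shrink both degrees of freedom proportionally, which is the pattern seen in the other bands.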

| Band | Null Hypotheses | Z-Statistics | p-Value |
|---|---|---|---|
| 1 | | 14.347 | <0.001 |
| 2 | | 18.179 | <0.001 |
| 3 | | 13.656 | <0.001 |
| 4 | | 12.889 | <0.001 |
| 5 | | 14.641 | <0.001 |
| 6 | | 15.601 | <0.001 |

| Wavelength (nm) | RAW 4 | Calibrated 4 | RAW 5 | Calibrated 5 | RAW 7 | Calibrated 7 |
|---|---|---|---|---|---|---|
| 550 | 17.60 | 1.40 | 0.94 | 0.69 | 3.29 | 1.73 |
| 650 | 13.86 | 2.10 | 2.38 | 0.82 | 5.65 | 1.15 |
| 700 | 13.30 | 0.91 | 0.87 | 0.94 | 7.15 | 0.24 |
| 800 | 8.56 | 0.54 | 1.06 | 0.72 | 4.44 | 0.62 |
| 850 | 9.80 | 1.07 | 0.80 | 0.77 | 2.52 | 0.95 |
| 900 | 11.63 | 0.70 | 0.91 | 0.73 | 3.08 | 0.53 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Minařík, R.; Langhammer, J.; Hanuš, J. Radiometric and Atmospheric Corrections of Multispectral μMCA Camera for UAV Spectroscopy. Remote Sens. 2019, 11, 2428. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11202428
