1. Introduction

Remote sensing images can be categorized by their spatial, spectral, and temporal resolutions [1], and have been widely studied in many areas such as land-cover mapping [2], water monitoring [3], and anomaly detection [4]. As a particular type of remote sensing image with high spectral resolution, a hyperspectral image (HSI) contains plentiful information in both the spectral and spatial dimensions [5]. HSI has been used in many fields, including vegetation cover monitoring [6], atmospheric environmental research [7], and change detection [8]. Supervised classification is an essential task for HSI and a common technology in the above applications. However, the high redundancy of spectral band information and the limited number of training samples pose a major challenge to HSI classification.

Early spectral-based attempts, including support vector machines (SVM) [9], multinomial logistic regression (MLR) [10,11], and random or dynamic subspace methods [12,13], focus on the spectral characteristics of HSI. However, adjacent pixels are likely to belong to the same category, and spectral-based methods ignore this high spatial correlation and local consistency of HSI. Therefore, a growing number of classification frameworks based on spectral-spatial features have been proposed. Two types of low-level features, morphological profiles [14] and Gabor features [15], were designed to represent spatial information. Based on SVM, the morphological kernel [16] and composite kernel [17] methods were also proposed to exploit spectral-spatial information. Although the above attempts improve classification accuracy, they depend heavily on hand-crafted descriptors.

Deep learning (DL) has shown powerful capabilities in automatically extracting nonlinear and hierarchical features. Many tasks have benefited from DL and achieved significant breakthroughs, such as object detection [18], natural language processing [19], and image classification [20]. As a typical classification task, HSI classification has also been deeply influenced by DL and has seen substantial improvements.

In [21], Chen introduced stacked autoencoders (SAE) for extracting useful features. Similarly, Tao [22] used two sparse SAEs to capture spectral and spatial information separately. Ma et al. [23] proposed an updated deep autoencoder (DAE) to extract spectral-spatial features and designed a novel synergic representation to handle small training sets. Zhang et al. [24] used a recursive autoencoder (RAE) to extract high-level features from the neighborhood of the target pixel and a new weighting scheme to fuse the spatial information. In [25], Chen et al. proposed a classification method based on a deep belief network (DBN) and restricted Boltzmann machines (RBM).

However, in the above-mentioned methods, the input is one-dimensional. Although spatial information is utilized, the original spatial structure is destroyed. Since convolutional neural networks (CNN) can exploit spatial features while retaining the original structure, several novel solutions have been introduced with the advent of CNN. Zhao et al. [26] adopted a CNN as the feature extractor in their framework. Lee et al. [27] proposed a contextual deep CNN (CDCNN) with deeper and wider networks. In [28], Chen et al. designed a 3D-CNN-based feature extraction model integrated with regularization.

Although DL has brought promising improvements in HSI classification, it demands a large number of training samples, while manual annotation of HSI is expensive. Generally, deeper networks can capture finer features, but they are harder to train. The emergence of the residual network (ResNet) [29] and the dense convolutional network (DenseNet) [30] eased the training of deeper networks. Inspired by ResNet, Zhong et al. [31] proposed a spectral-spatial residual network (SSRN), which is more effective with limited training samples. Wang et al. [32] introduced DenseNet into their fast dense spectral-spatial convolution (FDSSC) algorithm.

To improve the discriminability of the extracted features, the attention mechanism has been adopted to refine the feature maps. Fang et al. [33] designed a 3-D dense convolutional network with a spectral-wise attention mechanism (MSDN-SA) based on DenseNet and the attention mechanism. Ma et al. [34] proposed a double-branch multi-attention mechanism network (DBMA) motivated by the convolutional block attention module (CBAM) [35], and obtained state-of-the-art classification results.

Inspired by the latest developments in the DL field, several new methods have appeared in the literature. Mou et al. [36] proposed a recurrent neural network (RNN) framework for HSI classification in which hyperspectral pixels are analyzed from a sequential perspective. Because labelled samples are severely lacking in HSI, semi-supervised learning (SSL) [37], generative adversarial networks (GAN) [38], and active learning (AL) [39] were introduced to alleviate this problem. In [40], spectral-spatial capsule networks (CapsNets) were designed to reduce network complexity and improve classification accuracy. Furthermore, self-paced learning [41], self-taught learning [42], and superpixel-based methods [43] are also worth noting.

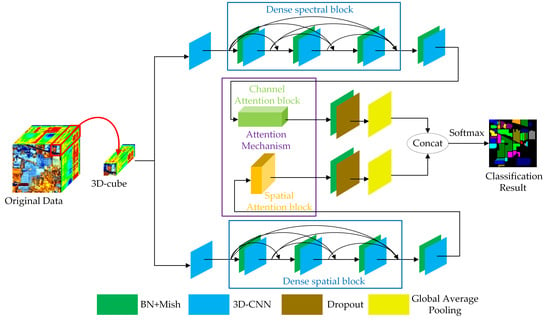

In this paper, inspired by the state-of-the-art DBMA algorithm and by the dual attention network (DANet) [44] with its adaptive self-attention mechanism, we design the double-branch dual-attention mechanism network (DBDA) for HSI classification. The proposed framework contains two branches, the spectral branch and the spatial branch, which capture spectral and spatial features separately. A channel-wise attention mechanism and a spatial-wise attention mechanism are adopted to refine the feature maps. By concatenating the outputs of the two branches, we obtain fused spectral-spatial features. Finally, the classification result is determined by a softmax function. The three main contributions of this paper are as follows:

Based on DenseNet and 3D-CNN, we propose an end-to-end framework, the double-branch dual-attention mechanism network (DBDA). The spectral branch and spatial branch of the proposed framework extract their respective features without any feature engineering.

A flexible and adaptive self-attention mechanism is introduced in both the spectral and spatial dimensions. The channel-wise attention block is designed to focus on information-rich spectral bands, and the spatial-wise attention block is built to concentrate on information-rich pixels.

DBDA obtains state-of-the-art classification accuracy on four datasets with limited training data. Furthermore, the time consumption of the proposed network is lower than that of two compared deep-learning algorithms, FDSSC and DBMA.

The rest of this paper is organized as follows: Section 2 briefly reviews related work. The detailed structure of DBDA is given in Section 3. In Section 4 and Section 5, we present and analyze the experimental results. Finally, Section 6 concludes the paper and outlines a direction for future work.

3. Methodology

The procedure of the DBDA framework contains three steps: dataset generation, training and validation, and prediction.

Figure 5 illustrates the whole framework of our method.

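An HSI dataset $X = \{x_1, x_2, \ldots, x_N\} \in \mathbb{R}^{1 \times 1 \times b}$ is assumed to be composed of N labelled pixels, where b represents the number of bands, and the corresponding category label set is $Y = \{y_1, y_2, \ldots, y_N\} \in \mathbb{R}^{1 \times 1 \times c}$, where c denotes the number of land cover classes.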

In the dataset generation step, the p × p neighboring pixels around each center pixel are selected from the original data to generate the 3D-cube set $Z = \{z_1, z_2, \ldots, z_N\} \in \mathbb{R}^{p \times p \times b}$. If the target pixel is on the edge of the image, the values of the missing adjacent pixels are set to zero. The patch size p is set to 9 in our framework. Then, the 3D-cube set is randomly divided into a training set $Z_{train}$, a validation set $Z_{val}$, and a testing set $Z_{test}$. Accordingly, the corresponding label vectors are divided into $Y_{train}$, $Y_{val}$, and $Y_{test}$. Note that the labels of the neighboring pixels are never visible to the network; only the spatial information around the target pixel is used.
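The cube generation with zero padding at the border can be sketched as follows. This is a minimal illustration, assuming the HSI is held as a nested H × W × b list; the name `extract_patch` and the toy image are hypothetical, not the authors' code.

```python
from typing import List

# Hypothetical representation: an H x W x b cube as nested lists [row][col][band].
Cube = List[List[List[float]]]

def extract_patch(image: Cube, row: int, col: int, p: int = 9) -> Cube:
    """Return the p x p x b neighbourhood centred on (row, col).

    Neighbours that fall outside the image are filled with zeros, as described
    for edge pixels. Only spectra are copied; neighbour labels are never read.
    """
    if p % 2 == 0:
        raise ValueError("p must be odd so that the target pixel is the centre")
    height, width, bands = len(image), len(image[0]), len(image[0][0])
    r = p // 2
    patch: Cube = []
    for i in range(row - r, row + r + 1):
        patch_row = []
        for j in range(col - r, col + r + 1):
            if 0 <= i < height and 0 <= j < width:
                patch_row.append(list(image[i][j]))
            else:
                patch_row.append([0.0] * bands)  # zero padding beyond the border
        patch.append(patch_row)
    return patch

# Toy 4 x 4 image with b = 2 bands, where pixel (i, j) stores [i, j].
toy = [[[float(i), float(j)] for j in range(4)] for i in range(4)]
cube = extract_patch(toy, row=0, col=1, p=3)
print(cube[1][1])  # the target pixel itself: [0.0, 1.0]
print(cube[0][0])  # position (-1, 0) lies outside the image, so it is zero-filled
```

With p = 9, every labelled pixel yields a 9 × 9 × b cube, regardless of how close it sits to the image border.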

In the training and validation steps, the training set is used to update the parameters for many epochs, while the validation set is adopted to monitor the performance of models and to select the best-trained model.

In the prediction step, the test set is chosen to verify the effectiveness of the trained model.

The loss function commonly used in HSI classification to measure the difference between the predicted results and the ground truth is the cross-entropy loss, defined as

$$L = -\sum_{i=1}^{c} y_i \log\left(\hat{y}_i\right)$$

where $\hat{y} = [\hat{y}_1, \ldots, \hat{y}_c]$ is the label vector predicted by the model and $y = [y_1, \ldots, y_c]$ is the ground-truth label vector.
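For a single sample, the cross-entropy loss $L = -\sum_{i=1}^{c} y_i \log(\hat{y}_i)$ with a one-hot ground truth reduces to the negative log-probability of the true class. The sketch below assumes the predicted vector comes from a softmax over hypothetical network outputs; in practice the loss is averaged over a batch, which is what PyTorch's `nn.CrossEntropyLoss` does by default (it also applies log-softmax internally, so it takes raw logits).

```python
import math
from typing import List

def softmax(logits: List[float]) -> List[float]:
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(y_true: List[float], y_pred: List[float], eps: float = 1e-12) -> float:
    """L = -sum_i y_i * log(y_hat_i) for a single sample; eps guards against log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

probs = softmax([2.0, 1.0, 0.1])              # hypothetical outputs for c = 3 classes
loss = cross_entropy([1.0, 0.0, 0.0], probs)  # the true class is class 0
print(round(loss, 4))
```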

3.1. The Framework of the DBDA Network

The whole structure of the DBDA network is shown in Figure 6. For convenience, we call the top branch the Spectral Branch and the bottom branch the Spatial Branch. The input is fed into the spectral branch and the spatial branch to obtain the spectral feature maps and spatial feature maps, respectively. Then, a fusion operation between the spectral and spatial feature maps is adopted to obtain the classification results.

The following parts introduce the spectral branch, the spatial branch, and the spectral-spatial fusion operation, taking the Indian Pines (IP) dataset as an example; the input patch size is 9 × 9 × 200. For shapes written as (h × w × d, 24) below, h, w, and d represent the height, width, and depth of the 3D-cube, and 24 represents the number of 3D-cubes (i.e., output channels) generated by the 3D-CNN.

The IP dataset contains 145 × 145 pixels with 200 spectral bands; that is, the size of IP is 145 × 145 × 200. The details of IP are given in Table 3. Only 10,249 pixels have corresponding labels, and the other pixels are background.
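The (h × w × d, channels) bookkeeping used in this section follows from two rules: the standard convolution output-length formula along each axis, and the fact that a dense block concatenates a fixed number of new maps per layer. The sketch below is hedged: the spectral kernel depth of 7 with stride 2 is a hypothetical choice (the actual values are in Table 1), and the three dense layers are inferred from the 24 input channels, 12 channels per layer, and 60 output channels stated in Section 3.1.1.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a convolution along one axis: floor((n + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def dense_block_channels(in_channels: int, growth: int, layers: int) -> int:
    """A dense block concatenates `growth` new maps per layer onto everything before it."""
    return in_channels + layers * growth

# Hypothetical spectral kernel depth 7 with stride 2 applied to the 200 IP bands.
depth = conv_out(200, kernel=7, stride=2)
# 24 input channels and 12 new channels per layer (from the text); 3 layers inferred from (60 - 24) / 12.
channels = dense_block_channels(24, growth=12, layers=3)
# A 3 x 3 spatial kernel with padding 1 would keep the 9 x 9 patch size.
side = conv_out(9, kernel=3, padding=1)
print(depth, channels, side)
```

Under these assumptions the stride roughly halves the spectral depth, while the channel count grows linearly with the number of dense layers.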

3.1.1. Spectral Branch with the Channel Attention Block

First, a 3D-CNN layer is applied whose down-sampling stride along the spectral dimension reduces the number of bands; its kernel size and stride are listed in Table 1. This yields feature maps with 24 channels. After that, the dense spectral block, composed of 3D-CNN layers with BN, is attached. Each 3D-CNN in the dense spectral block has 12 channels. After the dense spectral block, the number of channels increases to 60, as calculated by Equation (5). Next, a final 3D-CNN layer generates 60-channel feature maps. However, the 60 channels contribute differently to the classification. To refine the spectral features, the channel attention block illustrated in Figure 4a and explained in Section 2.4.1 is adopted. The channel attention block reinforces the informative channels and suppresses the less informative ones. After the weighted spectral feature maps are obtained through channel attention, a BN layer and a dropout layer are applied to improve numerical stability and counter overfitting. Finally, a global average pooling layer produces a 60-dimensional spectral feature vector. The implementation of the spectral branch is given in Table 1.
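Section 2.4.1 defines the channel attention block. As a hedged illustration only, the sketch below follows the channel attention module of DANet [44], on which DBDA's attention is modelled: the feature maps are flattened into a C × N matrix A, a C × C affinity is computed from the inner products of channels and normalized with a row-wise softmax, and the reweighted channels are added back to A with a learnable scale β (DANet initializes β to 0). Whether DBDA uses exactly this form should be checked against Section 2.4.1; the function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax with max subtraction for numerical stability."""
    out = []
    for row in m:
        top = max(row)
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def channel_attention(a: Matrix, beta: float) -> Matrix:
    """DANet-style channel attention on a C x N matrix (C channels, N flattened positions).

    x[j][i] = softmax over i of <A_j, A_i>;  E_j = beta * sum_i x[j][i] * A_i + A_j
    """
    c, n = len(a), len(a[0])
    energy = [[sum(a[j][k] * a[i][k] for k in range(n)) for i in range(c)] for j in range(c)]
    x = softmax_rows(energy)
    out = []
    for j in range(c):
        mixed = [sum(x[j][i] * a[i][k] for i in range(c)) for k in range(n)]
        out.append([beta * mixed[k] + a[j][k] for k in range(n)])
    return out

# Two channels over three positions; with beta = 0 the block starts as an identity map.
feats = [[1.0, 0.0, 2.0], [0.5, 1.0, 0.0]]
print(channel_attention(feats, beta=0.0))
print(channel_attention(feats, beta=1.0))
```

Starting β at zero lets the network begin from the unrefined features and learn how much channel reweighting to add.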

3.1.2. Spatial Branch with the Spatial Attention Block

Meanwhile, the input data of shape 9 × 9 × 200 are delivered to the spatial branch, and the kernel size of the initial 3D-CNN layer is chosen so as to compress the spectral bands into a single dimension. After that, feature maps with a spectral depth of one are obtained. Then, the dense spatial block, composed of 3D-CNN layers with BN, is attached. Each 3D-CNN in the dense spatial block has 12 channels; the kernel sizes are listed in Table 2. Next, the extracted feature maps are fed into the spatial attention block, as illustrated in Figure 4b and explained in Section 2.4.2. With the attention block, the coefficient of each pixel is weighted to obtain a more discriminative spatial feature. After the weighted spatial feature maps are obtained, a BN layer and a dropout layer are applied. Finally, the spatial feature maps are obtained via a global average pooling layer. The implementation of the spatial branch is given in Table 2.
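The spatial attention block is defined in Section 2.4.2. As a hedged sketch, the code below follows DANet's position attention module [44] in simplified form: each pixel's output is its own feature plus α times an attention-weighted sum over all pixels, with weights given by a softmax over feature similarities. The simplification is an assumption: DANet learns 1 × 1 convolutions for the query, key, and value projections, whereas here they are identity maps. The function name is hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # N positions x C channels

def position_attention(f: Matrix, alpha: float) -> Matrix:
    """Simplified DANet-style position attention over N flattened pixels.

    Assumption: query, key and value projections are identity maps.
    s[j][i] = softmax over i of <f_i, f_j>;  E_j = alpha * sum_i s[j][i] * f_i + f_j
    """
    n = len(f)
    out = []
    for j in range(n):
        scores = [sum(a * b for a, b in zip(f[i], f[j])) for i in range(n)]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        mixed = [sum(weights[i] * f[i][k] for i in range(n)) for k in range(len(f[j]))]
        out.append([alpha * m + x for m, x in zip(mixed, f[j])])
    return out

# Three pixels with two channels each (a 9 x 9 map would give N = 81 rows).
pixels = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in position_attention(pixels, alpha=0.5):
    print([round(v, 3) for v in row])
```

Because every pixel attends to every other pixel in the 9 × 9 patch, informative pixels can raise the weight of similar pixels regardless of distance.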

3.1.3. Spectral and Spatial Fusion for HSI Classification

With the spectral branch and the spatial branch, spectral feature maps and spatial feature maps are obtained. We then concatenate the two kinds of features for classification. Concatenation is used instead of element-wise addition because the spectral and spatial features lie in unrelated domains: concatenation keeps them independent, whereas addition would mix them together. Finally, the classification result is obtained via a fully connected layer and the softmax activation function.
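The fusion step can be sketched as concatenation followed by one fully connected layer and a softmax. The sizes below are assumptions for illustration: a 60-dimensional vector from each branch and 16 IP classes, with untrained zero weights so the output is uniform. In PyTorch this would correspond to `torch.cat` along the feature dimension followed by `nn.Linear`.

```python
import math
from typing import List

def fuse_and_classify(spectral: List[float], spatial: List[float],
                      weights: List[List[float]], bias: List[float]) -> List[float]:
    """Concatenate branch features, apply one fully connected layer, then softmax."""
    fused = spectral + spatial  # list concatenation: 60 + 60 -> 120 slots, nothing is summed
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("every weight row must match the fused feature length")
    logits = [sum(w * x for w, x in zip(row, fused)) + b for row, b in zip(weights, bias)]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical shapes: 60 spectral + 60 spatial features, 16 classes, untrained (zero) weights.
spectral = [0.5] * 60
spatial = [-0.2] * 60
weights = [[0.0] * 120 for _ in range(16)]
bias = [0.0] * 16
probs = fuse_and_classify(spectral, spatial, weights, bias)
print(len(probs), round(sum(probs), 6))
```

With concatenation, the fully connected layer learns separate weights for spectral and spatial features; adding the two vectors first would force them to share weights.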

For the other datasets, the network implementations are the same; the only difference is the number of spectral bands. The whole methodology flowchart of DBDA is shown in Figure 7.

3.2. Measures Taken to Prevent Overfitting

A large number of trainable parameters combined with limited training samples makes the network prone to overfitting. Thus, we take several measures to prevent overfitting.

3.2.1. A Strong and Appropriate Activation Function

The activation function introduces nonlinearity into a neural network. An appropriate activation function can accelerate back-propagation and the convergence of the network. The activation function we adopt is Mish [50], a self-regularized non-monotonic activation function, instead of the conventional $\mathrm{ReLU}(x) = \max(0, x)$ [51]. The formula for Mish is:

$$\mathrm{Mish}(x) = x \tanh\left(\mathrm{softplus}(x)\right) = x \tanh\left(\ln\left(1 + e^{x}\right)\right)$$

where x represents the input of the activation. A comparison of Mish and ReLU is shown in Figure 8. Mish is unbounded above and bounded below, with a range of approximately $[-0.31, \infty)$. The derivative of Mish is:

$$\mathrm{Mish}'(x) = \frac{e^{x}\,\omega}{\delta^{2}}$$

where $\omega = 4(x+1) + 4e^{2x} + e^{3x} + e^{x}(4x+6)$ and $\delta = 2e^{x} + e^{2x} + 2$.
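Mish, $x \tanh(\ln(1 + e^{x}))$, and its closed-form derivative $e^{x}\omega/\delta^{2}$ with $\omega = 4(x+1) + 4e^{2x} + e^{3x} + e^{x}(4x+6)$ and $\delta = 2e^{x} + e^{2x} + 2$ can be checked numerically. The sketch compares the closed form against a central finite difference and shows that ReLU zeroes a negative input while Mish keeps a small negative response. It is a scalar illustration, not the authors' implementation (PyTorch ships `torch.nn.Mish` for real use).

```python
import math

def softplus(x: float) -> float:
    """ln(1 + e^x), written to avoid overflow for large positive x."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))

def mish(x: float) -> float:
    return x * math.tanh(softplus(x))

def mish_grad(x: float) -> float:
    """Closed-form derivative e^x * omega / delta^2 (intermediate terms overflow above roughly x = 170)."""
    ex = math.exp(x)
    omega = 4 * (x + 1) + 4 * math.exp(2 * x) + math.exp(3 * x) + ex * (4 * x + 6)
    delta = 2 * ex + math.exp(2 * x) + 2
    return ex * omega / delta ** 2

def relu(x: float) -> float:
    return max(0.0, x)

for x in (-2.0, -1.0, 0.0, 1.0):
    print(x, relu(x), round(mish(x), 4), round(mish_grad(x), 4))
```

At x = 0 the closed form gives ω = 15 and δ = 5, so the slope is 15/25 = 0.6; the smooth, nonzero gradient for negative inputs is what distinguishes Mish from ReLU.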

ReLU is a piecewise linear function that prunes all negative inputs. Thus, if a neuron's input stays nonpositive, both its output and its gradient are zero, so the neuron "dies" and cannot be activated anymore, even though negative inputs might contain useful information. In contrast, Mish maps negative inputs to small negative outputs, which strikes a better balance between preserving input information and maintaining network sparsity.

3.2.2. Dropout Layer, Early Stopping Strategy and Dynamic Learning Rate Adjustment

A dropout layer [52] is adopted between the last BN layer and the global average pooling layer in both the spatial branch and the spectral branch. Dropout is a simple but effective method to prevent overfitting by dropping out units (hidden or visible) with a given probability p during training; p is set to 0.5 in our framework. Because dropout makes the presence of any other unit unreliable, it prevents co-adaptation between units.
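Dropout with p = 0.5 can be sketched as below. The sketch uses "inverted" dropout, which is what PyTorch's `nn.Dropout` does: surviving units are scaled by 1/(1 − p) during training so that no rescaling is needed at inference, when the layer is the identity. The function is a hypothetical scalar illustration.

```python
import random
from typing import List, Optional

def dropout(values: List[float], p: float = 0.5, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each unit with probability p, scale survivors by 1 / (1 - p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must lie in [0, 1)")
    if not training or p == 0.0:
        return list(values)  # identity at inference time
    rng = rng or random.Random()
    keep = 1.0 - p
    return [v / keep if rng.random() >= p else 0.0 for v in values]

out = dropout([1.0] * 10, p=0.5, rng=random.Random(0))
print(out)  # each entry is 0.0 or 2.0
```

Because a different random subset is dropped on every forward pass, no unit can rely on a specific partner being present.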

In addition, two training techniques, the early stopping strategy and dynamic learning rate adjustment, are introduced into our model. Early stopping means that if the validation loss no longer decreases for a certain number of epochs (20 in our model), training is stopped early to prevent overfitting and reduce training time.
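The early stopping rule can be sketched as a small counter: it resets whenever the validation loss reaches a new minimum and stops training once 20 consecutive epochs pass without improvement, within the 200-epoch cap given in Section 4.2. The class and function names and the loss curve are hypothetical.

```python
from typing import Sequence

class EarlyStopping:
    """Signal a stop once the validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience: int = 20, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss  # new best: this is where a checkpoint would be saved
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

def stopping_epoch(val_losses: Sequence[float], max_epochs: int = 200, patience: int = 20) -> int:
    """Return the (1-based) epoch at which training ends for a given validation-loss curve."""
    stopper = EarlyStopping(patience)
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if stopper.step(loss):
            return epoch
    return min(len(val_losses), max_epochs)

# Loss improves for 3 epochs, then plateaus.
curve = [1.0, 0.9, 0.8] + [0.85] * 30
print(stopping_epoch(curve))  # 23: the 20th non-improving epoch after epoch 3
```

Saving a checkpoint at each new best, as the comment suggests, is one way to realize the "select the best-trained model" role of the validation set.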

The learning rate is a crucial hyperparameter for training a network, and a dynamic learning rate can help the network escape some local minima. The cosine annealing [53] method is adopted to adjust the learning rate dynamically according to the following equation:

$$\eta_t = \eta_{\min}^{i} + \frac{1}{2}\left(\eta_{\max}^{i} - \eta_{\min}^{i}\right)\left(1 + \cos\left(\frac{T_{cur}}{T_i}\pi\right)\right)$$

where $\eta_t$ is the learning rate within the ith run and $[\eta_{\min}^{i}, \eta_{\max}^{i}]$ is the range of the learning rate. $T_{cur}$ is the number of epochs that have been executed, and $T_i$ is the number of epochs in one cycle of adjustment.
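The cosine annealing rule $\eta_t = \eta_{\min} + \tfrac{1}{2}(\eta_{\max} - \eta_{\min})(1 + \cos(\pi T_{cur}/T_i))$ decays the learning rate smoothly from $\eta_{\max}$ to $\eta_{\min}$ over one cycle. The values below are assumptions for illustration: $\eta_{\max}$ = 0.0005 (the Adam learning rate in Section 4.2), $\eta_{\min}$ = 0, and $T_i$ = 10 epochs. PyTorch provides `torch.optim.lr_scheduler.CosineAnnealingLR` and `CosineAnnealingWarmRestarts`; which variant and cycle length the authors used is not stated here.

```python
import math

def cosine_annealing(t_cur: float, t_i: float, eta_min: float, eta_max: float) -> float:
    """eta_t = eta_min + 0.5 * (eta_max - eta_min) * (1 + cos(pi * t_cur / t_i))."""
    return eta_min + 0.5 * (eta_max - eta_min) * (1.0 + math.cos(math.pi * t_cur / t_i))

# Assumed values: eta_max = 0.0005, eta_min = 0, T_i = 10 epochs.
schedule = [cosine_annealing(t, 10, 0.0, 5e-4) for t in range(11)]
print([f"{lr:.2e}" for lr in schedule])
```

The rate falls slowly at the start and end of the cycle and fastest in the middle; with warm restarts, $T_{cur}$ resets to 0 at the start of each new run.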

4. Experimental Results

To verify the accuracy and efficiency of the proposed model, we compare it with other methods on four datasets. Three quantitative metrics, overall accuracy (OA), average accuracy (AA), and the Kappa coefficient (K), are used to measure the accuracy of each method. Concretely, OA is the ratio of correctly classified pixels to all test pixels, AA is the mean of the per-class accuracies, and the Kappa coefficient measures the agreement between the ground truth and the classification result after correcting for chance agreement. Higher values of all three metrics indicate better classification results. We also record the running time of each framework to evaluate its efficiency.
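All three metrics can be computed from a confusion matrix. The sketch below takes OA as the diagonal sum over the total, AA as the mean of per-class recalls, and Kappa as $\kappa = (\mathrm{OA} - p_e)/(1 - p_e)$, where $p_e$ is the chance agreement implied by the row and column totals. The 2 × 2 matrix is a made-up example chosen so that OA and AA differ.

```python
from typing import List, Tuple

def accuracy_metrics(confusion: List[List[int]]) -> Tuple[float, float, float]:
    """Return (OA, AA, Kappa); confusion[i][j] counts test pixels of true class i predicted as class j."""
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    oa = sum(confusion[i][i] for i in range(k)) / total
    # AA: mean of per-class recalls over classes present in the test set.
    recalls = [confusion[i][i] / row_sums[i] for i in range(k) if row_sums[i] > 0]
    aa = sum(recalls) / len(recalls)
    # Kappa corrects OA for the agreement expected by chance from the marginals.
    pe = sum(row_sums[i] * col_sums[i] for i in range(k)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa

oa, aa, kappa = accuracy_metrics([[90, 10], [5, 15]])
print(f"OA={oa:.4f} AA={aa:.4f} Kappa={kappa:.4f}")
```

Here OA is 0.875 but AA is only 0.825, because the small second class is classified worse; AA weights every class equally, which is why it is sensitive to rare classes such as IP class 7.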

For each dataset, a certain percentage of the labelled data is randomly selected as training and validation samples, and the remaining samples are used to test the model. Since the proposed DBDA maintains excellent performance when training samples are severely lacking, the numbers of training and validation samples are kept to a minimum.

4.1. The Introduction about Datasets

In this paper, four widely used HSI datasets, the Indian Pines (IP), Pavia University (UP), Salinas Valley (SV), and Botswana (BS) datasets, are employed in the experiments.

Indian Pines (IP): Acquired by the airborne visible/infrared imaging spectrometer (AVIRIS) sensor over north-western Indiana, the IP dataset consists of 200 spectral bands in the wavelength range of 0.4 μm to 2.5 μm and 16 land cover classes. IP contains 145 × 145 pixels with a spatial resolution of 20 m/pixel.

Pavia University (UP): Gathered by the reflective optics imaging spectrometer (ROSIS-3) sensor over the University of Pavia, northern Italy, the UP dataset consists of 103 spectral bands in the wavelength range of 0.43 μm to 0.86 μm and 9 land cover classes. UP contains 610 × 340 pixels with a spatial resolution of 1.3 m/pixel.

Salinas Valley (SV): Collected by the AVIRIS sensor over Salinas Valley, CA, USA, the SV dataset consists of 204 spectral bands in the wavelength range of 0.4 μm to 2.5 μm and 16 land cover classes. SV contains 512 × 217 pixels with a spatial resolution of 3.7 m/pixel.

Botswana (BS): Captured by the NASA EO-1 satellite over the Okavango Delta, Botswana, the BS dataset consists of 145 spectral bands in the wavelength range of 0.4 μm to 2.5 μm and 14 land cover classes. BS contains 1476 × 256 pixels with a spatial resolution of 30 m/pixel.

Deep learning algorithms are data-driven and rely on plenty of labelled training samples. The more labelled data are used for training, the better the accuracy tends to be. However, more data mean more time consumption and higher computational complexity. Notably, the proposed DBDA maintains excellent performance even when training samples are scarce. Therefore, the numbers of training and validation samples are kept to a minimum in the experiments. For IP, we select 3% of the samples for training and 3% for validation. As each class of UP and SV has plenty of samples, we select only 0.5% of the samples for training and 0.5% for validation. For BS, the proportion of samples for training and for validation is 1.2%. This non-integer percentage arises because BS has few samples: we set the ratio to 1% and apply a ceiling operation, which raises the actual proportion to about 1.2%.
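One reading of the ceiling operation is a per-class split in which each class contributes ceil(ratio × n_c) samples to training and the same number to validation; rounding up guarantees at least one sample per class and pushes the realized proportion above the nominal ratio. The per-class rounding is our assumption, and the class sizes below are hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple

def split_per_class(labels: List[int], ratio: float, seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Return (train, val, test) indices; each class gives ceil(ratio * n_c) samples to train and to val."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train: List[int] = []
    val: List[int] = []
    test: List[int] = []
    for _, idxs in sorted(by_class.items()):
        idxs = idxs[:]  # copy before shuffling
        rng.shuffle(idxs)
        k = math.ceil(ratio * len(idxs))  # rounding up guarantees at least one sample per class
        train += idxs[:k]
        val += idxs[k:2 * k]
        test += idxs[2 * k:]
    return train, val, test

# Hypothetical class sizes 250, 95 and 101 with a nominal 1% ratio.
labels = [0] * 250 + [1] * 95 + [2] * 101
train, val, test = split_per_class(labels, ratio=0.01)
print(len(train), len(val), len(test), f"{len(train) / len(labels):.2%}")
```

In this toy case the classes contribute 3, 1, and 2 samples, so the realized training proportion is about 1.35% rather than 1%, the same effect that lifts BS to about 1.2%.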

Table 3, Table 4, Table 5 and Table 6 list the training, validation, and testing samples for the four datasets.

4.2. Experimental Setting

To evaluate the effectiveness of DBDA, the deep-learning-based classifiers CDCNN [27], SSRN [31], FDSSC [32], and the state-of-the-art double-branch multi-attention mechanism network (DBMA) [34] are compared with our proposed framework. The SVM with an RBF kernel [9] is also included. The patch size of each classifier is set according to its original paper. To compare training and testing times, all experiments were executed on the same platform with 32 GB of memory and an NVIDIA GeForce RTX 2080Ti GPU. All deep-learning-based classifiers were implemented in PyTorch, and SVM was implemented with sklearn. A brief introduction to each method follows.

SVM: For SVM with a radial basis function (RBF) kernel, all individual pixels with their spectral bands are fed in directly.

CDCNN: The architecture of CDCNN is described in [27] and is based on 2D-CNN and ResNet. The input is a p × p × b patch, where b denotes the number of spectral bands and p follows the setting in [27].

SSRN: The architecture of SSRN is proposed in [31] and is based on 3D-CNN and ResNet. The input is a p × p × b patch, with p set as in [31].

FDSSC: The architecture of FDSSC is given in [32] and is based on 3D-CNN and DenseNet. The input is a p × p × b patch, with p set as in [32].

DBMA: The architecture of DBMA is presented in [34] and is based on 3D-CNN, DenseNet, and an attention mechanism. The input is a p × p × b patch, with p set as in [34].

For CDCNN, SSRN, FDSSC, DBMA, and the proposed method, the batch size is set to 16, and the optimizer is Adam with a learning rate of 0.0005. The maximum number of training epochs is 200; if the validation loss does not decrease for 20 epochs, training is terminated early.

4.3. Classification Maps and Categorized Results

4.3.1. Classification Maps and Categorized Results for the IP Dataset

The classification results of the different methods on the IP dataset are reported in Table 7, where the best class-specific accuracy is in bold, and the classification maps of the different methods and the ground truth are shown in Figure 9.

As Table 7 shows, our proposed framework obtains the best results, with 95.38% OA, 96.47% AA, and 0.9474 Kappa. CDCNN, which is based on 2D-CNN, achieves the worst accuracy, with 62.32% OA, due to the limited training samples and its weak network structure. Although SVM outperforms CDCNN by more than 7% in OA, its salt-and-pepper noise is severe, as seen in Figure 9c, because SVM uses no spatial neighborhood information. The 3D-CNN-based models far exceed SVM and CDCNN, owing to their incorporation of both spatial and spectral information. FDSSC uses dense connections instead of residual connections, which enhances the network's performance and yields more than 5% improvement in OA over SSRN. Building on FDSSC, DBMA extracts spatial and spectral features in two independent branches and introduces an attention mechanism. However, when training samples are very scarce, DBMA may overfit the training data. In contrast, DBDA achieves stable and reliable performance with limited data due to its flexible and adaptive attention mechanism, its appropriate activation function, and the other measures taken to prevent overfitting.

Taking class 7 of the IP dataset, which has only three training samples, as an example, our method performs well and obtains an accuracy of 92.59%, while the results of the other methods (SVM: 56.10%, CDCNN: 0.00%, SSRN: 0.00%, FDSSC: 73.53%, and DBMA: 40.00%) are much less satisfactory.

Overall, the proposed model improves the OA by 2.23%, the AA by 8.80%, and the kappa by 0.0225 compared to DBMA.

4.3.2. Classification Maps and Categorized Result for the UP Dataset

The classification results of the different methods on the UP dataset are reported in Table 8, where the best class-specific accuracy is in bold, and the classification maps of the different methods and the ground truth are shown in Figure 10.

As Table 8 shows, our proposed method obtains the best results on all three indexes. Although our method does not achieve the best accuracy for every class, its accuracy for each class exceeds 89%, which indicates that it captures the distinctive features of the different classes.

Since the UP dataset has plenty of samples, each class has enough samples even if only 0.5% are chosen for training. Thus, DBMA avoids overfitting and performs better than FDSSC because of its superior architecture. With ample samples, CDCNN also surpasses SVM.

4.3.3. Classification Maps and Categorized Results for the SV Dataset

The classification results of the different methods on the SV dataset are reported in Table 9, where the best class-specific accuracy is in bold, and the classification maps of the different methods and the ground truth are shown in Figure 11.

As Table 9 shows, our proposed method obtains the best results on all three indexes, and its accuracy for each class exceeds 93%.

Similarly, because the SV dataset has plenty of samples, 0.5% training samples are enough, and DBMA again performs better than FDSSC. However, the SV dataset has 16 classes while the UP dataset has only 9, so CDCNN performs worse than SVM here.

4.3.4. Classification Maps and Categorized Result for the BS Dataset

The classification results of the different methods on the BS dataset are reported in Table 10, where the best class-specific accuracy is in bold, and the classification maps of the different methods and the ground truth are shown in Figure 12.

Since the BS dataset is small, with only 3,248 labelled samples, just 40 samples are selected for the training set and 40 for the validation set. Nonetheless, our method achieves 96.24% OA, 2.81% higher than DBMA. One reason is that our method captures spatial and spectral features more effectively.

4.4. Investigation of Running Time

The above experiments show that our proposed method achieves higher accuracy with less data. However, a good method should also balance accuracy and efficiency. This part measures the efficiency of each method.

Table 11, Table 12, Table 13 and Table 14 list the time consumption of the six algorithms on the IP, UP, SV, and BS datasets.

Since SVM is used as a pixel-based model, it takes less time than the 3D-cube-based models in most cases. Because a 2D-CNN has fewer parameters to train, CDCNN takes less time than the 3D-CNN-based models.

Among the 3D-CNN-based models, the proposed method requires less training time than FDSSC and DBMA while obtaining better performance, because of its faster convergence. Although SSRN is faster than our method, our method is more accurate. That is, our method strikes a better balance between accuracy and efficiency.

5. Discussion

In this part, further assessments of DBDA are conducted. First, different proportions of training samples are fed into the network; the results show that our method remains effective, especially when training samples are severely limited. Second, ablation experiments confirm the necessity of the attention mechanism. Third, experiments with different activation functions show that Mish is a better choice than ReLU for DBDA.

5.1. Investigation of the Proportion of Training Samples

As mentioned, deep learning is data-driven and depends on large amounts of high-quality labelled data. In this part, we investigate scenarios with different proportions of training samples.

Figure 13 shows the experimental results. For the IP and BS datasets, we use 0.5%, 1%, 3%, 5%, and 10% of the samples as training sets. For the UP and SV datasets, we use 0.1%, 0.5%, 1%, 5%, and 10% of the samples as training sets.

As expected, accuracy improves as the number of training samples increases. All 3D-based methods, including SSRN, FDSSC, DBMA, and the proposed framework, obtain near-perfect performance when enough samples (about 10% of the whole dataset) are provided. At the same time, the performance gaps between the models narrow as the number of training samples increases. Nevertheless, our method outperforms the other methods, especially when samples are insufficient. Since labelling the dataset is costly, our proposed method can save labor and cost.

5.2. Effectiveness of the Attention Mechanism

To verify the effectiveness of the attention mechanism, we remove the spatial attention module, the spectral attention module, and both attention modules from DBDA, respectively, and compare the performance of these three “incomplete DBDA” variants with the “complete DBDA”.

From Figure 14, we can conclude that both the spatial attention mechanism and the spectral attention mechanism improve accuracy on all four datasets.

On average, the attention mechanisms improve OA by 4.69% on the four datasets. Furthermore, the spatial attention mechanism alone (average improvement of 2.18%) performs better than the spectral attention mechanism alone (average improvement of 0.97%) in most cases.

5.3. Effectiveness of the Activation Function

In Section 3.2.1, we explained why we adopted Mish as the activation function rather than the commonly used ReLU. Here, we compare the performance of DBDA with Mish and DBDA with ReLU. Figure 15 shows their classification OA.

As shown in Figure 15, DBDA with Mish outperforms DBDA with ReLU. Specifically, there are 2.27%, 2.01%, 4.00%, and 1.24% OA improvements on the IP, UP, SV, and BS datasets, respectively. We attribute this difference to Mish facilitating back-propagation.

6. Conclusions

In this paper, we proposed an end-to-end framework, the double-branch dual-attention mechanism network (DBDA), for HSI classification. The input of DBDA is the original 3D pixel data without any cumbersome dimensionality-reduction pre-processing. Based on densely connected 3D-CNN layers with BN, we designed two branches that capture spectral and spatial features, respectively. Meanwhile, a flexible and adaptive self-attention mechanism was applied to the spectral branch and the spatial branch, respectively. Mish was introduced as the activation function to accelerate back-propagation and convergence. Dynamic learning rates, early stopping, and dropout layers were also adopted to prevent overfitting.

Extensive experimental results demonstrate that our proposed framework surpasses the state-of-the-art algorithms, especially when training samples are limited. Meanwhile, the time consumption is also reduced compared with FDSSC and DBMA, as the attention blocks and the Mish activation function accelerate the convergence of the model. Accordingly, we conclude that the structure of our method is preferable for HSI classification.

A future direction of our work is to apply the proposed framework to other hyperspectral images, not only the above-mentioned open-source datasets. Moreover, reducing the training time remains an attractive challenge.