Hierarchical Multi-View Semi-Supervised Learning for Very High-Resolution Remote Sensing Image Classification

Abstract

1. Introduction

2. Related Works

2.1. Deep Convolutional Neural Networks

2.2. Superpixel Segmentation

3. Proposed Method

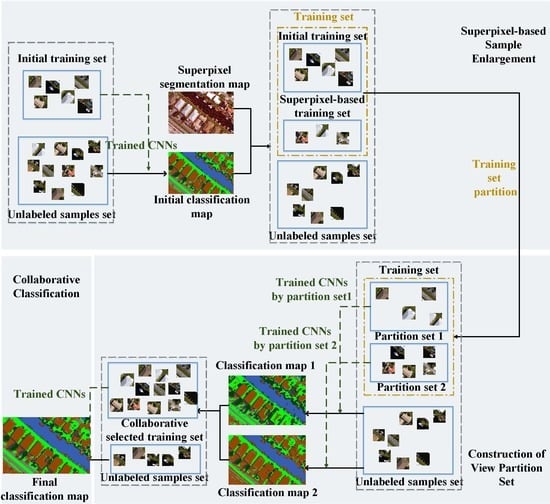

3.1. Superpixel-Based Sample Enlargement

3.2. Construction of a View Partition Set

3.3. Collaborative Classification

| Algorithm 1 Hierarchical multi-view semi-supervised learning for VHR remote sensing image classification. |
|---|
| Input: Training set and unlabeled testing set. |
| Output: Predicted labels for the unlabeled samples. |
| Level 1: Superpixel-based sample enlargement |
| 1: Train the CNNs with the training set and obtain the initial classification map. |
| 2: Segment the VHR image with a superpixel segmentation method. |
| 3: Select appropriate unlabeled samples based on steps 1 and 2 to enlarge the training set. |
| Level 2: View partition |
| 4: Using the trained CNNs (step 1), obtain the feature set of the training set. |
| 5: Intra-class partition for feature set by K-means. |
| 6: Intra-class partition for feature set by K-means. |
| 7: Train the CNNs with the two partition sets, respectively. |
| 8: Obtain two classification maps from the trained CNNs (step 7). |
| Level 3: Collaborative classification |
| 9: Select unlabeled samples with the same label prediction on all classification maps to enlarge the training set. |
| 10: Train the CNNs with the new training set. |
| 11: Predict the labels of the unlabeled samples using the trained CNNs (step 10). |
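
The agreement rule in step 9 of Algorithm 1 can be illustrated with a minimal sketch. It assumes that each classification map is stored as a flat list of predicted labels (one entry per pixel) and that three maps are compared: the initial map from step 1 and the two view-specific maps from step 8. The function name `select_agreed_samples`, the data layout, and the toy values are hypothetical and are not taken from the paper.

```python
from typing import Dict, Iterable, Sequence


def select_agreed_samples(
    maps: Sequence[Sequence[int]],
    labeled_indices: Iterable[int],
) -> Dict[int, int]:
    """Return {pixel_index: label} for unlabeled pixels on which all maps agree."""
    if not maps:
        raise ValueError("at least one classification map is required")
    n_pixels = len(maps[0])
    if any(len(m) != n_pixels for m in maps):
        raise ValueError("all classification maps must have the same length")

    labeled = set(labeled_indices)
    agreed: Dict[int, int] = {}
    for i in range(n_pixels):
        if i in labeled:
            continue  # already part of the training set
        first = maps[0][i]
        if all(m[i] == first for m in maps[1:]):
            agreed[i] = first  # pseudo-label shared by every map
    return agreed


# Toy example (made-up labels): three maps over six pixels; pixel 0 is already labeled.
map_initial = [1, 2, 2, 3, 1, 3]
map_view_a = [1, 2, 3, 3, 1, 2]
map_view_b = [1, 2, 2, 3, 2, 3]
pseudo_labels = select_agreed_samples([map_initial, map_view_a, map_view_b], [0])
print(pseudo_labels)  # {1: 2, 3: 3}
```

Only pixels 1 and 3 receive a pseudo-label here, because they are the only unlabeled pixels on which all three maps agree. Requiring unanimous agreement trades a smaller enlarged training set for fewer wrong pseudo-labels.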

4. Experimental Results

4.1. Datasets

4.2. Experiment Setup

4.3. Experimental Results on Aerial Data

4.4. Experimental Results on JX_1 Data

4.5. Experimental Results on Pavia University Data

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Class | Aerial Data: Training | Aerial Data: Testing | JX_1 Data: Training | JX_1 Data: Testing | Pavia University Data: Training | Pavia University Data: Testing |
|---|---|---|---|---|---|---|
| 1 | 100 | 14,719 | 100 | 46,276 | 100 | 6631 |
| 2 | 100 | 37,022 | 100 | 45,278 | 100 | 18,649 |
| 3 | 100 | 5791 | 100 | 20,347 | 100 | 2099 |
| 4 | 100 | 4077 | 100 | 27,849 | 100 | 3064 |
| 5 | 100 | 3374 | 100 | 14,956 | 100 | 1345 |
| 6 | 100 | 3374 | 100 | 8019 | 100 | 5029 |
| 7 | | | | | 100 | 1330 |
| 8 | | | | | 100 | 3682 |
| 9 | | | | | 100 | 947 |
| Total | 1200 | 100,463 | 1200 | 162,785 | 1800 | 42,776 |

| Class | CNNs | TSVM | SemiMLR | SemiSAE | Ladder Networks | Spectral-Spatial View | HMVSSL |
|---|---|---|---|---|---|---|---|
| 1 | 0.9948 | 0.9766 | 0.9808 | 0.2828 | 0.9811 | 0.9906 | 0.9963 |
| 2 | 0.9320 | 0.9421 | 0.9643 | 0.8356 | 0.7908 | 0.9401 | 0.9600 |
| 3 | 0.9168 | 0.0484 | 0.9698 | 0.9686 | 0.9444 | 0.9606 | 0.9418 |
| 4 | 0.9588 | 0.5013 | 0.7589 | 0.7331 | 0.6762 | 0.9674 | 0.9583 |
| 5 | 0.9306 | 0.8057 | 0.9750 | 0.8595 | 0.8866 | 0.9374 | 0.9410 |
| 6 | 0.9677 | 0.6037 | 0.7825 | 0.8459 | 0.8554 | 0.9766 | 0.9775 |
| OA | 0.9421 | 0.8182 | 0.9564 | 0.7669 | 0.8588 | 0.9501 | 0.9581 |
| AA | 0.9501 | 0.6463 | 0.9052 | 0.7542 | 0.8554 | 0.9621 | 0.9625 |
| Kappa | 0.9202 | 0.7444 | 0.9389 | 0.6896 | 0.8099 | 0.9311 | 0.9419 |

| Class | CNNs | TSVM | SemiMLR | SemiSAE | Ladder Networks | Spectral-Spatial View | HMVSSL |
|---|---|---|---|---|---|---|---|
| 1 | 1.0000 | 0.9992 | 0.9990 | 0.9923 | 0.9976 | 0.9985 | 0.9984 |
| 2 | 0.9548 | 0.8884 | 0.9943 | 0.9251 | 0.9922 | 0.9942 | 0.9990 |
| 3 | 0.9987 | 1.0000 | 1.0000 | 0.9996 | 1.0000 | 0.9975 | 0.9989 |
| 4 | 0.9545 | 0.8515 | 0.9794 | 0.9123 | 0.9192 | 0.9674 | 0.9598 |
| 5 | 0.9712 | 0.7967 | 0.8024 | 0.7904 | 0.7676 | 0.8970 | 0.9319 |
| 6 | 0.9759 | 0.9399 | 0.9428 | 0.9608 | 0.9337 | 0.9592 | 0.9694 |
| OA | 0.9757 | 0.9217 | 0.9736 | 0.9409 | 0.9587 | 0.9800 | 0.9820 |
| AA | 0.9759 | 0.9126 | 0.9530 | 0.9302 | 0.9350 | 0.9679 | 0.9747 |
| Kappa | 0.9691 | 0.9004 | 0.9664 | 0.9251 | 0.9474 | 0.9746 | 0.9771 |

| Class | CNNs | TSVM | SemiMLR | SemiSAE | Ladder Networks | Spectral-Spatial View | HMVSSL |
|---|---|---|---|---|---|---|---|
| 1 | 0.9143 | 0.6265 | 0.8085 | 0.6233 | 0.6373 | 0.9020 | 0.9385 |
| 2 | 0.9089 | 0.7522 | 0.9045 | 0.5186 | 0.3995 | 0.8946 | 0.9309 |
| 3 | 0.8833 | 0.7751 | 0.9252 | 0.7851 | 0.4493 | 0.8218 | 0.9419 |
| 4 | 0.9703 | 0.9367 | 0.9507 | 0.9076 | 0.8580 | 0.9768 | 0.9758 |
| 5 | 0.9985 | 0.9963 | 0.9948 | 0.9606 | 0.9896 | 1.0000 | 0.9970 |
| 6 | 0.7600 | 0.7447 | 0.9978 | 0.4983 | 0.8288 | 0.8039 | 0.8540 |
| 7 | 0.8211 | 0.8203 | 0.9910 | 0.7857 | 0.9195 | 0.9308 | 0.9361 |
| 8 | 0.8506 | 0.6070 | 0.8724 | 0.4723 | 0.6043 | 0.8786 | 0.8509 |
| 9 | 0.9905 | 0.9894 | 0.9958 | 0.9958 | 1.0000 | 0.9937 | 1.0000 |
| OA | 0.8923 | 0.7487 | 0.9097 | 0.6022 | 0.5878 | 0.8927 | 0.9237 |
| AA | 0.8997 | 0.8053 | 0.9378 | 0.7275 | 0.7429 | 0.9114 | 0.9361 |
| Kappa | 0.9905 | 0.6806 | 0.8829 | 0.5251 | 0.6080 | 0.8593 | 0.8995 |
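
The OA, AA, and Kappa rows in the result tables are summary metrics that can be derived from a confusion matrix. The sketch below assumes the conventional definitions: overall accuracy (OA) as the fraction of correctly classified samples, average accuracy (AA) as the mean of the per-class accuracies, and Cohen's kappa as chance-corrected agreement. The function name `summary_metrics` and the toy confusion matrix are illustrative assumptions and do not reproduce any value reported above.

```python
from typing import List, Tuple


def summary_metrics(confusion: List[List[int]]) -> Tuple[float, float, float]:
    """Compute (OA, AA, Kappa) from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    Assumes every class has at least one true sample.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))

    # Overall accuracy: correctly classified samples over all samples.
    oa = correct / total

    # Average accuracy: unweighted mean of per-class accuracies (recalls).
    per_class = [confusion[i][i] / sum(confusion[i]) for i in range(n_classes)]
    aa = sum(per_class) / n_classes

    # Cohen's kappa: (observed - expected agreement) / (1 - expected agreement).
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n_classes)) for j in range(n_classes)]
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa


# Toy two-class confusion matrix (made-up counts).
toy = [[8, 2], [1, 9]]
oa, aa, kappa = summary_metrics(toy)
print(round(oa, 4), round(aa, 4), round(kappa, 4))
```

Because AA weights every class equally while OA weights every sample equally, the two can diverge noticeably when the class sizes are as unbalanced as in the testing sets listed earlier.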

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shi, C.; Lv, Z.; Yang, X.; Xu, P.; Bibi, I. Hierarchical Multi-View Semi-Supervised Learning for Very High-Resolution Remote Sensing Image Classification. Remote Sens. 2020, 12, 1012. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12061012
