1. Introduction

There is a consensus that modern agricultural production is expected to happen within the requirements of sustainability and climate change [

1,

2]. This demands careful planning and precise forecasting of agricultural production, which rely on up-to-date, detailed and accurate information about agricultural land use [

3,

4]. The size and location of agricultural fields in active use and the type of use they are utilized for during each growing season is among the vital information needed. Equally important is the size and location of agricultural fields that are unused during a growing season. These areas are either temporarily or permanently out of food and fodder production. According to national agricultural policy in Norway, it is a goal to increase food production by 20 percent within 2030. To achieve this goal, it is necessary to maintain agricultural fields for food production.

Monitoring agricultural fields in practice is, however, a demanding task. Some countries have detailed land information system at the level of parcels, for, e.g., The Netherlands [

5], where annual monitoring can be conducted through parcel registration. The basic map unit in Norway, on the other hand, is the property map, while detailed maps at parcel level do not exist. Annual uses of agricultural areas are registered only through farmers’ applications for a national agricultural subsidy. Each farmer reports the size of the land in use and for which purpose it is used without linking it to a specific parcel or field. The data that is registered are, therefore, non-spatial. As these data are tied to subsidy benefits, the certainty of the registered data is at least questionable. Ideally, an independent system of permanent parcel delineation and annual monitoring is beneficial to land use planning, production forecasting and subsidy estimation. In the absence of such a system, an independent annual monitoring of agricultural land uses is a feasible alternative.

At the forefront of the list of tools used for agricultural monitoring is remote sensing. Remote sensing has long been used in agriculture for various purposes. Some examples of use cases are mapping agricultural land use and crop types [

6,

7], monitoring agricultural activities and crop growth [

8], forecasting yields [

9], and detection of diseases [

10]. Although not exhaustive, these application examples demonstrate the use of remote sensing as a tool for inventory, monitoring and forecasting agricultural production. However, there are a few limitations caused by the characteristics of the data and the methods of analysis. The low spatial resolutions of most publicly available remote sensing images often fail to fulfill the required spatial precision in fragmented agricultural fields. Weather conditions, at least for optical images, and low temporal resolution, limit the opportunities to obtain good quality data over a given period. The characteristically large data volume of remote sensing also continues to be a challenge.

The past few years have, however, seen major improvements in the technologies for acquiring, storing and analyzing remote sensing data. The spectral, spatial and temporal characteristics of remote sensing images have generally improved. A few satellites, both commercial and public, with high spatial resolution, have been launched. The Sentinel-2 optical satellites of the European Union’s Earth Observation Program called Copernicus collect data over the entire globe in 13 spectral bands, with a spatial resolution ranging between 10 and 60 m [

11]. They repeat the imaging process every 5 days at the equator and on even fewer days towards the poles. The high temporal resolution increases the chances of acquiring data on cloud-free days or mosaicking cloud free images over a relatively short period. It also adds a temporal dimension that can improve pattern recognition problems such as land cover classification.

Image-processing tools and algorithms have also improved over the past years. The improvements in big data and machine learning technologies are among the most important. Artificial Neural Network (ANN), although developed many decades ago, has evolved into a leading practical machine learning tool relatively recently, as a result of the exponential increase in computational capabilities and the availability of training data [

12]. Among the many machine learning algorithms developed based on ANN, Multilayer Perceptron (MLP) [

13], Convolutional Neural Network (CNN) [

14], Recurrent Neural Network (RNN) [

15], etc., are the most common examples. There is rich coverage from the literature on each of the algorithms and their numerous variants [

16,

17]. These ANN-based algorithms have proven to be effective alternatives to traditional statistical methods, especially for complex and nonlinear problems, as they are non-parametric and assume no prior statistical distribution of the data [

16]. The algorithms can be built in such a way that they take layer upon layer of variants of neural networks depending on the complexity of the data and the problem they handle. Deep learning is the phrase used to describe these multilayer neural networks [

12].

Deep learning is used in remote sensing for various applications [

18], image classification being one. Any of the deep learning models can be adapted for image classification depending on the characteristics of the data and the purpose of classification. Often, due to the complexity of the problem and the higher precision needed for the classification result, remotely sensed data from a point in time is not enough, even with the use of deep learning. Data over longer timespans, hence time series, can capture the temporal pattern that manifests differently for different classes of objects. Time series classification deals with assigning a label (class) to unlabeled time series data using a trained model from labeled training samples. Detailed reviews of methods used in time series classification, including deep learning, are available [

19] and will not be discussed here. MLP is often used when one chooses to ignore the temporal dimension and treat the time series as independent multivariate data. The MLP has been applied to optical and SAR images to classify seasonal crops in Ukraine [

20]. MLP has also been used to estimate sorghum biomass production from image time series acquired by an Unmanned Aerial Vehicle (UAV) [

21]. In both cases, the temporal and the spectral orders of the data are not fully utilized. The time dimension is considered in cases where, for example, RNN is used for time series classification [

22]. However, [

19] state that RNN is more suited to forecasting problems than classification. Among the limitations summarized by [

19] are that RNN: 1) suffers from a vanishing gradient problem, 2) is hard to train due to difficulties in parallelizing, 3) is computationally demanding as it relies on the total history as opposed to the CNN which learns from time windows. As a result, CNN is gaining popularity for time series classification. CNN is, in some cases, used with a 1D kernel along the temporal dimension, as demonstrated in [

23,

24]. 3D CNN is also implemented to include the temporal dimension as a third dimension, as, for example, used by [

25] for crop classification using multitemporal satellite images. 3D CNN involves convolution over the spatial dimensions, and the order of the spectral bands is not utilized. As a spatial window is used for the convolution, pixels are not evaluated independently.

Recently a comprehensive contribution proposed a temporal CNN architecture by demonstrating its application on Formosat-2 image time series [

26]. In the study, CNN is applied using kernels along the temporal and spectral dimensions turn by turn (1D) and simultaneously (2D) investigating the contribution of each dimension. The proposed temporal CNN architecture opens an interesting methodological option that needs further testing and application on other images and application areas. The spatial and temporal resolutions of the Formsat-2 [

27] are different from the currently widely used satellites, for example, Sentinel-2 [

11]. In conventional CNN, convolution is carried out in the spatial dimensions to make use of the spatial texture variations. The use of 2D CNN based on spatial dimension alone, as satellite remote sensing has limitations. This is partly due to the fact that the spatial features of land use types are not as distinct as often seen in ordinary object classes such as cats and dogs, widely used as examples in machine learning. Additionally, when spatial kernels are used for convolution, pixels are not evaluated independently of their adjacent pixels. This has implications for large pixels, as in the case of satellite images, and pixels at the boundaries of fields and at image edges. Enhancing a pixel into an image by using the spectral and temporal dimensions instead of the spatial dimension enables independent evaluation of a pixel. Temporal changes in land surface manifest differently for different land use types and indicate the usefulness of the temporal dimension in pattern classification, similar to the spectral dimension.

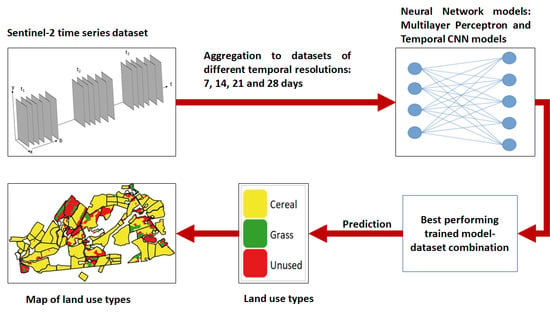

This paper presents an application of deep learning algorithms and satellite image time series (SITS) data from Sentinel-2 to map and monitor agricultural land use in Norway. The spectral–temporal differences in the different land use types are explored first. The study then investigates the applicability of temporal CNNs for mapping the major agricultural land use types: cereal crops, fodder crops (grasses), and land left out of production. The relatively simple MLP algorithm is tested and used as a baseline to evaluate the more complex CNN algorithms. We have optimized different variants of the deep learning models to identify the most suitable model for mapping land use types. Norway often has cloudy weather and missing observations that need to be addressed with interpolation. The research therefore investigates which temporal resolution of the Sentinel-2 SITS data, aggregated and interpolated for missing data, is most suitable for the objective stated in regions of frequent cloud coverage. The best performing model–dataset combination is then used to map and evaluate seasonal agricultural land uses. The results have implications for the seasonal monitoring of agricultural land uses in the absence of alternative methods.

2. Materials and Methods

2.1. Data

The Sentinel-2 images acquired during the period between the 15th of April and the 15th of October 2019 over the land area of Norway are downloaded and organized as a data cube in a NetCDF-4 format. As the land use types can change from year to year, we decided to use data from a single year, i.e., 2019. The year is selected as it is the most recent year for which training data were accessible. Six bands of the atmospherically corrected images (level 2A), namely the three visible (bands 2, 3 and 4), the near infrared (band 8A), and the two middle infrared bands (bands 11 and 12) are selected. The classification layer created during the atmospheric correction is also included for later masking of clouds and shadows. The bands are resampled to 20 m pixel size to reduce the data volume and the effect of georeferencing error. The satellite image time series (SITS) data are then stored tile by tile for the entire land area of the country in a data model represented in

Figure 1. Three agricultural land use classes are defined, namely: (1) Cereal, representing areas used for cultivation of cereals such as wheat, barley, rye, and oat; (2) Grass, representing areas used for the cultivation of grass-based fodder; (3) Unused, representing agricultural areas without crops, i.e., temporarily or permanently abandoned with activities not qualifying for subsidies.

Training data for each of the three classes are collected manually using the production subsidy (PT) database together with the property map. Some farmers have small farms, and they produce either cereals or grass on their farm. A farmer applies for a subsidy annually, and the production data for each farm are stored in the PT database. In this way, the production subsidy data are linked to the property map. In cases where it is difficult to establish a spatial connection between production reports and property maps, the farms are not represented in the training dataset. Such cases arise where a farmer reports that some part of his/her farm is in use for food and fodder crops while the rest is not, or some part is used for cereal crops while the rest is used for fodder grass. The unused class could not be obtained directly from the PT database. However, a year when a farmer has not applied for subsidy at all is an indication that the farm is not used for crop production, i.e., is unused. The training data used for further analysis are collected by picking three random points from each of the identified polygons.

The training points gathered in the above process contain the location data and the land use status. The values of the SITS data at the training points are extracted from the NetCDF files. After the extraction, every point has values for each band for each timestamp. In some cases, there are missing data due to the masking of clouds and shadows. In such cases, the values are interpolated from the two closest timestamps using linear interpolation. The training data are, thereafter, complete with the spectral data and the land use classes. About 43,000 training points are collected scattered throughout the country. The training data are further aggregated into different time steps, as discussed below.

2.2. Conceptualization of the SITS Data for Machine Learning

Let us assume time series as T = t

1, t

2, t

3, …, t

t, where subscripts 1…t stand for timestamps. In SITS, the time intervals are not uniform unless one is collecting data from only one orbital path without any filtering. This means that the time gap between t

1 and t

2 is not necessarily equal to the time gap between t

2 and t

3, and so forth. Additionally, optical satellite images are often affected by weather conditions. Therefore, there is a need for feature selection or feature extraction. The SITS data with size t are reduced to SITS data with size n, n being less than t, resulting in T = t

1, t

2, t

3, …, t

n. This reduces the data size, makes the time interval uniform and reduces noise (e.g., clouds and shadows). The temporal interval, sometimes called a window, to be used is chosen purposely. For this study, we used 7, 14, 21 and 28 days, respectively, representing from a week to a month. The temporal intervals are chosen for convenience in overlapping with multiples of week, as the temporal resolution of the Sentinel-2 is not constant for the entire country. Due to increasing overlaps between swaths of adjacent orbits with increasing latitude, the temporal frequency is higher over Norway than the nominal 5 days. As a result, the frequency over Norway varies from south to north. We selected a minimum interval of seven days to include multiple acquisitions for each location. The aggregation technique to map from

t size data to

n size data depends on the type of the time series data. To reduce the effects of shadows (low digital values) and clouds (high digital values), common in raw optical satellite data, the median statistic is preferred in this study. For the time steps lacking image data, the values are interpolated from the nearest time steps using linear interpolation method. Linear interpolation is a commonly known algorithm used for filling missing values in time series images, and is suitable when global smoothing is not attractive [

28]. Missing data in satellite images are often related to cloud cover, where adjacent pixels are missing at the same time. Therefore, in this case, temporal interpolation is more practical and preferred over spatial interpolation. The dataset then becomes a 4D array of two spatial dimensions representing the northings and eastings, with one dimension representing the spectral bands of the Sentinel-2 and one dimension representing the time steps chosen.

The analysis of the SITS data is approached based on the following three different conceptualizations:

(1) The image data for each band at each time are treated as an independent predictor variable. The data are not treated as time series but as a multivariate dataset, with multiple independent variables and a dependent variable. The number of the independent variables is equal to the number of bands multiplied by the number of time steps. The goal is then to train a model that relates the predictor variables to the dependent variable, the land use classes in this case, so that the model can be used to classify the scenes;

(2) The image data over the time periods are treated as a one-dimensional time series. That is, a pixel is assumed to be observed using each band alternatively over the time period. The spectral bands are treated as independent, whereas the temporal dimension is treated as a continuous series of temporal data. Temporal features of the spectral data can then be extracted to model the land use classes;

(3) Assigning one dimension to the time variable and another to the spectral bands, the observation at a pixel can be treated as a 2D representation of the pixel over temporal and spectral dimensions. Here, both the time order and the spectral order are important, together giving a 2D perspective of data collected at a point in space, i.e., a pixel. Both temporal, spectral and spectral–temporal features can be extracted by treating these 2D data as an entity, just like an image of a cat or any other object.

2.3. Implementation of the Deep Learning Algorithms

2.3.1. Preprocessing

The training data represent six spectral bands collected over the time period specified above. After aggregating the training data to a defined temporal resolution, the data are rescaled to values between 0 and 1 using the MinMax scaler of Scikit-learn preprocessing Python package (v. 0.23.2., [

29]). The data are then split into a training (70%) and a test (30%) set through random selection. The test dataset is kept out of the training process for later evaluation of the trained models.

2.3.2. Model Selection and Training

Python API of the Keras package, built on top of the Google-developed machine learning program called Tensorflow (v. 2.1.0, [

30]), is used. To implement a machine learning scheme, one needs to select a model and the model parameters. Variants of two ANN-based machine learning models, namely the MLP and the CNN, are selected based on the conceptualizations discussed above. Model parameters are of two types: trainable parameters that are learned during training, and the hyperparameters that are defined manually or fine-tuned automatically. The trainable parameters are mostly the weights and biases of the model, whereas there is a long list of hyperparameters depending on the type of model used.

The MLP model is widely applied to multivariate data fitting into the first conceptualization, defined above. The MLP models the relationship between predictor variables and a dependent variable by estimating the probability that a pixel or a record belongs to a class. The probability is a weighted sum of the predictor variables converted to probability using a certain function, e.g., SoftMax [

31]. The weights and the accompanying biases are estimated through iteration with the objective of minimizing a defined loss function. In search of an accurate model, layer upon layer of the neural net can be used, where the outputs of one layer are fed as input to the next layer. The output of the final layer is fed to the function that translates it into probability values. Mathematical details of deep learning architectures are given in the literature (e.g., [

32,

33]) and will not be discussed further here.

The MLP has a few hyperparameters whose values need to be set before the training. Optimizing the hyperparameters is not an easy task. Manual trial-and-error approaches are impractical for going through all the value domains. Therefore, an automated method of hyperparameter tuning is implemented using Keras tuner [

34]. The Keras tuner is a package that automatically goes through the different value combinations of the hyperparameters, and trains each combination, and evaluates and compares them based on a criterium set by the user, for example, the validation accuracy. The first step in optimizing the hyperparameters is defining the domains of each of the parameters. Traversing through the theoretical domains is practically impossible, as some of the parameters have an infinite domain. The practical domain (parameter space) can, therefore, be defined in a trial-and-error approach to obtain the threshold values beyond which the performances of the algorithm declines. Even after defining the parameter space, a brute-force approach of going through all possible combinations of the values is computationally demanding. Fortunately, the Keras tuner provides a system which selects a defined number of models whose parameter values are combined randomly, i.e., randomized search. Here, the randomized search method is applied with the parameter domains presented in

Table 1 below.

Variants of the CNN algorithm are implemented for the second and the third data conceptualization, shown above. Where only the temporal order is considered important, i.e., in the second conceptualization shown above, a CNN architecture that convolves the data with a 1D kernel along the temporal dimension, extraction features that are expressive of the temporal variation of the values, is implemented. Where the data are conceptualized as 2D data, with the spectral and temporal dimensions taking one dimension each, a 2D CNN algorithm is implemented. A 2D (along the spectral and temporal dimensions) kernel is then used to convolve the data to extract various features. This enables the convolution to be performed on a single pixel rather than on a set of adjacent pixels. Each pixel is treated as an image, as opposed to the spatial CNN, where an image is a set of adjacent pixels. A CNN architecture is defined based on the number and size of hyperparameters. The number of trainable parameters is dependent on the hyperparameters. Instead of using one of the available CNN architectures, the architecture is built through autotuning the different hyperparameters for both the 1D and the 2D CNN. Here, the randomized search of the Keras tuner module is also used after defining the search space of the hyperparameters as presented in

Table 1.

In

Figure 2, an example architecture of the 2D CNN model, based on the spectral and temporal dimensions, is presented. It is a simple architecture with two hidden layers. In most CNN implementations, convolution is followed by other steps (not included in the figure) that help the model to generalize better, for example pooling and dropout. The figure does not propose a new architecture; it is instead used to show how the spectral and temporal dimensions are used in the input layer. The input image depicted in the figure is a single pixel converted to an image using its temporal and spectral dimensions. The size of the spectral dimension is six, whereas the size of the dimensions varies depending on the temporal interval used. The kernel of size three by three refers to length of three in both the temporal and the spectral dimensions. The pixel is then convolved consecutively depending on the number of the hidden layers. The final convolution is flattened, and a fully connected network is then applied. Finally, the SoftMax function converts the final output to probability values for each of the classes. A batch of such pixel images are fed into the model during training and prediction.

2.3.3. Dealing with Overfitting

One of the challenges of machine learning is the tendency of the models to try to perfectly fit the training data, i.e., overfitting, instead of generalizing it. Overfit models fail to predict samples outside the training set with the same level of accuracy. To deal with overfitting of the models, some measures were taken, namely, dropout [

35], batch normalization [

36], regularization (both L1 and L2) [

37], data augmentation [

38] and early stopping. The dropout process removes a certain fraction of features, those with the lowest weights, in every layer. This forces the training to adapt more to the features with high weights. Batch normalization is important to rescale the inputs of the hidden layers and speed up the learning process. Although we rescale the input layer, there is a problem of value shift in the resulting features. Weight regularization penalizes the outliers, basically high-weight features, so that the model generalizes better. The dropout rate and the weight regularizations are optimized through the hyperparameter-tuning process. Additionally, to virtually increase the sample size and to generalize better, data augmentation is implemented. As in data orientation, temporal versus spectral is important and must be kept rigid, so no rotation, translation and shearing are implemented. The presence of noise is more realistic in this study. Therefore, in data augmentation, only spectral transformation using a gaussian noise of mean 0 and variance 0.03 is implemented. Early stopping is also implemented in such a way that the trends in the validation loss are monitored, and the training stops when the validation loss keeps on increasing for a certain number of consecutive epochs. Further training beyond this epoch leads to overfitting.

2.3.4. Testing of the Trained Models

As stated above, four temporal datasets were used based on four different temporal resolutions. Their comparison helps understand which temporal interval is suitable for time series analysis of Sentinel-2 images in cloudy regions such as Norway. The four temporal datasets were tested on three different deep learning models, namely MLP, 1D CNN and 2D CNN. As a result, twelve sets of model–dataset combinations were compared. The hyperparameters of the models were tuned using the randomized search method of the Keras tuner by limiting the number of the randomly selected models to 20 for each of the twelve combinations. One optimized model was selected for each of the twelve model–dataset combinations based on the validation accuracy from 20% of the training data. The optimized models were then compared to each other based on the accuracy with which the test classes were predicted, using overall accuracy and Cohen’s Kappa.

2.3.5. Prediction and Evaluation

Machine learning models often fail to predict with the same level of accuracy that they train with. Therefore, before deciding which model is best, a prediction is made for areas that have independent field observations. As the test data are collected under the same conditions as the data used for the training, a totally independent dataset is needed. Such data were obtained from two sources. The first source was the Norwegian Agricultural Environmental Monitoring Programme (JOVA). JOVA is a national monitoring programme for nutrient runoff from agricultural fields which consists of 13 sites across Norway where several environmental variables are monitored together with detailed information on agricultural practice on a parcel level. For this study, only one such site of 43 hectare was available, with data from 2019. The second approach was the manual collection of additional data using the PT database and the property map in a similar approach to the ones collected for the training, but this time using the entire polygons rather than selecting a few points.

We predicted the land use classes using twelve different optimized models for areas that have field-recorded data. To remove salt-and-paper type noise from the predicted maps, we used a majority filter of a three by three window. The field data from JOVA and PT were then compared to the predicted land use maps, and the numbers of agreeing and disagreeing pixels were counted. We computed the accuracies of the predictions based on the overall accuracy, precision, recall and F1 score [

39]. The model–dataset combination that produced the highest accuracy was used for the final prediction of the land use classes for the entire county.

4. Discussion

Four major results are presented in the above section: namely, temporal signatures of the three different seasonal land use classes, the architectures (hyperparameters) of the optimized models of the twelve model–dataset combinations, the accuracies of the model–dataset combinations as evaluated on held-out test data, and the predictive performances of the selected models. These results will be discussed here.

The spectral differences between the different land use classes are the basis for the use of remote sensing in land use classification. In this work, emphasis is given to the temporal signature, as the central theme of the work is time-series data. As shown in

Figure 3, there is a difference in the temporal signature between cereals and the other two classes. Cereals have a distinct SAVI temporal signature that starts to increase from late spring and early summer, attaining its peak during the summer, and decreases again during the autumn. This is expected, as cereals are sown in spring and harvested in autumn. Between those times, the cereals continue changing their vigor, which manifests in the SAVI temporal signature. The SAVI temporal signature of grass, on the other hand, shows some deep drops. Such a decline in SAVI can be explained by the multiple harvests of grass during the growing season. In Norway, grass is harvested and stored for use as fodder during the winter. The frequency of the harvest depends on the growing season and the growing conditions. The unused areas, however, lack a distinct spectral or temporal signature, as they are formed of numerous surface types. The one shown in the figure as an example is close to that of grass, as most unused areas are left alone for natural growth. In most cases, on unused areas, grass establishes first, which is gradually overtaken by bushes and shrubs. They finally give way to forest regeneration if left to the natural succession process. The distinctness of a spectral–temporal signature, or lack thereof, directly affects the success of classification and mapping of the class using either traditional image classification or machine learning algorithms, including the deep learning algorithms used in this work.

The use of the Keras tuner to optimize the hyperparameters gave interesting results, as presented in

Table 2. Firstly, one does not need to use very deep layers to obtain good results. The best-performing models have hidden layers ranging from 2 to 4. Deeper layers produce overfit models that do not generalize well and are computationally inefficient. Secondly, the activation function ReLU is selected in all cases, as is theoretically claimed [

12]. Thirdly, the number and size of the filters in the CNN algorithms is, interestingly, related to the dimensions. A higher number of filters is found to be optimal for the 1D CNN, rather than for the 2D CNN. This is explained by the fact that the 2D CNN already involves a large number of features, as feature number increases exponentially with dimension. The optimum filter size is also larger for the 1D CNN. The spectral dimension is limited to 6. Therefore, filter sizes larger than 5 are not practical for the 2D CNN. In the case of the 1D CNN, the filters can be larger depending on the size of the temporal dimension. A larger filter along the temporal dimension can extract temporal features over a longer period. Therefore, a filter size of 7 is found to be optimum for the 1D CNN. It appears that the 1D CNN compensates for its lower dimension by increasing the size and number of filters. Fourthly, the optimum dropout rate is higher when the number of features increases, as in the case of 2D CNN. The dropout rate is lower for the 1D CNN and the MLP. A low rate of regularization is considered as optimum, indicating that, as other overfitting measures such as dropout and batch normalization are included, the need for regularization is not as great.

As presented in the results section, the 2D CNN performs best compared to the other models. The 2D CNN extracts a greater number of features than the 1D CNN, but it can also be susceptible to overfitting, as some of these features do not necessarily contribute much. This can explain the higher rate of dropout in the 2D CNN. Additionally, both the temporal and the spectral orders are considered when using 2D kernels. Differences in the spectral characteristics of different land cover classes form the basis of land use/cover mapping using remotely sensed data. Additionally, the seasonal variation in the different land use types is also important. For example, unused areas simply a follow natural growth succession, while cereal and grass fields are susceptible to human interference, which also has different specific time periods. The temporal dimension captures this temporal signature of the different land use classes. The use of both complements each other and increases the performance of the model. The 1D CNN disregards the spectral order; although it is included in the analysis, the orderly signature is not captured by the 1D kernel. Therefore, although its convolutional method outperforms the MLP, its disregard of the spectral order makes its performance lower than that of the 2D CNN. However, one interesting fact is that the 1DCNN performed better than the 2D CNN in terms of prediction, at least for the areas in which we had field data, when using the same temporal resolution. This can be attributed to better generalization by the former, owing to the larger filter, and more overfitting by the later.

Both the 1D and 2D CNN have increased the level of accuracies compared to the baseline algorithm, i.e., the MLP, applied on the same dataset, as can be observed in

Figure 4. Even if the MLP is simple and can be used as a baseline, its hyperparameters need optimization to obtain the best performance. Considering the ease of application and efficiency of computation, the accuracies achieved by the MLP are appreciable and make it relevant in practical uses. Although systematic comparison of computational efficiencies is not part of this study, and hence not presented in the results, it is observed that the MLP is at least ten times faster than the 1D CNN, which in turn is about four times faster than the 2D CNN when similar depths are used. If one chooses to use the MLP for computational reasons, there will, consequently, be an accuracy trade-off.

The level of accuracies obtained, which ranges between 87% and 94%, is comparable to that of other studies of similar purpose. For example, [

7] applied Random Forest (RF), ensembles of MLP and CNN (both 1D and 2D) to multitemporal and multisource images to classify crop types. Their overall accuracies varied from 88.7 (RF) to 94.6 % (2D CNN). Additionally, [

25] applied 3D CNN on multitemporal images to classify crop types, and compared this with 2D CNN and other conventional methods. In agreement with our result, the CNN that involves the temporal dimension (3D CNN) produced the highest accuracy. Their technique uses 3D kernels, where pixels are not evaluated independently of adjacent pixels. Another relevant work is an early attempt at crop type classification by applying the RF classifier to mono-temporal Sentinel-2 images, which produced overall accuracies of 76% and 83% when object-based and pixel-based classification were used, respectively [

41]. The overall accuracy improved considerably (reaching 95%) when multi-temporal images were used on the same area in a later study [

42]. There is potential for improving the accuracy if the optical SITS data are supplemented with SAR SITS data, as demonstrated in a study by [

43]. The studies mentioned, in agreement with the present study, highlight the importance of the temporal dimension in crop-type and land-use mapping using satellite images. The studies use different setups, data and methods to compare their quantitative performances to the results of the present work. Comparison to state-of-the-art benchmark algorithms was also not part of this work. State-of-the-art benchmark algorithms for time series classification are analyzed and compared in [

19] and [

44]. The approach presented here could be included in future analysis and comparison of state-of-the art algorithms for time series classification.

Regarding the temporal resolutions, the 7 days dataset produced the best accuracy. Obtaining cloud-free images within a 7 days interval is often difficult. Some of the data were obtained by interpolation. The interpolation does not seem to affect the expected high accuracy from the highest temporal resolution, i.e., 7 days. As the temporal resolution gets worse, so does the accuracy. More frequent temporal data capture the minor temporal differences between the different land use classes. Increasing the temporal gaps smoothens out such minor differences, making it harder to accurately classify the land use classes.

The most challenging part of this study was obtaining samples that represent the unused areas accurately. The unused areas are areas that are known to be agricultural fields but that are not used for crop production that qualifies for subsidies. By implication, the surface condition of the area varies considerably. This fact makes it difficult both to manually identify it and to detect it using remote sensing. The lack of uniqueness in its surface condition leads to a lack of uniqueness in its spectral–temporal properties as well. The unused areas represented in the training dataset are fitted well by the deep learning algorithms, as the test accuracy for the class “unused” was not much different from that of grass and cereal. However, when predicted for entirely unknown areas, the unused areas and grass were not well separated, leading to a higher precision but lower recall for grass. Some of the areas wrongly predicted as unused include grass, green houses in the middle of cereal fields and other crop types such as fruits.

5. Conclusions and Outlook

In this study, Sentinel-2 SITS data were used to map three agricultural land use classes by implementing deep learning algorithms. The study has demonstrated that by using time-series data from Sentinel-2, it is possible to discriminate between the major production classes, cereals, grasses, and areas out of production. The last class is the most heterogeneous. It was difficult to obtain a large and representative training dataset for this class, and thus the prediction for the class had a relatively low accuracy. We need to focus on this land use category and improve the accuracy before the procedure is implemented at a national level. One strategy is to split the heterogeneous unused land-use class into several classes and train a model that predicts more than three classes. The difficulty is in obtaining a sufficient amount of reliable data for this class.

The results have also indicated that SITS data of higher temporal resolution produce more accurate maps of land-use classes, as details of the spectral–temporal differences are learned, as opposed to SITS data of lower temporal resolution. Additionally, the results suggest that CNN is superior to MLP in learning such time series data. The 1D and 2D CNN perform similarly: one trains better while the other generalizes better. This similarity in performance might change if the SITS data have numerous spectral bands, for example, in the case of hyperspectral, as the 2D CNN can then use larger filter sizes. This is a question to be investigated in possible future works. Additionally, considering the ease of application and efficiency of computation, the accuracies achieved by the MLP are appreciable and make it relevant in practical uses.

The study has also demonstrated that temporal CNN, i.e., the use of temporal and spectral dimensions, is applicable and useful in Sentinel-2 images. The approach partially circumvents the limitations of using CNN in spatial dimensions on low-resolution satellite images. The results of the work are expected to be applicable to regions with similar topo-climatic conditions to Norway, i.e., similarities in the frequency of cloud coverage and topographic conditions.