UAV Data as an Alternative to Field Sampling to Monitor Vineyards Using Machine Learning Based on UAV/Sentinel-2 Data Fusion

Abstract

1. Introduction

2. Materials

2.1. Study Site and Field Data Collection

2.2. Remote Sensing Data Acquisition

2.2.1. UAV Data

2.2.2. Sentinel-2 Data

2.3. Image Preprocessing

2.3.1. UAV Data Preprocessing

2.3.2. Sentinel-2 Data Preprocessing

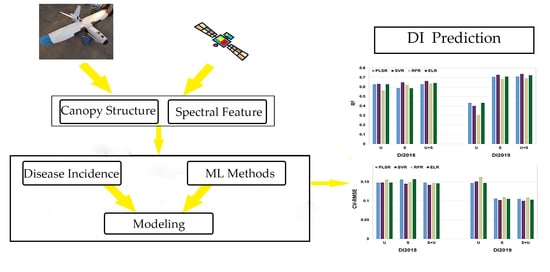

3. Methods

3.1. Feature Extraction

3.1.1. UAV Imagery-Based Canopy Feature Extraction

3.1.2. Sentinel-2 Imagery-Based VI Feature Extraction

3.2. Modeling Methods

4. Results

4.1. Assessment of Sampling Data Based on the UAV

4.1.1. Validation of Canopy Height

4.1.2. Assessment of the Relevance of UAV Data to Sentinel-2 Data

4.1.3. Correlation between DI and VIs

4.2. Machine Learning Modeling

5. Discussion

5.1. Overall Potential of UAV Data as an Alternative to Field Sampling

5.2. Comparison of Machine Learning Models

5.3. Contributions of Different Types of Features Extracted from Multiple Sensors

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Brook, A.; De Micco, V.; Battipaglia, G.; Erbaggio, A.; Ludeno, G.; Catapano, I.; Bonfante, A. A Smart Multiple Spatial and Temporal Resolution System to Support Precision Agriculture from Satellite Images: Proof of Concept on Aglianico Vineyard. Remote Sens. Environ. 2020, 240.

- King, P.D.; Smart, R.E.; McClellan, D.J. Timing of Crop Removal Has Limited Effect on Merlot Grape and Wine Composition. Agric. Sci. 2015, 6, 456–465.

- Meggio, F.; Zarco-Tejada, P.J.; Núñez, L.C.; Sepulcre-Cantó, G.; González, M.R.; Martín, P. Grape Quality Assessment in Vineyards Affected by Iron Deficiency Chlorosis Using Narrow-Band Physiological Remote Sensing Indices. Remote Sens. Environ. 2010, 114, 1968–1986.

- Oerke, E.C. Crop Losses to Pests. J. Agric. Sci. 2005, 144, 31–43.

- Jasrotia, P.; Yadav, J.; Lal Kashyap, P.; Kumar Bhardwaj, A.; Kumar, S.; Singh, G.P. Chapter 13—Impact of Climate Change on Insect Pests of Rice–Wheat Cropping System: Recent Trends and Mitigation Strategies. In Improving Cereal Productivity Through Climate Smart Practices; Sareen, S., Sharma, P., Singh, C., Jasrotia, P., Pratap Singh, G., Sarial, A.K., Eds.; Woodhead Publishing: Sawston, UK, 2021; pp. 225–239.

- Zhang, J.; Pu, R.; Huang, W.; Yuan, L.; Luo, J.; Wang, J. Using In-Situ Hyperspectral Data for Detecting and Discriminating Yellow Rust Disease from Nutrient Stresses. Field Crops Res. 2012, 134, 165–174.

- Grisham, M.P.; Johnson, R.M.; Zimba, P.V. Detecting Sugarcane Yellow Leaf Virus Infection in Asymptomatic Leaves with Hyperspectral Remote Sensing and Associated Leaf Pigment Changes. J. Virol. Methods 2010, 167, 140–145.

- Aktar, W.; Sengupta, D.; Chowdhury, A. Impact of Pesticides Use in Agriculture: Their Benefits and Hazards. Interdiscip. Toxicol. 2009, 2, 1–12.

- Muneret, L.; Thiéry, D.; Joubard, B.; Rusch, A.; McKenzie, A. Deployment of Organic Farming at a Landscape Scale Maintains Low Pest Infestation and High Crop Productivity Levels in Vineyards. J. Appl. Ecol. 2018, 55, 1516–1525.

- Rodríguez-San Pedro, A.; Allendes, J.L.; Beltrán, C.A.; Chaperon, P.N.; Saldarriaga-Córdoba, M.M.; Silva, A.X.; Grez, A.A. Quantifying Ecological and Economic Value of Pest Control Services Provided by Bats in a Vineyard Landscape of Central Chile. Agric. Ecosyst. Environ. 2020, 302, 107063.

- Ramoelo, A.; Dzikiti, S.; van Deventer, H.; Maherry, A.; Cho, M.A.; Gush, M. Potential to Monitor Plant Stress Using Remote Sensing Tools. J. Arid Environ. 2015, 113, 134–144.

- Weiss, M.; Jacob, F.; Duveiller, G. Remote Sensing for Agricultural Applications: A Meta-Review. Remote Sens. Environ. 2020, 236.

- Seelig, H.D.; Hoehn, A.; Stodieck, L.S.; Klaus, D.M.; Adams, W.W.; Emery, W.J. Relations of Remote Sensing Leaf Water Indices to Leaf Water Thickness in Cowpea, Bean, and Sugarbeet Plants. Remote Sens. Environ. 2008, 112, 445–455.

- Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S.; et al. Unmanned Aerial System (UAS)-Based Phenotyping of Soybean Using Multi-Sensor Data Fusion and Extreme Learning Machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58.

- Sagan, V.; Maimaitijiang, M.; Sidike, P.; Maimaitiyiming, M.; Erkbol, H.; Hartling, S.; Peterson, K.T.; Peterson, J.; Burken, J.; Fritschi, F. UAV/Satellite Multiscale Data Fusion for Crop Monitoring and Early Stress Detection. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W13, 715–722.

- Mutanga, O.; Dube, T.; Galal, O. Remote Sensing of Crop Health for Food Security in Africa: Potentials and Constraints. Remote Sens. Appl. Soc. Environ. 2017, 8, 231–239.

- Vuolo, F.; Żółtak, M.; Pipitone, C.; Zappa, L.; Wenng, H.; Immitzer, M.; Weiss, M.; Frederic, B.; Atzberger, C. Data Service Platform for Sentinel-2 Surface Reflectance and Value-Added Products: System Use and Examples. Remote Sens. 2016, 8, 938.

- Khaliq, A.; Comba, L.; Biglia, A.; Ricauda Aimonino, D.; Chiaberge, M.; Gay, P. Comparison of Satellite and UAV-Based Multispectral Imagery for Vineyard Variability Assessment. Remote Sens. 2019, 11, 436.

- Moeckel, T.; Safari, H.; Reddersen, B.; Fricke, T.; Wachendorf, M. Fusion of Ultrasonic and Spectral Sensor Data for Improving the Estimation of Biomass in Grasslands with Heterogeneous Sward Structure. Remote Sens. 2017, 9, 98.

- Wang, R.; Gamon, J.A. Remote Sensing of Terrestrial Plant Biodiversity. Remote Sens. Environ. 2019, 231.

- Wang, C.; Nie, S.; Xi, X.; Luo, S.; Sun, X. Estimating the Biomass of Maize with Hyperspectral and LiDAR Data. Remote Sens. 2016, 9, 11.

- Hornero, A.; Hernández-Clemente, R.; North, P.R.J.; Beck, P.S.A.; Boscia, D.; Navas-Cortes, J.A.; Zarco-Tejada, P.J. Monitoring the Incidence of Xylella fastidiosa Infection in Olive Orchards Using Ground-Based Evaluations, Airborne Imaging Spectroscopy and Sentinel-2 Time Series Through 3-D Radiative Transfer Modelling. Remote Sens. Environ. 2020, 236.

- Sepulcre-Cantó, G.; Zarco-Tejada, P.J.; Jiménez-Muñoz, J.C.; Sobrino, J.A.; Soriano, M.A.; Fereres, E.; Vega, V.; Pastor, M. Monitoring Yield and Fruit Quality Parameters in Open-Canopy Tree Crops under Water Stress. Implications for ASTER. Remote Sens. Environ. 2007, 107, 455–470.

- Xu, F.; Gao, Z.; Jiang, X.; Shang, W.; Ning, J.; Song, D.; Ai, J. A UAV and S2A Data-Based Estimation of the Initial Biomass of Green Algae in the South Yellow Sea. Mar. Pollut. Bull. 2018, 128, 408–414.

- Serrano, L.; González-Flor, C.; Gorchs, G. Assessment of Grape Yield and Composition Using the Reflectance Based Water Index in Mediterranean Rainfed Vineyards. Remote Sens. Environ. 2012, 118, 249–258.

- Pla, M.; Bota, G.; Duane, A.; Balagué, J.; Curcó, A.; Gutiérrez, R.; Brotons, L. Calibrating Sentinel-2 Imagery with Multispectral UAV Derived Information to Quantify Damages in Mediterranean Rice Crops Caused by Western Swamphen (Porphyrio porphyrio). Drones 2019, 3, 45.

- Rischbeck, P.; Elsayed, S.; Mistele, B.; Barmeier, G.; Heil, K.; Schmidhalter, U. Data Fusion of Spectral, Thermal and Canopy Height Parameters for Improved Yield Prediction of Drought Stressed Spring Barley. Eur. J. Agron. 2016, 78, 44–59.

- Kattenborn, T.; Lopatin, J.; Förster, M.; Braun, A.C.; Fassnacht, F.E. UAV Data as Alternative to Field Sampling to Map Woody Invasive Species Based on Combined Sentinel-1 and Sentinel-2 Data. Remote Sens. Environ. 2019, 227, 61–73.

- Ordóñez Galán, C.; Rodríguez Pérez, J.R.; García Cortés, S.; Bernardo Sánchez, A. Analysis of the Influence of Forestry Environments on the Accuracy of GPS Measurements by Means of Recurrent Neural Networks. Math. Comput. Model. 2013, 57, 2016–2023.

- Wing, M.G.; Frank, J. Vertical Measurement Accuracy and Reliability of Mapping-Grade GPS Receivers. Comput. Electron. Agric. 2011, 78, 188–194.

- Turner, W. Sensing Biodiversity. Science 2014, 346, 301–302.

- Leitao, P.J.; Schwieder, M.; Pötzschner, F.; Pinto, J.R.R.; Teixeira, A.M.C.; Pedroni, F.; Sanchez, M.; Rogass, C.; van der Linden, S.; Bustamante, M.M.C.; et al. From Sample to Pixel: Multi-Scale Remote Sensing Data for Upscaling Aboveground Carbon Data in Heterogeneous Landscapes; Humboldt-Universität zu Berlin: Berlin, Germany, 2018.

- Maimaitiyiming, M.; Sagan, V.; Sidike, P.; Kwasniewski, M. Dual Activation Function-Based Extreme Learning Machine (ELM) for Estimating Grapevine Berry Yield and Quality. Remote Sens. 2019, 11, 740.

- Margenot, A.; O’Neill, T.; Sommer, R.; Akella, V. Predicting Soil Permanganate Oxidizable Carbon (POXC) by Coupling DRIFT Spectroscopy and Artificial Neural Networks (ANN). Comput. Electron. Agric. 2020, 168.

- Loozen, Y.; Rebel, K.T.; de Jong, S.M.; Lu, M.; Ollinger, S.V.; Wassen, M.J.; Karssenberg, D. Mapping Canopy Nitrogen in European Forests Using Remote Sensing and Environmental Variables with the Random Forests Method. Remote Sens. Environ. 2020, 247, 111933.

- Were, K.; Bui, D.T.; Dick, Ø.B.; Singh, B.R. A Comparative Assessment of Support Vector Regression, Artificial Neural Networks, and Random Forests for Predicting and Mapping Soil Organic Carbon Stocks across an Afromontane Landscape. Ecol. Indic. 2015, 52, 394–403.

- Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme Learning Machine: Theory and Applications. Neurocomputing 2006, 70, 489–501.

- Meacham-Hensold, K.; Montes, C.M.; Wu, J.; Guan, K.; Fu, P.; Ainsworth, E.A.; Pederson, T.; Moore, C.E.; Brown, K.L.; Raines, C.; et al. High-Throughput Field Phenotyping Using Hyperspectral Reflectance and Partial Least Squares Regression (PLSR) Reveals Genetic Modifications to Photosynthetic Capacity. Remote Sens. Environ. 2019, 231.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean Yield Prediction from UAV Using Multimodal Data Fusion and Deep Learning. Remote Sens. Environ. 2020, 237.

- Cardoso, J.; Santos, A.A.; Rossetti, A.; Vidal, J. Relationship between Incidence and Severity of Cashew Gummosis in Semiarid North-Eastern Brazil. Plant Pathol. 2004, 53, 363–367.

- Carisse, O.; Lefebvre, A.; Heyden, H.; Roberge, L.; Brodeur, L. Analysis of Incidence–Severity Relationships for Strawberry Powdery Mildew as Influenced by Cultivar, Cultivar Type, and Production Systems. Plant Dis. 2013, 97, 354–362.

- Govorcin, M.; Pribicevic, B.; Đapo, A. Comparison and Analysis of Software Solutions for Creation of a Digital Terrain Model Using Unmanned Aerial Vehicles. In Proceedings of the 14th International Multidisciplinary Scientific GeoConference SGEM 2014, Albena, Bulgaria, 17–26 June 2014.

- Cubero-Castan, M.; Schneider-Zapp, K.; Bellomo, M.; Shi, D.; Rehak, M.; Strecha, C. Assessment of the Radiometric Accuracy in a Target Less Work Flow Using Pix4D Software. In Proceedings of the 2018 9th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 23–26 September 2018; pp. 1–4.

- Generate High Resolution Outputs for Any Project and Use Case. Available online: https://www.pix4d.com/product/pix4dmapper-photogrammetry-software (accessed on 27 January 2021).

- Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-Based Multispectral Remote Sensing for Precision Agriculture: A Comparison between Different Cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136.

- Matese, A.; Di Gennaro, S.; Berton, A. Assessment of a Canopy Height Model (CHM) in a Vineyard Using UAV-Based Multispectral Imaging. Int. J. Remote Sens. 2016, 38, 1–11.

- Wilke, N.; Siegmann, B.; Klingbeil, L.; Burkart, A.; Kraska, T.; Muller, O.; van Doorn, A.; Heinemann, D.G.S. Quantifying Lodging Percentage and Lodging Severity Using a UAV-Based Canopy Height Model Combined with an Objective Threshold Approach. Remote Sens. 2019, 11, 515.

- Panagiotidis, D.; Abdollahnejad, A.; Surovy, P.; Chiteculo, V. Determining Tree Height and Crown Diameter from High-Resolution UAV Imagery. Int. J. Remote Sens. 2017, 38.

- Tzotsos, A.; Argialas, D. Support Vector Machine Classification for Object-Based Image Analysis. In Lecture Notes in Geoinformation and Cartography; Springer Nature: Cham, Switzerland, 2008; pp. 663–677.

- Wan, L.; Cen, H.; Zhu, J.; Zhang, J.; Zhu, Y.; Sun, D.; Du, X.; Zhai, L.; Weng, H.; Li, Y.; et al. Grain Yield Prediction of Rice Using Multi-Temporal UAV-Based RGB and Multispectral Images and Model Transfer—A Case Study of Small Farmlands in the South of China. Agric. For. Meteorol. 2020, 291.

- Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J.; Dammer, K.-H. Monitoring Agronomic Parameters of Winter Wheat Crops with Low-Cost UAV Imagery. Remote Sens. 2016, 8, 706.

- Song, Y.; Lu, Y.; Liu, T.; Li, H.; Yue, Z.; Liu, H.; Gao, T. Variation of Vegetation Fractional Coverage and Its Relationship with Climate in a Desert Steppe: Optimization of Farmland Layout in a Farming–Pastoral Ecotone Using the Ecological Suitability Index. Ecol. Eng. 2020, 150, 105834.

- Tong, S.; Zhang, J.; Ha, S.; Lai, Q.; Ma, Q. Dynamics of Fractional Vegetation Coverage and Its Relationship with Climate and Human Activities in Inner Mongolia, China. Remote Sens. 2016, 8, 776.

- Wang, Y.; Sun, M.; Song, B. Public Perceptions of and Willingness to Pay for Sponge City Initiatives in China. Resour. Conserv. Recycl. 2017, 122, 11–20.

- Jönsson, P.; Eklundh, L. Seasonality Extraction by Function Fitting to Time-Series of Satellite Sensor Data. IEEE Trans. Geosci. Remote Sens. 2002, 40, 1824–1832.

- Chauhan, S.; Darvishzadeh, R.; Boschetti, M.; Pepe, M.; Nelson, A. Remote Sensing-Based Crop Lodging Assessment: Current Status and Perspectives. ISPRS J. Photogramm. Remote Sens. 2019, 151, 124–140.

- Gitelson, A.; Kaufman, Y.; Merzlyak, M. Use of a Green Channel in Remote Sensing of Global Vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Roujean, J.-L.; Breon, F.-M. Estimating PAR Absorbed by Vegetation from Bidirectional Reflectance Measurements. Remote Sens. Environ. 1995, 51, 375–384.

- Chen, J.M. Evaluation of Vegetation Indices and a Modified Simple Ratio for Boreal Applications. Can. J. Remote Sens. 1996, 22, 229–242.

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated Narrow-Band Vegetation Indices for Prediction of Crop Chlorophyll Content for Application to Precision Agriculture. Remote Sens. Environ. 2002, 81, 416–426.

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A Modified Soil Adjusted Vegetation Index. Remote Sens. Environ. 1994, 48, 119–126.

- Nichol, C.J.; Huemmrich, K.F.; Black, T.A.; Jarvis, P.G.; Walthall, C.L.; Grace, J.; Hall, F.G. Remote Sensing of Photosynthetic-Light-Use Efficiency of Boreal Forest. Agric. For. Meteorol. 2000, 101, 131–142.

- Zarco-Tejada, P.J.; Miller, J.R.; Mohammed, G.H.; Noland, T.L.; Sampson, P.H. Estimation of Chlorophyll Fluorescence under Natural Illumination from Hyperspectral Data. Int. J. Appl. Earth Obs. Geoinf. 2001, 3, 321–327.

- Frampton, W.J.; Dash, J.; Watmough, G.; Milton, E.J. Evaluating the Capabilities of Sentinel-2 for Quantitative Estimation of Biophysical Variables in Vegetation. ISPRS J. Photogramm. Remote Sens. 2013, 82, 83–92.

- Blackburn, G.A. Spectral Indices for Estimating Photosynthetic Pigment Concentrations: A Test Using Senescent Tree Leaves. Int. J. Remote Sens. 1998, 19, 657–675.

- Hunt, E.R.; Daughtry, C.S.T.; Eitel, J.U.H.; Long, D.S. Remote Sensing Leaf Chlorophyll Content Using a Visible Band Index. Agron. J. 2011, 103, 1090–1099.

- Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V.Y. Non-Destructive Optical Detection of Pigment Changes during Leaf Senescence and Fruit Ripening. Physiol. Plant. 1999, 106, 135–141.

- Yeniay, Ö.; Goktas, A. A Comparison of Partial Least Squares Regression with Other Prediction Methods. Hacet. J. Math. Stat. 2002, 31, 99–111.

- Genuer, R.; Poggi, J.-M. Random Forests with R; Springer: Berlin, Germany, 2020; pp. 33–55.

- Gholizadeh, A.; Boruvka, L.; Saberioon, M.; Vašát, R. A Memory-Based Learning Approach as Compared to Other Data Mining Algorithms for the Prediction of Soil Texture Using Diffuse Reflectance Spectra. Remote Sens. 2016, 8, 341.

- Walker, S.; Khan, W.; Katic, K.; Maassen, W.; Zeiler, W. Accuracy of Different Machine Learning Algorithms and Added-Value of Predicting Aggregated-Level Energy Performance of Commercial Buildings. Energy Build. 2020, 209.

- Johansen, K.; Raharjo, T.; McCabe, M. Using Multi-Spectral UAV Imagery to Extract Tree Crop Structural Properties and Assess Pruning Effects. Remote Sens. 2018, 10, 854.

- Steven, M. The Sensitivity of the OSAVI Vegetation Index to Observational Parameters. Remote Sens. Environ. 1998, 63, 49–60.

- Xiao, X.; Zhang, T.; Zhong, X.; Shao, W.; Li, X. Support Vector Regression Snow-Depth Retrieval Algorithm Using Passive Microwave Remote Sensing Data. Remote Sens. Environ. 2018, 210, 48–64.

- Rodriguez-Galiano, V.; Sánchez Castillo, M.; Chica-Olmo, M.; Chica Rivas, M. Machine Learning Predictive Models for Mineral Prospectivity: An Evaluation of Neural Networks, Random Forest, Regression Trees and Support Vector Machines. Ore Geol. Rev. 2015, 71.

- Grabska, E.; Frantz, D.; Ostapowicz, K. Evaluation of Machine Learning Algorithms for Forest Stand Species Mapping Using Sentinel-2 Imagery and Environmental Data in the Polish Carpathians. Remote Sens. Environ. 2020, 251.

- Pullanagari, R.R.; Kereszturi, G.; Yule, I.J. Mapping of Macro and Micro Nutrients of Mixed Pastures Using Airborne AisaFENIX Hyperspectral Imagery. ISPRS J. Photogramm. Remote Sens. 2016, 117, 1–10.

- Stanton, C.; Starek, M.J.; Elliott, N.; Brewer, M.; Maeda, M.M.; Chu, T. Unmanned Aircraft System-Derived Crop Height and Normalized Difference Vegetation Index Metrics for Sorghum Yield and Aphid Stress Assessment. J. Appl. Remote Sens. 2017, 11.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357.

- Näsi, R.; Viljanen, N.; Kaivosoja, J.; Alhonoja, K.; Hakala, T.; Markelin, L.; Honkavaara, E. Estimating Biomass and Nitrogen Amount of Barley and Grass Using UAV and Aircraft Based Spectral and Photogrammetric 3D Features. Remote Sens. 2018, 10, 1082.

| DS Level | Severity | Symptom | Damaged Area | Incidence |
|---|---|---|---|---|
| 0 | Healthy | Symptomless | 0% | No Incidence |
| 1 | Initial | Leaves became brown and curled up | 0%–25% | Incidence |
| 2 | Medium | Canopy with a small portion of dead branches and brown leaves | 25%–45% | Incidence |
| 3 | High | Canopy with a large area of dead branches | 45%–65% | Incidence |
| 4 | Very high | Dead canopy | >65% | Incidence |
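The severity table maps the share of damaged canopy area to a disease severity (DS) level. The following Python sketch encodes that mapping and adds a disease index (DI) computed with the conventional McKinney-style formula, DI = 100 × Σ levels / (4 × number of plants). Two things here are assumptions and do not come from the paper. Each range's upper bound is treated as inclusive, because the table's ranges share endpoints such as 25%. The DI formula is also assumed, since this text does not give the paper's own definition. `ds_level` and `disease_index` are hypothetical names.

```python
MAX_LEVEL = 4  # highest DS level in the severity table ("Dead canopy")

# (inclusive upper bound of damaged area in %, DS level) for levels 1-3.
# Inclusive bounds are an assumption: the table's ranges share endpoints.
UPPER_BOUNDS = [(25.0, 1), (45.0, 2), (65.0, 3)]


def ds_level(damaged_area_pct: float) -> int:
    """Map the percentage of damaged canopy area to a DS level (0-4)."""
    if not 0.0 <= damaged_area_pct <= 100.0:
        raise ValueError("damaged area must be a percentage in [0, 100]")
    if damaged_area_pct == 0.0:
        return 0  # healthy, symptomless, no incidence
    for upper, level in UPPER_BOUNDS:
        if damaged_area_pct <= upper:
            return level
    return MAX_LEVEL  # more than 65% of the canopy damaged


def disease_index(levels: list[int]) -> float:
    """Disease index in %, assuming DI = 100 * sum(levels) / (MAX_LEVEL * n).

    This is the conventional McKinney-style index, used as an assumption;
    it is not necessarily the paper's own DI definition.
    """
    if not levels:
        raise ValueError("need at least one plant")
    return 100.0 * sum(levels) / (MAX_LEVEL * len(levels))


if __name__ == "__main__":
    areas = [0.0, 10.0, 30.0, 50.0, 80.0]  # illustrative values only
    levels = [ds_level(a) for a in areas]
    print(levels, disease_index(levels))  # [0, 1, 2, 3, 4] 50.0
```

Keeping the level mapping separate from the index means the same levels could feed a different DI definition if the paper's formula differs.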

| Vegetation Index | Reference |
|---|---|
| Normalized Difference Vegetation Index (NDVI) | [56] |
| Green Normalized Difference Vegetation Index (GNDVI) | [57] |
| Renormalized Difference Vegetation Index (RDVI) | [58] |
| Modified Simple Ratio (MSR) | [59] |
| Transformed NDVI (TNDVI) | [59] |
| Normalized Difference Index (NDI) | [60] |
| Optimized Soil-Adjusted Vegetation Index (OSAVI) | [59] |
| Modified Soil-Adjusted Vegetation Index (MSAVI) | [61] |
| Atmospherically Resistant Vegetation Index (ARVI) | [62] |
| Chlorophyll Index (CI) | [63] |
| Inverted Red-Edge Chlorophyll Index (IRECI) | [64] |
| Pigment Specific Simple Ratio A (PSSRa) | [65] |
| Sentinel-2 Red Edge Position (S2REP) | [65] |
| Transformed Chlorophyll Absorption Ratio Index (TCARI) | [60] |
| Modified Chlorophyll Absorption Ratio Index (MCARI) | [66] |
| TCARI/OSAVI | [60] |
| Plant Senescence Reflectance Index (PSRI) | [67] |
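The vegetation index table lists the indices and their sources but not their equations. As an illustration only, the following Python sketch implements common literature forms of several of them on Sentinel-2 surface reflectance. It assumes B2 (blue), B3 (green), B4 (red), B5 to B7 (red edge) and B8 (NIR). The band choices and constants used in the paper may differ. For example, OSAVI is sometimes scaled by 1.16, and PSRI is written here with B6 standing in for the 750 nm band. The function name `vegetation_indices` is hypothetical.

```python
def vegetation_indices(b2, b3, b4, b5, b6, b7, b8):
    """Return selected VIs from Sentinel-2 surface reflectance (0-1).

    Only arithmetic operators and ** are used, so each argument may be a
    float or a NumPy array of matching shape (evaluated element-wise).
    The formulas are common literature forms, assumed rather than taken
    from the paper.
    """
    sr = b8 / b4  # simple ratio NIR/red, reused by MSR
    return {
        "NDVI": (b8 - b4) / (b8 + b4),
        "GNDVI": (b8 - b3) / (b8 + b3),
        "RDVI": (b8 - b4) / (b8 + b4) ** 0.5,
        "MSR": (sr - 1) / (sr + 1) ** 0.5,
        # OSAVI with soil-adjustment constant 0.16 (no 1.16 scaling)
        "OSAVI": (b8 - b4) / (b8 + b4 + 0.16),
        "MSAVI": (2 * b8 + 1 - ((2 * b8 + 1) ** 2 - 8 * (b8 - b4)) ** 0.5) / 2,
        # Red-edge indices written with Sentinel-2 band numbers
        "IRECI": (b7 - b4) / (b5 / b6),
        "S2REP": 705 + 35 * ((b7 + b4) / 2 - b5) / (b6 - b5),
        # PSRI = (red - blue) / red edge, with B6 substituting for ~750 nm
        "PSRI": (b4 - b2) / b6,
    }


if __name__ == "__main__":
    # Illustrative (made-up) reflectances for a single vine-canopy pixel
    vis = vegetation_indices(b2=0.05, b3=0.08, b4=0.1, b5=0.15,
                             b6=0.35, b7=0.45, b8=0.5)
    for name, value in vis.items():
        print(f"{name}: {value:.3f}")
```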

| Statistic | All Samples | Training | Reference |
|---|---|---|---|
| No. of Samples | 144 | 100 | 44 |
| Mean | 19.3 | 19.4 | 18.9 |
| Median | 18 | 18 | 18 |
| Minimum | 12 | 12 | 12 |
| Maximum | 39 | 39 | 39 |
| Kurtosis | 4.050 | 5.343 | 0.711 |
| Skewness | 1.497 | 1.784 | 0.849 |
| CV | 0.205 | 0.207 | 0.197 |
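The summary statistics in the sample table can be recomputed for any sample set. The following Python sketch uses moment-based (population) skewness and excess kurtosis, and the sample standard deviation for the CV. Statistical packages often apply small-sample corrections, so the paper's values may have been computed differently. The sketch also shows one way to split 144 samples into 100 training and 44 reference samples. A seeded random split is an assumption, because the text does not say how the split was made. `describe` and `split_samples` are hypothetical names.

```python
import random
import statistics


def describe(values: list[float]) -> dict[str, float]:
    """Summary statistics matching the rows of the sample table."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values")
    mean = statistics.fmean(values)
    # Central moments in population form (divide by n)
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    if m2 == 0:
        raise ValueError("values are constant; shape statistics undefined")
    return {
        "n": n,
        "mean": mean,
        "median": statistics.median(values),
        "min": min(values),
        "max": max(values),
        "kurtosis": m4 / m2 ** 2 - 3.0,  # excess kurtosis, uncorrected
        "skewness": m3 / m2 ** 1.5,      # moment skewness, uncorrected
        "cv": statistics.stdev(values) / mean,  # sample SD / mean
    }


def split_samples(samples: list, n_train: int, seed: int = 0):
    """Randomly split samples into training and reference subsets."""
    rng = random.Random(seed)
    shuffled = samples[:]  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


if __name__ == "__main__":
    train, reference = split_samples(list(range(144)), n_train=100)
    print(len(train), len(reference))  # 100 44
    print(describe([1.0, 2.0, 3.0, 4.0, 5.0]))
```

A stratified split over the response range would be an alternative design choice when the distribution is as skewed as the training column suggests.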

| Platform | Metric | RSLR | SVR | RFR | ELR |
|---|---|---|---|---|---|
| Parrot Sequoia+ (CH, VFC) | R² | 0.432 | 0.399 | 0.305 | 0.432 |
| | RMSE | 2.785 | 2.863 | 3.078 | 2.785 |
| | CV-RMSE | 0.147 | 0.151 | 0.163 | 0.147 |
| Satellite (VI) | R² | 0.706 | 0.728 | 0.682 | 0.709 |
| | RMSE | 2.009 | 1.925 | 2.083 | 1.989 |
| | CV-RMSE | 0.106 | 0.1 | 0.11 | 0.105 |
| Satellite + Parrot Sequoia+ (CH, VFC, VI) | R² | 0.71 | 0.736 | 0.69 | 0.723 |
| | RMSE | 1.982 | 1.898 | 2.057 | 1.946 |
| | CV-RMSE | 0.105 | 0.1 | 0.109 | 0.103 |
| Satellite + RGB (CH, CC, VI) | R² | 0.69 | 0.716 | 0.68 | 0.69 |
| | RMSE | 2.058 | 1.97 | 2.076 | 2.058 |
| | CV-RMSE | 0.109 | 0.104 | 0.11 | 0.109 |
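The accuracy metrics in the model comparison table can be computed as follows. This sketch assumes R² is the coefficient of determination (1 − SS_res/SS_tot), although some studies report the squared Pearson correlation instead. It defines CV-RMSE as RMSE divided by the mean of the observed values. That definition fits the tables: dividing RMSE by the reference-set mean of 18.9 reproduces most of the reported CV-RMSE entries. For example, 2.785 / 18.9 ≈ 0.147. `regression_metrics` is a hypothetical name.

```python
def regression_metrics(observed: list[float], predicted: list[float]) -> dict[str, float]:
    """R², RMSE and CV-RMSE for a set of reference samples."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("observed and predicted must be non-empty and equal length")
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values are constant; R² is undefined")
    rmse = (ss_res / n) ** 0.5
    return {
        "R2": 1.0 - ss_res / ss_tot,   # coefficient of determination
        "RMSE": rmse,
        "CV-RMSE": rmse / mean_obs,    # RMSE normalized by the observed mean
    }


if __name__ == "__main__":
    print(regression_metrics([2.0, 4.0, 6.0], [3.0, 4.0, 5.0]))
    print(round(2.785 / 18.9, 3))  # 0.147
```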

| Platform | Metric | DI2018 | DI2019 |
|---|---|---|---|
| Parrot Sequoia+ (CH, VFC) | R² | 0.632 | 0.399 |
| | RMSE | 2.11 | 2.863 |
| | CV-RMSE | 0.148 | 0.151 |
| Satellite (VI) | R² | 0.648 | 0.727 |
| | RMSE | 2.066 | 1.928 |
| | CV-RMSE | 0.145 | 0.102 |
| Satellite + Parrot Sequoia+ (CH, VFC, VI) | R² | 0.662 | 0.736 |
| | RMSE | 2.024 | 1.898 |
| | CV-RMSE | 0.142 | 0.1 |
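The fused "Satellite + Parrot Sequoia+ (CH, VFC, VI)" rows combine UAV-derived canopy features with Sentinel-2 VIs. Doing so requires summarizing high-resolution UAV rasters over each Sentinel-2 pixel. The following Python sketch shows one way to do this for a canopy height model (CHM), assumed to be already co-registered with the Sentinel-2 grid. Each block of `factor` × `factor` UAV pixels yields two values. The first is a mean canopy height over canopy pixels only. The second is a vegetation fractional coverage, read here as the share of pixels taller than a threshold. The 0.5 m threshold, the block-based resampling and the name `aggregate_chm` are illustrative assumptions, not the paper's procedure. Production workflows would normally resample with a GIS library such as GDAL or rasterio.

```python
def aggregate_chm(chm: list[list[float]], factor: int, height_threshold: float = 0.5):
    """Aggregate a fine-resolution CHM (metres) onto a coarser grid.

    Returns two coarse grids: mean canopy height (CH) over pixels taller
    than `height_threshold`, and vegetation fractional coverage (VFC), the
    share of such pixels in each block. Threshold and block averaging are
    illustrative assumptions.
    """
    rows, cols = len(chm), len(chm[0])
    if rows % factor or cols % factor:
        raise ValueError("raster size must be a multiple of the block factor")
    ch_grid, vfc_grid = [], []
    for i in range(0, rows, factor):
        ch_row, vfc_row = [], []
        for j in range(0, cols, factor):
            # All UAV pixels falling inside one coarse (satellite) pixel
            block = [chm[r][c]
                     for r in range(i, i + factor)
                     for c in range(j, j + factor)]
            canopy = [h for h in block if h > height_threshold]
            ch_row.append(sum(canopy) / len(canopy) if canopy else 0.0)
            vfc_row.append(len(canopy) / len(block))
        ch_grid.append(ch_row)
        vfc_grid.append(vfc_row)
    return ch_grid, vfc_grid


if __name__ == "__main__":
    # 2 x 4 toy CHM aggregated with 2 x 2 blocks -> 1 x 2 coarse grid
    toy = [[0.0, 1.5, 0.0, 0.0],
           [2.0, 0.0, 0.0, 0.2]]
    print(aggregate_chm(toy, factor=2))  # ([[1.75, 0.0]], [[0.5, 0.0]])
```

Averaging height over canopy pixels only keeps inter-row soil from diluting CH, while the soil fraction is still captured separately by VFC.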

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhou, X.; Yang, L.; Wang, W.; Chen, B. UAV Data as an Alternative to Field Sampling to Monitor Vineyards Using Machine Learning Based on UAV/Sentinel-2 Data Fusion. Remote Sens. 2021, 13, 457. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13030457


Chicago/Turabian Style: Zhou, Xixuan, Liao Yang, Weisheng Wang, and Baili Chen. 2021. "UAV Data as an Alternative to Field Sampling to Monitor Vineyards Using Machine Learning Based on UAV/Sentinel-2 Data Fusion" Remote Sensing 13, no. 3: 457. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13030457