Best Accuracy Land Use/Land Cover (LULC) Classification to Derive Crop Types Using Multitemporal, Multisensor, and Multi-Polarization SAR Satellite Images

Abstract

1. Introduction

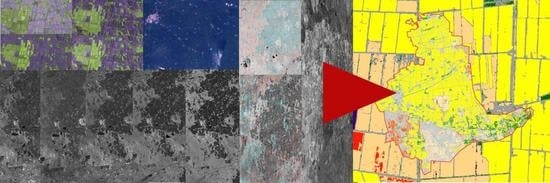

2. Study Area and Data

3. Methods

3.1. Retrieval of Polarimetric Features

3.2. Preprocessing of the Remote Sensing Data

3.3. Supervised LULC Classification Using Remote Sensing Images

3.4. Maximum Likelihood Classification and Optimization

1. Classify and validate each input raster band individually.

2. Select the band whose classification has the highest accuracy as the first member of the final stack.

3. Combine the band(s) in the final stack, one at a time, with each band not yet in the stack. Add to the final stack the band whose combination yields the largest increase in accuracy.

4. Repeat step 3 until the accuracy no longer increases or all bands have been used.
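The selection steps above amount to a greedy forward selection and can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function `greedy_band_selection`, the callable `evaluate` (standing in for "classify the stacked bands and validate the result"), and the toy scoring function with band names `b1` to `b4` are all hypothetical.

```python
from typing import Callable, Hashable, List, Sequence, Tuple


def greedy_band_selection(
    bands: Sequence[Hashable],
    evaluate: Callable[[tuple], float],
) -> Tuple[List[Hashable], float]:
    """Grow the final stack one band at a time while accuracy keeps rising.

    `evaluate` is an assumed callback that classifies a stack of bands and
    returns its validation accuracy; any classifier could sit behind it.
    """
    remaining = list(bands)
    stack: List[Hashable] = []
    best_accuracy = float("-inf")

    while remaining:
        # Score every candidate stack formed by adding one unused band.
        # In the first pass the stack is empty, so each band is scored alone.
        scored = [(evaluate(tuple(stack + [band])), band) for band in remaining]
        accuracy, band = max(scored, key=lambda item: item[0])
        # Stop as soon as no candidate improves on the current stack.
        if accuracy <= best_accuracy:
            break
        stack.append(band)
        remaining.remove(band)
        best_accuracy = accuracy

    return stack, best_accuracy


def toy_accuracy(stack: tuple) -> float:
    """Hypothetical stand-in for classification plus validation (percent)."""
    gains = {"b1": 60, "b2": 15, "b3": 5, "b4": 0}
    return sum(gains[band] for band in stack)


selected, accuracy = greedy_band_selection(["b4", "b3", "b2", "b1"], toy_accuracy)
print(selected, accuracy)
```

Because each round re-evaluates every unused band, the number of classifications grows roughly quadratically with the number of bands, which is worth keeping in mind for large multitemporal stacks.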

3.5. Random Forest Classification

4. Results

5. Discussion

6. Conclusions

Supplementary Files

Supplementary File 1

Acknowledgments

Author Contributions

Conflicts of Interest

| Land Use/Land Cover | Number of Polygons | Extent (km²) | Area Used for Classification (%) | Area Used for Validation (%) |
|---|---|---|---|---|
| Coniferous Forest | 3 | 0.065 | 27 | 73 |
| Deciduous Forest | 2 | 0.120 | 43 | 57 |
| Maize | 2 | 0.169 | 76 | 24 |
| Pumpkin | 1 | 0.173 | 50 | 50 |
| Rice | 6 | 1.576 | 26 | 74 |
| Soya | 8 | 1.858 | 57 | 43 |
| Urban | 3 | 0.958 | 30 | 70 |
| Concrete | 1 | 0.002 | 100 | -* |
| Water | 1 | 0.004 | 52 | 48 |

| No. | Date | Sensor | Mode | Ground Res. Az × Rg (m) | Polarisation | Pass | Extent (km) | Rice Growth Stage |
|---|---|---|---|---|---|---|---|---|
| 1 | 5 July 2009 | TerraSAR-X | Spotlight HS | 1.76 × 1.43 | HH, VV | Asc. | 7 × 11 | Stem elong. |
| 2 | 16 July 2009 | TerraSAR-X | Spotlight HS | 1.76 × 1.43 | HH, VV | Asc. | 7 × 11 | Booting |
| 3 | 27 July 2009 | TerraSAR-X | Spotlight HS | 1.76 × 1.43 | HH, VV | Asc. | 7 × 11 | Heading |
| 4 | 7 August 2009 | TerraSAR-X | Spotlight HS | 1.76 × 1.43 | HH, VV | Asc. | 7 × 11 | Flowering |
| 5 | 26 June 2009 | TerraSAR-X | Stripmap | 1.89 × 1.57 | VV | Desc. | 30 × 50 | Tillering |
| 6 | 7 July 2009 | TerraSAR-X | Stripmap | 1.89 × 1.57 | VV | Desc. | 30 × 50 | Stem elong. |
| 7 | 18 July 2009 | TerraSAR-X | Stripmap | 1.89 × 1.57 | VV | Desc. | 30 × 50 | Booting |
| 8 | 29 July 2009 | TerraSAR-X | Stripmap | 1.89 × 1.57 | VV | Desc. | 30 × 50 | Heading |
| 9 | 9 August 2009 | TerraSAR-X | Stripmap | 1.89 × 1.57 | VV | Desc. | 30 × 50 | Flowering |
| 10 | 25 June 2009 | Radarsat-2 | Fine | 4.8 × 8.93 | HH, HV | Asc. | 54 × 53 | Tillering |
| 11 | 29 July 2009 | Radarsat-2 | Fine | 4.8 × 6.96 | HH, HV | Desc. | 54 × 53 | Heading |
| 12 | 26 June 2009 | Envisat | ASAR APS | 3.88 × 11.85 | VV, VH | Asc. | 60 × 107 | Tillering |
| 13 | 9 August 2009 | FORMOSAT-2 | multispectral | 8 | (4 Bands) | - | 28 × 34 | Flowering |

| Feature | Times Chosen for the Final Stack |
|---|---|
| Alpha angle | 56 |
| Degree of Polarisation | 45 |
| Entropy | 34 |
| C12r | 34 |
| C11 | 24 |
| C12i | 20 |
| C22 | 17 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Hütt, C.; Koppe, W.; Miao, Y.; Bareth, G. Best Accuracy Land Use/Land Cover (LULC) Classification to Derive Crop Types Using Multitemporal, Multisensor, and Multi-Polarization SAR Satellite Images. Remote Sens. 2016, 8, 684. https://0-doi-org.brum.beds.ac.uk/10.3390/rs8080684
