Research on Vehicle Trajectory Prediction and Warning Based on Mixed Neural Networks

Abstract

Featured Application

1. Introduction

2. Related Works

2.1. Object Detection of a Car

2.2. Long Short-Term Memory

3. Methods and Design

3.1. Object Detection of the Car

3.1.1. Training Data Set

3.1.2. Car Object Detection Model Structure

3.1.3. Training of Car Object Detection Model

3.2. Lane Line Detection

3.3. Trajectory Prediction of a Car

3.3.1. Data Set

3.3.2. The Structure of the Car Trajectory Prediction Model

3.3.3. The Training of the Car Trajectory Prediction Model

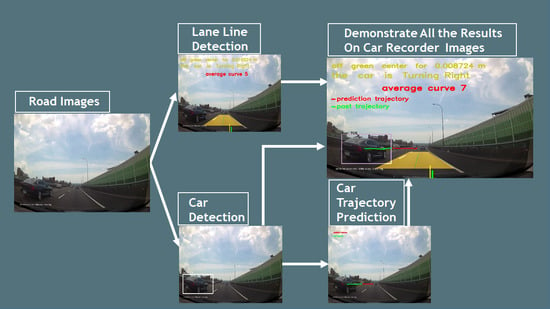

3.4. Combination

4. Results and Discussion

Research Prospect

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. The Parameters of the Car Trajectory Prediction Model


| Layers | Details | Output Size |
|---|---|---|
| 1 | Conv2d(filters = 16, kernel_size = 3, stride = 1, Batch_normalize = 1, Activation_function = Leaky Relu) | 16 x 416 x 416 |
| 2 | Maxpooling2d(pool_size = 2, stride = 2) | 16 x 208 x 208 |
| 3 | Conv2d(filters = 32, kernel_size = 3, stride = 1, Batch_normalize = 1, Activation_function = Leaky Relu) | 32 x 208 x 208 |
| 4 | Maxpooling2d(pool_size = 2, stride = 2) | 32 x 104 x 104 |
| 5 | Conv2d(filters = 64, kernel_size = 3, stride = 1, Batch_normalize = 1, Activation_function = Leaky Relu) | 64 x 104 x 104 |
| 6 | Maxpooling2d(pool_size = 2, stride = 2) | 64 x 52 x 52 |
| 7 | Conv2d(filters = 128, kernel_size = 3, stride = 1, Batch_normalize = 1, Activation_function = Leaky Relu) | 128 x 52 x 52 |
| 8 | Maxpooling2d(pool_size = 2, stride = 2) | 128 x 26 x 26 |
| 9 | Conv2d(filters = 256, kernel_size = 3, stride = 1, Batch_normalize = 1, Activation_function = Leaky Relu) | 256 x 26 x 26 |
| 10 | Maxpooling2d(pool_size = 2, stride = 2) | 256 x 13 x 13 |
| 11 | Conv2d(filters = 512, kernel_size = 3, stride = 1, Batch_normalize = 1, Activation_function = Leaky Relu) | 512 x 13 x 13 |
| 12 | Maxpooling2d(pool_size = 2, stride = 1) | 512 x 13 x 13 |
| 13 | Conv2d(filters = 1024, kernel_size = 3, stride = 1, Batch_normalize = 1, Activation_function = Leaky Relu) | 1024 x 13 x 13 |
| 14 | Conv2d(filters = 1024, kernel_size = 3, stride = 1, Batch_normalize = 1, Activation_function = Leaky Relu) | 1024 x 13 x 13 |
| 15 | Conv2d(filters = 30, kernel_size = 1, stride = 1, Activation_function = Leaky Relu) | 30 x 13 x 13 |
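
The output sizes in the layer table can be checked with a few lines of arithmetic. The sketch below is not the authors' code: it transcribes the layer list from the table and assumes "same" padding for both convolution and pooling, so that only the stride changes the spatial size. The helper `detection_filters` is a hypothetical name; it applies the usual YOLOv2 rule for the last convolution, and the values of 5 anchor boxes and 1 class are assumptions chosen because they reproduce the 30 filters of layer 15.

```python
# (kind, filters, kernel_size, stride), transcribed from the layer table.
LAYERS = [
    ("conv", 16, 3, 1), ("pool", None, 2, 2),
    ("conv", 32, 3, 1), ("pool", None, 2, 2),
    ("conv", 64, 3, 1), ("pool", None, 2, 2),
    ("conv", 128, 3, 1), ("pool", None, 2, 2),
    ("conv", 256, 3, 1), ("pool", None, 2, 2),
    ("conv", 512, 3, 1), ("pool", None, 2, 1),
    ("conv", 1024, 3, 1),
    ("conv", 1024, 3, 1),
    ("conv", 30, 1, 1),
]


def output_shapes(layers, channels=3, size=416):
    """Return (channels, height, width) after each layer.

    Assumption: 'same' padding everywhere, so the spatial size is
    ceil(size / stride) regardless of the kernel or pool size.
    """
    shapes = []
    for kind, filters, _kernel, stride in layers:
        if kind == "conv":
            channels = filters          # a convolution sets the channel count
        size = -(-size // stride)       # ceiling division
        shapes.append((channels, size, size))
    return shapes


def detection_filters(num_anchors, num_classes):
    """YOLOv2 rule: each anchor predicts 4 box values, 1 objectness, and class scores."""
    return num_anchors * (num_classes + 5)


shapes = output_shapes(LAYERS)
for index, shape in enumerate(shapes, start=1):
    print(index, " x ".join(str(v) for v in shape))
```

Under these assumptions every computed shape agrees with the "Output Size" column, including layer 12, where a stride of 1 leaves the 13 x 13 grid unchanged.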

| Parameters | Values |
|---|---|
| Number of epochs | 10 |
| Batch size | 16 |
| Learning rate | 0.001 |
| Size of input image | 416 |
| Input channels | 3 |

| Parameters | Values |
|---|---|
| Number of epochs | 10 |
| Batch size | 16 |
| Learning rate | 0.00001 |
| Size of input image | 416 |
| Input channels | 3 |

| Parameters | Values |
|---|---|
| Number of epochs | 25 |
| Batch size | 8 |
| Learning rate | 0.00001 |
| Size of input image | 416 |
| Input channels | 3 |

| Row | X | Y |
|---|---|---|
| 2 | 4.999238 | 0.174524 |
| 3 | 5.04951 | 0.178052 |
| 4 | 5.099782 | 0.181615 |
| 5 | 5.150053 | 0.185213 |
| 6 | 5.200325 | 0.188846 |
| 7 | 5.250597 | 0.192515 |
| 8 | 5.300869 | 0.196219 |
| 9 | 5.35114 | 0.199959 |
| 10 | 5.401412 | 0.203733 |
| 11 | 5.451684 | 0.207543 |
| 12 | 5.501955 | 0.211389 |
| 13 | 5.552227 | 0.215269 |
| 14 | 5.602499 | 0.219185 |
| 15 | 5.65277 | 0.223136 |
| … | … | … |
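
A coordinate list like the one above has to be cut into fixed-length samples before a recurrent model can use it. The following is a minimal sketch of one plausible way to do that, not the authors' preprocessing. It assumes a window of 35 points, matching the (35, 2) input shape and the 35-value output listed for the trajectory model in this paper, and it assumes the target is one coordinate of the 35 points that follow the window. The function name `make_windows` and the synthetic straight-line track are purely illustrative.

```python
def make_windows(track, window=35, target_index=0):
    """Split a track of (x, y) points into (input, target) samples.

    input  : `window` consecutive (x, y) points
    target : coordinate `target_index` (0 = X, 1 = Y) of the next `window` points
    Both the window length and the choice of target are assumptions.
    """
    samples = []
    last_start = len(track) - 2 * window
    for start in range(last_start + 1):
        past = track[start:start + window]
        future = track[start + window:start + 2 * window]
        samples.append((past, [point[target_index] for point in future]))
    return samples


# Synthetic track for illustration only; these are not values from the data set.
track = [(0.05 * i, 0.01 * i) for i in range(80)]

x_samples = make_windows(track, target_index=0)  # samples for an X-coordinate model
y_samples = make_windows(track, target_index=1)  # samples for a Y-coordinate model
print(len(x_samples), len(x_samples[0][0]), len(x_samples[0][1]))
```

Building separate sample sets for X and Y mirrors the two models ("Predict X coordinates" and "Predict Y coordinates") reported in the results table.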

| Layers | Details | Output Size |
|---|---|---|
| 1 | LSTM(num_units = 256, input_shape = (35,2), return_sequences = False) | (None, 256) |
| 2 | Dense(num_units = 256) | (None, 256) |
| 3 | Activation(‘linear’) | (None, 256) |
| 4 | Dense(num_units = 128) | (None, 128) |
| 5 | Activation(‘linear’) | (None, 128) |
| 6 | Dense(num_units = 35) | (None, 35) |

| Parameters | Values |
|---|---|
| Number of epochs | 100 |
| Batch size | 5 |
| Learning rate | 0.001 |
| Input shape | (35,2) |
| Loss function | MSE |
| Optimizer | Adam |
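
The size of the trajectory model follows directly from its layer list (an LSTM with 256 units on a (35, 2) input, then Dense layers of 256, 128, and 35 units). The sketch below derives the trainable parameter counts by hand. It assumes the standard LSTM formulation with four gates and one bias vector per gate, and fully connected layers with a bias. The figures are computed here for illustration and are not quoted from Appendix A; the function names are hypothetical.

```python
def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    """One weight per input-output pair plus one bias per output unit."""
    return input_dim * units + units


def count_parameters():
    """Parameter counts for the listed layers; Activation layers add none."""
    counts = {
        "lstm_256": lstm_params(2, 256),       # 2 features per time step (x, y)
        "dense_256": dense_params(256, 256),
        "dense_128": dense_params(256, 128),
        "dense_35": dense_params(128, 35),
    }
    counts["total"] = sum(counts.values())
    return counts


counts = count_parameters()
for name, value in counts.items():
    print(f"{name:>10}: {value}")
```

Note that the sequence length of 35 does not enter the count, because the LSTM weights are shared across time steps; only the feature dimension (2) and the number of units matter.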

| Training Step | Training Time | Loss (Max) | Loss (Min) | Average Loss (Max) | Average Loss (Min) |
|---|---|---|---|---|---|
| 1 | 5 min 58 s | 106.00877 | 0.96654 | 106.00877 | 3.2454 |
| 2 | 5 min 57 s | 1.05844 | 0.69618 | 0.98139 | 0.83406 |
| 3 | 15 min 10 s | 1.06956 | 0.22245 | 0.80912 | 0.39897 |

| Test Image Number | Total Processing Time (s) | Accuracy |
|---|---|---|
| 1–10 | 0.3637 | 0.75 |
| 11–40 | 0.9518 | 0.86 |
| 50 | 0.132 | 0.71 |
| 65 | 0.159 | 0.89 |

| Time in Video (s) | FPS (Max) | FPS (Min) | Accuracy (Max) | Accuracy (Min) |
|---|---|---|---|---|
| 1–5 s | 10.995 | 10.868 | 0.88 | 0.72 |
| 5–10 s | 10.202 | 9.461 | 0.91 | 0.76 |

| Model | Training Time (s) | Accuracy (Max) | Accuracy (Min) | Loss (Max) | Loss (Min) | Evaluation Time (ms) | Evaluation Score (Acc) | Evaluation Score (Loss) |
|---|---|---|---|---|---|---|---|---|
| Predict X coordinates | 6.829 | 0.33396 | 0.00104 | 0.07694 | 0.00017 | 12 | 0.22222 | 0.000248 |
| Predict Y coordinates | 6.75986 | 0.33357 | 0.00054 | 0.02107 | 0.00098 | 12 | 0.11111 | 0.000932 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shen, C.-H.; Hsu, T.-J. Research on Vehicle Trajectory Prediction and Warning Based on Mixed Neural Networks. Appl. Sci. 2021, 11, 7. https://0-doi-org.brum.beds.ac.uk/10.3390/app11010007
