An Image Segmentation Method Using an Active Contour Model Based on Improved SPF and LIF

Abstract

1. Introduction

2. Related Work

2.1. The GAC Model

2.2. The C–V Model

2.3. The SBGFRLS Model

2.4. The LIF Model

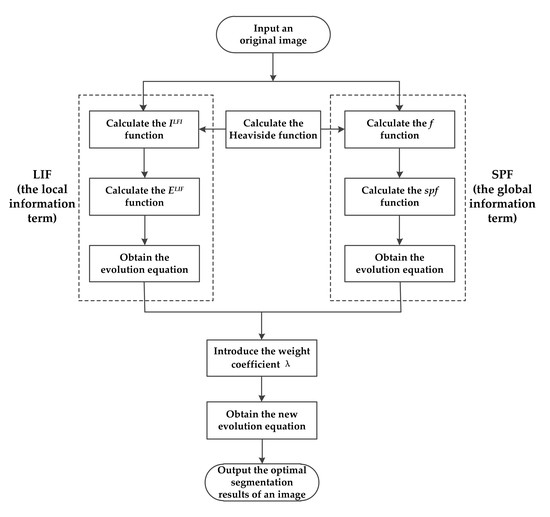

3. Proposed Method

3.1. Improved SPF Function

3.2. Active Contour Model Based on Improved SPF and LIF

3.3. Algorithm Steps

| Algorithm 1. SPFLIF-IS |
|---|
| Input: An original image |
| Output: The result of image segmentation |

4. Experimental Results

4.1. Experiment Preparation

4.2. Segmentation Results of Images with Intensity Inhomogeneity

4.3. Segmentation Results of Multiobjective Images

4.4. Segmentation Results of Noisy Images

4.5. Segmentation Results of Texture Images

4.6. Segmentation Results of Real Images

4.7. Comparative Evaluation Results

4.8. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Mabood, L.; Ali, H.; Badshah, N.; Chen, K.; Khan, G.A. Active contours textural and inhomogeneous object extraction. Pattern Recognit. 2016, 55, 87–99.

- Zhao, L.K.; Zheng, S.Y.; Wei, H.T.; Gui, L. Adaptive active contour model driven by global and local intensity fitting energy for image segmentation. Opt. Int. J. Light Electron Opt. 2017, 140, 908–920.

- Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active contour models. Int. J. Comput. Vis. 1988, 1, 321–331.

- Min, H.; Jia, W.; Wang, X.F.; Zhao, Y.; Hu, R.X.; Luo, Y.T.; Xue, F.; Lu, J.T. An intensity-texture model based level set method for image segmentation. Pattern Recognit. 2015, 48, 1547–1562.

- Wang, X.F.; Huang, D.S.; Xu, H. An efficient local Chan-Vese model for image segmentation. Pattern Recognit. 2010, 43, 603–618.

- Gao, S.; Bui, T.D. Image segmentation and selective smoothing by using Mumford-Shah model. IEEE Trans. Image Process. 2005, 14, 1537–1549.

- Hao, R.; Qiang, Y.; Yan, X.F. Juxta-Vascular pulmonary nodule segmentation in PET-CT imaging based on an LBF active contour model with information entropy and joint vector. Comput. Math. Methods Med. 2018, 2018, 2183847.

- Xie, X.H.; Mirmehdi, M. MAC: Magnetostatic active contour model. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 632–646.

- Ko, M.; Kim, S.; Kim, M.; Kim, K. A novel approach for outdoor fall detection using multidimensional features from a single camera. Appl. Sci. 2018, 8, 984.

- Jing, Y.; An, J.B.; Liu, Z.X. A novel edge detection algorithm based on global minimization active contour model for oil slick infrared aerial image. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2005–2013.

- Chan, T.F.; Vese, L.A. Active contours without edges. IEEE Trans. Image Process. 2001, 10, 266–277.

- Li, C.M.; Kao, C.Y.; Gore, J.C.; Ding, Z.H. Minimization of region-scalable fitting energy for image segmentation. IEEE Trans. Image Process. 2008, 17, 1940–1949.

- Han, B.; Wu, Y.Q. A novel active contour model based on modified symmetric cross entropy for remote sensing river image segmentation. Pattern Recognit. 2017, 67, 396–409.

- Li, C.M.; Huang, R.; Ding, Z.H.; Gatenby, J.C.; Metaxas, D.N.; Gore, J.C. A level set method for image segmentation in the presence of intensity inhomogeneities with application to MRI. IEEE Trans. Image Process. 2011, 20, 2007–2016.

- Caselles, V.; Kimmel, R.; Sapiro, G. Geodesic active contours. Int. J. Comput. Vis. 1997, 22, 61–79.

- Song, Y.; Wu, Y.Q.; Dai, Y.M. A new active contour remote sensing river image segmentation algorithm inspired from the cross entropy. Digit. Signal Process. 2016, 48, 322–332.

- Cao, G.; Mao, Z.H.; Yang, X.; Xia, D.S. Optical aerial image partitioning using level sets based on modified Chan-Vese model. Pattern Recognit. Lett. 2008, 29, 457–464.

- Li, X.M.; Jiang, D.S.; Shi, Y.H.; Li, W.S. Segmentation of MR image using local and global region based geodesic model. Biomed. Eng. Online 2015, 14, 8.

- Liu, S.G.; Peng, Y.L. A local region-based Chan–Vese model for image segmentation. Pattern Recognit. 2012, 45, 2769–2779.

- Wang, L.; He, L.; Mishra, A.; Li, C.M. Active contours driven by local Gaussian distribution fitting energy. Signal Process. 2009, 89, 2435–2447.

- Zhang, L.; Peng, X.G.; Li, G.; Li, H.F. A novel active contour model for image segmentation using local and global region-based information. Mach. Vis. Appl. 2017, 28, 75–89.

- Soomro, S.; Akram, F.; Munir, A.; Lee, C.H.; Choi, K.N. Segmentation of left and right ventricles in cardiac MRI using active contours. Comput. Math. Methods Med. 2017, 2017, 1455006.

- Zhang, K.H.; Zhang, L.; Song, H.H.; Zhou, W.G. Active contours with selective local or global segmentation: A new formulation and level set method. Image Vis. Comput. 2010, 28, 668–676.

- Li, C.M.; Kao, C.Y.; Gore, J.C.; Ding, Z.H. Implicit active contours driven by local binary fitting energy. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.

- Yuan, J.J.; Wang, J.J. Active contours driven by local intensity and local gradient fitting energies. Int. J. Pattern Recognit. Artif. Intell. 2014, 28, 1455006.

- Tu, S.; Su, Y. Fast and accurate target detection based on multiscale saliency and active contour model for high-resolution SAR images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5729–5744.

- Wang, L.; Li, C.M.; Sun, Q.S.; Xia, D.S.; Kao, C.Y. Active contours driven by local and global intensity fitting energy with application to brain MR image segmentation. Comput. Med. Imaging Graph. 2009, 33, 520–531.

- Zhang, K.H.; Zhang, L.; Lam, K.M.; Zhang, D. A level set approach to image segmentation with intensity inhomogeneity. IEEE Trans. Cybern. 2016, 46, 546–557.

- Jiang, X.L.; Li, B.L.; Wang, Q.; Chen, P. A novel active contour model driven by local and global intensity fitting energies. Opt. Int. J. Light Electron Opt. 2014, 125, 6445–6449.

- Zhao, Y.Q.; Wang, X.F.; Shih, F.Y.; Yu, G. A level-set method based on global and local regions for image segmentation. Int. J. Pattern Recognit. Artif. Intell. 2012, 26, 1255004.

- Zhang, K.H.; Song, H.H.; Zhang, L. Active contours driven by local image fitting energy. Pattern Recognit. 2010, 43, 1199–1206.

- Akram, F.; Garcia, M.A.; Puig, D. Active contours driven by local and global fitted image models for image segmentation robust to intensity inhomogeneity. PLoS ONE 2017, 12, e0174813.

- Zhu, H.Q.; Xie, Q.Y. A multiphase level set formulation for image segmentation using a MRF-based nonsymmetric Student’s-t mixture model. Signal Image Video Process. 2018, 18, 1577–1585.

- Wang, X.F.; Min, H.; Zou, L.; Zhang, Y.G. A novel level set method for image segmentation by incorporating local statistical analysis and global similarity measurement. Pattern Recognit. 2015, 48, 189–204.

- Cao, J.F.; Wu, X.J. A novel level set method for image segmentation by combining local and global information. J. Mod. Opt. 2017, 64, 2399–2412.

- Tian, Y.; Duan, F.Q.; Zhou, M.Q.; Wu, Z.K. Active contour model combining region and edge information. Mach. Vis. Appl. 2013, 24, 47–61.

- Lok, K.H.; Shi, L.; Zhu, X.L.; Wang, D.F. Fast and robust brain tumor segmentation using level set method with multiple image information. J. X-ray Sci. Technol. 2017, 25, 301–312.

- Sun, Z.; Qi, M.; Lian, J.; Jia, W.K.; Zou, W.; He, Y.L.; Liu, H.; Zheng, Y.J. Image segmentation by searching for image feature density peaks. Appl. Sci. 2018, 8, 969.

- Xu, H.Y.; Jiang, G.Y.; Yu, M.; Luo, T. A global and local active contour model based on dual algorithm for image segmentation. Comput. Math. Appl. 2017, 74, 1471–1488.

- Abdelsamea, M.M. A semi-automated system based on level sets and invariant spatial interrelation shape features for Caenorhabditis elegans phenotypes. J. Vis. Commun. Image Represent. 2016, 41, 314–323.

- Ji, Z.X.; Xia, Y.; Sun, Q.S.; Gao, G.; Chen, Q. Active contours driven by local likelihood image fitting energy for image segmentation. Inf. Sci. 2015, 301, 285–304.

- Xu, C.Y.; Yezzi, A.; Prince, J.L. On the relationship between parametric and geometric active contours. In Proceedings of the IEEE Conference Record of the 34th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 29 October–1 November 2000; pp. 483–489.

- Abdelsamea, M.M.; Tsaftaris, S.A. Active contour model driven by globally signed region pressure force. In Proceedings of the IEEE 18th International Conference on Digital Signal Processing, Santorini, Greece, 1–3 July 2013; pp. 1–6.

- Li, D.Y.; Li, W.F.; Liao, Q.M. Active contours driven by local and global probability distributions. J. Vis. Commun. Image Represent. 2013, 24, 522–533.

- Hanbay, K.; Talu, M.F. A novel active contour model for medical images via the Hessian matrix and eigenvalues. Comput. Math. Appl. 2018, 75, 3081–3104.

- Vese, L.A.; Chan, T.F. A multiphase level set framework for image segmentation using the Mumford and Shah model. Int. J. Comput. Vis. 2002, 50, 271–293.

- Lei Zhang’s Homepage. Available online: http://www4.comp.polyu.edu.hk/~cslzhang/ (accessed on 10 December 2018).

- Chunming Li’s Homepage. Available online: http://www.engr.uconn.edu/~cmli/ (accessed on 10 December 2018).

- Li, M.; He, C.J.; Zhan, Y. Adaptive regularized level set method for weak boundary object segmentation. Math. Probl. Eng. 2012, 2012, 369472.

- The Berkeley Segmentation Dataset and Benchmark. Available online: https://www2.eecs.berkeley.edu/Research/Projects/CS/vision/bsds/ (accessed on 10 December 2018).

- Jiang, X.L.; Wang, Q.; He, B.; Chen, S.J.; Li, B.L. Robust level set image segmentation algorithm using local correntropy-based fuzzy c-means clustering with spatial constraints. Neurocomputing 2016, 27, 22–35.

- Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

- Jaccard, P. The distribution of the flora in the alpine zone. New Phytol. 1912, 11, 37–50.

- Wang, L.; Chang, Y.; Wang, H.; Wang, Z.Z.; Pu, J.T.; Yang, X.D. An active contour model based on local fitted images for image segmentation. Inf. Sci. 2017, 418, 61–73.

- Arbelaez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 898–916.

- Zhao, W.; Fu, Y.; Wei, X.; Wang, H. An improved image semantic segmentation method based on superpixels and conditional random fields. Appl. Sci. 2018, 8, 837.

- Zhou, S.P.; Wang, J.J.; Zhang, M.M.; Cai, Q.; Gong, Y.H. Correntropy-based level set method for medical image segmentation and bias correction. Neurocomputing 2017, 234, 216–229.

- Zhang, Y.C. Research of level set image segmentation based on Rough Set theory and the extended watershed transformation. Ph.D. Thesis, Dalian University of Technology, Dalian, China, 2018; pp. 4–6.

- Sun, L.; Meng, X.C.; Xu, J.C.; Zhang, S.G. An image segmentation method based on improved regularized level set model. Appl. Sci. 2018, 8, 2393.

| Methods | C–V | LBF | SBGFRLS | LIF | LSACM | SPFLIF-IS |
|---|---|---|---|---|---|---|
| Segmentation performance | F1 | T | F1 | F2 | F1 | T |
| | T | T | F1 | T | F1 | T |
| | T | T | T | F1 | T | T |
| | T | T | T | F1 | F1 | T |

| Methods | C–V | LBF | SBGFRLS | LIF | LSACM | SPFLIF-IS |
|---|---|---|---|---|---|---|
| Segmentation performance | F2 | T | F3 | F2 | F1 | F2 |
| | T | F2 | F3 | F1 | F1 | T |
| | F1 | F1 | F3 | F1 | T | T |
| | F1 | F1 | F1 | F1 | F1 | T |

| Methods | C–V | LBF | SBGFRLS | LIF | LSACM | SPFLIF-IS |
|---|---|---|---|---|---|---|
| Segmentation performance | T | F1 | T | T | F1 | T |
| | F2 | F1 | F2 | F1 | F1 | T |
| | F1 | F1 | F1 | F1 | F1 | T |
| | F1 | F1 | F1 | F1 | F1 | F2 |
| | F1 | F1 | F1 | F1 | F1 | F2 |

| Methods | C–V | LBF | SBGFRLS | LIF | LSACM | SPFLIF-IS |
|---|---|---|---|---|---|---|
| Segmentation performance | T | F1 | F3 | F1 | F1 | T |
| | F3 | F3 | F3 | F1 | F1 | T |

| Methods | C–V | LBF | SBGFRLS | LIF | LSACM | SPFLIF-IS |
|---|---|---|---|---|---|---|
| Segmentation performance | T | F1 | F1 | F3 | F1 | T |
| | F2 | F1 | F2 | F1 | F1 | T |
| | T | F1 | F2 | F1 | F1 | T |
| | F1 | F1 | F2 | F1 | F1 | T |

| Image ID | C–V | LBF | LIF | SPFLIF-IS |
|---|---|---|---|---|
| 3063 | 0.9779 | 0.9576 | 0.8962 | 0.9783 |
| 8068 | 0.9780 | 0.9555 | 0.8673 | 0.9827 |
| 14092 | 0.9235 | 0.8710 | 0.8058 | 0.9257 |
| 29030 | 0.9525 | 0.9432 | 0.8106 | 0.9743 |
| 41004 | 0.9763 | 0.9565 | 0.8769 | 0.9791 |
| 41006 | 0.9625 | 0.9305 | 0.8358 | 0.9643 |
| 46076 | 0.9763 | 0.9512 | 0.8392 | 0.9783 |
| 48017 | 0.9526 | 0.9066 | 0.8550 | 0.9562 |
| 49024 | 0.9566 | 0.9627 | 0.8531 | 0.9792 |
| 51084 | 0.9253 | 0.9401 | 0.8464 | 0.9607 |
| 62096 | 0.9641 | 0.9387 | 0.8617 | 0.9734 |
| 101084 | 0.8942 | 0.8441 | 0.8103 | 0.9780 |
| 124084 | 0.9578 | 0.9378 | 0.8865 | 0.9616 |
| 143090 | 0.9575 | 0.9517 | 0.8633 | 0.9692 |
| 147091 | 0.9693 | 0.9387 | 0.8254 | 0.9717 |
| 207056 | 0.9677 | 0.9305 | 0.8183 | 0.9826 |
| 296059 | 0.9470 | 0.9276 | 0.8283 | 0.9742 |
| 299091 | 0.9708 | 0.9595 | 0.8448 | 0.9759 |
| 317080 | 0.9591 | 0.9288 | 0.8665 | 0.9634 |
| 388006 | 0.9676 | 0.9452 | 0.8738 | 0.9701 |
| Mean | 0.9568 | 0.9339 | 0.8483 | 0.9699 |

| Image ID | C–V DICE | C–V JSI | LBF DICE | LBF JSI | SBGFRLS DICE | SBGFRLS JSI | LIF DICE | LIF JSI | LSACM DICE | LSACM JSI | SPFLIF-IS DICE | SPFLIF-IS JSI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3063 | 0.9779 | 0.9568 | 0.9576 | 0.9186 | 0.9728 | 0.9470 | 0.8962 | 0.8119 | 0.9774 | 0.9557 | 0.9783 | 0.9575 |
| 8068 | 0.9780 | 0.9570 | 0.9555 | 0.9149 | 0.9785 | 0.9579 | 0.8673 | 0.7657 | 0.9710 | 0.9436 | 0.9827 | 0.9660 |
| 29030 | 0.9525 | 0.9093 | 0.9432 | 0.8925 | 0.9709 | 0.9435 | 0.8106 | 0.6815 | 0.9626 | 0.9280 | 0.9743 | 0.9500 |
| 41004 | 0.9763 | 0.9537 | 0.9565 | 0.9166 | 0.9775 | 0.9560 | 0.8769 | 0.7808 | 0.9741 | 0.9494 | 0.9791 | 0.9590 |
| 46076 | 0.9763 | 0.9537 | 0.9512 | 0.9070 | 0.9753 | 0.9518 | 0.8392 | 0.7230 | 0.9692 | 0.9402 | 0.9783 | 0.9575 |
| 207056 | 0.9677 | 0.9375 | 0.9305 | 0.8700 | 0.9774 | 0.9558 | 0.8183 | 0.6924 | 0.9785 | 0.9579 | 0.9826 | 0.9659 |
| 296059 | 0.9470 | 0.8994 | 0.9276 | 0.8650 | 0.9684 | 0.9387 | 0.8283 | 0.7069 | 0.9741 | 0.9495 | 0.9742 | 0.9498 |
| 299091 | 0.9708 | 0.9433 | 0.9595 | 0.9221 | 0.9750 | 0.9512 | 0.8448 | 0.7313 | 0.9694 | 0.9406 | 0.9759 | 0.9530 |
| Mean | 0.9683 | 0.9388 | 0.9477 | 0.9008 | 0.9745 | 0.9502 | 0.8477 | 0.7367 | 0.9720 | 0.9456 | 0.9782 | 0.9573 |
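The DICE and JSI columns report the Dice similarity coefficient (Dice, 1945) and the Jaccard similarity index (Jaccard, 1912) between a segmentation and its ground truth. The minimal Python sketch below shows the standard definitions, DSC = 2|A ∩ B| / (|A| + |B|) and JSI = |A ∩ B| / |A ∪ B|, for two binary masks. It assumes the masks are flattened into equally sized sequences of 0/1 values. The function names are illustrative and are not taken from the authors' code. Treating two empty masks as a perfect match is also an assumed convention. For a single mask pair, the two measures are linked by JSI = DSC / (2 − DSC). Spot checks show that the per-image pairs in the table agree with this identity up to rounding in the last digit. For example, 0.9779 / (2 − 0.9779) ≈ 0.9568 for C–V on image 3063.

```python
from typing import Sequence


def _check_same_size(seg: Sequence[int], gt: Sequence[int]) -> None:
    # Both masks must cover the same pixels, in the same flattened order.
    if len(seg) != len(gt):
        raise ValueError("segmentation and ground-truth masks must have the same size")


def dice_coefficient(seg: Sequence[int], gt: Sequence[int]) -> float:
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) of two flat binary masks."""
    _check_same_size(seg, gt)
    intersection = sum(1 for a, b in zip(seg, gt) if a and b)
    total = sum(1 for a in seg if a) + sum(1 for b in gt if b)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def jaccard_index(seg: Sequence[int], gt: Sequence[int]) -> float:
    """Jaccard similarity index |A ∩ B| / |A ∪ B| of two flat binary masks."""
    _check_same_size(seg, gt)
    intersection = sum(1 for a, b in zip(seg, gt) if a and b)
    union = sum(1 for a, b in zip(seg, gt) if a or b)
    # Same assumed convention for two empty masks.
    return 1.0 if union == 0 else intersection / union


def jaccard_from_dice(dice: float) -> float:
    """Convert a per-image Dice value to the matching Jaccard value: J = D / (2 - D)."""
    if not 0.0 <= dice <= 1.0:
        raise ValueError("Dice coefficient must lie in [0, 1]")
    return dice / (2.0 - dice)


if __name__ == "__main__":
    # Toy 2x3 masks flattened row by row (hypothetical data, not from the paper).
    seg = [1, 1, 1,
           0, 0, 0]
    gt = [0, 1, 1,
          1, 0, 0]
    print(round(dice_coefficient(seg, gt), 4))  # 0.6667
    print(round(jaccard_index(seg, gt), 4))     # 0.5
    # Spot check against the table: C-V DICE on image 3063.
    print(round(jaccard_from_dice(0.9779), 4))  # 0.9568
```

The identity holds for each image, but it is nonlinear. As a result, the Mean JSI row need not equal the converted Mean DICE. For LIF, the mean DICE of 0.8477 converts to about 0.7357, while the averaged JSI in the table is 0.7367.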

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sun, L.; Meng, X.; Xu, J.; Tian, Y. An Image Segmentation Method Using an Active Contour Model Based on Improved SPF and LIF. Appl. Sci. 2018, 8, 2576. https://doi.org/10.3390/app8122576


Sun, Lin, Xinchao Meng, Jiucheng Xu, and Yun Tian. 2018. "An Image Segmentation Method Using an Active Contour Model Based on Improved SPF and LIF" Applied Sciences 8, no. 12: 2576. https://doi.org/10.3390/app8122576