1. Introduction

Power-to-gas technology using intermittent surplus renewable electricity has gained attention for mitigating CO

2 emissions to the atmosphere [

1,

2]. The intermittency of renewable energy sources is a major hurdle in their seamless integration with existing energy systems [

3]. CO

2 methanation [

4,

5] combining captured CO

2 with H

2, produced via water electrolysis [

6], is an alternative to existing energy systems that could be integrated with renewable electricity sources. CO

2 methanation technologies could considerably reduce carbon emissions by encouraging industrial symbiosis from industries with large CO

2 footprints [

7], such as thermal power plants [

8]. Because CH

4 is easier to store and transport than H

2 [

1], the synergistic integration of renewable electricity with a natural gas grid is expected via CO

2 methanation [

4].

Among the various reactor types, fixed-bed reactors (FBs) are the most commonly used types for CO

2 methanation. As the CO

2 methanation reaction is thermodynamically favored at low temperatures and high pressures [

9], an isothermal fixed-bed reactor (IFB) without a hot spot produces high methane selectivity, exhibits stable operation, and prevents deactivation of catalyst particles through processes such as thermal degradation (i.e., nickel sintering [

2]). However, the IFB usually requires high recycling and dilution ratios, and adiabatic reactors to maintain suitable productivity [

10,

11,

12]. The first commercial CO/CO

2 methanation process developed by Lurgi and Sasol (USA, North Dakota) that produces 1.53 billion Nm

3/year is composed of an IFB and two adiabatic FB reactors with recycling [

10]. In Germany, Linde designed an IFB reactor with an indirect heat exchanger to generate steam from the exothermic heat of reaction [

11].

To develop advanced CO

2 methanation technologies, researchers have explored the use of modeling and simulations in the optimization of reactor designs. In particular, computational fluid dynamics (CFD) involving process modeling has been used to understand the hydro- and thermo-dynamics of a reactor following geometrical and operational modifications [

13]. CFD studies of CO

2 methanation have been reported for a single fixed-bed [

14], multi-stage fixed-beds [

14,

15,

16,

17], fixed-beds with a plate-type heat exchanger [

18,

19], monolith reactors [

20,

21], gas–solid fluidized beds [

1,

5,

22], three-phase slurry bubble columns [

23], and microchannel reactors [

24,

25]. However, there are several limitations of traditional modeling and CFD, such as (1) high computational cost for three-dimensional (3D) multiscale and multiphase CFD [

13,

18], (2) difficulties in the efficient discretization of complex geometries [

13,

21], (3) numerical diffusion and round-off errors stemming from numerical differentiation [

26], and (4) difficulties in the identification of physical model parameters [

1,

17,

19,

27].

Despite advances in first principles and empirical elucidation, artificial neural network (ANN) models in the category of data-driven models, black-box models, or surrogate models (SMs), have become an alternative functional mapping between input and output data because of their prompt predictions, automated knowledge extraction, and high inference accuracy [

28,

29,

30]. The structure of ANNs relies on the availability of experimental data, observation data [

28], or data generated by first-principle models such as CFD [

31,

32]. The automatic differentiation (AD) technique, which calculates both functions and their derivative values implementing the chain rule, is used across the ANN layers to efficiently estimate gradients [

33]. AD has a lower computational cost than symbolic differentiation and a higher accuracy than numerical differentiation [

26].

Recently, ANNs and conservation equations coupled with AD that solve ordinary differential equations (ODEs) and partial differential equations (PDEs) called physics-informed neural networks (PINNs) have been reported [

32,

34,

35]. As PINNs are constrained to respect any symmetries, invariances, or first-principle laws [

34], they present great potential for solving chemical engineering problems, which usually deal with complex geometries and physics phenomena. In contrast to common ANNs, PINNs do not depend on empirical data because the initial and boundary conditions are directly used to adjust the network parameters, such as weights and biases [

34]. In addition, the extrapolation capability of PINNs is enhanced owing to physical constraints [

36]. Nevertheless, there are few applications of PINNs in process modeling and chemical reactor design.

PINNs can be used to solve two types of problems: (1) forward and (2) inverse problems [

34]. In the forward problem, the PINN solves ODEs/PDEs, like other numerical solvers of ODEs/PDEs, revealing its inference capability. In the inverse problem, unknown physical model parameters are identified using both the well-trained forward PINN and external input/output datasets. Previous PINN studies focused on a general solution of ODEs/PDEs [

32,

34,

35] and elementary reaction rates [

29]. Few researchers have addressed PINNs for complex reaction rate models showing high nonlinearity.

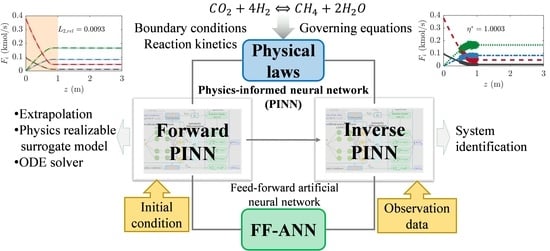

In this study, forward and inverse PINNs coupled with AD were developed for the solution and parameter identification of a highly nonlinear reaction rate model for catalytic CO2 methanation in an IFB reactor. The results obtained from the PINNs were compared with those obtained using a common numerical solver of ODEs (ode15s in MATLAB). The hyper-parameters of the ANN used in the forward PINN, such as (1) the number of hidden layers, (2) number of neurons per hidden layer, (3) activation functions, and (4) number of collocation training points, were systematically determined. In the forward PINN problem, the extrapolation capability was analyzed by narrowing the collocation-training domain and detaching the collocation-training domain from the boundary. In the inverse PINN problem, a reaction effectiveness factor was identified using observation datasets containing 5% and 20% random noises in exact results. The influence of the observation data range on the prediction accuracy of the inverse PINN model was also investigated. This study demonstrates that the forward and inverse PINNs can solve fixed-bed models with highly nonlinear chemical reaction kinetics and identify unknown model parameters.

3. Physics-Informed Neural Networks (PINN) Model

The 1D IFB reactor model coupled with the reaction kinetics in Equations (2)–(7) is typically solved using a stiff ODE solver. In this study, the solution of the 1D IFB reactor model obtained from a stiff ODE solver was compared with that obtained with the PINNs.

The PINN solving the system of ODEs in the IFB reactor model was composed of an FF-ANN, AD, and a governing equation. A strategy for adjusting the hyper-parameters of the FF-ANN was presented in the forward PINN problem. An unknown model parameter (i.e., η) was identified in the inverse PINN problem. The two PINN structures were the same, while the forward PINN exploited training data self-generated for the initial condition and the inverse PINN used observation data from an external source as the training data.

3.1. PINN Structure in the Forward Problem

The architecture of the forward PINN problem is shown in

Figure 2. The objective of the forward PINN problem is to solve the given governing equation with initial, boundary, and operating conditions. The initial conditions were the target values (

Fi,0) over the reactor length (0 <

z ≤ L) except

z = 0 at the beginning of the reaction, which are given as follows:

However, any initial condition must be acceptable in theory to adjust

w and

b because the forward PINN converges to a solution (

Fi,PINN) satisfying the physics-informed constraints. The Dirichlet boundary conditions at

z = 0 were implemented separately in the PINN:

The operating conditions of the IFB, such as

T,

P, and

F0, were used to calculate the partial pressure (

pi) and reaction kinetic rate (

r). The FF-ANN structure contained one input (

), four outputs (

),

hidden layers, and

m neurons for each layer. The input and output datasets of the FF-ANN were randomly sampled from the initial and boundary conditions, Equations (8) and (9), respectively, in the training stage. The activation function (

fa), such as

the sigmoid and hyperbolic tangent (

tanh), was applied for each neuron. The weights (

) and biases (

) for the

jth hidden layer and the

kth neuron must be adjusted to minimize the loss function (

Loss). The AD for spatial derivatives (

) was calculated via the reverse accumulation mode, which propagates derivatives backward from a given output [

26]. The governing equations as the physics-informed part of the ANN included the reaction kinetic rate (

r) in Equation (4), the four ODEs in Equation (2), and the boundary conditions in Equation (3).

The optimized weights and biases (

and

) were obtained from the following optimization problem:

where

MSEg and

MSEb are the mean squared errors for the governing equation and boundary condition, respectively.

Ntrain,

Ncomp, and

Nbnd are the number of training data sets, species (or components), and boundary condition sampling points, respectively. The loss function (

Loss) sums

MSEg and

MSEb.

In Equation (8), for the initial condition, 1000–10,000 training data were randomly and uniformly sampled for the adjustment of the ANN parameters (

and

) and determination of the hyper-parameters (

n,

m,

fa, and

Ntrain). An Adam optimizer [

40] was used to solve Equation (10), which combines a stochastic gradient descent with adaptive momentum, because of its good convergence speed [

41], as confirmed in several PINN models [

29,

35,

42,

43]. An initial learning rate of 0.001 and decay rate of 0.005 were chosen for the Adam optimizer.

Negative intermediate outputs (

Fi) appeared frequently when the stochastic gradient optimizer was used in the PINN. However, a negative

Fi was unfavorable for solving the IFB reaction model with the reaction kinetics. In addition, as the ODE system of the reactor model with chemical reaction rates was stiff, it was desirable to avoid negative

Fi and improve the convergence of the PINN. An exponential mapping of the output values from each hidden layer [

29] was used:

where

aj,l is the value exiting the

lth neuron of the

jth hidden layer.

Gusmao et al. (2020) [

29] presented forward and inverse PINNs to create an SM for the solution of chemical reaction kinetics and to acquire kinetic parameters from experimental data. In our study, the PINN concept was applied to a complex and stiff reaction kinetic problem for CO

2 methanation.

3.2. PINN Structure in the Inverse Problem

The weights (

) and biases (

) of the FF-ANN are the optimized variables in the forward PINN problem, whereas unknown model parameters are identified in the inverse PINN using the optimized weights (

) and biases (

) obtained from the forward PINN. The inverse PINN included the governing equations, boundary, and operating conditions that were used in the forward PINN, as shown in

Figure 3. Rather than using the initial condition as the training data, the inverse PINN problem uses observation data from an external source, such as experimental data. Therefore, the values of

z and

Fi(

z) are different from those in

Figure 2.

In the inverse PINN, the effectiveness factor (

η) as an unknown model parameter was identified using the following optimization with a loss function:

where

Nobs is the number of observation data points (or the experimental data).

MSEg was evaluated for the observation data. The

MSEb in the inverse PINN used the same training data for the Dirichlet boundary condition that was used in the forward problem. The loss function (

Loss) sums

MSEg and

MSEb as functions of

η.

3.3. Hyper-Parameters Setting and Accuracy of PINN Solution

For the FF-ANN, a mini-batch size of 128, which had a minor effect on the PINN training results, was used. The number of training epochs was set to 1000. The number of the hidden layers (n) ranged from 2 to 11. The number of neurons (m) for each layer was 64–256. The sigmoid and tanh functions were considered the activation functions (fa). The number of training data points (Ntrain) varied from 1000 to 10,000. The training data were used to adjust w and b, and to determine the hyper-parameters (m, n, fa, and Ntrain), because the validation data for determining hyper-parameters were not necessary in the PINN, which provides a solution for physical models.

In the FF-ANN, the biases (

b) were initialized to zeros and the weights (

w) were initialized by the following commonly used heuristic called Xavier’s method [

44]:

where

U is the uniform distribution in the interval of

.

Nin and

Nout are the neuron numbers of the previous and present layers, respectively. A pseudo-random generator “

philox” [

45] with 10 rounds and a seed value of “1234” was used to provide the same initial weights for all trainings according to different hyper-parameters. The training data were sampled using Sobol’s quasi-random sequence generator [

46], which filled the

space in a highly uniform manner.

The PINNs were implemented using the deep learning toolbox of MATLAB (Mathworks, R2021a, Natick, MA, USA, 2021). The PINNs were executed on a single NVIDIA Quadro RTX 6000 GPU device. The computational time was 5 min to 3 h to train each forward PINN according to the number of hidden layers and neurons. The inverse PINN model required less than 20 s.

The governing equation in Equation (2) with the boundary conditions in Equation (3) was also solved using a stiff ODE numerical solver, ode15s in MATLAB, with a strict relative and absolute tolerance of 1 × 10

−8. The solution was considered as an exact solution. The accuracy of the PINN model was measured using an

relative error norm (

) [

30] between the PINN solution (

) and stiff ODE solution (

):

Ntest (=1000) is the number of test data in the forward PINN, whereas

Ntest is the number of observation data points (

Nobs) in the inverse PINN. The test and observation data were generated uniformly over a given range of reactor lengths (

z).

4. Results and Discussion

The hyper-parameters (m, n, fa, and Ntrain) of the forward PINN were first determined. Then, the extrapolation performance of the forward PINN was investigated using 10,000 training data points sampled at a limited reactor length (z) and 1000 test data points sampled at the full reactor length (0 ≤ z ≤ 3). Using the optimized structure of the forward PINN, the effects of the number and range of the observation data on unknown model parameters (e.g., η) were examined in the inverse PINN problem.

4.1. Determination of Hyper-Parameters

Activation functions such as the sigmoid and tanh functions were first tested in an FF-ANN structure with four hidden layers and 64 neurons per layer. Then the number of layers and neurons were determined for the FF-ANN using an activation function selected previously. The sigmoid activation function showed good performance for a specific FF-ANN structure (e.g., four hidden layers and 64 neurons), while the tanh activation function exhibited good results for almost all network structures.

Figure 4 shows the comparison between

sigmoid and

tanh in terms of (a) the loss function and (b)

Fi along the reactor length (

z) for 1000 test data points evenly distributed in 0 ≤

z ≤ 3. The 10,000 training data points used to adjust

w and

b were distributed by Sobol’s quasi-random sequence generator, as mentioned earlier. The loss function (

Loss) obtained using

tanh was lower by three orders of magnitude than that obtained using

the sigmoid function (

Figure 4a). Here, the iteration number is the number of mini-batches multiplied by the number of epochs. As a result, the mole flow rates (

Fi,PINN) obtained from the PINN with

tanh were closer to the ODE solution (

Fi,ODE) than those obtained from the PINN with the

sigmoid function (

Figure 4b). The

of the

sigmoid and

tanh functions were 0.05251 and 0.02372, respectively. Therefore, the

tanh activation function for all the hidden layers was chosen for further investigation. The mixed activation functions and other activation functions, such as the rectified linear unit (ReLU), were outside the scope of this study.

Figure 5 shows the influence of the number of hidden layers and neurons in each layer on the loss function (

Loss), L

2 relative error (

L2,rel), and training time (

t). The loss function and training time were obtained from the PINN with

tanh for 10,000 training data points.

L2,rel was measured for 1000 test data compared to the ODE solution. The red spot indicates the forward PINN structure with

n = 7 layers and

m = 256 neurons achieved a minimum loss.

Loss and

sharply decrease for 2 ≤

n ≤ 5 and slowly converge to a certain value for all the investigated numbers of neurons (see

Figure 5a,b). The number of neurons (

m) weakly influenced

Loss and

, which were the lowest for the forward PINN structure with 256 neurons. Although the minimum values of

Loss and

were obtained with the forward PINN structure with seven layers and 256 neurons, the variation in

was negligible for forward PINN structures with more than four layers, at less than 0.69%. The computational time (

t) for the training increased almost linearly with the number of hidden layers (

m). However, the training time (

t) did not increase as the number of neurons in each layer increased. This may be attributed to the fast convergence achieved with a high number of neurons. The FF-ANN structure with seven hidden layers and 256 neurons was chosen for the CO

2 methanation IFB reactor model.

Figure 6 compares the performance of the PINN with two, five, and seven hidden layers, using 256 neurons for each layer. The

decreases with an increase in the number of hidden layers, as shown in

Figure 5. The solution obtained from the PINN with two layers and 256 neurons deviated significantly from the ODE solution (

Figure 6a). The solution obtained from the PINN with seven layers showed excellent agreement with the ODE solution at high computational cost (

Figure 6c).

Using the PINN with seven layers and 256 neurons, the influences of the number of training data points (

Ntrain) on the loss function (

Loss) and training time (

t) are depicted in

Figure 7. As

Ntrain increases, the

Loss converges, and

t increases proportionally. For the error between the PINN and ODE solutions to be sufficiently small, it is desirable that

Ntrain for the 1D reactor model be over 5000.

4.2. Computational Efficiency of the PINN

A single training time (

t) was approximately two hours for the PINN with

m = 256,

n = 7,

tanh, and

Ntrain = 10,000, as shown in

Figure 7. A substantial computational time was required to determine all hyper-parameters of PINN, whereas the ODE numerical solver (e.g., ode15s) showed fast calculation due to no training stage. However, once the hyper-parameters were determined, and weights (

w) and biases (

b) were optimized, the PINN surrogate model against the ODE numerical solver had the advantage in computational time.

In

Figure 8, the computational times of the PINN surrogate model and the ODE numerical solver are compared with respect to the number of spatial points from 1000 to 200,000 with an interval of 1000. The PINN with

n = 7,

m = 256 and

tanh activation function was used. The calculation time (

t) increases with the increase in the number of spatial points or

Ntest. The calculation time of PINN is less than 0.1 s at

Ntest = 200,000, while that of ODE solver with 200,000 spatial points is approximately 0.95 s. The PINN surrogate model has computational efficiency over the ODE solver and it will be useful for optimization problems repeating the calculation of ODEs/PDEs.

4.3. Extrapolation Capability of the PINN

If a sufficient amount of training data is provided (see

Figure 7), the forward PINN guarantees a solution (

Fi,PINN) that satisfies the governing equation, as mentioned earlier. The PINN has an extrapolation capability when applied for the range out of training data, which is similar to solving first-principle laws in a computational domain.

Figure 9 shows the performance of the forward PINN for 10,000 training data points in a limited range of

z and 1000 test data points in a full range of

z (0 ≤

z ≤ 2), using five hidden layers, 256 neurons per layer, and the

tanh activation function. The collocation range of the training data starts from

z = 0 and ends at

z = 0.5–1.0, with an interval of 0.1 in

Figure 9a–f. Even though the PINN was trained within one sixth (0 ≤

z ≤ 0.5) of the full range, the PINN output (

Fi,PINN) for the test data of the full range (0 ≤

z ≤ 2) agrees well the ODE solution (

Fi,ODE) outside the training range (

Figure 9a).

L2,rel decreases, and the training time tends to increase as the training range increases.

Figure 9g–i show the performance of the PINN that was trained for three data ranges detached from

z = 0, reducing the width of the range of

z. The value of

is correct for all data ranges detached from

z = 0 owing to the boundary condition in Equation (3). However,

Fi,PINN between

z = 0 and the beginning point of the training data is far from

Fi,ODE, and the errors between

Fi,PINN and

Fi,ODE are persistent in the other ranges. As the governing equation used in this study is a type of initial value problem, sufficient training data close to

z = 0 must be provided to obtain reliable PINN solutions.

The extrapolation capability of the PINN is remarkable, unlike that of common ANNs [

28,

30]. The accuracy of the PINN solution is closely related to the range and distribution of the training data [

35]. The present forward PINN model for solving governing equations can overcome the drawbacks of traditional CFD modeling and simulation methods, as mentioned in the introduction. Once the PINN parameters (hyper-parameters, weights, and biases) are optimized, the PINN instantly predicts outputs (

Fi) corresponding to inputs (

z), which can be used as an excellent SM of the governing equation. Because training data at spatial collocation points (

z) are generated independently against the specific spatial domain, the forward PINN model is appropriate for solving governing equations with complex geometries or moving boundary conditions [

47]. In addition, numerical diffusion and round-off errors are minimized in PINNs with the aid of AD [

48].

4.4. Identification of Unknown Model Parameters with the Inverse PINN

The trained PINN with seven layers, 256 neurons per layer, and the tanh activation function was used in the inverse PINN problem to identify an unknown parameter (i.e., ). The number of epochs, initial learning rate, and decay rate of the inverse PINN model were set to 500, 0.03, and 0.005, respectively. The initial value was assumed to be 1 × 10−6.

Figure 10 shows the influence of the number of observation data points (

Nobs) and noise ratios on the identification performance of the effectiveness factor (

η).

Nobs had a range of 20–1000. Initially, the ODE solution (

Fi,ODE) for the governing equation with

η = 1 and 0 ≤

z ≤ 2 was obtained using a stiff ODE solver (i.e., ode15s in MATLAB), which is the mean value of

. The observation data were randomly and uniformly generated, satisfying a normal distribution with a mean of

and standard deviation as the noise ratios of 5% and 20%. The

Fi,PINN displayed by the solid, dashed, and dotted lines in

Figure 10 was acquired from the forward PINN with

η* obtained from the inverse PINN.

It is expected that the six inverse PINN problems in

Figure 10 result in

η* = 1 because all observation data were generated for

η = 1.

Nobs influence the value of

η* more significantly than the noise ratio. If the number of observation data is the same, the noise ratio hardly affects

η*. Even though a small amount of observation data (

Nobs = 20) were used, the error between the exact and predicted

η values was only 0.3% (see

Figure 10a,b). The inverse PINN model inherits the accuracy of the forward PINN model. Thus, the inverse PINN model with well-trained weights and biases can accurately identify model parameters, even for a small amount of observation data.

The effect of the collocation range of observation data on

η* was investigated for the inverse PINN model, as shown in

Figure 11. The collocation range of the observation data significantly influences the identification of

η. High accuracy was achieved when the collocation range of the observation data was close to the boundary (

z = 0), as shown in

Figure 11a,b. When observation data far from the boundary are provided, it was difficult for the inverse PINN model to identify the model parameter (

η), as shown in

Figure 11c. The inverse PINN problem took approximately 20 s for 1000 observations. If the forward PINN is well-trained and the observation data are properly provided, the inverse PINN model can identify unknown model parameters more efficiently than other computationally intensive methods such as CFD.

4.5. Extension of PINN to Different Effectiveness Factors

The current PINN can be extended to the ODE solutions for different process conditions, such as the effectiveness factor (

). One more neuron for 0 ≤

≤ 1 was added into the input layer, which results in the input layer with

z and

, and the identical output layer (

Fi). The boundary condition with the combination of

z and

was used as follows:

where

and

are the bounds of

.

The previous optimized network structure with five hidden layers, 256 neurons per layer, and

tanh activation function was used. The number of training points (

was increased from 10,000 to 30,000 because the two input variables (

z and

) were applied. The number of epochs was also increased from 1000 to 2000 to enhance the convergence during training.

Figure 12 shows the PINN predictions

for

and 1.2, where

= 1000. The PINN captured the

at any

. Excellent prediction for

at

, as an extrapolation was observed, as shown in

Figure 12c. The PINN surrogate model can be used for an optimization problem of the CO

2 methanation process with computational efficiency.

5. Summary and Conclusions

A physics-informed neural network (PINN) was developed for an isothermal fixed-bed (IFB) reactor model for catalytic CO2 methanation. The PINN was composed of a feed-forward artificial neural network (FF-ANN), automatic differentiation (AD) for derivatives, and governing equations with a stiff reaction kinetic rate. The loss function of the PINN included two mean squared errors (MSEs) for the governing equations and boundary conditions. The one-dimensional reactor was initialized at a molar flow rate that was the same as the boundary condition at the reactor inlet.

For the forward problem, the PINN solved the material balance expressed by ordinary differential equations (ODEs) for the IFB reactor model, where hyper-parameters, weights, and biases of FF-ANN were determined. For the inverse problem, the PINN used the weights and biases of the trained forward PINN model and identified unknown model parameters, such as the effectiveness factor of the CO2 methanation reaction, using observation data. Future work is to implement the PINN for the solution of the fixed-bed reactor model with a wide range of operating conditions (temperature, pressure, flow rate, and inlet composition), where the reactor model includes the heat and mass balances. The key conclusions drawn are as follows:

The PINN with the tanh activation function, 5–7 hidden layers, and 256 neurons per hidden layer was found to have the most effective combination of hyper-parameters for the IFB reactor model.

The reliability of the PINN depended on the number and range of the training data.

When the molar flow rates of the reactor were predicted as out of the range of training data, the forward PINN model exhibited an excellent extrapolation performance because the PINN provides a solution satisfying physics-informed constraints.

The inverse PINN model identified unknown model parameters when the observation data were properly provided to the inverse PINN.

The training time of the forward PINN was almost proportional to the number of hidden layers and the number of training data points. The training time of the inverse PINN was relatively short, and the inverse PINN was more efficient at identifying unknown model parameters compared to other numerical methods such as computational fluid dynamics (CFD).

The present PINN model was extended to different process conditions such as effectiveness factors.

The current approach is useful for building a surrogate model for CO2 methanation process design and optimization.