2.1. Dynamical Causal Networks

Our starting point is a dynamical causal network: a directed acyclic graph (DAG) $G_u = (V, E)$ with edges $E$ that indicate the causal connections among a set of nodes $V$ and a given set of background conditions (state of exogenous variables) $U = u$ (see Figure 1B). The nodes in $G_u$ represent a set of associated random variables (which we also denote by $V$) with state space $\Omega = \prod_i \Omega_{V_i}$ and probability function $p(v \mid u)$, $v \in \Omega$. For any node $V_i \in V$, we can define the parents of $V_i$ in $G_u$ as all nodes with an edge leading into $V_i$,

$$\mathrm{pa}(V_i) = \{V_j \mid (V_j, V_i) \in E\}.$$

A causal network $G_u$ is dynamical, in the sense that we can define a partition of its nodes $V$ into $k+1$ temporally ordered “slices”, $V = \{V_0, V_1, \ldots, V_k\}$, starting with an initial slice without parents ($\mathrm{pa}(V_0) = \varnothing$) and such that the parents of each successive slice are fully contained within the previous slice ($\mathrm{pa}(V_t) \subseteq V_{t-1}$, $t = 1, \ldots, k$). This definition is similar to the one proposed in [32], but is stricter, requiring that there are no within-slice causal interactions. This restriction prohibits any “instantaneous causation” between variables (see also [7], Section 1.5) and signifies that $G_u$ fulfills the Markov property. Nevertheless, recurrent networks can be represented as dynamical causal models when unfolded in time (see Figure 1B) [20]. The parts of $G_u$ can thus be interpreted as consecutive time steps of a discrete dynamical system of interacting elements (see Figure 1); a particular state $V = v$, then, corresponds to a system transient over $k+1$ time steps.

In a Bayesian network, the edges of $G_u$ fully capture the dependency structure between nodes $V$. That is, for a given set of background conditions, each node is conditionally independent of its non-descendants, given its parents in $G_u$, and the probability function can be factored as

$$p(v \mid u) = \prod_i p\big(v_i \mid \mathrm{pa}(v_i), u\big), \quad v \in \Omega.$$

For a causal network, there is the additional requirement that the edges $E$ capture causal dependencies (rather than just correlations) between nodes. This means that the decomposition of $p(v \mid u)$ holds even if the parent variables are actively set into their state, as opposed to passively observed in that state (“Causal Markov Condition”, [7,15]),

$$p(v \mid u) = \prod_i p\big(v_i \mid \mathrm{do}(\mathrm{pa}(v_i)), u\big), \quad v \in \Omega.$$

As we assume, here, that $U$ contains all relevant background variables, any statistical dependencies between $V_{t-1}$ and $V_t$ are, in fact, causal dependencies, and cannot be explained by latent external variables (“causal sufficiency”, see [34]). Moreover, because time is explicit in $G_u$ and we assume that there is no instantaneous causation, there is no question of the direction of causal influences: the earlier variables ($V_{t-1}$) must influence the later variables ($V_t$). By definition, $V_{t-1}$ contains all parents of $V_t$ for $t = 1, \ldots, k$. In contrast to the variables $V$ within $G_u$, the background variables $U$ are conditioned to a particular state $U = u$ throughout the causal analysis and are otherwise not considered further.

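Together, these assumptions imply a transition probability function for $V$, such that the nodes at time $t$ are conditionally independent given the state of the nodes at time $t-1$ (see Figure 1C),

$$p_u(v_t \mid v_{t-1}) = p(v_t \mid v_{t-1}, U = u) = \prod_i p(v_{i,t} \mid v_{t-1}, U = u) = \prod_i p\big(v_{i,t} \mid \mathrm{do}(v_{t-1}, U = u)\big), \quad \forall (v_{t-1}, v_t) \in \Omega. \tag{1}$$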
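To make Equation (1) concrete, here is a minimal sketch under stated assumptions: a hypothetical network of two binary nodes, an OR gate (node 0) and an AND gate (node 1), each reading both nodes at $t-1$. Each node's mechanism gives $p_u(v_{i,t} \mid \mathrm{do}(v_{t-1}))$, and the transition probability is their product. The names `MECHANISMS`, `transition_prob`, and `TPM` are illustrative, not taken from any library.

```python
from itertools import product

# Hypothetical two-node network (an assumption for illustration): node 0 is an
# OR gate and node 1 an AND gate, each reading the full state V_{t-1}.
MECHANISMS = (
    lambda s: float(s[0] or s[1]),   # P(node 0 = 1 | do(V_{t-1} = s))
    lambda s: float(s[0] and s[1]),  # P(node 1 = 1 | do(V_{t-1} = s))
)
STATES = list(product((0, 1), repeat=len(MECHANISMS)))


def transition_prob(v_next, v_prev):
    """p_u(v_t | do(v_{t-1})): product of the node-wise conditionals, because
    the nodes at t are conditionally independent given the full state at t-1."""
    prob = 1.0
    for i, value in enumerate(v_next):
        p_on = MECHANISMS[i](v_prev)
        prob *= p_on if value == 1 else 1.0 - p_on
    return prob


# Each row is obtained by setting V_{t-1} by intervention, so it is defined even
# for previous states that would never be observed without manipulation.
TPM = {prev: {nxt: transition_prob(nxt, prev) for nxt in STATES} for prev in STATES}

print(TPM[(1, 0)])  # {(0, 0): 0.0, (0, 1): 0.0, (1, 0): 1.0, (1, 1): 0.0}
```

For a deterministic network like this one, every row puts all its mass on a single next state; stochastic mechanisms would simply return values strictly between 0 and 1.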

To reiterate, a dynamical causal network $G_u$ describes the causal interactions among a set of nodes (the edges in $E$ describe the causal connections between the nodes in $V$) conditional on the state of the background variables $U$, and the transition probability function $p_u(v_t \mid v_{t-1})$ (Equation (1)) fully captures the nature of these causal dependencies. Note that $p(v_t \mid v_{t-1})$ is generally undefined in the case where $p(v_{t-1}) = 0$. However, in the present context, it is defined as $p_u(v_t \mid \mathrm{do}(v_{t-1}))$ using the $\mathrm{do}(v_{t-1})$ operation. The interventional probability $p_u(v_t \mid \mathrm{do}(v_{t-1}))$ is well-defined for all $v_{t-1} \in \Omega_{V_{t-1}}$ and can typically be inferred from the mechanisms associated with the variables in $V_t$.

In summary, we assume that $G_u$ fully and accurately describes the system of interest for a given set of background conditions. In reality, a causal network reflects assumptions about a system’s elementary mechanisms. Current scientific knowledge must inform which variables to include, what their relevant states are, and how they are related mechanistically [7,36]. Here, we are primarily interested in natural and artificial systems, such as neural networks, for which detailed information about the causal network structure and the mechanisms of individual system elements is often available, or can be obtained through exhaustive experiments. In such systems, counterfactuals can be evaluated by performing experiments or simulations that assess how the system reacts to interventions. The transition probabilities can, in principle, be determined by perturbing the system into all possible states while holding the background variables fixed and observing the resulting transitions. Alternatively, the causal network can be constructed by experimentally identifying the input-output function of each element (i.e., its structural equation [7,34]). Merely observing the system without experimental manipulation is insufficient to identify causal relationships in most situations. Moreover, instantaneous dependencies are frequently observed in (experimentally obtained) time-series data of macroscopic variables, due to unobserved interactions at finer spatio-temporal scales [37]. In this case, a suitable dynamical causal network may still be obtained, simply by discounting such instantaneous dependencies, since these interactions are not due to the macroscopic mechanisms themselves.

Our objective, here, is to formulate a quantitative account of actual causation applicable to any predetermined, dynamical causal network, independent of practical considerations about model selection [12,36]. Confounding issues due to incomplete knowledge, such as estimation biases of probabilities from finite sampling, or latent variables, are, thus, set aside for the present purposes. To what extent and under which conditions the identified actual causes and effects generalize across possible levels of description, or under incomplete knowledge, is an interesting question that we plan to address in future work (see also [38,39]).

2.3. Cause and Effect Repertoires

Before defining the actual cause or actual effect of an occurrence, we first introduce two definitions from IIT that are useful in characterizing the causal powers of occurrences in a causal network: cause/effect repertoires and partitioned cause/effect repertoires. In IIT, a cause (or effect) repertoire is a conditional probability distribution that describes how an occurrence (set of elements in a state) constrains the potential past (or future) states of other elements in a system [25,26] (see also [27,41] for a general mathematical definition). In the present context of a transition $v_{t-1} \prec v_t$, an effect repertoire specifies how an occurrence $x_{t-1} \subseteq v_{t-1}$ constrains the potential future states of a set of nodes $Y_t \subseteq V_t$. Likewise, a cause repertoire specifies how an occurrence $y_t \subseteq v_t$ constrains the potential past states of a set of nodes $X_{t-1} \subseteq V_{t-1}$ (see Figure 2).

The effect and cause repertoires can be derived from the system transition probabilities in Equation (1) by conditioning on the state of the occurrence and causally marginalizing the variables outside the occurrence, $V_{t-1} \setminus X_{t-1}$ and $V_t \setminus Y_t$ (see Discussion 4.1). Causal marginalization serves to remove any contributions to the repertoire from variables outside the occurrence by averaging over all their possible states. Explicitly, for a single node $Y_{i,t}$, the effect repertoire is:

$$\pi(Y_{i,t} \mid x_{t-1}) = \frac{1}{|\Omega_W|} \sum_{w \in \Omega_W} p_u\big(Y_{i,t} \mid \mathrm{do}(x_{t-1}, W = w)\big), \tag{2}$$

where $W = V_{t-1} \setminus X_{t-1}$ with state space $\Omega_W$. Note that, for causal marginalization, each possible state $W = w \in \Omega_W$ is given the same weight $|\Omega_W|^{-1}$ in the average, which corresponds to imposing a uniform distribution over all $W$. This ensures that the repertoire captures the constraints due to the occurrence, and not those due to whatever external factors might bias the variables in $W$ toward one state or another (this is discussed in more detail in Section 4.1).
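Causal marginalization in Equation (2) amounts to clamping the occurrence and averaging uniformly over every state of $W$. The sketch below assumes a hypothetical two-node network (node 0 = OR, node 1 = AND, both reading the full state at $t-1$) and represents an occurrence as a Python dict from node index to state; `effect_repertoire_node` is a name introduced here for illustration.

```python
from itertools import product

# Hypothetical two-node network (assumption): node 0 = OR, node 1 = AND,
# each reading the full state at t-1.
MECHANISMS = (lambda s: float(s[0] or s[1]), lambda s: float(s[0] and s[1]))
N = len(MECHANISMS)


def effect_repertoire_node(i, value, occurrence):
    """pi(Y_{i,t} = value | x_{t-1}) following Eq. (2).

    `occurrence` maps node indices at t-1 to their clamped states. Every state
    w of the remaining nodes W gets the same weight 1 / |Omega_W|, regardless of
    how often w would be observed.
    """
    free = [j for j in range(N) if j not in occurrence]
    total = 0.0
    for w in product((0, 1), repeat=len(free)):
        state = dict(occurrence)
        state.update(zip(free, w))                 # do(x_{t-1}, W = w)
        p_on = MECHANISMS[i](tuple(state[j] for j in range(N)))
        total += p_on if value == 1 else 1.0 - p_on
    return total / 2 ** len(free)                  # uniform average over Omega_W


print(effect_repertoire_node(0, 1, {0: 1}))  # OR_t = 1 given OR_{t-1} = 1: 1.0
print(effect_repertoire_node(1, 1, {0: 1}))  # AND_t = 1 given OR_{t-1} = 1: 0.5
print(effect_repertoire_node(0, 1, {}))      # OR_t = 1, nothing clamped: 0.75
```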

In graphical terms, causal marginalization implies that the connections from all $W_i \in W$ to $Y_t$ are “cut” and independently replaced by an unbiased average across the states of the respective $W_i$, which also removes all dependencies between the variables in $W$. Causal marginalization, thus, corresponds to the notion of cutting edges proposed in [34]. However, instead of feeding all open ends with the product of the corresponding marginal distributions obtained from the observed joint distribution, as in Equation (7) of [34], here we impose a uniform distribution $p(W) = |\Omega_W|^{-1}$, as we are interested in quantifying mechanistic dependencies, which should not depend on the observed joint distribution.

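The complementary cause repertoire of a singleton occurrence $y_{i,t}$ follows from Bayes’ rule with a uniform prior over the states of $V_{t-1}$:

$$\pi(X_{t-1} \mid y_{i,t}) = \frac{\sum_{w \in \Omega_W} p_u\big(y_{i,t} \mid \mathrm{do}(X_{t-1}, W = w)\big)}{\sum_{z \in \Omega_{V_{t-1}}} p_u\big(y_{i,t} \mid \mathrm{do}(V_{t-1} = z)\big)},$$

where, as before, $W = V_{t-1} \setminus X_{t-1}$.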
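The singleton cause repertoire can be checked numerically. The following sketch again assumes a hypothetical OR/AND network (node 0 = OR, node 1 = AND), and `cause_repertoire_node` is our own name. For each candidate past state $x$, it sums the likelihood of $y_{i,t}$ over all full states of $V_{t-1}$ that agree with $x$ (that is, over all $w \in \Omega_W$) and divides by the sum over every state of $V_{t-1}$.

```python
from itertools import product

# Hypothetical OR/AND network (assumption): node 0 = OR, node 1 = AND.
MECHANISMS = (lambda s: float(s[0] or s[1]), lambda s: float(s[0] and s[1]))
N = len(MECHANISMS)
ALL_STATES = list(product((0, 1), repeat=N))


def likelihood(i, value, s):
    """p_u(y_{i,t} | do(V_{t-1} = s)) for one binary node."""
    p_on = MECHANISMS[i](s)
    return p_on if value == 1 else 1.0 - p_on


def cause_repertoire_node(i, value, cause_nodes):
    """pi(X_{t-1} | y_{i,t}) over the joint states of `cause_nodes`.

    Numerator: likelihood summed over full states agreeing with x.
    Denominator: likelihood summed over every state z of V_{t-1}
    (positive whenever y_{i,t} can occur at all).
    """
    denom = sum(likelihood(i, value, s) for s in ALL_STATES)
    repertoire = {}
    for x in product((0, 1), repeat=len(cause_nodes)):
        numer = sum(
            likelihood(i, value, s)
            for s in ALL_STATES
            if all(s[j] == xj for j, xj in zip(cause_nodes, x))
        )
        repertoire[x] = numer / denom
    return repertoire


# Past of the OR node given OR_t = 1: approximately {(0,): 0.333, (1,): 0.667}
print(cause_repertoire_node(0, 1, [0]))
```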

In the general case of a multi-variate $X_{t-1}$ (or $Y_t$), the transition probability function $p_u(Y_t \mid x_{t-1})$ not only contains dependencies of $Y_t$ on $x_{t-1}$, but also correlations between the variables in $Y_t$ due to common inputs from nodes in $W = V_{t-1} \setminus X_{t-1}$, which should not be counted as constraints due to $x_{t-1}$. To discount such correlations, we define the effect repertoire over a set of variables $Y_t$ as the product of the effect repertoires over individual nodes (Equation (2)) (see also [34]):

$$\pi(Y_t \mid x_{t-1}) = \prod_i \pi(Y_{i,t} \mid x_{t-1}). \tag{3}$$

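In the same manner, we define the cause repertoire of a general occurrence $y_t$ over a set of variables $X_{t-1}$ as:

$$\pi(X_{t-1} \mid y_t) = \frac{\prod_i \pi(X_{t-1} \mid y_{i,t})}{\sum_{x \in \Omega_{X_{t-1}}} \prod_i \pi(X_{t-1} = x \mid y_{i,t})}. \tag{4}$$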

We can also define unconstrained cause and effect repertoires, a special case of cause or effect repertoires where the occurrence that we condition on is the empty set. In this case, the repertoire describes the causal constraints on a set of nodes due to the structure of the causal network, under maximum uncertainty about the states of variables within the network. With the convention that $\pi(\varnothing) = 1$, we can derive these unconstrained repertoires directly from the formulas for the cause and effect repertoires, Equations (3) and (4). The unconstrained cause repertoire simplifies to a uniform distribution, representing the fact that the causal network itself imposes no constraint on the possible states of variables in $V_{t-1}$,

$$\pi(X_{t-1}) = |\Omega_{X_{t-1}}|^{-1}. \tag{5}$$

The unconstrained effect repertoire is shaped by the update function of each individual node $Y_{i,t} \in Y_t$ under maximum uncertainty about the state of its parents,

$$\pi(Y_t) = \prod_i \pi(Y_{i,t}) = \prod_i |\Omega_W|^{-1} \sum_{w \in \Omega_W} p_u\big(Y_{i,t} \mid \mathrm{do}(W = w)\big), \tag{6}$$

where $W = V_{t-1}$, since $X_{t-1} = \varnothing$.
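Equations (3) to (6) can be sketched together. Under the same hypothetical OR/AND assumption (node 0 = OR, node 1 = AND), `eff_rep(y, x)` multiplies single-node effect repertoires and `cause_rep(x, y)` normalizes a product of single-node cause repertoires; passing an empty dict as the conditioning occurrence yields the unconstrained repertoires. The last lines contrast Equation (3) with the joint probability under uniform inputs: the shared inputs of the two gates correlate their outputs, and the product deliberately ignores that correlation. All names are illustrative.

```python
from itertools import product

# Hypothetical OR/AND network (assumption): node 0 = OR, node 1 = AND.
MECH = (lambda s: float(s[0] or s[1]), lambda s: float(s[0] and s[1]))
N = len(MECH)
ALL = list(product((0, 1), repeat=N))


def p_node(i, v, s):
    """p_u(Y_{i,t} = v | do(V_{t-1} = s))."""
    return MECH[i](s) if v else 1.0 - MECH[i](s)


def consistent(occ):
    """Full states of V_{t-1} that agree with an occurrence {node: value}."""
    return [s for s in ALL if all(s[j] == v for j, v in occ.items())]


def eff_rep(y, x):
    """Eqs. (2)-(3); with x = {} this is the unconstrained Eq. (6)."""
    prob = 1.0
    for i, v in y.items():
        states = consistent(x)
        prob *= sum(p_node(i, v, s) for s in states) / len(states)
    return prob


def cause_rep(x, y):
    """Eq. (4); with y = {} the empty product makes it uniform, Eq. (5)."""
    def unnorm(xs):
        prob = 1.0
        for i, v in y.items():
            num = sum(p_node(i, v, s) for s in consistent(xs))
            prob *= num / sum(p_node(i, v, s) for s in ALL)
        return prob
    keys = sorted(x)
    total = sum(unnorm(dict(zip(keys, vals))) for vals in product((0, 1), repeat=len(keys)))
    return unnorm(x) / total


both_on = {0: 1, 1: 1}  # OR_t = 1 and AND_t = 1
joint = sum(p_node(0, 1, s) * p_node(1, 1, s) for s in ALL) / len(ALL)
print(eff_rep(both_on, {}), joint)              # 0.1875 0.25
print(cause_rep({0: 1, 1: 0}, {0: 1, 1: 0}))    # 0.5
print(cause_rep({0: 1, 1: 0}, {}))              # 0.25
```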

In summary, the effect repertoire $\pi(Y_t \mid x_{t-1})$ and the cause repertoire $\pi(X_{t-1} \mid y_t)$ are conditional probability distributions that specify the causal constraints due to an occurrence on the potential future and past states, respectively, of variables in a causal network $G_u$. The cause and effect repertoires discount constraints that are not specific to the occurrence of interest: possible constraints due to the state of variables outside of the occurrence are causally marginalized from the distribution, and constraints due to common inputs from other nodes are avoided by treating each node in the occurrence independently. Thus, we denote cause and effect repertoires with $\pi$, to highlight that, in general, $\pi(Y_t \mid x_{t-1}) \neq p_u(Y_t \mid x_{t-1})$. However, $\pi(Y_t \mid x_{t-1})$ is equivalent to $p_u(Y_t \mid x_{t-1})$ (the conditional probability imposing a uniform distribution over the marginalized variables), in the special case that all variables $Y_{i,t} \in Y_t$ are conditionally independent, given $x_{t-1}$ (see also [34], Remark 1). This is the case, for example, if $x_{t-1}$ already includes all inputs (all parents) of $Y_t$, or determines $Y_t$ completely.

An objective of IIT is to evaluate whether the causal constraints of an occurrence on a set of nodes are “integrated”, or “irreducible”; that is, whether the individual variables in the occurrence work together to constrain the past or future states of the set of nodes in a way that is not accounted for by the variables taken independently [25,42]. To this end, the occurrence (together with the set of nodes it constrains) is partitioned into independent parts, by rendering the connection between the parts causally ineffective [25,26,34,42]. The partitioned cause and effect repertoires describe the residual constraints under the partition. Comparing the partitioned cause and effect repertoires to the intact cause and effect repertoires reveals what is lost or changed by the partition.

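A partition $\psi$ of the occurrence $x_{t-1}$ (and the nodes it constrains, $Y_t$) into $m$ parts is defined as:

$$\psi(x_{t-1}, Y_t) = \big\{(x_{1,t-1}, Y_{1,t}), (x_{2,t-1}, Y_{2,t}), \ldots, (x_{m,t-1}, Y_{m,t})\big\}, \tag{7}$$

such that $\{x_{j,t-1}\}_{j=1}^{m}$ is a partition of $x_{t-1}$ and $Y_{j,t} \subseteq Y_t$ with $Y_{j,t} \cap Y_{k,t} = \varnothing$, $j \neq k$. Note that this includes the possibility that any $Y_{j,t} = \varnothing$, which may leave a set of nodes $Y_t \setminus \bigcup_{j=1}^{m} Y_{j,t}$ completely unconstrained (see Figure 3 for examples and details).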

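The partitioned effect repertoire of an occurrence $x_{t-1}$ over a set of nodes $Y_t$ under a partition $\psi$ is defined as:

$$\pi(Y_t \mid x_{t-1})_{\psi} = \prod_{j=1}^{m} \pi(Y_{j,t} \mid x_{j,t-1}) \times \pi\Big(Y_t \setminus \bigcup_{j=1}^{m} Y_{j,t}\Big). \tag{8}$$

This is the product of the corresponding $m$ effect repertoires, multiplied by the unconstrained effect repertoire (Equation (6)) of the remaining set of nodes $Y_t \setminus \bigcup_{j=1}^{m} Y_{j,t}$, as these nodes are no longer constrained by any part of $x_{t-1}$ under the partition.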

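In the same way, a partition $\psi$ of the occurrence $y_t$ (and the nodes it constrains, $X_{t-1}$) into $m$ parts is defined as:

$$\psi(y_t, X_{t-1}) = \big\{(y_{1,t}, X_{1,t-1}), (y_{2,t}, X_{2,t-1}), \ldots, (y_{m,t}, X_{m,t-1})\big\}, \tag{9}$$

such that $\{y_{j,t}\}_{j=1}^{m}$ is a partition of $y_t$ and $X_{j,t-1} \subseteq X_{t-1}$ with $X_{j,t-1} \cap X_{k,t-1} = \varnothing$, $j \neq k$. The partitioned cause repertoire of an occurrence $y_t$ over a set of nodes $X_{t-1}$ under a partition $\psi$ is defined as:

$$\pi(X_{t-1} \mid y_t)_{\psi} = \prod_{j=1}^{m} \pi(X_{j,t-1} \mid y_{j,t}) \times \pi\Big(X_{t-1} \setminus \bigcup_{j=1}^{m} X_{j,t-1}\Big), \tag{10}$$

the product of the corresponding $m$ cause repertoires multiplied by the unconstrained cause repertoire (Equation (5)) of the remaining set of nodes $X_{t-1} \setminus \bigcup_{j=1}^{m} X_{j,t-1}$, which are no longer constrained by any part of $y_t$ due to the partition.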
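Enumerating the partitions of Equations (7) and (9) is the combinatorial core of the integration step. The sketch below, for a hypothetical OR/AND network with function names of our own choosing, generates every set partition of the occurrence, pairs each part with a disjoint subset of target nodes (or leaves targets unconstrained), and evaluates Equations (8) and (10). One assumption is made explicit in the code: an occurrence that is not split ($m = 1$) must be cut away from all target nodes. This matches the single partition $\{(x_{i,t-1}, \varnothing)\}$ described for first-order occurrences; applying it to unsplit high-order occurrences is our reading, not a quotation.

```python
from itertools import product
from math import prod

# Hypothetical OR/AND network (assumption): node 0 = OR, node 1 = AND.
MECH = (lambda s: float(s[0] or s[1]), lambda s: float(s[0] and s[1]))
ALL = list(product((0, 1), repeat=len(MECH)))


def p_node(i, v, s):
    """p_u(Y_{i,t} = v | do(V_{t-1} = s))."""
    return MECH[i](s) if v else 1.0 - MECH[i](s)


def consistent(occ):
    """Full states of V_{t-1} that agree with an occurrence {node: value}."""
    return [s for s in ALL if all(s[j] == v for j, v in occ.items())]


def eff_rep(y, x):
    """Effect repertoire, Eqs. (2), (3); x = {} gives Eq. (6)."""
    states = consistent(x)
    return prod(sum(p_node(i, v, s) for s in states) / len(states) for i, v in y.items())


def cause_rep(x, y):
    """Cause repertoire, Eq. (4); y = {} gives Eq. (5)."""
    def unnorm(xs):
        return prod(sum(p_node(i, v, s) for s in consistent(xs))
                    / sum(p_node(i, v, s) for s in ALL) for i, v in y.items())
    keys = sorted(x)
    return unnorm(x) / sum(unnorm(dict(zip(keys, vals)))
                           for vals in product((0, 1), repeat=len(keys)))


def set_partitions(items):
    """Every partition of the list `items` into non-empty blocks."""
    if not items:
        yield []
        return
    for blocks in set_partitions(items[1:]):
        for k in range(len(blocks)):  # add items[0] to an existing block ...
            yield blocks[:k] + [[items[0]] + blocks[k]] + blocks[k + 1:]
        yield [[items[0]]] + blocks   # ... or give it a block of its own


def partitions(occ, targets):
    """Partitions of Eqs. (7)/(9), yielded as (parts, rest): each part pairs a
    block of occurrence nodes with disjoint target nodes; `rest` is left
    unconstrained. Assumption: an unsplit occurrence (m = 1) must be cut away
    from every target node."""
    for blocks in set_partitions(sorted(occ)):
        for assign in product(range(-1, len(blocks)), repeat=len(targets)):
            if len(blocks) == 1 and 0 in assign:
                continue
            parts = [(b, [t for t, a in zip(targets, assign) if a == j])
                     for j, b in enumerate(blocks)]
            yield parts, [t for t, a in zip(targets, assign) if a == -1]


def sub(d, keys):
    return {k: d[k] for k in keys}


def partitioned_rep(rep, target, occ, parts, rest):
    """Eqs. (8)/(10); `rep` is eff_rep or cause_rep, both called rep(target, occ)."""
    cut = rep(sub(target, rest), {})  # unconstrained leftover nodes
    for occ_nodes, target_nodes in parts:
        cut *= rep(sub(target, target_nodes), sub(occ, occ_nodes))
    return cut


x = {0: 1, 1: 0}  # (OR, AND)_{t-1} = 10
y = {0: 1, 1: 0}  # (OR, AND)_t = 10
cause_cuts = [partitioned_rep(cause_rep, x, y, p, r) for p, r in partitions(y, sorted(x))]
effect_cuts = [partitioned_rep(eff_rep, y, x, p, r) for p, r in partitions(x, sorted(y))]
print(len(cause_cuts), round(max(cause_cuts), 4), max(effect_cuts))  # 10 0.4444 1.0
```

In this toy case, the largest partitioned cause value (4/9) comes from cutting the second-order occurrence into its two first-order parts, each paired with its own node; the effect side reaches 1.0 under the same kind of cut.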

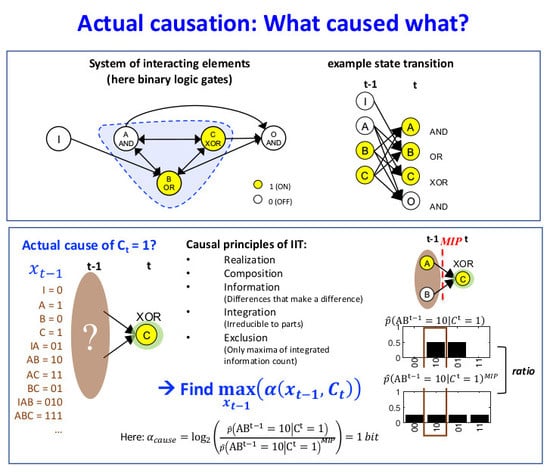

2.4. Actual Causes and Actual Effects

The objective of this section is to introduce the notion of a causal account for a transition of interest $v_{t-1} \prec v_t$ in $G_u$ as the set of all causal links between occurrences within the transition. There is a causal link between occurrences $x_{t-1}$ and $y_t$ if $y_t$ is the actual effect of $x_{t-1}$, or if $x_{t-1}$ is the actual cause of $y_t$. Below, we define causal link, actual cause, actual effect, and causal account, following five causal principles: realization, composition, information, integration, and exclusion.

Realization. A transition $v_{t-1} \prec v_t$ must be consistent with the transition probability function of a dynamical causal network $G_u$,

$$p_u(v_t \mid v_{t-1}) > 0.$$

Only occurrences within a transition $v_{t-1} \prec v_t$ may have, or be, an actual cause or actual effect. (This requirement corresponds to the first clause (“AC1”) of the Halpern and Pearl account of actual causation [20,21]; that is, for $x_{t-1}$ to be an actual cause of $y_t$, both must actually happen in the first place.)

As a first example, we consider the transition $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\} \prec \{(\mathrm{OR}, \mathrm{AND})_t = 10\}$, shown in Figure 1D. This transition is consistent with the conditional transition probabilities of the system, shown in Figure 1C.

Composition. Occurrences and their actual causes and effects can be uni- or multi-variate. For a complete causal account of the transition $v_{t-1} \prec v_t$, all causal links between occurrences $x_{t-1} \subseteq v_{t-1}$ and $y_t \subseteq v_t$ should be considered. For this reason, we evaluate every subset $x_{t-1} \subseteq v_{t-1}$ as an occurrence that may have actual effects and every subset $y_t \subseteq v_t$ as an occurrence that may have actual causes (see Figure 4). For a particular occurrence $x_{t-1}$, all subsets $y_t \subseteq v_t$ are considered as candidate effects (Figure 5A). For a particular occurrence $y_t$, all subsets $x_{t-1} \subseteq v_{t-1}$ are considered as candidate causes (see Figure 5B). In what follows, we refer to occurrences consisting of a single variable as “first-order” occurrences and to multi-variate occurrences as “high-order” occurrences, and, likewise, to “first-order” and “high-order” causes and effects.

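In the example transition shown in Figure 4, $\{\mathrm{OR}_{t-1} = 1\}$ and $\{\mathrm{AND}_{t-1} = 0\}$ are first-order occurrences that could have an actual effect in $v_t$, and $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\}$ is a high-order occurrence that could also have its own actual effect in $v_t$. On the other side, $\{\mathrm{OR}_t = 1\}$, $\{\mathrm{AND}_t = 0\}$ and $\{(\mathrm{OR}, \mathrm{AND})_t = 10\}$ are occurrences (two first-order and one high-order) that could have an actual cause in $v_{t-1}$. To identify the respective actual cause (or effect) of any of these occurrences, we evaluate all possible sets $\{\mathrm{OR} = 1\}$, $\{\mathrm{AND} = 0\}$, and $\{(\mathrm{OR}, \mathrm{AND}) = 10\}$ at time $t-1$ (or $t$). Note that, in principle, we also consider the empty set, again using the convention that $\pi(\varnothing) = 1$ (see “exclusion”, below).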

Information. An occurrence must provide information about its actual cause or effect. This means that it should increase the probability of its actual cause or effect compared to its probability if the occurrence is unspecified. To evaluate this, we compare the probability of a candidate effect $y_t$ in the effect repertoire of the occurrence $x_{t-1}$ (Equation (3)) to its corresponding probability in the unconstrained repertoire (Equation (6)). In line with information-theoretical principles, we define the effect information $\rho_e$ of the occurrence $x_{t-1}$ about a subsequent occurrence $y_t$ (the candidate effect) as:

$$\rho_e(x_{t-1}, y_t) = \log_2\left(\frac{\pi(y_t \mid x_{t-1})}{\pi(y_t)}\right). \tag{11}$$

In words, the effect information $\rho_e$ is the relative increase in probability of an occurrence at $t$ when constrained by an occurrence at $t-1$, compared to when it is unconstrained. A positive effect information $\rho_e(x_{t-1}, y_t) > 0$ means that the occurrence $x_{t-1}$ makes a positive difference in bringing about $y_t$. Similarly, we compare the probability of a candidate cause $x_{t-1}$ in the cause repertoire of the occurrence $y_t$ (Equation (4)) to its corresponding probability in the unconstrained repertoire (Equation (5)). Thus, we define the cause information $\rho_c$ of the occurrence $y_t$ about a prior occurrence $x_{t-1}$ (the candidate cause) as:

$$\rho_c(x_{t-1}, y_t) = \log_2\left(\frac{\pi(x_{t-1} \mid y_t)}{\pi(x_{t-1})}\right). \tag{12}$$

In words, the cause information $\rho_c$ is the relative increase in probability of an occurrence at $t-1$ when constrained by an occurrence at $t$, compared to when it is unconstrained. Note that the unconstrained repertoire (Equations (5) and (6)) is an average over all possible states of the occurrence. The cause and effect information thus take all possible counterfactual states of the occurrence into account in determining the strength of constraints.
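Equations (11) and (12) reduce to a log-ratio of two repertoire values. A minimal sketch, assuming a hypothetical OR/AND network (node 0 = OR, node 1 = AND) and helper names of our own choosing, computes both quantities and shows a negative effect information when an occurrence lowers a probability. It also confirms that conditioning on the empty set gives zero.

```python
from itertools import product
from math import log2

# Hypothetical OR/AND network (assumption): node 0 = OR, node 1 = AND.
MECH = (lambda s: float(s[0] or s[1]), lambda s: float(s[0] and s[1]))
ALL = list(product((0, 1), repeat=len(MECH)))


def p_node(i, v, s):
    """p_u(Y_{i,t} = v | do(V_{t-1} = s))."""
    return MECH[i](s) if v else 1.0 - MECH[i](s)


def consistent(occ):
    """Full states of V_{t-1} that agree with an occurrence {node: value}."""
    return [s for s in ALL if all(s[j] == v for j, v in occ.items())]


def eff_rep(y, x):
    """pi(y | x), Eqs. (2)-(3); x = {} gives the unconstrained Eq. (6)."""
    prob = 1.0
    for i, v in y.items():
        states = consistent(x)
        prob *= sum(p_node(i, v, s) for s in states) / len(states)
    return prob


def cause_rep(x, y):
    """pi(x | y), Eq. (4); y = {} gives the uniform Eq. (5)."""
    def unnorm(xs):
        prob = 1.0
        for i, v in y.items():
            num = sum(p_node(i, v, s) for s in consistent(xs))
            prob *= num / sum(p_node(i, v, s) for s in ALL)
        return prob
    keys = sorted(x)
    total = sum(unnorm(dict(zip(keys, vals))) for vals in product((0, 1), repeat=len(keys)))
    return unnorm(x) / total


def rho_e(x, y):
    """Eq. (11), in bits; -inf if x rules y out entirely."""
    p = eff_rep(y, x)
    return log2(p / eff_rep(y, {})) if p > 0 else float("-inf")


def rho_c(x, y):
    """Eq. (12), in bits; -inf if y rules x out entirely."""
    p = cause_rep(x, y)
    return log2(p / cause_rep(x, {})) if p > 0 else float("-inf")


print(round(rho_e({0: 1}, {0: 1}), 3))  # OR_{t-1}=1 about OR_t=1: 0.415
print(round(rho_c({0: 1}, {0: 1}), 3))  # OR_t=1 about OR_{t-1}=1: 0.415
print(round(rho_e({0: 1}, {1: 0}), 3))  # OR_{t-1}=1 about AND_t=0: -0.585
```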

In an information-theoretic context, the formula $\log_2\left(\frac{p(x \mid y)}{p(x)}\right)$ is also known as the “pointwise mutual information” (see [43], Chapter 2). While the pointwise mutual information is symmetric, the cause and effect information of an occurrence pair $(x_{t-1}, y_t)$ are not always identical, as they are defined based on the product probabilities in Equations (3) and (4). Nevertheless, $\rho_e(x_{t-1}, y_t)$ and $\rho_c(x_{t-1}, y_t)$ can be interpreted as the number of bits of information that one occurrence specifies about the other.

In addition to the mutual information, $\rho_e$ (and likewise $\rho_c$) is also related to information-theoretic divergences that measure differences in probability distributions, such as the Kullback–Leibler divergence $D_{\mathrm{KL}}\big(\pi(Y_t \mid x_{t-1}) \,\|\, \pi(Y_t)\big)$, which corresponds to an average of $\rho_e(x_{t-1}, y_t)$ over all states $y_t \in \Omega_{Y_t}$, weighted by $\pi(y_t \mid x_{t-1})$. Here, we do not include any such weighting factor, since the transition specifies which states actually occurred. While other definitions of cause and effect information are, in principle, conceivable, $\rho_e$ and $\rho_c$ capture the notion of information in a general sense and in basic terms.
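The relation between $\rho_e$ and the Kullback–Leibler divergence can be seen with two repertoires over one binary node. The numbers below are made up for illustration; the point is only that the divergence averages the pointwise values weighted by $\pi(y_t \mid x_{t-1})$, whereas $\rho_e$ evaluates the single state that actually occurred.

```python
from math import log2

# Hypothetical repertoires over one binary node Y (made-up values):
# constrained by some occurrence x vs. unconstrained.
constrained = {0: 0.5, 1: 0.5}
unconstrained = {0: 0.75, 1: 0.25}


def rho_e(y):
    """Pointwise effect information of the single state y, in bits."""
    return log2(constrained[y] / unconstrained[y])


# KL divergence: the average of rho_e over all states, weighted by the
# constrained repertoire (states with zero weight contribute nothing).
kl = sum(p * rho_e(y) for y, p in constrained.items() if p > 0)

print(round(rho_e(1), 3), round(rho_e(0), 3), round(kl, 3))  # 1.0 -0.585 0.208
```

If the state $Y = 1$ actually occurred, the transition-specific quantity is 1 bit, which neither the divergence nor the value for the state that did not occur reflects.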

Note that $\rho_e(x_{t-1}, y_t) > 0$ is a necessary, but not sufficient, condition for $y_t$ to be an actual effect of $x_{t-1}$, and $\rho_c(x_{t-1}, y_t) > 0$ is a necessary, but not sufficient, condition for $x_{t-1}$ to be an actual cause of $y_t$. Further, $\rho_e(x_{t-1}, y_t) = 0$ (or $\rho_c(x_{t-1}, y_t) = 0$) if and only if conditioning on the occurrence does not change the probability of a potential effect (or cause), which is always the case when conditioning on the empty set.

Occurrences $x_{t-1}$ that lower the probability of a subsequent occurrence $y_t$ have been termed “preventative causes” by some [33]. Rather than counting a negative effect information $\rho_e(x_{t-1}, y_t) < 0$ as indicating a possible “preventative effect”, we take the stance that such an occurrence $x_{t-1}$ has no effect on $y_t$, since it actually predicts other occurrences (states of $Y_t$ other than $y_t$) that did not happen. By the same logic, a negative cause information $\rho_c(x_{t-1}, y_t) < 0$ means that $x_{t-1}$ is not a cause of $y_t$ within the transition. Nevertheless, the current framework can, in principle, quantify the strength of possible “preventative” causes and effects.

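In Figure 5A, the occurrence $\{\mathrm{OR}_{t-1} = 1\}$ raises the probability of $\{\mathrm{OR}_t = 1\}$, and vice versa (Figure 5B), with $\rho_e = \rho_c = \log_2(4/3) \approx 0.415$ bits. By contrast, the occurrence $\{\mathrm{OR}_{t-1} = 1\}$ lowers the probability of occurrence $\{\mathrm{AND}_t = 0\}$ and also of the second-order occurrence $\{(\mathrm{OR}, \mathrm{AND})_t = 10\}$, compared to their unconstrained probabilities. Thus, neither $\{\mathrm{AND}_t = 0\}$ nor $\{(\mathrm{OR}, \mathrm{AND})_t = 10\}$ can be actual effects of $\{\mathrm{OR}_{t-1} = 1\}$. Likewise, the occurrence $\{\mathrm{OR}_t = 1\}$ lowers the probability of $\{\mathrm{AND}_{t-1} = 0\}$, which can, thus, not be its actual cause.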

Integration. A high-order occurrence must specify more information about its actual cause or effect than its parts when they are considered independently. This means that the high-order occurrence must increase the probability of its actual cause or effect beyond the value specified by its parts.

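As outlined in Section 2.3, a partitioned cause or effect repertoire specifies the residual constraints of an occurrence after applying a partition $\psi$. We quantify the amount of information specified by the parts of an occurrence based on partitioned cause/effect repertoires (Equations (8) and (10)). We define the effect information under a partition $\psi$ as

$$\rho_e(x_{t-1}, y_t)_{\psi} = \log_2\left(\frac{\pi(y_t \mid x_{t-1})_{\psi}}{\pi(y_t)}\right) \tag{13}$$

and the cause information under a partition $\psi$ as

$$\rho_c(x_{t-1}, y_t)_{\psi} = \log_2\left(\frac{\pi(x_{t-1} \mid y_t)_{\psi}}{\pi(x_{t-1})}\right). \tag{14}$$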

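The information a high-order occurrence specifies about its actual cause or effect is integrated to the extent that it exceeds the information specified under any partition $\psi$. Out of all permissible partitions $\psi(x_{t-1}, Y_t)$ (Equation (7)), or $\psi(y_t, X_{t-1})$ (Equation (9)), the partition that reduces the effect or cause information the least is denoted the “minimum information partition” (MIP) [25,26], respectively:

$$\psi^{*} = \arg\min_{\psi} \big(\rho_e(x_{t-1}, y_t) - \rho_e(x_{t-1}, y_t)_{\psi}\big) \tag{15}$$

or

$$\psi^{*} = \arg\min_{\psi} \big(\rho_c(x_{t-1}, y_t) - \rho_c(x_{t-1}, y_t)_{\psi}\big). \tag{16}$$

We can, then, define the integrated effect information $\alpha_e$ as the difference between the effect information and the information under the MIP:

$$\alpha_e(x_{t-1}, y_t) = \rho_e(x_{t-1}, y_t) - \rho_e(x_{t-1}, y_t)_{\psi^{*}} = \log_2\left(\frac{\pi(y_t \mid x_{t-1})}{\pi(y_t \mid x_{t-1})_{\psi^{*}}}\right), \tag{17}$$

and the integrated cause information $\alpha_c$ as:

$$\alpha_c(x_{t-1}, y_t) = \rho_c(x_{t-1}, y_t) - \rho_c(x_{t-1}, y_t)_{\psi^{*}} = \log_2\left(\frac{\pi(x_{t-1} \mid y_t)}{\pi(x_{t-1} \mid y_t)_{\psi^{*}}}\right). \tag{18}$$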

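For first-order occurrences $x_{i,t-1}$ or $y_{i,t}$, there is only one way to partition the occurrence ($\psi = \{(x_{i,t-1}, \varnothing)\}$ or $\psi = \{(y_{i,t}, \varnothing)\}$), which is necessarily the MIP. Under this partition, all of $Y_t$ (or $X_{t-1}$) is left unconstrained, so the information under the partition is zero, leading to $\alpha_e(x_{i,t-1}, y_t) = \rho_e(x_{i,t-1}, y_t)$ or $\alpha_c(x_{t-1}, y_{i,t}) = \rho_c(x_{t-1}, y_{i,t})$, respectively.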

A positive integrated effect information ($\alpha_e(x_{t-1}, y_t) > 0$) signifies that the occurrence $x_{t-1}$ has an irreducible effect on $y_t$, which is necessary, but not sufficient, for $y_t$ to be an actual effect of $x_{t-1}$. Likewise, a positive integrated cause information ($\alpha_c(x_{t-1}, y_t) > 0$) means that $y_t$ has an irreducible cause in $x_{t-1}$, which is a necessary, but not sufficient, condition for $x_{t-1}$ to be an actual cause of $y_t$.
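The MIP search in Equations (15) to (18) can be sketched by brute force. Assuming a hypothetical OR/AND network (node 0 = OR, node 1 = AND), `alpha` evaluates every partition, keeps the one with the largest partitioned probability (equivalently, the smallest loss of information), and returns the log-ratio of intact to partitioned probability. As in the partition sketch, we assume an unsplit occurrence must be cut away from every target node. Function names are ours, and brute-force enumeration is chosen only for clarity: the number of partitions grows quickly with occurrence size.

```python
from itertools import product
from math import log2, prod

# Hypothetical OR/AND network (assumption): node 0 = OR, node 1 = AND.
MECH = (lambda s: float(s[0] or s[1]), lambda s: float(s[0] and s[1]))
ALL = list(product((0, 1), repeat=len(MECH)))


def p_node(i, v, s):
    return MECH[i](s) if v else 1.0 - MECH[i](s)


def consistent(occ):
    return [s for s in ALL if all(s[j] == v for j, v in occ.items())]


def eff_rep(y, x):
    """Effect repertoire, Eqs. (2), (3), (6)."""
    states = consistent(x)
    return prod(sum(p_node(i, v, s) for s in states) / len(states) for i, v in y.items())


def cause_rep(x, y):
    """Cause repertoire, Eqs. (4), (5)."""
    def unnorm(xs):
        return prod(sum(p_node(i, v, s) for s in consistent(xs))
                    / sum(p_node(i, v, s) for s in ALL) for i, v in y.items())
    keys = sorted(x)
    return unnorm(x) / sum(unnorm(dict(zip(keys, vals)))
                           for vals in product((0, 1), repeat=len(keys)))


def set_partitions(items):
    """Every partition of the list `items` into non-empty blocks."""
    if not items:
        yield []
        return
    for blocks in set_partitions(items[1:]):
        for k in range(len(blocks)):
            yield blocks[:k] + [[items[0]] + blocks[k]] + blocks[k + 1:]
        yield [[items[0]]] + blocks


def partitions(occ, targets):
    """Eqs. (7)/(9) as (parts, rest); an unsplit occurrence is fully cut (assumption)."""
    for blocks in set_partitions(sorted(occ)):
        for assign in product(range(-1, len(blocks)), repeat=len(targets)):
            if len(blocks) == 1 and 0 in assign:
                continue
            parts = [(b, [t for t, a in zip(targets, assign) if a == j])
                     for j, b in enumerate(blocks)]
            yield parts, [t for t, a in zip(targets, assign) if a == -1]


def sub(d, keys):
    return {k: d[k] for k in keys}


def alpha(rep, target, occ):
    """Eqs. (15)-(18): log2 of intact over MIP-partitioned probability, where
    the MIP is the partition with the largest partitioned probability."""
    full = rep(target, occ)
    if full == 0:
        return float("-inf")
    best = max(
        rep(sub(target, rest), {})
        * prod(rep(sub(target, t), sub(occ, o)) for o, t in parts)
        for parts, rest in partitions(occ, sorted(target))
    )
    return log2(full / best)


x2, y2 = {0: 1, 1: 0}, {0: 1, 1: 0}  # (OR, AND) = 10 at t-1 and at t
print(round(alpha(cause_rep, x2, y2), 2))          # 0.17: irreducible cause
print(round(alpha(eff_rep, y2, x2), 2))            # 0.0: reducible effect
print(round(alpha(cause_rep, {0: 1}, {0: 1}), 3))  # 0.415, equal to rho_c
```

The second-order cause value equals $\log_2\big((1/2)/(4/9)\big) = \log_2(9/8)$, and the first-order value coincides with $\rho_c$ because its only partition leaves the candidate unconstrained.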

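In our example transition, the occurrence $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\}$ (Figure 5C) is reducible. This is because $\{\mathrm{OR}_{t-1} = 1\}$ is sufficient to determine that $\{\mathrm{OR}_t = 1\}$ with probability 1 and $\{\mathrm{AND}_{t-1} = 0\}$ is sufficient to determine that $\{\mathrm{AND}_t = 0\}$ with probability 1. Thus, there is nothing to be gained by considering the two nodes together as a second-order occurrence. By contrast, the occurrence $\{(\mathrm{OR}, \mathrm{AND})_t = 10\}$ determines the particular past state $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\}$ with higher probability than the two first-order occurrences $\{\mathrm{OR}_t = 1\}$ and $\{\mathrm{AND}_t = 0\}$, taken separately (Figure 5D, right). Thus, the second-order occurrence $\{(\mathrm{OR}, \mathrm{AND})_t = 10\}$ is irreducible over the candidate cause $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\}$ with $\alpha_c \approx 0.17$ bits (see Discussion 4.4).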

Exclusion. An occurrence should have at most one actual cause and one actual effect (which, however, can be multi-variate; that is, a high-order occurrence). In other words, only one occurrence $y_t \subseteq v_t$ can be the actual effect of an occurrence $x_{t-1}$, and only one occurrence $x_{t-1} \subseteq v_{t-1}$ can be the actual cause of an occurrence $y_t$.

It is possible that there are multiple occurrences $y_t \subseteq v_t$ over which $x_{t-1}$ is irreducible ($\alpha_e(x_{t-1}, y_t) > 0$), as well as multiple occurrences $x_{t-1} \subseteq v_{t-1}$ over which $y_t$ is irreducible ($\alpha_c(x_{t-1}, y_t) > 0$). The integrated effect or cause information of an occurrence quantifies the strength of its causal constraint on a candidate effect or cause. When there are multiple candidate causes or effects for which $\alpha > 0$, we select the strongest of those constraints as its actual cause or effect (that is, the one that maximizes $\alpha$). Note that adding unconstrained variables to a candidate cause (or effect) does not change the value of $\alpha$, as the occurrence still specifies the same irreducible constraints about the state of the extended candidate cause (or effect). For this reason, we include a “minimality” condition, such that no subset of an actual cause or effect should have the same integrated cause or effect information. This minimality condition between overlapping candidate causes or effects is related to the third clause (“AC3”) in the various Halpern–Pearl (HP) accounts of actual causation [20,21], which states that no subset of an actual cause should also satisfy the conditions for being an actual cause. Under uncertainty about the causal model, or other practical considerations, the minimality condition could, in principle, be replaced by a more elaborate criterion, similar to, for example, the Akaike information criterion (AIC), that weighs increases in causal strength, as measured here, against the number of variables included in the candidate cause or effect.

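We define the irreducibility of an occurrence as its maximum integrated effect (or cause) information over all candidate effects (or causes),

$$\alpha_e^{\max}(x_{t-1}) = \max_{y_t \subseteq v_t} \alpha_e(x_{t-1}, y_t) \tag{19}$$

and

$$\alpha_c^{\max}(y_t) = \max_{x_{t-1} \subseteq v_{t-1}} \alpha_c(x_{t-1}, y_t). \tag{20}$$

Considering the empty set as a possible cause or effect guarantees that the minimal value that $\alpha^{\max}$ can take is 0. Accordingly, if $\alpha^{\max} = 0$, then the occurrence is said to be reducible, and it has no actual cause or effect.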
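The exclusion step, maximizing $\alpha$ and then applying the minimality condition, does not depend on how $\alpha$ was computed, so the sketch below takes candidate values as input. The $\alpha_c$ values are our own hand computation for the candidate causes of $\{\mathrm{OR}_t = 1\}$ in an assumed OR/AND network; the frozenset labels, the `TOL` tolerance for ties, and the `verdict` strings are illustrative choices mirroring the three outcomes of Definitions 1 and 2.

```python
from math import log2

TOL = 1e-9  # numerical tolerance for treating alpha values as equal (our choice)


def select_actual(alphas, tol=TOL):
    """Eqs. (19)/(20) with the minimality condition.

    `alphas` maps each candidate (a frozenset of node=state labels, with the
    empty frozenset included) to its integrated information alpha.
    """
    a_max = max(alphas.values())
    top = [c for c, a in alphas.items() if abs(a - a_max) < tol]
    # keep only maximizers that have no proper subset among the maximizers
    minimal = [c for c in top if not any(d < c for d in top)]
    return a_max, minimal


def verdict(a_max, minimal, tol=TOL):
    """The three possible outcomes for an occurrence."""
    if a_max < tol:
        return "reducible: no actual cause/effect"
    if len(minimal) == 1:
        return "actual: " + " ".join(sorted(minimal[0]))
    return "indeterminate"


# Candidate causes of {OR_t = 1} in an assumed OR/AND network (hand-computed).
alphas = {
    frozenset(): 0.0,
    frozenset({"OR=1"}): log2(4 / 3),
    frozenset({"AND=0"}): log2(2 / 3),
    frozenset({"OR=1", "AND=0"}): log2(4 / 3),
}
a_max, minimal = select_actual(alphas)
print(round(a_max, 3), verdict(a_max, minimal))  # 0.415 actual: OR=1
```

Two candidates tie at the maximum, and minimality removes the superset, leaving the first-order candidate; two non-nested maximizers would instead yield "indeterminate".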

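For the example in Figure 2A, $\{\mathrm{OR}_t = 1\}$ has two candidate causes with $\alpha_c^{\max} = 0.415$ bits, the first-order occurrence $\{\mathrm{OR}_{t-1} = 1\}$ and the second-order occurrence $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\}$. In this case, $\{\mathrm{OR}_{t-1} = 1\}$ is the actual cause of $\{\mathrm{OR}_t = 1\}$, by the minimality condition across overlapping candidate causes.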

The exclusion principle avoids causal over-determination, which arises from counting multiple causes or effects for a single occurrence. Note, however, that symmetries in $G_u$ can give rise to genuine indeterminism about the actual cause or effect (see Results 3). This is the case if multiple candidate causes (or effects) are maximally irreducible and they are not simple sub- or super-sets of each other. Upholding the causal exclusion principle, such degenerate cases are resolved by stipulating that the one actual cause remains undetermined between all minimal candidate causes (or effects).

To summarize, we formally translate the five causal principles of IIT into the following requirements for actual causation:

| Principle | Requirement |
| --- | --- |
| Realization | There is a dynamical causal network $G_u$ and a transition $v_{t-1} \prec v_t$, such that $p_u(v_t \mid v_{t-1}) > 0$. |
| Composition | All $x_{t-1} \subseteq v_{t-1}$ may have actual effects and be actual causes, and all $y_t \subseteq v_t$ may have actual causes and be actual effects. |
| Information | Occurrences must increase the probability of their causes or effects ($\rho(x_{t-1}, y_t) > 0$). |
| Integration | Moreover, they must do so above and beyond their parts ($\alpha(x_{t-1}, y_t) > 0$). |
| Exclusion | An occurrence has only one actual cause (or effect), and it is the occurrence that maximizes $\alpha_c$ (or $\alpha_e$). |

Having established the above causal principles, we now formally define the actual cause and the actual effect of an occurrence within a transition $v_{t-1} \prec v_t$ of the dynamical causal network $G_u$:

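Definition 1. Within a transition $v_{t-1} \prec v_t$ of a dynamical causal network $G_u$, the actual cause of an occurrence $y_t \subseteq v_t$ is an occurrence $x_{t-1} \subseteq v_{t-1}$ which satisfies the following conditions:

1. The integrated cause information of $y_t$ over $x_{t-1}$ is maximal: $\alpha_c(x_{t-1}, y_t) = \alpha_c^{\max}(y_t)$;
2. No subset of $x_{t-1}$ satisfies condition (1): $\alpha_c(x'_{t-1}, y_t) \neq \alpha_c^{\max}(y_t)$ for all $x'_{t-1} \subset x_{t-1}$.

Define the set of all occurrences that satisfy the above conditions as $x^{*}(y_t)$. As an occurrence can have, at most, one actual cause, there are three potential outcomes:

1. If $x^{*}(y_t) = \{x_{t-1}\}$, then $x_{t-1}$ is the actual cause of $y_t$;
2. if $|x^{*}(y_t)| > 1$, then the actual cause of $y_t$ is indeterminate; and
3. if $x^{*}(y_t) = \{\varnothing\}$, then $y_t$ has no actual cause.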

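Definition 2. Within a transition $v_{t-1} \prec v_t$ of a dynamical causal network $G_u$, the actual effect of an occurrence $x_{t-1} \subseteq v_{t-1}$ is an occurrence $y_t \subseteq v_t$ which satisfies the following conditions:

1. The integrated effect information of $x_{t-1}$ over $y_t$ is maximal: $\alpha_e(x_{t-1}, y_t) = \alpha_e^{\max}(x_{t-1})$;
2. No subset of $y_t$ satisfies condition (1): $\alpha_e(x_{t-1}, y'_t) \neq \alpha_e^{\max}(x_{t-1})$ for all $y'_t \subset y_t$.

Define the set of all occurrences that satisfy the above conditions as $y^{*}(x_{t-1})$. As an occurrence can have, at most, one actual effect, there are three potential outcomes:

1. If $y^{*}(x_{t-1}) = \{y_t\}$, then $y_t$ is the actual effect of $x_{t-1}$;
2. if $|y^{*}(x_{t-1})| > 1$, then the actual effect of $x_{t-1}$ is indeterminate; and
3. if $y^{*}(x_{t-1}) = \{\varnothing\}$, then $x_{t-1}$ has no actual effect.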

Based on Definitions 1 and 2:

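Definition 3. Within a transition $v_{t-1} \prec v_t$ of a dynamical causal network $G_u$, a causal link is an occurrence $x_{t-1} \subseteq v_{t-1}$ with $\alpha_e^{\max}(x_{t-1}) > 0$ and actual effect $y^{*}(x_{t-1})$,

$$x_{t-1} \rightarrow y^{*}(x_{t-1}),$$

or an occurrence $y_t \subseteq v_t$ with $\alpha_c^{\max}(y_t) > 0$ and actual cause $x^{*}(y_t)$,

$$x^{*}(y_t) \leftarrow y_t.$$

An integrated occurrence defines a single causal link, regardless of whether the actual cause (or effect) is unique or indeterminate. When the actual cause (or effect) is unique, we sometimes refer to the actual cause (or effect) explicitly in the causal link, $x_{t-1} \rightarrow y_t$ (or $x_{t-1} \leftarrow y_t$). The strength of a causal link is determined by its $\alpha_e^{\max}(x_{t-1})$ or $\alpha_c^{\max}(y_t)$ value. Reducible occurrences ($\alpha^{\max} = 0$) cannot form a causal link.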

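Definition 4. For a transition $v_{t-1} \prec v_t$ of a dynamical causal network $G_u$, the causal account, $\mathcal{A}(v_{t-1} \prec v_t)$, is the set of all causal links $x_{t-1} \rightarrow y^{*}(x_{t-1})$ and $x^{*}(y_t) \leftarrow y_t$ within the transition.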
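For a small network, the causal account can be enumerated by brute force. The following self-contained sketch assumes a hypothetical OR/AND network (node 0 = OR, node 1 = AND) and the transition $(\mathrm{OR}, \mathrm{AND}) = 10 \prec 10$. It follows Definitions 1 to 3, includes the empty set as a candidate, and prints one link per irreducible occurrence. The helper names, the tolerance `TOL`, and the rule that an unsplit occurrence must be fully cut are our assumptions; the approach is only practical for a handful of nodes.

```python
from itertools import combinations, product
from math import log2, prod

# Hypothetical OR/AND network and transition (assumptions for illustration):
# node 0 = OR, node 1 = AND; the transition is (OR, AND) = 10 -> 10.
MECH = (lambda s: float(s[0] or s[1]), lambda s: float(s[0] and s[1]))
ALL = list(product((0, 1), repeat=len(MECH)))
V_PREV, V_NOW = {0: 1, 1: 0}, {0: 1, 1: 0}
TOL = 1e-9  # tolerance for treating alpha values as equal (our choice)


def p_node(i, v, s):
    return MECH[i](s) if v else 1.0 - MECH[i](s)


def consistent(occ):
    return [s for s in ALL if all(s[j] == v for j, v in occ.items())]


def eff_rep(y, x):  # Eqs. (2), (3), (6)
    states = consistent(x)
    return prod(sum(p_node(i, v, s) for s in states) / len(states) for i, v in y.items())


def cause_rep(x, y):  # Eqs. (4), (5)
    def unnorm(xs):
        return prod(sum(p_node(i, v, s) for s in consistent(xs))
                    / sum(p_node(i, v, s) for s in ALL) for i, v in y.items())
    keys = sorted(x)
    return unnorm(x) / sum(unnorm(dict(zip(keys, vals)))
                           for vals in product((0, 1), repeat=len(keys)))


def set_partitions(items):
    if not items:
        yield []
        return
    for blocks in set_partitions(items[1:]):
        for k in range(len(blocks)):
            yield blocks[:k] + [[items[0]] + blocks[k]] + blocks[k + 1:]
        yield [[items[0]]] + blocks


def partitions(occ, targets):  # Eqs. (7)/(9); unsplit => fully cut (assumption)
    for blocks in set_partitions(sorted(occ)):
        for assign in product(range(-1, len(blocks)), repeat=len(targets)):
            if len(blocks) > 1 or 0 not in assign:
                yield ([(b, [t for t, a in zip(targets, assign) if a == j])
                        for j, b in enumerate(blocks)],
                       [t for t, a in zip(targets, assign) if a == -1])


def sub(d, keys):
    return {k: d[k] for k in keys}


def alpha(rep, target, occ):  # Eqs. (15)-(18): MIP = largest partitioned prob.
    full = rep(target, occ)
    if full == 0:
        return float("-inf")
    best = max(rep(sub(target, rest), {}) * prod(rep(sub(target, t), sub(occ, o)) for o, t in parts)
               for parts, rest in partitions(occ, sorted(target)))
    return log2(full / best)


def subsets(state):
    keys = sorted(state)
    return [sub(state, c) for r in range(len(keys) + 1) for c in combinations(keys, r)]


def actual(rep, occ, candidates):
    """Eqs. (19)/(20) plus minimality: alpha_max and the set x* (or y*)."""
    scores = [(c, alpha(rep, c, occ)) for c in candidates]  # empty set included
    a_max = max(a for _, a in scores)
    top = [c for c, a in scores if abs(a - a_max) < TOL]
    return a_max, [c for c in top if not any(d.items() < c.items() for d in top)]


def causal_account():
    """Definitions 3 and 4: one link per irreducible occurrence."""
    links = []
    for x in subsets(V_PREV)[1:]:  # non-empty occurrences at t-1
        a, effects = actual(eff_rep, x, subsets(V_NOW))
        if a > TOL:
            links.append(("effect", x, effects, round(a, 3)))
    for y in subsets(V_NOW)[1:]:  # non-empty occurrences at t
        a, causes = actual(cause_rep, y, subsets(V_PREV))
        if a > TOL:
            links.append(("cause", y, causes, round(a, 3)))
    return links


for link in causal_account():
    print(link)
```

Under these assumptions, the sketch reports first-order links in both directions for the OR and AND nodes (0.415 bits each), a single second-order cause link for $(\mathrm{OR}, \mathrm{AND})_t = 10$ (0.17 bits), and no second-order effect link, since that occurrence is reducible.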

Under this definition, all actual causes and actual effects contribute to the causal account. Notably, the fact that there is a causal link $x_{t-1} \rightarrow y_t$ does not necessarily imply that the reverse causal link $x_{t-1} \leftarrow y_t$ is also present, and vice versa. In other words, just because $y_t$ is the actual effect of $x_{t-1}$, the occurrence $x_{t-1}$ does not have to be the actual cause of $y_t$. It is, therefore, not redundant to include both directions in the causal account, as illustrated by the examples of over-determination and prevention in the Results section (see, also, Discussion 4.2).

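Figure 6 shows the entire causal account of our example transition. Intuitively, in this simple example, $\{\mathrm{OR}_{t-1} = 1\}$ has the actual effect $\{\mathrm{OR}_t = 1\}$ and is also the actual cause of $\{\mathrm{OR}_t = 1\}$, and the same holds for $\{\mathrm{AND}_{t-1} = 0\}$ and $\{\mathrm{AND}_t = 0\}$. Nevertheless, there is also a causal link between the second-order occurrence $\{(\mathrm{OR}, \mathrm{AND})_t = 10\}$ and its actual cause $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\}$, which is irreducible to its parts, as shown in Figure 5D (right). However, there is no complementary link from $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\}$ to $\{(\mathrm{OR}, \mathrm{AND})_t = 10\}$, as it is reducible (Figure 5C, right). The causal account, shown in Figure 6, provides a complete causal explanation for “what happened” and “what caused what” in the transition $\{(\mathrm{OR}, \mathrm{AND})_{t-1} = 10\} \prec \{(\mathrm{OR}, \mathrm{AND})_t = 10\}$.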

Similar to the notion of system-level integration in IIT [25,26], the principle of integration can also be applied to the causal account as a whole, not only to individual causal links (see Appendix A). In this way, it is possible to evaluate, and quantify, to what extent the transition $v_{t-1} \prec v_t$ is irreducible to its parts.

In summary, the measures defined in this section provide the means to exhaustively assess “what caused what” in a transition $v_{t-1} \prec v_t$, and to evaluate the strength of specific causal links of interest under a particular set of background conditions, $U = u$.