1. Introduction

Cyberspace is growing rapidly as a primary medium for node-to-node information transfer, with all its charms and challenges. It gives access to a vast amount of information and resources across the globe. In 2017, the internet usage rate was 48% globally; it later increased to 81% for developing countries [1]. Cyberspace encompasses not just the internet but also its users, system resources, the technical skills of the participants, and much more. It also exposes these to countless vulnerabilities to cyber threats and attacks. Cybersecurity is a set of techniques, devices, and methods used to defend cyberspace against cyber-attacks and cyber threats [2]. In the modern world of computing and information technology, cybercrime is growing faster than current cybersecurity systems can keep up with. Weak system configuration, unskilled staff, and a scarcity of techniques are some of the factors that make a computer system vulnerable to threats [3]. Because of the growing cyber threats, more headway needs to be made in developing cybersecurity methods. Conventional cybersecurity methods have a substantial downside: they are ineffective against unknown and polymorphic attacks. There is a need for robust, advanced security methods that can learn from experience and detect both previously seen and new, unknown attacks. As cyber threats increase significantly, it is becoming very challenging to keep pace with them and to provide the solutions needed to prevent them [4].

Machine learning: Machine learning is one of the most widely used advanced methods for cybercrime detection. Machine learning techniques can be applied to address the limitations and constraints of conventional detection methods [5]. Researchers have addressed the advancements, limitations, and constraints of applying machine learning techniques to cyberattack detection and have compared conventional methods with machine learning techniques. Machine learning (ML) is a sub-field of artificial intelligence. ML techniques are able to learn from experience and data without being explicitly programmed [6]. Applications of ML techniques are expanding into different areas of life, such as education [7,8], medicine [9,10,11], business, and cybersecurity [12,13,14]. Machine learning techniques play a role on both sides of the net, i.e., the attacker side and the defender side. On the attacker side, ML techniques are employed to get through the defense wall. On the defender side, in contrast, ML techniques are applied to create prompt and robust defense strategies.

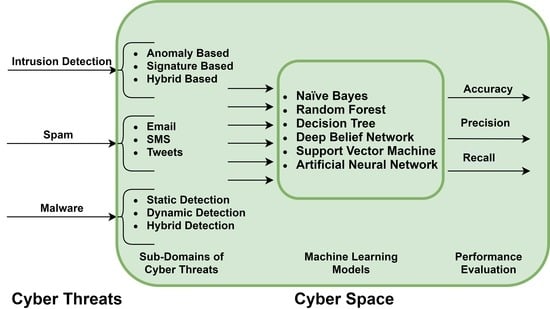

Cyber threats: Machine learning techniques play a vital role in fighting cybersecurity threats and attacks, in areas such as intrusion detection [15,16], malware detection [17], phishing detection [18,19], spam detection [20,21], and fraud detection [22], to name a few. This review focuses on malware detection, intrusion detection, and spam classification. Malware is a set of instructions designed with malicious intent to disrupt the normal flow of computer activities. Malicious code runs on a targeted machine with the intent to harm and to compromise the integrity, confidentiality, and availability of computer resources and services [23]. Saad et al. [24] discussed the main critical problems in applying machine learning techniques to malware detection. They argued that machine learning techniques are able to detect polymorphic and new attacks and will take the lead over conventional detection methods in the future. Training methods for malware detection should be cost-effective, and malware analysts should be able to understand ML malware detection methods at an expert level. Ambalavanan et al. [25] described some strategies to detect cyber threats efficiently. One critical downside of security systems is that the security reliability level of computing resources is generally determined by the ordinary user, who does not possess technical knowledge about security.

Another threat to computer resources is spam. Spam messages are unwanted, unsolicited messages that consume considerable network resources as well as computer memory and processing time. ML techniques are employed to classify a message as spam or ham (legitimate). They have contributed significantly to detecting spam messages on computers [26,27], SMS spam on mobile phones [28], spam tweets [29], and spam images or video [30,31].
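To make the spam-versus-ham decision concrete, the following is a minimal sketch of a naïve Bayes text classifier with add-one smoothing, written in plain Python. It is only an illustration and is not the implementation used in any of the cited studies: the function names `train_naive_bayes` and `classify` and the four toy training messages are assumptions made for this example.

```python
import math
from collections import Counter

def train_naive_bayes(messages):
    """Count words per class. `messages` is a list of (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in messages:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab

def classify(text, model):
    """Return the label ('spam' or 'ham') with the higher log-probability."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Log prior: fraction of training messages with this label.
        score = math.log(doc_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocab:
                continue  # ignore words never seen during training
            # Add-one (Laplace) smoothing avoids zero probabilities.
            score += math.log(
                (word_counts[label][word] + 1) / (total_words + len(vocab))
            )
        scores[label] = score
    return max(scores, key=scores.get)

# Toy, made-up training messages (hypothetical data for illustration only).
TRAINING = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting at noon tomorrow", "ham"),
    ("lunch tomorrow with the team", "ham"),
]

model = train_naive_bayes(TRAINING)
print(classify("claim free money", model))
print(classify("team meeting tomorrow", model))
```

Real spam filters rely on far larger corpora and richer features; the sketch only shows the shape of the classification step.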

An intrusion detection system (IDS) protects computer networks against malicious intrusions by scanning for network vulnerabilities. Intrusion detection systems for network analysis fall into three major classes: signature-based, anomaly-based, and hybrid. ML techniques have contributed substantially to detecting different types of intrusions on networks and host computers. However, several areas, such as the detection of zero-day and new attacks, remain significant challenges for ML techniques [32].

Threats to validity: For this review, we included studies that (1) deal with any one of the six machine learning models in cybersecurity, (2) target cyber threats including intrusion detection, spam detection, and malware detection, and (3) discuss performance evaluation in terms of accuracy, recall, or precision. We used multiple combinations of search strings, such as ‘machine learning and cyber security’ and ‘machine learning and cybersecurity’, to retrieve peer-reviewed journal articles, conference proceedings, book chapters, and reports. We targeted six databases, namely Scopus, ACM Digital Library, IEEE Xplore, ScienceDirect, SpringerLink, and Web of Science. Google Scholar was also used for forward and backward search. We focused on advances from the last ten years. In total, 2852 documents were retrieved, and 1764 duplicate items were removed. Titles and abstracts were screened to identify potential articles. The full text of 361 studies was assessed for relevance against the inclusion criteria. We excluded articles that discussed (1) cyber threats other than intrusion detection, spam detection, and malware detection, (2) threats to cyber-physical systems, and (3) threats to cloud security, IoT devices, smart grids, smart cities, and satellite and wireless communication. Backward and forward search retrieved 19 more studies. In total, 143 studies were finally selected for data extraction.

Figure 1 depicts the process of article selection. The previous survey and review articles were used in addition to these included papers to provide a comprehensive performance evaluation.

Xin et al. [33] reviewed the critical challenges faced by machine learning techniques in network intrusion detection systems, along with their solutions. Each ML technique has its pros and cons, and no technique can be declared the best with no limitations. One of the biggest challenges is that data collection is a lengthy and laborious procedure. Most publicly available datasets are outdated or have missing or redundant values [33]. In contrast, this paper covers other cybersecurity threats and the evaluation of ML models in those areas.

Gandotra et al. [34] classified malware analysis as static, dynamic, or hybrid. They also reviewed several research papers that applied machine learning techniques to detect malware. However, they targeted only one cyber threat, i.e., malware. Moreover, critical analysis and performance evaluation of machine learning techniques are missing, and there is no description of state-of-the-art malware datasets. In contrast, our paper targets several cyber threats and describes commonly used datasets. It also presents the performance evaluation of significant machine learning techniques on frequently used datasets.

Bhavna et al. [35] reviewed several papers applying machine learning techniques to detect cyber threats. However, they focused mainly on intrusion detection. They also did not provide a performance evaluation of machine learning techniques or a description of benchmark datasets.

Ford et al. [36] presented a survey on the application of machine learning techniques in cybersecurity. The survey addressed the crucial challenges in applying machine learning techniques in cybersecurity. ML techniques are fighting cyberattacks and threats efficiently. However, machine learning classifiers are themselves exposed to various cyber and adversarial attacks, and an immense amount of work is needed to improve the safety of ML against adversarial cyberattacks. Jiang et al. [37] examined publications on using machine learning techniques in cybersecurity from 2008 to early 2016. The authors also noted that, despite the growing role of machine learning techniques in cybersecurity, selecting an appropriate machine learning technique for a specific underlying safety problem remains a challenging matter of grave concern.

Hodo et al. [38] assessed the performance of machine learning techniques in anomaly detection and measured the usefulness of feature selection in ML-based IDS. They claimed that although a convolutional neural network (CNN) classifier could serve as a satisfactory classifier in cybersecurity, it has not yet been used to its full potential. Moreover, machine learning models are unable to detect attacks adequately because of missing and incorrect signatures in the signature list of an intrusion detection system. Further work is also needed to explore knowledge-based and behavior-based approaches.

Apruzzese et al. [39] presented an analysis of machine learning techniques in cybersecurity for detecting spam, malware, and intrusions. They asserted that machine learning techniques are vulnerable to cyber threats and that all the methods still struggle to overcome their limitations and obstacles. The biggest challenge is that the same classifier is mostly used for different kinds of safety problems; a suitable classifier needs to be found for each particular safety issue. They also emphasized that all the shortcomings of machine learning techniques should be treated as a matter of deep concern, as cyber attackers are leveraging all their resources.

The communication technologies used by smart grids are leading to cybersecurity deficits. Yin et al. [40] developed a method to gauge the vulnerable areas of the Distributed Network Protocol 3 (DNP3) in IoT-based smart grid and SCADA systems. The resulting vulnerability measures were used to develop an attack model for the data link layer, transport layer, and application layer. Furthermore, they developed two algorithms that apply machine learning techniques to transform the data. The authors showed experimentally that the proposed system classified intrusive fields with detailed information about the DNP3 protocol. Peter et al. [41] discussed three types of malware and the central measures that are crucially needed to overcome security threats. They suggested that cybercrime can be reduced by continuously updating the cybersecurity policy, decreasing the reaction time, and applying robust segmentation. Ndibanje et al. [42] presented a classification method for obscure malware detection that uses API calls as malicious code. They applied similarity-based machine learning algorithms for feature extraction and claimed effective results for obscure detection methods.

Torres et al. [43] discussed the use of machine learning classification techniques in cybersecurity. They reviewed different alternatives for using machine learning models to reduce the error rate in intrusion and attack detection. In contrast, this paper describes the significant challenges and considers several other threats to cybersecurity. Ucci et al. [44] focused on malware detection using key machine learning techniques and analyzed it in terms of the feature extraction process. They also emphasized the urgent need to update the datasets currently in use, as most publicly available datasets are outdated. In contrast, this paper provides an overview of commonly used ML models, their complexities, and evaluation criteria based on several datasets in multiple cyber domains.

Table 1 compares this paper with the existing survey and review papers. Most of the review papers have not presented a comprehensive review of significant cyber threats. Moreover, none of the papers provided a performance evaluation of well-known machine learning techniques. We have not only provided the performance evaluation but also compared the techniques on benchmark datasets. Our comparisons in Tables 4–9 show the performance of each machine learning technique in detecting significant cyber threats on frequently used datasets. We have also described the current challenges of using machine learning techniques in cybersecurity, which open new horizons for future research in this direction.

Contributions: In this review paper, we build upon the existing literature on applications of ML models in cybersecurity and provide a comprehensive review of ML techniques in this domain. The significant contributions of this study are as follows:

- (1) To the best of our knowledge, we have made the first attempt to provide a comparison of the time complexity of commonly used ML models in cybersecurity. We have also described the critical limitations of each ML model.
- (2) Unlike other review papers, we have reviewed applications of ML models to three common cyber threats: intrusion detection, spam detection, and malware detection.
- (3) We have comprehensively compared each classifier's performance based on frequently used datasets.
- (4) We have listed the critical challenges of using machine learning techniques in the cybersecurity domain.

This review paper is organized as follows:

Section 2 describes an overview of cybersecurity threats, commonly used security datasets, basics of machine learning, and evaluation criteria to evaluate the performance of any classifier.

Section 3 provides a comprehensive comparison of frequently used ML classifiers based on different cyber threats and datasets.

Section 4 concludes this study and points out the critical challenges of ML models in cybersecurity.

4. Discussion and Conclusions

Machine learning techniques have become an integral part of the modern cyber world, particularly for cybersecurity. They are being applied on both sides, i.e., the attacker side and the defender side. On the attacker side, machine learning techniques are used to find new ways to evade security systems and firewalls. On the defender side, these techniques help security professionals protect systems from illegal penetration and unauthorized access. This paper presents a comparative analysis of machine learning techniques applied to detect cybersecurity threats. We have considered three significant threat areas in cyberspace: intrusion detection, spam detection, and malware detection. We have compared six machine learning models, namely random forest, support vector machine, naïve Bayes, decision tree, artificial neural network, and deep belief network. We have further compared these models on the sub-domains of each cyber threat, which differ from threat to threat. For intrusion detection, the sub-domains are anomaly-based, signature-based, and hybrid detection. For malware detection, they are static, dynamic, and hybrid detection. For spam, the sub-domains are the media on which the models are applied, such as images, videos, emails, SMS, or calls.

Section 2 described each threat sub-domain in detail. This section is divided into two parts. The first part discusses the performance of various ML models applied in cybersecurity. The second part presents the challenges of using machine learning models in cybersecurity and concludes the study.

4.1. Performance Evaluation of ML Models

Figure 2 compares the performance of six machine learning techniques on frequently used datasets for intrusion detection. For each dataset, we took from the tables the maximum reported values for accuracy, precision, and recall. SVM achieved an outstanding accuracy of nearly 98% on the KDD dataset, whereas the highest accuracy reported for SVM on the NSL-KDD dataset was 83%. DBN performed outstandingly on nearly all datasets, with an accuracy above 95% for intrusion detection. On the DARPA dataset, NB and ANN achieved better accuracy than the other models, but ANN gave a worse precision value. On the NSL-KDD dataset, DBN performed best among the models with respect to accuracy, precision, and recall. SVM and DBN achieved excellent precision on the KDD Cup 99 dataset compared with all other models. On the KDD dataset, decision tree and random forest showed excellent precision among all the models. The best recall rates were shown by random forest on KDD, NB on DARPA, DBN on NSL-KDD, and NB on KDD Cup 99.

Figure 3 shows the performance of six machine learning techniques on frequently used datasets for malware detection. We observed that few benchmark datasets are available for malware detection. Researchers mostly collected their own custom datasets and evaluated machine learning models on them. We also noticed that machine learning techniques often show outstanding accuracy, precision, and recall on a custom dataset but do not perform as well when applied to other datasets. Classical machine learning techniques, e.g., decision tree, performed better on several datasets. DBN showed outstanding recall on almost all datasets. DT and RF achieved better precision on the VirusShare dataset. RF showed excellent recall, precision, and accuracy on the Enron dataset, whereas ANN showed the worst accuracy, recall, and precision on it. With respect to accuracy, NB and DT performed excellently on the VirusShare dataset compared with the other models.

Figure 4 shows the performance of machine learning techniques on frequently used datasets for spam classification. On Spambase, a well-known spam dataset, NB performed better than the other machine learning models with respect to accuracy, precision, and recall. Researchers have also collected a dataset from Twitter containing millions of tweets, on which RF outperformed the other models with a reported precision above 97%. All evaluated machine learning models showed more than 90% accuracy in detecting and classifying spam. SVM and DBN showed better accuracy, recall, and precision than the other models when applied to the SMS collection to classify spam text messages. Note that the SMS collection is a custom dataset collected by the researchers. On it, every machine learning model achieved more than 95% accuracy, precision, and recall, whereas the same models show different performance on standard datasets, i.e., Enron and Spambase.

Figure 5 shows a comparative analysis of accuracy, precision, and recall for the detection of intrusion, spam, and malware. For each of the six machine learning models, we took the maximum value obtained regardless of the dataset. SVM, DT, and RF gave the maximum accuracy and precision for intrusion detection, whereas DBN and ANN reported the best recall. SVM, DT, NB, and RF should therefore be considered for intrusion detection when accuracy is the priority. DBN and ANN performed comparatively worse than the other models in detecting malware, although ANN showed exceptional recall. RF and NB showed better accuracy for spam classification, yet DBN is again recommended when precision and recall are the priority. In view of the metrics collected from the reviewed papers, RF and NB are recommended for spam classification, and SVM and DT for malware detection, when accuracy is the priority.
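The aggregation described here, taking each model's maximum reported value for a threat regardless of dataset, can be sketched as follows. The rows in `RESULTS`, the dataset labels, and the helper names `best_per_model` and `top_models` are hypothetical placeholders chosen for illustration; they are not values from Tables 4–9.

```python
from collections import defaultdict

METRICS = ("accuracy", "precision", "recall")

# Hypothetical rows: (threat, model, dataset, accuracy %, precision %, recall %).
RESULTS = [
    ("intrusion", "SVM", "dataset-A", 91.0, 88.0, 85.0),
    ("intrusion", "SVM", "dataset-B", 83.0, 90.0, 80.0),
    ("intrusion", "DBN", "dataset-A", 89.0, 86.0, 93.0),
    ("spam", "RF", "dataset-C", 95.0, 97.0, 92.0),
    ("spam", "NB", "dataset-C", 96.0, 94.0, 93.0),
]

def best_per_model(rows):
    """Keep, per (threat, model), the maximum of each metric over all datasets."""
    best = defaultdict(lambda: dict.fromkeys(METRICS, 0.0))
    for threat, model, _dataset, *values in rows:
        entry = best[(threat, model)]
        for metric, value in zip(METRICS, values):
            entry[metric] = max(entry[metric], value)
    return dict(best)

def top_models(best, threat, metric):
    """Return the model(s) with the highest value of `metric` for one threat."""
    scores = {m: vals[metric] for (t, m), vals in best.items() if t == threat}
    top = max(scores.values())
    return sorted(m for m, score in scores.items() if score == top)

best = best_per_model(RESULTS)
print(best[("intrusion", "SVM")])
print(top_models(best, "intrusion", "recall"))
print(top_models(best, "spam", "accuracy"))
```

Because each metric is maximized independently, the accuracy, precision, and recall reported for one model may come from different datasets, which is worth keeping in mind when reading such a summary.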

4.2. Challenges of Using ML Models in Cybersecurity and Future Directions

The application of machine learning techniques to detect cyber threats has shown better results than conventional methods. Despite these improvements, machine learning techniques still face many challenges in the cybersecurity domain. We have presented a comparison of machine learning techniques based on frequently used datasets. The unavailability of up-to-date benchmark datasets for training machine learning models is a big challenge. Another troubling trend is that the same technique produces different results on the same dataset for the same sub-domain. In Table 4, SVM is applied to detect anomaly-based intrusion by [92,93], but with a difference of 10% in accuracy. The same can be seen for [98,99] in Table 4, [116,117] in Table 5, [151,160] in Table 8, and [86,103] in Table 9, to name a few.

This inconsistency in accuracy may be due to the choice of different feature extraction methods or of different data for training and testing. However, the discrepancy creates confusion when selecting a suitable classifier for a particular problem. In addition, the available datasets need to be cleaned by removing redundant, noisy, missing, and unbalanced data. These datasets should be kept up to date with modern and sophisticated attacks, and missing values should be detected and removed.
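As a rough illustration of the dataset clean-up mentioned above, the sketch below drops rows with missing values and exact duplicates and then reports the class balance. The record layout, the sample rows in `RAW`, and the function names are assumptions made for this example; real security datasets need more careful handling, for instance of noisy or near-duplicate records.

```python
from collections import Counter

def clean_records(records):
    """Drop rows that contain missing values, then drop exact duplicates."""
    seen = set()
    cleaned = []
    for row in records:
        if any(value is None or value == "" for value in row):
            continue  # skip rows with a missing field
        key = tuple(row)
        if key in seen:
            continue  # skip redundant (duplicate) rows
        seen.add(key)
        cleaned.append(key)
    return cleaned

def class_balance(records, label_index=-1):
    """Return the share of each class label, to expose unbalanced data."""
    counts = Counter(row[label_index] for row in records)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}

# Hypothetical records: (duration, protocol, label).
RAW = [
    (0.2, "tcp", "normal"),
    (0.2, "tcp", "normal"),  # exact duplicate
    (1.5, None, "attack"),   # missing protocol
    (3.1, "udp", "attack"),
    (0.4, "tcp", "normal"),
]

cleaned = clean_records(RAW)
balance = class_balance(cleaned)
print(len(cleaned), balance)
```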

The speed of detecting a particular cyber threat and of taking prompt action is a critical challenge for machine learning models, and this is where the time complexity of a model matters. Faster, robust models can detect a cyber threat early and stop it before it causes problems for the network and system. To achieve a better detection rate and prompt action, models with lower time complexity are therefore suggested. Generally, models with linear complexity, i.e., O(n), or logarithmic complexity are considered best. The choice of machine learning model will vary from case to case. The time complexity of each model was obtained through a rigorous literature review and web search. We have provided the time complexity of significant machine learning models used in cybersecurity in Table 2. Efficient models are essential for achieving the best detection rate and a quick response in some scenarios, such as the military. If a model's detection is slow, attackers can evade it and harm the system before any preventive measures are taken.
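To give a feel for why lower time complexity matters for prompt detection, the short sketch below tabulates how the number of abstract steps grows with input size n for a few common complexity classes. The classes and input sizes are illustrative assumptions only; they are not the per-model complexities of Table 2, and real running time also depends on constant factors and hardware.

```python
import math

def growth_table(sizes):
    """For each input size n, return the step counts implied by each class."""
    table = {}
    for n in sizes:
        table[n] = {
            "O(log n)": math.log2(n),
            "O(n)": float(n),
            "O(n log n)": n * math.log2(n),
            "O(n^2)": float(n ** 2),
        }
    return table

# Two illustrative input sizes: about one thousand and one million samples.
table = growth_table([1_024, 1_048_576])
for n, row in table.items():
    print(n, {name: f"{steps:.3g}" for name, steps in row.items()})
```

When n grows by a factor of 1024 in this example, the linear count grows by the same factor, while the quadratic count grows by that factor squared.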

Deep learning can handle data without human interaction; however, it still has several limitations. Compared with classical machine learning techniques, deep learning techniques need an enormous amount of data and costly hardware to produce better results. Processing larger datasets requires a substantial amount of time and power. Training a model calls for high-performance computing hardware, such as GPUs, and parallel processing to speed up learning and classification. The growing volume of unlabeled, sparse, and missing data also affects the training process. High computational efficiency is needed when maximum throughput must be achieved with limited resources.

In some scenarios, a high level of correctness matters more than the speed and responsiveness of prediction. Trustworthiness is essential when machine learning techniques are applied in life-critical or mission-critical applications such as self-driving cars, where correct image classification is critical for reading a traffic sign. Likewise, predictions cannot be accepted with blind faith where robots perform treatment and surgery on cancer patients.

We have also observed that, even in 2020, researchers are applying and testing the latest machine learning and deep learning techniques on outdated datasets. It is apparent from the year column of Tables 4–9 that the latest machine learning techniques are being tested on the DARPA and KDD Cup datasets, which are more than 15 years old. There is a need for up-to-date, benchmark, real-time datasets to evaluate the latest machine learning and deep learning models. Benchmark datasets such as DARPA and KDD Cup exist for intrusion detection. However, we found a shortage of state-of-the-art datasets for spam and malware detection. Researchers apply the latest machine learning techniques to their own custom datasets and claim better accuracy for their models without disclosing the datasets and code needed to reproduce the results. Custom datasets are often collected in a particular fashion that lacks diversity, and the proposed models perform well on them. However, when the same models are tested on a different dataset from a similar problem domain, they do not achieve the results that the authors claimed on their custom datasets.

Furthermore, researchers publish the performance evaluation of their proposed models using different metrics. Some publish recall, while others focus only on accuracy. It can be observed from Tables 4–9 that most researchers have published the accuracy of their model and left out other metrics. A false negative describes the case in which an illegitimate user has been granted access to a system or network. Such a case can have a drastically worse effect on the system than the accuracy figure alone suggests. There should be standardized metrics so that models can be compared on the same measurements. This would be a milestone for future research to improve the performance of models.
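The gap between accuracy and the other metrics can be illustrated with a small, made-up example. The sketch below computes accuracy, precision, and recall from a confusion matrix using their standard definitions. The labels are hypothetical: 100 samples, 5 of which are attacks, and a detector that catches only one of them.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives; 1 = attack, 0 = benign."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 0 and pred == 0:
            tn += 1
        else:
            fn += 1  # attack that the detector missed
    return tp, fp, tn, fn

def metrics(y_true, y_pred):
    """Return accuracy, precision, and recall as fractions in [0, 1]."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Hypothetical imbalanced test set: 5 attacks followed by 95 benign samples.
y_true = [1] * 5 + [0] * 95
# The detector flags only the first attack and misses the other four.
y_pred = [1] + [0] * 4 + [0] * 95

result = metrics(y_true, y_pred)
print(result)
```

In this example the detector is correct on 96 of 100 samples and never raises a false alarm, yet it catches only one attack in five, which is why reporting accuracy alone can hide a high false-negative rate.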

Further, robust machine learning models are needed to handle adversarial inputs. There should be an emphasis on training models in adversarial settings to make them robust against such inputs. We have reviewed six machine learning models on several datasets for detecting cyber threats, and we encourage beginners in this domain to delve into the extensive bibliography presented in this review paper. In future work, we will analyze more ML and DL techniques against several other cybersecurity threats. We will evaluate ML models in other areas of cybersecurity, such as IoT, smart cities, methods based on API calls, cellular networks, and smart grids.