An Ultra-Short-Term Electrical Load Forecasting Method Based on Temperature-Factor-Weight and LSTM Model

Abstract

1. Introduction

2. Long Short-Term Memory Artificial Neural Networks

- Forget gate: The forget gate computes the forgetting coefficient $f_t$, which determines how much of the long-term memory $C_{t-1}$ of the previous moment should be retained. It first concatenates the output of the previous time point, $h_{t-1}$, with the input of the current time point, $x_t$, into an input matrix $[h_{t-1}, x_t]$. The sigmoid activation function then outputs a real number in the range (0,1); a value approaching 1 means that all memories should be stored, while a value approaching 0 means that all memories should be forgotten:

  $$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

  Here, $\sigma$ is the sigmoid activation function, $W_f$ represents the weight matrix of the fully connected layer network, and $b_f$ indicates the bias matrix of the fully connected layer network; moreover, $f_t$ is the forgetting coefficient.

- Input gate: The input gate determines how much of the current input is saved to the long-term memory $C_t$:

  $$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

  $$\tilde{C}_t = \tanh\left(W_c \cdot [h_{t-1}, x_t] + b_c\right)$$

  $$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

  Here, $W_i$ and $b_i$ denote the weight and bias parameters, respectively, of the sigmoid function at the fully connected layer, while $W_c$ and $b_c$ are the weight and bias parameters, respectively, of the tanh function at the fully connected layer.

- Output gate: The intermediate parameter $o_t$ determines the extent to which the long-term memory $C_t$ affects the current cell output $h_t$:

  $$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

  $$h_t = o_t \odot \tanh\left(C_t\right)$$
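The three gates described above can be illustrated with a single time step of a standard LSTM cell. The following is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the function names `lstm_step` and `init_params`, the parameter keys (`W_f`, `b_f`, `W_i`, `b_i`, `W_c`, `b_c`, `W_o`, `b_o`), the random initialisation, and the sizes used in the usage lines are all hypothetical and chosen only for illustration.

```python
import numpy as np


def sigmoid(z):
    """Logistic function; maps real values into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One time step of a standard LSTM cell (illustrative names)."""
    # Concatenate the previous output and the current input.
    z = np.concatenate([h_prev, x_t])

    # Forget gate: how much of the previous long-term memory is retained.
    f_t = sigmoid(params["W_f"] @ z + params["b_f"])
    # Input gate and candidate memory: how much new information is stored.
    i_t = sigmoid(params["W_i"] @ z + params["b_i"])
    c_hat = np.tanh(params["W_c"] @ z + params["b_c"])
    # Updated long-term memory.
    c_t = f_t * c_prev + i_t * c_hat
    # Output gate: how strongly the long-term memory drives the cell output.
    o_t = sigmoid(params["W_o"] @ z + params["b_o"])
    h_t = o_t * np.tanh(c_t)
    return h_t, c_t


def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (an assumed initialisation)."""
    rng = np.random.default_rng(seed)
    params = {}
    for name in ("f", "i", "c", "o"):
        shape = (hidden_size, hidden_size + input_size)
        params[f"W_{name}"] = rng.normal(0.0, 0.1, shape)
        params[f"b_{name}"] = np.zeros(hidden_size)
    return params


if __name__ == "__main__":
    # Run the cell over a short random sequence (sizes are arbitrary).
    params = init_params(input_size=6, hidden_size=40)
    h, c = np.zeros(40), np.zeros(40)
    for x_t in np.random.default_rng(1).normal(size=(5, 6)):
        h, c = lstm_step(x_t, h, c, params)
    print(h.shape, c.shape)
```

Because the cell output is a product of a sigmoid and a tanh, every component of `h_t` stays strictly inside (-1, 1), whereas the long-term memory `c_t` is not bounded in the same way.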

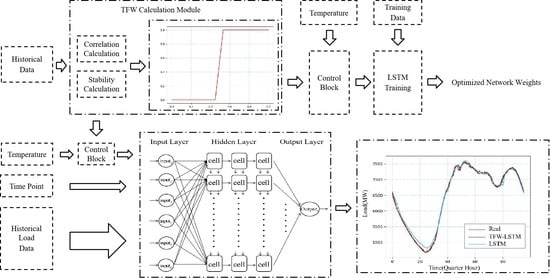

3. TFW-LSTM Method

3.1. Data Acquisition and Preprocessing

3.2. Construction of LSTM Model

3.3. TFW

| Algorithm 1 TFW Calculation Module. |

| Input: |

| Historical load data L; |

| Historical temperature data T; |

| Output: |

| TFW; |

3.4. Implementation of TFW-LSTM Method

3.4.1. Structure of TFW-LSTM Method

3.4.2. Experimental Configuration

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| USTLF | Ultra-short-term electrical load forecasting |

| TFW | Temperature factor weight |

| LSTM | Long short-term memory |

| MAPE | Mean absolute percentage error |

| BP | Back propagation |

| GM | Grey model |

| MLP | Multi-layer perceptron |

| TFW-LSTM | The abbreviated name of our proposed method |

| ACA | Adjacent cell average |

| TIC | Temperature influence coefficient |


| RNN-Unit | Input Size | Learning Rate | RNN-Hid Layer | Batch Size | Keep-Prob | MAPE% |

|---|---|---|---|---|---|---|

| 40 | 6 | 0.0006 | 3 | 96 | 1 | 0.516494 |

| 60 | 7 | 0.001 | 4 | 120 | 1 | 0.928832 |

| 40 | 7 | 0.0001 | 4 | 48 | 0.9 | 1.87681 |

| 80 | 5 | 0.001 | 3 | 96 | 1 | 1.065048 |

| 60 | 4 | 0.0006 | 5 | 96 | 1 | 0.586579 |

| Interval | TFW |

|---|---|

| Add Temperature Factor Interval | 100% |

| No Temperature Factor Interval | 0% |

| Fuzzy Endpoint |

| Date | MAPE% | MAE | RMSE | MAPE% | MAE | RMSE | |

|---|---|---|---|---|---|---|---|

| 12 January 2019 | 1.227 | 63.163 | 103.183 | 1.463 | 77.701 | 114.354 | 0.075 |

| 18 January 2019 | 1.153 | 56.607 | 85.589 | 1.630 | 83.032 | 127.219 | 0.102 |

| 24 January 2019 | 1.241 | 54.110 | 84.717 | 1.946 | 82.014 | 117.868 | 0.206 |

| 12 March 2019 | 1.246 | 43.658 | 65.677 | 1.357 | 47.994 | 74.596 | 0.162 |

| 17 March 2019 | 1.202 | 42.803 | 69.275 | 2.405 | 85.847 | 110.384 | 0.110 |

| 20 March 2019 | 0.790 | 27.635 | 38.617 | 1.981 | 63.316 | 84.662 | 0.039 |

| 28 March 2019 | 1.718 | 56.029 | 117.487 | 2.399 | 82.745 | 144.635 | 0.059 |

| 13 April 2019 | 1.525 | 49.922 | 71.111 | 1.968 | 65.622 | 93.890 | 0.099 |

| 20 April 2019 | 0.918 | 30.824 | 43.712 | 1.654 | 55.777 | 76.264 | 0.012 |

| 30 April 2019 | 1.096 | 35.621 | 50.803 | 1.619 | 52.045 | 73.962 | 0.046 |

| 4 May 2019 | 1.293 | 42.546 | 66.575 | 1.836 | 61.701 | 90.558 | 0.253 |

| 12 May 2019 | 1.118 | 36.729 | 54.485 | 1.597 | 51.431 | 70.312 | 0.126 |

| 23 May 2019 | 1.062 | 37.021 | 56.133 | 1.507 | 49.843 | 74.977 | 0.007 |

| 31 May 2019 | 1.192 | 39.722 | 61.448 | 1.821 | 57.460 | 74.052 | 0.137 |

| 12 June 2019 | 1.451 | 57.441 | 78.488 | 1.526 | 60.385 | 82.375 | 0.578 |

| 20 June 2019 | 1.119 | 55.520 | 87.815 | 1.572 | 80.519 | 119.348 | 0.284 |

| 30 June 2019 | 1.390 | 56.590 | 88.353 | 1.458 | 58.247 | 85.244 | 0.904 |

| 13 July 2019 | 1.564 | 57.286 | 80.040 | 1.583 | 60.550 | 94.416 | 0.637 |

| 20 July 2019 | 1.421 | 78.423 | 110.185 | 1.345 | 74.223 | 107.056 | 0.980 |

| 27 July 2019 | 1.025 | 66.570 | 97.223 | 0.957 | 61.890 | 88.563 | 0.594 |

| 31 July 2019 | 0.502 | 32.783 | 42.402 | 1.159 | 68.649 | 86.243 | 0.533 |

| 6 August 2019 | 0.638 | 42.122 | 61.446 | 0.766 | 48.252 | 65.227 | 0.209 |

| 10 August 2019 | 0.604 | 36.360 | 52.501 | 0.836 | 49.441 | 65.596 | 0.236 |

| 20 August 2019 | 0.726 | 46.776 | 66.012 | 0.882 | 55.004 | 75.880 | 0.281 |

| 31 August 2019 | 1.150 | 51.518 | 67.147 | 1.194 | 77.397 | 94.842 | 0.699 |

| 8 September 2019 | 1.059 | 55.734 | 89.783 | 1.145 | 61.295 | 92.147 | 0.540 |

| 24 September 2019 | 1.313 | 52.112 | 78.539 | 1.510 | 56.266 | 85.089 | 0.450 |

| 28 September 2019 | 1.117 | 42.166 | 59.831 | 1.257 | 51.505 | 75.338 | 0.048 |

| 3 October 2019 | 1.247 | 46.173 | 65.438 | 2.061 | 69.810 | 88.587 | 0.196 |

| 13 October 2019 | 1.215 | 43.057 | 66.225 | 1.926 | 66.577 | 89.706 | 0.336 |

| 19 October 2019 | 1.106 | 39.967 | 59.553 | 1.218 | 43.722 | 62.159 | 0.026 |

| 30 October 2019 | 1.120 | 40.293 | 67.258 | 1.377 | 47.215 | 62.717 | 0.010 |

| 7 November 2019 | 0.651 | 23.465 | 29.651 | 1.205 | 44.648 | 69.212 | 0.038 |

| 14 November 2019 | 0.919 | 33.960 | 56.952 | 1.145 | 43.583 | 67.244 | 0.000 |

| 20 November 2019 | 1.158 | 43.012 | 67.328 | 1.359 | 52.550 | 85.253 | 0.043 |

| 30 November 2019 | 1.918 | 87.432 | 117.466 | 3.755 | 184.180 | 244.727 | 0.000 |

| 8 December 2019 | 0.908 | 39.555 | 63.833 | 1.631 | 72.002 | 106.587 | 0.120 |

| 24 December 2019 | 0.797 | 38.797 | 50.369 | 3.375 | 156.705 | 201.106 | 0.088 |

| 31 December 2019 | 1.402 | 72.795 | 106.010 | 1.636 | 80.844 | 112.853 | 0.093 |

| Date | MAPE% | MAE | RMSE | MAPE% | MAE | RMSE | MAPE% | MAE | RMSE |

|---|---|---|---|---|---|---|---|---|---|

| 20 March | 0.79 | 27.63 | 38.61 | 2.85 | 89.11 | 106.56 | 7.76 | 252.16 | 302.10 |

| 31 July | 0.50 | 32.78 | 42.40 | 2.47 | 165.66 | 200.96 | 5.32 | 341.48 | 427.58 |

| 7 November | 0.65 | 23.46 | 29.65 | 2.04 | 73.38 | 96.71 | 6.80 | 241.92 | 286.86 |

| 24 December | 0.79 | 39.69 | 53.18 | 2.87 | 144.66 | 176.36 | 7.70 | 362.18 | 442.44 |
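The tables above report MAPE, MAE, and RMSE. As a reminder of how these metrics are usually computed, here is a minimal sketch using their standard textbook definitions; the exact definitions used by the authors are not shown in this section, so this is an assumption. The function names and the sample load values are made up for illustration and are not taken from the paper's data.

```python
import math


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Assumes no actual value is zero.
    """
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


def mae(actual, predicted):
    """Mean absolute error, in the units of the load series."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean square error, in the units of the load series."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


if __name__ == "__main__":
    # Hypothetical load values, for illustration only.
    actual = [100.0, 200.0, 400.0]
    predicted = [110.0, 190.0, 400.0]
    print(mape(actual, predicted), mae(actual, predicted), rmse(actual, predicted))
```

MAPE is scale-free, which makes days with different load levels comparable, while MAE and RMSE keep the units of the load series; RMSE penalises large individual errors more heavily than MAE.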

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, D.; Tong, H.; Li, F.; Xiang, L.; Ding, X. An Ultra-Short-Term Electrical Load Forecasting Method Based on Temperature-Factor-Weight and LSTM Model. Energies 2020, 13, 4875. https://0-doi-org.brum.beds.ac.uk/10.3390/en13184875
