Fault Classification Decision Fusion System Based on Combination Weights and an Improved Voting Method

Abstract

1. Introduction

2. Methods

2.1. The AHP

- Calculate the weight vector $W = (w_1, w_2, \ldots, w_n)^T$ of the judgment matrix, where $w_i$ is the weight of the $i$-th indicator and $W$ is the weight vector of the indicators.

- Calculate the largest eigenvalue $\lambda_{\max}$ of the judgment matrix.
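The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes the principal-eigenvector form of the AHP and the standard Saaty consistency check, CI = (λ_max − n)/(n − 1) and CR = CI/RI. The function name `ahp_weights` and the dictionary `RANDOM_INDEX` are hypothetical; the judgment matrix and the RI values are the ones tabulated in this article.

```python
import numpy as np

# Random consistency index (RI) by matrix order n, as tabulated in this article.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90,
                5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41}


def ahp_weights(judgment):
    """Return (weights, lambda_max, CR) for a pairwise judgment matrix.

    Assumption: weights are the normalized principal eigenvector.
    """
    a = np.asarray(judgment, dtype=float)
    n = a.shape[0]

    # Principal eigenpair: the eigenvalue with the largest real part.
    eigvals, eigvecs = np.linalg.eig(a)
    k = int(np.argmax(eigvals.real))
    lam_max = float(eigvals[k].real)

    # The eigenvector sign is arbitrary, so take magnitudes and normalize to sum 1.
    w = np.abs(eigvecs[:, k].real)
    w = w / w.sum()

    # Consistency index and consistency ratio (standard Saaty definitions).
    ci = (lam_max - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0
    return w, lam_max, cr


# Judgment matrix over the indicators F, ACC, MR, P (from the table in this article).
judgment = [
    [1,     2,     2,     3],
    [1 / 2, 1,     1,     2],
    [1 / 2, 1,     1,     2],
    [1 / 3, 1 / 2, 1 / 2, 1],
]

weights, lam_max, cr = ahp_weights(judgment)
print(np.round(weights, 4), round(lam_max, 4), round(cr, 4))
```

A consistency ratio below 0.1 is the usual threshold for accepting a judgment matrix; a larger value suggests the pairwise comparisons should be revised.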

2.2. EW-TOPSIS

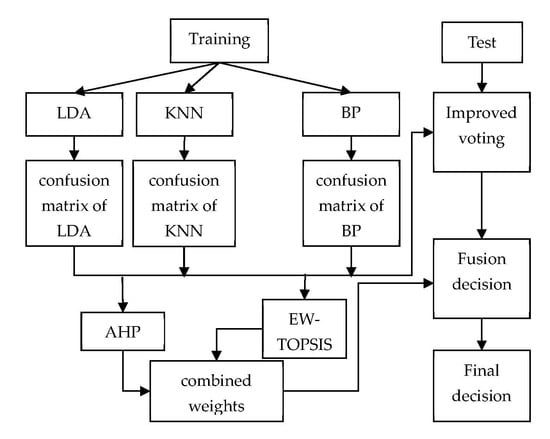

3. The Proposed Method

3.1. Selection of Base Classifiers

3.2. Classifier Performance Evaluation

3.3. Formatting of Mathematical Components

4. Results

4.1. TE Process

4.2. Experiment

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Importance Scale | Description |
|---|---|
| 1 | Two factors are equally important |
| 3 | The first factor is slightly more important than the second factor |
| 5 | The first factor is very important relative to the second factor |
| 7 | The first factor is absolutely very important compared with the second factor |
| 9 | The first factor is extremely important compared with the second factor |
| 2, 4, 6, 8 | Median between adjacent scales |

| n | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| RI | 0 | 0 | 0.58 | 0.90 | 1.12 | 1.24 | 1.32 | 1.41 |

| Indicators | F | ACC | MR | P | Weights |
|---|---|---|---|---|---|
| F | 1 | 2 | 2 | 3 | 0.4236 |
| ACC | 1/2 | 1 | 1 | 2 | 0.2270 |
| MR | 1/2 | 1 | 1 | 2 | 0.2270 |
| P | 1/3 | 1/2 | 1/2 | 1 | 0.1223 |

| Fault No. | LDA | KNN | BN | RF | SVM | BP | VOTE | IVM | CWM | ETIVM | AIVM | CWIVM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 98 | 98.13 | 98.63 | 98.5 | 98.63 | 98.63 | 98.88 | 99.13 | 98.88 | 99.13 | 98.88 | 98.88 |
| 2 | 96.25 | 97.5 | 98.25 | 98 | 97.88 | 98.75 | 98.38 | 99 | 98.25 | 98.38 | 98.25 | 98.25 |
| 3 | 11.25 | 21.25 | 17.25 | 17.38 | 19.13 | 0 | 24.63 | 14.25 | 17.5 | 12.63 | 11.63 | 11.38 |
| 4 | 81.5 | 20.75 | 73.63 | 95.13 | 89.13 | 11.13 | 97.63 | 99.5 | 94.38 | 98.63 | 98.38 | 98.38 |
| 5 | 98.5 | 20.5 | 98.13 | 51.63 | 98.13 | 95.38 | 99.38 | 99.13 | 99.13 | 99.13 | 98.13 | 98.88 |
| 6 | 99.75 | 99.75 | 56.38 | 99.38 | 63.13 | 33.88 | 100 | 100 | 100 | 100 | 63.13 | 100 |
| 7 | 100 | 80.38 | 100 | 99.75 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| 8 | 37.13 | 28.75 | 96.5 | 53.25 | 34 | 54.63 | 71.88 | 78 | 83 | 90.38 | 96.13 | 95.25 |
| 9 | 14.25 | 13 | 21.63 | 17.25 | 15.75 | 0 | 19.13 | 7.88 | 20.38 | 7.75 | 13.25 | 10.5 |
| 10 | 40.13 | 8.13 | 83.75 | 43.38 | 21.38 | 0 | 53.25 | 78.63 | 75.25 | 82 | 83.75 | 83.75 |
| 11 | 8.63 | 8.63 | 70.75 | 62 | 15.63 | 0 | 37.5 | 45.5 | 62.5 | 59.75 | 62.25 | 61.63 |
| 12 | 31.25 | 29.75 | 97.25 | 76.63 | 60.5 | 2.25 | 73.75 | 83.13 | 89 | 86.63 | 94.5 | 91.63 |
| 13 | 20.75 | 16.5 | 57.75 | 16.5 | 12.25 | 22.13 | 19.13 | 28.38 | 37.63 | 36.75 | 51.38 | 49.63 |
| 14 | 7.75 | 44.25 | 97.25 | 94 | 72.5 | 0 | 85.13 | 93.13 | 94.13 | 93.25 | 97 | 94.25 |
| 15 | 15 | 14.25 | 26.25 | 26 | 18.88 | 0 | 20.63 | 26.75 | 26.63 | 28.13 | 31 | 30.63 |
| 16 | 37.75 | 7.5 | 79.25 | 48.25 | 20.75 | 29 | 51.5 | 73.38 | 70.25 | 76.63 | 79.13 | 78.75 |
| 17 | 51.75 | 39.13 | 86.5 | 90.38 | 73.63 | 84.38 | 87.38 | 92.88 | 90.13 | 92.63 | 89.5 | 91.75 |
| 18 | 16.5 | 81.25 | 18.63 | 86.13 | 62 | 70 | 86.13 | 88.75 | 86.5 | 88.88 | 51.5 | 88.5 |
| 19 | 14.5 | 29.88 | 94.38 | 78.13 | 49.38 | 0 | 68 | 93.5 | 89.5 | 95.38 | 95.25 | 95.75 |
| 20 | 52 | 12.63 | 87.5 | 61.38 | 47.38 | 79.13 | 72.5 | 89.13 | 84.13 | 88.25 | 87.5 | 87.63 |
| 21 | 5.75 | 6.25 | 99.25 | 24 | 2.75 | 38 | 30 | 93.5 | 80.38 | 98.88 | 99.75 | 99.75 |
| Average | 44.68 | 37.05 | 74.23 | 63.67 | 51.08 | 38.92 | 66.42 | 75.4 | 76.07 | 77.77 | 76.2 | 79.29 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zeng, F.; Li, Z.; Zhou, Z.; Du, S. Fault Classification Decision Fusion System Based on Combination Weights and an Improved Voting Method. Processes 2019, 7, 783. https://0-doi-org.brum.beds.ac.uk/10.3390/pr7110783
