1. Introduction

Natural systems around the world are experiencing staggering rates of degradation in modern times. Trends in global biodiversity and biogeochemistry have been dominated by human-caused patterns of disturbance [1,2]. Human-led disturbance also alters ecosystem resilience, a long-term feedback generating irrevocable change [3]. Such impacts leave even complex communities functionally extinct, unable to perform their most basic processes. By 2100, the increasing intensity of land-use change is expected to have the most significant impact on biodiversity, influencing key earth system functionality [3,4,5]. The associated interactions of ecosystem and biodiversity change represent a pivotal challenge for ecologists. This complex framework combines the efforts of sustainability, ethics, and policy [2] to manage the future of natural resources.

Forests provide a unique array of ecosystem services to human society, yet still rank among the most exploited of natural environments. The Food and Agriculture Organization of the United Nations (FAO) estimated that between 2000 and 2005, forested lands experienced a net global loss of 7.3 million hectares per year [1]. In a more recent 2015 assessment, FAO estimated a total of 129 million ha of forest land-cover loss between 1990 and 2015 [6]. This unprecedented level of deforestation elicited action from numerous scientific disciplines, international economies, and facets of government. Similar to many forests around the world, coastal New Hampshire forests have been markedly impacted by disturbance regimes. Local ecosystem services such as nutrient cycling, habitat provisioning, resource allocation, and water quality regulation, which are directly linked to general welfare [7], are experiencing diminished capabilities due to the loss of critical area [8,9]. Many scientific disciplines look to mitigate these impacts and conserve remaining resources but require every advantage of novel strategies and adaptive management to overcome the intricate challenges at hand. To form a comprehensive understanding of natural environments and their patterns of change, scientists and landscape managers must use modern tools to assimilate mass amounts of heterogeneous data at multiple scales. Then, they must translate that data into useful decision-making information [10,11].

Capturing large amounts of data to evaluate the status and impacts of disturbance on the biological composition and structure of complex environments is inherently difficult. The continual change of landscapes, as evident in forest stands in the Northeastern United States [12], further complicates these efforts. Stimulating conservation science and informing management decisions justifies collecting greater quantities of data at an applicable accuracy [13,14]. Remote sensing can both develop and enrich datasets in such a manner as to meet the project-specific needs of the end user [15,16]. Remote sensing is experiencing continual technological evolution and adoption to meet the needs of analyzing complex systems [17,18]. This highly versatile tool goes beyond in situ observations, encompassing our ability to learn about an object or phenomenon through a sensor without coming into direct contact with it [19,20]. Most notably, recent advances have enabled data capture at resolutions matching new ranges of ecological processes [18], filling gaps left by field-based sampling for urgently needed surveying of anthropogenic changes in the environment [10,21].

New photogrammetric modeling techniques are harnessing high-resolution remote sensing imagery to deliver unprecedented end-user products while requiring minimal technical understanding [22,23]. Creating three-dimensional (3D) point clouds and planimetric models through Structure from Motion (SfM) utilizes optical imagery and the heavy redundancy of image tie points (image features) for a low-cost, highly capable output [24,25,26,27,28,29,30,31,32]. Although the creation of orthographic and geometrically corrected (planimetric) surfaces using photogrammetry has existed for over 50 years [33,34], only recently has computer vision extended the SfM workflow to include multi-view stereo (MVS) and bundle adjustment algorithms. This coupled processing simultaneously corrects for parameters and provides an automated workflow for on-demand products [23,35].

As remote sensing continues to advance into the 21st century, new sensors and sensor platforms are broadening the scales at which spatial data are collected. Unmanned Aerial Systems (UAS) are one such platform, being technically defined only by the lack of an on-board operator [36,37]. This unrestricted definition serves to exemplify the ubiquitous nature of the tool. Every day there is a wider variety of modifications and adaptations, blurring the lines of classification. Designations such as unmanned aircraft systems (UAS), unmanned aerial vehicles (UAV), unmanned aircraft (UA), remotely piloted aircraft (RPA), remotely operated aircraft (ROA), aerial robotics, and drones all derive from unique social and scientific perspectives [37,38,39,40]. The choice among these titles reflects the user's own understanding of, and introduction to, the platform. Here, UAS is the preferred term due to the platform’s system of components that coordinates the overall operation.

Following a parallel history of development with manned aviation, UAS have emerged from their mechanical contraption prototypes [41] to the forefront of remote sensing technologies [36,39]. Although many varieties of UAS exist today, the majority utilize some form of: (i) a launch and recovery system or flight mechanism, (ii) a sensor payload, (iii) a communication data link, (iv) the unmanned aircraft, (v) a command and control element, and (vi) most importantly, the human [38,39,40,41,42,43,44].

A more meaningful classification of a UAS may be whether it is a rotary-winged, fixed-winged, or hybrid aircraft. Each aircraft provides unique benefits and limitations corresponding to the structure of its core components. Rotary-winged UAS, which are named for their horizontally rotating propellers, are valued for their added maneuverability and hovering capabilities, and for their vertical take-off and landing (VTOL) operation [41]. These platforms are, however, hindered by their shorter flight durations on average [39]. Fixed-wing UAS, which have been sought after for their longer duration flights and higher altitude thresholds, face a trade-off between focused and extensive coverage. Hybrid unmanned aircraft are beginning to provide realistic utility by combining the advantages of each system, including VTOL maneuverability and fixed-wing aircraft flight durations [45]. Each of these systems, with its consumer-desired versatility, has driven configurations to meet project needs. Applications such as precision agricultural monitoring [46,47], coastal area management [48], forest inventory [49], structure characterization [50,51], and fire mapping [52] are only a small portion of the fields already employing the potential of UAS [38,40,44,53]. New data products, with the support of specialized or repurposed software functionality, are changing the ways that we model the world. The proliferation of UAS photogrammetry is now recognized by interested parties in fields such as computer science, robotics, artificial intelligence, general photogrammetry, and remote sensing [23,40,43,54].

The rapid adoption of this new technology brings cause for questions as to how it will inevitably affect other, more intensive aspects of society. Major challenges to the advancement of UAS include understandings of privacy, security, safety, and social concerns [40,44,55]. These major influences are causing noticeable shifts in the worldwide acceptance of the platform, and spurring regulations as outcomes of the conflict of interest between government and culture [39,53,54,56]. In an initiative to more favorably integrate UAS into the National Airspace System (NAS) of the United States (US), the Federal Aviation Administration (FAA) established the Remote Pilot in Command (RPIC) license under 14 Code of Federal Regulations (CFR) [57]. RPIC grants permission for low-altitude flights, under restricted flexibility, to overseers with sufficient aeronautical knowledge [58].

Evidence of the shift towards on-demand spatial data products is everywhere [

44,

59]. The extremely high resolution, flexible operation, minimal cost, and automated workflow associated with UAS make them an ideal remote sensing platform for many projects [

43,

53,

59,

60]. However, as these systems become more integrated, it is important that we remain cognizant of factors that may affect data quality and best-use practices [

23]. Researchers in several disciplines have investigated conditions for data capture and processing that may decrease positional certainty or reliability [

38]. These studies provide comparative analysis of the UAS-SfM data products to highly precise sensors such as terrestrial laser scanners (TLS), light detection and ranging (LiDAR) systems, and ground control point surveying [

30,

32,

35,

49,

51,

61]. However, fewer studies and guidelines have provided clear quantitative evidence for issues affecting the comprehensive coverage of study sites using UAS-SfM, especially for complex natural environments [

62,

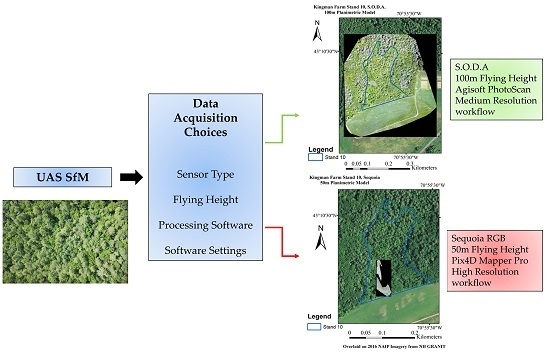

63]. The principles expressed here reflect situations in which the positional reliability of the resulting products is not the emphasis of the analysis. Instead, our research provides an overview of the issues associated with the use of UAS for data collection and processing to capture complex forest environments in an effective manner. Specifically, our objectives were to: (i) analyze the effects of image capture height on SfM processing success, and (ii) determine the influences of processing characteristics on output conditions by applying multiple software packages and workflow settings for modeling complex forested landscapes.

2. Materials and Methods

This research was conducted using three woodland properties owned and managed by the University of New Hampshire, Durham, New Hampshire, USA. These properties included Kingman Farm, Moore Field, and West Foss Farm, totaling approximately 154.6 ha of forested land (235.2 ha of total area), and were located in the seacoast region of New Hampshire (Figure 1). Our research team was granted UAS operational permission to fly over each of these forested areas, which were selected for their similarity to the broader New England landscape mosaic [64]. For our first objective, the analysis of flying height on image processing capabilities, a sub-region of Kingman Farm designated as forest stand 10 [65] was selected. Stand 10, comprising 3.6 ha (0.036 km²) of red maple swamp and coniferous patches, was used due to its overstory diversity and proximity to an open ground station area. Part of the second objective (establishing a comparison between software packages for forest landscape modeling) required a larger area of analysis. For this reason, processed imagery across all three woodland properties, Kingman Farm (135.17 ha), West Foss Farm (52.27 ha), and Moore Field (47.76 ha), was used. The other part of the second objective, determining the influences of processing workflow settings on the SfM outputs, utilized the Moore Field woodland property as an exemplary subset of the regional landscape.

A senseFly eBee Plus (Parrot Group, Cheseaux-sur-Lausanne, Switzerland) (Figure 2) was used to evaluate the utility of fixed-wing UAS. With integrated, autonomous flight controller software (eMotion 3 version 3.2.4, senseFly Parrot Group, Cheseaux-sur-Lausanne, Switzerland), a communication data link, and an inertial measurement unit (IMU), this platform boasted an expected 59 min flight time using one of two sensor payloads. First, the Sensor Optimized for Drone Applications (S.O.D.A.) captured 20-MP natural color imagery. Alternatively, the Parrot Sequoia sensor incorporated five optics into a multispectral system, including a normal color 16-MP optic and individual green, red, red edge, and near infrared sensors. For our purposes, we compared only the Sequoia RGB sensor to the S.O.D.A.; for the rest of this analysis, references to the Sequoia concern this single RGB sensor.

To limit the influence of weather on image capture operations, cloudy weather and near dawn or dusk flights were avoided in favor of optimal sun angle and image consistency [62]. Prior-day weather forecasts from the National Weather Service (NWS) and drone-specific flight mapping services were checked. Maintaining regular image capture spacing during flight is also heavily influential on processing effectiveness. Therefore, wind speeds in excess of 12 m/s were avoided, despite the UAS being capable of operating in speeds up to 20 m/s. In addition, flight lines were oriented perpendicular to the prevailing wind direction. These subtle operational considerations, while not particularly noticeable in the raw imagery, are known to improve overall data quality [62,63] and mission safety.

To analyze the efficiency and effectiveness of the UAS image collection process, several flying heights and two sensor systems were compared. These flying heights were determined using a canopy height model provided through a statewide LiDAR (.las) dataset [66]. The LiDAR dataset was collected between the winter of 2010 and the spring of 2011 under a United States Geological Survey (USGS) North East Mapping project [67]. The original dataset was sampled at a two-meter nominal scale, and was aggregated to a 10-meter nominal scale by assigning each new cell the maximum value within it. Then, it was cleaned so as to both provide the UAS with a more consistent flight altitude and ensure safety from structure collisions. More importantly, setting the flying height above the canopy height model ensured that images kept a constant ground sampling distance (gsd), or model pixel size, during processing. It was originally intended to evaluate flying heights of 25 m, 50 m, and 100 m above this canopy height model. However, test missions proved that flying below 50 m quickly lost line-of-sight (a legal requirement) and the communication link with the ground control station. With the goal of determining the optimal height for high spatial resolution, processing efficiency, and safe operation, flying heights of 50 m, 100 m, and 120 m above the forest canopy were selected. Since these flying heights focus on the forest canopy layer, and not the ground, all of the methods and results are set relative to this height model. These flying heights represented a broad difference in survey time of approximately 9 min to 18 min per mission for the given study area, stand 10, and an even greater difference in the number of images acquired (337 at 50 m with the S.O.D.A., and 126 at 120 m). The threshold of 120 m was based on 14 CFR Part 107 restrictions, stating all UAS flights are limited to a 400 ft above-surface ceiling [58]. Additional flying heights at lower altitudes have been tested for rotary-winged UAS [60,62].
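The aggregation of the canopy height model described above can be illustrated with a minimal sketch. It assumes the 2-m model is held as a plain 2D list of heights in meters and that five 2-m cells span one 10-m cell; the grid values, the function name `block_max`, and the constant `HEIGHT_ABOVE_CANOPY_M` are hypothetical and are not taken from the study or from any flight planning software.

```python
def block_max(grid, factor):
    """Aggregate a 2D grid by taking the maximum of each factor x factor block."""
    rows, cols = len(grid), len(grid[0])
    out = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            # Collect every fine cell that falls inside the coarse cell.
            block = [grid[i][j]
                     for i in range(r, min(r + factor, rows))
                     for j in range(c, min(c + factor, cols))]
            out_row.append(max(block))
        out.append(out_row)
    return out


# Hypothetical 2-m canopy heights (m): 5 rows x 10 columns.
CHM_2M = [
    [12, 14, 15, 13, 11, 20, 21, 19, 18, 22],
    [13, 16, 18, 14, 12, 21, 23, 20, 19, 21],
    [11, 15, 17, 15, 13, 19, 22, 24, 20, 20],
    [10, 13, 14, 12, 11, 18, 20, 21, 19, 18],
    [9, 12, 13, 11, 10, 17, 19, 20, 18, 17],
]

# Five 2-m cells per side make one 10-m cell.
chm_10m = block_max(CHM_2M, 5)

# Flight altitude is set a fixed height above the aggregated canopy surface.
HEIGHT_ABOVE_CANOPY_M = 100
flight_altitudes = [[cell + HEIGHT_ABOVE_CANOPY_M for cell in row] for row in chm_10m]

print(chm_10m)           # [[18, 24]]
print(flight_altitudes)  # [[118, 124]]
```

Taking the block maximum rather than the mean keeps the tallest crown in each coarse cell, which is the conservative choice when the surface is used for collision avoidance.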

Similar to the traditional photogrammetric processes of transcribing photographic details to planimetric maps using successive image overlap [68], successful SfM modeling from UAS-collected imagery requires diligent consideration of fundamental flight planning characteristics. Generating planimetric models, or other spatial data products from UAS imagery, warrants at least 65% endlap (forward overlap) and sidelap based on user-recommended sampling designs [69]. The computer vision algorithms rely on this increased redundancy for isolating specific tie points among the high levels of detail and inherent scene distortions [27,30,32]. Many practitioners choose even higher levels of overlap to support their needs for data accuracy, precision, and completeness. For complex environments, recommended image overlap increases to 85% endlap and 70% to 80% sidelap [70]. For all of the missions, we applied 85% endlap and 70% sidelap, which approached the maximum possible for this sensor.
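To make the overlap settings concrete, the following sketch converts an overlap fraction into the distance between successive image centers. The ground footprint dimensions are hypothetical placeholders, not values from the study; the real footprint depends on the sensor and the flying height, and the function name `exposure_spacing` is introduced here only for illustration.

```python
def exposure_spacing(footprint_m, overlap):
    """Distance between successive image centers for a given overlap fraction."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)


# Hypothetical ground footprint of a single image (m); not taken from the study.
ALONG_TRACK_M = 100.0
ACROSS_TRACK_M = 150.0

photo_spacing = exposure_spacing(ALONG_TRACK_M, 0.85)  # 85% endlap
line_spacing = exposure_spacing(ACROSS_TRACK_M, 0.70)  # 70% sidelap

print(f"photo spacing: {photo_spacing:.1f} m")  # photo spacing: 15.0 m
print(f"line spacing: {line_spacing:.1f} m")    # line spacing: 45.0 m
```

Under these assumed footprints, raising endlap from 65% to 85% would shrink the photo spacing from 35 m to 15 m, which is why higher overlap sharply increases the number of images per mission.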

The first step in processing the UAS images captured by the S.O.D.A. and Sequoia red–green–blue (RGB) sensors was to link their spatial data to the raw imagery. Image-capture orientation and positioning were collected by the onboard IMU and global positioning system (GPS) and stored in the flight log file (.bbx). A post-flight mission planning process embedded in the eMotion 3 flight planning software accessed the precise timing of each camera trigger in the flight log automatically when connected to the eBee Plus and automated the spatial matching process.

All of the processing was performed on the same workstation, a Dell Precision Tower 7910 with an Intel Xeon E5-2697 18-core CPU, 64 GB of RAM, and an NVIDIA Quadro M4000 graphics card. The workflows in both software packages created dense point clouds using MVS algorithms, a 3D surface mesh, and a planimetric model (i.e., orthomosaic) using their respective parameters. In Pix4D Mapper Pro (Pix4D; Lausanne, Switzerland), when comparing the impact of flying height against processing effectiveness as part of our first objective, several processing parameters were adjusted to mitigate the complications for computer vision in processing the forest landscape. All of the combinations of sensor and flying height SfM models used a 0.5 tie-point image scale, a minimum of three tie points per processed image, a 0.25 image scale with multi-scale view for the point cloud densification, and triangulation algorithms for generating digital surface models (DSM) from the dense point clouds [70]. The tie-point image scale metric reduced the imagery to half its original size (resolution) to reduce the scene complexity while extracting visual information [69]. We used a 0.25 image scale while generating the dense point cloud to further simplify the raw image geometry for computing 3D coordinates [70]. Although these settings promote automated processing speed and completeness in complex landscapes, they could influence the accuracy of the results and should be used cautiously.

For both objectives, we used key “quality” indicators within the software packages to assess SfM model effectiveness. First, image alignment (“calibration” in the software) denotes the ability to define the camera position and orientation within a single block, using identified tie point coordinates [22,27,71]. Manual tie point selection using ground control points or other surveyed features was not used to enhance alignment. Only images that were successfully aligned could be used for further processing. Camera optimization determined the difference between the initial camera properties and the transformed properties generated by the modeling procedure [71]. The average number of tie points per image demonstrated how much visual data could be automatically generated. The average number of matches per image showed the strength of the tie-point matching algorithms between overlapping images. The mean re-projection error calculated the positional difference between the initial and final tie-point projection. Final planimetric model ground sampling distances (cm/pixel) and their surface areas were observed to assess the completeness and success of the procedure. Lastly, we recorded the total time required for processing each model.
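Two of these indicators lend themselves to a short illustration. The sketch below treats the mean re-projection error as the average pixel distance between where a tie point was observed in an image and where the fitted model projects it, and the alignment rate as aligned images over collected images. Both definitions are simplifying assumptions for illustration; the exact formulas used inside Pix4D or Agisoft are not given in the text, and the coordinates are invented.

```python
import math


def mean_reprojection_error(observed, reprojected):
    """Mean Euclidean distance (pixels) between observed and re-projected points."""
    if not observed or len(observed) != len(reprojected):
        raise ValueError("need two non-empty point lists of equal length")
    distances = [math.hypot(ox - px, oy - py)
                 for (ox, oy), (px, py) in zip(observed, reprojected)]
    return sum(distances) / len(distances)


def alignment_rate(aligned, collected):
    """Fraction of collected images that were successfully aligned."""
    if collected <= 0 or not 0 <= aligned <= collected:
        raise ValueError("expected 0 <= aligned <= collected and collected > 0")
    return aligned / collected


# Invented tie-point image coordinates (pixels) for two points.
observed = [(100.0, 200.0), (150.0, 80.0)]
reprojected = [(100.3, 200.4), (150.0, 80.0)]

print(f"{mean_reprojection_error(observed, reprojected):.3f} px")

# Counts reported for the S.O.D.A. at 100 m: 176 of 177 images aligned.
print(f"{alignment_rate(176, 177):.1%}")
```

The first point is displaced by 0.3 and 0.4 pixels (a distance of 0.5) and the second is not displaced, so the mean comes out at about 0.25 pixels, the same order of magnitude as the values reported in this study.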

Another key consideration of SfM processing was the choice of software package used in the analysis. As part of the second objective, we investigated the influence of the software package choice on model completion. Leading proprietary UAS photogrammetry and modeling software packages Agisoft PhotoScan (Agisoft) and Pix4D have garnered widespread adoption [23,38,49,72,73]. Initial inquiries with other users (ASPRS Conference in spring 2016) and test mission outputs yielded performance reviews too inconsistent to identify a superior workflow. Although open source UAS-SfM software has become increasingly accessible and renowned, only these two packages came recommended as total solutions for our hardware and environment. To contrast the two packages in their ability to model the forest landscape, all three woodland properties were processed and analyzed. To remain consistent between Agisoft and Pix4D workflow settings, we used “High” or “Aggressive” (depending on software vocabulary) image alignment accuracy, 0.5 tie-point image scales, and a medium resolution mesh procedure.

To accomplish our final objective and evaluate the capabilities of the suggested default resolution processing parameters further, Moore Field was processed individually using both medium and high-resolution (“quality”) settings within Agisoft. During this final analysis, we adjusted only the model resolution setting during the batch processing between each test, and again used the 0.5 tie-point image scale for both. The outputs were compared based on the image alignment success, the total number of tie points formed, the point cloud density, and the planimetric model resolution.

3. Results

The results of the data processing for UAS imagery collected 50 m, 100 m, and 120 m above complex forest community canopies demonstrated significant implications regarding the effectiveness of the data collection. At 50 m above the forest canopy, the S.O.D.A. sensor collected 337 images covering Stand 10 of Kingman Farm. Using the Pix4D software to process the imagery, 155 of the 337 images (45%) were successfully aligned for processing to at least three matches (Figure 3a), with a 5.53% difference in camera optimization. The processing took a total of 69:06 min, in which an average of 29,064 image tie points and 1484 tie-point matches were found per image. The distribution of tie-point matches for each aligned image can be seen in Figure 3b, with a gradient scale representing the density of matching. Additionally, geolocation error or uncertainty is shown for each image by the size of its surrounding circle. The final planimetric model covered 30.697 ha (0.307 km²) with a 3.54-cm gsd (Figure 3c) and a 0.255 pixel mean re-projection error. Successful image alignment and tie-point matching were limited to localized regions near the edges of the forested area to the south, producing significant surface gaps and extrapolation within the resulting DSM and planimetric model (Figure 3c,d).

Capturing imagery with the Sequoia RGB multispectral sensor at a height of 50 m above the canopy produced 322 images, of which 122 (37%) could be aligned successfully (Figure 4a). The majority of these were again localized along the southern forest edge. The overall camera optimization difference was 1.4%. The average numbers of image tie points and tie-point matches were 25,561 and 383.91 per image, respectively. However, all of these tie-point matches appeared to be concentrated on a small area within the study site (Figure 4b). This processing workflow, lasting 43:21 min, created a final orthomosaic covering 0.2089 ha (0.0021 km²) at a 0.64-cm gsd, with a 0.245 pixel mean re-projection error (Figure 4c). Image stitching for this flight procedure recognized image objects in only two distinct locations throughout the study area, forcing widespread irregularity for the minimal area that was produced (Figure 4a–d).

Beginning at 100 m above the forest canopy, UAS-SfM models more comprehensively recognized the complexity of the forest vegetation. At 100 m, with the S.O.D.A. sensor, data processing aligned 176 of 177 (99%) of the collected images at a 0.45% camera optimization difference (Figure 5a). Pix4D generated an average of 29,652 image tie points with 2994.12 tie-point matches per image. The distribution and density of tie-point matching (Figure 5b) between overlapping images can be seen to more thoroughly cover the entire study area. Finishing in 84:15 min, the final planimetric output had a 3.23-cm gsd, covered 16.5685 ha (0.1657 km²), and had a mean re-projection error of 0.140 pixels (Figure 5c). Camera alignment, image tie-point matching, and planimetric processing results portrayed greater computer recognition and performance, with minimal incomprehension near the center of the forest stand (Figure 5). The DSM for this flying height and sensor shows far greater retention of the forest stand (Figure 5d).

Alternatively, the Sequoia RGB sensor at 100 m above the canopy successfully aligned 159 of 178 (89%) collected images. The failure of the remaining images in the northern part of the study area (Figure 6a) corresponded with a considerably greater number of image geolocation errors and tie-point matches (Figure 6b). This workflow took the greatest amount of processing time of 133:08 min, with 25,825 image tie points and 902.51 tie-point matches on average. The camera optimization difference with this height and sensor combination was 0.87%. The planimetric model covered 14.32 ha (0.1432 km²), had a 3.95-cm gsd, and a mean re-projection error of 0.266 pixels (Figure 6c). Both the planimetric model (Figure 6c) and DSM (Figure 6d) covered the entirety of the study area, with diminished clarity along the northern boundary.

At the highest flying height, 120 m above the canopy surface, processing of the S.O.D.A. imagery successfully aligned 121 of 126 (96%) images with a 0.44% camera optimization difference. The five images that could not be represented were clumped near the northwestern corner of the flight plan (Figure 7a). Images averaged 29,168 tie points and 3622.33 tie-point matches. The distribution of tie-point matches showed moderate density throughout the study area, with greatest connectivity along the southern boundary (Figure 7b). After 52:32 min of processing, an 18.37-ha (0.1837 km²) planimetric surface was generated with a 3.8-cm gsd and a 0.132 pixel re-projection error (Figure 7c). The resulting DSM (Figure 7d) and planimetric model were created with high image geolocation precision and image object matching across the imagery (Figure 7). The entirety of the study area for this model is visually complete.

Finally, the Sequoia RGB sensor flying at 120 m above the forest canopy captured 117 images, of which 102 (87%) were aligned. The image alignment and key point matching diagrams (Figure 8a,b) showed lower comprehension throughout the northern half of the study area, corresponding with the interior forest. Pix4D processing resulted in a 1.73% camera optimization difference with an average of 25,505 tie points and 1033.83 tie-point matches per image. The overall processing time was 50:13 min, which generated a 14.926 ha (0.1493 km²) planimetric model at a 4.44-cm gsd and 0.273 pixel re-projection error (Figure 8c). Weak image alignment and tie-point matching in the upper half of the forest stand formed regions of inconsistent clarity along the edges of the study area (Figure 8c,d).

In summary, Table 1 shows an evaluation of the results from the six sensor and flying height combinations utilized by the eBee Plus UAS. The degree of processing completeness is shown to be heavily influenced by UAS flying height, as demonstrated by variables such as image alignment success, camera optimization, average tie-point matches per image, and the area covered by the models. Flying heights of 100 m and 120 m above the forest canopy both display greater image alignment and tie-point matching success than the 50-m flying height. Although the 50-m flying height did have a slightly finer overall ground sampling distance and re-projection error, these results should be understood in the broader context of the resulting SfM data products. The 100-m flying height demonstrated the most comprehensive alignment of images for both sensors. This increase in image alignment success and average tie points per image also came with a noticeably longer processing duration. However, when comparing image re-projection error and average matching per image indicators for the 100-m and 120-m flying heights, the 120-m model processing contained less uncertainty (Figure 5 and Figure 7).

Comparing the S.O.D.A. sensor to the Sequoia RGB sensor across the three flying heights showed that the S.O.D.A. achieved, on average, a 9% greater image alignment success rate, 14.29% more tie points per image, roughly 3.56 times as many tie-point matches per image (356.21% of the Sequoia value), and a 32.3% lower re-projection error. Ground sampling distances for the two sensors were not quantitatively compared because of the inconsistency of the model completion.
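The arithmetic behind a sensor comparison of this kind can be sketched as follows. The per-image averages are the values reported above for each flying height; the averaging scheme (the mean of the three per-height S.O.D.A.-to-Sequoia ratios) is an assumption, since the text does not state how the averages were formed, so small differences from the reported percentages are to be expected. The names `mean_ratio` and `percent_more` are introduced here for illustration.

```python
from statistics import mean

# Per-image averages reported in the text, keyed by height above canopy (m).
SODA = {
    50: {"tie_points": 29064, "matches": 1484.0},
    100: {"tie_points": 29652, "matches": 2994.12},
    120: {"tie_points": 29168, "matches": 3622.33},
}
SEQUOIA = {
    50: {"tie_points": 25561, "matches": 383.91},
    100: {"tie_points": 25825, "matches": 902.51},
    120: {"tie_points": 25505, "matches": 1033.83},
}


def mean_ratio(metric):
    """Average of the per-height S.O.D.A. / Sequoia ratios (assumed scheme)."""
    return mean(SODA[h][metric] / SEQUOIA[h][metric] for h in SODA)


def percent_more(ratio):
    """Convert a ratio into a percentage increase over the baseline."""
    return (ratio - 1.0) * 100.0


for metric in ("tie_points", "matches"):
    ratio = mean_ratio(metric)
    print(f"{metric}: ratio {ratio:.4f}, {percent_more(ratio):.2f}% more")
```

Keeping the ratio and the percentage increase as separate functions makes the distinction explicit: a ratio near 3.56 means about 3.56 times as many matches, which is a different statement from a 356% increase.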

Comparison of the processing of all three woodland properties using both Pix4D and Agisoft demonstrated clear differences in results for the same imagery. Across the combined 3716 images (covering 235.2 ha), Agisoft had an 11.883% higher image alignment success. The final planimetric models also had a 9.91% finer resolution (lower gsd) using Agisoft. However, this finer resolution was accompanied by 67.3% fewer tie points (Table 2).

Within Agisoft itself, several levels of processing workflow complexity are available. In this analysis, we compared the medium and high-resolution (“quality”) point cloud generation procedures to determine their influence on the final planimetric model outputs. In processing Moore Field, we saw an increase in overall tie points of 31.3% (346,598 versus 264,015), a photogrammetric point cloud density 3.71 times as high (187.3 m versus 50.5 m), and a 0.01-cm increase in final ground sampling distance (3.33 cm versus 3.32 cm) when using the high versus medium settings (Table 3). However, the difference in processing time for the higher resolution model was on the order of days.

4. Discussion

The effects of flying height on SfM processing capability, as shown in

Table 1, demonstrate a clear influence on output completeness. All of the three analyses offered insight into complications for obtaining visually complete complex forest models. These study areas presented forests with closed, dense canopies comprising a great deal of structural and compositional diversity, which are typical of the New England region. These forests represent some of the most complex in the world although they are less complex than tropical rain forests. Issues discussed here are appropriately applied to forests of similar complexity. In simpler forest environments with less species diversity and more open canopies, the issues discussed here will be less problematic. In these New England forests, the complexity of the imagery at such a fine scale leads to difficulties in isolating tie points [

74]; these difficulties were exacerbated at the 50-m flying height. Compounding disruptions of the fixed flight speed, sensor settings, and flight variability further biased this flying height. Image alignment and tie-point generation with either the S.O.D.A or the Sequoia RGB sensor at this low altitude was inconsistent and error-prone (

Figure 3 and

Figure 4). This lack of comprehension for initial processing reverberated heavily in the DSMs and planimetric models, generating surfaces largely through extrapolation. Flying at 100 m and 120 m above the forest canopy, image alignment success ranged from 89–99% with ground sampling distances of 3.23–3.8 cm for the S.O.D.A and 3.95–4.44 cm for the Sequoia RGB sensor. These increases in successful image and scene processing were accompanied by a much larger number of average tie-point matches per image. For both sensors, the 100-m flying height appeared to out-compete even that of 120 m, with a higher resolution and image calibration success. The resulting slight difference in GSD for the two flying heights was likely a function of the variation in flight characteristics for the aircraft during image capture, leading to deviations in camera positions, and averaged point cloud comprehension for the forest canopy. Looking at the image tie-point models (

Figure 7 and

Figure 8) shows that this remaining uncertainty or confusion is clustered in the upper corner of the study area, corresponding with the mission start. Several factors could have influenced this outcome, the most likely being environmental stochasticity (i.e., short-term randomness within the environment), such as canopy movement or the sun angle diminishing tie-point isolation [

62,

63,

75]. Other possibilities for the clustered uncertainty include UAS flight adjustments during camera triggering, leading to discrepancies in the realized overlap, and image motion blur.

Understandably, the Sequoia RGB sensor achieved less comprehensive results than the S.O.D.A., which is designed more specifically for photogrammetric modeling. When comparing average results across the 50-m, 100-m, and 120-m flying heights, the Sequoia achieved 9% lower image alignment success, 14.29% fewer tie points, 356% fewer matches, and a 32.3% higher re-projection error (

Table 1). The processed models were riddled with artifacts and voids because of a combination of scene complexity caused by dense vegetation and differences in camera characteristics. Recommended operations for the Sequoia sensor include lowering the flight speed (not possible on the eBee Plus) and increasing the flying height to overcome its rolling shutter mechanics, which negatively impact image geolocation (

Figure 4a,b). The creation and deployment of optimized sensors for UAS (i.e., the S.O.D.A. sensor) is a simple yet direct demonstration of increased efficiency and effectiveness behind this rapidly advancing technology and the consumer demand supporting it.

Given the unresolved processing performance for complex natural environments, our study also investigated whether Agisoft or Pix4D generated higher quality SfM outputs. With 11.8% greater image alignment and 9.9% finer resolution, Agisoft appeared to have an advantage (

Table 2). However, determining any universal superiority proved difficult due to the “black box” processing imposed at several steps in either software package [

23,

27]. Pix4D maintained 67.3% more tie points across the three study areas and provided a more balanced user interface, including a variety of additional image alignment parameters and statistical outputs. Pix4D may be preferable for some users because of its added functionality and the transparency of its statistical output, including task recognition during processing, an initial quality report, a tie-point positioning diagram, geolocation error reporting, image-matching strength calculations, and a processing option summary table. However, for the final planimetric model production, Agisoft performed better based on SfM output completeness and visualization indicators.

Lastly, we contrasted the medium and high-resolution workflows in Agisoft to determine the impact on modeling forest landscapes. The high-resolution processing workflow experienced a 371% increase in point cloud density. However, the final planimetric models were only marginally impacted at 3.33 cm and 3.32 cm for high and medium-resolution processing, respectively (

Table 3). The slightly improved results were not worth the extra processing time, as the high-resolution workflow took days rather than hours to complete. UAS are sought after for their on-demand data products, further legitimizing this preference [

25,

38,

43]. Assessing the represented software indicators demonstrated that the choice of sensor, software package, and processing setting each affected UAS-SfM output completeness, implying a need to balance output resolution and UAS-SfM comprehension. For our study area, this supported using the S.O.D.A. at a 100-m flying height above the canopy, with a medium-resolution processing workflow in Agisoft PhotoScan. These conclusions are specific to the forest canopy studied and to our objective of modeling the landscape, and the results and software indicators should be considered carefully when assessing photogrammetric certainty. Depending on the nature of other projects or their restrictions, alternative combinations of these choices could be implemented.
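The trade-off reported above can be made concrete with a short calculation. The following is a minimal sketch in Python, not part of the original analysis; the function names are hypothetical, and the only inputs are the values quoted in the text (a 371% increase in point cloud density and planimetric resolutions of 3.33 cm and 3.32 cm).

```python
def percent_change(new: float, old: float) -> float:
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100.0


def ratio_from_percent_increase(pct: float) -> float:
    """Convert a percent increase into a multiplicative ratio."""
    return 1.0 + pct / 100.0


# Values quoted in the text; no other measurements are assumed.
density_ratio = ratio_from_percent_increase(371.0)  # high vs. medium workflow
gsd_diff_pct = abs(percent_change(3.33, 3.32))      # planimetric resolution, cm

print(f"Point cloud density ratio (high/medium): {density_ratio:.2f}x")
print(f"Difference in planimetric resolution: {gsd_diff_pct:.2f}%")
```

Under these inputs, the high-resolution workflow yields a point cloud roughly 4.7 times as dense, while the planimetric resolution of the two workflows differs by about 0.3%.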

Many additional considerations for UAS flight operations could be included within these analyses, especially in light of more traditional aerial photogrammetry approaches. Our analyses specifically investigated the ability of UAS-SfM to capture the visual content of the forested environment, but not its positional reliability. To improve computer vision capabilities, multi-scale imagery could have been collected [

49]. Perpendicular flight patterns or repeated passes could have been flown. These suggestions would have increased operational requirements, and thus decreased the efficiency of the data collection. Alternatively, greater flying heights could have been incorporated (e.g., 250 m above the forest canopy). The maximum height used during our research was set by the current FAA guidelines for UAS operations [

58]. If future legislation changes or special waivers raise flight ceilings, a wider range of altitudes should be tested for both sensors. Despite the recommendation to maintain a GSD of at least 10 cm to adequately capture complex environments [

63,

69,

70], several models created in this research produced comprehensive planimetric surfaces. Producing a 10-cm GSD would also require flying above the legal 121.92-m ceiling under the current FAA regulations.
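A rough estimate illustrates why a 10-cm GSD is out of reach under the current ceiling. The sketch below assumes that GSD scales linearly with flying height for a fixed sensor and lens, and it assumes that the finest S.O.D.A. GSD reported above (3.23 cm) corresponds to the 100-m flying height; that pairing is an assumption made for illustration, and the function name is hypothetical.

```python
FAA_CEILING_M = 121.92  # legal ceiling quoted in the text


def height_for_gsd(target_gsd_cm: float, ref_gsd_cm: float, ref_height_m: float) -> float:
    """Flying height needed for a target GSD, assuming GSD is proportional to height."""
    return ref_height_m * target_gsd_cm / ref_gsd_cm


# Assumption: 3.23 cm GSD was obtained at the 100-m flying height (S.O.D.A.).
required_height_m = height_for_gsd(target_gsd_cm=10.0, ref_gsd_cm=3.23, ref_height_m=100.0)

print(f"Approximate height for a 10-cm GSD: {required_height_m:.1f} m")
print(f"Exceeds the {FAA_CEILING_M} m ceiling: {required_height_m > FAA_CEILING_M}")
```

Under these assumptions, the required height is roughly 310 m, well above the ceiling. The estimate ignores the distinction between height above the canopy and height above ground level, so it should be read only as an order-of-magnitude check.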

Unmanned aerial systems have come a long way in their development [

39,

41], yet still have much more to achieve. The necessity for high-resolution products with an equally appropriate level of completeness is driving hardware and software evolution at astonishing rates. To match the efficiency and effectiveness of this remote sensing tool, intrinsic photogrammetric principles must be recognized. Despite limitations on flight planning [

55], and computer vision performance [

22,

27,

76], UAS can produce astounding results.

5. Conclusions

Harnessing the full potential of Unmanned Aerial Systems requires diligent consideration of their underlying principles and limitations. To date, quantitative assessments of their best operational practices are few and far between outside of positional reliability [

49,

62,

77,

78]. This is despite their exponentially expanding user base and the increasing reliance on their acquired data sets. Likewise, no data quality or evaluation standards have yet been set for SfM data processing [

23]. As with any novel technology, these operational challenges and limitations are intrinsic to our ability to acquire and rightfully use such data for decision-making processes [

79,

80]. The objective of this research was to demonstrate a more formal analysis of the data acquisition and processing characteristics for fixed-wing UAS. The three analyses performed here were a result of numerous training missions, parameter refinements, and knowledge base reviews [

69,

70], in an effort to optimally utilize UAS-SfM for creating visual models. Our analysis of the effects of flying height on SfM output comprehension indicated an advantage for flying at 100 m above the canopy surface instead of at a lower or higher altitude. This is based on a higher image alignment success rate and a finer resolution orthomosaic product. In comparing the Agisoft PhotoScan and Pix4D Mapper Pro software packages across 235.2 ha of forested land, Agisoft generated more complete UAS-SfM outputs. This is the result of greater image alignment success, which created a more complete model, as well as a finer resolution output. Lastly, the evaluation of the workflow settings endorsed medium-resolution processing because of a substantial trade-off between point cloud density (a 371% difference) and processing time efficiency. Each of these results was obtained from mapping dense and heterogeneous natural forests. Such outputs are important for land management and monitoring operations.

The photogrammetric outputs derived from the UAS-SfM of complex forest environments represent a new standard for remote sensing data sets. To support the widespread consumer adoption of this approachable platform, our research went beyond the often-limited guides on photogrammetric principles and provided quantitative evidence for optimal practices. With the increasing demand for minimizing costs and maximizing output potential, it is still important to thoroughly consider the influence of flight planning and processing characteristics on data quality. The results presented support these interests, allowing for both the data collection and the SfM processing needed to produce visually complete models of complex natural environments. As associated hardware, software, and legislation continue to develop, transparent standards and assessment procedures will benefit all stakeholders involved in this exciting frontier.