Remote Sensing Image Compression Based on Direction Lifting-Based Block Transform with Content-Driven Quadtree Coding Adaptively

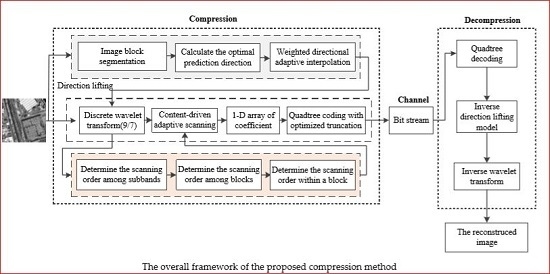

Abstract

1. Introduction

2. The Directional Adaptive Lifting Partitioned Block Transform (DAL-PBT)

2.1. The Structure of Adaptive Directional Lifting Scheme
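A lifting scheme factors a wavelet filter into a predict step, which turns odd samples into details by subtracting a prediction from their even neighbours, and an update step, which smooths the even samples with those details. The sketch below is a minimal, hypothetical illustration of that structure, not the DAL-PBT transform itself. It implements one level of the reversible integer 5/3 lifting transform known from JPEG2000 along a 1-D signal, with whole-sample symmetric extension at the edges. An adaptive directional scheme applies the same two steps along a locally chosen direction instead of strictly along rows or columns. The function names are illustrative.

```python
from typing import List, Tuple


def _pred(even: List[int], i: int) -> int:
    """Integer prediction of odd sample i from its two even neighbours.

    Symmetric extension at the right edge: the missing neighbour x[N]
    is mirrored to x[N-2], which is the last entry of `even`.
    """
    right = even[min(i + 1, len(even) - 1)]
    return (even[i] + right) // 2


def _upd(d: List[int], i: int) -> int:
    """Integer update term for even sample i (d[-1] is mirrored to d[0])."""
    left = d[max(i - 1, 0)]
    return (left + d[i] + 2) // 4


def lift53_forward(x: List[int]) -> Tuple[List[int], List[int]]:
    """One level of reversible 5/3 lifting: returns (approximation, detail)."""
    if len(x) % 2:
        raise ValueError("this sketch assumes an even-length signal")
    even, odd = list(x[0::2]), list(x[1::2])
    # Predict step: detail = odd sample minus prediction from even neighbours.
    d = [odd[i] - _pred(even, i) for i in range(len(odd))]
    # Update step: adjust even samples with the neighbouring details.
    s = [even[i] + _upd(d, i) for i in range(len(even))]
    return s, d


def lift53_inverse(s: List[int], d: List[int]) -> List[int]:
    """Undo the update step, then the predict step, and re-interleave."""
    even = [s[i] - _upd(d, i) for i in range(len(s))]
    odd = [d[i] + _pred(even, i) for i in range(len(d))]
    x: List[int] = []
    for e, o in zip(even, odd):
        x.extend((e, o))
    return x
```

Because the inverse recomputes exactly the same integer prediction and update terms, reconstruction is lossless for any integer input, and a constant signal yields all-zero details.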

2.2. Image Segmentation and the Calculation of Optimal Prediction Direction

2.3. Directional Adaptive Interpolation

2.4. Boundary Handling and Semidirection Displacement

3. A Content-Driven Quadtree Coding Method for Remote Sensing Images

3.1. Content-Driven Subband Scan and Block Scan

Algorithm 1. Function.

Input: The image transformed by the DAL-PBT model, the decomposition level, and the block size.

Output: The 1-D coefficient sequence S generated by scanning the 2-D transformed image.
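Algorithm 1 turns the 2-D transformed image into a 1-D sequence S, given the decomposition level and the block size. The sketch below shows one way a subband-then-block scan can be organised. It assumes a dyadic (Mallat) subband layout visited from the coarsest LL band to the finest HH band and, within each subband, orders blocks by decreasing energy. That energy ordering is a hypothetical stand-in for the content-driven criterion, which is not recoverable from this text, and `subband_rects` and `block_scan` are illustrative names.

```python
from typing import List, Tuple

Rect = Tuple[int, int, int, int]  # (row0, col0, height, width)


def subband_rects(height: int, width: int, levels: int) -> List[Rect]:
    """Subband rectangles of a dyadic layout, coarsest LL first, then HL, LH, HH per level."""
    h, w = height >> levels, width >> levels
    rects = [(0, 0, h, w)]  # LL band of the coarsest level
    for lev in range(levels, 0, -1):
        h, w = height >> lev, width >> lev
        rects += [(0, w, h, w), (h, 0, h, w), (h, w, h, w)]  # HL, LH, HH
    return rects


def block_scan(coeffs: List[List[int]], levels: int, block: int) -> List[int]:
    """Flatten coefficients subband by subband, block by block (raster order inside a block)."""
    height, width = len(coeffs), len(coeffs[0])
    seq: List[int] = []
    for r0, c0, h, w in subband_rects(height, width, levels):
        blocks = []
        for br in range(r0, r0 + h, block):
            for bc in range(c0, c0 + w, block):
                vals = [coeffs[r][c]
                        for r in range(br, min(br + block, r0 + h))
                        for c in range(bc, min(bc + block, c0 + w))]
                blocks.append(vals)
        # Hypothetical content rule: higher-energy blocks first (stable sort keeps ties in raster order).
        blocks.sort(key=lambda v: -sum(x * x for x in v))
        for vals in blocks:
            seq.extend(vals)
    return seq
```

Because an energy-sorted order depends on data the decoder has not yet seen, a real codec would have to signal the order or derive it from already decoded information; this sketch ignores that issue.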

3.2. Content-Driven Quadtree Codec with Optimized Truncation (CQOT)

Algorithm 2. Function.

Input: The image transformed by the DAL-PBT model, the wavelet decomposition level, the size of each block, and the current threshold.

Procedure (the loop and branch conditions were lost in extraction): two nested while loops; inside the inner loop, an if block containing four sequential if-tests, followed by the calculation of the series of optimized truncation points.

Output: The codestream of the transformed image at the given threshold, and the set of truncation points.

Algorithm 3. Function.

Input: A quadtree, the index of a node of the quadtree, and the threshold.

Procedure (the branch conditions were lost in extraction): a sequence of nested if / else-if / else branches.

Output: The codestream of the quadtree at the given threshold.
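Algorithm 3 codes a quadtree against a threshold. As a generic, hypothetical illustration in the spirit of set-partitioning coders such as SPECK (not the CQOT procedure, whose conditions are not recoverable here), the sketch below emits one significance bit per quadtree node, splits significant nodes into four children down to single coefficients, and records the cumulative bit count after each threshold pass as a candidate truncation point. It assumes a square, power-of-two-sized array of integer coefficients; all names are illustrative.

```python
from typing import List, Tuple

Coeffs = List[List[int]]


def _significant(c: Coeffs, r0: int, c0: int, size: int, t: int) -> bool:
    """True if any coefficient in the size x size square has magnitude >= t."""
    return any(abs(c[r][k]) >= t
               for r in range(r0, r0 + size) for k in range(c0, c0 + size))


def encode_node(c: Coeffs, r0: int, c0: int, size: int, t: int, bits: List[int]) -> None:
    """Emit a significance bit; recurse into four children if the node is significant."""
    sig = _significant(c, r0, c0, size, t)
    bits.append(1 if sig else 0)
    if not sig:
        return  # whole square insignificant: a single bit suffices
    if size == 1:
        bits.append(0 if c[r0][c0] >= 0 else 1)  # sign bit of a significant leaf
        return
    half = size // 2
    for dr, dc in ((0, 0), (0, half), (half, 0), (half, half)):
        encode_node(c, r0 + dr, c0 + dc, half, t, bits)


def encode_passes(c: Coeffs) -> Tuple[List[int], List[int]]:
    """One significance pass per threshold 2^n, 2^(n-1), ..., 1."""
    size = len(c)  # assumed square with power-of-two side
    peak = max(abs(v) for row in c for v in row)
    bits: List[int] = []
    points: List[int] = []  # cumulative bit count after each pass
    if peak == 0:
        return bits, points
    t = 1 << (peak.bit_length() - 1)  # largest power of two <= peak
    while t >= 1:
        encode_node(c, 0, 0, size, t, bits)
        points.append(len(bits))
        t >>= 1
    return bits, points
```

Re-sending the whole significance map at every threshold is wasteful; embedded coders such as SPECK instead track coefficients already found significant and emit refinement bits for them, and an optimized-truncation step would then choose among the recorded points by rate-distortion cost.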

3.3. The Overhead of Bits

4. Quality Evaluation Indices

4.1. PSNR
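PSNR is computed from the mean squared error (MSE) between the original and reconstructed images as PSNR = 10 log10(peak^2 / MSE). Because the test images range from 8 to 12 bits per pixel, the peak value must follow the bit depth. The sketch below assumes the common convention peak = 2^B − 1 for a B-bit image; the exact convention used for the reported results is not stated in this text.

```python
import math
from typing import List


def psnr(original: List[List[int]], reconstructed: List[List[int]], bit_depth: int) -> float:
    """PSNR in dB, assuming a peak value of 2**bit_depth - 1."""
    peak = (1 << bit_depth) - 1
    flat_o = [v for row in original for v in row]
    flat_r = [v for row in reconstructed for v in row]
    if len(flat_o) != len(flat_r):
        raise ValueError("images must have the same size")
    mse = sum((a - b) ** 2 for a, b in zip(flat_o, flat_r)) / len(flat_o)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak * peak / mse)
```

For an 8-bit image with MSE = 1 this gives 20 log10(255), about 48.13 dB; the same MSE on a 12-bit image yields a higher value because the peak is 4095.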

4.2. MS-SSIM
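MS-SSIM combines contrast-structure terms at several dyadic scales with a luminance term at the coarsest scale, using the per-scale exponents 0.0448, 0.2856, 0.3001, 0.2363 and 0.1333 of the original formulation. The sketch below is a simplified illustration: it uses global image statistics at each scale instead of the usual 11×11 Gaussian sliding window and 2×2 average pooling between scales, so its values will not match published MS-SSIM figures. The stabilizing constants (0.01·peak)^2 and (0.03·peak)^2 follow the usual SSIM defaults, with peak = 2^B − 1 assumed.

```python
from typing import List, Tuple

Image = List[List[float]]

# Per-scale exponents of the original MS-SSIM formulation (finest scale first).
WEIGHTS = (0.0448, 0.2856, 0.3001, 0.2363, 0.1333)


def _global_stats(x: Image, y: Image) -> Tuple[float, float, float, float, float]:
    """Means, variances and covariance over the whole image (no sliding window)."""
    fx = [v for row in x for v in row]
    fy = [v for row in y for v in row]
    n = len(fx)
    mx, my = sum(fx) / n, sum(fy) / n
    vx = sum((a - mx) * (a - mx) for a in fx) / n
    vy = sum((b - my) * (b - my) for b in fy) / n
    cxy = sum((a - mx) * (b - my) for a, b in zip(fx, fy)) / n
    return mx, my, vx, vy, cxy


def _downsample(img: Image) -> Image:
    """2x2 average pooling (an odd trailing row or column is dropped)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * i][2 * j] + img[2 * i][2 * j + 1]
              + img[2 * i + 1][2 * j] + img[2 * i + 1][2 * j + 1]) / 4
             for j in range(w)] for i in range(h)]


def ms_ssim_global(x: Image, y: Image, bit_depth: int = 8) -> float:
    """Simplified, global-statistics variant of MS-SSIM over five scales."""
    if len(x) != len(y) or len(x[0]) != len(y[0]):
        raise ValueError("images must have the same size")
    if len(x) < 16 or len(x[0]) < 16:
        raise ValueError("five scales need at least a 16x16 image")
    peak = (1 << bit_depth) - 1
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    score = 1.0
    for scale, w in enumerate(WEIGHTS):
        mx, my, vx, vy, cxy = _global_stats(x, y)
        cs = (2 * cxy + c2) / (vx + vy + c2)  # contrast-structure term
        score *= max(cs, 0.0) ** w  # clamp: a negative base would give a complex power
        if scale == len(WEIGHTS) - 1:
            lum = (2 * mx * my + c1) / (mx * mx + my * my + c1)  # coarsest-scale luminance
            score *= max(lum, 0.0) ** w
        else:
            x, y = _downsample(x), _downsample(y)
    return score
```

Identical images score exactly 1; a pure brightness offset leaves every contrast-structure term at 1 but lowers the luminance term, so the score drops slightly below 1.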

4.3. VIF

5. Experiments and Discussion

5.1. Space-Borne Images from Different Sensors

5.2. Performance Comparison of the Proposed Compression Method with Other Scan-Based Methods

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhang, L.; Lv, X.; Liang, X. Saliency Analysis via Hyperparameter Sparse Representation and Energy Distribution Optimization for Remote Sensing Images. Remote Sens. 2017, 9, 636.

- Shi, C.; Zhang, J.; Zhang, Y. Content-based onboard compression for remote sensing images. Neurocomputing 2016, 191, 330–340.

- Shapiro, J.M. Embedded image coding using zerotrees of wavelet coefficients. IEEE Trans. Signal Process. 1993, 41, 3445–3462.

- Said, A.; Pearlman, W.A. A new, fast, and efficient image codec based on set partitioning in hierarchical trees. IEEE Trans. Circuits Syst. Video Technol. 1996, 6, 243–250.

- Pearlman, W.A.; Islam, A.; Nagaraj, N.; Said, A. Efficient low complexity image coding with a set-partitioning embedded block coder. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 1219–1235.

- Christopoulos, C.; Skodras, A.; Ebrahimi, T. The JPEG2000 still image coding system: An overview. IEEE Trans. Consumer Electron. 2000, 46, 1103–1127.

- Wu, Z.; Bilgin, A.; Marcellin, M.W. Joint source/channel coding for image transmission with JPEG2000 over memoryless channels. IEEE Trans. Image Process. 2005, 14, 1020–1032.

- Suruliandi, A.; Raja, S.P. Empirical evaluation of EZW and other encoding techniques in the wavelet-based image compression domain. Int. J. Wavelets Mult. 2015, 13, 1550012.

- Zhang, Y.; Cao, H.; Jiang, H. Mutual information-based context template modeling for bitplane coding in remote sensing image compression. J. Appl. Remote Sens. 2016, 10, 025011.

- Hamdi, M.; Rhouma, R.; Belghith, S. A selective compression-encryption of images based on SPIHT coding and Chirikov Standard Map. Signal Process. 2017, 131, 514–526.

- Zhang, L.; Li, A.; Zhang, Z.; Yang, K. Global and local saliency analysis for the extraction of residential areas in high-spatial-resolution remote sensing image. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3750–3763.

- CCSDS122.0-B-1. Image Data Compression. November 2005. Available online: http://public.ccsds.org/publications/archive/122x0b1c3.pdf (accessed on 20 May 2018).

- García-Vílchez, F.; Serra-Sagristà, J. Extending the CCSDS recommendation for image data compression for remote sensing scenarios. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3431–3445.

- CCSDS122.0-B-2. Image Data Compression. November 2017. Available online: https://public.ccsds.org/Pubs/122x0b2.pdf (accessed on 20 May 2018).

- Kulkarni, P.; Bilgin, A.; Marcellin, M.W.; Dagher, J.C.; Kasner, J.H.; Flohr, T.J.; Rountree, J.C. Compression of earth science data with JPEG2000. In Hyperspectral Data Compression; Springer: Boston, MA, USA, 2006; pp. 347–378. ISBN 978-0-387-28579-5.

- Li, B.; Yang, R.; Jiang, H.X. Remote-sensing image compression using two-dimensional oriented wavelet transform. IEEE Trans. Geosci. Remote Sens. 2011, 49, 236–250.

- Candes, E.J.; Donoho, D.L. New tight frames of curvelets and optimal representations of objects with piecewise C2 singularities. Commun. Pure Appl. Math. 2004, 57, 219–266.

- Do, M.N.; Vetterli, M. The contourlet transform: An efficient directional multiresolution image representation. IEEE Trans. Image Process. 2005, 14, 2091–2106.

- Velisavljevic, V.; Beferull-Lozano, B.; Vetterli, M.; Dragotti, P.L. Directionlets: Anisotropic multidirectional representation with separable filtering. IEEE Trans. Image Process. 2006, 15, 1916–1933.

- Guo, K.; Labate, D. Optimally sparse multidimensional representation using shearlets. SIAM J. Math. Anal. 2007, 39, 298–318.

- Wang, C.; Lin, H.; Jiang, H. CANS: Towards Congestion-Adaptive and Small Stretch Emergency Navigation with Wireless Sensor Networks. IEEE Trans. Mob. Comput. 2016, 15, 1077–1089.

- Shi, C.; Zhang, J.; Chen, H.; Zhang, Y. A Novel Hybrid Method for Remote Sensing Image Approximation Using the Tetrolet Transform. IEEE J. Sel. Top. Appl. Earth Observ. 2014, 7, 4949–4959.

- Hou, X.; Jiang, G.; Ji, R.; Shi, C. Directional lifting wavelet and universal trellis coded quantization based image coding algorithm and objective quality evaluation. In Proceedings of the IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 501–504.

- Hou, X.; Yang, J.; Jiang, G.; Qian, X.M. Complex SAR image compression based on directional lifting wavelet transform with high clustering capability. IEEE Trans. Geosci. Remote Sens. 2012, 51, 527–538.

- Hou, X.; Zhang, L.; Gong, C. SAR image Bayesian compressive sensing exploiting the interscale and intrascale dependencies in directional lifting wavelet transform domain. Neurocomputing 2014, 133, 358–368.

- Zhang, L.; Qiu, B. Edge-preserving image compression using adaptive lifting wavelet transform. Int. J. Electron. 2015, 102, 1190–1203.

- Yan, W.; Shaker, A.; El-Ashmawy, N. Urban land cover classification using airborne LiDAR data: A review. Remote Sens. Environ. 2015, 158, 295–310.

- Hussain, A.J.; Al-Jumeily, D.; Radi, N. Hybrid Neural Network Predictive-Wavelet Image Compression System. Neurocomputing 2015, 151, 975–984.

- Zhang, L.; Chen, J.; Qiu, B. Region-of-Interest Coding Based on Saliency Detection and Directional Wavelet for Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 23–27.

- CCSDS122.1-B-1. Spectral Preprocessing Transform For Multispectral and Hyperspectral Image Compression. November 2017. Available online: https://public.ccsds.org/Pubs/122x1b1.pdf (accessed on 20 May 2018).

- Valsesia, D.; Magli, E. A Novel Rate Control Algorithm for Onboard Predictive Coding of Multispectral and Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6341–6355.

- Valsesia, D.; Boufounos, P.T. Universal encoding of multispectral images. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Shanghai, China, 20–25 March 2016; pp. 4453–4457.

- Wang, H.; Celik, T. Sparse Representation-Based Hyperspectral Data Processing: Lossy Compression. IEEE J. Sel. Topics Appl. Earth Observ. 2017, 99, 1–10.

- Fu, W.; Li, S.; Fang, L.; Benediktsson, J. Adaptive Spectral–Spatial Compression of Hyperspectral Image with Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2017, 55, 671–682.

- Van, L.P.; De Praeter, J.; Van Wallendael, G.; Van Leuven, S.; De Cock, J.; Van de Walle, R. Efficient Bit Rate Transcoding for High Efficiency Video Coding. IEEE Trans. Multimed. 2016, 18, 364–378.

- Huang, K.K.; Liu, H.; Ren, C.X.; Yu, Y.F.; Lai, Z.R. Remote sensing image compression based on binary tree and optimized truncation. Digit. Signal Process. 2017, 64, 96–106.

- Barret, M.; Gutzwiller, J.L.; Hariti, M. Low-Complexity Hyperspectral Image Coding Using Exogenous Orthogonal Optimal Spectral Transform (OrthOST) and Degree-2 Zerotrees. IEEE Trans. Geosci. Remote Sens. 2011, 49, 1557–1566.

- Karami, A. Lossy compression of hyperspectral images using shearlet transform and 3D SPECK. In Proceedings of the Image and Signal Processing for Remote Sensing XXI, Toulouse, France, 15 October 2015; Volume 9643.

- Song, X.; Huang, Q.; Chang, S.; He, J.; Wang, H. Three-dimensional separate descendant-based SPIHT algorithm for fast compression of high-resolution medical image sequences. IET Image Process. 2016, 11, 80–87.

- Huang, K.; Dai, D. A New On-Board Image Codec Based on Binary Tree with Adaptive Scanning Order in Scan-Based Mode. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3737–3750.

- Shi, C.; Zhang, J.; Zhang, Y. A Novel Vision-Based Adaptive Scanning for the Compression of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1336–1348.

- Liu, H.; Huang, K.; Ren, C.; Yu, Y.; Lai, Z. Quadtree Coding with Adaptive Scanning Order for Space-borne Image Compression. Signal Process. Image Commun. 2017, 55, 1–9.

- Antonini, M.; Barlaud, M.; Mathieu, P.; Daubechies, I. Image coding using wavelet transform. IEEE Trans. Image Process. 1992, 1, 205–220.

- Chang, C.L.; Girod, B. Direction-adaptive discrete wavelet transform for image compression. IEEE Trans. Image Process. 2007, 16, 1289–1302.

- Sweldens, W. The lifting scheme: A construction of second generation wavelets. SIAM J. Math. Anal. 1998, 29, 511–546.

- Ding, W.; Wu, F.; Wu, X.; Li, S.; Li, H. Adaptive directional lifting-based wavelet transform for image coding. IEEE Trans. Image Process. 2007, 16, 416–427.

- Liu, Y.; Ngan, K.N. Weighted adaptive lifting-based wavelet transform for image coding. IEEE Trans. Image Process. 2008, 17, 500–511.

- Sheikh, H.R.; Bovik, A.C. Image information and visual quality. IEEE Trans. Image Process. 2006, 15, 430–444.

- CCSDS Reference Test Image Set. April 2007. Available online: http://cwe.ccsds.org/sls/docs/sls-dc/ (accessed on 15 March 2018).

- USC-SIPI Database. Available online: http://sipi.usc.edu/database (accessed on 15 March 2018).

| Filter | Position | The Weights of the Filter |
|---|---|---|
| Bilinear filter | 1/4 | 3/4; 1/4 |
| | 1/2 | 1/2; 1/2 |
| | 3/4 | 1/4; 3/4 |
| Telenor 4-tap filter | 1/4 | −1/16; 13/16; 5/16; −1/16 |
| | 1/2 | −1/8; 5/8; 5/8; −1/8 |
| | 3/4 | −1/16; 5/16; 13/16; −1/16 |
| 2-tap filter | N/A | −1/4; 5/4 |
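The filter table lists the weights used to estimate samples at quarter- and half-sample positions, which directional prediction needs when the chosen direction does not pass through integer sample positions. The sketch below applies the bilinear and Telenor 4-tap weights to a 1-D row of samples. The mapping of weights to neighbour offsets (samples i and i+1 for the bilinear filter; i−1 to i+2 for the 4-tap filter) and the clamping of out-of-range indices are assumptions of this sketch, not the boundary handling of Section 2.4; the 2-tap filter is not modelled, and `FILTERS` and `interpolate` are illustrative names.

```python
from fractions import Fraction
from typing import Dict, Sequence, Tuple

# For a target position i + frac: (integer offsets relative to i, weights).
FILTERS: Dict[str, Dict[Fraction, Tuple[Tuple[int, ...], Tuple[Fraction, ...]]]] = {
    "bilinear": {
        Fraction(1, 4): ((0, 1), (Fraction(3, 4), Fraction(1, 4))),
        Fraction(1, 2): ((0, 1), (Fraction(1, 2), Fraction(1, 2))),
        Fraction(3, 4): ((0, 1), (Fraction(1, 4), Fraction(3, 4))),
    },
    "telenor4": {
        Fraction(1, 4): ((-1, 0, 1, 2),
                         (Fraction(-1, 16), Fraction(13, 16), Fraction(5, 16), Fraction(-1, 16))),
        Fraction(1, 2): ((-1, 0, 1, 2),
                         (Fraction(-1, 8), Fraction(5, 8), Fraction(5, 8), Fraction(-1, 8))),
        Fraction(3, 4): ((-1, 0, 1, 2),
                         (Fraction(-1, 16), Fraction(5, 16), Fraction(13, 16), Fraction(-1, 16))),
    },
}


def interpolate(samples: Sequence[int], i: int, frac: Fraction, kind: str) -> Fraction:
    """Estimate the sample value at fractional position i + frac."""
    if frac == 0:
        return Fraction(samples[i])
    offsets, weights = FILTERS[kind][frac]
    last = len(samples) - 1
    total = Fraction(0)
    for off, w in zip(offsets, weights):
        k = min(max(i + off, 0), last)  # clamp indices at the borders (assumption)
        total += w * samples[k]
    return total
```

Every weight set sums to 1, so a flat region is preserved, and on a linear ramp both filters return the exact fractional value; for example, on the samples 0, 1, ..., 7 the 4-tap filter gives 13/4 at position 3 + 1/4.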

| Image | Bit Depth (bpp) | Source / Copyright |
|---|---|---|
| lunar | 8 | Galileo image / NASA |
| Miramar NAS | 8 | USC-SIPI |
| ocean_2kb1 | 10 | NOAA Polar Orbiter (AVHRR) / NOAA |
| Pavia | 11 | QuickBird (0.6 m) |
| Houston | 11 | WorldView-2 sensor (0.5 m) |
| pleiades_portdebouc_pan | 12 | Simulated PLEIADES / CNES |

| Image | Algorithm | 0.0313 bpp | 0.0625 bpp | 0.125 bpp | 0.25 bpp | 0.5 bpp | 1 bpp | 2 bpp | 3 bpp |
|---|---|---|---|---|---|---|---|---|---|
| lunar | CCSDS | 9.86 | 18.92 | 24.45 | 27.64 | 30.68 | 35.07 | 35.07 | 41.10 |
| | JPEG2000 | 22.73 | 24.11 | 26.04 | 28.25 | 31.35 | 35.61 | 41.53 | 46.21 |
| | BTCA | 23.28 | 24.64 | 26.49 | 28.74 | 31.71 | 36.13 | 42.47 | 48.45 |
| | HAS | 23.32 | 24.70 | 26.51 | 28.77 | 31.76 | 36.08 | 42.41 | 48.40 |
| | QCAO | 23.32 | 24.93 | 26.50 | 28.87 | 31.79 | 36.15 | 42.46 | 48.51 |
| | Proposed | 23.40 | 25.26 | 26.63 | 29.42 | 31.83 | 36.29 | 42.60 | 48.62 |
| Miramar NAS | CCSDS | 12.30 | 16.83 | 21.62 | 24.21 | 26.71 | 29.81 | 34.74 | 39.41 |
| | JPEG2000 | 19.67 | 20.81 | 22.54 | 24.50 | 26.80 | 29.97 | 35.14 | 40.03 |
| | BTCA | 20.17 | 21.28 | 22.87 | 24.78 | 27.12 | 30.33 | 35.31 | 40.53 |
| | HAS | 20.15 | 21.35 | 22.85 | 24.79 | 27.19 | 30.29 | 35.15 | 40.24 |
| | QCAO | 20.37 | 21.57 | 22.90 | 25.05 | 27.32 | 30.33 | 35.30 | 40.56 |
| | Proposed | 20.61 | 21.76 | 23.00 | 25.27 | 27.44 | 30.54 | 35.37 | 40.66 |
| ocean_2kb1 | CCSDS | 14.33 | 17.63 | 29.69 | 33.72 | 36.90 | 40.86 | 47.01 | 52.41 |
| | JPEG2000 | 28.08 | 29.91 | 32.17 | 34.51 | 37.54 | 41.84 | 48.40 | 53.80 |
| | BTCA | 28.81 | 30.55 | 32.72 | 35.00 | 37.97 | 42.19 | 48.59 | 54.53 |
| | HAS | 28.79 | 30.59 | 32.60 | 34.98 | 37.99 | 42.13 | 48.51 | 54.49 |
| | QCAO | 28.85 | 30.53 | 32.78 | 34.96 | 37.90 | 42.26 | 48.64 | 54.57 |
| | Proposed | 28.97 | 30.84 | 32.95 | 35.15 | 38.11 | 42.35 | 48.69 | 54.59 |
| Pavia | CCSDS | 19.30 | 25.14 | 34.26 | 37.10 | 39.39 | 42.13 | 46.79 | 51.77 |
| | JPEG2000 | 32.51 | 34.05 | 35.75 | 37.64 | 39.79 | 42.65 | 47.74 | 52.97 |
| | BTCA | 32.96 | 34.21 | 35.94 | 37.78 | 39.93 | 42.71 | 47.49 | 52.61 |
| | HAS | 32.90 | 34.33 | 35.95 | 37.73 | 39.94 | 42.71 | 47.34 | 52.38 |
| | QCAO | 33.41 | 34.65 | 36.29 | 37.85 | 39.86 | 42.62 | 47.40 | 52.58 |
| | Proposed | 33.65 | 34.80 | 36.44 | 38.09 | 40.02 | 42.83 | 47.57 | 52.70 |
| Houston | CCSDS | 15.95 | 21.73 | 31.69 | 35.45 | 38.35 | 41.75 | 46.63 | 51.37 |
| | JPEG2000 | 29.94 | 31.73 | 33.52 | 35.92 | 38.65 | 42.17 | 47.28 | 52.28 |
| | BTCA | 30.34 | 31.99 | 33.89 | 36.07 | 38.69 | 42.23 | 47.08 | 52.04 |
| | HAS | 30.33 | 31.92 | 33.87 | 36.04 | 38.69 | 42.24 | 46.99 | 51.90 |
| | QCAO | 31.02 | 32.51 | 34.03 | 36.17 | 38.75 | 42.33 | 46.89 | 51.90 |
| | Proposed | 31.20 | 32.65 | 34.15 | 36.24 | 38.79 | 42.39 | 46.82 | 51.79 |
| pleiades_portdebouc | CCSDS | 13.20 | 17.93 | 29.24 | 34.21 | 38.20 | 42.87 | 48.99 | 54.05 |
| | JPEG2000 | 27.61 | 29.77 | 31.87 | 34.89 | 38.28 | 42.64 | 48.99 | 54.12 |
| | BTCA | 28.47 | 30.40 | 32.88 | 35.57 | 39.23 | 43.52 | 49.31 | 54.42 |
| | HAS | 28.44 | 30.41 | 32.71 | 35.55 | 39.18 | 43.48 | 49.24 | 54.32 |
| | QCAO | 28.61 | 30.45 | 33.35 | 35.73 | 39.22 | 43.52 | 49.35 | 54.48 |
| | Proposed | 28.73 | 30.59 | 33.49 | 35.92 | 39.39 | 43.65 | 49.67 | 54.76 |

| Image | Algorithm | 0.0313 bpp | 0.0625 bpp | 0.125 bpp | 0.25 bpp | 0.5 bpp | 1 bpp | 2 bpp | 3 bpp |
|---|---|---|---|---|---|---|---|---|---|
| lunar | CCSDS | 0.1630 | 0.6538 | 0.8215 | 0.9220 | 0.9672 | 0.9895 | 0.9895 | 0.9971 |
| | JPEG2000 | 0.6702 | 0.7849 | 0.8716 | 0.9310 | 0.9649 | 0.9882 | 0.9968 | 0.9988 |
| | BTCA | 0.7211 | 0.8035 | 0.8779 | 0.9413 | 0.9720 | 0.9906 | 0.9979 | 0.9989 |
| | HAS | 0.7296 | 0.8082 | 0.8871 | 0.9474 | 0.9751 | 0.9919 | 0.9980 | 0.9991 |
| | QCAO | 0.7239 | 0.8241 | 0.8843 | 0.9445 | 0.9721 | 0.9908 | 0.9979 | 0.9994 |
| | Proposed | 0.7374 | 0.8456 | 0.8902 | 0.9500 | 0.9789 | 0.9946 | 0.9985 | 0.9996 |
| Miramar NAS | CCSDS | 0.2877 | 0.5683 | 0.8008 | 0.9148 | 0.9627 | 0.9848 | 0.9950 | 0.9980 |
| | JPEG2000 | 0.6242 | 0.7395 | 0.8506 | 0.9222 | 0.9638 | 0.9850 | 0.9945 | 0.9980 |
| | BTCA | 0.6679 | 0.7726 | 0.8680 | 0.9250 | 0.9634 | 0.9857 | 0.9956 | 0.9982 |
| | HAS | 0.6761 | 0.7793 | 0.8601 | 0.9292 | 0.9679 | 0.9867 | 0.9959 | 0.9986 |
| | QCAO | 0.6945 | 0.7908 | 0.8721 | 0.9335 | 0.9682 | 0.9855 | 0.9956 | 0.9985 |
| | Proposed | 0.6963 | 0.7917 | 0.8756 | 0.9379 | 0.9681 | 0.9871 | 0.9956 | 0.9986 |
| ocean_2kb1 | CCSDS | 0.1640 | 0.4233 | 0.8351 | 0.9319 | 0.9645 | 0.9849 | 0.9962 | 0.9986 |
| | JPEG2000 | 0.7014 | 0.8015 | 0.8789 | 0.9265 | 0.9624 | 0.9833 | 0.9959 | 0.9986 |
| | BTCA | 0.7396 | 0.8121 | 0.8820 | 0.9355 | 0.9660 | 0.9863 | 0.9967 | 0.9987 |
| | HAS | 0.7267 | 0.8256 | 0.8869 | 0.9322 | 0.9647 | 0.9857 | 0.9966 | 0.9990 |
| | QCAO | 0.7425 | 0.8237 | 0.8917 | 0.9347 | 0.9659 | 0.9863 | 0.9964 | 0.9988 |
| | Proposed | 0.7477 | 0.8337 | 0.8936 | 0.9361 | 0.9676 | 0.9878 | 0.9968 | 0.9991 |
| Pavia | CCSDS | 0.1222 | 0.4259 | 0.7703 | 0.8912 | 0.9432 | 0.9731 | 0.9905 | 0.9969 |
| | JPEG2000 | 0.5410 | 0.7008 | 0.8089 | 0.8899 | 0.9448 | 0.9742 | 0.9911 | 0.9970 |
| | BTCA | 0.5836 | 0.7046 | 0.8240 | 0.8939 | 0.9419 | 0.9731 | 0.9907 | 0.9970 |
| | HAS | 0.6080 | 0.7250 | 0.8185 | 0.8962 | 0.9484 | 0.9759 | 0.9913 | 0.9971 |
| | QCAO | 0.6427 | 0.7506 | 0.8440 | 0.9029 | 0.9456 | 0.9734 | 0.9903 | 0.9970 |
| | Proposed | 0.6865 | 0.7625 | 0.8518 | 0.9057 | 0.9488 | 0.9742 | 0.9906 | 0.9971 |
| Houston | CCSDS | 0.0993 | 0.4287 | 0.7617 | 0.8965 | 0.9506 | 0.9785 | 0.9925 | 0.9974 |
| | JPEG2000 | 0.5608 | 0.7013 | 0.8173 | 0.8935 | 0.9489 | 0.9779 | 0.9922 | 0.9972 |
| | BTCA | 0.5937 | 0.7106 | 0.8054 | 0.8901 | 0.9497 | 0.9777 | 0.9924 | 0.9974 |
| | HAS | 0.5791 | 0.7021 | 0.8255 | 0.8957 | 0.9512 | 0.9788 | 0.9928 | 0.9976 |
| | QCAO | 0.6529 | 0.7608 | 0.8264 | 0.8953 | 0.9503 | 0.9774 | 0.9920 | 0.9973 |
| | Proposed | 0.6626 | 0.7681 | 0.8271 | 0.8960 | 0.9507 | 0.9769 | 0.9919 | 0.9973 |
| pleiades_portdebouc | CCSDS | 0.1084 | 0.2578 | 0.7476 | 0.9204 | 0.9726 | 0.9911 | 0.9972 | 0.9989 |
| | JPEG2000 | 0.5768 | 0.7473 | 0.8572 | 0.9322 | 0.9725 | 0.9906 | 0.9970 | 0.9988 |
| | BTCA | 0.6469 | 0.7745 | 0.8755 | 0.9405 | 0.9765 | 0.9911 | 0.9973 | 0.9989 |
| | HAS | 0.6350 | 0.7889 | 0.8820 | 0.9413 | 0.9751 | 0.9918 | 0.9974 | 0.9990 |
| | QCAO | 0.6657 | 0.7891 | 0.8937 | 0.9419 | 0.9765 | 0.9912 | 0.9974 | 0.9990 |
| | Proposed | 0.6737 | 0.7990 | 0.8946 | 0.9433 | 0.9775 | 0.9917 | 0.9977 | 0.9991 |

| Image | Algorithm | 0.0313 bpp | 0.0625 bpp | 0.125 bpp | 0.25 bpp | 0.5 bpp | 1 bpp | 2 bpp | 3 bpp |
|---|---|---|---|---|---|---|---|---|---|
| lunar | CCSDS | 0.0221 | 0.0552 | 0.1136 | 0.2553 | 0.4288 | 0.6622 | 0.6622 | 0.8668 |
| | JPEG2000 | 0.0446 | 0.0892 | 0.1686 | 0.2830 | 0.4336 | 0.6777 | 0.8841 | 0.9552 |
| | BTCA | 0.0585 | 0.1026 | 0.1797 | 0.3074 | 0.4733 | 0.7149 | 0.9106 | 0.9749 |
| | HAS | 0.0550 | 0.1086 | 0.1856 | 0.3083 | 0.4794 | 0.7235 | 0.9297 | 0.9817 |
| | QCAO | 0.0571 | 0.1253 | 0.1836 | 0.3142 | 0.4703 | 0.7181 | 0.9101 | 0.9746 |
| | Proposed | 0.0599 | 0.1521 | 0.1928 | 0.3405 | 0.4792 | 0.7217 | 0.9086 | 0.9738 |
| Miramar NAS | CCSDS | 0.0206 | 0.0464 | 0.0977 | 0.2174 | 0.3610 | 0.5373 | 0.7494 | 0.8699 |
| | JPEG2000 | 0.0355 | 0.0671 | 0.1326 | 0.2331 | 0.3696 | 0.5551 | 0.7426 | 0.8828 |
| | BTCA | 0.0452 | 0.0844 | 0.1468 | 0.2409 | 0.3669 | 0.5587 | 0.7808 | 0.9138 |
| | HAS | 0.0481 | 0.0834 | 0.1427 | 0.2474 | 0.3950 | 0.5639 | 0.8098 | 0.9265 |
| | QCAO | 0.0508 | 0.0893 | 0.1487 | 0.2511 | 0.3958 | 0.5573 | 0.7829 | 0.9146 |
| | Proposed | 0.0527 | 0.0900 | 0.1527 | 0.2563 | 0.3976 | 0.5583 | 0.7781 | 0.9125 |
| ocean_2kb1 | CCSDS | 0.0205 | 0.0387 | 0.0887 | 0.2117 | 0.3473 | 0.5351 | 0.7693 | 0.8931 |
| | JPEG2000 | 0.0403 | 0.0745 | 0.1339 | 0.2199 | 0.3387 | 0.5259 | 0.7960 | 0.9180 |
| | BTCA | 0.0506 | 0.0853 | 0.1465 | 0.2384 | 0.3654 | 0.5633 | 0.8011 | 0.9297 |
| | HAS | 0.0497 | 0.0904 | 0.1472 | 0.2348 | 0.3678 | 0.5714 | 0.8293 | 0.9468 |
| | QCAO | 0.0514 | 0.0860 | 0.1469 | 0.2387 | 0.3641 | 0.5623 | 0.7998 | 0.9281 |
| | Proposed | 0.0525 | 0.1013 | 0.1488 | 0.2430 | 0.3694 | 0.5576 | 0.7992 | 0.9294 |
| Pavia | CCSDS | 0.0164 | 0.0355 | 0.0716 | 0.1622 | 0.2629 | 0.3957 | 0.5876 | 0.7606 |
| | JPEG2000 | 0.0258 | 0.0514 | 0.0938 | 0.1667 | 0.2706 | 0.4106 | 0.6141 | 0.7771 |
| | BTCA | 0.0311 | 0.0559 | 0.1029 | 0.1708 | 0.2675 | 0.3985 | 0.5987 | 0.7849 |
| | HAS | 0.0340 | 0.0576 | 0.1010 | 0.1726 | 0.2848 | 0.4363 | 0.6445 | 0.8081 |
| | QCAO | 0.0489 | 0.0741 | 0.1259 | 0.1760 | 0.2769 | 0.4145 | 0.5949 | 0.7823 |
| | Proposed | 0.0596 | 0.0805 | 0.1267 | 0.1777 | 0.2760 | 0.4136 | 0.5921 | 0.7829 |
| Houston | CCSDS | 0.0152 | 0.0307 | 0.0633 | 0.1595 | 0.2707 | 0.4196 | 0.6161 | 0.7768 |
| | JPEG2000 | 0.0272 | 0.0541 | 0.0949 | 0.1620 | 0.2763 | 0.4322 | 0.6218 | 0.7607 |
| | BTCA | 0.0326 | 0.0561 | 0.0960 | 0.1643 | 0.2749 | 0.4263 | 0.6305 | 0.7984 |
| | HAS | 0.0300 | 0.0568 | 0.1028 | 0.1693 | 0.2847 | 0.4498 | 0.6638 | 0.8262 |
| | QCAO | 0.0652 | 0.0774 | 0.1027 | 0.1685 | 0.2773 | 0.4208 | 0.6259 | 0.7946 |
| | Proposed | 0.0697 | 0.0791 | 0.1039 | 0.1696 | 0.2726 | 0.4176 | 0.6186 | 0.7906 |
| pleiades_portdebouc | CCSDS | 0.0103 | 0.0180 | 0.0462 | 0.1215 | 0.2176 | 0.3599 | 0.5682 | 0.7232 |
| | JPEG2000 | 0.0220 | 0.0448 | 0.0764 | 0.1330 | 0.2242 | 0.3607 | 0.5467 | 0.7204 |
| | BTCA | 0.0301 | 0.0524 | 0.0899 | 0.1468 | 0.2384 | 0.3632 | 0.5496 | 0.7268 |
| | HAS | 0.0297 | 0.0552 | 0.0909 | 0.1475 | 0.2405 | 0.3839 | 0.5824 | 0.7529 |
| | QCAO | 0.0317 | 0.0534 | 0.1074 | 0.1479 | 0.2375 | 0.3652 | 0.5936 | 0.7105 |
| | Proposed | 0.0361 | 0.0567 | 0.1092 | 0.1519 | 0.2437 | 0.3776 | 0.6072 | 0.7299 |

| Algorithm | 1 bpp | 0.5 bpp | 0.25 bpp |
|---|---|---|---|
| CCSDS | 1.78 | 0.93 | 0.41 |
| JPEG2000 | 2.12 | 1.19 | 0.53 |
| BTCA | 0.30 | 0.27 | 0.25 |
| HAS | 0.37 | 0.33 | 0.31 |
| QCAO | 0.28 | 0.25 | 0.23 |
| Proposed | 1.29 | 1.16 | 0.98 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shi, C.; Wang, L.; Zhang, J.; Miao, F.; He, P. Remote Sensing Image Compression Based on Direction Lifting-Based Block Transform with Content-Driven Quadtree Coding Adaptively. Remote Sens. 2018, 10, 999. https://doi.org/10.3390/rs10070999