Efficient SfM for Oblique UAV Images: From Match Pair Selection to Geometrical Verification

Abstract

1. Introduction

2. Methodology

2.1. Match Pair Selection Based on MST-Expansion

2.2. Tiling Strategy for Feature Extraction and Matching

2.3. Geometrical Verification Using HMCC-RANSAC

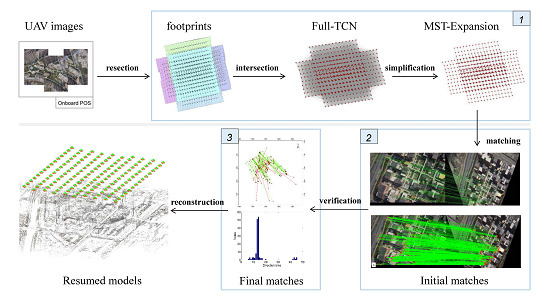

2.4. Integrated Solution for Efficient SfM

- (1) Match graph extraction. Image footprints are first calculated; initial match pairs are then searched based on the overlap criterion and used to form an image topology connection network; finally, this network is simplified by the MST-Expansion algorithm.

- (2) Feature extraction and matching. Features are extracted from each image using the open-source SiftGPU library [22]. To avoid memory overflow on large images, the tiling strategy is used in feature extraction; to avoid the time cost of exhaustive matching, the image pairs selected in the previous step are used to guide feature matching.

- (3) Geometrical verification. First, obvious false matches are detected and removed using the HMCC algorithm; second, rigorous RANSAC-based geometrical verification is used to refine the final matches; finally, matched points corresponding to the same object location are linked to generate tie-points, namely tracks.

- (4) SfM reconstruction. The recovery of camera poses and scene geometry is formulated as a joint minimization problem, in which the sum of errors between the projections of tracks and the corresponding image points is minimized, as presented by Equation (1):

  $$\min_{C_j,\,X_i} \sum_{i} \sum_{j} \rho_{ij} \left\| P(C_j, X_i) - x_{ij} \right\|^{2} \qquad (1)$$

  where $X_i$ and $C_j$ denote a 3D point and a camera, respectively; $P(C_j, X_i)$ is the predicted projection of point $X_i$ on camera $C_j$; $x_{ij}$ is the observed image point; $\|\cdot\|$ denotes the L2-norm; and $\rho_{ij}$ is an indicator function with $\rho_{ij} = 1$ if point $X_i$ is visible in camera $C_j$ and $\rho_{ij} = 0$ otherwise. The problem is solved using the open-source nonlinear optimization library Ceres Solver [36]. Because good initial values of the unknown parameters are essential for reaching the globally optimal solution, an incremental SfM pipeline, similar to that of Snavely et al. [37], is used in this study.

Based on the above four major steps, this study proposes an integrated SfM solution to achieve efficient orientation of oblique UAV images.
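The objective minimized in the SfM reconstruction step can be illustrated with a small numerical sketch. The code below is not the authors' implementation: it assumes a plain pinhole camera without lens distortion, and the names `project`, `reprojection_cost`, and the dictionary keys `R`, `t`, `f` are hypothetical. It only evaluates the visibility-weighted sum of squared reprojection errors for fixed parameters; an actual pipeline would pass such residuals to a nonlinear least-squares solver.

```python
from typing import Dict, List, Tuple

Point3D = Tuple[float, float, float]
Point2D = Tuple[float, float]


def project(camera: Dict, point: Point3D) -> Point2D:
    """Project a 3D point with an assumed pinhole model (no distortion)."""
    R = camera["R"]  # 3x3 rotation, world -> camera
    t = camera["t"]  # translation, world -> camera
    f = camera["f"]  # focal length in pixels
    # Transform the point into the camera frame: Xc = R * X + t.
    xc = [sum(R[r][k] * point[k] for k in range(3)) + t[r] for r in range(3)]
    # Perspective division, then scaling by the focal length.
    return (f * xc[0] / xc[2], f * xc[1] / xc[2])


def reprojection_cost(
    cameras: List[Dict],
    points: List[Point3D],
    observations: Dict[Tuple[int, int], Point2D],
) -> float:
    """Sum of squared reprojection errors over visible (point, camera) pairs.

    observations maps (point_index, camera_index) to the observed image
    point; a missing key means the point is not visible in that camera,
    which plays the role of the indicator function being zero.
    """
    total = 0.0
    for (i, j), (u_obs, v_obs) in observations.items():
        u, v = project(cameras[j], points[i])
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return total


# Toy data (illustrative values only).
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
cameras = [
    {"R": identity, "t": [0.0, 0.0, 0.0], "f": 100.0},
    {"R": identity, "t": [-1.0, 0.0, 0.0], "f": 100.0},
]
points = [(0.0, 0.0, 10.0), (1.0, 2.0, 10.0)]
observations = {
    (0, 0): (0.0, 0.0),    # exact observation
    (0, 1): (-10.0, 1.0),  # 1 pixel off in v
    (1, 0): (12.0, 20.0),  # 2 pixels off in u
    # point 1 is not visible in camera 1, so it has no entry
}
cost = reprojection_cost(cameras, points, observations)
print(cost)  # 5.0
```

Storing only the visible observations keeps the loop proportional to the number of tie-point measurements rather than to the full point-by-camera grid, which is the usual reason for a sparse representation here.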

3. Experimental Results

3.1. Datasets

3.2. Performance Evaluation of Individual Steps

3.3. Comparison with Other Software Packages

3.3.1. Efficiency

3.3.2. Completeness

3.3.3. Accuracy

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Aicardi, I.; Chiabrando, F.; Grasso, N.; Lingua, A.M.; Noardo, F.; Spanò, A. UAV photogrammetry with oblique images: First analysis on data acquisition and processing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 835–842.

2. Jiang, S.; Jiang, W.; Huang, W.; Yang, L. UAV-Based Oblique Photogrammetry for Outdoor Data Acquisition and Offsite Visual Inspection of Transmission Line. Remote Sens. 2017, 9, 278.

3. Qin, R. An Object-Based Hierarchical Method for Change Detection Using Unmanned Aerial Vehicle Images. Remote Sens. 2014, 6, 7911–7932.

4. Lin, Y.; Jiang, M.; Yao, Y.; Zhang, L.; Lin, J. Use of UAV oblique imaging for the detection of individual trees in residential environments. Urban For. Urban Green. 2015, 14, 404–412.

5. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

6. Westoby, M.; Brasington, J.; Glasser, N.; Hambrey, M.; Reynolds, J. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

7. Zhang, R.; Schneider, D.; Strauß, B. Generation and Comparison of TLS and SfM based 3D Models of Solid Shapes in Hydromechanical Research. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B5, 925–929.

8. Ippoliti, E.; Meschini, A.; Sicuranza, F. Structure from motion systems for architectural heritage. A survey of the internal loggia courtyard of Palazzo Dei Capitani, Ascoli Piceno, Italy. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 53.

9. Chidburee, P.; Mills, J.; Miller, P.; Fieber, K. Towards a low-cost real-time photogrammetric landslide monitoring system utilising mobile and cloud computing technology. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 791–797.

10. Hartmann, W.; Havlena, M.; Schindler, K. Recent developments in large-scale tie-point matching. ISPRS J. Photogramm. Remote Sens. 2016, 115, 47–62.

11. Heinly, J.; Schonberger, J.L.; Dunn, E.; Frahm, J.M. Reconstructing the world* in six days *(as captured by the Yahoo 100 million image dataset). In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head Island, SC, USA, 7–12 June 2015; pp. 3287–3295.

12. Jiang, S.; Jiang, W. On-Board GNSS/IMU Assisted Feature Extraction and Matching for Oblique UAV Images. Remote Sens. 2017, 9, 813.

13. Irschara, A.; Hoppe, C.; Bischof, H.; Kluckner, S. Efficient structure from motion with weak position and orientation priors. In Proceedings of the 2011 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Colorado Springs, CO, USA, 20–25 June 2011; pp. 21–28.

14. Rupnik, E.; Nex, F.; Remondino, F. Oblique Multi-Camera Systems - Orientation and Dense Matching Issues. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-3/W1, 107–114.

15. Xu, Z.; Wu, L.; Chen, S.; Wang, R.; Li, F.; Wang, Q. Extraction of Image Topological Graph for Recovering the Scene Geometry from UAV Collections. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-4, 319–323.

16. Xu, Z.; Wu, L.; Gerke, M.; Wang, R.; Yang, H. Skeletal camera network embedded structure-from-motion for 3D scene reconstruction from UAV images. ISPRS J. Photogramm. Remote Sens. 2016, 121, 113–127.

17. Jiang, S.; Jiang, W. Efficient structure from motion for oblique UAV images based on maximal spanning tree expansion. ISPRS J. Photogramm. Remote Sens. 2017, 132, 140–161.

18. Harris, C.; Stephens, M. A combined corner and edge detector. In Alvey Vision Conference; Plessey Research Roke Manor: Manchester, UK, 1988; Volume 15, pp. 10–5244.

19. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

20. Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; p. II.

21. Morel, J.M.; Yu, G. ASIFT: A New Framework for Fully Affine Invariant Image Comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

22. Wu, C. SiftGPU: A GPU Implementation of David Lowe’s Scale Invariant Feature Transform (SIFT). Available online: http://cs.unc.edu/~ccwu/siftgpu (accessed on 19 June 2017).

23. Hess, R. An open-source SIFT library. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 1493–1496.

24. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

25. Chum, O.; Matas, J. Optimal randomized RANSAC. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1472–1482.

26. Hough, P.V.C. Method and Means for Recognizing Complex Patterns. U.S. Patent 3,069,654, 18 December 1962.

27. Li, X.; Larson, M.; Hanjalic, A. Pairwise geometric matching for large-scale object retrieval. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 15–17 June 1993; pp. 5153–5161.

28. Lu, L.; Zhang, Y.; Tao, P. Geometrical Consistency Voting Strategy for Outlier Detection in Image Matching. Photogramm. Eng. Remote Sens. 2016, 82, 559–570.

29. Tsai, C.H.; Lin, Y.C. An accelerated image matching technique for UAV orthoimage registration. ISPRS J. Photogramm. Remote Sens. 2017, 128, 130–145.

30. Zhuo, X.; Koch, T.; Kurz, F.; Fraundorfer, F.; Reinartz, P. Automatic UAV Image Geo-Registration by Matching UAV Images to Georeferenced Image Data. Remote Sens. 2017, 9, 376.

31. Jiang, S.; Jiang, W. Hierarchical Motion Consistency Constraint for Efficient Geometrical Verification in UAV Image Matching. ISPRS J. Photogramm. Remote Sens. 2018, 142, 222–242.

32. Rodriguez, E.; Morris, C.S.; Belz, J.E. A global assessment of the SRTM performance. Photogramm. Eng. Remote Sens. 2006, 72, 249–260.

33. Graham, R.L.; Hell, P. On the history of the minimum spanning tree problem. Ann. Hist. Comput. 1985, 7, 43–57.

34. Kruskal, J.B. On the shortest spanning subtree of a graph and the traveling salesman problem. Proc. Am. Math. Soc. 1956, 7, 48–50.

35. Hu, H.; Zhu, Q.; Du, Z.; Zhang, Y.; Ding, Y. Reliable spatial relationship constrained feature point matching of oblique aerial images. Photogramm. Eng. Remote Sens. 2015, 81, 49–58.

36. Agarwal, S.; Snavely, N.; Seitz, S.; Szeliski, R. Bundle adjustment in the large. In European Conference on Computer Vision; Springer: Berlin, Germany, 2010; pp. 29–42.

37. Snavely, N.; Seitz, S.M.; Szeliski, R. Photo tourism: Exploring photo collections in 3D. In ACM Transactions on Graphics (TOG); ACM: Boston, MA, USA, 2006; Volume 25, pp. 835–846.

38. Chum, O.; Matas, J.; Kittler, J. Locally optimized RANSAC. In Joint Pattern Recognition Symposium; Springer: Berlin/Heidelberg, Germany, 2003; pp. 236–243.

39. Rupnik, E.; Daakir, M.; Deseilligny, M.P. MicMac—A free, open-source solution for photogrammetry. Open Geosp. Data Softw. Stand. 2017, 2, 14.

40. Agisoft PhotoScan. Available online: http://www.agisoft.com/ (accessed on 19 March 2018).

41. Agisoft PhotoScan Manual. Available online: http://www.agisoft.com/downloads/user-manuals/ (accessed on 19 March 2018).

42. MicMac. Available online: http://www.tapenade.gamsau.archi.fr/TAPEnADe/Tools.html (accessed on 19 March 2018).

43. Vedaldi, A.; Fulkerson, B. VLFeat: An Open and Portable Library of Computer Vision Algorithms. 2018. Available online: http://www.vlfeat.org/ (accessed on 19 March 2018).

44. Arya, S.; Mount, D.M.; Netanyahu, N.S.; Silverman, R.; Wu, A.Y. An optimal algorithm for approximate nearest neighbor searching in fixed dimensions. J. ACM 1998, 45, 891–923.

| Item Name | Value or Description |
|---|---|
| Inputs | Flight control data, camera install angles, mean altitude |
| Outputs | Image match pairs |
| Parameters | Overlap ratio |
| | Weight ratio |
| | Eigenvalue ratio |
| | Expansion angle |
| | Expansion threshold |

| Item Name | Value or Description |
|---|---|
| Inputs | Two UAV images |
| Outputs | Feature matches |
| Parameters | Scale size |
| | Block size |
| | Block expansion size |

| Item Name | Value or Description |
|---|---|
| Inputs | Initial matches, rough POS, mean altitude |
| Outputs | Inlier matches |
| Parameters | K nearest neighbors (KNN) |
| | Z-score test |
| | Neighbor ratio for step 1 |
| | Neighbor ratio for step 2 |

| Item Name | Dataset 1 | Dataset 2 | Dataset 3 | Dataset 4 |
|---|---|---|---|---|
| UAV type | multi-rotor | multi-rotor | multi-rotor | multi-rotor |
| Flight height (m) | 165 | 120 | 175 | 300 |
| Camera model | Sony RX1R | Sony RX1R | Sony NEX-7 | Sony ILCE-7R |
| Number of cameras | 1 | 2 | 5 | 1 |
| Focal length (mm) | 35 | 35 | nadir: 16; oblique: 35 | 35 |
| Camera mount angle (°) | front: 25, −15 | front: 25, −15; back: 0, −25 | nadir: 0; oblique: 45/−45 | nadir: 0; oblique: 45/−45 |
| Number of images | 320 | 390 | 750 | 157 |
| Image size (pixel × pixel) | 6000 × 4000 | 6000 × 4000 | 6000 × 4000 | 7360 × 4912 |
| GSD (cm) | 5.05 | 3.67 | 4.27 | 4.20 |

| Dataset | LO-RANSAC | GC-RANSAC Filter | GC-RANSAC Verify | GC-RANSAC Sum | HMCC-RANSAC Filter | HMCC-RANSAC Verify | HMCC-RANSAC Sum |
|---|---|---|---|---|---|---|---|
| 1 | 24.3 | 15,594.6 | 15.5 | 15,610.1 | 14.8 | 15.6 | 30.4 |
| 2 | 11.1 | 9670.4 | 10.2 | 9680.6 | 23.9 | 10.7 | 34.6 |
| 3 | 125.7 | 7828.1 | 74.6 | 7902.7 | 14.6 | 61.8 | 76.4 |
| 4 | 47.9 | 6262.5 | 30.6 | 6293.1 | 5.7 | 22.4 | 28.1 |

| Item Name | MicMac | PhotoScan |
|---|---|---|
| Use POS data | yes | yes |
| Use GPU acceleration | no (8 CPU cores) | yes (1 GPU + 8 CPU cores) |
| Use tiling strategy | yes (SIFT++ library) | yes |
| Image pair selection | POS aided | POS aided + multi-scale |
| Image size | half | original |
| Key point limit | 0 | 80,000 |
| Tie point limit | 0 | 0 |

| Method | Dataset 1 | Dataset 2 | Dataset 3 | Dataset 4 |
|---|---|---|---|---|
| MicMac | 722.12 | 928.05 | 2920.76 | 892.04 |
| PhotoScan | 65.24 | 102.03 | 146.04 | 33.52 |
| Ours | 21.01 | 21.56 | 47.98 | 9.15 |

| Dataset | MicMac Images | MicMac Points | PhotoScan Images | PhotoScan Points-init | PhotoScan Points-opt | Ours Images | Ours Points |
|---|---|---|---|---|---|---|---|
| 1 | 320/320 | 878,469 | 320/320 | 1,648,706 | 711,808 | 320/320 | 689,035 |
| 2 | 390/390 | 853,545 | 390/390 | 2,289,047 | 785,513 | 390/390 | 675,811 |
| 3 | 750/750 | 913,854 | 750/750 | 2,314,535 | 905,815 | 750/750 | 848,129 |
| 4 | 157/157 | 376,821 | 157/157 | 832,850 | 393,674 | 157/157 | 333,034 |

| Dataset | MicMac | PhotoScan Initial | PhotoScan Optimization | Ours |
|---|---|---|---|---|
| 1 | 0.994 | 0.928 | 0.404 | 0.378 |
| 2 | 0.768 | 0.728 | 0.242 | 0.295 |
| 3 | 1.484 | 1.350 | 0.562 | 0.477 |
| 4 | 0.876 | 0.775 | 0.319 | 0.291 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jiang, S.; Jiang, W. Efficient SfM for Oblique UAV Images: From Match Pair Selection to Geometrical Verification. Remote Sens. 2018, 10, 1246. https://0-doi-org.brum.beds.ac.uk/10.3390/rs10081246
