1. Introduction

For aerial images, semantic labeling means assigning a category label to each pixel, a task known as semantic segmentation in the computer vision field. In essence, it is a multi-category classification problem that requires classifying every pixel in the aerial image [1, 2, 3]. This makes it more complicated than binary classification problems such as building extraction and road extraction. Moreover, unlike the digital photos widely used in computer vision, aerial images contain objects with large scale variations that compose complex scenes, especially in urban areas. Such scenes consist of many kinds of objects, such as buildings, vegetation, and trees. Buildings vary in size, cars are tiny, and trees are interwoven with vegetation. These properties make the semantic labeling task more difficult. Despite its difficulty, it is a necessary task in aerial image interpretation and forms the basis for subsequent applications, including land-use analysis, environmental protection, urban change detection, and urban planning [4, 5, 6, 7, 8].

Researchers have proposed numerous methods for this task, which can be divided into two types: traditional methods and Convolutional Neural Network (CNN) methods.

Traditional methods mainly consist of two independent parts, i.e., feature extraction and classification. Certain types of features are extracted from a small patch of the aerial image and then sent to a classifier to determine the patch's category. Features are generally constructed manually, including Scale Invariant Feature Transform (SIFT) [9], Histogram of Oriented Gradients (HOG) [10], and Features from Accelerated Segment Test (FAST) [11]. These hand-crafted features each work well under specific conditions but cannot handle general situations well. Researchers need to choose suitable features carefully for their specific situation; otherwise they have to design a custom feature. The classifiers used here are mostly standard machine learning algorithms; K-means [12], support vector machines [13], and random forests [14] are widely adopted. However, high-resolution aerial images, especially of urban areas, contain complex scenes, and objects in different categories present similar appearances. Consequently, these traditional methods do not achieve satisfactory results for this task.

In recent years, CNNs have shown dominant performance in the image processing field. They can construct features automatically from massive image data and perform feature extraction and classification simultaneously, which is called an end-to-end method. They perform very well in the image classification task [15]. Many classic networks for image classification have been proposed, such as VGG [16], ResNet [17], and DenseNet [18].

Owing to their strong recognition ability and feature learning capability, CNNs have been introduced to the semantic labeling field. Many CNN models based on image patch classification have been designed for this task [19, 20, 21, 22, 23]. The general procedure is to crop a small patch from the original large image with a sliding window and then classify this patch with a CNN. This approach improves on traditional methods owing to its superior feature representation ability, but it loses structural information due to the regular patch partition. In addition, it incurs a large computational cost because of the enormous number of iterations the sliding window requires [24]. As an improvement, researchers use a structural segmentation algorithm, such as superpixel segmentation, to generate patches with irregular shapes and retain more structural information [25, 26]. They then employ CNN models to extract features and classify the patches. However, this approach still relies on segmentation algorithms that are decoupled from the CNN models, and thus risks committing to premature decisions.
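To give a sense of the cost of the sliding-window procedure, the following minimal sketch counts how many patches must be classified for a single image. The function name and the image, patch, and stride sizes are illustrative assumptions, not values taken from the cited works.

```python
def count_sliding_windows(height, width, patch, stride):
    """Number of patch positions a sliding window visits on a height x width image."""
    if patch > height or patch > width:
        return 0
    rows = (height - patch) // stride + 1
    cols = (width - patch) // stride + 1
    return rows * cols


# Hypothetical setting: a 6000 x 6000 image scanned with 65 x 65 patches.
dense = count_sliding_windows(6000, 6000, patch=65, stride=1)    # one patch per step of 1 pixel
sparse = count_sliding_windows(6000, 6000, patch=65, stride=32)  # coarser scan, fewer patches

print(dense, sparse)
```

With a stride of one pixel, every patch requires a separate forward pass through the classifier, so the number of passes grows with the image area; a larger stride reduces the count but labels the image more coarsely.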

To overcome this difficulty, Fully Convolutional Networks (FCN) [27] have been proposed. FCN removes the fully connected layers in VGG and outputs the probability map directly. It then upsamples the probability map to the same size as the original input image. In a sense, FCN discards the segmentation step and generates the semantic labeling outcome as a natural result of pixel-level classification. As a result, FCN can deal with irregular boundaries and produce more coherent results than patch-based classification methods.

Although FCN achieves much better performance than other models [20, 21], two limitations remain. First, consecutive downsampling operations greatly reduce the spatial resolution of the final feature map. A great deal of information is therefore lost, which makes it difficult to recover details from the small, coarse feature map. Consequently, the semantic labeling result misses many details and appears blurry locally. Second, FCN uses the features extracted by the backbone network directly, without exploiting them efficiently. This makes FCN weak at capturing multi-scale features and recognizing complicated scenes, so it cannot recognize objects with multiple scales well. This problem is more severe in aerial images because of their large scale variations, complex scenes, and fine-structured objects.
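The resolution loss can be made concrete with a small calculation. The sketch below assumes a backbone with five stride-2 downsampling stages (as in VGG-16, on which FCN is built) and a hypothetical 512 × 512 input; the function name is ours and serves only as an illustration.

```python
def downsampled_size(input_size, num_stages, factor=2):
    """Side length of a feature map after repeated downsampling by `factor`."""
    size = input_size
    for _ in range(num_stages):
        size //= factor
    return size


input_size = 512                                          # assumed input side length
coarse_size = downsampled_size(input_size, num_stages=5)  # side length of the final feature map
pixels_per_cell = (input_size // coarse_size) ** 2        # input pixels summarized by one cell

print(coarse_size, pixels_per_cell)
```

Under these assumptions, each cell of the final feature map summarizes a 32 × 32 pixel region of the input, so structures much smaller than that region are hard to localize from the coarse map alone.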

To remedy the first problem, researchers either generate feature maps with higher resolution or make more efficient use of shallow-layer features. For instance, DeconvNet [28] uses consecutive unpooling and deconvolution layers to restore feature map resolution step by step; in effect, it adopts an encoder–decoder architecture. SegNet [29] records the pooling indices in the encoder and then uses them to perform non-linear upsampling in the decoder, which yields more accurate location information and eliminates the need to learn the upsampling. U-Net [30] proposes a similar encoder–decoder model and introduces low-level features in the decoder stage to improve the final result. FRRN [31] designs a two-stream network: one stream carries information at the full image resolution to keep precise boundaries, and the other goes through consecutive pooling operations to obtain robust features for recognition. RefineNet [32] devises a multi-path refinement network that exploits information along the downsampling process to make high-resolution predictions with long-range residual connections.
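The idea of reusing pooling indices for upsampling can be illustrated in one dimension. The following is a minimal sketch, not SegNet's implementation: the function names are hypothetical, the window size of 2 is an assumption, and real networks apply the same idea to 2D feature maps.

```python
def max_pool_with_indices(values, window=2):
    """Non-overlapping max pooling that also records where each maximum came from."""
    pooled, indices = [], []
    for start in range(0, len(values) - window + 1, window):
        block = values[start:start + window]
        offset = max(range(window), key=lambda i: block[i])
        pooled.append(block[offset])
        indices.append(start + offset)
    return pooled, indices


def max_unpool(pooled, indices, length):
    """Place each pooled value back at its recorded position; all other positions are zero."""
    restored = [0] * length
    for value, index in zip(pooled, indices):
        restored[index] = value
    return restored


signal = [1, 5, 2, 0, 7, 3]
pooled, indices = max_pool_with_indices(signal)       # encoder side
restored = max_unpool(pooled, indices, len(signal))   # decoder side

print(pooled, indices, restored)
```

The unpooled signal is sparse, but each maximum returns to its original location without any learned upsampling weights, which is the property the paragraph above attributes to the use of pooling indices.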

For the second problem, researchers try to exploit the features extracted by the CNN more extensively. PSPNet [33] exploits global context information through different-region-based context aggregation with spatial pyramid pooling. DeepLab [34, 35] uses parallel dilated convolutions to aggregate multi-scale features and robustly segment objects at multiple scales. GCN [36] validates the effectiveness of large convolution kernels and applies a global convolution operation to capture context information. EncNet [37] introduces a context encoding module, which captures the semantic context of scenes and selectively highlights class-dependent feature maps.
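Dilated convolution enlarges the receptive field without adding weights: a kernel of size k with dilation rate d covers (k - 1)d + 1 input positions. The sketch below applies the same 3-tap kernel at several dilation rates in parallel, in one dimension. It is a simplified illustration of parallel dilated branches, not DeepLab's implementation; the function names, the kernel, and the rates (1, 2, 4) are assumptions chosen for readability.

```python
def receptive_field(kernel_size, dilation):
    """Number of input positions spanned by one dilated kernel."""
    return (kernel_size - 1) * dilation + 1


def dilated_conv1d(signal, kernel, dilation):
    """Same-size 1D correlation with zero padding; assumes an odd-length kernel."""
    half = (len(kernel) // 2) * dilation
    padded = [0] * half + list(signal) + [0] * half
    return [
        sum(w * padded[i + j * dilation] for j, w in enumerate(kernel))
        for i in range(len(signal))
    ]


impulse = [0, 0, 0, 0, 1, 0, 0, 0, 0]   # single active position in the middle
kernel = [1, 1, 1]                      # stand-in for learned weights

# Parallel branches: same kernel, different dilation rates (assumed values).
branches = {d: dilated_conv1d(impulse, kernel, d) for d in (1, 2, 4)}

for d, response in branches.items():
    print(d, receptive_field(len(kernel), d), response)
```

Each branch responds to the same input position from a different distance, so stacking the branch outputs gives every location a view of its surroundings at several scales.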

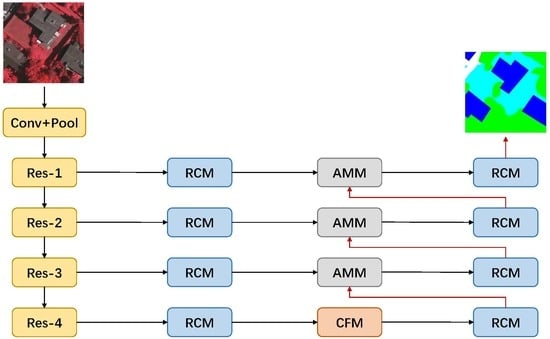

In this paper, we introduce a novel end-to-end network for semantic labeling in aerial images that handles the problems mentioned above efficiently. It is an encoder–decoder-like architecture with efficient context information aggregation and attention-based multi-level feature fusion. Specifically, we design a Context Fuse Module (CFM), which is composed of parallel convolutional layers with kernels of different sizes and a global pooling branch. The former aggregate context information with multiple receptive fields. The latter introduces global information, which has proved effective in recent works [33, 35]. We also propose an Attention Mix Module (AMM), which utilizes a channel-wise attention mechanism to combine multi-level features and selectively emphasize more discriminative features. We further employ a Residual Convolutional Module (RCM) to refine features at all feature levels. Based on these modules, we construct a new end-to-end network for semantic labeling in aerial images. We evaluate the proposed network on the ISPRS Vaihingen and Potsdam datasets. Experimental results demonstrate that our network outperforms other state-of-the-art CNN-based models and top methods on the benchmark with only raw image data.
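The structure of the Context Fuse Module, parallel convolutions with different kernel sizes plus a global pooling branch, can be pictured with a one-dimensional toy example. This is only a sketch under stated assumptions and not the network's actual implementation: the function names are hypothetical, the kernel sizes (1, 3, 5) are chosen for illustration, and fixed averaging kernels stand in for learned weights.

```python
def conv1d_same(signal, kernel):
    """Same-size 1D correlation with zero padding; assumes an odd-length kernel."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [
        sum(w * padded[i + j] for j, w in enumerate(kernel))
        for i in range(len(signal))
    ]


def context_fuse_1d(signal, kernel_sizes=(1, 3, 5)):
    """Return one output per parallel branch, plus a global pooling branch."""
    branches = []
    for size in kernel_sizes:
        kernel = [1.0 / size] * size            # averaging kernel, stand-in for learned weights
        branches.append(conv1d_same(signal, kernel))
    global_mean = sum(signal) / len(signal)     # global average pooling
    branches.append([global_mean] * len(signal))  # broadcast back to every position
    return branches


signal = [0.0, 0.0, 15.0, 0.0, 0.0]
for branch in context_fuse_1d(signal):
    print(branch)
```

Small kernels keep the response localized, larger kernels spread it over a wider neighborhood, and the global branch gives every position the same image-level summary; in a real module the branch outputs would be combined by further learned layers.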

In summary, our contributions are:

We design a Context Fuse Module to exploit context information extensively. It is composed of parallel convolutional layers with kernels of different sizes, which aggregate context information with multiple receptive fields, and a global pooling branch, which introduces global information.

We propose an Attention Mix Module, which utilizes a channel-wise attention mechanism to combine multi-level features and selectively emphasize more discriminative features for recognition. We further employ a Residual Convolutional Module to refine features at all feature levels.

Based on these modules, we construct a new end-to-end network for semantic labeling in aerial images. We evaluate the proposed network on the ISPRS Vaihingen and Potsdam datasets. Experimental results demonstrate that our network outperforms other state-of-the-art CNN-based models and top methods on the benchmark with only raw image data, without using digital surface model information.
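Channel-wise attention for combining multi-level features, as named in the second contribution, can be sketched as follows. This is a generic illustration and not the Attention Mix Module itself: it assumes the gating takes the common form of global average pooling followed by a linear map and a sigmoid, that both feature levels already share the same spatial size, and that `weights` and `biases` are learned parameters (set by hand here). All function names are hypothetical.

```python
import math


def global_average(channel):
    """Mean of a 2D channel given as a list of rows."""
    values = [v for row in channel for v in row]
    return sum(values) / len(values)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def attention_mix(low_level, high_level, weights, biases):
    """Concatenate two feature levels and rescale each channel by a gate in (0, 1)."""
    stacked = low_level + high_level                    # channel-wise concatenation
    descriptor = [global_average(c) for c in stacked]   # one scalar per channel
    gates = [
        sigmoid(sum(w * d for w, d in zip(row, descriptor)) + b)
        for row, b in zip(weights, biases)
    ]
    mixed = [
        [[gate * v for v in row] for row in channel]
        for gate, channel in zip(gates, stacked)
    ]
    return mixed, gates


# Toy input: one low-level and one high-level channel, each 2 x 2.
low = [[[1.0, 2.0], [3.0, 4.0]]]
high = [[[0.0, 0.0], [0.0, 8.0]]]
# Hand-set parameters; all zeros make every gate equal to sigmoid(0) = 0.5.
weights = [[0.0, 0.0], [0.0, 0.0]]
biases = [0.0, 0.0]

mixed, gates = attention_mix(low, high, weights, biases)
print(gates)
print(mixed)
```

With trained parameters, the gates would differ per channel, so channels judged more discriminative for the current input are passed on at nearly full strength while others are suppressed.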