Geographically Weighted Machine Learning and Downscaling for High-Resolution Spatiotemporal Estimations of Wind Speed

Abstract

1. Introduction

2. Study Region and Materials

2.1. Study Region

2.2. Measurement Data

2.3. Covariates

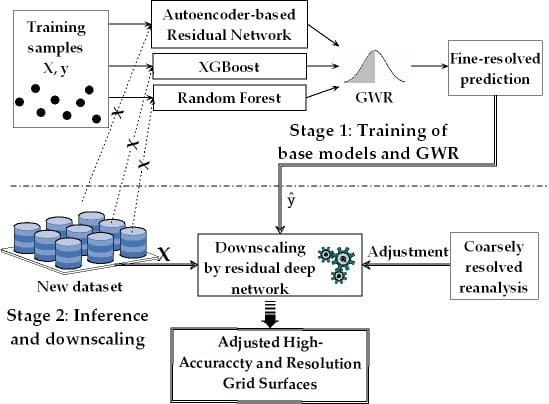

3. Methods

3.1. Stage 1: Geographically Weighted Learning

3.1.1. Base Learners

3.1.2. Geographically Weighted Learning

3.2. Stage 2: Downscaling with a Deep Residual Network

3.3. Optimization of Hyperparameters and Validation

4. Results

4.1. Data Summary and Preprocessing

4.2. Training of the Models in Stage 1

4.3. Predictions and Downscaling in Stage 2

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Item | WS | WSI | O3I | PBLH | TEMI | ELE |
|---|---|---|---|---|---|---|
| Unit | m/s | m/s | DU | m | °C | m |
| Mean | 2.1 | 2.8 | 318.6 | 683.4 | 12.9 | 790.2 |
| Median | 1.8 | 2.4 | 311.9 | 612.3 | 13.3 | 400.0 |
| IQR | 1.4 | 1.9 | 52.3 | 531.0 | 16.6 | 1045.5 |
| Range (min, max) | 0.0, 23.2 | 0.3, 19.2 | 219.4, 484.4 | 55.7, 3865.8 | −18.1, 38.4 | 1.8, 4800.0 |

| Base Model | Training R² | Training Adjusted R² | Training RMSE | Training MAE | Independent Test R² | Independent Test Adjusted R² | Independent Test RMSE | Independent Test MAE |
|---|---|---|---|---|---|---|---|---|
| ARN | 0.68 | 0.68 | 0.76 | 0.49 | 0.66 | 0.66 | 0.72 | 0.51 |
| XGBoost | 0.76 | 0.76 | 0.60 | 0.46 | 0.67 | 0.67 | 0.71 | 0.51 |
| RF | 0.69 | 0.69 | 0.76 | 0.49 | 0.63 | 0.63 | 0.77 | 0.53 |
| GAM | 0.43 | 0.43 | 0.95 | 0.67 | 0.42 | 0.42 | 0.96 | 0.67 |
| FFNN | 0.58 | 0.58 | 0.83 | 0.57 | 0.58 | 0.58 | 0.82 | 0.57 |
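The four scores reported for each base model follow standard definitions. The sketch below is a minimal illustration of how R², adjusted R², RMSE, and MAE are conventionally computed. It is not taken from the article's code: the function name `regression_metrics`, the argument `n_features` (number of covariates), and the toy values are assumptions made for the example only.

```python
import math


def regression_metrics(y_true, y_pred, n_features):
    """Return R2, adjusted R2, RMSE and MAE using their textbook definitions.

    Hypothetical helper for illustration; n_features is the number of
    covariates used by the model (an assumed input, not from the article).
    """
    n = len(y_true)
    mean_y = sum(y_true) / n
    # Residual and total sums of squares
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    # Adjusted R2 penalises R2 for the number of covariates
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - n_features - 1)
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    return {"r2": r2, "adj_r2": adj_r2, "rmse": rmse, "mae": mae}


# Toy wind-speed values in m/s (made up for the example)
observed = [1.0, 2.0, 3.0, 4.0, 5.0]
predicted = [1.5, 2.0, 2.5, 4.0, 5.5]
m = regression_metrics(observed, predicted, n_features=2)
print(m)
```

When the number of samples is large relative to the number of covariates, the factor (n − 1)/(n − p − 1) approaches 1 and adjusted R² nearly coincides with R², which is consistent with the identical R² and adjusted R² columns in the table.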

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, L. Geographically Weighted Machine Learning and Downscaling for High-Resolution Spatiotemporal Estimations of Wind Speed. Remote Sens. 2019, 11, 1378. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11111378