1. Introduction

We are living in the information era, surrounded by a variety of geospatial data from diverse sources. To obtain useful and reliable information for decision-making, the data must be properly acquired, processed, and analysed. This is a challenging task, particularly in the field of 3D geospatial data modelling [1,2], where the current software support is mostly inadequate. For this purpose, spatial extract, transform, load (ETL) solutions can be used. In computing, ETL has been known since the 1970s, and is often used in data warehousing, i.e., central repositories of integrated data from one or more disparate sources. It is a data integration concept that refers to the three steps of extract, transform, and load, and is used to integrate data from multiple sources [3]. For processing and integrating various geospatial data, spatial ETL solutions have been developed in the last few decades. A spatial ETL solution extracts geospatial data from homogeneous or heterogeneous sources, transforms (processes) the data into a proper storage format/structure, and, finally, loads the data into a target database, such as a topographic database. It is a virtual environment with data manipulation tools that enable better spatial and non-spatial data management. In addition to data transformation tools, spatial ETL solutions also contain various geoprocessing algorithms to process and analyse spatial and non-spatial data, e.g., geometry validation and repair, topology checks, or creating and merging attributes [4].

This research addresses the complex workflow of data-driven 3D building modelling using point clouds as a data source. The research was motivated by the current lack of software support for the 3D geospatial data manipulation [3,4] that is needed for this purpose. The main objective of this research has been to develop a workflow for 3D building modelling, supported by the selected spatial ETL software and based on unmanned aerial vehicle (UAV) data. We hypothesise that the ETL environment and tools enable us to develop a workflow for 3D building modelling based on a geospatial point cloud, where available algorithms for data processing are used. We focus on a data-driven modelling approach, where we use a UAV photogrammetric point cloud to reconstruct a 3D building model in the form of a solid geometry. The surface of a solid is defined by a set of closed polygons with a known orientation; semantic attributes are added to geometric features according to their role in the building model. Our research aimed to cover the entire workflow, from the processing of the photogrammetric point cloud to the final 3D semantic building model in a standardised format consistent with the OGC CityGML standard [5], Level of Detail 2 (LOD2). The proposed modelling workflow has been tested on selected buildings in a Slovenian semi-urban area.

The paper is structured as follows: this short introduction is followed by Section 2, which provides an overview of related research and publications. Section 3 describes the basic concepts of spatial ETL, data used for 3D building modelling, and the methodology utilised in the proposed workflow for 3D building modelling and quality assessment. In Section 4, the results of the 3D building modelling are presented, with the developed workflow and quality assessment. In Section 5, the main findings and critical evaluation of the proposed workflow, with respect to previous publications, are discussed, while Section 6 provides the main conclusions.

5. Discussion

The previously published studies on data-driven 3D building modelling from the point cloud were mainly designed for LiDAR data [11,33]. In our case, we decided to use a UAV photogrammetric point cloud, because it has not yet been widely used for 3D building modelling. Although a laser scanning point cloud and a photogrammetric point cloud seem alike, each of them has its own characteristics, which must be considered when using them as a data source for 3D building modelling.

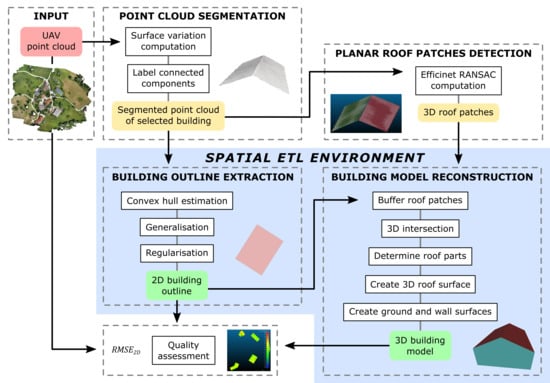

The developed 3D building modelling workflow covers the complete process, from the processing of the input photogrammetric point cloud to the final reconstruction of a 3D building model in a semantic vector format, in accordance with the OGC CityGML standard for LOD2 [5]. In general, the workflow consists of four main steps divided into three parts: point cloud segmentation and planar roof patch detection (Part 1), roof outline extraction (Part 2), and 3D building model reconstruction (Part 3). Part 2 and Part 3 were performed completely within the ETL environment, which is a novelty in data-driven 3D building modelling based on a point cloud. The spatial ETL solution has been used to address the challenges related to the manipulation of 3D geospatial data, which is needed for this purpose.

In the proposed 3D building modelling workflow, we start with point cloud segmentation. The first step is the determination of the geometric properties of each point in the point cloud, which are often used in procedures for point cloud segmentation and classification [46,51,52]. The surface variation, a simple geometric feature, was computed for each point in the cloud with respect to a fixed neighbourhood. This enables the extraction of planar regions from the scene by retaining only points with a lower surface variation. The advantage of our approach is its simplicity, which made it possible to obtain results in a fast and efficient manner. Since our aim was only to find points of planar regions, the surface variation was a proper parameter to find the groups of points that correspond to the building roofs. The first drawback of this approach is that it uses a fixed neighbourhood to compute the surface variation and thus requires knowledge of the scene and of the point cloud density to define a proper neighbourhood size. In our case, the density of the point cloud was approximately uniform. The size of the fixed neighbourhood was determined following the criterion that at least 95% of all points had at least 15 points in their neighbourhood. When the point cloud density varies within the input point cloud, the neighbourhood determination could be improved by applying an adaptive neighbourhood size, as proposed in [53]. The second disadvantage of the approach is that, after excluding the points with a higher surface variation from the cloud, we may lose some points on the edges of planar regions, which can further affect modelling accuracy. In our case, the threshold value of surface variation for the selected study area was set empirically based on the characteristics of the scene. Compared to the findings in [53], we used a slightly higher threshold, aiming not to lose the points on the roof ridge and roof edges that are necessary for further modelling. For better robustness of the point cloud segmentation and its applicability to other case studies, additional geometric features could be computed for each point in the point cloud, based on the eigenvalues of the covariance matrix within the point’s local neighbourhood. These features and their combinations can be used in advanced algorithms for point cloud segmentation [51,54]. Additionally, points in the photogrammetric point cloud contain essential information about colour, which can be added in the point cloud segmentation, as suggested in [55].
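The surface variation step described above can be illustrated with a minimal sketch. It assumes the common definition of surface variation as the smallest eigenvalue of the local covariance matrix divided by the sum of all three eigenvalues; the exact formulation, neighbourhood radius, and threshold used in our processing are not reproduced here. The function names, the brute-force neighbour search, the threshold of 0.01, and the choice to count neighbours excluding the point itself are illustrative assumptions.

```python
import math

def smallest_eigenvalue_sym3(a):
    """Smallest eigenvalue of a symmetric 3x3 matrix (closed-form trigonometric solution)."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if p1 == 0.0:  # matrix is already diagonal
        return min(a[0][0], a[1][1], a[2][2])
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    p2 = sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1
    p = math.sqrt(p2 / 6.0)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    r = max(-1.0, min(1.0, det_b / 2.0))  # guard against rounding outside [-1, 1]
    phi = math.acos(r) / 3.0
    return q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)

def dist2(p, q):
    """Squared Euclidean distance between two 3D points."""
    return sum((p[k] - q[k]) ** 2 for k in range(3))

def surface_variation(points, radius):
    """Surface variation per point for a fixed spherical neighbourhood (brute force).

    Returns None for points with fewer than three neighbours (including the point itself).
    """
    r2 = radius * radius
    result = []
    for p in points:
        nbrs = [q for q in points if dist2(p, q) <= r2]
        n = len(nbrs)
        if n < 3:
            result.append(None)
            continue
        mean = [sum(q[k] for q in nbrs) / n for k in range(3)]
        cov = [[sum((q[i] - mean[i]) * (q[j] - mean[j]) for q in nbrs) / n
                for j in range(3)] for i in range(3)]
        trace = cov[0][0] + cov[1][1] + cov[2][2]  # equals the sum of the eigenvalues
        if trace <= 0.0:
            result.append(None)
            continue
        result.append(max(0.0, smallest_eigenvalue_sym3(cov)) / trace)
    return result

def neighbourhood_coverage(points, radius, min_neighbours):
    """Share of points with at least min_neighbours other points within the radius."""
    r2 = radius * radius
    ok = 0
    for i, p in enumerate(points):
        count = sum(1 for j, q in enumerate(points) if j != i and dist2(p, q) <= r2)
        if count >= min_neighbours:
            ok += 1
    return ok / len(points)

# Synthetic tilted roof plane; keep only points below a hypothetical threshold.
roof = [(float(x), float(y), 0.5 * x) for x in range(5) for y in range(5)]
variation = surface_variation(roof, radius=1.6)
planar = [p for p, v in zip(roof, variation) if v is not None and v < 0.01]
print(len(planar), "of", len(roof), "points kept as planar")
```

A function such as `neighbourhood_coverage` could be evaluated for increasing radii until the returned share reaches 0.95 for 15 neighbours, which mirrors the criterion stated above. For real point clouds, a spatial index would replace the quadratic neighbour search.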

The second step of point cloud segmentation is the extraction of groups of points as connected components. The method of connected component analysis is well known in the field of image analysis, but is also often used in point cloud processing to group points with a similar spatial distribution [34]. In our workflow, the method yields good results for the extraction of the group of points corresponding to the building roof. The advantage of the approach is that no auxiliary data (e.g., building outlines or footprints from the topographic or cadastral database) are needed to extract the groups of points corresponding to the building, in contrast to many previously published studies [8,56]. A weakness of the method is that the operator must set proper values for its parameters in order to obtain accurate results. This means that the method is highly dependent on the dataset used in the workflow. For our study area, the extraction of connected components as groups of points for building roofs worked well, because we used a photogrammetric point cloud obtained from UAV imagery acquired only in nadir view. This resulted in points being present only on the building roof, and not on the facades. If the point cloud is created from nadir and oblique imagery, points will be present on both the roof and the facades. For further roof surface modelling, the point cloud corresponding to the complete building must then be split into several point clouds, one for the roof and others for the facades. For further research, it would be interesting to also determine the connected components in a photogrammetric point cloud obtained from oblique imagery, which would make it possible to extend the usability of our developed modelling workflow to diverse datasets.
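The grouping of points into connected components can be sketched as a region-growing search in which two points belong to the same component if they are linked by a chain of neighbours closer than a distance threshold. This is a simplified illustration, not the implementation used in our processing; the parameter names `max_gap` and `min_size` are hypothetical, and the quadratic neighbour search would be replaced by a spatial index (e.g., an octree) for real data.

```python
from collections import deque

def dist2(p, q):
    """Squared Euclidean distance between two 3D points."""
    return sum((p[k] - q[k]) ** 2 for k in range(3))

def connected_components(points, max_gap, min_size=1):
    """Group point indices into components linked by distances <= max_gap.

    Components with fewer than min_size points are discarded as noise.
    """
    gap2 = max_gap * max_gap
    unvisited = set(range(len(points)))
    components = []
    while unvisited:
        seed = min(unvisited)  # deterministic seed choice
        unvisited.remove(seed)
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            # Collect neighbours first, then update the set of unvisited points.
            near = [j for j in unvisited if dist2(points[i], points[j]) <= gap2]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        if len(members) >= min_size:
            components.append(sorted(members))
    return components

# Two synthetic roofs and one isolated noise point.
roof_a = [(x * 0.5, y * 0.5, 5.0) for x in range(4) for y in range(4)]
roof_b = [(10 + x * 0.5, y * 0.5, 7.0) for x in range(3) for y in range(3)]
noise = [(50.0, 50.0, 1.0)]
for component in connected_components(roof_a + roof_b + noise, max_gap=0.6, min_size=5):
    print("component with", len(component), "points")
```

Both parameters depend on the point cloud density and on the size of the smallest roof to be kept, which is the dataset dependence noted above.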

Our modelling workflow is highly dependent on the detection of planar roof patches, because only the planar patches detected in this step are the object of further roof surface modelling. The efficient RANSAC algorithm used for planar patch detection enables the detection of planes in the cloud, which was proven in several previous publications [29,47]. A similar approach to ours was used in [48], with the difference that our approach detects the planes only for the roof surface, because the workflow is developed to reconstruct a building model corresponding to LOD2 of the OGC CityGML standard, where detailed façade modelling is not expected. Since we use a data-driven modelling approach, the RANSAC parameters are tailored to the dataset used in the workflow. The essential parameter that a user needs to consider is the minimum number of points required to define a plane, which must be set so that all roof patches that properly define the roof surface are detected.
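The role of the minimum number of points per plane can be illustrated with a basic sequential RANSAC sketch. Note that this is plain RANSAC, not the efficient RANSAC variant used in our workflow, and that the function names, the distance tolerance, the number of iterations, and the fixed random seed are assumptions made for the example.

```python
import math
import random

def fit_plane(p, q, r):
    """Plane (unit normal n, offset d) through three points, or None if they are collinear."""
    u = [q[k] - p[k] for k in range(3)]
    v = [r[k] - p[k] for k in range(3)]
    n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    n = [c / norm for c in n]
    return n, -sum(n[k] * p[k] for k in range(3))

def ransac_plane(points, dist_tol, iterations, rng):
    """Best plane found and the indices of its inliers."""
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = fit_plane(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [i for i, p in enumerate(points)
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) <= dist_tol]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers

def detect_planes(points, dist_tol, min_points, iterations=200, seed=0):
    """Extract planes one by one until no plane with at least min_points inliers remains."""
    rng = random.Random(seed)
    remaining = list(points)
    planes = []
    while len(remaining) >= max(3, min_points):
        plane, inliers = ransac_plane(remaining, dist_tol, iterations, rng)
        if plane is None or len(inliers) < min_points:
            break
        chosen = set(inliers)
        planes.append((plane, [remaining[i] for i in inliers]))
        remaining = [p for i, p in enumerate(remaining) if i not in chosen]
    return planes

# Synthetic gable roof: a larger slope (z = x) and a smaller slope (z = 4 - x).
left = [(x * 0.5, float(y), x * 0.5) for x in range(5) for y in range(6)]
right = [(x, float(y), 4.0 - x) for x in (2.5, 3.0) for y in range(6)]
for (normal, offset), patch in detect_planes(left + right, dist_tol=0.01, min_points=10):
    print(len(patch), "points, normal", [round(c, 3) for c in normal])
```

If `min_points` is set higher than the number of points on the smaller slope, that roof patch is not detected, which is why this parameter has to be tuned to the point cloud density and the size of the smallest roof patch.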

The building outline generation in our workflow is important for the determination of the building position and orientation in space. In our case, we used the alpha shape algorithm, which determines a concave 2D polygon for each building or building part detected as one connected component in the point cloud. Further, we performed the generalisation of the extracted polygons using the Douglas-Peucker algorithm, followed by their regularisation. All operations for building outline generation were conducted within the spatial ETL, which is a novelty compared to other studies on building modelling. The use of the spatial ETL is beneficial because it allows each step in the procedure to be monitored, including the modification of processing parameters, such as the tolerance values for generalisation and regularisation. We aimed to reconstruct a building model from a UAV point cloud without any additional spatial data. If a building outline is available in existing databases, it could be integrated into our workflow. However, such an outline must be properly defined and of sufficient quality, as it affects the quality of the building model.
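The effect of the generalisation tolerance can be illustrated with a short sketch of the Douglas-Peucker algorithm applied to a closed outline ring. This is a generic implementation for illustration, not the transformer of the spatial ETL software; the alpha shape extraction and the regularisation step are not shown, and the coordinates and the tolerance of 0.1 m are invented for the example.

```python
import math

def perpendicular_distance(p, a, b):
    """Distance from point p to the line through a and b (to a itself if a == b)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    return abs(dx * (a[1] - p[1]) - dy * (a[0] - p[0])) / length

def douglas_peucker(line, tolerance):
    """Simplify a polyline; a closed ring (first vertex == last vertex) is also accepted."""
    if len(line) < 3:
        return list(line)
    index, max_dist = 0, 0.0
    for i in range(1, len(line) - 1):
        d = perpendicular_distance(line[i], line[0], line[-1])
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= tolerance:
        return [line[0], line[-1]]
    left = douglas_peucker(line[:index + 1], tolerance)
    right = douglas_peucker(line[index:], tolerance)
    return left[:-1] + right  # avoid duplicating the split vertex

# Slightly noisy rectangular outline, closed ring in metres.
outline = [(0, 0), (5, 0.05), (10, 0), (10.04, 3), (10, 6),
           (5, 5.96), (0, 6), (0.03, 3), (0, 0)]
print(douglas_peucker(outline, tolerance=0.1))
```

Vertices that deviate from the simplified edges by less than the tolerance are removed, so a larger tolerance yields a simpler outline at the cost of positional accuracy.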

Since our workflow for building outline generation is data-driven and, thus, highly dependent on the completeness of the input point cloud, it is sensitive to gaps or missing parts in the point cloud, which can arise due to occlusions in the scene. Due to the characteristics of a photogrammetric point cloud, the building outline extraction is possible only if the point cloud representing a building roof is complete. In our case, points were also missing from the cloud due to shadows cast by other structures in the scene. We encountered the same issue where vegetation near a building covered part of the roof. Since a dense image matching algorithm is not able to produce points below vegetation, the points that correspond to the building roof are missing wherever the roof is partly covered by a tree canopy. Consequently, the algorithm for outline extraction is not able to produce an accurate shape of the outline. Figure 16 shows an example of an inaccurate outline shape caused by the omission of points that represent the roof. To improve the outline generation in such cases, additional geometric constraints on the shape of the outline should be added. The completeness of outline generation could also be improved by integrating additional data sources, such as a LiDAR point cloud, which also contains points beneath the vegetation. For accurate results, a high LiDAR point cloud density must be ensured.

The quality analysis of generated 2D outlines for selected buildings showed that the procedures of generalisation and regularisation reduce the positional accuracy, compared to input point cloud data. However, in our case study, the proposed workflow still satisfies the requirements for positional accuracy proposed for the CityGML model in LOD2, or given in the Slovenian national standards for large-scale topography.

The final part of the workflow consists of 3D building modelling, which is, again, fully developed within the spatial ETL environment. The planar roof patches and 2D building outlines are used as input. The developed 3D building models comply with the OGC CityGML standard, LOD2. The average inner positional accuracy for the created building models is 0.023 m. The roof models are simple and consist of planar surfaces; additional roof details, such as dormers and chimneys, are not modelled. The walls are vertical and connected with the roof on the outer boundary of the roof surface without any eaves. The reconstructed models are generalised: firstly, generalisation and regularisation are performed within building outline generation; secondly, generalisation occurs in the 3D building reconstruction, where not all details of a roof are considered. This is more evident in the model with a complex roof type (e.g., cross gable roof, Figure 1c), where more regularisation was needed to obtain a well-ordered topology. In Figure 17, we can see that in some cases the roof surface of the model deviates from the point cloud, due to the regularisation and generalisation of the model (Figure 17a). In other cases, only part of a surface is not aligned with the point cloud, which happens due to the non-planarity and irregularity of the roof in the scene (Figure 17b).

The use of UAV data for 3D building reconstruction was already presented in [48], where the authors focused on a point cloud segmentation approach and a planar modelling method to create models of facades, roofs, and the ground based on local normal vectors. In our case, the building model is simplified. The resulting building models are presented with differentiated roof shapes, where the walls are vertical, modelled from the roof eaves to the ground level of the building, which approximates the maximum extent of the building in the real world. The focus of our approach has been on the complete workflow from point cloud processing to 3D building modelling, where the final result was a 3D building model in the form of a solid, with a defined and validated geometry and topology in accordance with the OGC CityGML standard. For each building model, geometric features obtain semantic attributes according to their role in the model, e.g., RoofSurface, WallSurface, GroundSurface (Figure 13). The novelty of our approach lies in particular in the introduction of the spatial ETL functionalities, which provide a user-friendly environment for processing a photogrammetric point cloud for 3D building reconstruction.
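As an illustration of how semantic attributes can follow from geometry, the sketch below extrudes vertical walls from a 2D outline and assigns RoofSurface, WallSurface, or GroundSurface to a polygon according to the direction of its normal. This is a simplified assumption for illustration and not necessarily the rule applied in the ETL workflow; it presumes outward-oriented polygons, a counter-clockwise outline, and a hypothetical tolerance `eps` for near-vertical faces.

```python
import math

def polygon_normal(ring):
    """Unit normal of a planar 3D polygon computed with Newell's method."""
    nx = ny = nz = 0.0
    for i in range(len(ring)):
        x1, y1, z1 = ring[i]
        x2, y2, z2 = ring[(i + 1) % len(ring)]
        nx += (y1 - y2) * (z1 + z2)
        ny += (z1 - z2) * (x1 + x2)
        nz += (x1 - x2) * (y1 + y2)
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / norm, ny / norm, nz / norm)

def classify_surface(ring, eps=0.01):
    """Semantic class of an outward-oriented boundary polygon (assumed rule)."""
    nz = polygon_normal(ring)[2]
    if abs(nz) < eps:
        return "WallSurface"    # vertical face
    if nz < 0.0:
        return "GroundSurface"  # outward normal points downwards
    return "RoofSurface"        # outward normal points upwards

def extrude_walls(outline, eave_z, ground_z):
    """Vertical wall quads for a closed, counter-clockwise 2D outline ring."""
    walls = []
    for (ax, ay), (bx, by) in zip(outline[:-1], outline[1:]):
        # Vertex order chosen so that the wall normal points away from the building.
        walls.append([(ax, ay, ground_z), (bx, by, ground_z),
                      (bx, by, eave_z), (ax, ay, eave_z)])
    return walls

outline = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.0, 0.0)]
roof_patch = [(0.0, 0.0, 3.0), (1.0, 0.0, 3.0), (1.0, 1.0, 4.0), (0.0, 1.0, 4.0)]
ground = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (1.0, 0.0, 0.0)]
for ring in extrude_walls(outline, eave_z=3.0, ground_z=0.0) + [roof_patch, ground]:
    print(classify_surface(ring))
```

In a complete model, the eave height would vary along the outline according to the roof planes, and the resulting surfaces would still need to be validated as a closed solid.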

The developed model (workflow) enables us to execute the whole processing chain on other datasets, where each step can be modified using different processing parameters, considering the desired positional accuracy. The developed workflow for data-driven 3D building modelling could be used for various point cloud data sources and different user applications where building models in 3D are needed. Here we have in mind especially large-scale topographic mapping and 3D city modelling for spatial planning and for various spatial analyses, such as visibility analysis, energy demand assessment, and solar potential estimation. The added value of the proposed workflow lies in the holistic and transparent data-driven modelling approach, from input point cloud data to the development of a 3D building model consistent with the OGC CityGML standard, LOD2.

6. Conclusions

In this paper, we have presented an approach to data-driven 3D building modelling in the spatial ETL environment, using a UAV photogrammetric point cloud as input data. The proposed approach covers the complete modelling workflow, from initial photogrammetric point cloud processing to the final polyhedral building model, developed in accordance with the OGC CityGML standard, LOD2.

While there are software solutions available for photogrammetric point cloud processing, e.g., segmentation and planar patch detection, the task has been particularly challenging for 3D building model reconstruction, where the current software support is inadequate and individual algorithms are available from different providers, within different software. Aiming to have complete control over 3D building modelling based on a segmented (photogrammetric) point cloud, we decided to test the functionalities of spatial ETL. Within the spatial ETL environment, we used the available algorithms for 3D building modelling, where the final result was a 3D building model in the form of a solid, with a defined geometry and topology. The advantage of using ETL lies in its transparent data processing and modelling, which enables the developer to control each step. The use of spatial ETL functionalities has shown many advantages in the modelling process: complete control can be exercised, and each step can be repeated and its results assessed. As already emphasised, the proposed workflow is not completely developed within the spatial ETL environment, as there was no need to pre-process UAV data, i.e., perform point cloud segmentation and planar roof patch extraction, with ETL. Additionally, it should be pointed out that we focused on the processes of 3D building modelling up to the generation of 3D solid geometry, topology validation, and the definition of basic semantics of graphical elements, as suggested by the OGC CityGML standard [5]. However, within a spatial ETL environment, further data processing can be performed, for example loading the data into a target database, such as a topographic database, which is an additional advantage of using a spatial ETL environment.

The developed data-driven modelling workflow was applied to a study area in Slovenia, where a UAV photogrammetric point cloud produced from nadir imagery was available. The approach was tested on four selected buildings with different roof types. The quality assessment of the results showed that it is possible to reconstruct a 3D building model with an inner positional accuracy below 0.15 m, which is in accordance with the requirements of large-scale topographic mapping in Slovenia. Therefore, the developed workflow is particularly interesting for updating national topographic datasets or for the large-scale topographic mapping of smaller areas for development projects, such as rural development projects, despite some disadvantages of using UAVs, e.g., legal restrictions on flights in an urban environment.

For further research, the developed 3D building modelling approach could be improved to allow the reconstruction of buildings with a more complex roof, containing dormers or similar constructions on the roof. The workflow was tested on only one UAV dataset from a relatively small scene. To exploit the full potential of the modelling workflow, it could be applied to other photogrammetric and laser scanning datasets, including those derived from oblique images, covering different types and shapes of buildings, and to a higher level of detail of building models, e.g., LOD3.