Generating Elevation Surface from a Single RGB Remotely Sensed Image Using Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Typical Convolutional Architecture

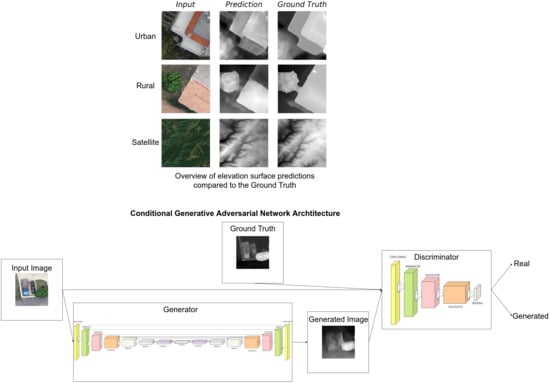

2.2. Generative Adversarial Networks

2.3. Conditional Generative Adversarial Networks

2.4. Architecture Analysis

2.5. Training

2.6. Datasets

2.6.1. Satellite Imagery

2.6.2. Multispectral Extension of Satellite Imagery

2.7. Urban and Rural Drone Imagery

2.8. Data Augmentation

2.9. GAN Training and Evaluation Pitfalls

3. Results

3.1. Main Method Evaluation

3.1.1. Satellite Imagery

3.1.2. Multispectral Extension

3.1.3. Urban and Rural Drone Imagery

3.2. Ablation and Regularization Studies

3.3. Inverse Problem and Generator

3.4. Baselines

3.4.1. CycleGAN

3.4.2. U-net

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Band Name | Description | Wavelength |
|---|---|---|
| B2 | Blue | nm (S2A)/ nm (S2B) |
| B3 | Green | nm (S2A)/ nm (S2B) |
| B4 | Red | nm (S2A)/665 nm (S2B) |
| B5 | Red Edge 1 | nm (S2A)/ nm (S2B) |
| B6 | Red Edge 2 | nm (S2A)/ nm (S2B) |
| B7 | Red Edge 3 | nm (S2A)/ nm (S2B) |
| B8 | NIR | nm (S2A)/833 nm (S2B) |
| B8A | Red Edge 4 | nm (S2A)/864 nm (S2B) |
| B11 | SWIR 1 | nm (S2A)/ nm (S2B) |

| Method | Absolute Error (meters) | Percentage Error |
|---|---|---|
| W/O | | % |
| Less parameters | | % |
| | | % |
| | | % |
| | | % |

| Architecture | Absolute Error (meters) | Percentage Error |
|---|---|---|
| pix2pix | | % |
| CycleGAN | | % |
| U-net | | % |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Panagiotou, E.; Chochlakis, G.; Grammatikopoulos, L.; Charou, E. Generating Elevation Surface from a Single RGB Remotely Sensed Image Using Deep Learning. Remote Sens. 2020, 12, 2002. https://doi.org/10.3390/rs12122002