4.1.1. Datasets

The UC Merced Land-use dataset is a classical land-use dataset that contains 21 scene classes and 2100 images. Each image is a high-resolution image of 256 × 256 pixels in the RGB color space with a spatial resolution of 0.3 m. All images were manually extracted from the USGS National Map Urban Area Imagery Collection.

The NWPU-RESISC45 dataset contains 45 scene classes, with 700 images of 256 × 256 pixels per class. Most of the images have middle to high spatial resolution, varying from 30 m to 0.2 m. All images are cropped from Google Earth. The dataset takes eight popular classes from the UC Merced Land-use dataset, together with widely used scene categories from other datasets and research.

AID is a large-scale aerial image dataset with 30 aerial scene types. The dataset is composed of 10,000 multi-resolution, multi-source images, each with a fixed size of 600 × 600 pixels. The number of images per class is imbalanced, and the dataset is challenging because of its large intra-class diversity.

These datasets have many overlapping classes (e.g., sparse residential, medium residential and dense residential) that can easily confuse non-experts. Labeling such a dataset manually is particularly challenging for computer vision researchers with little geographic knowledge, and crowd-sourced or automatic labeling is even more prone to errors. In practice, real-world applications built on the existing public datasets also require additional data. Only expert annotation can avoid label noise, and it is expensive.

Experiments are conducted on these three datasets. As shown in Table 1, each dataset is randomly split into a training subset, a validation subset and a test subset. Because the existing datasets lack noisy labels, simulated approaches are taken to evaluate NLD. Three different types of noise are injected separately into the training split of each of the three datasets.
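The random split described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `split_dataset`, the seed, and the fractions in the usage line are assumptions, since the actual split proportions are given in Table 1.

```python
import random


def split_dataset(items, train_frac, val_frac, seed=0):
    """Randomly split items into training, validation and test subsets.

    train_frac and val_frac are hypothetical parameters; whatever remains
    after the training and validation subsets becomes the test subset.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave room for a test subset")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Illustrative fractions only, not the proportions used in the paper.
train, val, test = split_dataset(range(100), train_frac=0.5, val_frac=0.25)
```

Noise is then injected only into the training subset, so the validation and test subsets keep their ground-truth labels.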

Symmetric noise: Symmetric noise is a type of uniform noise, generated by replacing the ground-truth label with a label drawn at random from the classes with equal probability. This type of noisy subset represents an almost zero-cost annotation method, in which many unlabeled images are labeled in a completely random way. Experiments on this noise can show that, with NLD, even this labeling method is feasible in some extremely low-cost scenarios.
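A minimal sketch of symmetric noise injection is given below. It assumes that each label is corrupted independently with probability `noise_rate` and that the replacement is drawn uniformly from all classes, including the original one; the function name and both of these choices are illustrative assumptions rather than details stated in the text.

```python
import random


def inject_symmetric_noise(labels, num_classes, noise_rate, seed=0):
    """Replace each label, with probability noise_rate, by a uniform random class.

    Assumption: the random class is drawn from all num_classes classes,
    so a corrupted label can coincide with the ground truth.
    """
    rng = random.Random(seed)
    noisy = []
    for y in labels:
        if rng.random() < noise_rate:
            noisy.append(rng.randrange(num_classes))  # uniform over classes
        else:
            noisy.append(y)
    return noisy


# Integer class indices for a 21-class dataset; the noise rate is illustrative.
noisy_labels = inject_symmetric_noise([0, 3, 7, 20], num_classes=21, noise_rate=0.4)
```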

Asymmetric noise: This type of noise is class-dependent and mimics real-world label noise between visually and semantically similar categories.

For UC Merced Land-use, to the best of our knowledge, no label-noise mapping has been proposed before. After observing the image features and the division of scene classes, we generated asymmetric noise by mapping the class pairs shown in Figure 4.

For NWPU-RESISC45, the classes are mapped following [12]. Figure 5 shows representative images in this dataset.

For AID, the classes are flipped following the mapping in [12]. Figure 6 shows examples from this dataset.
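Class-dependent flipping of this kind can be sketched as follows. The mapping in the example is purely hypothetical and only reuses the residential classes mentioned earlier as easily confused; it is not the mapping of Figure 4 or of [12]. The function name and the per-sample flipping probability `noise_rate` are likewise assumptions.

```python
import random


def inject_asymmetric_noise(labels, class_map, noise_rate, seed=0):
    """Flip labels to a fixed, visually similar class with probability noise_rate.

    class_map maps a source class to its confusable target class.
    Classes that do not appear in class_map are never corrupted.
    """
    rng = random.Random(seed)
    noisy = []
    for y in labels:
        if y in class_map and rng.random() < noise_rate:
            noisy.append(class_map[y])
        else:
            noisy.append(y)
    return noisy


# Hypothetical mapping for illustration only.
example_map = {
    "sparse residential": "medium residential",
    "medium residential": "dense residential",
}
noisy_labels = inject_asymmetric_noise(
    ["sparse residential", "forest", "medium residential"], example_map, noise_rate=1.0
)
```

Unlike symmetric noise, the corrupted label here is fully determined by the original class, which is what makes this noise class-dependent.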

Pseudo-labeling noise: Pseudo-labeling methods assign labels to unlabeled images automatically, which reduces annotation costs. However, the pseudo-labels are not completely correct. To ensure a fair comparison, following the idea of SSGA-E [6], the full training set is randomly divided into six parts, and one of them is randomly selected as a small clean subset. Two different classifiers are then trained on the small clean subset and used to generate pseudo-labels for the rest of the training set. In SSGA-E [6], the two networks are ResNet-50 and VGG-S [34]. However, VGG-S is rarely used in practice, which can cause problems in deployment. We therefore replace VGG-S with VGG-19 [25], which has lower accuracy but is more widely used. The unlabeled subsets with automatically generated labels can be viewed as the noisy subset. Because this method does not label all images, some uncertain images are removed, so the noisy subset is smaller than the original subset. The number of annotations obtained for the unlabeled images of each dataset is listed in Table 2.
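One way to picture the removal of uncertain images is an agreement filter between the two classifiers. The sketch below assumes that an image keeps its pseudo-label only when both classifiers predict the same class; this criterion and the function name are assumptions for illustration, and the exact rule follows SSGA-E [6].

```python
def agree_pseudo_labels(image_ids, preds_a, preds_b):
    """Keep a pseudo-label only where the two classifiers agree.

    preds_a and preds_b are the predicted classes of the two classifiers
    (e.g., a ResNet-50 and a VGG-19) for the same unlabeled images.
    Images on which the predictions differ are treated as uncertain and dropped.
    """
    kept = {}
    for image_id, a, b in zip(image_ids, preds_a, preds_b):
        if a == b:
            kept[image_id] = a
    return kept


# Toy predictions: the two classifiers disagree on "img_2", so it is removed.
pseudo = agree_pseudo_labels(
    ["img_1", "img_2", "img_3"],
    preds_a=["forest", "river", "beach"],
    preds_b=["forest", "lake", "beach"],
)
```

Under this assumption the resulting noisy subset is smaller than the unlabeled pool, and its labels can still be wrong whenever both classifiers make the same mistake.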

4.1.2. Baselines and Model Variants

To evaluate the performance improvement of NLD, our approach is compared with pseudo-labeling methods [6]. Several related baselines are also provided for symmetric and asymmetric noise. In addition, NLD is used as the base model for several variants to verify its effectiveness. The details of the baselines and variants are as follows.

Baseline-Clean: A backbone network of the student model is trained for remote sensing scene classification using only the clean subset. This can be regarded as the lower bound of NLD, since our method uses the noisy subset to improve on this baseline.

Baseline-Noise: A backbone network of the student model is trained solely on noisy labels from the training set. This baseline can be viewed as a measurement of the quality of noisy labels.

Baseline-Mix: A backbone network of the student model is trained using a mix of clean and noisy labels with standard CE loss. This baseline shows the damage caused by noisy subsets.

SCE Loss: Under the supervision of the SCE loss, a model is trained on the entire dataset with both clean and noisy labels. Parameters for SCE are configured as and . This serves as a baseline for noise-robust methods.

Noise model fine-tune with clean labels (Clean-FT): A common approach that uses the clean subset directly to fine-tune the whole network of Baseline-Noise. This method is prone to overfitting when there are few clean samples.

Noise model fine-tune with mix of clean and noisy labels (Mix-FT): To address the problem caused by limited clean labels, fine-tuning the Baseline-Noise with mixed data is also a common approach.

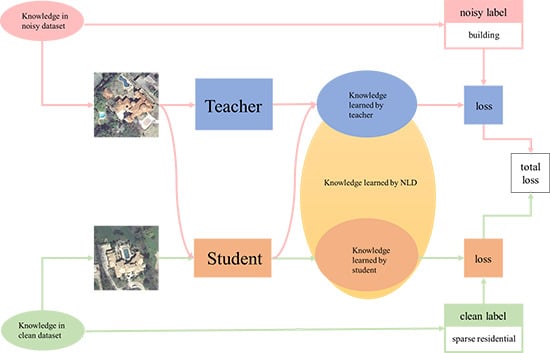

NLD with CE loss (NLD): NLD is trained on both the original clean datasets and datasets with different noise ratios. For a completely clean dataset, the same image is fed simultaneously to the teacher and the student, which is close to DML.
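For reference, the SCE (symmetric cross entropy) loss used by the SCE Loss baseline adds a reverse cross-entropy term to the standard CE loss. The per-sample sketch below is written in plain Python for readability rather than PyTorch. The weights `alpha` and `beta` in the usage line are placeholders, not the configuration used in the experiments, and `log_zero` is an assumed finite constant standing in for log 0 in the reverse term.

```python
import math


def sce_loss(probs, target, alpha, beta, log_zero=-4.0):
    """Symmetric cross entropy for one sample: alpha * CE + beta * reverse CE.

    probs  : predicted class probabilities (summing to 1)
    target : index of the (possibly noisy) label
    """
    eps = 1e-7
    # Standard cross entropy against the one-hot label.
    ce = -math.log(max(probs[target], eps))
    # Reverse cross entropy: -sum_k p_k * log q_k, where q is the one-hot label,
    # log 1 = 0 for the labeled class and log 0 is replaced by log_zero elsewhere.
    rce = -sum(p * (0.0 if k == target else log_zero) for k, p in enumerate(probs))
    return alpha * ce + beta * rce


# Placeholder weights for illustration only.
loss = sce_loss([0.7, 0.2, 0.1], target=0, alpha=1.0, beta=1.0)
```

The reverse term grows linearly with the probability mass placed on non-labeled classes, so it is bounded, which is the usual motivation for its robustness to wrong labels.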

4.1.3. Experimental Settings

All experiments are implemented with the PyTorch framework [35] and conducted on an NVIDIA GeForce Titan X GPU. The networks used in our experiments are shown in Table 3; all of them are pre-trained on ImageNet. Although the VGG architecture has a larger number of parameters and needs more floating-point operations (FLOPs), the ResNet architecture has stronger feature representation capabilities thanks to its residual modules. Therefore, the teacher networks in all experiments use the ResNet architecture. For the UC Merced Land-use dataset, it is worth mentioning that SSGA-E [6] uses VGG-S and VGG-16; in our experiments, however, networks with VGG architectures over-fit because this dataset is small. The networks actually used for this dataset are therefore modified VGG architectures with batch normalization (BN).

As preprocessing steps, random flip, random Gaussian blur and resizing of images to are used. For optimization, we use Adam with a weight decay of , a batch size of 32 and an initial learning rate of . The learning rate decays exponentially with a multiplicative factor of in each epoch. All networks mentioned in Section 4.1.2 are trained for 200 epochs. In addition, for NLD, each batch consists of half clean and half noisy images. In general, the weight factors are set to and . Additional experiments with different factors, losses and networks are detailed in Section 4.6.
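The two training details above, the per-epoch exponential decay of the learning rate and the half-clean, half-noisy batches used for NLD, can be sketched in plain Python as follows. All names and the numeric values in the usage lines are illustrative assumptions; in a PyTorch implementation the schedule corresponds to an exponential learning-rate scheduler applied once per epoch.

```python
import random


def exponential_lr(initial_lr, gamma, epoch):
    """Learning rate after `epoch` epochs of decay by the factor gamma."""
    return initial_lr * gamma ** epoch


def mixed_batches(clean, noisy, batch_size, seed=0):
    """Yield batches whose first half is clean and second half is noisy.

    Assumption: each epoch stops as soon as either subset cannot fill
    its half of a batch.
    """
    rng = random.Random(seed)
    half = batch_size // 2
    clean, noisy = list(clean), list(noisy)
    rng.shuffle(clean)
    rng.shuffle(noisy)
    n_batches = min(len(clean), len(noisy)) // half
    for i in range(n_batches):
        start, stop = i * half, (i + 1) * half
        yield clean[start:stop] + noisy[start:stop]


# Illustrative values only: 64 clean and 64 noisy sample indices, batch size 32.
batches = list(mixed_batches(range(64), range(100, 164), batch_size=32))
lr_after_two_epochs = exponential_lr(initial_lr=0.1, gamma=0.5, epoch=2)
```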