Figure 1.

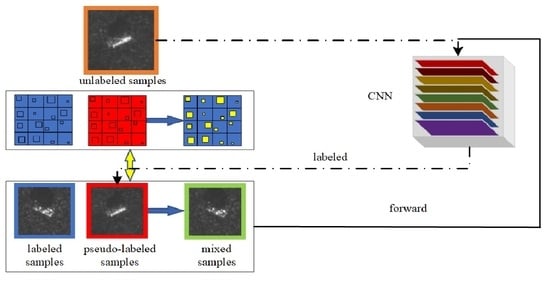

Framework of the self-consistent augmentation (SCA) method. Training comprises three phases: sample labeling, data processing, and model training, with sample labeling carried out as part of data processing. In the early stage of data processing, SCA augments both the labeled and the unlabeled samples, and the model assigns pseudo-labels to the unlabeled samples. In the later stage, the labeled and pseudo-labeled samples are combined, and the combined data set is mixed with a randomly shuffled copy of itself to obtain a new sample set. Finally, the new sample set is used to train the model.
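The later data-processing stage described in this caption can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `build_mixed_batch`, the `predict_proba` callable standing in for the model, the Beta-distributed mixing coefficient, and the mixup-style interpolation used as the mixing step are all hypothetical, and the augmentation step is omitted.

```python
import numpy as np

def build_mixed_batch(x_lab, y_lab, x_unl, predict_proba, rng, alpha=0.75):
    """Combine labeled and pseudo-labeled samples, then mix with a shuffled copy.

    predict_proba is a hypothetical stand-in for the current model; it maps a
    batch of images to a (batch, classes) array of class probabilities.
    """
    # Pseudo-label the unlabeled samples with the current model (soft labels).
    y_pseudo = predict_proba(x_unl)
    # Combine labeled and pseudo-labeled samples into one set.
    x_all = np.concatenate([x_lab, x_unl], axis=0)
    y_all = np.concatenate([y_lab, y_pseudo], axis=0)
    # Index order of the randomly shuffled copy of the combined set.
    perm = rng.permutation(len(x_all))
    # Mixing coefficient; drawing it from Beta(alpha, alpha) is an assumption.
    lam = rng.beta(alpha, alpha)
    lam = max(lam, 1.0 - lam)  # keep each mixed sample closer to its original
    # Mix the combined set with its shuffled copy (mixup-style placeholder).
    x_mix = lam * x_all + (1.0 - lam) * x_all[perm]
    y_mix = lam * y_all + (1.0 - lam) * y_all[perm]
    return x_mix, y_mix
```

The returned `x_mix` and `y_mix` would then be fed to an ordinary training step; any of the blending methods compared in the paper could replace the interpolation line.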

Figure 2.

Overview of the mixup, cutmix, and proposed multi-block mixed (MBM) blending methods. The left part of the figure illustrates how each of the three mixing methods operates. Mixup interpolates between the input images; cutmix introduces an additional mask matrix (omitted in the figure) and uses element-wise multiplication to replace part of the input image; MBM divides the image into N parts and, within each part, selects a small region and interpolates it with the corresponding region of another input image. The right part shows the input SAR images and the new samples generated by the three methods; the generated samples do not strictly correspond to the box positions shown on the left.
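The three blending operations named in this caption can be sketched for single-channel images as below. This is a hedged illustration only: the near-square grid used to split the image into N parts, the `frac` parameter controlling the size of the region chosen in each part, and the box-size rule in `cutmix` are assumptions, since the caption does not specify them. Label mixing is omitted.

```python
import math
import numpy as np

def mixup(a, b, lam):
    """Interpolate the whole image a with image b."""
    return lam * a + (1.0 - lam) * b

def cutmix(a, b, lam, rng):
    """Replace one rectangular box of a with b via a binary mask."""
    h, w = a.shape
    # Box covering roughly (1 - lam) of the image area (assumed rule).
    bh, bw = int(h * math.sqrt(1.0 - lam)), int(w * math.sqrt(1.0 - lam))
    top = rng.integers(0, h - bh + 1)
    left = rng.integers(0, w - bw + 1)
    mask = np.ones((h, w))
    mask[top:top + bh, left:left + bw] = 0.0
    # Element-wise multiplication keeps a outside the box and b inside it.
    return mask * a + (1.0 - mask) * b

def mbm(a, b, lam, n_blocks, rng, frac=0.5):
    """Interpolate one small region per image part with the same region of b."""
    h, w = a.shape
    # Assumed layout: near-square grid, e.g. N=4 -> 2x2, N=8 -> 2x4.
    rows = max(d for d in range(1, math.isqrt(n_blocks) + 1) if n_blocks % d == 0)
    cols = n_blocks // rows
    ys = np.linspace(0, h, rows + 1, dtype=int)
    xs = np.linspace(0, w, cols + 1, dtype=int)
    out = a.astype(float)  # astype returns a copy, so a is left unchanged
    for i in range(rows):
        for j in range(cols):
            ch, cw = ys[i + 1] - ys[i], xs[j + 1] - xs[j]
            bh, bw = max(1, int(ch * frac)), max(1, int(cw * frac))
            # Random region inside this part of the image.
            top = ys[i] + rng.integers(0, ch - bh + 1)
            left = xs[j] + rng.integers(0, cw - bw + 1)
            sl = (slice(top, top + bh), slice(left, left + bw))
            out[sl] = lam * a[sl] + (1.0 - lam) * b[sl]
    return out
```

Under these assumptions, mixup alters every pixel, cutmix swaps one contiguous box, and MBM blends several small regions spread across the image while leaving the rest of the image untouched.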

Figure 3.

Optical images and corresponding SAR images of the ten object classes in the moving and stationary target acquisition and recognition (MSTAR) database: (a) 2S1; (b) BMP2; (c) BRDM2; (d) BTR60; (e) BTR70; (f) D7; (g) T62; (h) T72; (i) ZIL131; (j) ZSU234.

Figure 4.

Confusion matrices of the proposed method and the corresponding supervised method with 10 labeled samples per category: (a) MBM; (b) supervised method.

Figure 5.

Precision comparison for each class between MBM and the corresponding supervised method. The Y-axis denotes the per-class classification accuracy improvement of MBM relative to the supervised method, and the X-axis denotes the class index.
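The per-class improvement plotted in such a figure can be derived from two confusion matrices, as in the sketch below. It assumes that rows index the true class and columns the predicted class, which the caption does not state; the function names and the toy 2-class counts are illustrative and are not taken from the paper.

```python
import numpy as np

def per_class_accuracy(cm):
    """Fraction of each true class classified correctly (rows = true class)."""
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=1)

def per_class_improvement(cm_a, cm_b):
    """Accuracy gain of method A over method B per class, in percentage points."""
    return 100.0 * (per_class_accuracy(cm_a) - per_class_accuracy(cm_b))

# Toy counts for illustration only.
cm_method_a = [[9, 1], [0, 10]]
cm_method_b = [[8, 2], [1, 9]]
gain = per_class_improvement(cm_method_a, cm_method_b)
```

A positive entry in `gain` means method A classifies that class more accurately than method B.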

Figure 6.

Confusion matrices of the proposed method and other mixed semi-supervised learning methods with 10 labeled samples per category: (a) MBM; (b) cutmix; (c) mixup; (d) unmixed. The average recognition accuracies of the four methods are 97.21%, 96.75%, 94.92%, and 93.75%, respectively.

Figure 7.

Precision comparisons for each class between MBM and the other mixed semi-supervised methods: (a) MBM and cutmix; (b) MBM and mixup; (c) MBM and unmixed. The Y-axis denotes the per-class classification accuracy improvement of MBM relative to the other method, and the X-axis denotes the class index.

Figure 8.

Recognition accuracy curves of the MBM, cutmix, mixup, and unmixed methods with 10 labeled samples per category.

Figure 9.

Recognition accuracies of the proposed semi-supervised learning method and the methods for comparison on the MSTAR data set.

Figure 10.

Boxplots of the recognition accuracy for different values of N with different numbers of labeled samples per category on the MSTAR data set: (a) 10; (b) 20; (c) 40; (d) 80.

Table 1.

Detailed information of the MSTAR data set used in our experiments.

| Tops | Class | Training Set: Depression | Training Set: No. of Images | Testing Set: Depression | Testing Set: No. of Images | All Data: No. of Images |
|---|---|---|---|---|---|---|
| Artillery | 2S1 | | 299 | | 274 | 573 |
| | ZSU234 | | 299 | | 274 | 573 |
| Tank | T62 | | 299 | | 273 | 572 |
| | T72 | | 232 | | 196 | 428 |
| Truck | BRDM2 | | 298 | | 274 | 572 |
| | BTR60 | | 256 | | 195 | 451 |
| | BTR70 | | 233 | | 196 | 429 |
| | BMP2 | | 233 | | 195 | 428 |
| | D7 | | 299 | | 274 | 573 |
| | ZIL131 | | 299 | | 274 | 573 |
| Total | - | - | 2747 | - | 2425 | 5172 |

Table 2.

Recognition accuracies of the proposed semi-supervised learning method and the corresponding supervised method under different numbers of labeled samples per category on the MSTAR data set. SSRA, semi-supervised recognition accuracy; SRA, supervised recognition accuracy.

| Method | 10 | 20 | 40 | 60 | 80 | Full |
|---|---|---|---|---|---|---|
| MBM SSRA | 97.21 ± 2.441 | 99.56 ± 0.155 | 99.58 ± 0.074 | 99.65 ± 0.062 | 99.67 ± 0.034 | - |
| SRA | 82.67 ± 3.236 | 93.23 ± 1.339 | 97.27 ± 0.467 | 98.516 ± 0.262 | 99.01 ± 0.121 | 99.53 ± 0.074 |

Table 3.

Recognition accuracies of the proposed semi-supervised learning method, cutmix semi-supervised learning method, mixup semi-supervised learning method, and unmixed semi-supervised learning method under different amounts of labeled samples per category on the MSTAR data set. The bold numbers represent the optimal value in each column.

| Method | 10 | 20 | 40 | 60 | 80 |
|---|---|---|---|---|---|
| MBM SSRA | **97.21 ± 2.441** | **99.56 ± 0.155** | 99.58 ± 0.074 | 99.65 ± 0.062 | 99.67 ± 0.034 |
| cutmix SSRA | 96.74 ± 2.011 | 99.28 ± 0.638 | **99.66 ± 0.074** | **99.71 ± 0.133** | **99.71 ± 0.065** |
| mixup SSRA | 94.92 ± 4.359 | 98.86 ± 0.447 | 99.14 ± 0.259 | 99.11 ± 0.255 | 99.40 ± 0.150 |
| unmixed SSRA | 93.75 ± 3.602 | 98.82 ± 0.313 | 98.99 ± 0.340 | 99.20 ± 0.147 | 99.36 ± 0.135 |

Table 4.

Recognition accuracies of the proposed semi-supervised learning method and other semi-supervised methods under different amounts of labeled samples per category on the MSTAR data set. The bold numbers represent the optimal value in each column. Hyphens indicate that accuracy is not present in the published article.

| Method | 10 | 20 | 40 | 55 | 80 |
|---|---|---|---|---|---|
| LNP | - | - | - | 92.04 | - |
| PSS-SVM | - | - | - | 95.01 | - |
| Triple-GAN | - | - | - | 95.70 | - |
| Improved-GAN | - | - | - | 87.52 | - |
| Gao et al. | - | - | - | 95.72 | - |
| PCA+SVM | - | 76.43 | 87.92 | - | 92.48 |
| AdaBoost | - | 75.68 | 87.45 | - | 91.95 |
| SRC | - | 79.61 | 88.07 | - | 93.16 |
| K-SVD | - | 78.52 | 87.14 | - | 93.57 |
| LC-KSVD | - | 78.83 | 87.39 | - | 93.23 |
| DGM | - | 81.11 | 88.14 | - | 92.85 |
| Gauss | - | 80.55 | 87.51 | - | 94.10 |
| DNN1 | - | 77.86 | 86.98 | - | 93.04 |
| DNN2 | - | 79.63 | 87.73 | - | 93.76 |
| CNN | - | 81.80 | 88.35 | - | 93.88 |
| GAN-CNN | - | 84.39 | 90.13 | - | 94.29 |
| MGAN-CNN | - | 85.23 | 90.82 | - | 94.91 |
| SCA | 94.92 ± 4.359 | 98.86 ± 1.242 | 99.14 ± 0.259 | 99.12 ± 0.345 | 99.40 ± 0.150 |
| MBM (our proposal) | **97.21 ± 2.441** | **99.56 ± 0.155** | **99.58 ± 0.074** | **99.63 ± 0.067** | **99.67 ± 0.034** |

Table 5.

Recognition accuracies of the proposed semi-supervised learning method for different N values under different amounts of labeled samples per category on the MSTAR data set. The bold numbers represent the optimal value in each column.

| Value of N | 10 | 20 | 40 | 60 |
|---|---|---|---|---|
| 1 | 96.17 ± 2.652 | 98.85 ± 0.711 | 99.29 ± 0.525 | 99.48 ± 0.046 |
| 2 | 96.84 ± 3.091 | 99.51 ± 0.107 | 99.55 ± 0.073 | 99.60 ± 0.094 |
| 4 | **97.21 ± 2.441** | **99.56 ± 0.155** | **99.58 ± 0.074** | **99.65 ± 0.067** |
| 8 | 95.51 ± 2.002 | 98.28 ± 1.828 | 99.56 ± 0.094 | 99.57 ± 0.102 |

Table 6.

Time analysis of the proposed method, cutmix, mixup, unmixed, and the supervised learning method.

| Method | Training Time Per Epoch | Testing Time Per Image |
|---|---|---|
| MBM | 17.28 s | 0.512 ms |
| cutmix | 17.57 s | 0.531 ms |
| mixup | 23.09 s | 0.511 ms |
| unmixed | 17.29 s | 0.522 ms |
| supervised method | 7.04 s | 0.518 ms |