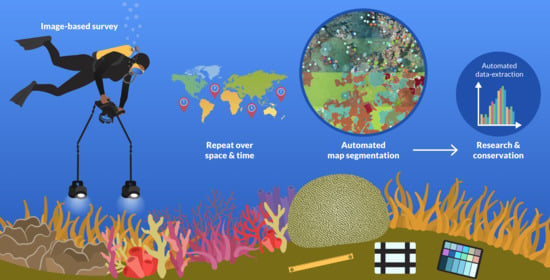

Repeatable Semantic Reef-Mapping through Photogrammetry and Label-Augmentation

Abstract

1. Introduction

- Extensive ecological validation of semantic segmentation through label-augmentation of sparse annotations.

- Validation of 3D grid standardisation with a consumer-grade spirit level.

- The Benthos data-set, which includes three segmented photomosaics from different oceanic environments.

2. Materials and Methods

2.1. Imaging System and Photogrammetric Equipment

2.2. Plot Setup and Acquisition Protocol

2.3. Study Sites and Data-Sets

Labeling and Classification

2.4. Label-Augmentation

Augmentation from Sparse Annotations

2.5. Orthorectification

2.5.1. 3D Grid Definition and Orthorectification

2.5.2. Repeated-Survey Simulation

2.6. Evaluation Metrics

2.7. Community-Metrics Comparisons

- Class-specific size-frequency distributions. We divided the object sizes of each class into nine bins, starting from to with a step size of . We used distance to assess the similarity of class size distributions between maps. Low values indicate high similarity between data sets, and zero indicates maximal similarity.

- Relative number of individuals per class. The number of objects in each class divided by the total number of objects in the map.

- Relative area by class. The size in per class divided by the total size of the map. The photomosaics are exported at 0.5 mm per pixel, and to convert to we use the following equation:
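The three community metrics above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: each mapped object is assumed to be a hypothetical `(class_label, n_pixels)` pair, the bin start and step passed to `nine_bin_edges` are placeholders because the original values were lost, and the Bhattacharyya distance is a stand-in for the distance named in the text, whose name did not survive extraction. The pixel conversion follows from the stated export resolution: at 0.5 mm per pixel, one pixel covers (0.5 mm)² = 0.25 mm².

```python
import numpy as np
from collections import Counter, defaultdict

# Ground sampling distance of the exported photomosaics, as stated in the text.
MM_PER_PIXEL = 0.5
# Area covered by one pixel: (0.5 mm)^2 = 0.25 mm^2.
PIXEL_AREA_MM2 = MM_PER_PIXEL ** 2


def pixels_to_mm2(n_pixels):
    """Convert a pixel count to an area in mm^2."""
    return n_pixels * PIXEL_AREA_MM2


def nine_bin_edges(start, step):
    """Return the 10 edges of nine equal-width bins (start and step are hypothetical)."""
    return start + step * np.arange(10)


def class_areas_mm2(objects, label):
    """Areas (mm^2) of all objects of one class; objects are (label, n_pixels) pairs."""
    return np.array([pixels_to_mm2(n) for lbl, n in objects if lbl == label], dtype=float)


def size_frequency(areas, edges):
    """Normalised size-frequency distribution over the given bin edges."""
    counts, _ = np.histogram(areas, bins=edges)
    total = counts.sum()
    return counts / total if total else counts.astype(float)


def bhattacharyya_distance(p, q):
    """Stand-in distance: 0 for identical normalised distributions, larger when less similar."""
    bc = float(np.sum(np.sqrt(p * q)))  # Bhattacharyya coefficient
    return -np.log(bc) if bc > 0 else float("inf")


def relative_abundance(objects):
    """Number of objects per class divided by the total number of objects."""
    counts = Counter(lbl for lbl, _ in objects)
    total = sum(counts.values())
    return {lbl: c / total for lbl, c in counts.items()}


def relative_area(objects, map_pixels):
    """Summed pixel area per class divided by the total map area in pixels."""
    area = defaultdict(int)
    for lbl, n in objects:
        area[lbl] += n
    return {lbl: a / map_pixels for lbl, a in area.items()}


if __name__ == "__main__":
    # Hypothetical objects from two repeated maps of the same plot.
    map_a = [("A", 400), ("A", 1200), ("B", 3000)]
    map_b = [("A", 480), ("A", 1160), ("B", 2900)]
    edges = nine_bin_edges(start=0.0, step=100.0)  # placeholder range in mm^2
    p = size_frequency(class_areas_mm2(map_a, "A"), edges)
    q = size_frequency(class_areas_mm2(map_b, "A"), edges)
    print("distance, class A:", bhattacharyya_distance(p, q))
    print("relative abundance:", relative_abundance(map_a))
    print("relative area:", relative_area(map_a, map_pixels=10_000))
```

Two design points are worth noting. Relative abundance and relative area are ratios, so they do not depend on the pixel-to-area conversion; only the size-frequency bins need absolute areas. Also, `np.histogram` silently drops values outside the outermost edges and treats the last bin as closed, so the chosen bin range determines which objects enter the comparison.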

3. Results

3.1. Label-Augmentation

3.2. Orthorectification

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Region | Name | Depth (m) | Size in m | Labeling | Classification | Map Replicates |
|---|---|---|---|---|---|---|
| Red Sea | RS20 | 20 | | Coarse | Genus | 3 |
| Red Sea | RS | 24–28 | | Full | Terrain | 2 |
| Mediterranean | MD | 20 | | Full | Terrain | 2 |
| Caribbean | CR | 20 | | Full | Terrain | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yuval, M.; Alonso, I.; Eyal, G.; Tchernov, D.; Loya, Y.; Murillo, A.C.; Treibitz, T. Repeatable Semantic Reef-Mapping through Photogrammetry and Label-Augmentation. Remote Sens. 2021, 13, 659. https://doi.org/10.3390/rs13040659
