1. Introduction

Object detection in remote sensing images (RSIs) [1] has long been a research hotspot in remote sensing. With the rapid development of remote sensing technology [2,3,4], the quality of RSIs has steadily improved, and such images have become easier for researchers to obtain. Remote sensing object detection plays an increasingly important role in intelligent monitoring, urban planning, natural disaster assessment and other fields. Over the last ten years, many excellent algorithms have been proposed for this task, comprising mainly traditional methods and deep learning-based methods. These studies have greatly advanced remote sensing object detection and brought considerable economic value to many application fields.

According to [1], the traditional methods of object detection in RSIs can be divided into four main categories: template matching-based methods, knowledge-based methods, object-based image analysis (OBIA)-based methods and machine learning-based methods. Template matching-based [5], knowledge-based [6] and OBIA-based [7] methods used to be popular, but they have received less attention since machine learning became mainstream. Existing machine learning-based detection algorithms for RSIs generally treat detection as a classification task with two core components: a feature extractor and a classifier. Almost all of the well-known artificial features and classifiers have been introduced into RSI detection. For example, the histogram of oriented gradients (HOG) [8,9], the scale-invariant feature transform (SIFT) [10] and the steering kernel [11] have been adopted by many scholars; other features, such as the Gabor feature [12], the gray-level co-occurrence matrix (GLCM) [13] and the bag of visual words (BoW) [13,14], have also received considerable attention. As for classifiers, the support vector machine (SVM) and its variants are the most commonly used [9,11,15,16]. Other well-known classifiers have also been introduced into RSI object detection, such as the conditional random field (CRF) [17,18,19], k-nearest neighbor (KNN) [20], expectation maximization (EM) [21], AdaBoost [22] and random forest [23]. Although machine learning has greatly advanced object detection in RSIs, these algorithms usually handle only a few categories and cannot cope with complex scenes because of the limitations of artificial features.

Recently, deep learning technology has attracted increasing attention. Since the publication of AlexNet [24], breakthroughs have been made in many computer vision tasks because deep features have stronger representation ability than artificial features. Owing to the excellent performance of deep learning in object detection for natural scene images (NSIs) [25,26,27], deep learning was introduced to remote sensing object detection in recent years and has gradually become the mainstream [28,29]. On the one hand, in some machine learning-based methods, deep features extracted by a deep CNN are used to describe the detected candidates for further classification. Some algorithms directly adopt existing CNN architectures to extract deep features of remote sensing objects. For example, in [30] a pre-trained AlexNet model is used to extract deep features of the area surrounding an oil tank, with the output of the first linear layer of AlexNet taken as the extracted feature. In [31], a fully convolutional network was combined with a Markov random field to detect airplanes. More typically, the algorithm in [32] was proposed for multi-class remote sensing object detection; it comprises four steps, namely candidate region generation, feature extraction, classification and bounding box fine-tuning, with the deep CNN used in the second step. Other scholars took the special characteristics of RSIs into account. For example, a new rotation-invariant constraint built on existing CNN architectures was proposed in [33] to handle the variety of object orientations in RSIs. On the other hand, mature deep object detection frameworks developed for NSIs were also introduced into remote sensing object detection, including both two-stage and one-stage frameworks. The R-CNN series [25], which has an explicit region proposal generation step, is representative of two-stage algorithms. Many remote sensing object detection algorithms are based on R-CNN [34,35,36,37]. These algorithms address the orientation and scale variations of remote sensing objects, with improvements that mainly focus on the region proposal network (RPN). As for one-stage frameworks, the best-known algorithms are the YOLO series [26] and the SSD series [27]. Many improved versions of these two frameworks target remote sensing objects, such as YOLO-based methods [38,39] and SSD-based methods [40,41,42].

State-of-the-art (SOTA) remote sensing object detection algorithms are almost all based on mature deep object detection frameworks, either one-stage or two-stage. As a rule of thumb, the two-stage framework is more time consuming than the one-stage framework in both training and inference. Because remote sensing image processing usually involves massive amounts of data, this paper focuses mainly on the one-stage framework.

Although deep learning has brought great progress in RSI object detection, many challenges remain because of the characteristics of RSIs, and many studies have tried to address them [38,39,40,41,42]. One of the main challenges is the missed detection and inaccurate localization of small objects. Remote sensing images from the same source have a fixed resolution, so objects in RSIs keep the same relative sizes as in reality. Therefore, the size of targets in RSIs varies greatly, and the pixel count of a small object may be less than five percent of that of a large object. To handle this problem, existing algorithms mainly combine hierarchical feature maps, as in [38]; however, the effect is limited.
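To make the scale gap concrete, the following sketch converts real-world object footprints into pixel counts at a fixed ground sample distance. The resolution and the object dimensions are hypothetical values chosen only for illustration; they are not taken from any dataset used in this paper.

```python
def pixel_footprint(length_m, width_m, gsd_m):
    """Approximate pixel count of an object at a fixed ground sample distance.

    gsd_m is the ground distance covered by one pixel side, in metres.
    """
    if gsd_m <= 0:
        raise ValueError("gsd_m must be positive")
    return (length_m / gsd_m) * (width_m / gsd_m)


# Hypothetical values: 0.5 m per pixel, a 4.5 m x 2 m vehicle
# and a 100 m x 60 m sports field imaged by the same sensor.
GSD_M = 0.5
vehicle_px = pixel_footprint(4.5, 2.0, GSD_M)
field_px = pixel_footprint(100.0, 60.0, GSD_M)
ratio = vehicle_px / field_px

print(f"vehicle: {vehicle_px:.0f} px, field: {field_px:.0f} px, ratio: {ratio:.2%}")
```

With these assumed numbers the vehicle occupies 36 pixels against 24,000 for the field, far below the five percent mentioned above.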

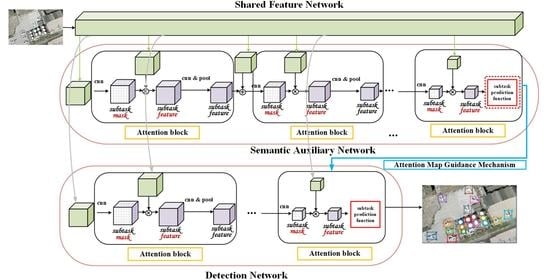

To improve the detection accuracy for small objects, we propose a novel model based on multi-task learning and an attention mechanism, named the subtask attention network (StAN). First, we treat the detection task as a subtask and build a semantic auxiliary subtask; both are performed in two attention branches based on the multi-task attention network (MTAN) [43]. Second, the two subtasks share the deep features from a shared feature branch, but the detection branch predicts directly on low-level features, because small objects vanish spatially on high-level feature maps as the map size decreases, which reduces their detection accuracy. Third, the high-level attention map of the semantic auxiliary branch is used to guide the detection branch in producing the final result; we call this the attention map guidance mechanism. The semantic auxiliary branch is trained with a classification loss, while the detection branch is trained in the same way as YOLO v3 [44]. Fourth, the multi-dimensional sampling module (MdS), global multi-view channel weights (GMulW) and target-guided pixel attention (TPA) are proposed as gain modules to further improve detection accuracy in complex scenes, and are integrated into StAN. In the proposed StAN, we handle the detection task on shallow layers of the neural network to prevent small objects from disappearing on the feature maps. In addition, another subtask branch, which uses high-level semantic features, preserves the overall detection accuracy, since deep semantic features are well known to benefit object classification. Moreover, the neural structures commonly used for classification tasks are not well suited to remote sensing objects. Thus, we design three gain modules based on successful examples from the literature and integrate them into the shared feature branch for further improvement.
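The two ideas in this paragraph can be illustrated with a small sketch. It is not the StAN implementation: the strides, the sigmoid gating, the nearest-neighbour upsampling and all function names (`cells_covered`, `upsample_nearest`, `guide`) are assumptions made for illustration. The first function shows how little of a coarse feature map a small object occupies; the second shows one plausible way a high-level attention map could reweight low-level features.

```python
import math


def cells_covered(object_side_px, stride):
    """Side length of an object, measured in feature-map cells, at a given stride."""
    return object_side_px / stride


def upsample_nearest(grid, factor):
    """Nearest-neighbour upsampling of a 2-D list by an integer factor."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def guide(low_level, high_level_logits, factor):
    """Reweight low-level features with a sigmoid attention map (assumed form)."""
    attention = [[1.0 / (1.0 + math.exp(-v)) for v in row]
                 for row in high_level_logits]
    attention = upsample_nearest(attention, factor)
    return [[f * a for f, a in zip(f_row, a_row)]
            for f_row, a_row in zip(low_level, attention)]


# Assumed strides, in the style of a YOLO v3-like detector's output scales.
for stride in (8, 16, 32):
    print(f"stride {stride}: a 12 px object spans {cells_covered(12, stride)} cells")

# Toy 4x4 low-level map and 2x2 high-level logits (hypothetical values):
# only the top-left quadrant is marked as object-like by the semantic branch.
low_level = [[1.0] * 4 for _ in range(4)]
high_level_logits = [[4.0, -4.0], [-4.0, -4.0]]
guided = guide(low_level, high_level_logits, factor=2)
```

In this toy case a 12-pixel object spans less than one cell at strides 16 and 32, while the guided low-level map keeps full spatial resolution and is suppressed everywhere except the quadrant the high-level map marks as relevant.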

The main contributions of this paper can be summarized as follows:

- (1) In contrast to existing algorithms, which combine hierarchical feature maps, we perform object detection directly on shallow features to address the low detection precision for small objects, and propose the StAN model. In addition, we use high-level semantic features in a subtask branch to aid object detection through an attention map guidance mechanism.

- (2) To further improve the overall detection accuracy in complex remote sensing scenes, we propose the MdS, GMulW and TPA modules and integrate them into StAN.

- (3) Through extensive comparative experiments, we show that the proposed algorithm reaches SOTA-level performance, especially for small objects. The effect of the gain modules is also demonstrated by ablation experiments.

The rest of this paper is organized as follows:

Section 2 describes the details of the proposed StAN model and the three gain modules.

Section 3 introduces the datasets and experiment settings.

Section 4 presents the experimental results on the NWPU VHR-10 and DOTA datasets, which demonstrate the performance of the proposed algorithm and the effect of the gain modules.

Section 5 concludes the paper.

5. Conclusions

In this paper, the StAN model, built on the multi-task framework MTAN, is proposed to reduce missed detections of small objects in remote sensing object detection. StAN contains one shared feature branch and two subtask attention branches, one for a semantic auxiliary subtask and one for a detection subtask. In contrast to the general strategy of existing algorithms such as SAPNet and ICN, which handle drastic scale variation by multi-scale feature fusion, the detection subtask uses only low-level features for the sake of small objects. The semantic auxiliary branch, which benefits from high-level semantic features, preserves the identification ability through the attention map guidance mechanism. Furthermore, three gain modules, namely MdS, GMulW and TPA, are proposed for further improvement. Integrating these modules into StAN yields the model named StAN-Enh. Experimental results on two common datasets demonstrate that the proposed StAN and StAN-Enh achieve SOTA-level performance. Although the mAP of our model is slightly lower than that of SAPNet and ICN, its small object detection ability is significantly improved. In addition, the average IoU results indicate that handling the detection task on a shallow layer of a deep network is beneficial to accurate localization.
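For reference, the average IoU mentioned above can be computed along the following lines. This is a minimal sketch for axis-aligned boxes that assumes predictions have already been matched to ground-truth boxes; the matching protocol and the box format `(x_min, y_min, x_max, y_max)` are assumptions, not details taken from the experiments.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def average_iou(pairs):
    """Mean IoU over already-matched (prediction, ground truth) box pairs."""
    if not pairs:
        return 0.0
    return sum(iou(pred, gt) for pred, gt in pairs) / len(pairs)


# Hypothetical matched pairs: one perfect hit and one box shifted by half its width.
pairs = [
    ((0, 0, 10, 10), (0, 0, 10, 10)),
    ((0, 0, 10, 10), (5, 0, 15, 10)),
]
mean_iou = average_iou(pairs)
print(f"average IoU: {mean_iou:.3f}")
```

A higher average IoU over matched detections indicates tighter localization, independently of whether the class label is correct.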

Although the proposed algorithm performs well on small objects, it has some limitations on large objects. A possible reason is that we adopt the same anchor setting as YOLO v3, which cannot cope with such drastic scale changes. In future work, we will try to combine the proposed algorithm with a two-stage detection framework, which uses a region proposal network (RPN) rather than anchors to generate candidate boxes. The challenge lies in controlling the increase in time consumption. Moreover, feature pyramid-based multi-scale feature fusion, which both SAPNet and ICN demonstrated to be effective, will be considered in the RPN design to improve performance on multi-scale objects. Finally, although two-stage frameworks are generally more time consuming than one-stage frameworks, our one-stage model has worse time efficiency than the two-stage deformable R-FCN, which uses a deeper ResNet as its backbone. Optimizing the efficiency of the gain modules and the subtask branch is therefore one of our future goals.