SAR Oil Spill Detection System through Random Forest Classifiers

Abstract

1. Introduction

2. Materials and Methods

2.1. Image Pre-Processing

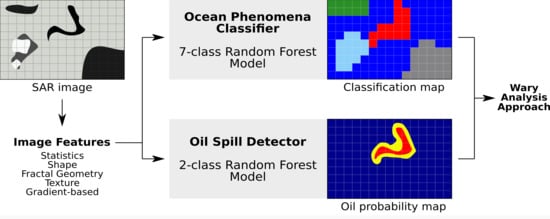

2.2. Image Classification Steps

2.3. Image Segmentation

2.4. Feature Extraction and Selection

2.5. Decision Tree Classifiers

2.6. Random Forest Classifiers
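Only the headings of the decision tree and random forest subsections appear here. To illustrate the general technique, the NumPy-only sketch below follows Breiman's random forest recipe. Each CART-style tree is grown with Gini impurity on a bootstrap sample, and each split considers a random subset of features. The trees are then combined by majority vote. This is not the authors' implementation. The function names and hyperparameters (25 trees, maximum depth 5, √d candidate features per split) are hypothetical. In practice a library class such as scikit-learn's `RandomForestClassifier` would normally be used instead.

```python
import numpy as np


def gini(y):
    """Gini impurity of a non-empty integer label vector."""
    p = np.bincount(y) / y.size
    return 1.0 - np.sum(p ** 2)


def best_split(X, y, features):
    """Return the (feature, threshold) pair minimising weighted child impurity, or (None, None)."""
    best_f, best_t, best_score = None, None, np.inf
    for f in features:
        # Skip the largest distinct value so both children are non-empty
        for t in np.unique(X[:, f])[:-1]:
            left = X[:, f] <= t
            n_left = left.sum()
            score = (n_left * gini(y[left]) + (y.size - n_left) * gini(y[~left])) / y.size
            if score < best_score:
                best_f, best_t, best_score = f, t, score
    return best_f, best_t


def grow_tree(X, y, depth, max_depth, n_features, rng):
    """A node is either a class label (leaf) or a tuple (feature, threshold, left, right)."""
    majority = int(np.bincount(y).argmax())
    if depth == max_depth or np.unique(y).size == 1:
        return majority
    # Random subset of candidate features at every split
    features = rng.choice(X.shape[1], size=n_features, replace=False)
    f, t = best_split(X, y, features)
    if f is None:  # no valid split among the sampled features
        return majority
    left = X[:, f] <= t
    return (f, t,
            grow_tree(X[left], y[left], depth + 1, max_depth, n_features, rng),
            grow_tree(X[~left], y[~left], depth + 1, max_depth, n_features, rng))


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def fit_forest(X, y, n_trees=25, max_depth=5, seed=0):
    """Hypothetical helper: grow n_trees trees, each on its own bootstrap sample."""
    rng = np.random.default_rng(seed)
    n_features = max(1, int(np.sqrt(X.shape[1])))
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, len(y), len(y))  # sampling with replacement
        trees.append(grow_tree(X[idx], y[idx], 0, max_depth, n_features, rng))
    return trees


def predict_forest(trees, X):
    votes = np.array([[predict_tree(tree, x) for x in X] for tree in trees])
    # Majority vote across trees, one column per sample
    return np.array([np.bincount(col).argmax() for col in votes.T])


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = np.vstack([rng.normal(0, 1, (50, 3)), rng.normal(6, 1, (50, 3))])  # synthetic data
    y = np.array([0] * 50 + [1] * 50)
    print((predict_forest(fit_forest(X, y), X) == y).mean())
```

Sampling features per split, rather than once per tree, decorrelates the trees. That is what distinguishes a random forest from plain bagging.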

2.7. Metrics and Cross-Validation
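This subsection also carries only its heading here. The hedged sketch below shows how shuffled k-fold cross-validation and confusion-matrix metrics can be computed with NumPy alone. Several details are assumptions: binary labels (1 = oil spill, 0 = other dark spot), k = 5, and accuracy, precision, recall and F1 as the metrics. Which metrics the authors reported is not stated in this excerpt. `fit_predict` is a hypothetical callable that trains any classifier, for example a random forest, on the training fold and returns predictions for the test fold.

```python
import numpy as np


def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 with class 1 as the positive class.

    Undefined ratios (zero denominators) are reported as 0.0, similar to
    scikit-learn's zero_division=0 setting.
    """
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    tn = np.sum((y_true == 0) & (y_pred == 0))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / y_true.size, "precision": precision,
            "recall": recall, "f1": f1}


def kfold_scores(fit_predict, X, y, k=5, seed=0):
    """Average each metric over the k test folds (hypothetical helper)."""
    scores = [binary_metrics(y[te], fit_predict(X[tr], y[tr], X[te]))
              for tr, te in kfold_indices(len(y), k, seed)]
    return {name: float(np.mean([s[name] for s in scores])) for name in scores[0]}


if __name__ == "__main__":
    y = np.array([0, 1] * 10)
    X = y.reshape(-1, 1).astype(float)  # trivially separable toy data
    print(kfold_scores(lambda Xtr, ytr, Xte: (Xte[:, 0] > 0.5).astype(int), X, y))
```

Each sample appears in exactly one test fold, so every observation contributes once to the held-out estimate.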

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Group | Feature | Acronym | Calculation |
|---|---|---|---|
| Statistics | Image mean | mean | Mean image value |
| | Foreground mean | fgmean | Mean dark spot value |
| | Background mean | bgmean | Mean background value |
| | Image standard deviation | std | Standard deviation of image values |
| | Foreground standard deviation | fgstd | Standard deviation of dark spot values |
| | Background standard deviation | bgstd | Standard deviation of background values |
| | Image skewness | skew | Skewness of image values |
| | Foreground skewness | fgskew | Skewness of foreground values |
| | Background skewness | bgskew | Skewness of background values |
| | Image kurtosis | kurt | Kurtosis of image values |
| | Foreground kurtosis | fgkurt | Kurtosis of dark spot values |
| | Background kurtosis | bgkurt | Kurtosis of background values |
| | Foreground-to-background mean ratio | fgobgmean | Ratio between foreground and background means |
| | Foreground-to-background standard deviation ratio | fgobgstd | Ratio between foreground and background standard deviations |
| | Foreground-to-background skewness ratio | fgobgskew | Ratio between foreground and background skewnesses |
| | Foreground-to-background kurtosis ratio | fgobgkurt | Ratio between foreground and background kurtoses |
| | Image Shannon entropy | entropy | Shannon entropy calculated over the image |
| | Segmentation mask’s Shannon entropy | segentropy | Shannon entropy of the generated segmentation mask |
| Shape | Foreground area | fgarea | Dark spot area |
| | Foreground perimeter | fgper | Dark spot perimeter |
| | Foreground perimeter-to-area ratio | fgperoarea | Dark spot perimeter-to-area ratio |
| | Foreground complexity | complex | P/√(4πA), where P and A are the foreground perimeter and area, respectively |
| | Foreground spreading | spread | λ2/(λ1 + λ2), where λ1 > λ2 are the two eigenvalues of the covariance matrix of the foreground coordinates |
| Fractal geometry | Power spectral density function-based fractal dimension | psdfd | Fractal dimension calculated from the energy decay of the image’s frequency components |
| | Segmentation mask’s box-counting-based fractal dimension | bcfd | Fractal dimension of the segmentation mask calculated by box counting |
| | Semivariogram-based fractal dimension | svfd | Fractal dimension calculated from the image semivariogram |
| | Box-counting-based lacunarity | bclac | Image lacunarity calculated by box counting |
| | Segmentation mask’s box-counting-based lacunarity | segbclac | Segmentation mask lacunarity calculated by box counting |
| Texture | Image correlation | corr | Image correlation from the grey level co-occurrence matrix (GLCM) |
| | Segmentation mask correlation | segcorr | Segmentation mask correlation from GLCM |
| | Image homogeneity | homo | Image homogeneity from GLCM |
| | Segmentation mask homogeneity | seghomo | Segmentation mask homogeneity from GLCM |
| | Image dissimilarity | diss | Image dissimilarity from GLCM |
| | Segmentation mask dissimilarity | segdiss | Segmentation mask dissimilarity from GLCM |
| | Image energy | ener | Image energy from GLCM |
| | Segmentation mask energy | segener | Segmentation mask energy from GLCM |
| | Image contrast | cont | Image contrast from GLCM |
| | Segmentation mask contrast | segcont | Segmentation mask contrast from GLCM |
| Gradient | Maximum image gradient | gradmax | Maximum value of the image gradient |
| | Mean image gradient | gradmean | Mean value of the image gradient |
| | Median image gradient | gradmedian | Median value of the image gradient |
| | Gradient mean-to-median ratio | gradmeanomedian | Ratio between mean and median gradient values |
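Several rows of the feature table above are defined directly from the dark spot (foreground) mask. The NumPy-only sketch below computes a subset of them: fgmean, bgmean, fgobgmean, fgarea, fgper, complex, spread and bcfd. It is an illustration under stated assumptions, not the authors' code. The image is taken to be a 2-D float array and the mask a boolean array of the same shape (True = dark spot). Perimeter is approximated by counting pixel edges between foreground and background, which overestimates diagonal boundaries. Box counting uses power-of-two box sizes on the largest square power-of-two crop of the mask, and the crop must contain foreground pixels. The function names are hypothetical.

```python
import numpy as np


def pixel_perimeter(mask):
    """Perimeter as the number of pixel edges separating foreground from background.

    Uses 4-neighbour adjacency; pixels on the image border count as touching
    background. Diagonal boundaries are overestimated relative to Euclidean length.
    """
    m = np.pad(mask.astype(np.int8), 1)
    return int(np.abs(np.diff(m, axis=0)).sum() + np.abs(np.diff(m, axis=1)).sum())


def box_counting_dimension(mask):
    """Slope of log N(s) versus log(1/s) for box sizes s = n, n/2, ..., 1."""
    n = 2 ** int(np.floor(np.log2(min(mask.shape))))
    m = mask[:n, :n]  # crop to a square power-of-two window (assumption)
    sizes, counts = [], []
    s = n
    while s >= 1:
        # Number of s-by-s boxes containing at least one foreground pixel
        boxes = m.reshape(n // s, s, n // s, s).any(axis=(1, 3))
        sizes.append(s)
        counts.append(boxes.sum())
        s //= 2
    slope, _ = np.polyfit(np.log(1.0 / np.array(sizes)), np.log(counts), 1)
    return slope


def mask_features(image, mask):
    """Hypothetical helper returning a few mask-based features from the table."""
    mask = mask.astype(bool)
    fg, bg = image[mask], image[~mask]
    area = int(mask.sum())
    perimeter = pixel_perimeter(mask)
    coords = np.argwhere(mask).astype(float)  # (row, col) of foreground pixels
    # eigvalsh returns ascending eigenvalues; reverse so lam[0] >= lam[1]
    lam = np.linalg.eigvalsh(np.cov(coords, rowvar=False))[::-1]
    return {
        "fgmean": fg.mean(),
        "bgmean": bg.mean(),
        "fgobgmean": fg.mean() / bg.mean(),
        "fgarea": area,
        "fgper": perimeter,
        "complex": perimeter / np.sqrt(4 * np.pi * area),
        "spread": lam[1] / (lam[0] + lam[1]),
        "bcfd": box_counting_dimension(mask),
    }


if __name__ == "__main__":
    img = np.ones((32, 32))
    mask = np.zeros((32, 32), dtype=bool)
    mask[5:15, 5:15] = True
    img[mask] = 0.2  # synthetic dark spot
    print(mask_features(img, mask))
```

With this normalisation a perfect disc would have complexity 1. A square, measured with pixel edges, gives 2/√π ≈ 1.13. Spreading is 0.5 for an isotropic blob and approaches 0 for an elongated slick.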

| Correlated Group | Maintained Features | Removed Features |
|---|---|---|
| 1 | complex | segcorr, fgperoarea, fgper, seghomo, segcont, segdiss, bcfd |
| 2 | entropy | ener, homo, gradmax, cont, diss |
| 3 | None | fgkurt, bgkurt |
| 4 | gradmean | gradmedian |
| 5 | psdfd | corr, std, svfd, gradmeanomedian |
| 6 | segentropy | fgarea |
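The table above lists groups of mutually correlated features, with at most one feature kept per group. This excerpt states neither the grouping criterion nor how the kept feature was chosen; group 3 keeps none, so expert judgement was evidently involved. The sketch below therefore assumes a simpler rule. It greedily groups columns whose absolute Pearson correlation with a group's first member exceeds a hypothetical threshold of 0.9, then keeps that first member. The function names are hypothetical, and the example data are synthetic columns named after table entries for readability.

```python
import numpy as np


def correlated_groups(X, names, threshold=0.9):
    """Greedily group columns of X whose |Pearson r| with a seed column exceeds threshold."""
    r = np.abs(np.corrcoef(X, rowvar=False))
    unassigned = list(range(len(names)))
    groups = []
    while unassigned:
        seed = unassigned.pop(0)
        members = [j for j in unassigned if r[seed, j] > threshold]
        unassigned = [j for j in unassigned if j not in members]
        groups.append([names[seed]] + [names[j] for j in members])
    return groups


def prune_correlated(X, names, threshold=0.9):
    """Keep the first feature of each group; report which features were removed."""
    groups = correlated_groups(X, names, threshold)
    keep = [g[0] for g in groups]
    removed = {g[0]: g[1:] for g in groups if len(g) > 1}
    return X[:, [names.index(k) for k in keep]], keep, removed


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.normal(size=200)
    X = np.column_stack([a, 2 * a + 0.01 * rng.normal(size=200), rng.normal(size=200)])
    _, keep, removed = prune_correlated(X, ["gradmean", "gradmedian", "entropy"])
    print(keep, removed)
```

A greedy pass depends on column order. Hierarchical clustering on 1 − |r| is a common order-independent alternative.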

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Conceição, M.R.A.; de Mendonça, L.F.F.; Lentini, C.A.D.; da Cunha Lima, A.T.; Lopes, J.M.; de Vasconcelos, R.N.; Gouveia, M.B.; Porsani, M.J. SAR Oil Spill Detection System through Random Forest Classifiers. Remote Sens. 2021, 13, 2044. https://doi.org/10.3390/rs13112044


Chicago/Turabian style: Conceição, Marcos Reinan Assis, Luis Felipe Ferreira de Mendonça, Carlos Alessandre Domingos Lentini, André Telles da Cunha Lima, José Marques Lopes, Rodrigo Nogueira de Vasconcelos, Mainara Biazati Gouveia, and Milton José Porsani. 2021. "SAR Oil Spill Detection System through Random Forest Classifiers." Remote Sensing 13, no. 11: 2044. https://doi.org/10.3390/rs13112044