FEF-Net: A Deep Learning Approach to Multiview SAR Image Target Recognition

Abstract

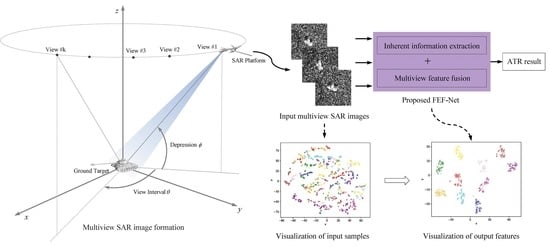

1. Introduction

2. Materials and Methods

2.1. Problem Formulation

2.2. Proposed Method

2.2.1. Network Framework

2.2.2. Deformable Convolution

2.2.3. SE Module

2.2.4. Other Modules

2.2.5. Loss Function and Network Training

3. Results and Discussion

3.1. Dataset

3.2. Network Configuration

3.3. Performance Analysis

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Training: Target Types | Training: Raw Images | Testing: Target Types | Testing: Raw Images |
|---|---|---|---|
| BMP2sn-9563 | 78 | BMP2sn-9563 | 195 |
| BTR70 | 78 | BTR70 | 196 |
| T72sn-132 | 78 | T72sn-132 | 196 |
| BTR60 | 86 | BTR60 | 195 |
| 2S1 | 100 | 2S1 | 274 |
| BRDM2 | 100 | BRDM2 | 274 |
| D7 | 100 | D7 | 274 |
| T62 | 100 | T62 | 273 |
| ZIL131 | 100 | ZIL131 | 274 |
| ZSU23/4 | 100 | ZSU23/4 | 274 |
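The per-class counts in the table above can be totalled with a few lines of Python. This is only a bookkeeping sketch: the dictionary names `TRAIN_COUNTS` and `TEST_COUNTS` are illustrative and not taken from the paper, and the numbers are copied directly from the table.

```python
# Raw image counts per target type, copied from the dataset table.
# The variable names are illustrative, not from the paper.
TRAIN_COUNTS = {
    "BMP2sn-9563": 78, "BTR70": 78, "T72sn-132": 78, "BTR60": 86,
    "2S1": 100, "BRDM2": 100, "D7": 100, "T62": 100,
    "ZIL131": 100, "ZSU23/4": 100,
}
TEST_COUNTS = {
    "BMP2sn-9563": 195, "BTR70": 196, "T72sn-132": 196, "BTR60": 195,
    "2S1": 274, "BRDM2": 274, "D7": 274, "T62": 273,
    "ZIL131": 274, "ZSU23/4": 274,
}

# Total number of raw images in each split.
train_total = sum(TRAIN_COUNTS.values())
test_total = sum(TEST_COUNTS.values())

print(f"training images: {train_total}")
print(f"testing images:  {test_total}")
```

The training total works out to 920 and the testing total to 2425; the former coincides with the raw image count listed for the 3-Views network instance in the final table.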

| Layer | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Module | Deformable Conv. | Max-Pool | Conv. | Max-Pool | SE | Conv. | Max-Pool | Conv. | Fully-Connected | Softmax |
| Configuration | WS: | WS: | NN: 48 | WS: | NN: 64 | NN: 10 | | | | |
| | SS: | SS: | SS: | SS: | SS: | SS: | SS: | | | |

| Class | BMP2sn-9563 | BTR70 | T72sn-132 | BTR60 | 2S1 | BRDM2 | D7 | T62 | ZIL131 | ZSU23/4 |
|---|---|---|---|---|---|---|---|---|---|---|
| BMP2sn-9563 | 99.10 | 0.00 | 0.10 | 0.00 | 0.65 | 0.00 | 0.00 | 0.15 | 0.00 | 0.00 |
| BTR70 | 0.00 | 99.95 | 0.00 | 0.00 | 0.05 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| T72sn-132 | 0.75 | 0.00 | 99.25 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| BTR60 | 0.25 | 0.65 | 0.00 | 98.10 | 0.10 | 0.65 | 0.00 | 0.25 | 0.00 | 0.00 |
| 2S1 | 0.00 | 0.05 | 0.00 | 0.00 | 99.85 | 0.10 | 0.00 | 0.00 | 0.00 | 0.00 |
| BRDM2 | 0.00 | 0.00 | 0.00 | 0.00 | 0.25 | 98.85 | 0.00 | 0.00 | 0.90 | 0.00 |
| D7 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 98.90 | 0.00 | 1.05 | 0.05 |
| T62 | 0.00 | 0.00 | 0.00 | 0.10 | 0.05 | 0.00 | 0.00 | 99.85 | 0.00 | 0.00 |
| ZIL131 | 0.00 | 0.00 | 0.00 | 0.00 | 0.60 | 0.00 | 0.00 | 0.00 | 99.40 | 0.00 |
| ZSU23/4 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.10 | 99.90 |
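As a reading aid for the confusion matrix above, the following Python sketch extracts the per-class recognition rates (the diagonal), their unweighted mean, and the largest single confusion. It assumes that rows are true classes, columns are predicted classes, and values are percentages; the table itself does not state this, and the helper names are illustrative. The unweighted mean is not necessarily the overall rate reported by the authors, which may be weighted by the number of test samples per class.

```python
# Confusion matrix copied from the table above (values in percent).
# Assumption: rows are true classes, columns are predicted classes.
CLASSES = ["BMP2sn-9563", "BTR70", "T72sn-132", "BTR60", "2S1",
           "BRDM2", "D7", "T62", "ZIL131", "ZSU23/4"]

CONFUSION = [
    [99.10, 0.00, 0.10, 0.00, 0.65, 0.00, 0.00, 0.15, 0.00, 0.00],
    [0.00, 99.95, 0.00, 0.00, 0.05, 0.00, 0.00, 0.00, 0.00, 0.00],
    [0.75, 0.00, 99.25, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],
    [0.25, 0.65, 0.00, 98.10, 0.10, 0.65, 0.00, 0.25, 0.00, 0.00],
    [0.00, 0.05, 0.00, 0.00, 99.85, 0.10, 0.00, 0.00, 0.00, 0.00],
    [0.00, 0.00, 0.00, 0.00, 0.25, 98.85, 0.00, 0.00, 0.90, 0.00],
    [0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 98.90, 0.00, 1.05, 0.05],
    [0.00, 0.00, 0.00, 0.10, 0.05, 0.00, 0.00, 99.85, 0.00, 0.00],
    [0.00, 0.00, 0.00, 0.00, 0.60, 0.00, 0.00, 0.00, 99.40, 0.00],
    [0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.10, 99.90],
]


def per_class_rates(matrix, classes):
    """Return the diagonal entries keyed by class name."""
    return {name: matrix[i][i] for i, name in enumerate(classes)}


def largest_confusion(matrix, classes):
    """Return (row class, column class, value) of the largest off-diagonal entry."""
    best = None
    for i, row in enumerate(matrix):
        for j, value in enumerate(row):
            if i != j and (best is None or value > best[2]):
                best = (classes[i], classes[j], value)
    return best


rates = per_class_rates(CONFUSION, CLASSES)
mean_rate = sum(rates.values()) / len(rates)  # unweighted mean of the diagonal
worst_pair = largest_confusion(CONFUSION, CLASSES)

print(f"unweighted mean recognition rate: {mean_rate:.3f}%")
print(f"largest confusion: {worst_pair[0]} -> {worst_pair[1]} ({worst_pair[2]:.2f}%)")
```

Under these assumptions, every row sums to 100%, and the largest off-diagonal entry is D7 recognized as ZIL131 at 1.05%.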

| Network Instances | Raw SAR Images | Generated Training Samples | Recognition Rates |
|---|---|---|---|
| 2-Views | 1377 | 21,834 | 98.42% |
| 3-Views | 920 | 48,764 | 99.31% |
| 4-Views | 690 | 43,533 | 99.34% |
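One way to read the table above is as an expansion factor: how many multiview training samples were generated per raw SAR image for each network instance. The short Python sketch below computes that ratio from the tabulated numbers only; the name `INSTANCES` and the notion of an "expansion factor" are introduced here for illustration and are not terms from the paper.

```python
# (raw SAR images, generated training samples, recognition rate in %)
# copied from the table above; the structure is illustrative.
INSTANCES = {
    "2-Views": (1377, 21834, 98.42),
    "3-Views": (920, 48764, 99.31),
    "4-Views": (690, 43533, 99.34),
}

# Generated training samples per raw image for each network instance.
expansion = {
    name: generated / raw
    for name, (raw, generated, _rate) in INSTANCES.items()
}

for name, factor in expansion.items():
    rate = INSTANCES[name][2]
    print(f"{name}: {factor:.2f} samples per raw image, {rate:.2f}% recognition rate")
```

The ratio grows from roughly 16 samples per raw image for two views to roughly 63 for four views, while the number of raw images used decreases.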

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pei, J.; Wang, Z.; Sun, X.; Huo, W.; Zhang, Y.; Huang, Y.; Wu, J.; Yang, J. FEF-Net: A Deep Learning Approach to Multiview SAR Image Target Recognition. Remote Sens. 2021, 13, 3493. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13173493
