Very High-Resolution Imagery and Machine Learning for Detailed Mapping of Riparian Vegetation and Substrate Types

Abstract

1. Introduction

- (I) To evaluate the final results for Random Forest classification models for the levels Basic surface type (BA, e.g., substrate types, water), Vegetation units (VE, e.g., reed, herbaceous vegetation), Dominant stands (DO, up to species level) and Substrate types (SU, e.g., sand, gravel);

- (II) To compare classification results from the Random Forest algorithm (RF) with Support Vector Machine (SVM) and Extreme Gradient Boosting (XGBoost);

- (III) To identify areas in the classification results with high degrees of uncertainty or certainty, respectively; and

- (IV) To transfer the workflow to data acquired by gyrocopter and to compare the achieved results with those from UAS data.

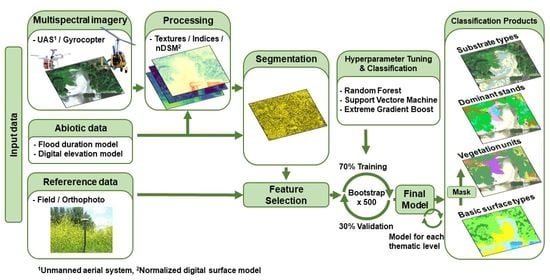

2. Materials and Methods

2.1. Study Area

2.2. Image Data and Pre-Processing

2.3. Additional Abiotic Data

2.4. Reference Data

2.5. Image Segmentation

2.6. Feature Selection

2.7. Classification Algorithm

2.8. Accuracy Measures and Model Fitting

3. Results and Discussion

3.1. Random Forest Classification of Different Thematic Levels

3.1.1. Results

3.1.2. Discussion

3.2. Comparison of Algorithms

3.2.1. Results

3.2.2. Discussion

3.3. Spatial Evaluation of Classification Probability

3.3.1. Results

3.3.2. Discussion

3.4. Transfer of Classification Method from UAS to Larger Scale Gyrocopter Data

3.4.1. Results

3.4.2. Discussion

4. Conclusions

- (I) Classification results for UAS data with RF decreased with increasing class detail, from BA (OA = 88.9%) and VE (OA = 88.4%) to DO (OA = 74.8%) and SU (OA = 62%). Classes with high spatial coverage and homogeneous classes could be mapped sufficiently well. Classes with low sample sizes showed high intra-class variability, even when good median accuracies were achieved. In general, RF was a suitable algorithm for classifying vegetation and substrate types in riparian zones. Feature selection showed that spectral indices had the largest explanatory power in the models at the BA level, hydrotopographic parameters at the VE and DO levels, and textural indices at the SU level.

- (II) Classification performance did not change notably when SVM or XGBoost was used instead of RF. When classifying vegetation, SVM produced more heterogeneous and patchy maps that did not match the visual interpretation and would be difficult to work with in the field. XGBoost, in turn, required the most computation time. RF was therefore used for the rest of this study.

- (III) Classification probability maps can be used to identify areas of low performance and to prioritize them during (re-)visits in the field. For instance, areas located in the transition zone and shaded areas of vegetation had low classification probabilities and were often classified incorrectly. Hence, using probability maps may increase the efficiency of field surveys.

- (IV) Gyrocopter data can be used within the same classification workflow and achieve results comparable to those from UAS data for classes of the levels BA and VE, as well as for classes covering larger and homogeneous areas. For management purposes, it might be useful to collect information over larger areas, possibly in combination with UAS.
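The use of classification probability maps described in (III) can be sketched in a few lines. This is only an illustration under stated assumptions, not the workflow used in the study: the segment identifiers, the example probabilities and the 0.6 threshold are hypothetical, and the per-class probabilities are assumed to come from any probabilistic classifier (for example, a Random Forest run in probability mode).

```python
from typing import Dict, List, Tuple


def flag_uncertain_segments(
    probabilities: Dict[str, Dict[str, float]],
    threshold: float = 0.6,  # hypothetical cut-off, not taken from the study
) -> List[Tuple[str, str, float]]:
    """Return (segment_id, predicted_class, max_probability) for every segment
    whose highest class probability is below the threshold, ordered from the
    least to the most certain so that field visits can be prioritized."""
    flagged = []
    for segment_id, class_probs in probabilities.items():
        # The predicted class is the one with the highest probability.
        predicted_class = max(class_probs, key=class_probs.get)
        max_prob = class_probs[predicted_class]
        if max_prob < threshold:
            flagged.append((segment_id, predicted_class, max_prob))
    return sorted(flagged, key=lambda item: item[2])


# Hypothetical per-segment class probabilities (illustrative values only).
example = {
    "seg_1": {"Reed": 0.45, "Herbaceous vegetation": 0.40, "Woody": 0.15},
    "seg_2": {"Reed": 0.05, "Herbaceous vegetation": 0.90, "Woody": 0.05},
    "seg_3": {"Reed": 0.30, "Herbaceous vegetation": 0.35, "Woody": 0.35},
}
revisit = flag_uncertain_segments(example)
```

In this toy input, `seg_2` is classified with high probability and is not flagged, while `seg_3` and `seg_1` are returned in that order as candidates for a field check.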

5. Outlook and Further Work

- To apply the workflow to the whole gyrocopter area and to include classes, such as “urban areas” or “agriculture”, which are not represented in the area investigated in this study. This step also includes the collection of training and validation data for those classes based on the imagery.

- To evaluate the transferability of the classification workflow to new areas. This step also includes applying the existing classification models to new areas and evaluating to what extent the existing reference data can be used, in addition to newly collected reference data, to build new models.

- To examine the effect of multi-temporal imagery on classification results, as demonstrated by van Iersel, Straatsma, Middelkoop and Addink [18], and to evaluate whether a potential increase in classification performance justifies the additional workload.

- To implement the proposed workflow in the management routines of the waterway and shipping administration and to adjust it to the future needs of the stakeholders [13]. A potential routine could be to use the classification maps as a basis for more detailed vegetation mapping or to use them within the hydromorphological evaluation and classification tool, Valmorph [89].

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Baattrup-Pedersen, A.; Jensen, K.M.B.; Thodsen, H.; Andersen, H.E.; Andersen, P.M.; Larsen, S.E.; Riis, T.; Andersen, D.K.; Audet, J.; Kronvang, B. Effects of stream flooding on the distribution and diversity of groundwater-dependent vegetation in riparian areas. Freshw. Biol. 2013, 58, 817–827.

- Capon, S.J.; Pettit, N.E. Turquoise is the new green: Restoring and enhancing riparian function in the Anthropocene. Ecol. Manag. Restor. 2018, 19, 44–53.

- Chakraborty, S.K. Riverine Ecology; Springer International Publishing: Basel, Switzerland, 2021; Volume 2, ISBN 978-3-030-53941-2.

- Grizzetti, B.; Liquete, C.; Pistocchi, A.; Vigiak, O.; Zulian, G.; Bouraoui, F.; De Roo, A.; Cardoso, A.C. Relationship between ecological condition and ecosystem services in European rivers, lakes and coastal waters. Sci. Total Environ. 2019, 671, 452–465.

- Cole, L.J.; Stockan, J.; Helliwell, R. Managing riparian buffer strips to optimise ecosystem services: A review. Agric. Ecosyst. Environ. 2020, 296, 106891.

- Meybeck, M. Global analysis of river systems: From Earth system controls to Anthropocene syndromes. Philos. Trans. R. Soc. B Biol. Sci. 2003, 358, 1935–1955.

- Voulvoulis, N.; Arpon, K.D.; Giakoumis, T. The EU Water Framework Directive: From great expectations to problems with implementation. Sci. Total Environ. 2017, 575, 358–366.

- Council of the European Commission, Council Directive 92/43/EEC of 21 May 1992 on the conservation of natural habitats and of wild fauna and flora. Off. J. 1992, 206, 7–50. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A31992L0043 (accessed on 12 January 2022).

- The European Parliament and Council of the European Union, Directive 2000/60/EC of the European Parliament and of the Council Establishing a Framework for the Community Action in the Field of Water Policy. Off. J. 2000, 327, 1–73. Available online: https://eur-lex.europa.eu/legal-content/en/TXT/?uri=CELEX:32000L0060 (accessed on 12 January 2022).

- Germany’s ‘Blue Belt’ Programme. Available online: https://www.bundesregierung.de/breg-en/federal-government/-germany-s-blue-belt-programme-394228 (accessed on 12 January 2022).

- Dronova, I. Object-Based Image Analysis in Wetland Research: A Review. Remote Sens. 2015, 7, 6380–6413.

- Ren, L.; Liu, Y.; Zhang, S.; Cheng, L.; Guo, Y.; Ding, A. Vegetation Properties in Human-Impacted Riparian Zones Based on Unmanned Aerial Vehicle (UAV) Imagery: An Analysis of River Reaches in the Yongding River Basin. Forests 2020, 12, 22.

- Huylenbroeck, L.; Laslier, M.; Dufour, S.; Georges, B.; Lejeune, P.; Michez, A. Using remote sensing to characterize riparian vegetation: A review of available tools and perspectives for managers. J. Environ. Manag. 2020, 267, 110652.

- Piégay, H.; Arnaud, F.; Belletti, B.; Bertrand, M.; Bizzi, S.; Carbonneau, P.; Dufour, S.; Liébault, F.; Ruiz-Villanueva, V.; Slater, L. Remotely sensed rivers in the Anthropocene: State of the art and prospects. Earth Surf. Process. Landf. 2020, 45, 157–188.

- Tomsett, C.; Leyland, J. Remote sensing of river corridors: A review of current trends and future directions. River Res. Appl. 2019, 35, 779–803.

- Langhammer, J. UAV Monitoring of Stream Restorations. Hydrology 2019, 6, 29.

- Arif, M.S.M.; Gülch, E.; Tuhtan, J.A.; Thumser, P.; Haas, C. An investigation of image processing techniques for substrate classification based on dominant grain size using RGB images from UAV. Int. J. Remote Sens. 2016, 38, 2639–2661.

- Van Iersel, W.; Straatsma, M.; Middelkoop, H.; Addink, E. Multitemporal Classification of River Floodplain Vegetation Using Time Series of UAV Images. Remote Sens. 2018, 10, 1144.

- Gómez-Sapiens, M.; Schlatter, K.J.; Meléndez, Á.; Hernández-López, D.; Salazar, H.; Kendy, E.; Flessa, K.W. Improving the efficiency and accuracy of evaluating aridland riparian habitat restoration using unmanned aerial vehicles. Remote Sens. Ecol. Conserv. 2021, 7, 488–503.

- Van Iersel, W.; Straatsma, M.; Addink, E.; Middelkoop, H. Monitoring height and greenness of non-woody floodplain vegetation with UAV time series. ISPRS J. Photogramm. Remote Sens. 2018, 141, 112–123.

- Martin, F.-M.; Müllerová, J.; Borgniet, L.; Dommanget, F.; Breton, V.; Evette, A. Using Single- and Multi-Date UAV and Satellite Imagery to Accurately Monitor Invasive Knotweed Species. Remote Sens. 2018, 10, 1662.

- Michez, A.; Piégay, H.; Jonathan, L.; Claessens, H.; Lejeune, P. Mapping of riparian invasive species with supervised classification of Unmanned Aerial System (UAS) imagery. Int. J. Appl. Earth Obs. Geoinf. 2016, 44, 88–94.

- IKSR. Überblicksbericht über die Entwicklung des ‘Biotopverbund am Rhein’ 2005–2013; Internationale Kommission zum Schutz des Rheins: Koblenz, Germany, 2015; ISBN 3-941994-79-4.

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293.

- Weber, I.; Jenal, A.; Kneer, C.; Bongartz, J. Gyrocopter-based remote sensing platform. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-7/W3, 1333–1337.

- Fricke, K.; Baschek, B.; Jenal, A.; Kneer, C.; Weber, I.; Bongartz, J.; Wyrwa, J.; Schöl, A. Observing Water Surface Temperature from Two Different Airborne Platforms over Temporarily Flooded Wadden Areas at the Elbe Estuary—Methods for Corrections and Analysis. Remote Sens. 2021, 13, 1489.

- Calvino-Cancela, M.; Mendez-Rial, R.; Reguera-Salgado, J.; Martin-Herrero, J. Alien Plant Monitoring with Ultralight Airborne Imaging Spectroscopy. PLoS ONE 2014, 9, e102381.

- Hellwig, F.M.; Stelmaszczuk-Górska, M.A.; Dubois, C.; Wolsza, M.; Truckenbrodt, S.C.; Sagichewski, H.; Chmara, S.; Bannehr, L.; Lausch, A.; Schmullius, C. Mapping European Spruce Bark Beetle Infestation at Its Early Phase Using Gyrocopter-Mounted Hyperspectral Data and Field Measurements. Remote Sens. 2021, 13, 4659.

- Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Madrigal, V.P.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the Use of Unmanned Aerial Systems for Environmental Monitoring. Remote Sens. 2018, 10, 641.

- Jiménez López, J.; Mulero-Pázmány, M. Drones for Conservation in Protected Areas: Present and Future. Drones 2019, 3, 10.

- Mahdavi, S.; Salehi, B.; Granger, J.; Amani, M.; Brisco, B.; Huang, W. Remote sensing for wetland classification: A comprehensive review. GISci. Remote Sens. 2017, 55, 623–658.

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Zhang, C.; Xie, Z. Object-based Vegetation Mapping in the Kissimmee River Watershed Using HyMap Data and Machine Learning Techniques. Wetlands 2013, 33, 233–244.

- Nguyen, U.; Glenn, E.P.; Dang, T.D.; Pham, L. Mapping vegetation types in semi-arid riparian regions using random forest and object-based image approach: A case study of the Colorado River Ecosystem, Grand Canyon, Arizona. Ecol. Inform. 2019, 50, 43–50.

- Fassnacht, F.E.; Latifi, H.; Stereńczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of studies on tree species classification from remotely sensed data. Remote Sens. Environ. 2016, 186, 64–87.

- Georganos, S.; Grippa, T.; VanHuysse, S.; Lennert, M.; Shimoni, M.; Kalogirou, S.; Wolff, E. Less is more: Optimizing classification performance through feature selection in a very-high-resolution remote sensing object-based urban application. GISci. Remote Sens. 2017, 55, 221–242.

- Sandino, J.; Gonzalez, F.; Mengersen, K.; Gaston, K.J. UAVs and Machine Learning Revolutionising Invasive Grass and Vegetation Surveys in Remote Arid Lands. Sensors 2018, 18, 605.

- Zhang, H.; Eziz, A.; Xiao, J.; Tao, S.; Wang, S.; Tang, Z.; Zhu, J.; Fang, J. High-Resolution Vegetation Mapping Using eXtreme Gradient Boosting Based on Extensive Features. Remote Sens. 2019, 11, 1505.

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2019; ISBN 0429629354.

- Malley, J.D.; Kruppa, J.; Dasgupta, A.; Malley, K.G.; Ziegler, A. Probability Machines. Methods Inf. Med. 2012, 51, 74–81.

- Millard, K.; Richardson, M. On the Importance of Training Data Sample Selection in Random Forest Image Classification: A Case Study in Peatland Ecosystem Mapping. Remote Sens. 2015, 7, 8489–8515.

- UNEP-WCMC. Protected Area Profile for Nsg Emmericher Ward from the World Database of Protected Areas. Available online: www.protectedplanet.net (accessed on 20 December 2021).

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Jensen, J.R. Remote Sensing of the Environment: An Earth Resource Perspective; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2007; ISBN 9780136129134.

- Xue, J.; Su, B. Significant Remote Sensing Vegetation Indices: A Review of Developments and Applications. J. Sens. 2017, 2017, 1353691.

- Jorge, J.; Vallbé, M.; Soler, J.A. Detection of irrigation inhomogeneities in an olive grove using the NDRE vegetation index obtained from UAV images. Eur. J. Remote Sens. 2019, 52, 169–177.

- Michez, A.; Piégay, H.; Lisein, J.; Claessens, H.; Lejeune, P. Classification of riparian forest species and health condition using multi-temporal and hyperspatial imagery from unmanned aerial system. Environ. Monit. Assess. 2016, 188, 146.

- Mishra, V.N.; Prasad, R.; Rai, P.K.; Vishwakarma, A.K.; Arora, A. Performance evaluation of textural features in improving land use/land cover classification accuracy of heterogeneous landscape using multi-sensor remote sensing data. Earth Sci. Inform. 2018, 12, 71–86.

- Laliberte, A.S.; Rango, A. Texture and Scale in Object-Based Analysis of Subdecimeter Resolution Unmanned Aerial Vehicle (UAV) Imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 761–770.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Gini, R.; Sona, G.; Ronchetti, G.; Passoni, D.; Pinto, L. Improving Tree Species Classification Using UAS Multispectral Images and Texture Measures. ISPRS Int. J. Geo-Inf. 2018, 7, 315.

- Dorigo, W.; Lucieer, A.; Podobnikar, T.; Čarni, A. Mapping invasive Fallopia japonica by combined spectral, spatial, and temporal analysis of digital orthophotos. Int. J. Appl. Earth Obs. Geoinf. 2012, 19, 185–195.

- Trimble. eCognition Developer 10.1 Reference Book; Trimble Germany GmbH: Munich, Germany, 2021.

- Tu, Y.-H.; Johansen, K.; Phinn, S.; Robson, A. Measuring Canopy Structure and Condition Using Multi-Spectral UAS Imagery in a Horticultural Environment. Remote Sens. 2019, 11, 269.

- Kupidura, P.; Osińska-Skotak, K.; Lesisz, K.; Podkowa, A. The Efficacy Analysis of Determining the Wooded and Shrubbed Area Based on Archival Aerial Imagery Using Texture Analysis. ISPRS Int. J. Geo-Inf. 2019, 8, 450.

- Weber, A. Annual flood durations along River Elbe and Rhine from 1960–2019 computed with flood3. in preparation.

- Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 2004, 58, 239–258.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Guyon, I.; Elisseeff, A. Introduction to Variable and Feature Selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

- Bommert, A.; Sun, X.; Bischl, B.; Rahnenführer, J.; Lang, M. Benchmark for filter methods for feature selection in high-dimensional classification data. Comput. Stat. Data Anal. 2020, 143, 106839.

- Wright, M.N.; Ziegler, A. Ranger: A fast implementation of random forests for high dimensional data in C++ and R. J. Stat. Softw. 2017, 77, 1–17.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Chen, T.; Guestrin, C. XGBoost. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Bischl, B.; Lang, M.; Kotthoff, L.; Schiffner, J.; Richter, J.; Studerus, E.; Casalicchio, G.; Jones, Z. mlr: Machine Learning in R. J. Mach. Learn. Res. 2016, 17, 5938–5942.

- Meyer, D.; Dimitriadou, E.; Hornik, K.; Weingessel, A.; Leisch, F.; Chang, C.-C.; Lin, C.-C. e1071: Misc Functions of the Department of Statistics, Probability Theory Group (Formerly: E1071), TU Wien; R Package Version 1.7-9. 2021. Available online: https://cran.r-project.org/web/packages/e1071/index.html (accessed on 12 January 2022).

- Chen, T.; He, T.; Benesty, M.; Khotilovich, V.; Tang, Y.; Cho, H.; Chen, K.; Mitchell, R.; Cano, I.; Zhou, T.; et al. xgboost: Extreme Gradient Boosting; R Package Version 1.5.0.1; 2021. Available online: https://cran.r-project.org/web/packages/xgboost/index.html (accessed on 12 January 2022).

- Pontius, R.G., Jr.; Millones, M. Death to Kappa: Birth of quantity disagreement and allocation disagreement for accuracy assessment. Int. J. Remote Sens. 2011, 32, 4407–4429.

- Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 2014, 148, 42–57.

- Hsiao, L.-H.; Cheng, K.-S. Assessing Uncertainty in LULC Classification Accuracy by Using Bootstrap Resampling. Remote Sens. 2016, 8, 705.

- Efron, B.; Tibshirani, R. An Introduction to the Bootstrap; Chapman & Hall: New York, NY, USA, 1993; ISBN 0412042312.

- Lyons, M.B.; Keith, D.A.; Phinn, S.R.; Mason, T.J.; Elith, J. A comparison of resampling methods for remote sensing classification and accuracy assessment. Remote Sens. Environ. 2018, 208, 145–153.

- Roberts, D.R.; Bahn, V.; Ciuti, S.; Boyce, M.; Elith, J.; Guillera-Arroita, G.; Hauenstein, S.; Lahoz-Monfort, J.J.; Schroder, B.; Thuiller, W.; et al. Cross-validation strategies for data with temporal, spatial, hierarchical, or phylogenetic structure. Ecography 2017, 40, 913–929.

- Lopatin, J.; Dolos, K.; Kattenborn, T.; Fassnacht, F. How canopy shadow affects invasive plant species classification in high spatial resolution remote sensing. Remote Sens. Ecol. Conserv. 2019, 5, 302–317.

- Dunford, R.; Michel, K.; Gagnage, M.; Piégay, H.; Trémelo, M.-L. Potential and constraints of Unmanned Aerial Vehicle technology for the characterization of Mediterranean riparian forest. Int. J. Remote Sens. 2009, 30, 4915–4935.

- Hay, A.M. Sampling designs to test land-use map accuracy. Photogramm. Eng. Remote Sens. 1979, 45, 529–533.

- Rogan, J.; Franklin, J.; Stow, D.; Miller, J.; Woodcock, C.; Roberts, D. Mapping land-cover modifications over large areas: A comparison of machine learning algorithms. Remote Sens. Environ. 2008, 112, 2272–2283.

- Burai, P.; Deák, B.; Valkó, O.; Tomor, T. Classification of Herbaceous Vegetation Using Airborne Hyperspectral Imagery. Remote Sens. 2015, 7, 2046–2066.

- Xu, S.; Zhao, Q.; Yin, K.; Zhang, F.; Liu, D.; Yang, G. Combining random forest and support vector machines for object-based rural-land-cover classification using high spatial resolution imagery. J. Appl. Remote Sens. 2019, 13, 014521.

- Zhang, L.; Sun, X.; Wu, T.; Zhang, H. An Analysis of Shadow Effects on Spectral Vegetation Indexes Using a Ground-Based Imaging Spectrometer. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2188–2192.

- Pande-Chhetri, R.; Abd-Elrahman, A.; Liu, T.; Morton, J.; Wilhelm, V.L. Object-based classification of wetland vegetation using very high-resolution unmanned air system imagery. Eur. J. Remote Sens. 2017, 50, 564–576.

- Johnson, B.; Xie, Z. Unsupervised image segmentation evaluation and refinement using a multi-scale approach. ISPRS J. Photogramm. Remote Sens. 2011, 66, 473–483.

- Schuster, C.; Forster, M.; Kleinschmit, B. Testing the red edge channel for improving land-use classifications based on high-resolution multi-spectral satellite data. Int. J. Remote Sens. 2012, 33, 5583–5599.

- Li, X.; Chen, G.; Liu, J.; Cheng, X.; Liao, Y. Effects of RapidEye imagery’s red-edge band and vegetation indices on land cover classification in an arid region. Chin. Geogr. Sci. 2017, 27, 827–835.

- Naethe, P.; Asgari, M.; Kneer, C.; Knieps, M.; Jenal, A.; Weber, I.; Moelter, T.; Dzunic, F.; Deffert, P.; Rommel, E.; et al. Calibration and Validation of two levels of airborne, multi-spectral imaging using timesynchronous spectroscopy on the ground. in preparation.

- Räsänen, A.; Virtanen, T. Data and resolution requirements in mapping vegetation in spatially heterogeneous landscapes. Remote Sens. Environ. 2019, 230, 111207.

- Quick, I.; König, F.; Baulig, Y.; Borgsmüller, C.; Schriever, S. The Hydromorphological Classification Tool Valmorph 2 for Large and Navigable Surface Waters; BfG Rep. No. 1910; Federal Institute of Hydrology: Koblenz, Germany, 2017.

| Platform | Sensor | Blue, center in nm (bandwidth) | Green, center in nm (bandwidth) | Red, center in nm (bandwidth) | Red-Edge, center in nm (bandwidth) | Near-Infrared, center in nm (bandwidth) | Spatial Resolution in cm | Flight Altitude in m |
|---|---|---|---|---|---|---|---|---|
| UAS (DJI Phantom 4 Pro) | MicaSense RedEdge-M | 475 (20) | 560 (20) | 668 (10) | 717 (10) | 840 (40) | 5 | 70 |
| Gyrocopter | PanX 2.0 | 475 (50) | 550 (50) | 650 (25) | - | 875 (25) | 28 | 650 |

| Index | Equation | Case Study |
|---|---|---|
| NDVI | | Jensen [44] |
| gNDVI | | Xue and Su [45] |
| NDRE | | Jorge, et al. [46] |
| NDWI | | Jensen [44] |
| NRBI | | Michez, et al. [47] |
| SAVI | | Jensen [44] |
| SR | | Jensen [44] |
| GVI | | Michez, et al. [47] |
| Total Brightness | | This study, based on Jensen [44] |
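For orientation, some of the indices listed above can be computed from band reflectances as in the following sketch. The formulas are the commonly used textbook definitions, which is an assumption here: the exact equations applied in the study are not reproduced in the table. The function names, the example reflectance values and the soil adjustment factor of 0.5 for SAVI are hypothetical. NDWI, NRBI, GVI and Total Brightness are left out because their definitions vary between sources.

```python
from typing import Dict


def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    denominator = a + b
    return (a - b) / denominator if denominator != 0 else 0.0


def spectral_indices(
    green: float,
    red: float,
    red_edge: float,
    nir: float,
    soil_factor: float = 0.5,  # assumed SAVI soil adjustment factor L
) -> Dict[str, float]:
    """Compute a few common vegetation indices from band reflectances (0 to 1)."""
    return {
        "NDVI": normalized_difference(nir, red),        # (NIR - Red) / (NIR + Red)
        "gNDVI": normalized_difference(nir, green),     # (NIR - Green) / (NIR + Green)
        "NDRE": normalized_difference(nir, red_edge),   # (NIR - RE) / (NIR + RE)
        "SR": nir / red if red != 0 else float("inf"),  # simple ratio NIR / Red
        "SAVI": (1 + soil_factor) * (nir - red) / (nir + red + soil_factor),
    }


# Hypothetical reflectances for a vegetated segment (illustrative values only).
indices = spectral_indices(green=0.08, red=0.05, red_edge=0.20, nir=0.45)
```

In an object-based workflow, such values would typically be computed per band mean of each image segment and then offered to the feature selection step together with textural and hydrotopographic features.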

| Algorithm (R-Package) | Hyperparameter | Start | End | Tuned Value, BA | Tuned Value, VE | Tuned Value, DO | Tuned Value, SU |
|---|---|---|---|---|---|---|---|
| RF (ranger) | mtry | 1 | 63 | 2 | 19 | 12 | 17 |
| RF (ranger) | num.trees | 100 | 1000 | 201 | 513 | 499 | 200 |
| SVM (ksvm) | cost (C) | 0.1 | 10,000 | 841 | 71.7 | 31.7 | 3.04 |
| SVM (ksvm) | sigma | 0.001 | 10 | 0.00135 | 0.00344 | 0.00221 | 0.02040 |
| XGBoost (xgboost) | nrounds | 100 | 500 | 103 | 286 | 155 | 276 |
| XGBoost (xgboost) | max_depth | 1 | 10 | 6 | 3 | 4 | 10 |
| XGBoost (xgboost) | eta | 0.1 | 0.5 | 0.170 | 0.137 | 0.177 | 0.181 |
| XGBoost (xgboost) | lambda | 0.1 | 1 | 0.496 | 0.704 | 0.840 | 0.127 |
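The start and end values in the table above define a search space for hyperparameter tuning. The sketch below shows one way to express that space and to draw random candidate configurations from it. It is not the tuning procedure used in the study, whose search strategy is not described in this section: random search, the log-scale sampling for `cost` and `sigma`, and all function names are assumptions made for illustration. Parameter names follow the argument names of the ranger and xgboost packages.

```python
import math
import random
from typing import Dict, Tuple, Union

Number = Union[int, float]

# Tuning ranges (start, end) transcribed from the table above.
SEARCH_SPACE: Dict[str, Dict[str, Tuple[Number, Number]]] = {
    "RF": {"mtry": (1, 63), "num.trees": (100, 1000)},
    "SVM": {"cost": (0.1, 10000.0), "sigma": (0.001, 10.0)},
    "XGBoost": {
        "nrounds": (100, 500),
        "max_depth": (1, 10),
        "eta": (0.1, 0.5),
        "lambda": (0.1, 1.0),
    },
}
INTEGER_PARAMS = {"mtry", "num.trees", "nrounds", "max_depth"}
LOG_SCALE_PARAMS = {"cost", "sigma"}  # assumption: ranges span several orders of magnitude


def sample_configuration(algorithm: str, rng: random.Random) -> Dict[str, Number]:
    """Draw one random hyperparameter configuration within the tuning ranges."""
    config: Dict[str, Number] = {}
    for name, (low, high) in SEARCH_SPACE[algorithm].items():
        if name in INTEGER_PARAMS:
            config[name] = rng.randint(int(low), int(high))
        elif name in LOG_SCALE_PARAMS:
            value = math.exp(rng.uniform(math.log(low), math.log(high)))
            config[name] = min(max(value, low), high)  # guard against rounding
        else:
            config[name] = rng.uniform(low, high)
    return config


def is_within_ranges(algorithm: str, config: Dict[str, Number]) -> bool:
    """Check that every value of a configuration lies inside its tuning range."""
    ranges = SEARCH_SPACE[algorithm]
    return all(ranges[name][0] <= value <= ranges[name][1] for name, value in config.items())


rng = random.Random(42)  # fixed seed so the draws are reproducible
candidates = [sample_configuration("XGBoost", rng) for _ in range(5)]
```

Each candidate would then be scored with a resampling scheme such as cross-validation, and the best-scoring configuration kept per classification level.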

| Classification Level with Classified Classes | UAS RF PA | UAS RF UA | UAS SVM PA | UAS SVM UA | UAS XGBoost PA | UAS XGBoost UA | UAS n | Gyrocopter RF PA | Gyrocopter RF UA | Gyrocopter n |
|---|---|---|---|---|---|---|---|---|---|---|
| Basic surface types (BA) | OA = 88.9, Kc = 0.85 | | OA = 88.6, Kc = 0.85 | | OA = 88.3, Kc = 0.85 | | | OA = 88.4, Kc = 0.85 | | |
| Water | 90 | 88 | 90 | 87 | 89 | 88 | 150 | 87 | 94 | 156 |
| Water shallow | 77 | 81 | 75 | 83 | 77 | 80 | 165 | 79 | 85 | 172 |
| Substrate types | 88 | 93 | 88 | 92 | 87 | 93 | 259 | 87 | 94 | 244 |
| Substrate types wet | 83 | 76 | 85 | 77 | 82 | 76 | 198 | 90 | 78 | 232 |
| Vegetation | 96 | 94 | 95 | 94 | 96 | 94 | 623 | 97 | 90 | 555 |
| Vegetation shadow | 80 | 89 | 80 | 87 | 81 | 86 | 180 | 72 | 89 | 170 |
| Vegetation units (VE) | OA = 88.4, Kc = 0.82 | | OA = 89.2, Kc = 0.83 | | OA = 89.1, Kc = 0.83 | | | OA = 86.4, Kc = 0.79 | | |
| Grassland | 95 | 91 | 91 | 88 | 90 | 94 | 27 | 95 | 96 | |
| Herbaceous vegetation | 94 | 92 | 94 | 92 | 94 | 92 | 222 | 93 | 91 | 221 |
| Pioneers | 84 | 83 | 83 | 91 | 85 | 76 | 40 | 83 | 89 | 37 |
| Reed | 72 | 71 | 76 | 78 | 71 | 83 | 48 | 65 | 65 | 49 |
| Woody | 83 | 90 | 85 | 87 | 87 | 89 | 81 | 79 | 81 | |
| Dominant stands (DO) | OA = 74.8, Kc = 0.72 | | OA = 73.8, Kc = 0.71 | | OA = 66.5, Kc = 0.63 | | | OA = 65.6, Kc = 0.62 | | |
| Agrostis stolonifera | 32 | 65 | 37 | 50 | 8 | 14 | 8 | 13 | 28 | |
| Arctium lappa | 86 | 80 | 89 | 84 | 75 | 77 | 22 | 76 | 78 | |
| Brassica nigra | 76 | 64 | 82 | 66 | 64 | 60 | 33 | 63 | 55 | |
| Carduus crispus | 87 | 77 | 90 | 79 | 77 | 71 | 44 | 81 | 72 | 47 |
| Cirsium arvense | 20 | 53 | 9 | 21 | 8 | 13 | 11 | 19 | 38 | |
| Grassland | 95 | 92 | 81 | 69 | 81 | 81 | 13 | 78 | 72 | 15 |
| Lythrum salicaria | 68 | 92 | 62 | 64 | 99 | 99 | 8 | 67 | 97 | |
| Pasture | 92 | 91 | 73 | 86 | 91 | 88 | 19 | 74 | 82 | 18 |
| Phalaris arundinacea | 64 | 62 | 61 | 49 | 64 | 65 | 24 | 54 | 54 | 25 |
| Phragmites australis | 79 | 67 | 66 | 61 | 77 | 66 | 24 | 61 | 59 | |
| Pioneers | 83 | 70 | 61 | 82 | 72 | 63 | 20 | 84 | 80 | |
| Pioneers small | 88 | 84 | 97 | 79 | 84 | 93 | 7 | 90 | 82 | 6 |
| Populus spp. | 57 | 73 | 73 | 79 | 49 | 55 | 40 | 59 | 63 | 39 |
| Rubus caesius | 38 | 79 | 75 | 82 | 39 | 46 | 13 | 40 | 47 | |
| Salix spp. | 82 | 75 | 75 | 83 | 74 | 72 | 55 | 71 | 63 | 56 |
| Tanacetum vulgare | 4 | 19 | 9 | 18 | 12 | 16 | 10 | 1 | 4 | 11 |
| Tripleurospermum perforatum | 76 | 89 | 66 | 62 | 8 | 13 | 10 | 79 | 63 | |
| Urtica dioica | 87 | 76 | 86 | 84 | 80 | 70 | 66 | 77 | 68 | 61 |
| Woody | 82 | 82 | 81 | 75 | 74 | 81 | 18 | 47 | 73 | 15 |
| Substrate types (SU) | OA = 62, Kc = 0.53 | | OA = 64.9, Kc = 0.56 | | OA = 59.7, Kc = 0.50 | | | OA = 52, Kc = 0.37 | | |
| Armour stones | 73 | 70 | 83 | 74 | 74 | 74 | 22 | 24 | 62 | |
| Fine grained material | 68 | 58 | 66 | 61 | 60 | 57 | 29 | 66 | 55 | |
| Gravel | 73 | 68 | 79 | 68 | 70 | 66 | 75 | 73 | 54 | |
| Sand | 65 | 69 | 69 | 73 | 58 | 61 | 59 | 49 | 49 | |
| Layer of shells | 64 | 68 | 56 | 68 | 67 | 71 | 18 | 52 | 60 | |
| Stones | 28 | 50 | 21 | 44 | 34 | 42 | 17 | 5 | 17 | |
| Wood | 33 | 32 | 36 | 38 | 34 | 34 | 29 | 16 | 29 | |
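The accuracy measures reported in the table above can be derived from a confusion matrix, as in the following sketch. It uses the standard definitions of overall accuracy (OA), producer's accuracy (PA) and user's accuracy (UA), and assumes that Kc denotes Cohen's kappa coefficient. The function name and the two-class example matrix are hypothetical and do not come from the study.

```python
from typing import Dict, List


def accuracy_measures(matrix: List[List[int]], classes: List[str]) -> Dict[str, object]:
    """Compute OA, Cohen's kappa, PA and UA from a confusion matrix in which
    matrix[i][j] counts reference samples of class i predicted as class j."""
    k = len(classes)
    total = sum(sum(row) for row in matrix)
    diagonal = [matrix[i][i] for i in range(k)]
    row_totals = [sum(matrix[i]) for i in range(k)]                       # reference totals
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]  # predicted totals

    overall = sum(diagonal) / total
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)
    kappa = (overall - expected) / (1 - expected) if expected != 1 else float("nan")

    nan = float("nan")
    producers = {classes[i]: diagonal[i] / row_totals[i] if row_totals[i] else nan for i in range(k)}
    users = {classes[i]: diagonal[i] / col_totals[i] if col_totals[i] else nan for i in range(k)}
    return {"OA": overall, "kappa": kappa, "PA": producers, "UA": users}


# Hypothetical confusion matrix (rows: reference, columns: prediction).
example_classes = ["Water", "Vegetation"]
example_matrix = [
    [45, 5],   # 50 reference Water samples, 45 classified correctly
    [10, 40],  # 50 reference Vegetation samples, 40 classified correctly
]
result = accuracy_measures(example_matrix, example_classes)
```

PA describes how much of a reference class was found by the classifier, whereas UA describes how reliable a mapped class is for the map user, which is why both are reported per class.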

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rommel, E.; Giese, L.; Fricke, K.; Kathöfer, F.; Heuner, M.; Mölter, T.; Deffert, P.; Asgari, M.; Näthe, P.; Dzunic, F.; et al. Very High-Resolution Imagery and Machine Learning for Detailed Mapping of Riparian Vegetation and Substrate Types. Remote Sens. 2022, 14, 954. https://0-doi-org.brum.beds.ac.uk/10.3390/rs14040954