Georeferencing Urban Nighttime Lights Imagery Using Street Network Maps

Abstract

1. Introduction

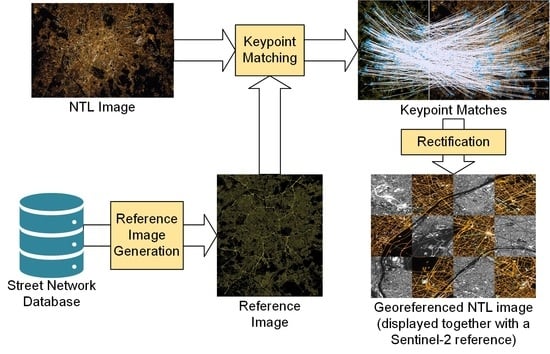

2. Methodology

- Reference image generation;

- BRISK keypoint extraction;

- Keypoint matching and outlier removal;

- Original imagery rectification.

2.1. Reference Image Generation

2.2. BRISK Keypoint Extraction

2.3. Keypoint Matching and Outlier Removal

2.4. Original Imagery Rectification

3. Evaluation

3.1. Evaluation Approach

3.2. Datasets

- Paris

- The Paris dataset (see Figure 1) is regarded as the optimal dataset for this methodology. It has a spatial resolution of ~7.6 m, and visual interpretation shows a well-defined, easily recognizable street network.

- Berlin

- Although the spatial resolution of the Berlin dataset (~8.7 m) is only slightly lower than that of the Paris dataset, its street network appears much blurrier, which is expected to make it more difficult to identify point matches with the reference NTL image. An interesting aspect of this dataset is that, for historic reasons, two different types of street lamps are used in Berlin. In West Berlin, fluorescent and mercury vapor lamps emit white light, whereas the lamps in East Berlin mostly use sodium vapor, resulting in light with a yellow hue; see [22].

- Milan

- The two datasets selected from Milan make it possible to study the influence of the lighting type on the presented methodology. The first image, acquired in March 2012, shows street lighting based almost exclusively on sodium vapor lamps. The second image, from April 2015, was acquired after LED lighting had been installed in the city center, which changes the radiance recorded in the NTL imagery. For more details on the change in radiance for these datasets, see [23].

- Vienna

- The dataset from Vienna has a higher tilt angle of 26°. This not only results in a slightly lower spatial resolution (~9.4 m) but also means that the direct view of many of the roads might be obstructed.

- Rome

- The dataset from Rome features a mix of organically grown street networks and grid plans. With a smaller tilt angle of 15°, it shows well-recognizable streets.

- Harbin

- The dataset from Harbin, acquired in 2021, is one of the most recent datasets. Its street network is based mostly on a grid plan, typical of modern Asian cities. While this regular pattern might be challenging for the matching approach used, the roads are very well recognizable, so the image is expected to be well suited for keypoint detection.

- Algiers

- The dataset from Algiers features an organically grown street network shaped by the mountainous terrain. No tilt angle is provided for this scene, but based on the provided ISS position and the manually determined image center, it was determined to be close to nadir view. In some parts of the image, clouds obstruct the view, which might impair the matching process.

- Las Vegas

- The dataset from Las Vegas is the oldest dataset (acquired in November 2010) and was selected to test the limitations of the proposed approach. It was acquired with a shorter focal length of 180 mm and a high tilt angle of 52°, resulting in an estimated spatial resolution of only ~26.6 m, so only major roads can be distinguished. In addition, the regular street pattern poses a further challenge for the matching, as it may result in very similar-looking descriptors for the selected keypoints.
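
The link between focal length, tilt angle, and spatial resolution noted for the Vienna and Las Vegas datasets can be made concrete with a rough ground sample distance (GSD) estimate. The sketch below is a flat-Earth approximation that ignores Earth curvature, terrain height, and lens distortion; it is not necessarily how the resolutions in the dataset table were derived. The pixel pitch is not listed in that table (its sensor format row is empty), so the Nikon D3S values used here (36.0 mm sensor width, 4256 px image width) are assumptions taken from the camera's published specifications. The helper names are hypothetical.

```python
import math


def pixel_pitch_m(sensor_width_mm: float, image_width_px: int) -> float:
    """Pixel pitch in metres from the sensor width and the image width in pixels."""
    return sensor_width_mm * 1e-3 / image_width_px


def estimate_gsd_m(pitch_m: float, altitude_m: float,
                   focal_length_m: float, tilt_deg: float) -> float:
    """Rough ground sample distance (m) perpendicular to the tilt direction.

    Flat-Earth approximation: the slant range grows as altitude / cos(tilt),
    and one pixel covers pitch * slant_range / focal_length on the ground.
    """
    if not 0.0 <= tilt_deg < 90.0:
        raise ValueError("tilt_deg must be in [0, 90)")
    slant_range_m = altitude_m / math.cos(math.radians(tilt_deg))
    return pitch_m * slant_range_m / focal_length_m


# Assumed Nikon D3S specification (not given in the dataset table): 36.0 mm, 4256 px
d3s_pitch = pixel_pitch_m(36.0, 4256)
# Las Vegas values from the dataset table: 350 km altitude, 180 mm focal length, 52 deg tilt
gsd = estimate_gsd_m(d3s_pitch, 350e3, 0.180, 52.0)
print(f"Las Vegas GSD: {gsd:.1f} m")  # prints: Las Vegas GSD: 26.7 m
```

The estimate of about 26.7 m is close to the ~26.6 m quoted for Las Vegas. At nadir, the same camera and altitude would give roughly 16 m, so the tilt accounts for much of the coarser resolution; along the tilt direction, a pixel's ground footprint is stretched by a further factor of 1/cos(tilt).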

3.3. Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Claverie, M.; Ju, J.; Masek, J.G.; Dungan, J.L.; Vermote, E.F.; Roger, J.C.; Skakun, S.V.; Justice, C. The Harmonized Landsat and Sentinel-2 Surface Reflectance Data Set. Remote Sens. Environ. 2018, 219, 145–161.

2. Elvidge, C.D.; Baugh, K.; Zhizhin, M.; Hsu, F.C.; Ghosh, T. VIIRS Night-Time Lights. Int. J. Remote Sens. 2017, 38, 5860–5879.

3. Sánchez de Miguel, A.; Kyba, C.C.M.; Aubé, M.; Zamorano, J.; Cardiel, N.; Tapia, C.; Bennie, J.; Gaston, K.J. Colour Remote Sensing of the Impact of Artificial Light at Night (I): The Potential of the International Space Station and Other DSLR-based Platforms. Remote Sens. Environ. 2019, 224, 92–103.

4. Sánchez de Miguel, A.; Zamorano, J.; Aubé, M.; Bennie, J.; Gallego, J.; Ocaña, F.; Pettit, D.R.; Stefanov, W.L.; Gaston, K.J. Colour Remote Sensing of the Impact of Artificial Light at Night (II): Calibration of DSLR-based Images from the International Space Station. Remote Sens. Environ. 2021, 264, 112611.

5. Ghosh, T.; Hsu, F. Advances in Remote Sensing with Nighttime Lights (Special Issue). Remote Sens. 2019, 11, 2194.

6. Sánchez de Miguel, A.; Gomez Castaño, J.; Lombraña, D.; Zamorano, J.; Gallego, J. Cities at Night: Citizens Science to Rescue an Archive for the Science. IAU Gen. Assem. 2015, 29, 2251113.

7. Müller, R.; Lehner, M.; Müller, R.; Reinartz, P.; Schroeder, M.; Vollmer, B. A Program for Direct Georeferencing of Airborne and Spaceborne Line Scanner Images. In Proceedings of the ISPRS Commission I Symposium, Hyderabad, India, 3–6 December 2002; pp. 148–153.

8. Wang, W.; Cao, C.; Bai, Y.; Blonski, S.; Schull, M.A. Assessment of the NOAA S-NPP VIIRS Geolocation Reprocessing Improvements. Remote Sens. 2017, 9, 974.

9. Mooney, P.; Minghini, M. A Review of OpenStreetMap Data. In Mapping and the Citizen Sensor; Ubiquity Press: London, UK, 2017.

10. Canavosio-Zuzelski, R.; Agouris, P.; Doucette, P. A Photogrammetric Approach for Assessing Positional Accuracy of OpenStreetMap Roads. ISPRS Int. J. Geo-Inf. 2013, 2, 276–301.

11. Haklay, M. How Good is Volunteered Geographical Information? A Comparative Study of OpenStreetMap and Ordnance Survey Datasets. Environ. Plann. B Plann. Des. 2010, 37, 682–703.

12. Boeing, G. OSMnx: New Methods for Acquiring, Constructing, Analyzing, and Visualizing Complex Street Networks. Comput. Environ. Urban Syst. 2017, 65, 126–139.

13. United Nations Centre for Human Settlements (Habitat). The Relevance of Street Patterns and Public Space in Urban Areas; UN: Nairobi, Kenya, 2013.

14. Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary Robust Invariant Scalable Keypoints. In Proceedings of the 2011 IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

15. Schwind, P.; d’Angelo, P. Evaluating the Applicability of BRISK for the Geometric Registration of Remote Sensing Images. Remote Sens. Lett. 2015, 6, 677–686.

16. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

17. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Computer Vision–ECCV 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

18. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

19. Muja, M.; Lowe, D.G. Fast Approximate Nearest Neighbors with Automatic Algorithm Configuration. In Proceedings of the International Conference on Computer Vision Theory and Applications 2009 (VISAPP), Lisboa, Portugal, 5–8 February 2009; pp. 331–340.

20. Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

21. Clerc, S.; MPC Team. Sentinel-2 L1C Data Quality Report; Technical Report; ESA: Paris, France, 2020.

22. Levin, N.; Kyba, C.C.M.; Zhang, Q.; Sánchez de Miguel, A.; Román, M.O.; Li, X.; Portnov, B.A.; Molthan, A.L.; Jechow, A.; Miller, S.D.; et al. Remote Sensing of Night Lights: A Review and an Outlook for the Future. Remote Sens. Environ. 2020, 237, 111443.

23. Kyba, C.C.M.; Kuester, T.; Sánchez de Miguel, A.; Baugh, K.; Jechow, A.; Hölker, F.; Bennie, J.; Elvidge, C.D.; Gaston, K.J.; Guanter, L. Artificially Lit Surface of Earth at Night Increasing in Radiance and Extent. Sci. Adv. 2017, 3, e1701528.

24. Ayala, C.; Aranda, C.; Galar, M. Towards Fine-Grained Road Maps Extraction using Sentinel-2 Imagery. ISPRS Ann. Photogram. Remote Sens. Spat. Inf. Sci. 2021, 3, 9–14.

| PhotoID | ISS043-E-93480 | ISS035-E-17210 | ISS030-E-258865 | ISS043-E-93510 | ISS030-E-211480 | ISS043-E-121713 | ISS064-E-28381 | ISS065-E-203810 | ISS026-E-6241 |
|---|---|---|---|---|---|---|---|---|---|
| Name | Paris, France | Berlin, Germany | Milan #1, Italy | Milan #2, Italy | Vienna, Austria | Rome, Italy | Harbin, China | Algiers, Algeria | Las Vegas, USA |
| Center point (Lat (°), Long (°)) | 48.9, 2.3 | 52.5, 13.4 | 45.5, 9.2 | 45.5, 9.2 | 48.2, 16.4 | 41.9, 12.5 | 45.8, 126.6 | 36.7, 3.1 | 36.1, −115.2 |
| Spatial resolution (m) | 7.6 | 8.7 | 8.5 | 7.3 | 9.4 | 7.6 | 6.9 | 6.4 | 26.6 |
| Acquisition time (GMT) | 08.04.2015 23:18:37 | 06.04.2013 22:37:37 | 31.03.2012 00:45:28 | 08.04.2015 23:19:50 | 11.04.2012 00:02:41 | 14.04.2015 21:12:33 | 30.01.2021 11:47:52 | 24.07.2021 23:36:27 | 30.11.2010 12:05:27 |
| Camera position (Lat (°), Long (°), Altitude (km)) | 48.2, 1.5, 394 | 51.7, 13.2, 396 | 46.7, 10.1, 391 | 46.3, 7.5, 394 | 47.8, 18.1, 391 | 42.6, 13.1, 394 | 44.5, 126.3, 415 | 36.7, 2.9, 415 | 38.7, −112.2, 350 |
| Camera model | Nikon D4 | Nikon D3S | Nikon D3 | Nikon D4 | Nikon D3S | Nikon D4 | Nikon D5 | Nikon D5 | Nikon D3S |
| Sensor format (mm × mm) |  |  |  |  |  |  |  |  |  |
| Focal length (mm) | 400 | 400 | 400 | 400 | 400 | 400 | 400 | 400 | 180 |
| Tilt angle (°) | 17 | 13 | 23 | N/A (22) | 26 | 15 | 20 | N/A (2) | 52 |

| Name | Before RANSAC (# matches) | After RANSAC (# matches) | Range (px) | Mean (px) | RMSE (px) |
|---|---|---|---|---|---|
| Paris | 816 | 770 | 0.00–15.00 | 4.73 | 5.82 |
| Berlin | 49 | 19 | 0.00–14.38 | 5.07 | 6.36 |
| Milan #1 | 42 | 23 | 0.00–14.75 | 6.16 | 7.22 |
| Milan #2 | 28 | 11 | 0.00–12.30 | 5.59 | 6.84 |
| Vienna | 339 | 306 | 0.00–14.79 | 4.80 | 5.78 |
| Rome | 248 | 233 | 0.00–13.19 | 4.86 | 5.76 |
| Harbin | 38 | 14 | 0.00–14.43 | 5.41 | 6.89 |
| Algiers | 122 | 100 | 0.00–14.48 | 7.39 | 8.21 |
| Las Vegas | 58 | 18 | 0.00–8.22 | 2.62 | 3.44 |
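
The match counts before and after RANSAC give a rough inlier ratio per scene, from about 0.94 for Paris (770 of 816) to about 0.31 for Las Vegas (18 of 58). The standard RANSAC trial-count formula, N = log(1 − p) / log(1 − w^s), turns an inlier ratio w into the number of random minimal samples of size s needed to draw at least one outlier-free sample with probability p. The sketch below treats the post-RANSAC count as the inlier count, which is an approximation, and uses s = 4 on the hypothetical assumption of a homography model; the function name is also hypothetical.

```python
import math


def ransac_iterations(inlier_ratio: float, sample_size: int,
                      confidence: float = 0.99) -> int:
    """Draws needed so that, with probability `confidence`, at least one
    minimal sample of `sample_size` matches consists of inliers only."""
    if not 0.0 < inlier_ratio <= 1.0:
        raise ValueError("inlier_ratio must be in (0, 1]")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be in (0, 1)")
    # Probability that one random minimal sample contains only inliers
    p_clean_sample = inlier_ratio ** sample_size
    if p_clean_sample >= 1.0:
        return 1
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_clean_sample))


# (matches before RANSAC, matches after RANSAC), copied from the table above
counts = {"Paris": (816, 770), "Milan #2": (28, 11), "Las Vegas": (58, 18)}
for name, (before, after) in counts.items():
    w = after / before
    # sample_size=4 assumes a homography (hypothetical; the model is not stated here)
    print(f"{name}: inlier ratio {w:.2f}, draws needed {ransac_iterations(w, 4)}")
```

Because the chance of an all-inlier sample falls with the fourth power of the inlier ratio, the required number of draws grows steeply for scenes such as Berlin, Milan #2, Harbin, and Las Vegas, where fewer than half of the initial matches survive.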

| Name | Sentinel-2 Image (Unit, Acquisition Time) | Min (px) | Max (px) | Mean (px) | RMSE (px) |
|---|---|---|---|---|---|
| Paris | (A) 25.07.2019 10:50:31 | 0.72 | 3.51 | 1.87 | 2.03 |
| Berlin | (A) 16.02.2019 10:21:11 | 0.40 | 9.93 | 4.15 | 4.85 |
| Milan #1 | (B) 16.01.2022 10:22:49 | 0.63 | 5.05 | 2.73 | 3.08 |
| Milan #2 | (B) 16.01.2022 10:22:49 | 0.14 | 6.62 | 2.62 | 3.34 |
| Vienna | (B) 07.01.2022 09:53:09 | 0.72 | 5.48 | 2.10 | 2.52 |
| Rome | (A) 25.01.2022 10:03:11 | 0.97 | 3.33 | 2.08 | 2.19 |
| Harbin | (B) 20.04.2021 02:25:49 | 1.46 | 13.95 | 5.53 | 6.70 |
| Algiers | (B) 18.02.2022 10:29:49 | 0.29 | 5.22 | 3.02 | 3.40 |
| Las Vegas | (A) 04.02.2022 18:25:41 | 0.62 | 8.55 | 4.38 | 5.28 |
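
Both evaluation tables summarize pixel distances by their extremes, mean, and RMSE. Assuming each figure is computed from Euclidean distances between corresponding points after the estimated transformation has been applied, the statistics can be sketched in NumPy as follows. The function name `residual_stats` and the toy points are hypothetical; the transformation is taken as a 3x3 matrix applied in homogeneous coordinates.

```python
import numpy as np


def residual_stats(src_pts, dst_pts, H):
    """Min, max, mean and RMSE of Euclidean residuals (px).

    src_pts, dst_pts: (N, 2) arrays of corresponding pixel coordinates.
    H: 3x3 transformation mapping src_pts into the dst_pts frame.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    # Apply H in homogeneous coordinates, then divide by the third component
    ones = np.ones((len(src), 1))
    mapped_h = np.hstack([src, ones]) @ np.asarray(H, dtype=float).T
    mapped = mapped_h[:, :2] / mapped_h[:, 2:3]
    d = np.linalg.norm(mapped - dst, axis=1)
    return float(d.min()), float(d.max()), float(d.mean()), float(np.sqrt(np.mean(d ** 2)))


# Toy example: identity transform, one point displaced by a 3-4-5 triangle
stats = residual_stats([[0, 0], [10, 0], [0, 10]],
                       [[3, 4], [10, 0], [0, 10]], np.eye(3))
print([round(s, 3) for s in stats])  # [0.0, 5.0, 1.667, 2.887]
```

Because residuals are non-negative, the RMSE can never be smaller than the mean, and the gap widens when a few residuals are large; every row in both tables is consistent with this.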


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Schwind, P.; Storch, T. Georeferencing Urban Nighttime Lights Imagery Using Street Network Maps. Remote Sens. 2022, 14, 2671. https://doi.org/10.3390/rs14112671
