Development of a Lightweight Single-Band Bathymetric LiDAR

Abstract

1. Introduction

1.1. Background

1.2. Related Work

2. GQ-Cor 19 Bathymetric LiDAR System

2.1. Architecture of GQ-Cor 19

2.2. Design and Implementation of GQ-Cor 19 Emitting Optical System

2.2.1. Laser Selection

2.2.2. Laser Beam Collimation

2.2.3. Laser Scanning System

2.3. Receiving Optical System

2.4. Design and Implementation of Control System

2.5. Design and Implementation of High-Speed A/D Sampling System

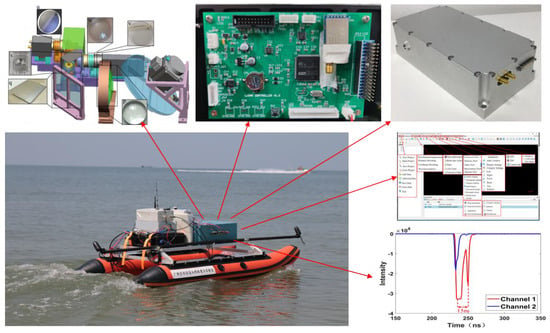

2.6. Design and Implementation of System Assembly

2.7. Design and Implementation of Software for Data Processing

3. Validations through Indoor and Outdoor Experiments

3.1. Verification through Indoor Tank

3.2. Verification through Indoor Swimming Pool

3.3. Validation through the Outdoor Water Well

3.4. Validation through the Outdoor Pond

3.5. Validation through the Outdoor Reservoir

4. Discussion

5. Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Finkl, C.; Benedet, L.; Andrews, J.; Finld, C. Interpretation of Seabed Geomorphology Based on Spatial Analysis of High-Density Airborne Laser Bathymetry. J. Coast. Res. 2005, 21, 501–514.

- Legleiter, C. Remote measurement of river morphology via fusion of LiDAR topography and spectrally based bathymetry. Earth Surf. Process. Landf. 2012, 37, 499–518.

- Costa, B.; Battista, T.; Pittman, S. Comparative evaluation of airborne LiDAR and ship-based multibeam SoNAR bathymetry and intensity for mapping coral reef ecosystems. Remote Sens. Environ. 2009, 113, 1082–1100.

- Westfeld, P.; Maas, H.; Richter, K.; Weiß, R. Analysis and correction of ocean wave pattern induced systematic coordinate errors in airborne LiDAR bathymetry. ISPRS J. Photogramm. Remote Sens. 2017, 128, 314–325.

- Miller, H.; Cotterill, C.; Bradwell, T. Glacial and paraglacial history of the Troutbeck Valley, Cumbria, UK: Integrating airborne LiDAR, multibeam bathymetry, and geological field mapping. Proc. Geol. Assoc. 2014, 125, 31–40.

- Mandlburger, G.; Hauer, C.; Wieser, M.; Pfeifer, N. Topo-Bathymetric LiDAR for Monitoring River Morphodynamics and Instream Habitats—A Case Study at the Pielach River. Remote Sens. 2015, 7, 6160–6195.

- Kinzel, P.; Legleiter, C. sUAS-Based Remote Sensing of River Discharge Using Thermal Particle Image Velocimetry and Bathymetric Lidar. Remote Sens. 2019, 11, 2317.

- Khrimenko, M.; Hopkinson, C. A Simplified End-User Approach to Lidar Very Shallow Water Bathymetric Correction. IEEE Geosci. Remote Sens. Lett. 2019, 17, 3–7.

- Zhou, G. Urban High-Resolution Remote Sensing: Algorithms and Modelling; CRC Press: Boca Raton, FL, USA, 2020; ISBN 978-03-67-857509.

- Zhou, G.; Zhou, X. Seamless Fusion of LiDAR and Aerial Imagery for Building Extraction. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7393–7407.

- Zhou, G.; Zhou, X.; Yang, J.; Yue, T.; Nong, X.; Baysal, O. Flash LiDAR Sensor using Fiber Coupled APDs. IEEE Sens. J. 2015, 15, 4758–4768.

- Zhou, G.; Li, C.; Zhang, D.; Liu, D.; Zhou, X.; Zhan, J. Overview of Underwater Transmission Characteristics of Oceanic LiDAR. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8144–8159.

- Zhou, G.; Long, S.; Xu, J.; Zhou, X.; Song, B.; Deng, R.; Wang, C. Comparison analysis of five waveform decomposition algorithms for the airborne LiDAR echo signal. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7869–7880.

- Zhou, G.; Deng, R.; Zhou, X.; Long, S.; Li, W.; Lin, G.; Li, X. Gaussian Inflection Point Selection for LiDAR Hidden Echo Signal Decomposition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Zhou, G.; Li, W.; Zhou, X.; Tan, Y.; Lin, G.; Li, X.; Deng, R. An Innovative Echo Detection System with STM32 Gated and PMT Adjustable Gain for Airborne LiDAR. Int. J. Remote Sens. 2021, 42, 9187–9211.

- Zhou, G.; Zhou, X.; Song, Y.; Xie, D.; Wang, L.; Yan, G.; Hu, M.; Liu, B.; Shang, W.; Gong, C.; et al. Design of supercontinuum laser hyperspectral light detection and ranging (LiDAR) (SCLaHS LiDAR). Int. J. Remote Sens. 2021, 42, 3731–3755.

- Nayegandhi, A.; Brock, J.; Wright, C. Small-Footprint, waveform-resolving lidar estimation of submerged and sub-canopy topography in coastal environments. Int. J. Remote Sens. 2009, 30, 861–878.

- Liu, Y.; Guo, K.; He, X.; Xu, W.; Feng, Y. Research Progress of Airborne Laser Bathymetry Technology. Geomat. Inf. Sci. Wuhan Univ. 2017, 42, 1185–1194.

- Collin, A.; Ramambason, C.; Pastol, Y.; Casella, E.; Rovere, A.; Thiault, L.; Espiau, B.; Siu, G.; Lerouvreur, F.; Nakamura, N.; et al. Very high-resolution mapping of coral reef state using airborne bathymetric LiDAR surface-intensity and drone imagery. Int. J. Remote Sens. 2018, 39, 5676–5688.

- Shen, X.; Liu, Z.; Zhou, Y.; Liu, Q.; Xu, P.; Mao, Z.; Liu, C.; Tang, L.; Ying, N.; Hu, M.; et al. Instrument response effects on the retrieval of oceanic lidar. Appl. Opt. 2020, 59, C21–C30.

- Lucas, K.; Carter, G. Change in distribution and composition of vegetated habitats on Horn Island, Mississippi, northern Gulf of Mexico, in the initial five years following Hurricane Katrina. Geomorphology 2013, 199, 129–137.

- Pe’eri, S.; Long, B. LIDAR Technology Applied in Coastal Studies and Management. J. Coast. Res. 2011, 62, 1–5.

- Zhou, G.; Xie, M. GIS-based Three-dimensional Morphologic Analysis of Assateague Island National Seashore from LIDAR Series Datasets. J. Coast. Res. 2009, 25, 435–447.

- Collin, A.; Archambault, P.; Long, B. Mapping the Shallow Water Seabed Habitat With the SHOALS. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2947–2955.

- Zhao, X.; Liang, G.; Liang, Y.; Zhao, J.; Zhou, F. Background noise reduction for airborne bathymetric full waveforms by creating trend models using Optech CZMIL in the Yellow Sea of China. Appl. Opt. 2020, 59, 11019–11026.

- Ding, K.; Li, Q.; Zhu, J.; Wang, C.; Xu, T. Evaluation of Airborne LiDAR Bathymetric Parameters on the Northern South China Sea Based on MODIS Data. Acta Geod. Cartogr. Sin. 2018, 47, 180.

- Li, Q.; Wang, J.; Han, Y.; Gao, Z.; Zhang, Y.; Jin, D. Potential evaluation of China’s coastal airborne LiDAR bathymetry based on CZMIL Nova. Remote Sens. Land Resour. 2020, 32, 184–190.

- Dee, S.; Cuttler, M.; O’Leary, M.; Hacker, J.; Browne, N. The complexity of calculating an accurate carbonate budget. Coral Reefs 2020, 39, 1525–1534.

- Tonina, D.; Mckean, J.; Benjankar, R.; Yager, E.; Carmichael, R.; Chen, Q.; Carpenter, A.; Kelsey, L.G.; Edmondson, M.R. Evaluating the performance of topobathymetric lidar to support multi-dimensional flow modelling in a gravel-bed mountain stream. Earth Surf. Process. Landf. 2020, 45, 2850–2868.

- Ding, K. Research on the Single-Wavelength Airborne LiDAR Bathymetry Full-Waveform Data Processing Algorithm and Its Application. Ph.D. Thesis, Information and Communication Engineering, Shenzhen University, Guangdong, China, 2018.

- Zhou, G.; Zhao, D.; Zhou, X.; Xu, C.; Liu, Z.; Wu, G.; Lin, J.; Zhang, H.; Yang, J.; Nong, X.; et al. An RF Amplifier Circuit for Enhancement of Echo Signal Detection in Bathymetric LiDAR. IEEE Sens. J. 2022, 22, 20612–20625.

- Zhou, G.; Song, C.; Schickler, W. Urban 3D GIS from LIDAR and digital aerial images. Comput. Geosci. 2004, 30, 345–353.

- Zhou, G.; Baysal, O.; Kaye, J. Concept design of future intelligent earth observing satellites. Int. J. Remote Sens. 2004, 25, 2667–2685.

- Xu, Y.; Boone, C.; Pileggi, L. Metal-mask configurable RF front-end circuits. IEEE J. Solid-State Circuits 2004, 39, 1347–1351.

- Harada, M.; Tsukahara, T.; Kodate, J.; Yamagishi, A.; Yamada, J. 2-GHz RF front-end circuits in CMOS/SIMOX operating at an extremely low voltage of 0.5 V. IEEE J. Solid-State Circuits 2000, 35, 2000–2004.

- Nguyen, X.; Kim, H.; Lee, H. An Efficient Sampling Algorithm With a K-NN Expanding Operator for Depth Data Acquisition in a LiDAR System. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4700–4714.

- Zheng, H.; Ma, R.; Liu, M.; Zhu, Z. A Linear-Array Receiver Analog Front-End Circuit for Rotating Scanner LiDAR Application. IEEE Sens. J. 2019, 19, 5053–5061.

- Liang, Y.; Xu, B.; Fei, Q.; Wu, W.; Shan, X.; Huang, K.; Zeng, H. Low-Timing-Jitter GHz-Gated InGaAs/InP Single-Photon Avalanche Photodiode for LIDAR. IEEE J. Sel. Top. Quantum Electron. 2022, 28, 3801807.

- Hong, C.; Kim, S.H.; Kim, J.H.; Park, S.M. A Linear-Mode LiDAR Sensor Using a Multi-Channel CMOS Transimpedance Amplifier Array. IEEE Sens. J. 2018, 18, 7032–7040.

- Kurtti, S.; Nissinen, J.; Kostamovaara, J. A Wide Dynamic Range CMOS Laser Radar Receiver with a Time-Domain Walk Error Compensation Scheme. IEEE Trans. Circuits Syst. I Regul. Pap. 2017, 64, 550–561.

| Parameters | Values |
|---|---|
| Laser wavelength | 532 nm |
| Weight | 12 kg |
| Size | 470 mm × 352 mm × 204 mm |
| Maximum measured water depth | >25 m |
| Measurement accuracy | 30 cm |
| Platform | Unmanned shipborne |
| Endurance time | 2 h |
| Full angle of beam divergence | ≤2 mrad |
| Scanning angle | 10° |

| LiDAR | Laser Frequency (kHz) | Minimum/Maximum Detection Depth (m) | Bathymetric Accuracy (m) | Flight Height (m) | Carrier | Country |
|---|---|---|---|---|---|---|
| SHOALS 3000T [21,22,23,24] | 3 | 0.2/50 | 0.25 | 300–400 | Aircraft | Canada |
| Hawk Eye III [25,26] | Shallow water: 35; deep water: 10 | 0.4/shallow water: 15; deep water: 50 | 0.3 | 400–600 | Aircraft | Sweden |
| CZMIL [27] | 10 | 0.15/50 | 0.3 | 400–1000 | Aircraft | Canada |
| VQ-880G [28] | 550 | −/1.5 Secchi | 0.3 | 600 | Aircraft | Austria |
| LADS MK-Ⅲ [29] | 1.5 | 0.4/80 | 0.2 | 360–900 | Aircraft | Australia |

| Parameters | Values |
|---|---|
| Wavelength | 532 nm |
| Peak power | 100 kW |
| Pulse width | 3 ns |
| Repetition frequency | 1 kHz |
| Divergence angle | 0.2 mrad |
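
As a rough consistency check on the laser parameters above, the pulse energy can be approximated as peak power times pulse width, and the pulse width can be converted into a two-way range extent in water. The sketch below is illustrative only: it assumes a rectangular pulse shape and a refractive index of water of 1.33, neither of which is stated in the table, and all function names are hypothetical.

```python
# Values taken from the laser parameter table above.
PEAK_POWER_W = 100e3        # 100 kW
PULSE_WIDTH_S = 3e-9        # 3 ns
REPETITION_RATE_HZ = 1e3    # 1 kHz

# Assumptions (not stated in the table).
SPEED_OF_LIGHT_M_S = 299_792_458.0
REFRACTIVE_INDEX_WATER = 1.33


def pulse_energy_j(peak_power_w, pulse_width_s):
    """Approximate pulse energy, treating the pulse as rectangular."""
    return peak_power_w * pulse_width_s


def average_power_w(energy_j, repetition_rate_hz):
    """Average optical power for a given pulse energy and repetition rate."""
    return energy_j * repetition_rate_hz


def pulse_range_extent_m(pulse_width_s, refractive_index):
    """Two-way range extent covered by one pulse inside a medium."""
    speed_in_medium = SPEED_OF_LIGHT_M_S / refractive_index
    return speed_in_medium * pulse_width_s / 2.0


if __name__ == "__main__":
    energy = pulse_energy_j(PEAK_POWER_W, PULSE_WIDTH_S)
    print(f"pulse energy  : {energy * 1e3:.2f} mJ")
    print(f"average power : {average_power_w(energy, REPETITION_RATE_HZ):.2f} W")
    extent = pulse_range_extent_m(PULSE_WIDTH_S, REFRACTIVE_INDEX_WATER)
    print(f"range extent  : {extent:.2f} m in water")
```

Under these assumptions the table values correspond to about 0.3 mJ per pulse, about 0.3 W of average power, and a pulse that spans roughly 0.34 m of two-way range in water.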

| Parameters | Values |
|---|---|
| Thickness of the first lens | 3 mm |
| Thickness of the second lens | 5 mm |
| Effective focal length of the second lens | 25 mm |
| Material/refractive index of material (532 nm) | BK7/1.5195 |

| Parameters | Values |
|---|---|
| Receiving angle of FOV | 95 mrad |
| Entrance pupil diameter | 82 mm |
| Exit pupil diameter | 8 mm |
| Magnification | 10.25× and 42× |
| Bandwidth | ±1 nm |
| Main aperture | 80 mm |
| Eyepiece group focal length | 505 mm |
| PMT objective group focal length | 49.27 mm |
| APD objective group focal length | 12.01 mm |
| Split-field mirror diameter | 70 mm |
| Eyepiece group aperture | 64 mm |
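
The two magnification values in the table above can be cross-checked against the other entries. The sketch below assumes, without confirmation from the table itself, that the magnifications are the ratio of the 505 mm focal length to each of the two shorter focal lengths, and that the first value should also equal the ratio of entrance to exit pupil diameter. The helper name is hypothetical.

```python
# Values taken from the receiving optical system table above (all in mm).
ENTRANCE_PUPIL_MM = 82.0
EXIT_PUPIL_MM = 8.0
LONG_FOCAL_LENGTH_MM = 505.0
PMT_GROUP_FOCAL_LENGTH_MM = 49.27
APD_GROUP_FOCAL_LENGTH_MM = 12.01


def ratio(numerator_mm, denominator_mm):
    """Dimensionless ratio of two lengths given in the same unit."""
    return numerator_mm / denominator_mm


if __name__ == "__main__":
    pupil_ratio = ratio(ENTRANCE_PUPIL_MM, EXIT_PUPIL_MM)
    pmt_ratio = ratio(LONG_FOCAL_LENGTH_MM, PMT_GROUP_FOCAL_LENGTH_MM)
    apd_ratio = ratio(LONG_FOCAL_LENGTH_MM, APD_GROUP_FOCAL_LENGTH_MM)
    print(f"pupil ratio        : {pupil_ratio:.2f}x")
    print(f"PMT channel ratio  : {pmt_ratio:.2f}x")
    print(f"APD channel ratio  : {apd_ratio:.2f}x")
```

Under this reading, the pupil ratio and the PMT channel ratio both come out at about 10.25, and the APD channel ratio at about 42, which matches the listed magnifications.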

| Symbol | Type | Parameters | Position |
|---|---|---|---|
| p | | Customized, K9 glass | Objective lens set lens 1 |
| q | | Φ12.5/Thickness 2.3, Ordinary aluminum film + Protection | Objective lens set lens 2 |
| r | | Transmittance 95%, Bandwidth 10 nm | Objective lens set lens 3 |
| s | | Customized, Silver Plated Film | Split Field Mirror |
| t | | Diameter Φ25, Focal length 25, Back Focus 20.28, Visible light enhancement film coating | APD eyepiece set lens 1 |
| u | | Diameter Φ10 mm, Center thickness 2.6 mm, Effective aperture 19 | APD eyepiece set lens 2 |
| v | | Transmittance 95%, Bandwidth 10 nm | APD eyepiece set lens 3 |
| w | | Diameter Φ20, Focal length 25, Back Focus 22.76, Visible light enhancement film coating | PMT eyepiece set lens 1 |
| x | Flat Convex Mirror | Diameter Φ20 mm, Center thickness 4.6 mm, Effective aperture 19 | PMT eyepiece set lens 2 |
| y | Filter | Transmittance 95%, Bandwidth 10 nm | PMT eyepiece set lens 3 |

| Experimental Environment | Measured Distance (m) | Actual Distance (m) | Error (m) |
|---|---|---|---|
| Indoor tank | 0.768 | 0.700 | 0.068 |
| | 1.661 | 1.600 | 0.061 |
| | 2.248 | 2.300 | 0.052 |
| | Average error (m) | | 0.060 |
| Indoor swimming pool | 10.15 | 9.88 | 0.27 |
| | 13.42 | 13.24 | 0.18 |
| | 14.96 | 15.22 | 0.26 |
| | 16.66 | 16.50 | 0.16 |
| | 19.72 | 19.75 | 0.03 |
| | 25.62 | 25.58 | 0.04 |
| | Average error (m) | | 0.157 |
| Outdoor water well | 15.19 | 15 | 0.19 |
| Outdoor water pond | 0.70 | 0.75 | 0.05 |
| | 0.88 | 0.90 | 0.02 |
| | 0.92 | 1.00 | 0.08 |
| | Average error (m) | | 0.05 |
| Outdoor reservoir | 1.69 | 1.74 | 0.05 |
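
The error column in the table above appears to be the absolute difference between measured and actual distance, with each average taken over the rows of one environment. The following sketch recomputes those averages from the tabulated pairs. The data structure and function name are illustrative, and reading the error as an absolute difference is an assumption drawn from the table values.

```python
from statistics import mean

# (measured, actual) distance pairs in metres, copied from the table above.
MEASUREMENTS = {
    "Indoor tank": [(0.768, 0.700), (1.661, 1.600), (2.248, 2.300)],
    "Indoor swimming pool": [
        (10.15, 9.88), (13.42, 13.24), (14.96, 15.22),
        (16.66, 16.50), (19.72, 19.75), (25.62, 25.58),
    ],
    "Outdoor water well": [(15.19, 15.0)],
    "Outdoor water pond": [(0.70, 0.75), (0.88, 0.90), (0.92, 1.00)],
    "Outdoor reservoir": [(1.69, 1.74)],
}


def mean_absolute_error(pairs):
    """Mean of |measured - actual| over a list of (measured, actual) pairs."""
    return mean(abs(measured - actual) for measured, actual in pairs)


if __name__ == "__main__":
    for environment, pairs in MEASUREMENTS.items():
        print(f"{environment:22s} {mean_absolute_error(pairs):.3f} m")
```

Recomputed this way, the indoor tank, swimming pool, and pond averages agree with the tabulated 0.060 m, 0.157 m, and 0.05 m after rounding.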

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, G.; Zhou, X.; Li, W.; Zhao, D.; Song, B.; Xu, C.; Zhang, H.; Liu, Z.; Xu, J.; Lin, G.; et al. Development of a Lightweight Single-Band Bathymetric LiDAR. Remote Sens. 2022, 14, 5880. https://0-doi-org.brum.beds.ac.uk/10.3390/rs14225880
