Tree Species Classifications of Urban Forests Using UAV-LiDAR Intensity Frequency Data

Abstract

1. Introduction

2. Study Area and Method

2.1. Study Area

2.2. Field Data

2.3. LiDAR Data Acquisition and Processing

2.4. ITC Information Acquisition

2.4.1. Extraction of ITC

2.4.2. Intensity Correction of ITCs and Resampling

2.5. Intensity Frequency Feature Calculation and Difference Analysis

2.6. Random Forest and Tree Species Classification

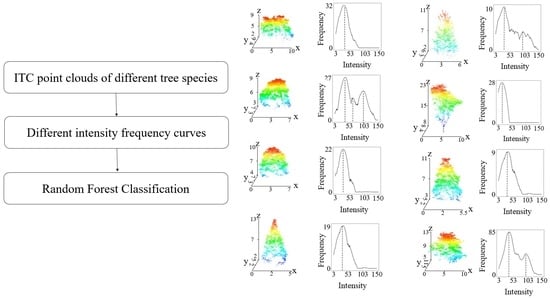

2.7. Tree Species Classification Process

2.8. Accuracy Evaluation

3. Results

3.1. ITC Extraction Results

3.2. Intensity Correction Results

3.3. Results of Intensity Frequency of Different Species

3.4. Intensity Frequency Difference Analysis Results

3.5. Screening Results of Important Random Forest Features

3.6. Tree Species Classification Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameters | Information | Parameters | Information |
|---|---|---|---|
| Sensor | Velodyne Puck LITE™ | Ranging Accuracy | 3 cm |
| Date of Acquisition | 17 April 2021, 15 May 2021 | Mean Point Density | 230 points/m² |
| Height | 60 m | Wavelength | 903 nm |

| Data Set Name | Sampling Rate | Mean Point Density |
|---|---|---|
| D1 | 100% | 230 points/m² |
| D2 | 80% | 184 points/m² |
| D3 | 50% | 115 points/m² |
| D4 | 30% | 69 points/m² |
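The reduced-density data sets in the table above are consistent with keeping a fixed fraction of the original points (230 points/m² × 0.8 = 184, × 0.5 = 115, × 0.3 = 69). The following is a minimal sketch of such a resampling, assuming uniform random thinning without replacement. The thinning algorithm actually used is not stated here, and `thin_points` and the synthetic points are hypothetical, for illustration only.

```python
import random


def thin_points(points, rate, seed=0):
    """Keep a uniformly random fraction `rate` of the points, preserving order.

    Assumption: uniform random thinning; the source does not name the method.
    """
    if not 0.0 < rate <= 1.0:
        raise ValueError("rate must be in (0, 1]")
    rng = random.Random(seed)
    keep = round(len(points) * rate)
    # Sample indices without replacement, then sort to keep the original order.
    kept_indices = sorted(rng.sample(range(len(points)), keep))
    return [points[i] for i in kept_indices]


# Synthetic stand-in for a 1 m² patch at the D1 density (230 points/m²).
# Each point is (x, y, z, intensity); the values are random, not real data.
_rng = random.Random(42)
d1 = [
    (_rng.random(), _rng.random(), _rng.uniform(0.0, 20.0), _rng.randint(0, 255))
    for _ in range(230)
]

sampling_rates = {"D1": 1.0, "D2": 0.8, "D3": 0.5, "D4": 0.3}
datasets = {name: thin_points(d1, rate) for name, rate in sampling_rates.items()}

for name, pts in datasets.items():
    print(name, len(pts), "points per m²")
```

Fixing the seed makes the thinned subsets reproducible, which matters when the same trees are compared across densities.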

| Tree Species | Nt | No | Nc | R (%) | P (%) | F (%) |
|---|---|---|---|---|---|---|
| M.F | 85 | 6 | 10 | 93.4 | 89.5 | 91.4 |
| C.C | 167 | 24 | 19 | 87.4 | 89.8 | 88.6 |
| C.S | 51 | 5 | 7 | 91.1 | 87.9 | 89.5 |
| S.B | 81 | 10 | 8 | 89.0 | 91.0 | 90.0 |
| A.B | 62 | 11 | 13 | 84.9 | 82.7 | 83.8 |
| G.B | 435 | 54 | 67 | 89.0 | 86.7 | 87.8 |
| M.G | 39 | 8 | 11 | 83.0 | 78.0 | 80.4 |
| G.L | 56 | 12 | 15 | 82.4 | 78.9 | 80.6 |
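The R, P, and F columns above can be reproduced from the three counts. The sketch below assumes that Nt is the number of correctly detected crowns, No the number of omitted crowns, and Nc the number of commission (falsely detected) crowns, so that R = Nt / (Nt + No), P = Nt / (Nt + Nc), and F = 2RP / (R + P). These definitions are inferred from the tabulated values rather than quoted from the text, and `detection_scores` is a hypothetical helper name.

```python
def detection_scores(nt, no, nc):
    """Return recall, precision, and F-score (as fractions) for crown detection.

    Assumed meanings: nt = correct detections, no = omissions, nc = commissions.
    """
    recall = nt / (nt + no)
    precision = nt / (nt + nc)
    f_score = 2 * recall * precision / (recall + precision)
    return recall, precision, f_score


# Counts copied from the table above: species -> (Nt, No, Nc).
counts = {
    "M.F": (85, 6, 10),
    "C.C": (167, 24, 19),
    "C.S": (51, 5, 7),
    "S.B": (81, 10, 8),
    "A.B": (62, 11, 13),
    "G.B": (435, 54, 67),
    "M.G": (39, 8, 11),
    "G.L": (56, 12, 15),
}

scores = {}
for species, (nt, no, nc) in counts.items():
    r, p, f = detection_scores(nt, no, nc)
    scores[species] = tuple(round(100 * v, 1) for v in (r, p, f))
    print(species, *scores[species])
# First line printed: M.F 93.4 89.5 91.4
```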

| Group | Tree Species | p (100%) | H(k) (100%) | p (80%) | H(k) (80%) | p (50%) | H(k) (50%) | p (30%) | H(k) (30%) |
|---|---|---|---|---|---|---|---|---|---|
| Intraspecies | M.F | 0.474 | 51 | 0.474 | 51 | 0.474 | 51 | 0.474 | 51 |
| | C.C | 0.476 | 63 | 0.476 | 63 | 0.476 | 63 | 0.476 | 63 |
| | C.S | 0.462 | 25 | 0.462 | 25 | 0.462 | 25 | 0.462 | 25 |
| | A.B | 0.478 | 70 | 0.478 | 70 | 0.478 | 70 | 0.478 | 70 |
| | S.B | 0.479 | 77 | 0.479 | 77 | 0.479 | 77 | 0.479 | 77 |
| | G.L | 0.470 | 40 | 0.470 | 40 | 0.470 | 40 | 0.470 | 40 |
| | M.G | 0.463 | 26 | 0.463 | 26 | 0.463 | 26 | 0.463 | 26 |
| | G.B | 0.480 | 85 | 0.480 | 85 | 0.480 | 85 | 0.480 | 85 |
| Interspecies | | <0.01 | 181.590 | <0.01 | 133.241 | <0.01 | 106.956 | <0.01 | 87.638 |

| Tree Species | Important Intensity Frequency Features in D1 |
|---|---|
| M.F | |
| C.C | |
| C.S | |
| A.B | |
| S.B | |
| G.L | |
| M.G | |
| G.B | |

| Name | M.F (n = 16) | C.C (n = 19) | C.S (n = 8) | A.B (n = 21) | S.B (n = 23) | G.L (n = 12) | M.G (n = 8) | G.B (n = 26) | UA (%) | CE (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| M.F | 12 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 85.7 | 14.3 |
| C.C | 1 | 19 | 1 | 0 | 2 | 0 | 2 | 0 | 82.6 | 17.4 |
| C.S | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 100.0 | 0 |
| A.B | 0 | 0 | 0 | 20 | 0 | 0 | 0 | 0 | 100.0 | 0 |
| S.B | 3 | 0 | 2 | 0 | 21 | 0 | 0 | 0 | 100.0 | 0 |
| G.L | 0 | 0 | 0 | 0 | 0 | 9 | 0 | 1 | 90.0 | 10.0 |
| M.G | 0 | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 100.0 | 0 |
| G.B | 1 | 0 | 0 | 0 | 0 | 2 | 1 | 23 | 85.1 | 14.9 |
| PA (%) | 85.7 | 95.0 | 87.5 | 95.2 | 91.3 | 75.0 | 75.0 | 88.5 | OA: 86.7% | |
| OE (%) | 14.3 | 5.0 | 12.5 | 4.8 | 8.7 | 25.0 | 25.0 | 11.5 | SCI: 117 | |
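The accuracy measures reported in the confusion matrices (UA, CE, PA, OE, OA) follow the usual layout in which rows are classified labels and columns are reference labels. The sketch below uses the standard definitions: UA is the diagonal count divided by the row total, PA is the diagonal count divided by the column total, CE = 100 − UA, OE = 100 − PA, and OA is the trace divided by the grand total. The 3 × 3 matrix is a made-up example, not data from this study, and `accuracy_report` is a hypothetical helper name. It is an assumption that the paper computed its values exactly this way.

```python
def accuracy_report(matrix):
    """Compute UA, CE, PA, OE (per class, in %) and OA (in %).

    `matrix[i][j]` = number of samples classified as class i whose
    reference label is class j (rows: classified, columns: reference).
    """
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[k][k] for k in range(n)]

    # User's accuracy and commission error are row-based.
    ua = [100.0 * d / t if t else 0.0 for d, t in zip(diagonal, row_totals)]
    ce = [100.0 - u for u in ua]
    # Producer's accuracy and omission error are column-based.
    pa = [100.0 * d / t if t else 0.0 for d, t in zip(diagonal, col_totals)]
    oe = [100.0 - p for p in pa]
    # Overall accuracy is the share of all samples on the diagonal.
    oa = 100.0 * sum(diagonal) / sum(row_totals)
    return {"UA": ua, "CE": ce, "PA": pa, "OE": oe, "OA": oa}


# Hypothetical three-class example (not taken from the tables in this article).
example = [
    [8, 1, 1],
    [0, 9, 1],
    [2, 0, 8],
]
report = accuracy_report(example)
for key in ("UA", "CE", "PA", "OE"):
    print(key, [round(v, 1) for v in report[key]])
print("OA", round(report["OA"], 1))
```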

| Name | M.F (n = 16) | C.C (n = 19) | C.S (n = 8) | A.B (n = 21) | S.B (n = 23) | G.L (n = 12) | M.G (n = 8) | G.B (n = 26) | UA (%) | CE (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| M.F | 10 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 76.9 | 23.1 |
| C.C | 1 | 15 | 0 | 0 | 0 | 0 | 0 | 0 | 93.3 | 6.7 |
| C.S | 0 | 1 | 4 | 0 | 0 | 0 | 0 | 0 | 80.0 | 20.0 |
| A.B | 0 | 0 | 0 | 19 | 0 | 0 | 0 | 1 | 95.0 | 5.0 |
| S.B | 1 | 0 | 0 | 0 | 17 | 1 | 0 | 0 | 88.0 | 12.0 |
| G.L | 1 | 0 | 0 | 0 | 0 | 7 | 0 | 3 | 63.6 | 56.4 |
| M.G | 0 | 1 | 0 | 0 | 0 | 0 | 6 | 0 | 85.7 | 14.3 |
| G.B | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 19 | 95.0 | 5.0 |
| PA (%) | 62.5 | 78.9 | 50.0 | 90.5 | 95.7 | 58.3 | 75.0 | 84.6 | OA: 87.4% | |
| OE (%) | 37.5 | 21.1 | 50.0 | 9.5 | 4.3 | 41.7 | 25.0 | 15.4 | SCI: 97 | |

| Name | M.F (n = 16) | C.C (n = 19) | C.S (n = 8) | A.B (n = 21) | S.B (n = 23) | G.L (n = 12) | M.G (n = 8) | G.B (n = 26) | UA (%) | CE (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| M.F | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 87.5 | 12.5 |
| C.C | 3 | 12 | 1 | 0 | 0 | 0 | 0 | 2 | 66.7 | 33.3 |
| C.S | 1 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 75.0 | 25.0 |
| A.B | 0 | 0 | 0 | 20 | 0 | 0 | 0 | 1 | 100.0 | 0 |
| S.B | 1 | 0 | 0 | 0 | 8 | 0 | 0 | 0 | 88.0 | 12.0 |
| G.L | 1 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 75.0 | 25.0 |
| M.G | 0 | 0 | 0 | 0 | 0 | 0 | 4 | 1 | 80.0 | 20.0 |
| G.B | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 17 | 85.0 | 15.0 |
| PA (%) | 62.5 | 63.2 | 37.5 | 95.2 | 34.7 | 25.0 | 50.0 | 65.4 | OA: 84.1% | |
| OE (%) | 37.5 | 36.8 | 62.5 | 4.8 | 65.3 | 75.0 | 50.0 | 34.6 | SCI: 74 | |

| Name | M.F (n = 16) | C.C (n = 19) | C.S (n = 8) | A.B (n = 21) | S.B (n = 23) | G.L (n = 12) | M.G (n = 8) | G.B (n = 26) | UA (%) | CE (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| M.F | 3 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 75.0 | 25.0 |
| C.C | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 2 | 77.8 | 22.1 |
| C.S | 0 | 2 | 4 | 0 | 0 | 0 | 0 | 0 | 66.7 | 33.3 |
| A.B | 0 | 0 | 0 | 19 | 0 | 0 | 0 | 1 | 100.0 | 0 |
| S.B | 0 | 0 | 2 | 0 | 11 | 0 | 0 | 0 | 84.6 | 15.4 |
| G.L | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 50.0 | 50.0 |
| M.G | 0 | 1 | 0 | 0 | 0 | 0 | 4 | 0 | 80.0 | 20.0 |
| G.B | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 16 | 94.1 | 5.9 |
| PA (%) | 18.8 | 63.2 | 37.5 | 90.4 | 47.8 | 25.0 | 8.3 | 61.5 | OA: 85.5% | |
| OE (%) | 81.2 | 36.8 | 62.5 | 9.6 | 52.2 | 75.0 | 91.7 | 38.5 | SCI: 65 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gong, Y.; Li, X.; Du, H.; Zhou, G.; Mao, F.; Zhou, L.; Zhang, B.; Xuan, J.; Zhu, D. Tree Species Classifications of Urban Forests Using UAV-LiDAR Intensity Frequency Data. Remote Sens. 2023, 15, 110. https://0-doi-org.brum.beds.ac.uk/10.3390/rs15010110
