1. Introduction

Unlike conventional optical systems, synthetic aperture radar (SAR) can operate day and night under all weather conditions, and hence has been widely used in military and civilian fields [1,2]. Automatic target recognition (ATR) is an important research topic in SAR image processing [3,4]. It consists of three modules: target detection, target discrimination, and target classification, of which target classification is the key step. However, the limitations of manual feature extraction have hindered the development of target classification. Deep learning extracts target features automatically rather than manually, which has promoted the development of image processing. In 2006, Hinton et al. introduced the concept of deep learning, indicating that multi-layer neural networks have great potential for learning target features [5]. In 2012, Krizhevsky et al. proposed a deep convolutional neural network (CNN) model called AlexNet for image classification and won the ImageNet competition with a top-5 error of 15.3%, which made deep learning popular in the field of image processing [6,7]. Since then, various networks such as GoogLeNet [8], ResNet [9], and DenseNet [10] have appeared. Nowadays, deep learning methods represented by CNNs have been successfully applied to optical image processing. However, compared with the abundant labeled datasets of optical images, labeled SAR images are difficult and expensive to acquire. The lack of sufficient labeled SAR data limits the development of deep learning in SAR target classification. In addition, models that work for optical images cannot be used directly for SAR images. Therefore, how to use limited SAR data to improve the performance of SAR target classification has become a research hot spot in recent years.

Research on small-sample learning can be divided into three areas: data augmentation, transfer learning, and model design. Data augmentation mainly focuses on the input data. Transfer learning aims to utilize information from datasets of other domains to support the target domain. Model design aims to propose new frameworks suitable for SAR image processing. In 2016, Ding et al. proposed three types of data augmentation to alleviate the problems of target translation, speckle noise, and missing poses, respectively [11]. In the same year, Chen et al. introduced a new classification framework, all-convolutional networks (A-ConvNet), which contains only convolutional layers and down-sampling layers, greatly reducing the number of parameters in the network [12]. Experimental results on the MSTAR dataset demonstrate that its classification accuracy reaches 99.13% when all training samples are used. In 2017, Hansen et al. revealed the feasibility of transfer learning between a simulated dataset and real SAR images, showing that transfer learning enables CNNs to achieve faster convergence and better performance [13]. Lin et al. imitated the principle of ResNet and designed a convolutional highway unit (CHU) to solve the gradient-vanishing problem caused by training deep networks with limited SAR data [14]. In 2019, Zhong et al. proposed transferring the convolutional layers of a model pretrained on ImageNet and compressing the model with a filter-based pruning method. When the training data increase to 2700 images per class, the classification accuracy reaches 98.39% [15]. Wang and Xu proposed replacing the rectified linear unit (ReLU) with a concatenated rectified linear unit (CReLU), which preserves negative phase information and doubles the number of feature maps obtained from the previous layer. Experiments on the MSTAR dataset show that the accuracy reaches 88.17% with only 20% of the training data [16]. Considering the limited number of labeled SAR images, Zhang et al. proposed training a generative adversarial network (GAN) on abundant unlabeled SAR images so that the general characteristics of SAR images can be applied to SAR target recognition [17]. Then, Zheng et al. proposed a multi-discriminator GAN (MD-GAN) that helps the framework distinguish generated pseudo-samples from real input data, ensuring the quality of the generated images [18]. In 2020, Guo et al. proposed a compact convolutional autoencoder (CCAE) to learn target characteristics. A typical convolutional autoencoder (CAE) fails to enhance the compactness of targets within the same class, whereas CCAE takes a pair of samples from the same class as input so as to minimize the distance between intra-class samples. Experiments on target classification demonstrate that CCAE reaches 98.59% accuracy [19]. Also in 2020, Huang et al. evaluated the efficiency of transfer learning and introduced a multi-source domain method that reduces the difference between source-domain and target-domain data to improve transfer learning performance. Results on the OpenSARShip dataset validate that the smaller the domain gap, the better transfer learning performs [20]. In 2021, Guo et al. verified the feasibility of cross-domain learning from optical images to SAR images, and applied the idea to object detection by adding a domain adaptation module to the Faster R-CNN model [21]. In 2022, Yang et al. proposed a dynamic joint correlation alignment network (DJ-CORAL) for SAR ship classification. It first transforms heterogeneous features from the two domains into a common subspace to eliminate the heterogeneity, and then conducts heterogeneous domain adaptation (HDA) to minimize the domain shift. Compared with existing semi-supervised transfer learning methods, DJ-CORAL performs better [22].
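To make the CReLU idea mentioned above concrete, the following is a minimal sketch of the generic CReLU formulation, which concatenates ReLU(x) and ReLU(-x). It is an illustration only and is not claimed to reproduce the exact layer used in [16]; the function names and the toy feature vector are assumptions introduced here.

```python
def relu(values):
    """Element-wise rectified linear unit: negative responses become zero."""
    return [v if v > 0 else 0.0 for v in values]


def crelu(values):
    """Generic CReLU: concatenate ReLU(x) and ReLU(-x).

    The output is twice as long as the input, so negative responses
    are kept (in the second half) instead of being discarded.
    """
    return relu(values) + relu([-v for v in values])


# Toy feature vector (hypothetical values, not taken from any SAR dataset).
features = [1.5, -2.0, 0.0, -0.5]
print(relu(features))   # plain ReLU drops the negative responses
print(crelu(features))  # CReLU keeps them as extra feature entries
```

With plain ReLU the entries -2.0 and -0.5 are lost, whereas CReLU retains them as 2.0 and 0.5 in the second half of the output, which is why the number of feature maps doubles.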

Unlike optical images, SAR images contain phase information, and research on how to make full use of this information has made progress. In 2017, Zhang et al. first proposed a complex-valued CNN (CV-CNN) architecture for SAR target classification. Experimental results show that CV-CNN achieves higher classification accuracy when phase information is part of the input data [23]. Coman et al. divided phase information into real-part and imaginary-part information, and used amplitude-real-imaginary three-layer data as the input. Results on MSTAR show about 90% accuracy, and the approach alleviates the over-fitting problem caused by the lack of training data [24]. Building on CV-CNN, Yu et al. introduced a complex-valued fully convolutional neural network (CV-FCNN) in 2020. CV-FCNN consists only of convolutional layers in order to avoid the over-fitting problem caused by a large number of parameters. Experiments on MSTAR validate that CV-FCNN performs better than CV-CNN [25].
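As a rough illustration of the amplitude-real-imaginary input described above, the sketch below splits a complex-valued image chip into three real-valued channels. It assumes that the real and imaginary channels are simply the real and imaginary parts of each complex pixel; the exact preprocessing in [24] may differ, and the function name and toy data are hypothetical.

```python
def to_three_channels(complex_image):
    """Split a complex-valued image into amplitude, real, and imaginary channels.

    Assumption: each pixel is a Python complex number; the returned list
    holds three 2-D channels in the order [amplitude, real, imaginary].
    """
    amplitude = [[abs(px) for px in row] for row in complex_image]
    real = [[px.real for px in row] for row in complex_image]
    imag = [[px.imag for px in row] for row in complex_image]
    return [amplitude, real, imag]


# Toy 2x2 complex "image chip" (hypothetical values, not MSTAR data).
chip = [[3 + 4j, 1 + 0j],
        [0 - 2j, 0 + 0j]]
channels = to_three_channels(chip)
print(channels[0])  # amplitude channel
print(channels[1])  # real channel
print(channels[2])  # imaginary channel
```

Stacking the channels this way lets a real-valued CNN see phase-related information without requiring complex-valued layers, which is the design trade-off between this kind of input and CV-CNN.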

Many achievements in SAR target classification are based on images recovered by matched filtering (MF). However, the quality of MF-based SAR images is severely affected by noise, sidelobes, and clutter, a problem that sparse SAR imaging technology addresses. Typical sparse recovery algorithms such as iterative soft thresholding (IST) [26,27] and orthogonal matching pursuit (OMP) [28,29] improve image quality by highlighting the target while suppressing background clutter. In 2018, a novel sparse recovery algorithm based on IST, named BiIST, was proposed [30,31]. Compared with OMP and IST, BiIST can enhance target characteristics while retaining the statistical distribution of the image. Then, in 2021, Bi et al. combined a sparse SAR dataset with typical detection frameworks, i.e., YOLOv3 and Faster R-CNN, showing that higher accuracy can be achieved when sparse SAR images rather than MF-based images are used as input data [32]. In 2022, Deng et al. proposed a novel sparse SAR target classification framework called amplitude-phase CNN (AP-CNN) to utilize both the magnitude and phase of sparse SAR images recovered by BiIST. A comparison of classification networks shows that the combination of the sparse SAR dataset and AP-CNN achieves the best performance [33].
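The sketch below illustrates how soft thresholding suppresses weak background samples while keeping strong scatterers. It implements the textbook IST iteration for the L1-regularized least-squares problem under an assumed linear model y = Ax + n; it is not the BiIST algorithm of [30,31], and the matrix, data, and parameter values are hypothetical.

```python
def soft_threshold(z, threshold):
    """Complex soft thresholding: shrink the magnitude, keep the phase."""
    magnitude = abs(z)
    if magnitude <= threshold:
        return 0j  # weak entries (e.g., clutter-like samples) are set to zero
    return z * (magnitude - threshold) / magnitude


def matvec(matrix, vector):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def conj_transpose(matrix):
    """Conjugate transpose of a dense matrix."""
    rows, cols = len(matrix), len(matrix[0])
    return [[complex(matrix[r][c]).conjugate() for r in range(rows)]
            for c in range(cols)]


def ist(A, y, lam, step=1.0, iterations=50):
    """Generic IST for min_x 0.5 * ||y - A x||^2 + lam * ||x||_1.

    Assumes step <= 1 / ||A||^2 so that the iteration converges.
    """
    A_h = conj_transpose(A)
    x = [0j] * len(A[0])
    for _ in range(iterations):
        residual = [yi - ri for yi, ri in zip(y, matvec(A, x))]
        gradient_step = matvec(A_h, residual)
        x = [soft_threshold(xi + step * gi, step * lam)
             for xi, gi in zip(x, gradient_step)]
    return x


# Toy example with an identity observation matrix (hypothetical values):
# one strong scatterer and two weak clutter-like samples.
A = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
y = [3 + 4j, 0.1 + 0.1j, -0.2j]
x_hat = ist(A, y, lam=0.5)
print(x_hat)
```

In this toy case the strong sample keeps its phase while its magnitude shrinks from 5 to about 4.5, and the two weak samples are driven to zero, which mirrors the target-highlighting and clutter-suppressing behavior described above.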

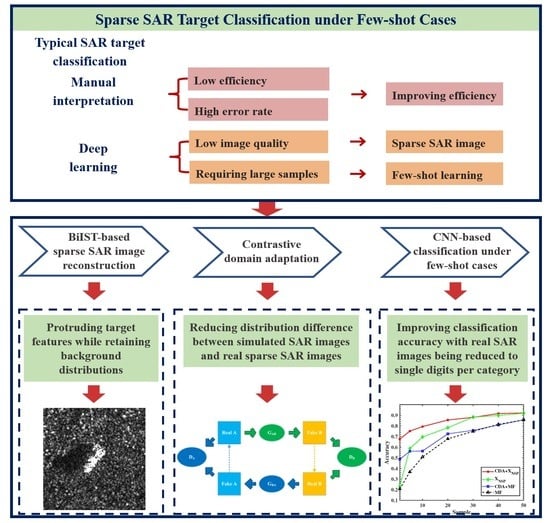

In this paper, we propose a novel few-shot sparse SAR target classification method based on contrastive domain adaptation (CDA). In the proposed method, we first use the BiIST algorithm to improve the quality of MF-based SAR images, and thereby construct a novel sparse SAR dataset. Then, the simulated dataset and the constructed sparse dataset are fed into an unsupervised domain adaptation framework to transfer target features and minimize the distribution difference. After the CDA operation, the reconstructed simulated SAR images, which have a background distribution and target features similar to those of real SAR images, are manually labeled for subsequent classification. Finally, the reconstructed simulated SAR images are used, along with a few real samples, to train a shallow CNN. Experimental results on the MSTAR dataset under standard operating conditions (SOC) show that, compared with several typical deep learning methods for SAR target classification, the proposed method achieves similar or better performance when enough real samples are available. As the number of real SAR images decreases, the proposed method achieves higher accuracy than the other methods, showing great potential for application in real scenes with an extreme shortage of labeled real images.

The main contributions of this paper can be summarized as follows.

1. This paper applies, for the first time, an unsupervised contrastive domain adaptation framework to minimize the distribution difference between a simulated SAR dataset and real sparse SAR images, so as to facilitate transfer learning in sparse SAR image classification.
2. In view of the serious shortage of labeled SAR images in practical applications, the proposed method improves classification accuracy even when the number of real sparse SAR images is reduced to single digits per class.

The rest of this paper is organized as follows. Section 2 introduces the procedure for constructing a sparse real dataset with the BiIST algorithm and the principle of the common transfer learning method. The key process of the proposed algorithm, i.e., CDA, is discussed in Section 3, including the components of the unsupervised domain adaptation framework and the theory of minimizing the distribution difference between the two datasets. The CNN model used for target classification is described in Section 4. Experiments based on the sparse MSTAR dataset and the reconstructed simulated dataset under different conditions are presented in Section 5. Section 6 analyzes the experimental results in detail. Finally, Section 7 concludes this work.