1. Introduction

Climate change, fisheries, cetacean recovery, and human disturbance are all hypothesized as drivers of ecological change in Antarctica [1, 2, 3, 4]. Climate change is of particular concern on the western Antarctic Peninsula, because mean annual temperatures have risen 2 °C while mean winter temperatures have risen more than 5 °C [5, 6, 7, 8, 9]. The consequences of climate change may be seen across the marine food web from phytoplankton to top predators [3, 10, 11, 12, 13], though study of these processes has been traditionally limited by the challenges of doing field research across most of the Antarctic continent.

The brushtailed penguins (Pygoscelis spp.) are represented throughout Antarctica and comprise the Adélie penguin (P. adeliae), the chinstrap penguin (P. antarctica), and the gentoo penguin (P. papua). Adélie penguins are found around the entire continent, while chinstrap penguins and gentoo penguins are restricted to the Antarctic Peninsula and sub-Antarctic islands further north [14]. Owing to their narrow diets and the relative tractability of monitoring their populations at the breeding colony, penguins can serve as indicators of environmental and ecological change in the Southern Ocean [15, 16, 17]. Changes in the abundance and distribution of Antarctic penguin populations have been widely reported [13, 18], though it is only recently, with the development of remote sensing (RS) as a tool for direct estimation of penguin populations, that we have been able to survey the Antarctic coastline comprehensively rather than at a few selected, easily-accessed breeding sites (e.g., [19, 20, 21]). These rapid and spatially-heterogeneous changes in penguin populations, and the appeal of penguins as an indicator of comprehensive shifts in the ecological functioning of the Southern Ocean, motivate the development of robust, automated (or semi-automated) workflows for the interpretation of satellite imagery for routine (annual) tracking of penguin populations at the continental scale.

The Antarctic is quickly becoming a model system for the use of Earth Observation (EO) for the physical and biological sciences because the costs of field access are high, the landscape is simple (e.g., rock, snow, ice, water) and free of woody vegetation, and polar orbiting satellites provide extensive regular coverage of the region. Logistical challenges, difficulty in comprehensively searching out animals (individuals or groups) that are scattered across a vast continent, and limited scalability and repeatability of traditional survey approaches have further catalyzed the addition of EO-based methods to more traditional ground-based census methods [22]. Increased access to sub-meter commercial imagery from very high spatial resolution (VHSR) EO sensors such as IKONOS, QuickBird, GeoEye, Pléiades, WorldView-2, and WorldView-3, which rival aerial imagery in terms of spatial resolution, has added new methods for direct survey of individual animals or animal groups to the existing suite of tools available for land cover types.

Since the early studies conducted by [23, 24], which uncovered the potential of penguin guano detection from medium-resolution EO sensors, a number of efforts to detect the distribution and abundance of penguin populations have been conducted using aerial sensors [25, 26], medium-resolution satellite sensors like Landsat and SPOT [19, 21, 27, 28, 29, 30, 31], and VHSR satellite sensors, such as IKONOS, QuickBird, and WorldView [13, 20, 22, 32]. While the use of VHSR imagery for Antarctic surveys has been demonstrated as feasible, there remain several challenges before VHSR imagery can be operationalized for regular ecological surveys or conservation assessments. Classification currently hinges on the experience of trained interpreters with direct on-the-ground experience with penguins, and manual interpretation is so labor intensive that large-scale surveys can be completed only infrequently. At current sub-meter spatial resolutions, penguin colonies are detected by the guano stain they create. Because chinstrap and Adélie penguins nest at a relatively fixed density, the area of the guano stain can be used to estimate the size of the penguin colony [22], though weathering of the guano and the timing of the image relative to the colony’s breeding phenology can affect the size of the guano stain as measured by satellites. While these guano stains are spectrally distinct from bare rock, there are spectrally similar features in the Antarctic created by certain clay soils (e.g., kaolinite and illite) and by the guano of other, non-target, bird species. Manual interpretation of guano hinges on auxiliary information such as the size and shape of the guano stain, texture within the guano stain, and the spatial context of the stain, such as its proximity to the coastline and relationship to surrounding land cover classes. Creating the image-to-assessment pipeline will require automated algorithms and workflows for classification such as have been successfully developed for medium-resolution imagery [21, 30].

While the encoded high-resolution information in VHSR imagery is an indispensable resource for fine scale human-augmented wildlife census, it is problematic with respect to automated image interpretation because traditional pixel-based image classification methods fail to extract meaningful information on target classes. The GEographic Object-Based Image Analysis (GEOBIA) framework, a complementary approach to per-pixel based classification methods, attempts to mimic the innate cognitive processes that humans use in image interpretation [33, 34, 35, 36, 37]. GEOBIA has evolved in response to the proliferation of very high resolution satellite imagery and is now widely acknowledged as an important component of multi-faceted RS applications [36, 38, 39, 40, 41]. GEOBIA involves more than sequential feature extraction [42]. It provides a cohesive methodological framework for machine-based characterization and classification of spatially-relevant, real-world entities by using multiscale regionalization techniques augmented with nested representations and rule-based classifiers [42, 43]. Image segmentation, a process of partitioning a complex image-scene into non-overlapping homogeneous regions with nested and scaled representations in scene space [44], is the fundamental step within the geographic object-based information retrieval chain [40, 45, 46, 47, 48]. This step is critical because the resulting image segments, which are candidates for well-defined objects on the landscape [36, 49], form the basis for the subsequent classification (unsupervised/supervised methods or knowledge-based methods) based on spectral, spatial, topological, and semantic features [34, 35, 39, 44, 47, 50, 51, 52, 53].

The use of VHSR imagery for penguin survey is rapidly evolving to the point at which spatially-comprehensive EO-based population estimates can be seamlessly integrated into continent-scale models linking spatiotemporal changes in penguin populations to climate change or competition with Southern Ocean fisheries. To reach this objective, however, it is critical to move beyond manual image interpretation by exploiting sophisticated image processing techniques. The study described below reports the first attempt to use a geographic object-based image analysis approach to detect and delineate penguin guano stains. The central objective of this research is to develop a GEOBIA-driven methodological framework that integrates spectral, spatial, textural, and semantic information to extract the fine-scale boundaries of penguin colonies from VHSR images and to closely examine the degree of transferability of knowledge-based rulesets across different study sites and across different sensors focusing on the same semantic class.

The remainder of this paper is structured as follows. Section 2 describes the study area, data, and conceptual framework and implementation. Section 3 reports the image segmentation, rule-set transferability, and classification accuracy assessment results. Section 4 contains a discussion explaining the potential of GEOBIA in semi-/fully-automated detection of penguin guano stains from VHSR images, transferability of object-based rulesets, and opportunities of migrating the proposed conceptual framework to an operational context. Finally, conclusions are drawn in Section 5.

2. Materials and Methods

2.1. Study Area and Image Data

In this study, we used a set of cloud-free QuickBird-2 and WorldView-2 images (Figure 1) over sites containing either Adélie or chinstrap penguin colonies (Table 1).

Colonies are generally too large to count all at once, so they are divided up by the surveyor(s) into easily distinguished sub-groups. In each sub-group, the number of nests (or chicks, depending on the time of year) is counted three times using a handheld tally counter. The three counts are required to be within 5% of one another; if they are not, the process is repeated. The average of the three counts is used to determine the total number of breeding pairs at a given site. The candidate locations have been previously surveyed on the ground, and therefore automated classification could be validated against prior experience, photodocumentation, and existing GPS data on colony locations. Both Adélie and chinstrap penguins form tightly packed breeding colonies, which aids in the interpretation of satellite imagery. Gentoo penguins (P. papua) also breed in this area, but their colonies are more difficult to interpret in satellite imagery (even for an experienced interpreter) because they often nest in smaller groupings or at irregular densities that can weaken the guano stain signature. For this reason, while gentoo penguins breed in the Aitcho Islands, and at other locations that might have been included in our study, we have excluded them from this analysis.

Candidate scenes that have been radiometrically corrected, orthorectified, and spatially registered to the WGS 84 datum and the Stereographic South Pole projection were provided by the Polar Geospatial Center (University of Minnesota). The QuickBird-2 sensor has a ground sampling distance (GSD) of 0.61 m for the panchromatic (PAN) band and 2.44 m for the multispectral (MS) bands at nadir, with 11-bit radiometric resolution. The WorldView-2 hyperspatial sensor records the PAN band and the 8 MS bands with a GSD of 0.46 m and 1.84 m at nadir, respectively.

2.2. Conceptual Framework

While VHSR sensors enable the detection of a single seal (or, under some circumstances, a penguin) hauled out on pack ice, it remains very difficult to intelligently transform very high resolution image pixels to predefined semantic labels. Automated processes for interpreting VHSR imagery are increasingly critical because recent policy easements allow the release of 0.31 m resolution satellite images (e.g., WorldView 3) for civilian use, and the number of commercial satellites supplying EO imagery continues to grow. While there are some developments in fully-automated VHSR image interpretation, the intriguing question of “how to meaningfully link RS quantifications and user defined class labels” remains largely unanswered and voices the argument of considering this issue as a technological challenge rather a broader knowledge management problem [

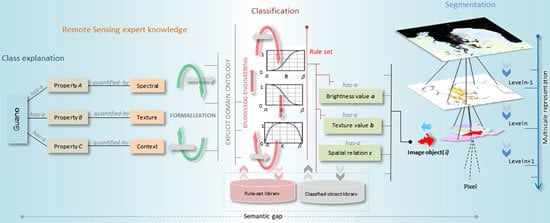

59] in VHRS image processing. To address the “big data” challenge in EO of Antarctic wildlife, we propose a conceptual outline (

Figure 2), which resonates with the framework of [

59], for automated segmentation and classification of guano in VHSR imagery. In this framework, scene complexity is reduced through multi-scale segmentation, which incorporates multisource RS/GIS/thematic data and creates a hierarchical object structure based on the premise that real world features are found in an image at different scales of analysis [

36]. Image object candidates extracted at different scales are adaptively streamed into semantic categories through knowledge-based classification (KBC), which interfaces low-level image object features and expert knowledge by means of fuzzy membership functions. The classified image objects can then be integrated into ecological models; for example, areas of guano staining can be used to estimate the abundance of breeding penguins [

22].

In a cyclic manner, classified image objects and model outputs are further integrated into the segmentation and rule-set tuning process. Operator- and scene-dependency of rulesets can impair the repeatability and transferability of KBC workflows across scenes and sensors for the same land cover of interest. We propose to organize rulesets into target- and sensor-specific libraries [59, 60] and systematically store a set of classified image objects as “bag-of-image-objects” (BIO) for refining and adapting KBC workflows.

It is important to emphasize that our approach is not a fully-developed system architecture but instead represents a road map for an “image-to-assessment pipeline” that links integral components like class explanation, remote sensing expert knowledge, domain ontology [61, 62], multiscale image content modeling, and ecological model integration. This work is crucial to decision support software that links image interpretation with statistical models of occupancy (presence and absence of populations) and abundance and, at a subsequent step, model exploration applications that allow stakeholders to perform geographic searches for populations, aggregate abundance across areas of interest (e.g., proposed Marine Protected Areas), and visualize predicted dynamics for future years. In this exploratory study, we develop those elements critical to the image interpretation components of this pipeline, specifically image segmentation, object-based classification, and ruleset transferability.

2.3. Image Segmentation

When thinking beyond perfect image object candidates (i.e., an ideal correspondence between an image object and a real-world entity), two failure modes expected in segmentation are over-segmentation and under-segmentation [37, 40, 63]. The former is generally acceptable, although it could be problematic if the geometric properties of image object candidates are used in the classification step. The latter is highly unfavorable because the resulting segments represent a mixture of elements that cannot be assigned a single target class [37, 47]. Thus, better classification accuracy hinges on optimal segmentation, in which the average size of image objects is similar to that of the targeted real-world objects [40, 64, 65, 66, 67].

Image segmentation is inherently a time- and processor-intensive process. The processing time rises exponentially with scene size, spatial resolution, and the size of image segments. To make the segmentation process more efficient, we employed two algorithms within the eCognition Developer 9.0 (Trimble, Raunheim, Germany) software package: a multi-threshold segmentation (MTS) algorithm and a multiresolution segmentation (MRS) algorithm. The former is a simple and less time-intensive algorithm, which splits the image object domain and classifies the resulting image objects based on a defined pixel value threshold. In contrast, the latter is a complex and time-intensive algorithm, which partitions the image scene based on both spectral and spatial characteristics, providing an approximate delineation of real-world entities. In the initial passes of segmentation, we employed the MTS algorithm in a self-adaptive manner to regionalize the entire scene into manageable homogeneous segments. The MRS algorithm was then applied to targeted segments to generate progressively smaller image objects in a multiscale architecture.

2.3.1. Multi-Threshold Segmentation Algorithm

The MTS algorithm uses a combination of histogram-based methods and the homogeneity measurement of multiresolution segmentation to calculate a threshold dividing the selected set of pixels into two subsets whereby the heterogeneity is increased to a maximum [68]. This algorithm splits the image object domain and classifies the resulting image objects based on a defined pixel value threshold. The user can define the pixel value threshold, or the algorithm can operate in a self-adaptive manner when coupled with the automatic threshold (ATA) algorithm in eCognition Developer [69]. The ATA algorithm initially calculates the threshold for the entire scene, saves it as a scene variable, and makes it available to the MTS algorithm for segmentation. Subsequently, if another pass of segmentation is needed, the ATA algorithm calculates the thresholds for individual image objects and saves them as object variables for the next iteration of the MTS algorithm.
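The two-pass idea described above (one threshold for the whole scene, then one threshold per resulting object) can be illustrated with a minimal sketch. This is not the eCognition implementation: it substitutes a plain Otsu-style between-class variance criterion for the ATA algorithm, operates on a flat list of pixel values rather than on spatial image objects, and the function names `auto_threshold` and `multi_threshold_split` are hypothetical.

```python
from statistics import mean

def auto_threshold(values):
    """Otsu-style stand-in for an automatic threshold (an assumption, not ATA).

    Returns the cut that maximizes the between-class variance of the two
    subsets it creates.
    """
    ordered = sorted(set(values))
    best_cut, best_score = ordered[0], -1.0
    # Candidate cuts are midpoints between consecutive distinct values.
    for lo, hi in zip(ordered, ordered[1:]):
        cut = (lo + hi) / 2.0
        below = [v for v in values if v <= cut]
        above = [v for v in values if v > cut]
        w0 = len(below) / len(values)
        w1 = len(above) / len(values)
        score = w0 * w1 * (mean(below) - mean(above)) ** 2
        if score > best_score:
            best_cut, best_score = cut, score
    return best_cut

def multi_threshold_split(values, passes=2):
    """Split pixel values repeatedly; each pass thresholds every segment."""
    segments = [list(values)]
    for _ in range(passes):
        next_segments = []
        for seg in segments:
            if len(set(seg)) < 2:
                # A uniform segment cannot be split further.
                next_segments.append(seg)
                continue
            cut = auto_threshold(seg)  # per-segment ("object") threshold
            next_segments.append([v for v in seg if v <= cut])
            next_segments.append([v for v in seg if v > cut])
        segments = next_segments
    return segments

pixels = [10, 12, 11, 200, 210, 205]
scene_cut = auto_threshold(pixels)                  # first pass: scene-level threshold
coarse = multi_threshold_split(pixels, passes=1)    # dark vs. bright regions
```

In the first pass the threshold plays the role of the scene variable; in later passes each segment receives its own threshold, mirroring the object variables mentioned in the text.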

2.3.2. Multiresolution Segmentation Algorithm

Of the many segmentation algorithms available, multiresolution segmentation (MRS, see [69]) is the most successful segmentation algorithm capable of producing meaningful image objects in many remote sensing application domains [41], though it is relatively complex and image- and user-dependent [37, 51]. The MRS algorithm uses the Fractal Net Evolution Approach (FNEA), which iteratively merges pixels based on homogeneity criteria driven by three parameters: scale, shape, and compactness [39, 46, 47]. The scale parameter is considered the most important, as it controls the relative size of the image objects and has a direct impact on the subsequent classification steps [64, 66, 70].

2.3.3. Segmentation Quality Evaluation

As discussed previously, optimal segmentation maximizes the accuracy of the classification results. Over-segmentation is acceptable but leads to a plurality of solutions, while under-segmentation is unfavorable and produces mixed objects that defy classification altogether. In this study, we used a supervised metric, namely the Euclidean Distance 2 (ED2), to estimate the quality of segmentation results. This metric optimizes two parameters in Euclidean space: potential-segmentation error (PSE) and the number-of-segmentation ratio (NSR). The PSE gauges the geometrical congruency between reference objects and image segments (image object candidates), while the NSR measures the arithmetic correspondence (i.e., one-to-one, one-to-many, or many-to-one) between the reference and image object candidates. The highest segmentation quality is reported when the calculated ED2 metric value is close to zero. We refer the reader to [46] for further detail on the mathematical formulation of PSE and NSR. In order to generate a reference data set for quality evaluation, we tasked an expert to manually digitize guano stains.
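As a rough illustration of how the two components combine, the sketch below treats ED2 as the Euclidean distance of the point (PSE, NSR) from the origin. The specific definitions used here (PSE as under-segmented area divided by total reference area, NSR as the absolute difference between the number of reference objects and corresponding segments, divided by the number of reference objects) are assumptions based on a commonly used formulation; [46] remains the authoritative source, and all function names are hypothetical.

```python
import math

def potential_segmentation_error(under_segmented_area, reference_area):
    """PSE (assumed form): area of corresponding segments falling outside
    the reference objects, relative to the total reference area."""
    return under_segmented_area / reference_area

def number_of_segments_ratio(n_reference, n_corresponding):
    """NSR (assumed form): mismatch between the count of reference objects
    and the count of corresponding segments, relative to the former."""
    return abs(n_reference - n_corresponding) / n_reference

def ed2(pse, nsr):
    """Euclidean distance of (PSE, NSR) from the ideal point (0, 0)."""
    return math.hypot(pse, nsr)

# Illustrative numbers only: 4 reference stains matched by 10 segments,
# with 30 m^2 of segment area outside 200 m^2 of reference area.
pse = potential_segmentation_error(30.0, 200.0)
nsr = number_of_segments_ratio(4, 10)
score = ed2(pse, nsr)
```

Under this formulation a strong geometric fit (low PSE) can still produce a high ED2 when many segments map to one reference object (high NSR), which is the one-to-many situation the metric is meant to expose.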

2.4. Quantifying the Transferability of GEOBIA Rulesets

While GEOBIA provides rich opportunities for expert (predefined) knowledge integration for the classification process, it also makes the classification more vulnerable to operator-/scene-/sensor-/target-dependency, which in turn hampers the repeatability and transferability of rules. Building a well-defined ruleset is a time-, labor-, and knowledge-intensive task. Assuming that the segmentation process is optimal, the robustness of GEOBIA rulesets relies heavily on the design goals of the ruleset describing the target classes of interest (e.g., class description) and their respective properties (e.g., low-level image object features) (see Figure 2). Table 2 lists important spectral, spatial, and textural properties, which we selected for developing the original ruleset.

Use of fuzzy rules (which can take membership values between 0 and 1) is much favored in object-based classification over crisp rules (which are constrained to the two logical values “true” (=1) and “false” (=0)) because the “fuzzification” of expert-steered thresholds improves transferability. In the case of GEOBIA, the classes of interest can be realized as a fuzzy set of image objects in which one or more membership functions define the degree of membership μ ∈ [0, 1] of each image object to the class of interest with respect to a certain property (μ = 0 means no membership to the class of interest; μ = 1 means the conditions for being a member of the class of interest are completely satisfied). A fuzzy function describing a property p can be defined using: α (lower border of p), β (upper border of p), a (mean of membership function), and v (range of membership function).
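A minimal sketch of one such membership function follows. It assumes a linear "larger than" ramp between α and β, takes a as the midpoint of the two borders and v as their difference, and combines several memberships with a minimum ("and") operator. These choices and all function names are illustrative assumptions, not the membership functions used in the study.

```python
def ramp_membership(x, alpha, beta):
    """Linear ramp: 0 at or below alpha, 1 at or above beta."""
    if beta <= alpha:
        raise ValueError("beta must be greater than alpha")
    if x <= alpha:
        return 0.0
    if x >= beta:
        return 1.0
    return (x - alpha) / (beta - alpha)

def describe(alpha, beta):
    """Mean a and range v of the function (assumed: midpoint and width)."""
    return {"a": (alpha + beta) / 2.0, "v": beta - alpha}

def fuzzy_and(*memberships):
    """Minimum operator, a common choice for a fuzzy 'and' connection."""
    return min(memberships)

# Hypothetical object features: brightness and a texture measure.
mu_brightness = ramp_membership(150.0, alpha=100.0, beta=200.0)  # 0.5
mu_texture = ramp_membership(0.9, alpha=0.2, beta=0.6)           # 1.0
mu_guano = fuzzy_and(mu_brightness, mu_texture)
```

Stretching or shrinking the ramp changes v, while sliding it along the property axis changes a; these are the two kinds of adjustment tracked when a ruleset is adapted.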

In this study, we employed the framework proposed by [71] for quantifying the robustness of fuzzy rulesets. While we briefly explain the conceptual basis and implementation of the robustness measure here, we encourage the reader to refer to [71] for further details.

A ruleset is considered to be more robust if it is capable of managing the variability of the target classes detected in different images given that the images are comparable and the segmentation results are optimal. [71] identified three types (C, O, F) of changes that a reference rule set (Rr) is exposed to during adaptation and used them to quantify the total deviation (d) of the adapted ruleset (Ra).

Type C: Adding, removing, or deactivating a class.

Type O: Change of the fuzzy-logic connection of membership functions.

Type F: Inclusion or exclusion (Type Fa) and changing the range of fuzzy membership functions (Type Fb).

The total deviation (d) occurring when adapting Rr to Ra is given by the summation of individual changes of Types C, O, and F. Figure 3 exhibits the possible changes of a membership function that is subjected to adaptation under Type Fb. Let Rr be the master ruleset [72] developed based on the reference image (Ir) and Ra be the adapted version of Rr over a candidate image (Ia). The robustness (rr) of the master ruleset can be quantified by coupling a quality parameter q (qa and qr denote the classification accuracy of images Ia and Ir, respectively) with the total deviations associated with Ra (da).
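The relationship between quality and deviation can be sketched as follows. The functional form used here, the accuracy ratio qa/qr divided by (1 + da), is an assumption chosen to match the behavior described in the text: with no deviation the measure reduces to the accuracy ratio (and exceeds 1 when the candidate scene is classified better than the reference), and any deviation lowers it. The formulation in [71] is authoritative; the function names are hypothetical.

```python
def total_deviation(type_c, type_o, type_f):
    """Sum of Type C, Type O, and Type F changes made during adaptation."""
    return type_c + type_o + type_f

def robustness(q_adapted, q_reference, deviation=0.0):
    """Assumed form: (q_a / q_r) / (1 + d_a)."""
    if q_reference <= 0:
        raise ValueError("reference quality must be positive")
    if deviation < 0:
        raise ValueError("deviation cannot be negative")
    return (q_adapted / q_reference) / (1.0 + deviation)

# Illustrative values only, not results from this study.
r_no_penalty = robustness(q_adapted=0.9, q_reference=0.8)   # > 1
d = total_deviation(type_c=0, type_o=0, type_f=1.0)
r_penalized = robustness(0.9, 0.8, deviation=d)             # lower than above
```

Reporting both variants side by side separates how well the adapted ruleset performs from how much it had to be changed to get there.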

2.5. Classification Accuracy Assessment

The areas correctly detected (True Positive, TP: correctly extracted pixels), omitted (False Negative, FN: guano pixels that were not extracted), or committed (False Positive, FP: incorrectly extracted pixels) were computed by comparison to the manually-extracted guano stains. Based on these areas the Correctness, Completeness, and Quality indices were computed as follows [73, 74]:

Correctness is a measure ranging between 0 and 1 that indicates the detection accuracy rate relative to ground truth. It can be interpreted as the converse of commission error. Completeness is also a measure ranging between 0 and 1 and can be interpreted as the converse of omission error. The two metrics are complementary and should be interpreted together. For example, if all scene pixels are classified as guano, then the Completeness value approaches 1 while the Correctness approaches 0. Quality is a more meaningful metric that normalizes the Completeness and Correctness. The Quality measure can never be higher than either the Completeness or Correctness. The Quality parameter (Equation (6)) is then used as q in Equation (3) to compute the robustness of the master ruleset in each adaptation.
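For reference, the three indices can be sketched in code using their standard definitions from the feature-extraction literature. The sketch assumes that omitted guano pixels are counted as false negatives (FN) and that pixel counts stand in for areas; the function names are hypothetical.

```python
def correctness(tp, fp):
    """Share of extracted pixels that are truly guano (1 - commission error)."""
    return tp / (tp + fp)

def completeness(tp, fn):
    """Share of reference guano pixels that were extracted (1 - omission error)."""
    return tp / (tp + fn)

def quality(tp, fp, fn):
    """Combined index; never exceeds Correctness or Completeness."""
    return tp / (tp + fp + fn)

# Illustrative pixel counts only.
tp, fp, fn = 80, 20, 10
corr = correctness(tp, fp)      # 0.8
comp = completeness(tp, fn)     # 80 / 90
qual = quality(tp, fp, fn)      # 80 / 110
```

Because the Quality denominator contains both error terms, labelling the whole scene as guano drives Completeness to 1 but is penalized through the resulting false positives.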

4. Discussion

This is the first attempt of which we are aware to use the geographic object-based image analysis paradigm in penguin guano stain detection as an alternative to time- and labor-intensive human-augmented image interpretation. In this exploratory study, we tasked object-based methods to classify chinstrap and Adélie guano stains from very high spatial resolution satellite imagery and closely examined the transferability of knowledge-based GEOBIA rules across different study sites focusing on the same semantic class. A master ruleset was developed based on a QuickBird image scene encompassing the Hope Bay area and it was re-tasked “without adaptation” and “with adaptation” on candidate image scenes (QuickBird and WorldView-2) comprising guano stains from six different study sites. The modular design of our experiment aimed to systematically gauge the segmentation quality, classification accuracy, and the robustness of fuzzy rulesets.

Image segmentation serves as the precursor step in GEOBIA. Given its reported success rate, we used eCognition Developer’s multiresolution segmentation (MRS) algorithm in this study and complemented it with the multi-threshold segmentation (MTS) algorithm, which can be manipulated in a self-adaptive manner. The underlying idea of coupling the MTS and MRS algorithms is to initially reduce the scene complexity at multiscale settings and later feed those “pointer segments” (semantically ill-defined and mixed objects) to the MRS algorithm for more meaningful fine-scale segmentation that creates segments resembling real-world target objects. This hybrid procedure consumes notably less time for scene-wise segmentation than tasking the complex MRS algorithm alone over the entire scene. Segmentation algorithms are inherently scene dependent, which impairs the transferability of a particular segmentation algorithm’s parameter settings (e.g., scale parameter of the MRS algorithm) from one scene to another. Thus, we fine-tuned the segmentation process on a trial-and-error basis at each study site until an acceptable segmentation quality was achieved before tasking either master or adapted rulesets. In general, optimal segmentation results, an equilibrium between under- and over-segmentation, are favored for achieving a better classification. In this respect, we purposely employed an empirical segmentation quality measure, Euclidean Distance 2 (ED2), which operates on arithmetic and geometric agreement between reference objects and the image object candidates. Compared to other sites, HOPE reported equally high geometric and arithmetic fit, yielding the best overall segmentation quality (lowest ED2). The marked spectral contrast between the guano stains and the dark background materials has led to a better delineation of object boundaries in the segmentation process.
In contrast, ZAVO showed the highest ED2 metric value, which was mainly due to weak arithmetic congruency (high NSR score) despite a high geometrical fit. A similar trend can be observed in the remaining study sites as well, for example, BIRD, PAUL, and SPIN. This “one-to-many” relationship between the reference objects and the image object candidates arises primarily for two reasons: (1) the occurrence of target objects at multiple scales, which impairs the proper isolation of object boundaries at a single scale; (2) when producing reference objects, human interpreters tend to generalize object boundaries [75] based on their experience and the semantic meanings of objects rather than low-level image features like color, texture, or shape. Interestingly, study sites with high ED2 scores (poor segmentation quality) achieved higher classification accuracy than the sites with low ED2 scores. For example, the overall classification accuracy (Table 3) of ZAVO surpassed that of HOPE. By performing a segmentation quality evaluation, we aimed to rule out the possibility that the variations in classification accuracy across candidate study sites arose from inferior or superior segmentation rather than from perturbations in the fuzzy rules.

GEOBIA’s wide adoption in VHSR image based land use land cover (LULC) mapping reflects the fact that object-based workflows are more transferable across image scenes than alternative image analysis methods. However, GEOBIA cannot explicitly link low-level image attributes encoded in image object candidates to high-level user expectations (semantics). While the challenge is universal to VHSR image based LULC mapping in general, within the scope of this study, the intriguing question is “what is the degree of transferability of a master ruleset for guano across scenes and across sites?” Designing a master ruleset based on a certain image scene and targeting a set of target classes is a time-, labor-, and knowledge-intensive process as the operator has to choose the optimal object and scene features and variables to meaningfully link class labels and image object candidates. Thus, in an operational context, reapplication of the master ruleset without adaptations across different scenes would be ideal; however, it is highly unrealistic in most image classification scenarios to achieve acceptable classification accuracy without ruleset modification.

When the master ruleset is reapplied without adaptations (Table 3), the QuickBird scene over BAIL and the WorldView-2 scene over BIRD showed relatively high detection of guano stains, but the classification accuracies were still inferior to that of the reference QuickBird scene over HOPE. This is mainly due to the similar spectral, textural, and most importantly neighborhood characteristics (spatially-disjoint patches embedded in dark substrate) of the target class in these three study sites. On the other hand, the WorldView-2 scene over ZAVO showed zero detection of guano stains when the master ruleset was re-tasked as is. One could argue that this is due to the different sensor characteristics; however, results from BAIL and BIRD challenge that argument. We suspect that the notably high reflectance of clouds and wave crests, the spectral characteristics of guano, and the hierarchical design (labelling of sub-objects based on super-objects) may have led to the poor detection of guano. From the viewpoint of “ruleset without adaptations”, our analysis reveals that the success rate of the master ruleset’s repeatability is low and unpredictable even in instances where the classification is constrained to a particular semantic class. As seen in Table 3, scene- or site-specific adaptations inevitably enhance the classification accuracy at the expense of operator engagement in re-tuning of fuzzy rules.

When revisiting the fine-scale adjustments associated with the fuzzy rules across all candidate sites (

Table 4,

Table 5,

Table 6,

Table 7,

Table 8 and

Table 9), it is clear that a majority of changes fall onto the Type F

b category, which harbors the changes associated with the underlying fuzzy membership function. In certain instances, for example SPIN and ZAVO, the border contrast rule was left inactive because of the spectral ambiguity between the guano and the surrounding geology. Across all candidate sites, Type F

b deviations have been dominated by the

δv component, which elucidates the fact that the shape (range

v) of the fuzzy membership function (stretch or shrink) has changed rather than a shift in the mean value (

a). This reflects the potential elasticity available to the operator to refine rules. In most cases, our changes to the fuzzy membership functions associated with the class guano are designed to capture the spectral and textural characteristics that distinguish guano stains from the substrate dominating clay minerals like illite and kaolinite [

19]. Based on the adapted versions of the master ruleset, study sites like BAIL, BIRD, and ZAVO exhibited superior classification results compared to that of the reference image. Of those, BIRD showed the lowest amount of deviations (

d), while ZAVO showed the highest number of total changes in fuzzy membership functions (

Table 10). This might be due the fact that HOPE and BIRD share comparable substrate characteristics and contextual arrangement. Except AITC, other candidate sites exhibited either superior or comparable detection of guano stains with respect to the reference image scene. Even the manual delineation of guano stains in AITC was difficult due the existence of guano-like substrate and moss (early stage or dry). This site appeals for inclusion of new rules to discriminate guano rather than simple adaptation of the master ruleset. When considering the robustness of the master ruleset (

Table 11) on a site-wide basis and neglecting deviations (

d = 0 in Equation (3)), BAIL, BIRD, PAUL, and ZAVO reported the highest robustness (

r > 1), while AITC reported the lowest. Overall, the mean robustness across all sites is high (0.965), which suggests that the master ruleset produced classification results at the candidate sites almost comparable to those at the reference study site. When Type F

a and Type F

b changes are included in the analysis (

d ≠ 0 in Equation (3)), both site-wise and mean robustness are lower than in the previous scenario because

d penalizes the robustness measure. While accepting the importance of ruleset deviations as a parameter in the robustness measure, we think that Equation (3) does not necessarily capture the capacity of the master ruleset to withstand perturbations, because the penalization by the parameter d appears to be asymmetrical. Thus, we consider the documentation of rule-specific changes and total deviations to be of high value in judging the degree of transferability.
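The distinction between a shift in the mean value (a) and a change in the range (v), and the way deviations penalize robustness, can be illustrated with a minimal sketch. Several things here are assumptions rather than the definitions used in this study: the triangular membership shape, the expression of δa and δv as relative changes against the reference function, and the quotient form r = q/(d + 1) for Equation (3), which is not reproduced in this section. The names `Membership`, `deviation`, and `robustness` are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Membership:
    """Hypothetical symmetric fuzzy membership function with mean a and range v."""
    a: float  # mean (centre) value of the function
    v: float  # range (full width) over which membership is non-zero

    def degree(self, x: float) -> float:
        # Triangular shape, chosen only for illustration.
        half_range = self.v / 2.0
        return max(0.0, 1.0 - abs(x - self.a) / half_range)


def deviation(ref: Membership, adapted: Membership) -> Tuple[float, float]:
    """Return (delta_a, delta_v) as relative changes against the reference.

    Assumed definition: absolute change divided by the reference value.
    """
    delta_a = abs(adapted.a - ref.a) / abs(ref.a)
    delta_v = abs(adapted.v - ref.v) / ref.v
    return delta_a, delta_v


def robustness(q: float, d: float) -> float:
    """Assumed form of Equation (3): quality ratio q penalized by total deviation d."""
    return q / (d + 1.0)
```

Under these assumptions, stretching the range from `Membership(a=0.5, v=0.25)` to `Membership(a=0.5, v=0.5)` leaves δa at zero and produces a non-zero δv, which is the pattern described above for Type F b changes. In this form, any d > 0 lowers r for a given positive q, whatever the direction or purpose of the adjustment.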

The general consensus of the GEOBIA community is to fully exploit all opportunities—spectral, spatial, textural, topological, and contextual features and variables at scene, object, and process levels—available for manipulating image segments in the semantic labelling process. This approach inevitably enhances the classification accuracy; however, it does so at the expense of transferability. We highlight the importance of designing a middleware [

76] ruleset, which retains a certain degree of elasticity, rather than a complete master ruleset. From the perspective of knowledge-based classification (KBC), middleware products contain the optimal set of rules, which combine explicit domain ontology and remote sensing quantifications. In our study, we have purposely confined ourselves to a relatively small number of rules for discriminating focal target classes. These rules are among the remote sensing quantifications and contextual information that a human interpreter would most likely use in the semantic labelling process, apart from his or her procedural or structured knowledge. There is always potential for introducing higher- and lower-level rules into the middleware products to refine coarse- and fine-scale objects, respectively. Rather than opaquely claiming the success of object-based image analysis in a particular classification effort, a systematic categorization and documentation (e.g.,

Table 4,

Table 5,

Table 6,

Table 7,

Table 8 and

Table 9) of individual changes in fuzzy membership functions and a summary of overall changes in the reference ruleset (e.g.,

Table 10) make the workflow more transparent and provide rich opportunities to analyze the modifications required for each property. This helps identify crucial aspects of the ruleset, interpret possible reasons for such deviations, and introduce necessary mitigations. Moreover, this kind of retrospective analysis provides valuable insights into the costs (e.g., time, knowledge, and labor) and benefits (classification accuracy) encoded in the workflow.
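The kind of documentation described above, a log of individual rule changes rolled up into a per-site summary, could be kept in a simple structure such as the following sketch. The record layout, the name `summarize`, the rule name "brightness", and the site codes are all hypothetical placeholders; the log entries are not data from this study.

```python
from collections import Counter, defaultdict
from typing import Dict, List, NamedTuple


class RuleChange(NamedTuple):
    site: str         # candidate site code (placeholders below)
    rule: str         # name of the rule that was adapted
    change_type: str  # change category, e.g. "Fa" or "Fb"


def summarize(changes: List[RuleChange]) -> Dict[str, Counter]:
    """Count changes per site and per change type (a Table 10-style summary)."""
    summary: Dict[str, Counter] = defaultdict(Counter)
    for change in changes:
        summary[change.site][change.change_type] += 1
    return dict(summary)


# Placeholder log for illustration only.
log = [
    RuleChange("SITE_A", "border contrast", "Fa"),
    RuleChange("SITE_A", "brightness", "Fb"),
    RuleChange("SITE_B", "brightness", "Fb"),
]
totals = summarize(log)
```

Keeping the per-rule log separate from the summary means the total deviations per site can always be traced back to the specific rules that were adjusted.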

We are no longer able to rely on low-level image attributes (e.g., color, texture, and shape) for describing LULC classes but require high-level semantic interpretations of image scenes. Image semantics are context sensitive, which means that semantics are not an intrinsic property captured during the image acquisition process but rather an emergent property of the interactions of human perception and the image content [

77,

78,

79,

80]. GEOBIA’s Achilles’ heel, the semantic gap (lack of explicit link), impedes repeatability, transferability, and interoperability of classification workflows. On the one hand, rule-based classification reduces the semantic gap. On the other hand, it leads to a plurality of solutions and hampers transferability because of the need for significant operator involvement. For instance, in this study, another level of thematically-rich guano classification based on species (e.g., Adélie, gentoo, chinstrap, and possibly other seabirds) is critical for ecological model integration and will require deviations in the ruleset across image scenes or possibly even an array of new rules. From a conceptual perspective, we recognize GEOBIA’s main challenge as a knowledge management problem, one that could possibly be mitigated with fuzzy ontologies [

59,

74,

75,

81]. This serves as the key catalyst for our proposed conceptual sketch (

Figure 2) for an “image-to-assessment pipeline” that leverages class explanation, remote sensing expert knowledge, domain ontology, multiscale image content modeling, and ecological model integration. We have proposed the concepts of target- and sensor-specific ruleset libraries and a repository of classified image objects as a “bag-of-image-objects” (BIO) for refining and adapting classification workflows. Revisiting our findings, we should highlight that the deviations in the master ruleset across sites (and sensors) are not necessarily driven by variations in the class guano itself (Adélie

vs. chinstrap penguins) but may be attributed to site- or region-specific characteristics such as the existence of extensive vegetation. This precludes the use of a single master ruleset that operates at the continental scale and suggests instead an array of ruleset libraries representing regions, habitats, and possibly different sites within the continent. We emphasize that a deeper analysis of which factors affected the deviations in specific rules would require new components in the current experiment; such analysis is beyond the scope of this study. Thus, we have provided here only plausible causes that hamper transferability. We did not include gentoo penguins in our study, even though their colonies also produce a guano stain visible in satellite imagery, because their nesting densities are so variable that identification of gentoo colonies in imagery can be challenging even at well-mapped sites. For that reason, segmentation and classification of sites with gentoo penguins should be approached with particular caution until gentoo-specific methods can be developed. Future research will need to focus on a deeper understanding of the specific causes controlling transferability, the engagement of machine learning algorithms, and a well-structured cognitive experiment to understand human visual reasoning in wildlife detection from remote sensing imagery.
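As one possible reading of the proposed target- and sensor-specific ruleset libraries, the sketch below looks up the most specific ruleset available for a scene and falls back to a more generic one. The key layout, the function `find_ruleset`, and all sensor, region, and ruleset identifiers are hypothetical; the study does not prescribe an implementation.

```python
from typing import Dict, Optional, Tuple

# Key: (target class, sensor, region); a region of None marks a sensor-wide ruleset.
RulesetKey = Tuple[str, str, Optional[str]]


def find_ruleset(
    library: Dict[RulesetKey, str], target: str, sensor: str, region: str
) -> Optional[str]:
    """Prefer a region-specific ruleset, then fall back to a sensor-wide one."""
    for key in ((target, sensor, region), (target, sensor, None)):
        if key in library:
            return library[key]
    return None  # no suitable ruleset: new rules would have to be developed


# Placeholder library for illustration only.
library: Dict[RulesetKey, str] = {
    ("guano", "sensor_x", None): "guano_sensor_x_generic",
    ("guano", "sensor_x", "region_1"): "guano_sensor_x_region_1",
}
```

A lookup for `("guano", "sensor_x", "region_1")` returns the region-specific entry, while an unlisted region falls back to the sensor-wide ruleset and an unlisted sensor returns `None`.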