A measure of the performance of a time series model is its ability to produce good forecasts. This ability is often tested using split-sample experiments, in which the model is fitted to the first part of a known data sequence and forecasts are obtained for the latter part of the series. The predicted values are then compared with the known observations. The goal is to minimize the forecasting errors so that the predicted future values are as close as possible to the actual future values.

Time series forecasting normally produces only a one-step-ahead prediction based on past observations, as shown in Table 3 and Figure 5a. For multiple-step-ahead prediction, two approaches are often considered: (1) a direct model re-training approach and (2) a recursive approach [29]. The re-training strategy re-trains the forecasting model when new predictions are made, as shown in Figure 5b. To predict SIC for the next two months, for example, we would develop one model for the first month and a separate model for the second month. This approach may develop more fitted models and may produce unexpected results, but its extremely high computational overhead is problematic in practice, especially when training on our massive sea ice dataset. The recursive approach uses a single prediction model multiple times: the predicted value for time step t is used as an input for making a prediction at the following time step t + 1, as shown in Figure 5c. The recursive approach is not computationally intensive, because it does not require re-training when new predictions are entered. However, prediction errors may accumulate and grow quickly, because the prediction at t + 1 depends on the prediction at t.

Monthly forecasts for 2015 were made by fitting a model to the first 434 months of the time series and using this model to predict monthly SIC values for the last 12 months of the time series in two ways: (1) one-month (short-term) prediction and (2) one-year (long-term) prediction using a recursive approach. One-month prediction reveals how the sensitivity of the prediction model varies by month, but it can only predict one time step into the future. For long-term prediction, sequentially predicted SIC values are fed back as inputs to the single pre-trained DL model.
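The difference between the two evaluation modes can be made concrete with a minimal sketch. It assumes the trained model is available as a callable `predict` that maps a window of past values to the next value; the function names, the `toy_predict` stand-in, and the synthetic series are illustrative assumptions, not the authors' code.

```python
import numpy as np

def one_step_forecasts(predict, series, n_lags, n_test):
    """Predict each of the last n_test values from the n_lags OBSERVED values before it."""
    series = np.asarray(series, dtype=float)
    start = len(series) - n_test
    return np.array([predict(series[t - n_lags:t]) for t in range(start, len(series))])

def recursive_forecasts(predict, history, n_lags, n_steps):
    """Predict n_steps ahead with one model, feeding each prediction back in as an input."""
    window = list(np.asarray(history, dtype=float)[-n_lags:])
    preds = []
    for _ in range(n_steps):
        y_hat = float(predict(np.array(window)))
        preds.append(y_hat)
        window = window[1:] + [y_hat]  # drop the oldest value, append the new prediction
    return np.array(preds)

# Hypothetical stand-in for a trained model: next value = mean of the window.
def toy_predict(window):
    return float(np.mean(window))

series = np.arange(24, dtype=float)  # synthetic data, not SIC
short_term = one_step_forecasts(toy_predict, series, n_lags=12, n_test=12)
long_term = recursive_forecasts(toy_predict, series[:12], n_lags=12, n_steps=12)
```

Both modes agree on the first predicted month, because both see only observed inputs there. From the second month onward the recursive forecast consumes its own earlier outputs, which is where accumulated error can enter.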

3.1.1. Short-Term (One-Step Ahead) Predictions of Monthly Sea Ice Concentration

As mentioned in the previous section, we used the most popular initialization, activation, and optimization algorithms, and tuned the batch size, the number of epochs, the number of hidden layers, and the number of neurons (or memory cells for the LSTM) using a parallelized grid search to configure the hyperparameters for fitting the network topologies. However, when the network was deep enough and trained for enough iterations, the DL models eventually converged to a high-accuracy outcome. Therefore, we chose three hidden layers with 32 neurons (or memory cells for the LSTM) each. The batch size and the number of epochs were set to 12 and 200, respectively. We initialized the network weights using small random values from a uniform distribution and used the rectified linear unit (ReLU) activation function, which has shown improved performance in recent studies, on each layer. Dropout layers with a rate of 0.3 were added after each hidden layer to prevent overfitting. The Adam optimization algorithm, which is efficient in practice, was chosen. Detailed network topologies for the MLP and LSTM are described in Figure 3.

To quantitatively and qualitatively evaluate the performance of the proposed DL-based prediction models, we used an autoregressive (AR) model, a simple and traditional statistics-based time series model, as a baseline. An AR model also uses observations from previous time steps as inputs to a regression equation to predict the value at the next time step [30,31,32]. Because an AR model can be used to solve various types of time series problems [32], it is a good baseline model for evaluating the performance of our proposed DL-based models. Both the AR and DL-based models require the number of past observations to be specified in advance. Because SIC data often exhibit annual patterns, 12 previous observations were employed to develop all prediction models.
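To illustrate what a 12-lag AR baseline involves, the following sketch fits an AR(12) model with an intercept to a single series by ordinary least squares. It assumes a single time series (for example, one pixel); how the AR model was applied across the SIC grid is not stated here. The helper names and the synthetic seasonal series are illustrative assumptions.

```python
import numpy as np

def make_lagged(series, n_lags=12):
    """Build (X, y): each row of X holds n_lags consecutive values, y is the value that follows."""
    series = np.asarray(series, dtype=float)
    X = np.array([series[i:i + n_lags] for i in range(len(series) - n_lags)])
    y = series[n_lags:]
    return X, y

def fit_ar(series, n_lags=12):
    """Ordinary least-squares fit of an AR(n_lags) model with an intercept."""
    X, y = make_lagged(series, n_lags)
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef  # coef[0] is the intercept; coef[1:] weight the lags, oldest first

def predict_ar(coef, window):
    """One-step-ahead prediction from the n_lags most recent values (oldest first)."""
    return float(coef[0] + np.dot(coef[1:], window))

# Synthetic series with a pure annual cycle (an assumption; not real SIC data).
months = np.arange(240)
series = 50.0 + 40.0 * np.cos(2.0 * np.pi * months / 12.0)

coef = fit_ar(series[:-12], n_lags=12)        # hold out the last 12 months
pred = predict_ar(coef, series[-13:-1])       # predict the final month from the 12 before it
```

The same lagged matrix `X` could serve as the input to an MLP or, reshaped to (samples, time steps, features), to an LSTM, so all models would see identical 12-month windows.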

We first evaluated the quantitative accuracy of the models by calculating root mean square errors (RMSEs) between the actual and predicted values, as listed in Table 4. To keep the overall RMSE from being deflated by the very small errors over open sea or melted areas in summer, we computed the RMSE only over pixels that contained sea ice in either the observed or the predicted data. Over the course of the year, both the MLP and LSTM predictions typically exhibited better agreement with the observed values than the AR-based predictions. The LSTM had a slightly lower monthly mean RMSE than the MLP.
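The masking rule can be sketched as follows. The function name and the `ice_threshold` parameter are assumptions for illustration; the text only states that pixels containing sea ice in either the observed or the predicted data were used.

```python
import numpy as np

def ice_masked_rmse(observed, predicted, ice_threshold=0.0):
    """RMSE over pixels where observed OR predicted SIC exceeds ice_threshold."""
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    mask = (observed > ice_threshold) | (predicted > ice_threshold)
    if not mask.any():
        return float("nan")  # no ice anywhere: RMSE is undefined under this rule
    diff = predicted[mask] - observed[mask]
    return float(np.sqrt(np.mean(diff ** 2)))

# Tiny synthetic example (SIC in percent); one pixel is ice-free in both images.
observed = np.array([[0.0, 0.0], [80.0, 100.0]])
predicted = np.array([[0.0, 10.0], [70.0, 100.0]])

masked = ice_masked_rmse(observed, predicted)
unmasked = float(np.sqrt(np.mean((predicted - observed) ** 2)))
```

In this example the ice-free pixel contributes a zero error to the unmasked RMSE and pulls it down, so the masked value is the larger of the two.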

RMSE values in the summer melting season, especially from July to October, were much larger than those in other seasons. Figure 6 illustrates the 10-year moving mean (blue solid line) and variability (orange solid line) of sea ice anomalies (blue dotted line) from 1979 to 2015: winter and spring variability were small and did not change significantly over this period, but summer and fall variability increased dramatically in the 2000s. This suggests a relationship between the high RMSE values of recent sea ice predictions and the high variability of sea ice anomalies in summer. The seasonal average comparisons summarized in Table 5 show that the DL models also outperformed the AR model in both the freezing and melting seasons. The average RMSE difference between the AR and DL approaches was larger for the melting season (3.64% for MLP; 3.94% for LSTM) than for the freezing season (2.68% for MLP; 2.99% for LSTM).

As a qualitative evaluation of the models, we performed a visual inspection of the monthly predicted SICs by comparison with the observed images, to assess how well the forecasting models predicted future SICs.

Figure 7 illustrates the 12 monthly SIC sets for 2015. Each monthly set consists of four images: (1) the observed SIC (upper left); (2) the predicted SIC obtained using the AR model (upper right); (3) the predicted SIC obtained using the MLP-DL model (lower left); and (4) the predicted SIC obtained using the LSTM-DL model (lower right). Brighter pixels are associated with higher SIC values; the grey circle centered on the North Pole indicates where the central Arctic is invisible to the satellite instruments used to generate these images.

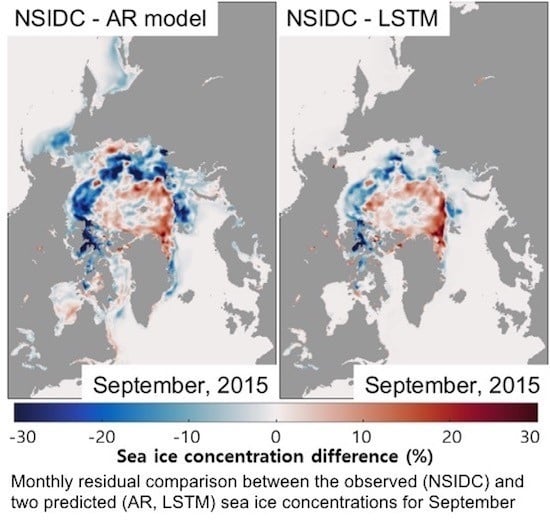

Figure 8 shows the spatial patterns of monthly residual images, highlighting the differences between the observed and predicted values. The image on the left in each monthly set represents the AR model error, the image in the middle represents the MLP-DL model error, and the image on the right represents the LSTM-DL model error. In Figure 8, areas with predicted ice concentration greater than the observed ice concentration (overestimated areas) are indicated in blue, and areas with predicted ice concentration less than the observed ice concentration (underestimated areas) are indicated in red. Overall, as both Figure 7 and Figure 8 show, the errors in the predicted winter ice cover over the central Arctic Ocean were small. Most of the anomalies in the predicted SIC occurred in the North Atlantic and North Pacific Oceans, as a result of fluctuations in the ice edge. Greater variations occurred in the predicted summer sea ice over the central Arctic Ocean. Evaluation of the prediction model performance showed that the AR model often generated more overestimated and underestimated pixels than the DL models. While both DL models generated quantitatively and qualitatively similar outputs, the SIC images predicted by the LSTM model showed slightly better agreement with the observed images than the MLP model-based images (i.e., the geographical locations of the overestimated and underestimated regions are similar, but the magnitude of the LSTM errors is slightly smaller than that of the MLP errors). A brief qualitative explanation for each month is presented below. The names of the locations are shown in Figure 4.
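The sign convention of the residual images in Figure 8 (predicted minus observed, so positive values are overestimates and negative values are underestimates) and the pixel counts used to compare models can be sketched as below. The function name and the `tol` parameter are illustrative assumptions.

```python
import numpy as np

def residual_summary(observed, predicted, tol=0.0):
    """Return the residual image plus counts of over- and underestimated pixels."""
    residual = np.asarray(predicted, dtype=float) - np.asarray(observed, dtype=float)
    n_over = int(np.sum(residual > tol))    # predicted > observed (blue in Figure 8)
    n_under = int(np.sum(residual < -tol))  # predicted < observed (red in Figure 8)
    return residual, n_over, n_under

# Synthetic 2x2 example (SIC in percent).
observed = np.array([[90.0, 50.0], [0.0, 20.0]])
predicted = np.array([[95.0, 30.0], [0.0, 20.0]])
residual, n_over, n_under = residual_summary(observed, predicted)
```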

The January and February images show that both the AR and DL models yielded very accurate predictions over the central Arctic, but some discrepancies were present on the Pacific and Atlantic margins. The AR model overestimated sea ice in the Sea of Okhotsk and the Bering Sea, and underestimated sea ice in the Barents Sea, in comparison to the DL-based models. For March, the AR model predicted more sea ice area in both the Sea of Okhotsk and the Barents Sea than the proposed DL models. The April image predicted by the AR model contained more inaccurately predicted SIC values in both the Atlantic and Pacific Oceans than the DL models. From January to April, the predicted images from both approaches agreed well with the observed images over the central Arctic, where SIC values are high, whereas errors were typically observed near the ice edge.

For May and June, when sea ice melting begins, much higher residuals were observed in multiple areas (e.g., the Bering Sea, the Chukchi Sea, the Laptev Sea, the Barents Sea, Baffin Bay, and Hudson Bay) in the AR-based prediction images than in the DL model-based images. Sea ice residuals that were often present near the ice edge in winter move to the central Arctic as melting progresses. For the summer melt season (July to September), both the AR and DL models predicted higher-than-observed SICs in the Arctic Ocean. The total amount of sea ice predicted for the Northern Hemisphere by the AR model was much larger than that predicted by the DL models. As mentioned in the introduction, Arctic SIE has declined, and the summer rate of decline has accelerated, in recent years. Large overestimations for the summer months were obtained with both the AR and DL models, especially in the AR-predicted images. This indicates that the AR model may not capture this unusual trend as well as the DL models.
The AR predictions for October, when sea ice is freezing, exhibited large underestimations along the ice margin in the Chukchi, Laptev, and Barents Seas. The DL models yielded similar underestimates, but the underestimated areas were much smaller than those produced by the AR model. Sea ice reaches its annual minimum in mid-September, and the freezing season begins in late September. In the last few years, however, late-September sea ice growth has slowed down, resulting in an increased October growth rate. In October 2015, the average SIE increased by roughly 67% compared to September (i.e., 4.63 million square kilometers in September; 7.72 million square kilometers in October, as reported by the NSIDC [33]). As with the summer predictions, the prediction models, especially the AR model, could not properly capture this quick change. The DL models also could not fully capture the change, but they performed better than the AR model. This indicates that the DL models, which can make more complicated decisions, may respond better to this rapid increase in sea ice than the AR model. For the period from November to December, as with the other winter SIC images, the predicted images showed visually similar error patterns, but the images predicted by the AR model contained more dark blue and dark red pixels, as Figure 8 shows, indicating more significantly overestimated and underestimated sea ice areas, respectively, in the central Arctic area, in comparison to the DL model predictions.