1. Introduction

Traditional subsea inspection, maintenance and repair (IMR) operations are costly because they require manual control using Remotely Operated Vehicles (ROVs). One goal of the subsea industry is to move towards more autonomous IMR operations which are cost-effective and facilitates extraction of oil in more remote areas. To achieve robust and fault-safe autonomous IMR requires 3D sensors that can provide a high-resolution and -precision 3D view of objects of interest. Furthermore, if the 3D sensor can provide “error-bars” on the 3D data, the autonomous system can make more reliable decisions with regards to e.g., the likelihood that a pipeline is defect, how reliably a robotic arm can grip a handle, or determine whether a valve on the subsea template is open or closed.

Sonars have traditionally been the underwater 3D technology of choice because of its range and robustness against water turbidity. However, sonar’s image update rates are limited by the speed of sound, while diffraction limits the lateral resolution. The result is slow update rates and low-resolution 3D data which makes it impractical and imprecise to be used in many autonomous IMR operations.

Video cameras are extensively used on ROVs today as a guidance tool for the operators, as well as for some autonomy using e.g., markers [

1]. Video cameras do not suffer from the same physical limitations as sonars. Cameras allow fast sampling rates and high lateral resolution, due to the high speed and short wavelengths of light. However, in practice the light-water interaction causes light attenuation and scattering which affects the performance of optical 3D systems. The effect of both attenuation and scattering are dependent on the turbidity of the water. Light attenuation limits the potential range of the system, while the scattering has primarily two effects. The backscatter causes a contrast degradation in the image while the forward scatter causes a blurring of features. Some typical approaches to remove the scattering effect on 2D images is to apply image de-hazing [

2,

3], de-convolution [

4] or specific forward- or backward-scatter models [

5,

6].

Single camera systems are capable of extracting 3D information through photogrammetry [

7]. Stereopsis is widely used as a 3D sensing technology, mainly because of its relatively simple and cheap construction [

8]. A stereo-camera system consists of two calibrated cameras and a stereo-matching algorithm which searches for corresponding features along corresponding epipolar lines in the cameras [

9]. Once a correspondence (disparity) is established, the distance can be estimated through simple triangulation. Establishing the feature correspondences in underwater imagery is challenging because of attenuation and scattering which reduces the image contrast, color separation and smears out image features [

5]. Furthermore, many underwater structures are textureless which makes it almost impossible to find feature correspondences.

532 nm lasers are frequently used as a light source underwater to increase the imaging range by diminishing the effect of light attenuation and scattering. Triangulation systems project the laser light, typically as laser sheets [

10,

11,

12] or points [

13], and generally incorporate a relative slow scanning mechanism to cover an extended field of view. At short distances, the imaging sensor can easily be saturated because of the high energy focused light. An alternative faster approach is to illuminate the whole field-of-view with short powerful light pulses and use a fast range-gated camera to estimate the time-of-flight [

14,

15,

16]. Range-gated solutions are effective solutions to suppress backscatter [

15,

17].

Structured light sensors typically use a DMD for fast time-multiplexing of spatially coded light [

18]. The advantage over laser-based scanning approaches for subsea intervention and inspection is the potential for real-time frame-rates and high-resolution both laterally and depth-wise in the 0.5 m-2 m range. Typical patterns used are binary [

18], Gray codes [

19] or sinusoidal fringe patterns [

20]. Gray-codes are often used to phase-unwrap the phase-maps from high-frequency phase-shifted sinusoidal patterns [

21]. The depth-precision of such systems are limited by the number of codes that can be reliably segmented [

22]. With increasing turbidity, the boundary between illuminated and non-illuminated areas are blurred and an accurate segmentation is difficult [

23].

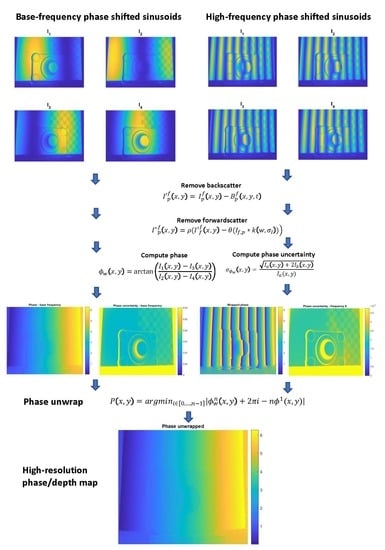

An alternative to using binary/Gray codes for phase unwrapping is to use multi-frequency phase-shifted (MFPS) projection and unwrap the phase based on the remainder theorem [

24] or the phase uncertainty [

25]. In this paper we propose an underwater structured light system which uses an MFPS method adapted for underwater use. Forward scattering of light in water can be viewed as a convolution with a symmetric point spread function (PSF). In an ideal setting, a symmetric PSF convolved with an infinite sinusoidal wave will not change the phase or frequency only the amplitude. Unfortunately, the projected sinusoid is bounded and height differences shifts the sinusoidal pattern such that it is not a spatial sinusoidal signal seen from the camera. Consequently, the scattering affects the phase estimate of sinusoidal fringe patterns. We propose models for removing the effect of both back- and forward-scatter. A relationship between the shot-noise of the signal and the phase-estimates is established. The phase uncertainty is used to unwrap the high-frequency phase estimates. The result is a dense depth map with corresponding depth uncertainties. We compare our results to GCPS.

2. Materials and Methods

In this section we first introduce the hardware that were used to produce the results in the paper. Even though the concepts of depth precision from phase stepping and phase unwrapping have been published elsewhere, we provide a short summary of them in

Section 2.2 and

Section 2.3 to introduce our notation.

Section 2.4 and

Section 2.5 summarizes our proposed methodology for adaptive phase unwrapping and scatter-removal.

2.1. Underwater Housing and Sensor

The structured light sensor is made up of a machine vision camera and a DMD (digital mirror device) projector (see

Figure 1a). The projector is a LightCrafter RGB projector (DLP

® LightCrafter™ 4500, Texas Instruments, Dallas, TX, USA) which facilitates 120 Hz 8-bit pattern display. The machine vision camera is a BU238M USB 3.0 black and white camera (Toshiba, Tokyo, Japan) with a resolution of 1920 × 1200. The camera was operated with a gain of 4× which resulted in a digital number to photo-electron conversion factor

. We only used the green LED of the projector to avoid chromatic aberration in the air-to-water transitions. The green part of the optical spectrum is also the least attenuated in water [

5].

The underwater housing, which can be seen in

Figure 1b, consists of a cylindrical plastic housing with windows for the camera and projector. The housing was fitted with three windows to facilitate adjusting the baseline. Longer baselines may be better for use-cases where long imaging distance is required, while shorter baselines are adequate for shorter imaging distances. The camera and projector are mounted on a metal plate which is attached to one of the end plates. The end plates are screwed onto the cylinder and sealed with a double set of O-rings. A 10-m hose is attached to the housing. We are currently using a USB 3.0 camera which does not facilitate longer cables than 10 m, however, by switching to a gigabit Ethernet camera we could potentially extend the cabling to 100 m if the use-case warrants it.

2.2. Depth-Precision of Phase Stepping

The phase stepping depth estimation procedure consists of capturing three or more images of phase-shifted sinusoids that are projected onto a scene and measuring the per-pixel phase shift. In this paper we focus on the case of four phase-shifted sinusoids where the unwrapped phase-shift can be estimated through [

21]:

The projected sinusoids imaged in

are phase shifted with 90° with respect to each other. The images are represented by a signal level (amplitude)

and a background signal level of

measured in units of photo-electrons. The total signal is determined as

. The phase uncertainty is dependent on the image noise, primarily shot-noise of each of the phase-shifted images, and can be shown to be [

22]:

2.3. Phase Unwrapping

With sinusoids with more than one period, the phase estimate in (1) will be wrapped at

. To produce an unwrapped phase estimate:

we need to find

which determines the sinusoidal period a pixel belongs to out of the

periods that are projected. The pixelwise standard deviation of the unwrapped phase is:

A standard approach for estimating

for patterns with more than a single frequency, is to use Gray codes [

21]. To estimate an n-bit Gray-code, n+2 images are projected, viz.

and

. The Gray codes are estimated based on

. With shot-noise being the primary noise contribution, the noise of the black and signal pixels are

and

. In

Figure 2 we show the results of simulating the Gray code segmentation under different signal levels. The error rate varies with the total intensity level

and the distribution of intensity between the background and signal. Most scenes have objects with varying albedos and consequently varying signal levels

and

. The result is that in some areas of the scene, the signal level will be low, and the error rate of the Gray-decoding will be high which will result in pixels with wrong phase-unwrapping. This is particularly a problem underwater where the transition will be smeared out spatially because of forward scatter, i.e.,

will be lower around the transition than further away, and

may be elevated because of backscatter. The Gray code approach to phase-unwrapping do not take the signal levels nor the signal uncertainty into account when choosing the appropriate period for a pixel which makes it an un-flexible approach.

2.4. Adaptive Multi-Frequency Phase Stepping (MFPS)

In this section we introduce a flexible approach for phase unwrapping which is data-driven. Phase-stepping provides higher depth-precision with higher frequency sinusoidal patterns according to Equation (4). However, the main problem is to accurately phase-unwrap the signal under different signal levels. The proposed approach uses the pixel-wise signal levels and the associated signal uncertainty to determine the highest sinusoidal frequency that can be phase unwrapped without ambiguity.

Assume we have projected a one-period sinusoidal pattern

and an

n-period sinusoidal pattern

. The traditional (MFPS) approach to phase unwrap

is to solve for:

Higher n will lead to higher error-rates when performing the phase unwrapping procedure because of the uncertainty in . We propose a data-driven approach to the MFPS unwrap procedure which based on the signal uncertainty decides how high an n a pixel can jump to.

The maximum number of periods that we can phase unwrap for a pixel from the base-frequency with an error rate less than

, where

is a parameter, is determined by the jump factor:

Because this is a pixel-wise factor which varies from scene to scene, it is not practical to change the frequency of the projected patterns on-the-fly. In practice, a pre-defined schedule of sinusoidal frequency schedule is projected, and the pixels are individually phase-unwrapped to the highest permissible frequency according to the pixel-wise jump factor. Notice that if a pixel can jump with a factor of 2 from the base period, it can also jump with a factor of 2 from any higher periods that have been phase-unwrapped.

Determining a frequency schedule is a trade-off between the frame rate (with more frequencies, a greater number of patterns need to be projected which takes more time), the potential accuracy (the higher the frequency, the higher potential accuracy can be achieved), and the chance of falsely unwrapping the phase increases with larger frequency jumps. In this paper we propose to use a frequency schedule as follows: 1, 8, and 64. This results in 12 projected patterns which is the same required for the Gray code phase stepping (GCPS) approach for a 64 frequency signal (4 phase shifted 64-frequency sinusoids, one fully illuminated and on non-illuminated image, and 6 images to code the Gray code). Given that the jump factor is identical (a factor of 8) between the frequencies, we maximize the likelihood that as many pixels as possible will be able to jump to 64 as well as minimize the likelihood of phase unwraps. The advantage in comparison to Gray codes is that the phase estimates are adaptive with regards to the signal level. The phase estimates will have a predictable lower-bound phase-precision (uncertainty) with a low error rate with regards to the phase unwrapping independent of signal level. The phase-precision is a lower-bound because it considers shot-noise as the sole noise contribution, and disregards other noise sources such as from the turbidity.

2.5. Scatter Removal

The light scattering in turbid media generally affect imaging systems with active illumination in two ways. The backward scattering of the light increases the background level while the forward scatter blur image features. In this section we characterize the effect of these two on the projected images, and we propose algorithms to remove the scatter effect before applying the MFPS algorithm. We propose to remove backscatter by subtracting a turbidity dependent backscatter model from the raw images, while forward scatter is removed with an un-sharp filter. The effect of scatter removal on the phase estimates is shown in the results section.

2.5.1. Backward Scatter Removal

The backscatter profile is a complex volumetric function which depends on the illumination volume, the water attenuation, and angular dependent scattering cross-section of the water. In MFPS, the illumination volume changes for each consecutive sinusoidal pattern that is projected, which makes this a difficult function to approximate through a parametric function. Instead, we sample the backscatter profile for each MFPS pattern at different turbidities by imaging into a void. This was performed in an aquarium (125 cm × 50 cm × 50 cm) where the turbidity was changed by adding clay to the water. The inside walls of the aquarium were covered in black fabric to make sure that all returned light was originating from backscatter. The turbidity, in terms of attenuation length, was measured as described in

Appendix B.

Figure 3 shows the backscatter profiles for the different shifted sinusoids at high turbidity for a 1 and 4 period sinusoidal pattern. The backscatter for a pixel changes with respect to the frequency

f of the projected sinusoid, the phase shift

p (1 through 4) of the sinusoid and the turbidity. Assuming we know the frequencies and the phase shifts that will be used, we create a backscatter model by projecting and imaging sinusoids with the mentioned frequencies and phases at pre-determined turbidities. Based on the sampled backscatter images we construct a 3-dimensional backscatter volume

, where the two first dimensions define the image pixels, while the third dimension parametrizes the turbidity. The backscatter model is interpolated along the turbidity dimension to facilitate arbitrary turbidities within the original sampled turbidity range. In this paper we sampled the backscatter at four turbidities/attenuation lengths—viz. 0.8 m, 1.1 m, 2.0 m, 5.8 m. Assuming we are projecting a sinusoid with phase

p and frequency

f, at turbidity

t, and the raw sampled image including backscatter is

The backscatter is simply subtracted from the raw image as follows:

. This model assumes the backscatter is the same independent of the distance to objects in the scene.

2.5.2. Forward Scatter Removal

Forward scattering causes a blurring of features.

Figure 4 shows the capture range of the forward scatter PSF―it is increasing with turbidity and the pixels on the boundary of the image are even affected at high turbidities.

The width of the PSF is also dependent on the distance to the scene. The convolution between a perfect sinusoid and a symmetric kernel will not change the phase or frequency of the signal, only the amplitude. However, because the PSF is so wide, the boundaries of the pattern will affect the sinusoid far from the boundary and change the phase of it. Furthermore, after the sinusoid has reflected off a scene with depth differences, the reflected pattern will not be a spatial sinusoid because of the phase-shifts and the PSF will consequently change the phase/frequency of the pattern.

One approach for forward scatter removal is to model the PSF and use a deconvolution algorithm to remove the forward scatter effect. It is not obvious from

Figure 4 which parametric function can accurately approximate the PSF. Furthermore, the PSF may vary somewhat over the image due to small changes in turbidity and the images may be noisy due to the turbidity and shot noise. All these factors make it difficult to design a reliable deconvolution algorithm. Instead we propose to use an unsharp filtering approach which is more robust to wide and spatially varying PSF and noise.

The unsharp filtering approach subtracts a low-pass filtered version of the image from the original image to enhance the high-frequency content:

The kernel

k is a low-pass gaussian defined by the width in pixels

w and the standard deviation

. The subtraction scale

and readjustment scale

are turbidity dependent.

Figure 5 shows the result from forward scatter adjustment using the unsharp filtering technique described here on the signal shown in

Figure 4. The method reduces the effect of the PSF towards the left boundary of the image, while preserving the intensity features seen between pixels 1000 and 1800. In this paper we manually tune the scaling factors. However, they can be automatically estimated by finding the highest scaling factor which does not result in any negative valued pixels when applying the algorithm to the one-period sinusoidal patterns.

3. Results

All test data were acquired in an aquarium under controlled turbidity conditions. In this section we summarise the test setup and data acquisition followed by an evaluation of the performance of the scatter correction and compare the adaptive MFPS in relation to the GCPS algorithm. The conversion from phase to distance is performed with the simple calibration procedure outlined in

Appendix A using L = 80 cm, B = 15 cm and a proportionality constant of 6.5.

3.1. Test Setup and Data Acquisition

An aquarium of dimensions 125 cm × 50 cm × 50 cm was used for the experiments and can be seen in

Figure 6. The camera is placed outside the aquarium. The aquarium was filled with tap water. First, we acquired data of the white matte plate which was placed at 80 cm from the camera inside the aquarium and used it as a reference plane in the calibration procedure outlined in

Appendix A. After acquisition of the reference plane, we changed it to the plate shown in

Figure 6 where half the plate is matte white and the other half is covered with a checkerboard pattern (dark squares have albedo 0.5). This was to test the effect different albedos would have on the algorithm. Next, an object with a height of 11 cm was placed in front of the plate. We acquired data of this scene at clear tap water (

) and at three other turbidity levels (

. Brown clay was added to the water successively to increase the turbidity. The turbidity was measured with the method described in

Appendix B. At each turbidity level we acquired the following data:

- (1)

GCPS data. We used 6 Gray code patterns, one black and one white picture and 4 phase shifted 64-period sinusoids for a total of 12 images.

- (2)

Adaptive MFPS data. We used 4 phase-shifted sinusoids with 1, 8 and 64 frequencies for a total of 12 images.

Figure 7 shows images of the acquired datasets. The images show a sinusoid with frequency eight at different turbidity levels.

3.2. Effect of Scattering on Phase Estimates

The scattering of light in water affects the phase estimates. In

Figure 8 we show the combined effect of forward and backward scattering on the phase estimates. The phase deviation is much larger for the one frequency component than the eight frequency component. The scatter PSF has a wide reach and the boundary affects the sinusoid far towards the middle of the image as shown in

Figure 8a. The effect is not as evident in

Figure 8d because the boundary effect is more quickly mitigated because of the higher frequency signal. The increase in background signal (

left to right shown in

Figure 8b,e indicates that the backscatter is more prominent for pixels on the middle/right than for pixels on the left. This can be explained by the angular dependent cross-section of camera versus projector rays.

3.3. Backscatter Compensation

As shown in

Figure 3, backscatter affects the sinusoidal components differently. In

Figure 9 we add the estimated backscatter contributions to ideal phase-shifted sinusoids for increasing turbidities and estimate the phase difference in relation to the ground truth. The maximum phase deviation at the highest turbidity reaches 0.2 radians which we can adjust for with the backscatter compensation. The effect is not as prominent for higher period patterns because the integral of the illumination profile along camera rays in this case will cross many periods and the backscattered signal over the image will even out. Using the simplified calibration formula in Equation (A1) with a baseline of 15 cm and a distance to the target of 80 cm and a proportionality constant of 6.5, a 0.2 radian error equates to a metric error of approximately 6.9 cm. Correcting for this bias caused by the backscatter improves the accuracy for the low SNR pixels that are not able to jump to high-frequency sinusoids. The bias is most prominent for the low frequency sinusoids, which means that the phase is shifted in relation to the high-frequency sinusoids. If this effect was uncorrected, at extreme cases there may occur a systematic error in the phase unwrapping when jumping from low frequencies to high frequencies.

In

Figure 10 we show results after backscatter correction at a turbidity of

on the dataset in

Figure 7. We only show the effect for the one-period pattern because that is where the backscatter effect is strongest as shown in

Figure 3. The effect on phase is in line with the simulated results shown in

Figure 9, i.e., the backscatter corrects for phase differences to the right in the picture.

3.4. Forward Scatter Compensation

Figure 10 shows the effect of forward scatter removal on the backscatter removed intensity profiles along row 200 of the dataset in

Figure 7 at

. We used scale factors of

and

and a Gaussian low-pass filter with w = 1000 and

. One can see that the components crossing (e.g., the location where the purple and orange curve crosses at around pixel 300) after scatter correction in (d) are more in line with the component crossing in (a) than what they are in (b). The effect on the phase estimates can be seen in (e) where the deviation from the phase at

is largely corrected for, except for at the boundaries.

3.5. MFPS versus GPCS

In

Figure 11 we show comparative results between GCPS and MFPS with scatter correction. Even in relatively clear water (

the phase unwrapping of the GCPS produces errors. This is evident from the errors along vertical parallel stripes in the GCPS phase images. Notice that while turbidity increases the errors along the Gray code boundaries for the GCPS method, increasing turbidity increases the errors on the boundary of the plate for the MFPS which is not scatter corrected. This is in line with the scatter effect that was shown in

Figure 10. Much of the boundary effect is removed with the proposed scatter correction algorithm.

In

Table 1 we show qualitative results based on the areas shown in

Figure 11. The error estimates are taken as the percentage of pixels in the full region shown in

Figure 11 that have a distance from the ground truth which is more than

. This would indicate the number of pixels that have been wrongly phase unwrapped. The error, i.e., the accuracy and precision of the error, is estimated over a 100 × 100 area in the middle of the region shown in

Figure 11. The last column denotes the predicted phase standard deviation using Equation (4). The results show that the predicted phase is a lower bound for the MFPS algorithm, mainly because it only considers shot noise. It is a better predictor at low turbidity because the particles causing turbidity adds considerable noise to the individual phase images. The scatter corrected MFPS algorithm contains less errors and provides a significant increase in precision and accuracy across the turbidities.

The MFPS algorithm unwraps the phase in multiple stages. In this paper we have used the frequency schedule 1, 8 and 64. In

Table 2 we show the quantitative results for each of these phases at turbidity

. These results indicate the performance that can be achieved by e.g., only using the 1 or 8 frequencies. We found that the phase uncertainty allowed all pixels perform a frequency jump of 8. At low turbidity, the signal allowed most pixels to jump directly from the base frequency to 64.

In

Figure 12 we show qualitative results from imaging the object in

Figure 7. We compare the proposed scatter corrected MFPS results with the results using GCPS. These images support the quantitative results: the scatter corrected MFPS has significant fewer outliers, higher accuracy and precision and degrade better with increasing turbidity.

4. Discussion

We have introduced a structured light method for high-precision depth measurements in turbid water. The method is based on the MFPS phase unwrapping method but includes a predictable lower-bound on the phase/distance uncertainty which is used to determine the highest reliable frequency that can be unwrapped. Furthermore, the effect of scattering has been investigated and methods that reduce the scatter effect on the phase estimates have been proposed. The results show that the method provides more accurate and precise depth estimates, and degrades better and more predictable with turbidity, than GCPS.

For the examples provided here, the pixel-wise uncertainty was low enough to be able to jump according to the frequency schedule that was used, i.e., 1, 8, 64. However, we still observe that pixels are wrongly unwrapped. This can be explained by several factors. First, the uncertainty is only a lower-bound because it only relies on the shot noise. The turbidity is the major factor, besides shot noise, that contributes to the increase in phase uncertainty. With increasing turbidity, more particles are present and moving in the water such that the pixel-wise scatter effect may be different from exposure to exposure and thereby adding noise to the measurements. However, this effect is difficult to parametrise and robust ways of quantifying this noise will be part of future work. If the shot-noise is the limiting factor to achieve a frequency jump, one solution to reduce the noise is to adaptively bin pixels. If we reduce the shot-noise by a factor of three by binning 3 × 3 pixels, the frequency jumping factor will increase by the same factor.

In this paper we used three frequencies and four phase shifted sinusoids for each frequency to perform the distance measurements. Consequently, 12 images were acquired to perform a distance measurement, which takes approximately 100 ms using the current system. In dynamic situations where the camera or scene is moving, this may be too long to avoid motion artefacts. One solution may be to use only three phase shifted sinusoids at the cost of higher phase uncertainty, or reduce the frequency schedule to only contain the base frequency and a higher frequency. The higher the frequency, the more accurate the distance estimate will be if the phase uncertainty permits to unwrap the signal. The minimum number of exposures that are required is 3 if only the base frequency is used, or 6 if another higher frequency is also used. If high precision estimates are required, and very reliable phase unwrapping, more frequencies can be used.

The backscatter primarily affects the lower frequencies and the rightmost pixels. This is because camera rays corresponding to pixels on the right cross the whole projector illumination volume before hitting a target. Consequently, the backscattered light will be different for the different shifted sinusoids for low-frequency signals. For higher-frequency signals, the effect cancels out because a camera ray crosses multiple peaks of the projected signal. The forward scatter is caused by a large PSF which is convolved with the projected signal. Boundary effects are the major contributor to the phase deviations we observe. The unsharp-filter can adjust for most of the forward scatter effect even at high turbidities.

We compared the proposed method with a standard GCPS method without error correction. It may be that using the error correction of the Gray codes could improve the GCPS results somewhat compared to the results reported here. We find that MFPS provides more accurate and precise results across turbidities and contains less phase unwrap errors due to the removal of the scattering effects. The results for both GCPS and MFPS are computed using the same number of patterns. While GCPS is fast and only rely on pixel-by-pixel computations, the scatter corrected MFPS is slower because it requires the application of a large convolving filter in the un-sharp filtering procedure and that the solution of Equation (5) is not as trivial as computing the Gray code. On a standard laptop running Matlab with non-optimized code, the Gray code method runs in 0.6 s, the standard MFPS runs in 2.6 s while the forward scatter removal filter adds another 9 s. However, we believe that a clever implementation on the GPU can reduce this gap considerably.

Author Contributions

Conceptualization, P.R. and J.T.; methodology, J.T. and J.T.T. and T.K. and P.R.; software, T.K. and P.R.; validation, P.R. and T.K.; formal analysis, P.R.; data curation, T.K. and P.R.; writing—original draft preparation, P.R.; writing—review and editing, P.R. and J.T.T. and J.T. and T.K.

Funding

This research was funded by the Norwegian Research Council (grant number 280934), Equinor, FMC Technologies, IKM and Oceaneering.

Acknowledgments

The work is carried out in the NRC funded project SEAVENTION (

www.sintef.no/SEAVENTION). The authors acknowledge the valuable input from all project partners: Equinor, Norwegian University of Science and Technology, FMC Technologies, IKM and Oceaneering.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Calibration

Air-based structured light systems can typically be calibrated in essentially a similar way as traditional stereo-systems [

26]. However, the validity of the pin-hole camera model in water is questionable because of the air-water refraction and more complex models are required to establish an accurate calibration model [

27]. Since the focus of this paper is on the methodology for accurately estimating the phase-shift in scattering media, we use a simplified calibration methodology [

28] which provides a metric distance map, but not a Euclidean point cloud. However, any calibration designed for underwater structured light systems can be used to convert the phase-maps to Euclidean point clouds.

Assume we have a camera-projector base line

and a distance

L to a reference plane, as shown in

Figure A1. Using simple trigonometry, we find the following relationships:

, or

where

Z is the height over the reference plane. Simplifying the relationship leads to:

where we exchange the distance shift

d with the estimated phase-shift

.

Figure A1.

Overview of the calibration setup. The calibration uses the relative phase-shift between a reference plane and the object to compute the height Z.

Figure A1.

Overview of the calibration setup. The calibration uses the relative phase-shift between a reference plane and the object to compute the height Z.

Appendix B. Attenuation Measurements

The attenuation of light in water is determined by the attenuation coefficient k in , Where and are the intensity of a light source at distance 0 and z. It is difficult to measure directly, so instead we measure the log intensity at different distances and find the zero crossing which is an estimate of . Consequently, by measuring at distance z for a new turbidity we can determine the attenuation coefficient by .

References

- dos Santos Cesar, D.B.; Gaudig, C.; Fritsche, M.; dos Reis, M.A.; Kirchner, F. An evaluation of artificial fiducial markers in underwater environments. In Proceedings of the OCEANS 2015—Genova, Genoa, Italy, 18–21 May 2015; pp. 1–6. [Google Scholar]

- Serikawa, S.; Lu, H. Underwater image dehazing using joint trilateral filter. Comput. Electr. Eng. 2014, 40, 41–50. [Google Scholar] [CrossRef]

- Emberton, S.; Chittka, L.; Cavallaro, A. Underwater image and video dehazing with pure haze region segmentation. Comput. Vis. Image Underst. 2018, 168, 145–156. [Google Scholar] [CrossRef] [Green Version]

- Murez, Z.; Treibitz, T.; Ramamoorthi, R.; Kriegman, D. Photometric Stereo in a Scattering Medium. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1880–1891. [Google Scholar] [CrossRef] [PubMed]

- Negahdaripour, S.; Sarafraz, A. Improving Stereo Correspondence in Scattering Media by Incorporating Backscatter Cue. IEEE Trans. Image Process. 2014, 23, 5743–5755. [Google Scholar] [CrossRef] [PubMed]

- Fujimura, Y.; Iiyama, M.; Hashimoto, A.; Minoh, M. Photometric Stereo in Participating Media Considering Shape-Dependent Forward Scatter. In Proceedings of the 2018 Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7445–7453. [Google Scholar]

- Telem, G.; Filin, S. Photogrammetric modeling of underwater environments. ISPRS J. Photogramm. Remote Sens. 2010, 65, 433–444. [Google Scholar] [CrossRef]

- Leone, A.; Diraco, G.; Distante, C. Stereoscopic System for 3-D Seabed Mosaic Reconstruction. In Proceedings of the 2007 IEEE International Conference on Image Processing, San Antonio, TX, USA, 16 September–19 October 2007; pp. 541–544. [Google Scholar]

- Brown, M.Z.; Burschka, D.; Hager, G.D. Advances in computational stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 993–1008. [Google Scholar] [CrossRef] [Green Version]

- Jaffe, J. Computer Modeling and the Design of Optimal Underwater Imaging Systems. IEEE J. Ocean. Eng. 1990, 15, 101–111. [Google Scholar] [CrossRef]

- Hildebrandt, M.; Kerdels, J.; Albiez, J.; Kirchner, F. A practical underwater 3D-Laserscanner. In Proceedings of the OCEANS 2008, Quebec City, QC, Canada, 15–18 September 2008; pp. 1–5. [Google Scholar]

- Prats, M.; Fernandez, J.J.; Sanz, P.J. An approach for semi-autonomous recovery of unknown objects in underwater environments. In Proceedings of the 2012 13th International Conference on Optimization of Electrical and Electronic Equipment (OPTIM), Brasov, Romania, 24–26 May 2012; pp. 1452–1457. [Google Scholar]

- Moore, K.D.; Jaffe, J.S.; Ochoa, B.L. Development of a New Underwater Bathymetric Laser Imaging System: L-Bath. J. Atmos. Ocean. Technol. 2000, 17, 1106–1117. [Google Scholar] [CrossRef]

- Dalgleish, F.; Caimi, F.; Britton, W.; Andren, C. Improved LLS imaging performance in scattering-dominant waters. In Ocean Sensing and Monitoring; International Society for Optics and Photonics: Orlando, FL, USA, 2009; Volume 7317. [Google Scholar]

- Risholm, P.; Thorstensen, J.; Thielemann, J.T.; Kaspersen, K.; Tschudi, J.; Yates, C.; Softley, C.; Abrosimov, I.; Alexander, J.; Haugholt, K.H. Real-time super-resolved 3D in turbid water using a fast range-gated CMOS camera. Appl. Opt. 2018, 57, 3927–3937. [Google Scholar] [CrossRef] [PubMed]

- McLeod, D.; Jacobson, J.; Hardy, M.; Embry, C. Autonomous inspection using an underwater 3D LiDAR. In Proceedings of the 2013 OCEANS—San Diego, San Diego, CA, USA, 23–27 September 2013; pp. 1–8. [Google Scholar]

- Tan, C.; Seet, G.; Sluzek, A.; He, D. A novel application of range-gated underwater laser imaging system (ULIS) in near-target turbid medium. Opt. Lasers Eng. 2005, 43, 995–1009. [Google Scholar] [CrossRef]

- Salvi, J.; Fernandez, S.; Pribanic, T.; Llado, X. A state of the art in structured light patterns for surface profilometry. Pattern Recognit. 2010, 43, 2666–2680. [Google Scholar] [CrossRef]

- Tornblom, N. Underwater 3D Surface Scanning Using Structured Light; Uppsala University: Uppsala, Sweden, 2010. [Google Scholar]

- Zhang, Q.; Wang, Q.; Hou, Z.; Liu, Y.; Su, X. Three-dimensional shape measurement for an underwater object based on two-dimensional grating pattern projection. Opt. Laser Technol. 2011, 43, 801–805. [Google Scholar] [CrossRef]

- Sansoni, G.; Carocci, M.; Rodella, R. Three-dimensional vision based on a combination of gray-code and phase-shift light projection: Analysis and compensation of the systematic errors. Appl. Opt. 1999, 38, 6565–6573. [Google Scholar] [CrossRef] [PubMed]

- Bouquet, G.; Thorstensen, J.; Bakke, K.A.H.; Risholm, P. Design tool for TOF and SL based 3D cameras. Opt. Express 2017, 25, 27758–27769. [Google Scholar] [CrossRef] [PubMed]

- Risholm, P.; Kirkhus, T.; Thielemann, J. High-resolution structured light 3D sensor for autonomous underwater inspection. In Proceedings of the OCEANS, Charleston, SC, USA, 20–26 October 2018. [Google Scholar]

- Pribanic, T.; Dzapo, H.; Joaquim, S. Efficient and low-cost 3D structured light system based on a modified number-theoretic approach. EURASIP J. Adv. Signal Process. 2010, 2010, 474389. [Google Scholar] [CrossRef]

- Thorstensen, J.; Thielemann, J.T. Adaptive multi-frequency phase stepping for optimal 3D depth reconstruction. In Imaging and Applied Optics; The optical Society: Orlando, FL, USA, 2018. [Google Scholar]

- Huang, P.S. Novel method for structured light system calibration. Opt. Eng. 2006, 45, 083601. [Google Scholar] [CrossRef]

- Shortis, M. Calibration Techniques for Accurate Measurements by Underwater Camera Systems. Sensors 2015, 15, 30810–30826. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Geng, J. Structured-light 3D surface imaging: A tutorial. Adv. Opt. Photonics 2011, 3, 128–160. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).