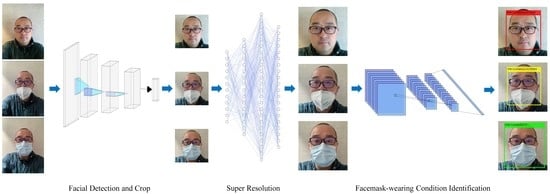

This section describes the technology behind SRCNet and facemask-wearing condition identification, including the proposed algorithm, image pre-processing, facial detection and cropping, the SR network, the facemask-wearing condition identification network, datasets, and training details. Facemask-wearing condition identification is a three-category classification problem, with the categories no facemask-wearing (NFW), incorrect facemask-wearing (IFW), and correct facemask-wearing (CFW). Our goal is to form a facemask-wearing condition identification function, FWI(x), which takes an unprocessed image as input and outputs the facemask-wearing condition of every face in the image.

3.4. SR Network

The first stage of SRCNet is the SR network. The cropped facial images vary widely in size, which could reduce the final identification accuracy of SRCNet. Hence, SR is applied before classification. The structure of the SR network was inspired by RED [17], which uses convolutional layers as an auto-encoder and deconvolutional layers for image up-sampling. Symmetric skip connections were also applied to preserve image details. The detailed architectural information of the SR network is shown in Figure 4.

The SR network has five convolutional layers and six deconvolutional layers. Except for the final deconvolutional layer, all deconvolutional layers are connected to their corresponding convolutional layers by skip connections. With skip connections, the information is propagated from convolutional feature maps to the corresponding deconvolutional layers and from input to output. The network is then fitted by solving the residual of the problem, which is denoted as

$$F(I_i) = G_i - I_i,$$

where $G_i$ is the ground truth, $I_i$ is the input image, and $F(I_i)$ is the function of the SR network for the $i$-th image.

In the convolutional layers, the number of kernels doubles from one layer to the next. After the first convolutional layer, which performs feature extraction, every subsequent convolutional layer (kernel size 4 × 4, stride 2) halves the size of the feature maps. Hence, the convolutional layers act as an auto-encoder and extract features from the input image.

In the deconvolutional layers, the number of output feature maps mirrors that of the corresponding convolutional layers, as required by the skip connections. The number of kernels is halved in every deconvolutional layer (except for the final deconvolutional layer), while, with kernel size 4 × 4 and stride 2, the size of the feature maps doubles. After information combination in the final deconvolutional layer, the output is an image with the same size as the input image. The deconvolutional layers act as a decoder, which takes the output of the encoder as input and up-samples it to obtain a super-resolution image.
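The halving and doubling of feature-map sizes can be checked with the standard output-size formulas for strided and transposed convolutions. The following is a minimal sketch, not the authors' implementation: the padding of 1 and the count of four stride-2 layers on each side are assumptions chosen so that a 4 × 4 kernel with stride 2 exactly halves or doubles the size, and the function names are hypothetical.

```python
def conv_out(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Spatial output size of a convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Spatial output size of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def trace_sizes(size: int, n_strided: int = 4) -> tuple[list[int], list[int]]:
    """Sizes after each stride-2 encoder layer, then after each stride-2 decoder layer."""
    encoder = []
    for _ in range(n_strided):  # assumed number of down-sampling layers
        size = conv_out(size)
        encoder.append(size)
    decoder = []
    for _ in range(n_strided):  # mirrored up-sampling layers
        size = deconv_out(size)
        decoder.append(size)
    return encoder, decoder


encoder_sizes, decoder_sizes = trace_sizes(224)
print(encoder_sizes)  # [112, 56, 28, 14]
print(decoder_sizes)  # [28, 56, 112, 224]
```

Under these assumptions the decoder returns exactly to the 224-pixel input size, which is what allows each deconvolutional feature map to be combined with its mirrored convolutional feature map.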

It is worth mentioning that down- and up-sampling are performed by the stride of the convolutional and deconvolutional layers, rather than by pooling and un-pooling layers, as the aim of the SR network is to restore image details rather than to learn abstractions (pooling and un-pooling layers damage the details of images and deteriorate the restoration performance [17]).

The function of the final deconvolutional layer is to combine all the information from the previous deconvolutional layer and the input image and to normalize all pixels to [0, 1] as the output. The stride of the final deconvolutional layer was set to 1, for information combination without up-sampling. The activation function of the final deconvolutional layer is the Clipped Rectified Linear Unit, which forces normalization of the output and avoids errors in computing the loss. The definition of the Clipped Rectified Linear Unit is as follows:

$$\mathrm{ClippedReLU}(x) = \min(1, \max(0, x)),$$

where $x$ is the input value.
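As an illustration of how this activation keeps the output in [0, 1], here is a minimal sketch in plain Python. The function name and the `ceiling` parameter are hypothetical; the ceiling of 1 follows from the stated output range.

```python
def clipped_relu(x: float, ceiling: float = 1.0) -> float:
    """Clamp x to [0, ceiling]: 0 below zero, identity in between, ceiling above."""
    return min(ceiling, max(0.0, x))


values = [-0.3, 0.0, 0.42, 1.0, 1.7]
print([clipped_relu(v) for v in values])  # [0.0, 0.0, 0.42, 1.0, 1.0]
```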

One main difference between our model and RED is the change in the activation functions, from a Rectified Linear Unit (ReLU) to a Leaky Rectified Linear Unit (LeakyReLU) for all convolutional and deconvolutional layers except the final deconvolutional layer, which uses the Clipped Rectified Linear Unit as the activation function to limit values to the range [0, 1]. Previous studies have shown that different activation functions have an impact on the final performance of a CNN [67,68]. Hence, the change in the activation functions contributed to the better image restoration by the SR network. The ReLU and LeakyReLU are defined as follows:

$$\mathrm{ReLU}(x) = \max(0, x),$$

$$\mathrm{LeakyReLU}(x) = \max(0, x) + \alpha \min(0, x),$$

where $x$ is the input value and $\alpha$ is a scale factor.

The reason for this change was that the skip connections propagate the image from input to output. An SR network must therefore be able to both add to and subtract from pixel values, whereas the ReLU function can only add values to feature maps. The LeakyReLU function, however, passes negative values (scaled by the scale factor), thus improving the performance of the network.
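The difference between the two activations for negative inputs can be seen in a small sketch. The scale factor of 0.01 is an illustrative assumption, not a value taken from the paper, and the function names are hypothetical.

```python
def relu(x: float) -> float:
    """Zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Identity for positive inputs; negative inputs are scaled by alpha (assumed value)."""
    return max(0.0, x) + alpha * min(0.0, x)


pre_activation = -2.0
print(relu(pre_activation))        # 0.0: the negative correction is lost
print(leaky_relu(pre_activation))  # a small negative value survives
```

With ReLU, a layer can never contribute a negative correction to the image carried by the skip connections; with LeakyReLU, a scaled negative value is still passed on.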

Another difference is the density of skip connections. Rather than using skip connections every few (e.g., two in RED) layers from convolutional layers to their symmetrical deconvolutional feature maps, the density of skip connections was increased so that all convolutional layers were connected to their mirrored deconvolutional layers. This causes all layers to learn to solve the residual problem, which reduces the loss of information between layers without significantly increasing the number of network parameters.

The goal of SR network training is to update all learnable parameters to minimize the loss. For SR networks, the mean squared error (MSE) is widely used as the loss function [17,27,34,35]. A regularization term (weight decay) for the weights is added to the MSE loss to reduce overfitting. The MSE with $L_2$ regularization was applied as the loss function $L_{SR}$, which is defined as

$$L_{SR} = \frac{1}{N}\sum_{i=1}^{N} \lVert G_i - O_i \rVert_2^2 + \lambda \lVert w \rVert_2^2,$$

where $G_i$ is the ground truth, $O_i$ is the output image, $w$ denotes the learnable weights, $\lambda$ is the weight-decay coefficient, and $L_{SR}$ is the loss for a collection of $N$ given image pairs.
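A loss of this kind, MSE plus a weight-decay penalty, can be sketched in a few lines. This is an illustration under assumptions: images are flattened to lists of pixel values, the MSE is averaged over all pixels, and the penalty is the weight-decay coefficient times the sum of squared weights. The exact normalization used by the authors is not recoverable here, and all names are hypothetical.

```python
def mse(ground_truth: list[float], output: list[float]) -> float:
    """Mean squared error between two equally sized pixel lists."""
    if len(ground_truth) != len(output):
        raise ValueError("ground truth and output must have the same length")
    return sum((g - o) ** 2 for g, o in zip(ground_truth, output)) / len(ground_truth)


def l2_penalty(weights: list[float], weight_decay: float) -> float:
    """Weight-decay term: coefficient times the sum of squared weights."""
    return weight_decay * sum(w * w for w in weights)


def sr_loss(ground_truth: list[float], output: list[float],
            weights: list[float], weight_decay: float) -> float:
    """MSE data term plus L2 regularization term."""
    return mse(ground_truth, output) + l2_penalty(weights, weight_decay)


ground_truth = [0.0, 0.5, 1.0, 0.5]   # toy pixel values in [0, 1]
output = [0.0, 0.5, 0.5, 0.0]
weights = [1.0, -2.0]                 # toy network weights

print(sr_loss(ground_truth, output, weights, weight_decay=0.0))   # 0.125
print(sr_loss(ground_truth, output, weights, weight_decay=0.01))  # data term plus penalty
```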

It is worth mentioning that the size of the input image can be arbitrary and that the output image has the same size as the input image. The convolutional and deconvolutional layers of the SR network are symmetric. Furthermore, the network predicts pixel-wise. For better detail enhancement, we chose a fixed input size for SR network training: the input images were resized to 224 × 224 × 3 with bicubic interpolation (the same as the input size of the facemask-wearing condition identification network). The output of the SR network is an enhanced image with the same size as the input (224 × 224 × 3), which is sent directly to the facemask-wearing condition identification network for classification.

3.5. Facemask-Wearing Condition Identification Network

The second stage of SRCNet is facemask-wearing condition identification. As CNNs are one of the most common types of network for image classification and perform well in facial recognition, a CNN was adopted for the facemask-wearing condition identification network in the second stage of SRCNet. The goal was to form a function G(FI), where FI is the input face image, which outputs the probabilities of the three categories (i.e., NFW, IFW, and CFW). The classifier then outputs the classification result based on the output probabilities.

MobileNet-v2, a lightweight CNN that can achieve high accuracy in image classification, was applied as the facemask-wearing condition identification network. The main features of MobileNet-v2 are residual blocks and depthwise separable convolution [42,43]. The residual blocks contribute to the training of the deep network, addressing the gradient vanishing problem by back-propagating the gradient to the bottom layers. As for facemask-wearing condition identification, there are only slight differences between IFW and CFW. Hence, the capability of feature extraction and the depth of the network are essential, contributing to the final identification accuracy. Depthwise separable convolution reduces computation and model size while maintaining the final classification accuracy; it splits a standard convolution into two layers: one layer for filtering and another layer for combining.
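The saving from splitting a convolution into a filtering layer and a combining layer can be illustrated by counting weights. The sketch below compares a standard convolution with a depthwise (one filter per input channel) plus pointwise (1 × 1) pair; the layer sizes are arbitrary examples rather than MobileNet-v2 values, biases are ignored, and the function names are hypothetical.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k layer plus a pointwise 1 x 1 layer (biases ignored)."""
    depthwise = kernel * kernel * c_in  # filtering: one k x k filter per input channel
    pointwise = c_in * c_out            # combining: 1 x 1 convolution across channels
    return depthwise + pointwise


standard = standard_conv_params(3, 32, 64)
separable = depthwise_separable_params(3, 32, 64)
print(standard, separable, round(standard / separable, 1))  # 18432 2336 7.9
```

For this example layer, the separable form needs roughly one eighth of the weights of the standard convolution.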

Transfer learning, a kind of knowledge migration between the source and target domains, is applied in the network training procedure. The network is trained in three steps: initialization, forming a general facial recognition model, and knowledge transfer to facemask-wearing condition identification. The first step is initialization, which contributes to the final identification accuracy and training speed [38,69]. Then, a general facial recognition model is formed using a large facial image dataset, where the network gains the capability of facial feature extraction. After seeing millions of faces, the network concentrates on facial information, rather than on the interference from backgrounds and the differences caused by image shooting parameters. The final step is knowledge transfer between facial recognition and facemask-wearing condition identification. The final fully connected layer is modified to match the three categories of facemask-wearing condition identification.

The reason for adopting transfer learning was the considerable difference in data volumes. The facemask-wearing condition identification dataset is relatively small compared to general facial recognition datasets, which may cause overfitting and reduce identification accuracy if the network is trained on it alone. Hence, the network first gains knowledge about faces during general facial recognition model training, which reduces overfitting and improves accuracy.

The final stage of the classifier is the softmax function, which calculates the probabilities of all classes using the outputs of its direct ancestor (i.e., fully connected layer neurons) [70]. The definition is:

$$p_i = \frac{\exp(q_i)}{\sum_{j=1}^{K} \exp(q_j)},$$

where $q_i$ is the total input received by unit $i$, $K$ is the number of classes, and $p_i$ is the prediction probability of the image belonging to class $i$.
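A softmax over the three class scores can be sketched as follows. The input scores are hypothetical, and subtracting the maximum before exponentiating is a common numerical-stability step that is added here, not something described in the paper.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert unit inputs into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(q - shift) for q in logits]
    total = sum(exps)
    return [e / total for e in exps]


CLASSES = ("NFW", "IFW", "CFW")
probs = softmax([0.2, 1.1, 3.0])  # hypothetical fully connected layer outputs
predicted = CLASSES[probs.index(max(probs))]
print(predicted)  # CFW
```

The classifier's decision is simply the class with the largest probability.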

The training goal of the facemask-wearing condition identification network was to minimize the cross-entropy loss with weight decay. For image classification, cross-entropy is widely used as the loss function [71,72]. A regularization term (weight decay) helps to reduce overfitting. Hence, cross-entropy with $L_2$ regularization was applied as the loss function $L_{CE}$, defined as

$$L_{CE} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{K} t_{ij} \ln y_{ij} + \lambda \lVert w \rVert_2^2.$$

For the cross-entropy term, $N$ is the number of samples, $K$ is the number of classes, $t_{ij}$ is the indicator that the $i$-th sample belongs to the $j$-th class (which is 1 when labels correspond and 0 when they are different), and $y_{ij}$ is the output for sample $i$ for class $j$, which is the output value of the softmax layer. For the regularization term, $w$ is the learned parameters in every learned layer and $\lambda$ is the regularization factor (coefficient).
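The loss described here, cross-entropy over one-hot labels plus a weight-decay penalty, can be sketched as below. This is an illustration under assumptions: the cross-entropy is averaged over samples, the penalty is the regularization factor times the sum of squared weights, and all names and toy values are hypothetical.

```python
import math


def cross_entropy_l2(targets: list[list[int]], probs: list[list[float]],
                     weights: list[float], weight_decay: float) -> float:
    """Mean cross-entropy over samples plus an L2 penalty on the weights."""
    n_samples = len(targets)
    ce = 0.0
    for t_row, y_row in zip(targets, probs):
        for t, y in zip(t_row, y_row):
            if t:  # only the true class contributes (indicator is 1)
                ce -= math.log(y)
    return ce / n_samples + weight_decay * sum(w * w for w in weights)


# Two toy samples over the classes (NFW, IFW, CFW).
targets = [[0, 0, 1], [1, 0, 0]]
probs = [[0.1, 0.2, 0.7], [0.5, 0.3, 0.2]]  # softmax outputs
weights = [1.0, -2.0]

loss_plain = cross_entropy_l2(targets, probs, weights, weight_decay=0.0)
loss_regularized = cross_entropy_l2(targets, probs, weights, weight_decay=0.01)
print(loss_plain, loss_regularized)
```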

3.6. Datasets

Different facial image datasets were used to train the different networks, in order to improve the generalization ability of SRCNet. The public facial image dataset CelebA was processed and used for SR network training [73]. As the goal of the SR network was detail enhancement, a large and high-resolution facial image dataset was needed; CelebA met these requirements.

The processing of CelebA included three steps: Image pre-processing, facial detection and cropping, and image selection. All raw images were pre-processed as mentioned above. The facial areas were then detected by the multitask cascaded convolutional neural network and cropped for training, as the SR network was designed for restoring detailed information of faces rather than the background. Cropped images that were smaller than 224 × 224 (i.e., the input size of the facemask-wearing condition identification network) or non-RGB images were discarded automatically. All other cropped facial images were inspected manually and images with blur or dense noise were also discarded. Finally, 70,534 high-resolution facial images were split into a training dataset (90%) and a testing dataset (10%) and were adopted for SR network training and testing.

Training of the facemask-wearing condition identification network comprised three steps, each using a different dataset. For initialization, the goal was generalization. A large-scale classification dataset was needed for better generalization and, so, the ImageNet dataset was adopted for network initialization [13]. During this procedure, non-zero values were assigned to parameters, which increased the generalization ability. Furthermore, proper initialization significantly improves the training speed and provides a better starting point for the general facial recognition model.

The general facial recognition model was trained with a large-scale facial recognition database, the CASIA WebFace facial dataset [74]. All images were screened manually and those containing insufficient subjects or with poor image quality were discarded [74]. Finally, the large-scale facial recognition dataset contained 493,750 images with 10,562 subjects, which was split into a training dataset (90%) and a testing dataset (10%). The training set was applied for general facial recognition model training.

The public facemask-wearing condition dataset Medical Masks Dataset (https://www.kaggle.com/vtech6/medical-masks-dataset) was applied for fine-tuning the network, in order to transfer knowledge from general facial recognition to facemask-wearing condition identification. The 2D RGB images were taken in uncontrolled environments, and all faces in the dataset were annotated with position coordinates and facemask-wearing condition labels. The Medical Masks Dataset was processed in four steps: facial cropping and labeling, label confirmation, image pre-processing, and SR. All faces were cropped and labeled using the given position coordinates and labels. All cropped facial images were then screened manually and those with incorrect labels were discarded. Then, the confirmed facial images were pre-processed using the methods mentioned in Section 3.2. To improve the final accuracy of SRCNet, the dataset was expanded for the case of not wearing a mask. The resolution of the pre-processed images varied, as shown in Table 1. For the facemask-wearing condition identification network to be accurate, the facial image must contain enough details. Hence, the SR network was applied to add details to low-quality images. Images of sizes no larger than 150 × 150 (i.e., width or length no more than 150) were processed using the SR network. Finally, the dataset contained 671 images of NFW, 134 images of IFW, and 3030 images of CFW. The whole dataset was separated into a training dataset (80%) and a testing dataset (20%) for facemask-wearing condition identification network training and testing.
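The size rule for applying SR and the arithmetic of the 80/20 split can be written out as a short sketch. The function name is hypothetical, and the split sizes are only what the stated percentages imply with integer rounding; the paper does not report the exact counts or whether the split was stratified by class.

```python
def needs_super_resolution(width: int, height: int, threshold: int = 150) -> bool:
    """True when either side of the cropped face is no more than `threshold` pixels."""
    return width <= threshold or height <= threshold


CLASS_COUNTS = {"NFW": 671, "IFW": 134, "CFW": 3030}

total = sum(CLASS_COUNTS.values())  # all images across the three classes
train_size = total * 80 // 100      # 80% for training (integer rounding assumed)
test_size = total - train_size      # remaining 20% for testing

print(needs_super_resolution(120, 300))  # True
print(needs_super_resolution(224, 224))  # False
print(total, train_size, test_size)      # 3835 3068 767
```

The counts also make the class imbalance visible: IFW accounts for only 134 of the 3835 images.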

3.7. Training Details

The training of SRCNet contained two main steps: SR network training and facemask-wearing condition identification network training.

For SR network training, the goal was to restore facial details, for which the training set of CelebA was used. Based on the characteristics of the Medical Masks Dataset, the input images were pre-processed to imitate its low-quality images. The high-resolution processed images in CelebA were first filtered with a Gaussian filter with a kernel size of 5 × 5 and a standard deviation of 10. Then, they were down-sampled to 112 × 112. As the input and output sizes of the SR network are the same, the down-sampled images were then up-sampled to 224 × 224 with bicubic interpolation and used as input, matching the input size of the facemask-wearing condition identification network. Adam was adopted as the optimizer, with […], […], and […] [75]. The network was trained for 200 epochs with an initial learning rate of […] and with a learning rate dropping factor of 0.9 every 20 epochs. The mini-batch size was 48.
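To see what the Gaussian filter in this degradation step looks like, the kernel can be built directly. The sketch below assumes the kernel is sampled at integer pixel offsets and normalized to sum to 1, as image-processing libraries commonly do; the function name is hypothetical.

```python
import math


def gaussian_kernel(size: int = 5, sigma: float = 10.0) -> list[list[float]]:
    """Normalized 2D Gaussian kernel sampled at integer offsets from the center."""
    half = size // 2
    raw = [
        [math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma))
         for dx in range(-half, half + 1)]
        for dy in range(-half, half + 1)
    ]
    total = sum(sum(row) for row in raw)
    return [[value / total for value in row] for row in raw]


kernel = gaussian_kernel()
corner_to_center = kernel[0][0] / kernel[2][2]
print(round(corner_to_center, 3))  # 0.961
```

Because the standard deviation (10) is large relative to the 5 × 5 window, the corner weight is about 96% of the center weight, so under these assumptions the filter behaves almost like a uniform 5 × 5 averaging blur.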

The first step of facemask-wearing condition identification network training was initialization. The network was trained using the ImageNet dataset, with the training parameters proposed in [43].

The second step was to form a general facial recognition model. The output classes were modified to match the number of subjects (10,562). The weights and biases in the final modified fully connected layer were initialized using a normal distribution with 0 mean and 0.01 standard deviation. The network was trained for 50 epochs, with the training dataset shuffled in every epoch. To increase the training speed, the learning rate was multiplied by 0.9 every 6 epochs, starting from an initial learning rate of […], which kept the loss from plateauing. The network was trained using Adam as the optimizer, with […], […], […], and […] weight decay for $L_2$ regularization, in order to avoid overfitting [75].
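The step schedules described in this section (a factor of 0.9 every 20 epochs over 200 epochs for the SR network, and every 6 epochs over 50 epochs for the general facial recognition model) can be sketched as a single function. The function name is hypothetical, epochs are counted from 0, and an initial rate of 1.0 is used so that the output is the relative decay only, independent of the actual initial learning rates.

```python
def step_decay_lr(initial_lr: float, epoch: int,
                  drop_factor: float = 0.9, drop_period: int = 6) -> float:
    """Learning rate during `epoch` (0-based) under a piecewise-constant step schedule."""
    return initial_lr * drop_factor ** (epoch // drop_period)


# Relative learning rate in the last epoch of each training run.
sr_final = step_decay_lr(1.0, epoch=199, drop_period=20)   # 9 drops in 200 epochs
face_final = step_decay_lr(1.0, epoch=49, drop_period=6)   # 8 drops in 50 epochs

print(round(sr_final, 4))    # 0.3874
print(round(face_final, 4))  # 0.4305
```

Under this reading, both runs finish at roughly 40% of their initial learning rate.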

Transfer learning was applied for fine-tuning the facemask-wearing condition identification network, where the final fully connected layer and classifier were modified to match the classes (NFW, IFW, and CFW). The weights and biases in the final modified layer were initialized by independently sampling from a normal distribution with zero mean and 0.01 standard deviation, which produced superior results compared to other initializers. Adam was chosen as the optimizer, while the learning rate was set as […]. To avoid overfitting, a […] weight decay for $L_2$ regularization was also applied [75]. The batch size was set to 16 and the network was trained for 8 epochs in total. Grid search was applied to find the best combination of all the parameters mentioned above, in order to improve the performance of the facemask-wearing condition identification network.

Data augmentation can reduce overfitting and contribute to the final accuracy of the network [36,52,76]. To train the general facial recognition network, the training images were randomly rotated in a range of 10° (in a normal distribution), shifted vertically and horizontally in a range of 8 pixels, and horizontally flipped in every epoch. During the fine-tuning stage, the augmentation was milder, with rotation within 6° (in a normal distribution), shifting by up to 4 pixels (vertically and horizontally), and a random horizontal flip in every epoch.
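Sampling one set of augmentation parameters per image might look like the sketch below. Several details are assumptions, since the text does not pin them down: the rotation is drawn from a zero-mean normal distribution with a standard deviation of one third of the stated range and clamped to that range, shifts are uniform integers, and the flip probability is 0.5. All names are hypothetical.

```python
import random


def sample_augmentation(max_rotation_deg: float, max_shift_px: int,
                        rng: random.Random) -> dict:
    """Draw one random rotation, shift, and flip setting."""
    # Assumed: normal distribution with sigma = range / 3, clamped to the range.
    angle = rng.gauss(0.0, max_rotation_deg / 3.0)
    angle = max(-max_rotation_deg, min(max_rotation_deg, angle))
    return {
        "rotation_deg": angle,
        "shift_x": rng.randint(-max_shift_px, max_shift_px),
        "shift_y": rng.randint(-max_shift_px, max_shift_px),
        "flip_horizontal": rng.random() < 0.5,  # assumed flip probability
    }


rng = random.Random(0)  # fixed seed for reproducibility
pretrain_params = sample_augmentation(10.0, 8, rng)  # general facial recognition stage
finetune_params = sample_augmentation(6.0, 4, rng)   # milder fine-tuning stage
print(pretrain_params)
print(finetune_params)
```

Drawing fresh parameters in every epoch means the network rarely sees exactly the same image twice, which is the mechanism by which augmentation reduces overfitting.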