Detailed Land Cover Mapping from Multitemporal Landsat-8 Data of Different Cloud Cover

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Landsat-8 Annual Datasets

2.3. Land Cover Classes and Reference Data

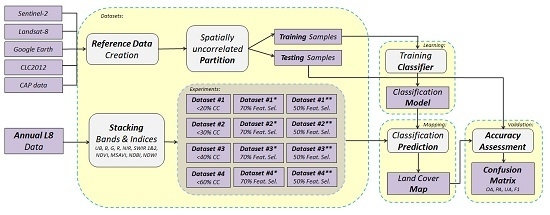

2.4. Land Cover Mapping Framework

2.4.1. Classification Features

2.4.2. Training and Testing Areas

2.4.3. Experimental Setup

3. Experimental Results and Discussion

3.1. Comparing the Performance of the SVM and CNN Frameworks

3.2. Assessing the Influence of Different Cloud Cover Conditions

3.3. Assessing the Influence of Feature Selection

3.4. Assessing the Contribution of Spectral Features

3.5. Per-Class Analysis, Validation and Discussion

3.5.1. Per-Class Quantitative Assessment

3.5.2. Discussing Classification Errors in Challenging Cases

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References


| Image # | LCC | Date of Acquisition | Season | LCC Limit | Datasets |
|---|---|---|---|---|---|
| 1 | 1.19% | 8/1/2016 * | Winter | <20% | 1, 2, 3, 4 |
| 2 | 15.23% | 24/1/2016 | Winter | <20% | 1, 2, 3, 4 |
| 3 | 10.24% | 13/4/2016 * | Spring | <20% | 1, 2, 3, 4 |
| 4 | 0.05% | 31/5/2016 * | Spring | <20% | 1, 2, 3, 4 |
| 5 | 0.19% | 16/6/2016 * | Summer | <20% | 1, 2, 3, 4 |
| 6 | 10.86% | 2/7/2016 * | Summer | <20% | 1, 2, 3, 4 |
| 7 | 12.99% | 18/7/2016 | Summer | <20% | 1, 2, 3, 4 |
| 8 | 11.46% | 19/8/2016 | Summer | <20% | 1, 2, 3, 4 |
| 9 | 12.36% | 4/9/2016 | Autumn | <20% | 1, 2, 3, 4 |
| 10 | 12.03% | 20/9/2016 | Autumn | <20% | 1, 2, 3, 4 |
| 11 | 0.73% | 9/12/2016 * | Winter | <20% | 1, 2, 3, 4 |
| 12 | 27.11% | 28/3/2016 | Spring | <30% | 2, 3, 4 |
| 13 | 24.14% | 3/8/2016 | Summer | <30% | 2, 3, 4 |
| 14 | 26.92% | 6/10/2016 | Autumn | <30% | 2, 3, 4 |
| 15 | 37.06% | 9/2/2016 | Winter | <40% | 3, 4 |
| 16 | 31.94% | 15/5/2016 | Spring | <40% | 3, 4 |
| 17 | 46.66% | 7/11/2016 | Autumn | <60% | 4 |
| 18 | 58.79% | 25/12/2016 | Winter | <60% | 4 |
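The four datasets are nested by cloud-cover limit: each looser limit adds images to the previous set. The minimal Python sketch below reproduces that grouping from the LCC values in the table above. The figure of 11 features per date is an assumption, inferred from the feature totals reported in the feature selection results (121 features for the 11 dates of Dataset 1, 198 for the 18 dates of Dataset 4); the names `LCC`, `LIMITS` and `build_datasets` are illustrative only.

```python
# LCC (%) per image, copied from the table above (image number -> LCC).
LCC = {
    1: 1.19, 2: 15.23, 3: 10.24, 4: 0.05, 5: 0.19, 6: 10.86,
    7: 12.99, 8: 11.46, 9: 12.36, 10: 12.03, 11: 0.73, 12: 27.11,
    13: 24.14, 14: 26.92, 15: 37.06, 16: 31.94, 17: 46.66, 18: 58.79,
}

# Cloud-cover limit (%) that defines each dataset.
LIMITS = {1: 20.0, 2: 30.0, 3: 40.0, 4: 60.0}

# Assumption: 11 features per date, inferred from 121 features / 11 dates.
FEATURES_PER_DATE = 11


def build_datasets(lcc, limits):
    """Return {dataset id: sorted image numbers whose LCC is below the dataset limit}."""
    return {
        ds: sorted(img for img, cc in lcc.items() if cc < limit)
        for ds, limit in limits.items()
    }


datasets = build_datasets(LCC, LIMITS)
for ds, images in datasets.items():
    print(f"Dataset {ds}: {len(images)} dates, {len(images) * FEATURES_PER_DATE} features")
# Dataset 1: 11 dates, 121 features
# Dataset 2: 14 dates, 154 features
# Dataset 3: 16 dates, 176 features
# Dataset 4: 18 dates, 198 features
```

Because the limits are applied cumulatively, every image in Dataset 1 is also in Datasets 2 to 4, which matches the membership column of the table.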

| Land Cover Nomenclature | Code | Description | Polygons | Training Pixels | Test Pixels |
|---|---|---|---|---|---|
| 1. Dense Urban Fabric | DUF | Land covered by structures in a continuous dense fabric (big cities). | 126 | 3452 | 1332 |
| 2. Sparse Urban Fabric | SUF | Land covered by structures in a discontinuous sparse fabric. | 270 | 2608 | 1739 |
| 3. Industrial Commercial Units | ICU | Artificially surfaced industrial or commercial units. | 128 | 1046 | 697 |
| 4. Road/Asphalt Networks | RAN | Asphalt-sealed areas and networks. | 79 | 558 | 183 |
| 5. Mineral Extraction Sites | MES | Areas of open-pit extraction of construction material or minerals. | 36 | 1898 | 1265 |
| 6. Broad-leaved Forest | BLF | Forest in which broad-leaved species predominate. | 1359 | 15,000 | 10,000 |
| 7. Coniferous Forest | CNF | Forest in which coniferous species predominate. | 938 | 5737 | 3825 |
| 8. Natural Grasslands | NGR | Areas of herbaceous vegetation. | 265 | 445 | 297 |
| 9. Dense Sclerophyllous Vegetation | DSV | Dense bushy sclerophyllous vegetation, including maquis and garrigue. | 502 | 4058 | 2075 |
| 10. Sparse Sclerophyllous Vegetation | SSV | Sparse bushy sclerophyllous vegetation, including maquis and garrigue. | 396 | 5056 | 1233 |
| 11. Moss and Lichen | MNL | High-altitude moorland areas with moss and lichen. | 154 | 1046 | 295 |
| 12. Sparsely Vegetated Areas | SVA | Scattered vegetation on stones, boulders or rubble on steep slopes. | 65 | 767 | 511 |
| 13. Vineyards | VNY | Areas of vine cultivation. | 269 | 836 | 557 |
| 14. Olive Groves | OLG | Olive tree plantations. | 517 | 1048 | 262 |
| 15. Fruit Trees | FRT | Fruit tree plantations, including citrus, apricot, pear, quince, cherry, apple and nut trees. | 579 | 973 | 649 |
| 16. Kiwi Plants | KWP | Areas of kiwi cultivation. | 172 | 479 | 303 |
| 17. Cereals | CRL | Areas of cereal cultivation, including wheat, barley, rye and triticale. | 504 | 1268 | 851 |
| 18. Maize | MAZ | Areas of maize cultivation. | 179 | 351 | 234 |
| 19. Rice Fields | RCF | Areas of rice cultivation (periodically flooded). | 999 | 11,775 | 6483 |
| 20. Potatoes | PTT | Areas of potato cultivation. | 78 | 277 | 185 |
| 21. Grass Fodder | GRF | Areas of grass fodder cultivation, including clover and alfalfa. | 500 | 610 | 407 |
| 22. Greenhouses | GRH | Structures with a glass or translucent plastic roof in which plants are grown. | 96 | 114 | 76 |
| 23. Rocks and Sand | RNS | Bare land consisting of rocks, cliffs, beaches and sand. | 295 | 2331 | 1554 |
| 24. Marshes | MRS | Low-lying land usually flooded by fresh or sea water and partially covered with herbaceous vegetation. | 52 | 7082 | 1833 |
| 25. Water Courses | WCR | Natural or artificial watercourses (rivers and canals). | 67 | 776 | 277 |
| 26. Water Bodies | WBD | Natural or artificial stretches of water (lakes). | 17 | 15,000 | 6567 |
| 27. Coastal Water | CWT | Shallow sea water in coastal areas. | 30 | 3928 | 982 |

| Dataset | Classifier | Average PA | Average UA | Average F1 | OA | Kappa |
|---|---|---|---|---|---|---|
| Dataset #0 (6 dates) | SVM-linear, C = 100 | 68.28% | 68.74% | 66.20% | 68.71% | 67.25% |
| | SVM-RBF, C = 10, g = 1 | 67.63% | 69.50% | 66.29% | 67.36% | 65.83% |
| | CNN | 63.36% | 64.55% | 61.67% | 67.23% | 65.69% |
| Dataset #1 (11 dates) | SVM-linear, C = 10 | 69.01% | 69.67% | 67.04% | 68.66% | 67.21% |
| | SVM-RBF, C = 1000, g = 0.01 | 67.71% | 67.91% | 65.18% | 67.18% | 65.67% |
| | CNN | 63.61% | 66.09% | 62.64% | 69.35% | 67.91% |
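The table reports per-class averages alongside OA and kappa. The minimal sketch below shows how these quantities are conventionally derived from a confusion matrix of pixel counts. It assumes rows hold reference classes and columns hold predictions, and that the Average columns are unweighted means over classes; that assumption is consistent with the average F1 falling below both the average PA and the average UA here. The function name `accuracy_metrics` is hypothetical.

```python
import numpy as np


def accuracy_metrics(cm):
    """Per-class PA, UA and F1, their unweighted averages, OA and Cohen's kappa.

    cm[i, j] = number of test pixels of reference class i assigned to class j
    (rows = reference, columns = classification). Results are in percent.
    A class that is never predicted has a zero column total and yields NaN UA/F1.
    """
    cm = np.asarray(cm, dtype=float)
    diag = np.diag(cm)
    ref_tot = cm.sum(axis=1)            # reference pixels per class (row totals)
    map_tot = cm.sum(axis=0)            # classified pixels per class (column totals)
    n = cm.sum()

    pa = diag / ref_tot                 # producer's accuracy = 1 - omission error
    ua = diag / map_tot                 # user's accuracy = 1 - commission error
    f1 = 2 * pa * ua / (pa + ua)        # harmonic mean of PA and UA

    oa = diag.sum() / n                 # overall accuracy
    pe = (ref_tot @ map_tot) / n**2     # agreement expected by chance
    kappa = (oa - pe) / (1 - pe)        # Cohen's kappa

    return {
        "PA": 100 * pa, "UA": 100 * ua, "F1": 100 * f1,
        "avg_PA": 100 * pa.mean(), "avg_UA": 100 * ua.mean(),
        "avg_F1": 100 * f1.mean(),
        "OA": 100 * oa, "Kappa": 100 * kappa,
    }


# Hypothetical 3-class example (pixel counts, not data from this study).
toy = [[50, 10, 0],
       [5, 40, 5],
       [0, 5, 35]]
m = accuracy_metrics(toy)
print(round(m["OA"], 2), round(m["Kappa"], 2))  # 83.33 74.75
```

With a row-normalised matrix, such as the percentage confusion matrix in this section, the diagonal still gives PA. UA, OA and kappa, however, need the raw pixel counts because column totals depend on class sizes. The per-class F1 values in the feature selection table follow the same harmonic-mean rule, e.g. DUF in Dataset 1 with all features: 2 × 63.0 × 78.6 / (63.0 + 78.6) ≈ 69.9.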

| Dataset | Framework #1 | Framework #2 | p-Value | Statistical Significance |
|---|---|---|---|---|
| Dataset #0 (6 dates) | SVM-linear | SVM-RBF | 0.00002 | Yes, 0.1% |
| | SVM-linear | CNN | 0.00044 | Yes, 0.1% |
| | SVM-RBF | CNN | 0.56414 | No, 5% |
| Dataset #1 (11 dates) | SVM-linear | SVM-RBF | 0.00001 × 10⁻⁶ | Yes, 0.1% |
| | SVM-linear | CNN | 0.01151 | Yes, 5% |
| | SVM-RBF | CNN | 0.46276 | No, 5% |
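The table does not name the test behind these p-values. Assuming it is McNemar's test, the usual choice for comparing two classifiers evaluated on the same test pixels (e.g., Foody, 2004), a minimal sketch with the normal approximation and no continuity correction could look like the following. `mcnemar` is a hypothetical helper, not a library call, and the example data are synthetic.

```python
import math


def mcnemar(ref, pred_a, pred_b):
    """McNemar test for two classifiers on the same test pixels.

    Returns (z, two-sided p-value) with z = (f12 - f21) / sqrt(f12 + f21),
    where f12 counts pixels only A gets right and f21 pixels only B gets right.
    """
    triples = list(zip(ref, pred_a, pred_b))
    f12 = sum(1 for r, a, b in triples if a == r and b != r)  # only A correct
    f21 = sum(1 for r, a, b in triples if a != r and b == r)  # only B correct
    if f12 + f21 == 0:
        return 0.0, 1.0  # the two classifiers never disagree on correctness
    z = (f12 - f21) / math.sqrt(f12 + f21)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value of a standard normal
    return z, p


# Synthetic example: A alone is right on 30 pixels, B alone on 10, both on 60.
ref = [0] * 100
pred_a = [0] * 30 + [1] * 10 + [0] * 60
pred_b = [1] * 30 + [0] * 10 + [0] * 60
z, p = mcnemar(ref, pred_a, pred_b)
print(round(z, 2), round(p, 4))  # 3.16 0.0016
```

Only the pixels on which the two classifiers disagree in correctness enter the statistic, which is why the test suits paired comparisons on a shared test set. In this synthetic case the difference would be significant at the 1% level but not at 0.1%.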

| Class | DUF | SUF | ICU | RAN | MES | BLF | CNF | NGR | DSV | SSV | MNL | SVA | VNY | OLG | FRT | KWP | CRL | MAZ | RCF | PTT | GRF | GRH | RNS | MRS | WCR | WBD | CWT |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dataset with max. F1 | 2 | 1 | 1 | 2 | 1 | 1 | 2 | 2 | 4 | 2 | 1 | 2 | 1 | 2 | 4 | 4 | 3 | 4 | 2 | 2 | 2 | 2 | 1 | 2 | 2 | 1 | 1 |
| F1 (max.) | 73.3 | 77.5 | 62.6 | 65.2 | 60.5 | 91.4 | 76.1 | 43.3 | 41.5 | 52.1 | 41.9 | 42.3 | 73.8 | 49.9 | 51.6 | 91.8 | 92.8 | 78.4 | 99.5 | 80.1 | 50.6 | 70.8 | 53.9 | 62.0 | 76.2 | 99.6 | 97.4 |
| Cloudy pixels | 8.0 | 2.3 | 1.1 | 6.9 | 4.7 | 9.7 | 14.2 | 10.5 | 10.2 | 9.0 | 48.1 | 24.9 | 2.5 | 5.2 | 11.8 | 3.1 | 10.3 | 13.8 | 7.6 | 8.3 | 5.6 | 3.3 | 10.1 | 5.6 | 7.4 | 6.8 | 6.3 |

Column labels: D1 to D4 denote Dataset 1 (11 dates, < 20% cloud cover, CC), Dataset 2 (14 dates, < 30% CC), Dataset 3 (16 dates, < 40% CC) and Dataset 4 (18 dates, < 60% CC). "All" denotes all features (121, 154, 176 and 198 for D1 to D4); "70%" denotes the top 70% of features (85, 107, 123 and 138); "50%" denotes the top 50% of features (60, 77, 88 and 99), where the D3 subset covers only 15 dates * and the D4 subset only 16 dates **.

| Class | Train. Pixels | D1 All PA | D1 All UA | D1 All F1 | D1 70% PA | D1 70% UA | D1 70% F1 | D1 50% PA | D1 50% UA | D1 50% F1 | D2 All PA | D2 All UA | D2 All F1 | D2 70% PA | D2 70% UA | D2 70% F1 | D2 50% PA | D2 50% UA | D2 50% F1 | D3 All PA | D3 All UA | D3 All F1 | D3 70% PA | D3 70% UA | D3 70% F1 | D3 50% PA | D3 50% UA | D3 50% F1 | D4 All PA | D4 All UA | D4 All F1 | D4 70% PA | D4 70% UA | D4 70% F1 | D4 50% PA | D4 50% UA | D4 50% F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DUF | 3452 | 63.0 | 78.6 | 69.9 | 63.1 | 78.2 | 69.8 | 49.3 | 65.1 | 56.1 | 63.4 | 86.9 | 73.3 | 65.8 | 81.1 | 72.6 | 63.0 | 74.8 | 68.4 | 66.8 | 75.8 | 71.0 | 64.0 | 78.5 | 70.5 | 67.5 | 78.3 | 72.5 | 61.9 | 70.8 | 66.1 | 57.7 | 80.4 | 67.2 | 64.1 | 83.4 | 72.5 |
| SUF | 2608 | 84.9 | 71.3 | 77.5 | 87.3 | 70.8 | 78.2 | 86.1 | 63.7 | 73.3 | 87.1 | 69.1 | 77.1 | 88.2 | 72.2 | 79.4 | 86.7 | 68.3 | 76.4 | 77.9 | 66.2 | 71.5 | 85.5 | 73.1 | 78.8 | 87.0 | 72.7 | 79.2 | 77.7 | 63.9 | 70.1 | 84.5 | 67.0 | 74.8 | 88.2 | 74.2 | 80.6 |
| ICU | 1046 | 71.3 | 55.8 | 62.6 | 64.0 | 55.5 | 59.5 | 56.1 | 48.9 | 52.3 | 65.7 | 48.4 | 55.7 | 69.6 | 48.9 | 57.4 | 58.5 | 47.7 | 52.6 | 70.7 | 41.8 | 52.6 | 69.9 | 41.7 | 52.3 | 65.0 | 62.1 | 63.5 | 88.1 | 41.3 | 56.3 | 90.0 | 38.6 | 54.0 | 68.6 | 52.8 | 59.6 |
| RAN | 558 | 54.6 | 66.2 | 59.9 | 53.0 | 62.6 | 57.4 | 42.1 | 72.0 | 53.1 | 57.4 | 75.5 | 65.2 | 57.4 | 73.4 | 64.4 | 51.9 | 72.0 | 60.3 | 57.9 | 66.7 | 62.0 | 57.4 | 65.6 | 61.2 | 52.5 | 69.1 | 59.6 | 57.9 | 44.5 | 50.4 | 56.8 | 62.3 | 59.4 | 57.4 | 67.3 | 61.9 |
| MES | 1898 | 50.8 | 74.8 | 60.5 | 49.5 | 69.5 | 57.8 | 50.0 | 68.7 | 57.9 | 51.2 | 66.5 | 57.9 | 49.2 | 73.2 | 58.8 | 50.1 | 73.1 | 59.5 | 34.3 | 67.6 | 45.5 | 34.3 | 64.7 | 44.8 | 60.8 | 73.8 | 66.7 | 4.0 | 38.1 | 7.3 | 4.5 | 40.1 | 8.1 | 44.7 | 67.9 | 53.9 |
| BLF | 15,000 | 90.8 | 91.9 | 91.4 | 92.0 | 92.8 | 92.4 | 91.6 | 92.9 | 92.2 | 85.0 | 88.9 | 86.9 | 86.2 | 89.0 | 87.6 | 88.2 | 89.1 | 88.7 | 79.2 | 86.0 | 82.5 | 84.6 | 84.6 | 84.6 | 86.5 | 89.7 | 88.1 | 79.9 | 89.2 | 84.3 | 80.9 | 87.8 | 84.2 | 87.0 | 88.9 | 87.9 |
| CNF | 5737 | 86.7 | 65.1 | 74.4 | 85.9 | 66.2 | 74.8 | 87.8 | 66.4 | 75.6 | 93.0 | 64.4 | 76.1 | 88.0 | 64.9 | 74.7 | 88.2 | 66.8 | 76.0 | 88.1 | 60.9 | 72.0 | 89.6 | 69.1 | 78.0 | 89.3 | 65.6 | 75.7 | 79.0 | 58.9 | 67.5 | 85.6 | 64.1 | 73.4 | 89.8 | 68.8 | 77.9 |
| NGR | 445 | 75.4 | 27.7 | 40.5 | 75.4 | 25.5 | 38.2 | 83.5 | 25.7 | 39.3 | 75.8 | 30.3 | 43.3 | 75.1 | 29.5 | 42.4 | 87.9 | 26.5 | 40.7 | 87.9 | 22.4 | 35.7 | 80.5 | 25.7 | 39.0 | 87.5 | 28.1 | 42.5 | 88.2 | 23.8 | 37.5 | 75.1 | 31.8 | 44.6 | 79.5 | 28.5 | 42.0 |
| DSV | 4058 | 27.3 | 56.4 | 36.8 | 28.6 | 50.9 | 36.6 | 28.2 | 53.4 | 36.9 | 21.9 | 46.1 | 29.7 | 24.6 | 45.3 | 31.9 | 25.8 | 46.6 | 33.3 | 20.9 | 37.9 | 26.9 | 21.3 | 43.9 | 28.7 | 26.5 | 46.9 | 33.9 | 39.1 | 44.2 | 41.5 | 30.9 | 44.9 | 36.6 | 27.2 | 46.6 | 34.4 |
| SSV | 5056 | 50.7 | 51.7 | 51.2 | 61.7 | 64.5 | 63.1 | 57.8 | 62.5 | 60.1 | 48.9 | 55.7 | 52.1 | 55.5 | 58.8 | 57.1 | 52.6 | 58.5 | 55.4 | 52.2 | 50.0 | 51.1 | 51.2 | 52.2 | 51.7 | 58.3 | 59.9 | 59.1 | 49.3 | 47.7 | 48.5 | 64.2 | 44.9 | 52.8 | 58.6 | 59.7 | 59.1 |
| MNL | 1046 | 40.7 | 43.2 | 41.9 | 65.8 | 65.8 | 65.8 | 71.5 | 89.0 | 79.3 | 36.6 | 45.6 | 40.6 | 63.1 | 55.7 | 59.1 | 74.6 | 90.2 | 81.6 | 8.1 | 80.0 | 14.8 | 56.9 | 53.3 | 55.1 | 65.4 | 74.2 | 69.5 | 14.9 | 57.1 | 23.7 | 50.2 | 53.4 | 51.7 | 73.2 | 57.0 | 64.1 |
| SVA | 767 | 55.0 | 31.0 | 39.7 | 52.1 | 30.6 | 38.5 | 38.4 | 33.5 | 35.8 | 70.5 | 30.2 | 42.3 | 68.3 | 32.6 | 44.1 | 48.5 | 33.5 | 39.6 | 58.1 | 26.6 | 36.5 | 65.2 | 31.6 | 42.6 | 55.6 | 31.1 | 39.9 | 57.9 | 25.6 | 35.6 | 62.4 | 26.2 | 36.9 | 55.8 | 30.2 | 39.2 |
| VNY | 836 | 66.1 | 83.6 | 73.8 | 69.3 | 85.2 | 76.4 | 65.9 | 83.6 | 73.7 | 51.5 | 74.9 | 61.1 | 57.6 | 82.7 | 67.9 | 54.0 | 82.7 | 65.4 | 50.4 | 55.8 | 53.0 | 63.6 | 69.4 | 66.4 | 54.9 | 82.3 | 65.9 | 36.1 | 59.3 | 44.9 | 56.4 | 71.2 | 62.9 | 63.4 | 82.3 | 71.6 |
| OLG | 1048 | 50.4 | 44.9 | 47.5 | 51.9 | 60.4 | 55.9 | 64.9 | 53.8 | 58.8 | 54.6 | 46.0 | 49.9 | 56.1 | 54.6 | 55.4 | 67.9 | 52.5 | 59.2 | 55.3 | 37.5 | 44.7 | 54.2 | 46.7 | 50.2 | 58.0 | 57.4 | 57.7 | 53.1 | 44.8 | 48.6 | 59.2 | 47.1 | 52.5 | 55.3 | 61.2 | 58.1 |
| FRT | 973 | 43.6 | 49.4 | 46.3 | 60.7 | 60.9 | 60.8 | 46.7 | 61.7 | 53.2 | 47.5 | 36.8 | 41.5 | 64.1 | 52.2 | 57.5 | 68.4 | 64.5 | 66.4 | 35.3 | 40.5 | 37.7 | 44.8 | 48.4 | 46.6 | 57.8 | 52.5 | 55.0 | 44.4 | 61.7 | 51.6 | 45.8 | 46.0 | 45.9 | 57.8 | 47.5 | 52.1 |
| KWP | 479 | 99.7 | 70.2 | 82.4 | 99.3 | 84.8 | 91.5 | 99.3 | 69.2 | 81.6 | 99.0 | 77.5 | 87.0 | 99.7 | 82.1 | 90.0 | 99.7 | 82.5 | 90.3 | 99.7 | 73.1 | 84.4 | 100.0 | 71.8 | 83.6 | 100.0 | 75.6 | 86.1 | 99.7 | 85.1 | 91.8 | 99.3 | 72.2 | 83.6 | 100.0 | 75.2 | 85.8 |
| CRL | 1268 | 90.5 | 89.8 | 90.2 | 92.4 | 89.4 | 90.9 | 90.8 | 91.2 | 91.0 | 93.7 | 91.6 | 92.6 | 92.9 | 92.7 | 92.8 | 91.8 | 93.0 | 92.4 | 92.8 | 92.8 | 92.8 | 92.6 | 92.2 | 92.4 | 91.8 | 92.0 | 91.9 | 89.7 | 93.3 | 91.4 | 91.0 | 92.9 | 91.9 | 91.8 | 91.9 | 91.8 |
| MAZ | 351 | 77.4 | 71.5 | 74.3 | 82.5 | 73.7 | 77.8 | 83.3 | 72.8 | 77.7 | 81.6 | 72.6 | 76.9 | 86.3 | 73.2 | 79.2 | 77.8 | 66.9 | 71.9 | 78.6 | 66.9 | 72.3 | 91.5 | 73.0 | 81.2 | 82.5 | 73.4 | 77.7 | 94.0 | 67.3 | 78.4 | 97.4 | 68.7 | 80.6 | 85.5 | 73.5 | 79.1 |
| RCF | 11,775 | 99.9 | 98.2 | 99.1 | 99.8 | 98.9 | 99.4 | 99.7 | 99.2 | 99.5 | 100.0 | 99.1 | 99.5 | 100.0 | 99.1 | 99.5 | 99.7 | 98.9 | 99.3 | 100.0 | 98.7 | 99.3 | 100.0 | 99.4 | 99.7 | 99.9 | 99.0 | 99.5 | 100.0 | 98.3 | 99.2 | 100.0 | 99.5 | 99.7 | 99.9 | 99.1 | 99.5 |
| PTT | 277 | 63.8 | 99.2 | 77.6 | 65.9 | 100.0 | 79.5 | 66.5 | 100.0 | 79.9 | 68.6 | 96.2 | 80.1 | 69.7 | 99.2 | 81.9 | 69.7 | 100.0 | 82.2 | 54.1 | 99.0 | 69.9 | 68.1 | 99.2 | 80.8 | 75.1 | 100.0 | 85.8 | 45.4 | 100.0 | 62.5 | 60.5 | 100.0 | 75.4 | 74.1 | 100.0 | 85.1 |
| GRF | 610 | 49.4 | 41.8 | 45.3 | 52.3 | 50.4 | 51.3 | 50.1 | 48.0 | 49.0 | 50.4 | 50.9 | 50.6 | 51.1 | 50.5 | 50.8 | 48.9 | 46.2 | 47.5 | 39.3 | 54.4 | 45.6 | 54.8 | 55.8 | 55.3 | 52.3 | 48.5 | 50.4 | 52.1 | 42.7 | 46.9 | 48.4 | 55.2 | 51.6 | 53.6 | 50.7 | 52.1 |
| GRH | 114 | 88.2 | 39.2 | 54.3 | 85.5 | 42.2 | 56.5 | 90.8 | 27.5 | 42.2 | 89.5 | 58.6 | 70.8 | 88.2 | 61.5 | 72.4 | 88.2 | 23.3 | 36.8 | 88.2 | 25.5 | 39.5 | 89.5 | 60.7 | 72.3 | 86.8 | 33.3 | 48.2 | 89.5 | 25.8 | 40.0 | 90.8 | 75.0 | 82.1 | 88.2 | 69.8 | 77.9 |
| RNS | 2331 | 49.2 | 59.6 | 53.9 | 47.1 | 59.3 | 52.5 | 61.3 | 72.5 | 66.4 | 40.0 | 64.0 | 49.2 | 45.8 | 63.4 | 53.2 | 45.5 | 63.1 | 52.9 | 35.8 | 63.6 | 45.8 | 47.6 | 63.5 | 54.4 | 47.6 | 60.9 | 53.4 | 44.0 | 58.5 | 50.2 | 38.0 | 56.2 | 45.3 | 48.3 | 56.3 | 52.0 |
| MRS | 7082 | 41.7 | 92.2 | 57.4 | 42.1 | 89.0 | 57.1 | 57.6 | 86.7 | 69.2 | 48.2 | 86.9 | 62.0 | 46.5 | 91.5 | 61.7 | 45.1 | 84.1 | 58.7 | 37.6 | 77.8 | 50.7 | 36.0 | 89.1 | 51.2 | 39.0 | 91.7 | 54.7 | 37.5 | 91.2 | 53.2 | 40.3 | 95.7 | 56.7 | 41.6 | 92.8 | 57.4 |
| WCR | 776 | 93.9 | 42.8 | 58.8 | 93.9 | 41.8 | 57.8 | 91.0 | 26.6 | 41.2 | 95.3 | 63.5 | 76.2 | 97.1 | 62.1 | 75.8 | 97.1 | 27.7 | 43.1 | 96.8 | 47.7 | 63.9 | 97.8 | 41.7 | 58.5 | 96.8 | 57.3 | 71.9 | 96.8 | 45.0 | 61.4 | 96.8 | 44.1 | 60.6 | 95.7 | 56.6 | 71.1 |
| WBD | 15,000 | 99.8 | 99.4 | 99.6 | 99.8 | 98.9 | 99.4 | 90.5 | 98.3 | 94.3 | 99.8 | 99.3 | 99.6 | 99.8 | 98.6 | 99.2 | 90.8 | 98.3 | 94.4 | 98.4 | 98.9 | 98.6 | 97.8 | 98.1 | 98.0 | 99.7 | 96.8 | 98.3 | 97.9 | 98.4 | 98.1 | 98.3 | 98.1 | 98.2 | 99.3 | 96.6 | 97.9 |
| CWT | 3928 | 94.9 | 100.0 | 97.4 | 92.9 | 100.0 | 96.3 | 92.9 | 100.0 | 96.3 | 89.3 | 100.0 | 94.4 | 90.2 | 100.0 | 94.9 | 92.5 | 100.0 | 96.1 | 86.9 | 94.8 | 90.6 | 83.7 | 100.0 | 91.1 | 81.7 | 100.0 | 89.9 | 81.9 | 90.3 | 85.9 | 81.6 | 100.0 | 89.8 | 80.0 | 100.0 | 88.9 |
| Aver. | 3278 | 68.87 | 66.51 | 65.33 | 70.88 | 69.18 | 67.97 | 70.14 | 67.89 | 66.14 | 69.09 | 67.62 | 66.35 | 71.70 | 69.94 | 68.96 | 70.86 | 67.81 | 66.26 | 63.29 | 59.67 | 69.72 | 66.41 | 65.51 | 71.33 | 69.34 | 68.01 | 65.18 | 68.39 | 65.31 | 63.73 | 71.42 | 69.58 | 68.66 | |||
| OA | 80.46 | 81.38 | 80.17 | 79.44 | 80.37 | 79.06 | 75.65 | 78.29 | 80.33 | 76.78 | 80.22 | ||||||||||||||||||||||||||
| Kappa | 78.01 | 79.05 | 77.73 | 76.89 | 77.93 | 76.48 | 72.69 | 75.58 | 77.88 | 73.97 | 77.75 | ||||||||||||||||||||||||||
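The table does not state how the top 70% and top 50% feature subsets were ranked. As an illustration only, the sketch below ranks features with the multi-class Fisher score (between-class spread divided by within-class spread) and keeps the requested fraction. It also assumes, hypothetically, that columns are grouped date by date with 11 features per date, which shows how a reduced subset can lose entire dates, as the 15-date and 16-date subsets above do. The rounding rule behind the subset sizes is not stated either; the sketch simply truncates. `fisher_scores` and `select_top` are illustrative names.

```python
import numpy as np


def fisher_scores(X, y):
    """Multi-class Fisher score per feature: between-class / within-class spread."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    mu = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        between += len(Xc) * (Xc.mean(axis=0) - mu) ** 2
        within += len(Xc) * Xc.var(axis=0)
    return between / np.maximum(within, 1e-12)  # guard against constant features


def select_top(X, y, fraction, features_per_date=11):
    """Return (indices of the top-ranked features, dates that keep >= 1 feature).

    Assumes columns are ordered date by date with `features_per_date` each.
    """
    scores = fisher_scores(X, y)
    k = max(1, int(fraction * X.shape[1]))      # simple truncation
    top = np.argsort(scores)[::-1][:k]          # highest scores first
    dates = np.unique(top // features_per_date)
    return np.sort(top), dates


# Synthetic example: 3 dates x 11 features, only the first date is informative.
rng = np.random.default_rng(0)
y = rng.integers(0, 3, size=300)
X = rng.normal(size=(300, 33))
X[:, :11] += 2.0 * y[:, None]
top, dates = select_top(X, y, 0.3)
print(len(top), dates.tolist())  # 9 [0]
```

A filter score like this is cheap to compute for a few hundred features. Wrapper approaches, such as ranking by linear SVM weights, are an alternative when the classifier itself should drive the selection.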

Confusion Matrix

| | DUF | SUF | ICU | RAN | MES | BLF | CNF | NGR | DSV | SSV | MNL | SVA | VNY | OLG | FRT | KWP | CRL | MAZ | RCF | PTT | GRF | GRH | RNS | MRS | WCR | WBD | CWT |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DUF | 65.77 | 23.12 | 4.88 | 0.15 | 5.11 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.08 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.90 | 0.00 | 0.00 | 0.00 | 0.00 |
| SUF | 6.33 | 88.15 | 0.00 | 0.12 | 0.06 | 0.00 | 0.00 | 0.35 | 0.00 | 0.29 | 0.00 | 0.23 | 0.06 | 0.00 | 3.22 | 0.00 | 0.17 | 0.00 | 0.00 | 0.00 | 0.69 | 0.00 | 0.29 | 0.06 | 0.00 | 0.00 | 0.00 |
| ICU | 10.33 | 2.01 | 69.58 | 0.29 | 14.35 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.29 | 0.00 | 0.00 | 0.14 | 0.00 | 0.14 | 0.00 | 0.00 | 0.00 | 0.00 | 1.29 | 1.15 | 0.43 | 0.00 | 0.00 | 0.00 |
| RAN | 0.00 | 4.92 | 27.32 | 57.38 | 1.64 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.64 | 0.55 | 0.00 | 1.09 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 4.92 | 0.55 | 0.00 | 0.00 | 0.00 |
| MES | 1.34 | 5.45 | 22.77 | 0.63 | 49.17 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 20.63 | 0.00 | 0.00 | 0.00 | 0.00 |
| BLF | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 86.24 | 9.40 | 0.61 | 1.15 | 1.47 | 0.12 | 0.11 | 0.00 | 0.05 | 0.00 | 0.05 | 0.00 | 0.00 | 0.00 | 0.00 | 0.78 | 0.00 | 0.00 | 0.02 | 0.00 | 0.00 | 0.00 |
| CNF | 0.00 | 0.00 | 0.00 | 0.00 | 0.08 | 4.29 | 88.03 | 0.00 | 6.48 | 0.24 | 0.03 | 0.03 | 0.00 | 0.63 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.21 | 0.00 | 0.00 | 0.00 |
| NGR | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 75.08 | 0.34 | 3.03 | 0.00 | 0.00 | 0.00 | 1.01 | 1.35 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 2.02 | 0.00 | 17.17 | 0.00 | 0.00 | 0.00 | 0.00 |
| DSV | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 33.54 | 40.87 | 0.00 | 24.63 | 0.24 | 0.00 | 0.00 | 0.00 | 0.72 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| SSV | 0.00 | 0.16 | 0.00 | 0.00 | 0.00 | 2.27 | 0.00 | 6.97 | 16.55 | 55.47 | 0.00 | 10.95 | 0.00 | 4.22 | 0.24 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.57 | 0.00 | 0.00 | 2.60 | 0.00 | 0.00 | 0.00 |
| MNL | 0.00 | 0.00 | 0.00 | 0.00 | 7.80 | 0.00 | 0.00 | 5.42 | 0.00 | 0.00 | 63.05 | 12.54 | 0.00 | 0.00 | 0.00 | 0.00 | 2.71 | 0.00 | 0.00 | 0.00 | 7.46 | 1.02 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| SVA | 0.00 | 0.00 | 0.00 | 0.00 | 1.57 | 0.00 | 0.00 | 0.00 | 0.00 | 16.44 | 4.70 | 68.30 | 0.00 | 0.00 | 0.39 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.37 | 0.00 | 7.05 | 0.20 | 0.00 | 0.00 | 0.00 |
| VNY | 0.00 | 26.21 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.18 | 0.00 | 0.00 | 57.63 | 0.18 | 10.23 | 0.00 | 0.36 | 0.54 | 4.13 | 0.00 | 0.54 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| OLG | 0.00 | 4.96 | 0.00 | 0.00 | 0.00 | 1.53 | 0.00 | 2.29 | 2.67 | 15.65 | 0.00 | 0.00 | 0.38 | 56.11 | 15.27 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.15 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| FRT | 0.00 | 3.24 | 0.00 | 0.00 | 0.00 | 2.00 | 0.00 | 0.92 | 0.00 | 2.47 | 0.00 | 0.00 | 3.39 | 3.39 | 64.10 | 8.78 | 2.31 | 0.31 | 0.77 | 0.00 | 8.17 | 0.00 | 0.00 | 0.15 | 0.00 | 0.00 | 0.00 |
| KWP | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.33 | 99.67 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| CRL | 0.00 | 0.24 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 3.06 | 0.00 | 1.18 | 0.00 | 0.00 | 0.12 | 0.00 | 1.18 | 0.00 | 92.95 | 0.00 | 0.00 | 0.00 | 0.94 | 0.00 | 0.00 | 0.35 | 0.00 | 0.00 | 0.00 |
| MAZ | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 86.32 | 13.25 | 0.43 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| RCF | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.05 | 99.95 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| PTT | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 2.16 | 0.00 | 26.49 | 0.00 | 69.73 | 1.62 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| GRF | 0.00 | 0.25 | 0.00 | 0.00 | 0.49 | 0.00 | 0.00 | 9.34 | 0.00 | 0.49 | 0.00 | 0.00 | 8.85 | 0.00 | 19.16 | 0.00 | 8.11 | 2.21 | 0.00 | 0.00 | 51.11 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| GRH | 0.00 | 3.95 | 1.32 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 5.26 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.32 | 88.16 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| RNS | 0.32 | 0.06 | 6.63 | 0.06 | 1.29 | 0.00 | 0.00 | 0.06 | 0.00 | 1.93 | 7.14 | 33.53 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.93 | 45.75 | 1.29 | 0.00 | 0.00 | 0.00 |
| MRS | 0.00 | 0.00 | 0.00 | 1.25 | 0.00 | 8.67 | 1.75 | 15.60 | 2.24 | 6.55 | 0.00 | 0.38 | 0.05 | 0.00 | 6.93 | 0.00 | 0.00 | 0.44 | 0.00 | 0.00 | 0.05 | 0.00 | 1.53 | 46.54 | 6.44 | 1.58 | 0.00 |
| WCR | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.36 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 2.53 | 97.11 | 0.00 | 0.00 |
| WBD | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.17 | 99.83 | 0.00 |
| CWT | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 3.56 | 6.21 | 90.22 |

Number of testing pixels: 44,672

OA: 80.37%, Kappa: 77.93%
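OA and Kappa are the usual overall accuracy and Cohen's kappa. The matrix above reports row percentages, with each row summing to 100 within rounding, rather than pixel counts. For that reason, the two scores cannot be recomputed from it without the per-class testing counts. The Python sketch below shows the standard formulas on a small, made-up count matrix. It also averages the diagonal of the percentage matrix separately. The function name `oa_and_kappa` and the example matrix are hypothetical. Reading rows as reference classes is an assumed convention; OA and kappa do not change if the matrix is transposed.

```python
from typing import Sequence, Tuple


def oa_and_kappa(counts: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Overall accuracy and Cohen's kappa from a square matrix of pixel counts.

    Rows are taken as reference classes and columns as predicted classes
    (an assumption); both scores are unchanged if the matrix is transposed.
    """
    k = len(counts)
    total = sum(sum(row) for row in counts)
    row_totals = [sum(row) for row in counts]
    col_totals = [sum(counts[r][c] for r in range(k)) for c in range(k)]
    # Observed agreement (OA): correctly labelled pixels over all pixels.
    p_o = sum(counts[i][i] for i in range(k)) / total
    # Agreement expected by chance from the row and column marginals.
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return p_o, (p_o - p_e) / (1.0 - p_e)


# Hypothetical 3-class count matrix, for illustration only (not study data).
example = [
    [50, 5, 0],
    [10, 30, 10],
    [0, 5, 40],
]
oa, kappa = oa_and_kappa(example)
print(f"OA = {oa:.3f}, kappa = {kappa:.3f}")  # OA = 0.800, kappa = 0.699

# Diagonal of the percentage matrix above, in table order (DUF ... CWT).
diagonal = [
    65.77, 88.15, 69.58, 57.38, 49.17, 86.24, 88.03, 75.08, 24.63,
    55.47, 63.05, 68.30, 57.63, 56.11, 64.10, 99.67, 92.95, 86.32,
    99.95, 69.73, 51.11, 88.16, 45.75, 46.54, 97.11, 99.83, 90.22,
]
# Rows sum to 100 within rounding, e.g. the non-zero entries of the DUF row.
duf_row_sum = sum([65.77, 23.12, 4.88, 0.15, 5.11, 0.08, 0.90])
mean_class_accuracy = sum(diagonal) / len(diagonal)
print(f"DUF row sum = {duf_row_sum:.2f}")  # 100.01
print(f"unweighted mean of diagonal = {mean_class_accuracy:.2f}")  # 71.70
```

The unweighted mean of the diagonal, about 71.70, coincides with the first value in the fifth column group of the Aver. row of the per-class table. The fifth OA and Kappa values in that table (80.37 and 77.93) are also the ones that match this matrix. OA is higher than this class-balanced figure. This is consistent with OA weighting every testing pixel equally, so that large and accurately mapped classes such as WBD count for more.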

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Karakizi, C.; Karantzalos, K.; Vakalopoulou, M.; Antoniou, G. Detailed Land Cover Mapping from Multitemporal Landsat-8 Data of Different Cloud Cover. Remote Sens. 2018, 10, 1214. https://doi.org/10.3390/rs10081214