Hyperspectral Image Classification Based on Parameter-Optimized 3D-CNNs Combined with Transfer Learning and Virtual Samples

Abstract

:1. Introduction

2. Overview of Three-Dimensional Convolutional Neural Networks

3. Improved Classification Method Based on a Parameter-Optimized Three-Dimensional Convolutional Neural Network (3D-CNN) Combined with Transfer Learning and Virtual Samples

3.1. Parameter-Optimized 3D-CNN (PO-3DCNN)

3.2. Parameter-Optimized 3D-CNN with Transfer Learning (PO-3DCNN-TL)

3.3. Virtual Samples

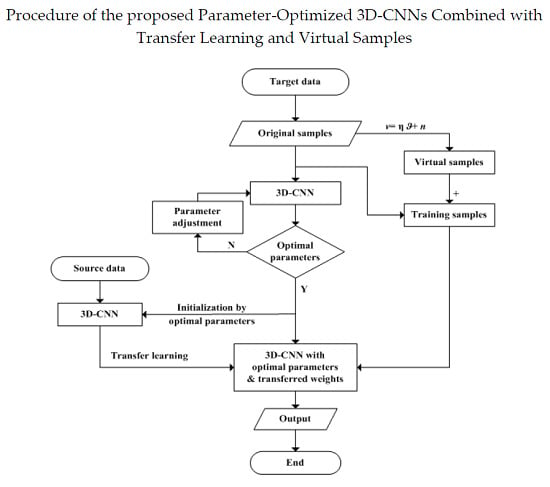

3.4. Parameter-Optimized 3D-CNN Combined with Transfer Learning and Virtual Samples (PO-3DCNN-TV)

4. Experiments

4.1. Real-World Hyperspectral Image (HSI) Data Sets

4.2. Parameter Setting of the Considered Classification Methods

4.2.1. Support Vector Machines (SVM)

4.2.2. Deep Belief Networks (DBN)

4.2.3. Parameter-Optimized 2D-CNN (PO-2DCNN)

4.3. The Parameters of Some Improved 3D-CNN Models

4.3.1. The PO-3DCNN Method

4.3.2. The PO-3DCNN-TL Method

4.3.3. The PO-3DCNN-VS method

4.4. The Parameters of the Proposed PO-3DCNN-TV Method

4.5. Classification Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Santara, A.; Mani, K.; Hatwar, P.; Singh, A.; Garg, A.; Padia, K.; Mitra, P. BASS Net: Band-Adaptive Spectral-Spatial Feature Learning Neural Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5293–5301. [Google Scholar] [CrossRef] [Green Version]

- Fauvel, M.; Chanussot, J.; Benediktsson, J. A spatial–spectral kernel-based approach for the classification of remote-sensing images. Pattern Recognit. 2012, 45, 381–392. [Google Scholar] [CrossRef]

- Yuan, Y.; Zheng, X.; Lu, X. Spectral–Spatial Kernel Regularized for Hyperspectral Image Denoising. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3815–3832. [Google Scholar] [CrossRef]

- Mou, L.; Ghamisi, P.; Zhu, X. Deep Recurrent Neural Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3639–3655. [Google Scholar] [CrossRef]

- Tuia, D.; Persello, C.; Bruzzone, L. Domain Adaptation for the Classification of Remote Sensing Data: An Overview of Recent Advances. IEEE Geosci. Remote Sens. Mag. 2016, 4, 41–57. [Google Scholar] [CrossRef]

- Lacar, F.; Lewis, M.; Grierson, I. Use of hyperspectral imagery for mapping grape varieties in the Barossa Valley, South Australia. IGARSS 2001, 6, 2875–2877. [Google Scholar]

- Gevaert, C.; Suomalainen, J.; Tang, J.; Kooistra, L. Generation of Spectral–Temporal Response Surfaces by Combining Multispectral Satellite and Hyperspectral UAV Imagery for Precision Agriculture Applications. IEEE J. Sel. Top. Appl. Earth Obs. 2015, 8, 3140–3146. [Google Scholar] [CrossRef]

- Yokoya, N.; Chan, C.; Segl, K. Potential of Resolution-Enhanced Hyperspectral Data for Mineral Mapping Using Simulated EnMAP and Sentinel-2 Images. Remote Sens. 2016, 8, 172. [Google Scholar] [CrossRef]

- Olmanson, L.G.; Brezonik, P.L.; Bauer, M. Airborne hyperspectral remote sensing to assess spatial distribution of water quality characteristics in large rivers: The Mississippi River and its tributaries in Minnesota. Remote Sens. Environ. 2013, 130, 254–265. [Google Scholar] [CrossRef]

- Wu, C.; Du, B.; Zhang, L. Slow Feature Analysis for Change Detection in Multispectral Imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2858–2874. [Google Scholar] [CrossRef]

- Laurin, G.V.; Chan, C.W.; Chen, Q.; Lindsell, J.A.; Coomes, D.A.; Guerriero, L.; Frate, F.D.; Miglietta, F.; Valentini, R. Biodiversity Mapping in a Tropical West African Forest with Airborne Hyperspectral Data. PLoS ONE 2014, 9, e97910. [Google Scholar]

- Demir, B.; Bovolo, F.; Bruzzone, L. Updating Land-Cover Maps by Classification of Image Time Series: A Novel Change-Detection-Driven Transfer Learning Approach. IEEE Trans. Geosci. Remote Sens. 2013, 51, 300–312. [Google Scholar] [CrossRef]

- Dev, S.; Wen, B.; Lee, Y.H.; Winkler, S. Ground-Based Image Analysis: a Tutorial on Machine-Learning Techniques and Applications. IEEE Geosci. Remote Sens. Mag. 2016, 4, 79–93. [Google Scholar] [CrossRef]

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral Remote Sensing Data Analysis and Future Challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36. [Google Scholar] [CrossRef] [Green Version]

- Hang, R.; Liu, Q.; Song, H.; Sun, Y. Matrix-Based Discriminant Subspace Ensemble for Hyperspectral Image Spatial-Spectral Feature Fusion. IEEE Trans Geosci. Remote Sens. 2016, 54, 783–794. [Google Scholar] [CrossRef]

- Hang, R.; Liu, Q.; Sun, Y.; Yuan, X.; Pei, H. Robust matrix discriminative analysis for feature extraction from hyperspectral images. IEEE J. Sel. Top. Appl. Earth Obs. 2017, 10, 2002–2011. [Google Scholar] [CrossRef]

- Xu, Y.; Zhang, L.; Du, B.; Zhang, F. Spectral-Spatial Unified Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 1–17. [Google Scholar] [CrossRef]

- Song, H.; Liu, Q.; Wang, G.; Hang, R.; Huang, B. Spatiotemporal Satellite Image Fusion Using Deep Convolutional Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. 2018, 11, 821–829. [Google Scholar] [CrossRef]

- Liu, W.; Yang, X.; Tao, D.; Cheng, J.; Tang, Y. Multiview dimension reduction via Hessian multiset canonical correlations. Inf. Fusion 2018, 41, 119–128. [Google Scholar] [CrossRef]

- Wang, M.; Hua, X.; Hong, R.; Tang, J.; Qi, G.; Song, Y. Unified video annotation via multigraph learning. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 733–746. [Google Scholar] [CrossRef]

- Yang, X.; Liu, W.; Tao, D.; Cheng, J.; Li, S. Multiview Canonical Correlation Analysis Networks for Remote Sensing Image Recognition. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1855–1859. [Google Scholar] [CrossRef]

- Wang, M.; Hua, X.S. Active learning in multimedia annotation and retrieval: A survey. Acm Trans. Intell. Syst. Technol. 2011, 2, 1–21. [Google Scholar] [CrossRef]

- Hu, J.; He, Z.; Li, J.; He, L.; Wang, Y. 3D-Gabor Inspired Multiview Active Learning for Spectral-Spatial Hyperspectral Image Classification. Remote Sens. 2018, 10, 1070. [Google Scholar] [CrossRef]

- Lee, G. Fast computation of the compressive hyperspectral imaging by using alternating least squares methods. Signal Process. Image Comm. 2018, 60, 100–106. [Google Scholar] [CrossRef]

- Wang, L.; Bai, J.; Wu, J.; Jeon, G. Hyperspectral image compression based on lapped transform and Tucker decomposition. Signal Process. Image Commun. 2015, 36, 63–69. [Google Scholar] [CrossRef]

- Yang, W.; Yin, X.; Xia, G.S. Learning High-level Features for Satellite Image Classification with Limited Labeled Samples. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4472–4482. [Google Scholar] [CrossRef]

- Cvetković, S.; Stojanovic, M.; Nikolić, S. Multi-channel descriptors and ensemble of Extreme Learning Machines for classification of remote sensing images. Signal Process. Image Commun. 2015, 39, 111–120. [Google Scholar] [CrossRef]

- Zhao, F.; Liu, G.; Wang, X. An efficient macroblock-based diverse and flexible prediction modes selection for hyperspectral images coding. Signal Process. Image Commun. 2010, 25, 697–708. [Google Scholar] [CrossRef]

- Vakil, M.; Megherbi, D.; Malas, J. A robust multi-stage information-theoretic approach for registration of partially overlapped hyperspectral aerial imagery and evaluation in the presence of system noise. Image Commun. 2017, 52, 97–110. [Google Scholar] [CrossRef]

- Huang, Z.; Pan, Z.; Lei, B. Transfer Learning with Deep Convolutional Neural Network for SAR Target Classification with Limited Labeled Data. Remote Sens. 2017, 9, 907. [Google Scholar] [CrossRef]

- Chen, Y.; Lin, Z.; Zhao, X.; Wang, G.; Gu, Y. Deep Learning-Based Classification of Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. 2014, 7, 2094–2107. [Google Scholar] [CrossRef]

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251. [Google Scholar] [CrossRef]

- Mei, S.; Yuan, X.; Ji, J.; Zhang, Y.; Wan, S. Hyperspectral Image Spatial Super-Resolution via 3D Full Convolutional Neural Network. Remote Sens. 2017, 9, 1139. [Google Scholar] [CrossRef]

- Cao, J.; Chen, Z.; Wang, B. Graph-based deep Convolutional networks for Hyperspectral image classification. IGARSS 2016, 3270–3273. [Google Scholar]

- Liu, W.; Zha, Z.; Wang, Y.; Lu, K.; Tao, D. p-Laplacian Regularized Sparse Coding for Human Activity Recognition. IEEE Trans. Ind. Electron. 2016, 63, 5120–5129. [Google Scholar] [CrossRef]

- Liu, W.; Liu, H.; Tao, D.; Wang, Y.; Lu, K. Manifold regularized kernel logistic regression for web image annotation. Neurocomputing 2016, 172, 3–8. [Google Scholar] [CrossRef]

- Yu, M.; Dong, G.; Fan, H.; Kuang, G. SAR target recognition via local sparse representation of Multi-Manifold regularized Low-Rank approximation. Remote Sens. 2018, 10, 211. [Google Scholar]

- Casale, P.; Altini, M.; Amft, O. Transfer Learning in Body Sensor Networks Using Ensembles of Randomised Trees. IEEE Int. Things J. 2015, 2, 33–40. [Google Scholar] [CrossRef]

- Yang, J.; Zhao, Y.; Chan, C. Learning and Transferring Deep Joint Spectral–Spatial Features for Hyperspectral Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4729–4742. [Google Scholar] [CrossRef]

- Pan, S.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef] [Green Version]

- Oquab, M.; Bottou, L.; Laptev, I.; Sivic, J. Learning and Transferring Mid-level Image Representations Using Convolutional Neural Networks. CVPR 2014, 1717–1724. [Google Scholar] [Green Version]

- Lin, J.; He, C.; Wang, Z.; Li, S. Structure Preserving Transfer Learning for Unsupervised Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1656–1660. [Google Scholar] [CrossRef]

- Fielding, J.; Fox, L.; Heller, H.; Seltzer, S.; Tempany, C. Spiral CT in the evaluation of flank pain: Overall accuracy and feature analysis. J. Comput. Assist. Tomogr. 1997, 21, 635–638. [Google Scholar] [CrossRef] [PubMed]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Network. arXiv, 2015; arXiv:1511.06434. [Google Scholar]

- Zhong, Z.; Li, J.; Luo, Z.; Chapman, M. Spectral-spatial residual network for hyperspectral image classification: A 3-D deep learning framework. IEEE Trans. Geosci. Remote Sens. 2018, 56, 847–858. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, H.; Shen, Q. Spectral-Spatial Classification of Hyperspectral Imagery with 3D Convolutional Neural Network. Remote Sens. 2017, 9, 67. [Google Scholar] [CrossRef]

- Hinton, G.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Improving neural networks by preventing co-adaptation of feature detectors. Comput. Sci. 2012, 3, 212–223. [Google Scholar]

- Glorot, X.; Bordes, A.; Bengio, Y. Deep Sparse Rectifier Neural Networks. AISTATS 2011, 315–323. [Google Scholar]

- Zuo, Z.; Shuai, B.; Wang, G.; Liu, X.; Wang, X.; Wang, B.; Chen, Y. Learning Contextual Dependence with Convolutional Hierarchical Recurrent Neural Networks. IEEE Trans. Image Process. 2016, 25, 2983–2996. [Google Scholar] [CrossRef] [PubMed]

- Ghamisi, P.; Chen, Y.; Zhu, X. A Self-Improving Convolution Neural Network for the Classification of Hyperspectral Data. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1537–1541. [Google Scholar] [CrossRef]

- Bengio, Y. Practical Recommendations for Gradient-Based Training of Deep Architectures. Lect. Notes Comput. Sci. 2012, 7700, 437–478. [Google Scholar] [Green Version]

- Jia, S.; Hu, J.; Zhu, J.; Jia, X.; Li, Q. Three-Dimensional Local Binary Patterns for Hyperspectral Imagery Classification. IGARSS 2016, 55, 465–468. [Google Scholar]

- Wu, Z.; Wang, Q.; Shen, Y. 3D gray-gradient-gradient tensor field feature for hyperspectral image classification. In Proceedings of the 10th International Conference on Communications and Networking in China (ChinaCom), Shanghai, China, 15–17 August 2015; pp. 432–436. [Google Scholar]

- Liu, X.; Bourennane, S.; Fossati, C. Denoising of Hyperspectral Images Using the PARAFAC Model and Statistical Performance Analysis. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3717–3724. [Google Scholar] [CrossRef]

- Anguita, D.; Ridella, S.; Rivieccio, F. K-fold generalization capability assessment for support vector classifiers. IJCNN 2005, 2, 855–858. [Google Scholar]

- Zorzi, M.; Chiuso, A. The Harmonic Analysis of Kernel Functions. Automatica 2018, 94, 125–137. [Google Scholar] [CrossRef]

- Chen, Y.; Zhao, X.; Jia, X. Spectral–Spatial Classification of Hyperspectral Data Based on Deep Belief Network. IEEE J. Sel. Top. Appl. Earth Obs. 2015, 8, 2381–2392. [Google Scholar] [CrossRef]

- Liu, X.; Bourennane, S.; Fossati, C. Reduction of Signal-Dependent Noise from Hyperspectral Images for Target Detection. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5396–5411. [Google Scholar]

- Zhao, W.; Zhang, H. Secure Fingerprint Recognition Based on Frobenius Norm. In Proceedings of the International Conference on Computer Science and Electronics Engineering, Hangzhou, China, 23–25 March 2012; pp. 388–391. [Google Scholar]

- Wieland, M.; Liu, W.; Yamazaki, F. Learning Change from Synthetic Aperture Radar Images: Performance Evaluation of a Support Vector Machine to Detect Earthquake and Tsunami-Induced Changes. Remote Sens. 2016, 8, 792. [Google Scholar] [CrossRef]

- ENVI (Version 5.5)-Online Help, Using ENVI, Support Vector Machine. Available online: https://www.harrisgeospatial.com/docs/SupportVectorMachine.html (accessed on 23 August 2018).

- Ustuner, M.; Sanli, F.B.; Dixon, B. Application of Suport Vector Machines for Landuse Classification Using High-Resolution RapidEye Images: A Sensitivity Analysis. J. Remote Sens. 2015, 48, 403–422. [Google Scholar]

- Li, J.; Xi, B.; Li, Y.; Du, Q.; Wang, K. Hyperspectral Classification Based on Texture Feature Enhancement and Deep Belief Networks. Remote Sens. 2018, 10, 396. [Google Scholar] [CrossRef]

- Bu, Y.; Zhao, G.; Luo, A.; Pan, J.; Chen, Y. Restricted Boltzmann machine: A non-linear substitute for PCA in spectral processing. Astron. Astrophys. 2015, 576, A96. [Google Scholar] [CrossRef]

- Zeiler, M.D. ADADELTA: An Adaptive Learning Rate Method. arXiv, 2012; arXiv:1212.5701. [Google Scholar]

| No | Color | Class | Total | Testing | Training |

|---|---|---|---|---|---|

| 1 | Asphalt | 271 | 27 | 244 | |

| 2 | Meadows | 277 | 28 | 249 | |

| 3 | Gravel | 333 | 33 | 300 | |

| 4 | Trees | 277 | 28 | 249 | |

| 5 | Metal sheets | 206 | 21 | 185 | |

| 6 | Bare Soil | 484 | 48 | 436 | |

| 7 | Bitumen | 758 | 76 | 682 | |

| 8 | Bricks | 594 | 59 | 535 | |

| 9 | Shadow | 196 | 20 | 176 | |

| All classes | 3396 | 340 | 3056 | ||

| Parameter | C1 | P1 | C2 | P2 | F | Epoch | Act-f | Pooling | Batch |

|---|---|---|---|---|---|---|---|---|---|

| Value | 64@5 × 5 | 64@5 × 5 | 128@4 × 4 | 128@2 × 2 | 128 | 300 | ReLU | Max-pooling | 128 |

| Network Layer | Convolutional Layer | Act-F | Pooling Layer | Pooling Function | Dropout |

|---|---|---|---|---|---|

| 1 | 4 × 4 × 13@16 | ReLU | 2 × 2 × 2 | Max-pooling | 0.1 |

| 2 | 5 × 5 × 13@32 | ReLU | 2 × 2 × 2 | Max-pooling | 0.1 |

| 3 | 4 × 4 × 13@64 | ReLU | - | - | - |

| σ2 | 0.00001 | 0.0001 | 0.001 | 0.01 | 0.1 | 1 |

|---|---|---|---|---|---|---|

| OA | 0.9927 | 0.9942 | 0.9947 | 0.9936 | 0.9849 | 0.9912 |

| Classifier | SVM | DBN | PO-2DCNN | PO-3DCNN | PO-3DCNN-TL | PO-3DCNN-VS | PO-3DCNN-TV | |

|---|---|---|---|---|---|---|---|---|

| Class | ||||||||

| Asphalt | 0.9004 | 0.9631 | 0.9631 | 0.9815 | 1 | 1 | 1 | |

| Meadows | 0.7748 | 0.8949 | 0.9489 | 0.9700 | 1 | 1 | 1 | |

| Gravel | 0.8195 | 1 | 1 | 0.9712 | 1 | 0.9928 | 1 | |

| Trees | 0.9747 | 1 | 0.9134 | 0.9712 | 0.9856 | 0.9892 | 0.9819 | |

| Metal sheets | 1 | 0.9272 | 0.9757 | 0.9806 | 0.9806 | 0.9757 | 0.9806 | |

| Bare Soil | 0.9669 | 1 | 0.9773 | 1 | 1 | 1 | 1 | |

| Bitumen | 0.9235 | 0.9670 | 0.9934 | 1 | 1 | 1 | 1 | |

| Bricks | 0.9529 | 0.8771 | 1 | 1 | 1 | 1 | 1 | |

| Shadow | 1 | 0.9031 | 0.9592 | 0.9439 | 0.9367 | 0.9592 | 0.9796 | |

| Overall | 0.9231 | 0.9505 | 0.9764 | 0.9891 | 0.9938 | 0.9947 | 0.9962 | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, X.; Sun, Q.; Meng, Y.; Fu, M.; Bourennane, S. Hyperspectral Image Classification Based on Parameter-Optimized 3D-CNNs Combined with Transfer Learning and Virtual Samples. Remote Sens. 2018, 10, 1425. https://0-doi-org.brum.beds.ac.uk/10.3390/rs10091425

Liu X, Sun Q, Meng Y, Fu M, Bourennane S. Hyperspectral Image Classification Based on Parameter-Optimized 3D-CNNs Combined with Transfer Learning and Virtual Samples. Remote Sensing. 2018; 10(9):1425. https://0-doi-org.brum.beds.ac.uk/10.3390/rs10091425

Chicago/Turabian StyleLiu, Xuefeng, Qiaoqiao Sun, Yue Meng, Min Fu, and Salah Bourennane. 2018. "Hyperspectral Image Classification Based on Parameter-Optimized 3D-CNNs Combined with Transfer Learning and Virtual Samples" Remote Sensing 10, no. 9: 1425. https://0-doi-org.brum.beds.ac.uk/10.3390/rs10091425