3.1. UAV-Based Photogrammetry

Low-cost UAVs (e.g., DJI Phantom quadcopters) typically carry a consumer-grade camera, which causes large perspective distortions and poor camera geometry [5]. Image processing started with distortion correction of each UAV image using the camera parameters (Table 1) to minimize systematic errors; OpenCV was used to remove the distortion and resample the UAV images. Feature extraction and matching were performed using a sub-Harris operator coupled with the scale-invariant feature transform algorithm presented in a previous work [37]; this operator yields evenly distributed matches among overlapping images, from which an accurate relative orientation can be calculated. However, the low-cost GPS and inertial measurement units shipped with the DJI Phantom provide poor positioning and attitude accuracy, which poses challenges for traditional digital photogrammetry in generating 3D geometry from UAV imagery. By contrast, SfM is well suited to this task because it reconstructs 3D geometry from overlapping but otherwise unordered images without requiring accurate camera intrinsic and extrinsic parameters as a prerequisite. Self-calibrating bundle adjustment was conducted to optimize the camera parameters, camera poses, and 3D structure using a sparse bundle adjustment software package [38], and absolute orientation was performed using five evenly distributed GCPs (Nos. 1–5 in Figure 3a,b) for each forest plantation. As shown in Figure 3a,b, UAV-based photogrammetry with the SGM algorithm [23] produced dense point clouds for Plantations 1 and 2 with a density of approximately 261 points/m². The DSMs of the two forest plantations were then generated with a pixel size of 5 cm × 5 cm, as depicted in Figure 3c,d. The SGM algorithm enables the fine details of the tree surface to be reconstructed.

Seven GCPs (Nos. 6–12 in Figure 3a,c) for each forest plantation were selected as checkpoints to evaluate the accuracy of the DSMs. The residual errors and the root mean square error (RMSE) were calculated from the seven checkpoints and their corresponding 3D points measured on the DSM. The error statistics are summarized in Table 2. The X and Y RMSE values were approximately 5 cm, which is nearly equal to the image resolution and represents a relatively small horizontal error. The vertical RMSE values of the two forest plantations were less than 10 cm. These RMSE values are therefore considered sufficient for estimating the tree heights of forest plantations in mountainous areas.
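The checkpoint evaluation described above can be sketched as follows. This is a minimal illustration, assuming the surveyed checkpoints and the DSM-measured points are available as matched lists of (x, y, z) tuples; the function names and the sample coordinates are hypothetical and are not the values reported in Table 2.

```python
import math


def rmse(residuals):
    """Root mean square error of a sequence of residuals (same unit as input)."""
    if not residuals:
        raise ValueError("at least one residual is required")
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def checkpoint_rmse(surveyed, measured):
    """Per-axis RMSE between surveyed checkpoints and DSM-measured points.

    Both arguments are lists of (x, y, z) tuples given in the same order.
    """
    if len(surveyed) != len(measured):
        raise ValueError("point lists must have the same length")
    per_axis = []
    for axis in range(3):
        residuals = [m[axis] - s[axis] for s, m in zip(surveyed, measured)]
        per_axis.append(rmse(residuals))
    return tuple(per_axis)


# Hypothetical coordinates in metres, for illustration only.
surveyed = [(0.0, 0.0, 10.0), (5.0, 0.0, 11.0), (0.0, 5.0, 12.0)]
measured = [(0.03, -0.04, 10.05), (5.04, 0.03, 10.95), (-0.03, 5.04, 12.05)]
rmse_x, rmse_y, rmse_z = checkpoint_rmse(surveyed, measured)
```

Reporting the three axes separately mirrors the distinction the text draws between the horizontal (X, Y) and vertical errors.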

3.2. DTM Generation

In contrast to LiDAR point clouds, UAV-based photogrammetric point clouds on the tree canopy do not reflect the ground terrain because photogrammetry cannot penetrate the upper canopy; that is, directly separating ground points from image-derived point clouds is difficult. Moreover, no accurate and detailed DTM is typically available in complex mountainous terrain. However, bare-earth data frequently exist in forest plantations close to the terrain surface and allow tree heights to be modeled. To ensure that terrain fitting interpolation remains possible in open terrain without bare-earth data (i.e., terrain covered with abundant grass), automatic classification of points and BEP extraction are jointly used in the present study to obtain ground points for DTM generation. First, initial ground points are separated from the dense point clouds using automatic classification in PhotoScan software; three parameters, namely, maximum angle, maximum distance, and cell size, are determined for Plantations 1 and 2 through multiple trials so that as many ground points as possible are detected correctly. Second, 3D BEPs are extracted to replace the adjacent initial ground points, i.e., the heights of the initial ground points within 3 × 3 pixels around a BEP are replaced with the height of the BEP. BEP extraction from UAV-based photogrammetric point clouds for DTM generation mainly includes the following steps: (1) BEP detection and (2) denoising and 3D surface interpolation. On the basis of the difference in the spectral characteristics of the RGB bands between bare land and vegetation, a bare-earth index (BEI) is created to extract 3D BEPs using an inverse vegetation index and a Gamma transform. The BEI is defined as follows:

where $\mathrm{GLI}$ denotes the green leaf index, calculated as $\mathrm{GLI} = (2G - R - B)/(2G + R + B)$ [39], which is selected because it extracts vegetation favorably from UAV images [40]; $R$, $G$, and $B$ are the three components of the RGB channels; the Gamma transform is exploited to enhance the contrast of the BEI values and thereby highlight BEPs; and $\gamma$ denotes the Gamma value, which is set to 2.5 and is approximately estimated from the 0 to 255 range of the BEI value in this study. The BEI value is set to 0 when $\mathrm{BEI} < 0$, and to 255 when $\mathrm{BEI} > 255$. The BEI intensity maps of Plantations 1 and 2 are depicted in Figure 4a,b; however, some shadow is classified as bare land, i.e., shadow points may exist in the 3D BEPs. An SI [32] is therefore also used to exclude the shadows of trees from the 3D BEPs. The SI is defined as follows:

where a pixel is classified as shadow by thresholding its SI value at 0.2 in this study. The value 0.2 is determined through multiple trials so that nearly all shadows are detected. The shadow masks of Plantations 1 and 2 are exhibited in Figure 4c,d. The BEPs of Plantations 1 and 2 are then obtained from the BEI intensity maps with the corresponding shadow masks, as shown in Figure 4e,f. BEPs in the vicinity of trees are prone to be related to vehicle tracks, other infrastructure, or prominent geological features (e.g., rock outcrops). BEPs that are unrepresentative of the terrain must be removed manually.
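The ingredients of the index can be illustrated with a short sketch. The green leaf index below follows its standard definition; the inverse score and the Gamma stretch are textbook stand-ins chosen for illustration (the function names `inverse_vegetation_score` and `gamma_stretch` are hypothetical), so the snippet should be read as an assumption about how such an index may be assembled and not as the BEI formula of this study.

```python
def green_leaf_index(r, g, b):
    """GLI = (2G - R - B) / (2G + R + B) for one RGB pixel; 0.0 for a black pixel."""
    denom = 2 * g + r + b
    if denom == 0:
        return 0.0
    return (2 * g - r - b) / denom


def inverse_vegetation_score(r, g, b):
    """Hypothetical inverse index in [0, 1]: larger for less green pixels."""
    gli = green_leaf_index(r, g, b)  # GLI lies in [-1, 1] for non-negative RGB
    return (1.0 - gli) / 2.0


def gamma_stretch(value, gamma=2.5, max_value=255.0):
    """Generic Gamma transform of a value in [0, 1], clamped to [0, max_value].

    Assumption: a textbook contrast stretch, not the paper's exact BEI equation.
    """
    clipped = min(max(value, 0.0), 1.0)
    out = max_value * clipped ** gamma
    return min(max(out, 0.0), max_value)


# Hypothetical pixels: brownish soil versus green canopy.
soil = gamma_stretch(inverse_vegetation_score(150, 120, 90))
canopy = gamma_stretch(inverse_vegetation_score(60, 160, 50))
```

With a Gamma value above 1, intermediate scores are pushed toward 0, which widens the gap between bare land and vegetation before thresholding.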

The RBF neural network performs exact interpolation [33]; thus, we use this network to interpolate the height value of each DTM grid cell from the 3D BEPs. As illustrated in Figure 5, the RBF neural network is an artificial neural network that uses RBFs as activation functions and typically includes an input layer, a hidden layer with a nonlinear RBF activation, and a linear output layer [41]. RBF neural network interpolation is called exact interpolation because the output function of the network passes exactly through all 3D BEPs. The input layer is modeled as a real vector $\mathbf{x} \in \mathbb{R}^n$ of 3D BEP coordinates, and the output layer is a linear combination of the RBFs of the inputs $\mathbf{x}$ and the neuron parameters, represented by $\varphi: \mathbb{R}^n \to \mathbb{R}$, to generate a 1D output, i.e., a height value.

In the interpolation from the 3D BEPs, the vector $\mathbf{x}$ is mapped to the corresponding target output, which is a scalar function $\varphi(\mathbf{x})$ of the input vector and can be computed using

$$\varphi(\mathbf{x}) = \sum_{i=1}^{N} a_i \, \rho\left(\lVert \mathbf{x} - \mathbf{c}_i \rVert\right),$$

where $\lVert \cdot \rVert$ is the norm operation, taken as the Euclidean distance here; $N$ is the number of neurons in the hidden layer; $a_i$ is the weight of neuron $i$ in the linear output neuron; $\mathbf{c}_i$ denotes the center vector of neuron $i$; and $\rho$ denotes the nonlinear function, which is a multiquadric function in this study:

$$\rho(r) = \sqrt{r^2 + \varepsilon^2},$$

where $r$ denotes the distance between unknown and known data, and $\varepsilon$ is a smoothing factor between 0 and 1. In the training of the RBF neural network, the gradient descent algorithm is used to find the weights $a_i$ so that the network passes through the 3D BEPs. The objective function $E$ is defined as follows:

$$E = \frac{1}{2} \sum_{j=1}^{M} \left(z_j - \varphi(\mathbf{x}_j)\right)^2,$$

where $M$ is the number of samples, and $z_j$ is the height value of sample $j$. All weights $a_i$ are adjusted at each time step by moving them in the direction opposite to the gradient of $E$ until the minimum of $E$ is found; the update of $a_i$ is calculated as

$$a_i(t+1) = a_i(t) - \eta \, \frac{\partial E}{\partial a_i},$$

where $\eta$ is the learning rate, which can be between 0 and 1, and $t$ is the iteration count. Noisy 3D BEPs must not be interpolated exactly because the RBF is a highly oscillatory function that will not generalize favorably in this case [33], i.e., the RBF neural network-based interpolation performs poorly with noisy data.

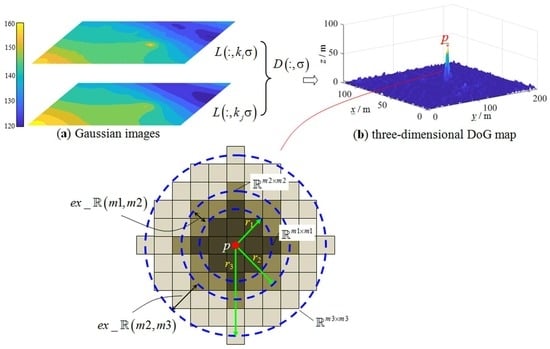

To minimize the impact of noisy data in the 3D BEPs, a Difference of Gaussian (DoG) operation and a moving surface function are jointly applied to detect and remove the noisy data. The 3D BEPs are typically not evenly distributed in the study areas; thus, noisy data detection is performed on an initial regular-grid DTM generated from the 3D BEPs. Noisy data typically appear as local maxima or minima of the height value in the initial DTM, break the continuity of the terrain surface, and contrast strongly with the surrounding height values. DoG [42] is a feature enhancement algorithm that is suitable here because the height values of noisy data change drastically and can therefore be treated as features. In Figure 6b, the noisy data are easily noticed in the DoG map. The DoG $D(x, y, \sigma)$ at pixel $(x, y)$ is calculated as

$$D(x, y, \sigma) = L(x, y, k_i\sigma) - L(x, y, k_j\sigma),$$

where $\sigma$ is the initial scale factor of the DTM and typically set as 1.6; $k_i$ and $k_j$ are the multiple factors; and $L$ is the convolutional operation expressed as follows:

$$L(x, y, k\sigma) = G(x, y, k\sigma) \otimes \mathrm{DTM}(x, y),$$

where $G$ denotes Gaussian filtering, and $\otimes$ is the convolutional operator. A moving window of 3 × 3 pixels is used to find the local maxima or minima of the height value within the DoG map, each of which is considered a candidate $p$ for noisy data. The radius $r$ of the surrounding height values centered on candidate $p$ and contaminated by noisy data can be defined as

$$r = \operatorname{int}(k\sigma + 0.5),$$

where $\operatorname{int}(\cdot)$ denotes the integer operation, and $k$ is a multiple factor that is determined when the height values within a surrounding area do not oscillate significantly. In Figure 6, for example, the radius $r$ is increased each time with $k$, and the surrounding regions $R_1$, $R_2$, and $R_3$ are generated with radii of 2, 3, and 5 when $k$ = 1, 2, and 3, respectively. The extended regions $E_k$ (i.e., closed-loop areas) between the regions $R_{k-1}$ and $R_k$ displayed in Figure 6c can be determined using

$$E_k = R_k \setminus R_{k-1}.$$

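We use the mean $\mu_k$ and standard deviation $s_k$ of the height values within the extended regions $E_k$ to determine the final radius of the surrounding height values. $\mu_k$ and $s_k$ are computed as follows:

$$\mu_k = \frac{1}{n_k} \sum_{(x, y) \in E_k} h(x, y), \qquad s_k = \sqrt{\frac{1}{n_k} \sum_{(x, y) \in E_k} \left(h(x, y) - \mu_k\right)^2},$$

where $h(x, y)$ is the height value at pixel $(x, y)$, and $n_k$ is the number of pixels in $E_k$. The corresponding radius is considered the final radius when a newly added region $E_k$ satisfies the threshold conditions on its mean and standard deviation, where $T$ is a given threshold.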
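The candidate detection step can be sketched with the standard library alone. The sketch assumes a regular height grid stored as a list of rows, a separable Gaussian truncated at three standard deviations with edge replication, and the hypothetical defaults `k_i = 1` and `k_j = 2`; the rounding rule in `noise_radius` is an assumption that reproduces the radii 2, 3, and 5 quoted above for a scale factor of 1.6.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at three standard deviations."""
    half = max(1, int(3 * sigma + 0.5))
    values = [math.exp(-(i * i) / (2 * sigma * sigma))
              for i in range(-half, half + 1)]
    total = sum(values)
    return [v / total for v in values]


def blur(grid, sigma):
    """Separable Gaussian blur of a height grid with edge replication."""
    kernel = gaussian_kernel(sigma)
    half = len(kernel) // 2
    rows, cols = len(grid), len(grid[0])

    def clamp(i, n):
        return min(max(i, 0), n - 1)

    horiz = [[sum(kernel[t + half] * grid[y][clamp(x + t, cols)]
                  for t in range(-half, half + 1))
              for x in range(cols)] for y in range(rows)]
    return [[sum(kernel[t + half] * horiz[clamp(y + t, rows)][x]
                 for t in range(-half, half + 1))
             for x in range(cols)] for y in range(rows)]


def difference_of_gaussians(grid, sigma=1.6, k_i=1.0, k_j=2.0):
    """Blur at scale k_i * sigma minus blur at scale k_j * sigma."""
    fine = blur(grid, k_i * sigma)
    coarse = blur(grid, k_j * sigma)
    return [[f - c for f, c in zip(fr, cr)] for fr, cr in zip(fine, coarse)]


def local_extrema(dog):
    """Interior pixels that are strict maxima or minima of their 3 x 3 window."""
    found = []
    for y in range(1, len(dog) - 1):
        for x in range(1, len(dog[0]) - 1):
            neighbours = [dog[y + dy][x + dx]
                          for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                          if (dy, dx) != (0, 0)]
            if dog[y][x] > max(neighbours) or dog[y][x] < min(neighbours):
                found.append((y, x))
    return found


def noise_radius(k, sigma=1.6):
    """Assumed radius rule: k * sigma rounded to the nearest integer."""
    return int(k * sigma + 0.5)
```

On a flat grid with a single spike, the spike location appears among the returned candidates; in practice, weak extrema far from any real noise would still need to be screened by the radius and residual tests described in the text.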

The final radius can be determined using Equation (10). Candidate $p$ is regarded as a noisy point when its residual value $v$ within the radius $r$ satisfies a threshold condition, in which the threshold parameter is set as 3 in this study. The moving quadratic surface-based interpolation method is used to correct the height value at point $p$ and to eliminate the contamination that occurs when interpolating the surrounding grid of the DTM. The quadratic function is expressed as

$$z = b_0 + b_1 x + b_2 y + b_3 x^2 + b_4 xy + b_5 y^2,$$

where $(x, y, z)$ denotes the coordinates of a 3D BEP, and $b_0, b_1, \ldots, b_5$ denote the six coefficients of the quadratic function, which can be solved from the 3D BEPs surrounding the noisy point $p$ using the least squares algorithm expressed as follows:

$$\mathbf{X} = \left(\mathbf{A}^{\mathsf{T}} \mathbf{A}\right)^{-1} \mathbf{A}^{\mathsf{T}} \mathbf{L},$$

where $\mathbf{A}$ is the design matrix whose rows are $(1, x, y, x^2, xy, y^2)$, $\mathbf{X} = (b_0, b_1, \ldots, b_5)^{\mathsf{T}}$, and $\mathbf{L}$ is the vector of the BEP heights $z$. The corrected height value of the noisy point $p$ is then derived from the quadratic surface model. The RBF neural network is used again to generate the DTM after noisy data removal (Algorithm 1), which makes the height interpolation robust against noisy data, and the DTMs of the two forest plantations are presented in Figure 7a,b.
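The quadratic surface correction can be sketched as a small least squares problem. The sketch assumes that the surrounding BEPs are expressed in coordinates local to the noisy point, which keeps the normal equations well conditioned, and it solves them with a hand-written Gaussian elimination so that no external library is needed; the function names and the synthetic neighbourhood are hypothetical.

```python
def solve_linear(matrix, rhs):
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: points do not determine a quadratic")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (aug[r][n] - tail) / aug[r][r]
    return solution


def design_row(x, y):
    """Row of the design matrix for one point: (1, x, y, x^2, xy, y^2)."""
    return [1.0, x, y, x * x, x * y, y * y]


def fit_quadratic_surface(points):
    """Least squares coefficients of the quadratic surface through (x, y, z) points."""
    rows = [design_row(x, y) for x, y, _ in points]
    heights = [z for _, _, z in points]
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(6)]
              for i in range(6)]
    rhs = [sum(r[i] * z for r, z in zip(rows, heights)) for i in range(6)]
    return solve_linear(normal, rhs)


def evaluate_surface(coeffs, x, y):
    """Height of the fitted surface at (x, y)."""
    return sum(c * t for c, t in zip(coeffs, design_row(x, y)))


def true_surface(x, y):
    """Synthetic terrain used only to generate the example neighbourhood."""
    return 100.0 + 0.5 * x - 0.2 * y + 0.1 * x * x + 0.05 * x * y - 0.3 * y * y


# Eight neighbours around a noisy point placed at the local origin.
neighbours = [(float(x), float(y), true_surface(x, y))
              for x in (-1, 0, 1) for y in (-1, 0, 1) if (x, y) != (0, 0)]
coeffs = fit_quadratic_surface(neighbours)
corrected_height = evaluate_surface(coeffs, 0.0, 0.0)
```

At least six well-distributed neighbours are required for the six coefficients; the noisy point itself is excluded from the fit so that its height does not bias the surface.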

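| Algorithm 1: RBF neural network against noisy data |
| --- |
| Parameters: $W$ and $H$ are the width and height of the DSM, respectively; $\mu_k$ and $s_k$ correspond to the mean and standard deviation of the height values within $E_k$; $r$ is the radius centered on candidate $p$; $k$ is a multiple factor; $v$ is the residual value; and $T$ is a given threshold. |
| Generate the DTM using the RBF neural network from the ground points. |
| Compute the DoG map using the DTM. |
| for $x = 1$ to $W$ do |
| for $y = 1$ to $H$ do |
| if $D(x, y)$ is a local maximum or minimum, then |
| while the threshold conditions on $\mu_k$ and $s_k$ are not both satisfied |
| $k \leftarrow k + 1$ |
| $r \leftarrow \operatorname{int}(k\sigma + 0.5)$ |
| Determine the extended region $E_k$. |
| Compute $\mu_k$ and $s_k$ within $E_k$. |
| end while |
| end if |
| Compute the residual value $v$ of candidate $p$ within the radius $r$. |
| if $v$ satisfies the noise condition, then |
| $p$ is regarded as a noisy point. |
| The corrected height of $p$ is then derived from the fitted quadratic surface model. |
| Update the height value of the ground points. |
| end if |
| end for |
| end for |
| Generate the DTM using the RBF neural network from the updated ground points again. |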